1. Introduction

A ship’s diesel engine is its primary propulsion unit, and its reliability and stability are critical to the safe and economical operation of the vessel [1]. Because marine diesel engines have a complex structure and operate for long periods under high temperature and pressure, abnormal conditions are likely to occur [2]. Marine diesel engines have traditionally been maintained through scheduled (periodic) maintenance and corrective (after-failure) maintenance [3]. Scheduled maintenance requires downtime and considerable expense, which reduces productivity, and it cannot anticipate every malfunction or ensure timely repair. Corrective maintenance cannot prevent accidents and, in serious cases, may threaten the safety of the crew and the ship [4]. Early warning technology for marine diesel engines is therefore an important research topic.

With the rapid development of modern industrial technology, marine equipment systems are becoming increasingly automated and intelligent [5]. Conventional diesel engine monitoring and warning techniques focus mostly on the engine’s external parameters, which show a noticeable abnormality only after a fault has deteriorated to a certain degree [6]. Consequently, conventional ship monitoring and alarm methods cannot provide a timely warning. Fault warning technology collects and analyzes data from equipment or systems to predict possible failures so that action can be taken in time to avoid them [7]. Fault warnings can improve equipment reliability and stability and reduce the damage and maintenance costs caused by failures. Traditional fault warning methods are mainly based on rules and statistical models, and their accuracy and reliability are limited [8]. Deep learning (DL) techniques have achieved good results in image and speech processing and have therefore been introduced into fault warning for marine diesel engines [9,10,11].

Exhaust gas temperature (EGT) is one of the most important thermal parameters reflecting a diesel engine’s combustion and dynamic performance under current operating conditions [12]. Because EGT changes slowly and is relatively insensitive to disturbances, it is a strong indicator of failure. Predicting a vessel’s EGT therefore gives an effective picture of the diesel engine’s health [13]. Such prediction can help identify potential faults early so that appropriate repair and maintenance measures can be taken to keep the diesel engine running stably.

Research on fault warning for marine diesel engines remains limited. Nguyen et al. introduced a new predictive maintenance model that uses sensor measurements to estimate the probability of system failure over various time scales [14]. Jiang et al. presented a new algorithm that diagnoses diesel engine failures with a dual-assisted diagnosis approach based on the high-angle relation and the transient non-smooth state of the diesel engine [15]. Liu et al. introduced a ship diesel engine fault alarm system that combines the feature extraction ability of a convolutional neural network (CNN) with the time-series forecasting ability of a bidirectional gated recurrent unit (BiGRU) [16]. Liu et al. combined the feature extraction and time-series memory abilities of a long short-term memory (LSTM) network to build a forecasting model for marine diesel engine EGT [17]. Patil et al. proposed a Cauchy particle swarm optimization (CPSO) method to search for the optimal LSTM hyperparameters for temperature prediction [18]. Xie et al. presented a convolutional gated recurrent unit (GRU) multilayer perceptron model (CGMP) to predict South China Sea surface temperature; its convolutional part efficiently captures spatial neighborhood effects, while its GRU and MLP parts efficiently process historical information [19]. Raptodimos et al. presented a nonlinear autoregressive neural network with exogenous inputs (NARX) to predict the outlet temperature of a vessel’s main engine and showed that NARX performed well and remained stable under different assumptions [20].

Tan et al. developed an LSTM-based multistage prediction model for reheat steam temperature in coal-fired power plant boilers that accurately predicted the reheat steam temperature within 2.5 min, providing an important reference for reheat steam temperature control [21]. Yan et al. proposed a data-driven hybrid approach to locomotive axle temperature prediction, using a hybrid of particle swarm optimization and the gravitational search algorithm (PSOGSA) to optimize and integrate bidirectional LSTM network units [22]. Cheliotis and colleagues used an Expected Behavior (EB) model and an exponentially weighted moving average to forecast the operating status of the main engine [23]. Karatug and colleagues used an artificial neural network (ANN) to determine the real-time operating state of diesel engines [24]. Lazarus and colleagues used a fault tree and a fault model to predict and locate faults in diesel engines [25].

Current research usually classifies diesel engine fault prediction and early warning methods into two types: condition prediction and condition classification [26]. Condition classification requires a large amount of early failure information, which makes it impractical here, because early fault information for marine diesel engines is difficult to collect. Since the normal operation of a ship’s diesel engine is an important guarantee of the safety of the ship and its crew, we present a CNN-BiLSTM-Attention prediction model that predicts the operating status of marine diesel engines from real-time operating data. The Mahalanobis distance between the actual EGT under normal operation and the predicted EGT is then calculated, and a transformation function is constructed by function mapping to build the ship’s EGT health index model. Fault warning values and thresholds are set using the 3σ criterion: the warning value indicates that a fault may occur, and the threshold is used to monitor whether the EGT monitoring index has crossed its limit and to warn of a fault. Finally, the validity of this approach is verified experimentally.

The rest of this paper is organized as follows. Section 2 introduces the theory of the DL methods used, including CNN, BiLSTM, and the attention mechanism (Attention). Section 3, on data processing, introduces the sources of the prediction sample data and the processing procedures, such as data normalization and dimensionality reduction; it then describes how the model hyperparameters were tuned and the optimal hyperparameters selected, establishes the marine diesel engine EGT model used for fault warning, and verifies its effectiveness through comparative experiments. Section 4, on fault warning, presents and experimentally verifies the methods for determining the alarm values and thresholds of the monitoring index. Section 5 summarizes the whole paper and discusses future prospects.

2. Principles of Deep Learning Models

2.1. Convolutional Neural Networks (CNN)

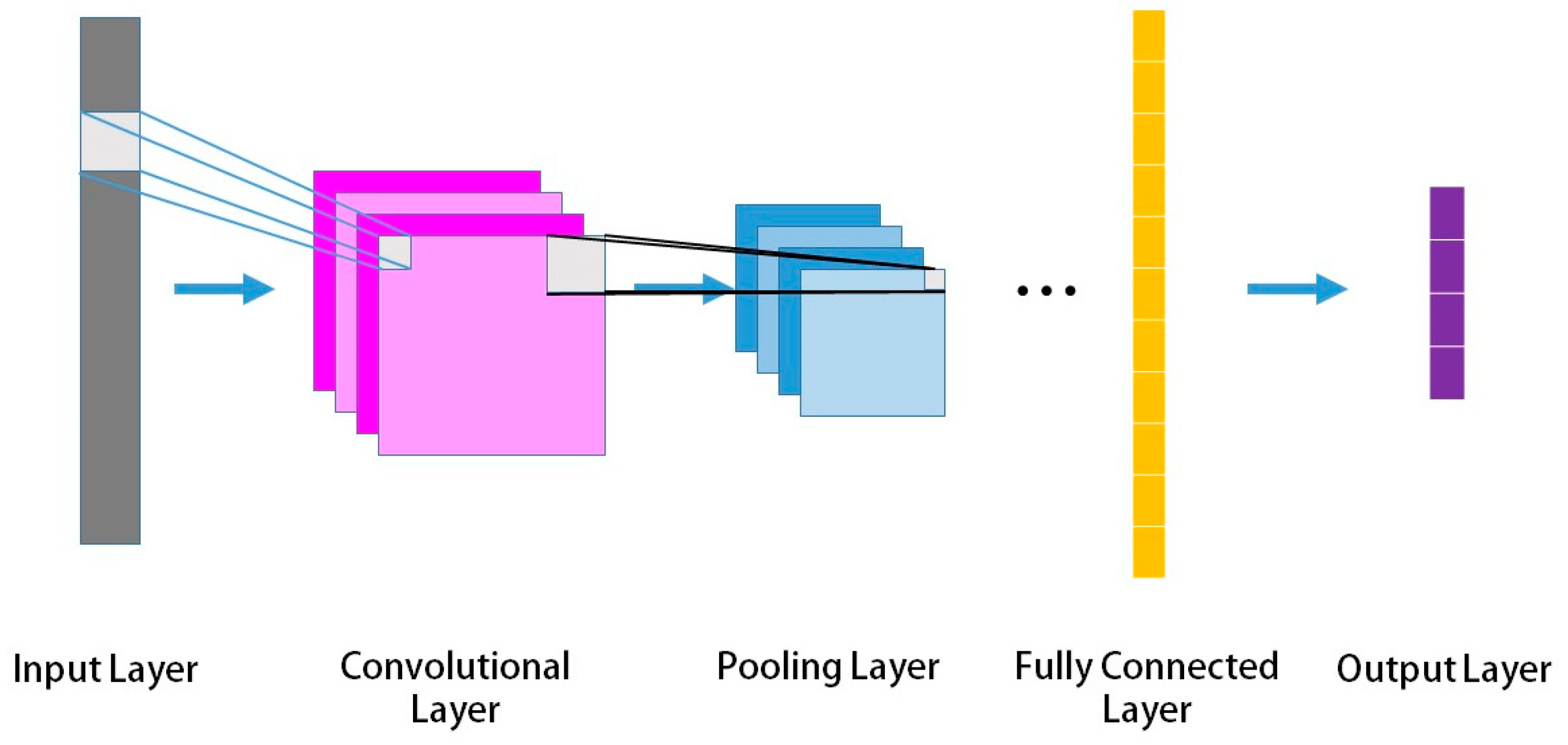

A convolutional neural network (CNN) consists of an input layer, convolutional layers, pooling layers, and a fully connected layer [27]. Compared with conventional neural networks, it is characterized by its convolutional and pooling layers. In a convolutional layer, each neuron is connected to only a few adjacent nodes, and each convolution kernel is a rectangular array of weights [28]. After the convolution kernel is initialized, its weights are learned during training. Because each kernel is connected locally and its weights are shared, it reduces the number of connections between layers and thereby the risk of overfitting [29]. Pooling layers, also known as subsampling layers, usually take the form of mean pooling or max pooling; pooling can be regarded as a special type of convolution [30]. The fully connected layer combines and integrates the features extracted by the convolutional and pooling layers for classification, recognition, or prediction [31]. The CNN architecture is illustrated in Figure 1. The output sizes of its convolutional and pooling layers are calculated as shown in Formula (1).

In the formula, Ct and Pt are the output sizes of the convolution and pooling operations, respectively; Ni1 and Ni2 are the input sizes; f1 and f2 are the kernel (window) sizes of the convolution and pooling layers, respectively; p1 and p2 are the amounts of padding in the convolution and pooling layers, respectively; and s1 and s2 are the strides (step sizes) of the convolution and pooling layers, respectively.
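Formula (1) is not reproduced in this text. A minimal sketch, assuming it takes the standard output-size form Ct = ⌊(Ni1 − f1 + 2p1)/s1⌋ + 1 and Pt = ⌊(Ni2 − f2 + 2p2)/s2⌋ + 1, is shown below; the function name `output_size` and the example sizes are illustrative, not taken from the paper.

```python
def output_size(n_in: int, f: int, p: int, s: int) -> int:
    """Output length of a convolution or pooling window.

    n_in: input size (Ni1 or Ni2), f: kernel/window size,
    p: padding on each side, s: stride (step size).
    Assumes the standard formula floor((n_in - f + 2p) / s) + 1.
    """
    if f > n_in + 2 * p:
        raise ValueError("kernel is larger than the padded input")
    return (n_in - f + 2 * p) // s + 1


# Hypothetical example: a length-20 input window, convolution (f1=3, p1=1, s1=1)
# followed by max pooling (f2=2, p2=0, s2=2)
c_t = output_size(20, f=3, p=1, s=1)   # 20
p_t = output_size(c_t, f=2, p=0, s=2)  # 10
print(c_t, p_t)
```

With padding p1 = 1 and a kernel of size 3, the convolution preserves the input length, and the pooling window of size 2 with stride 2 halves it.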

CNNs can extract features from data through convolution, pooling, weight sharing, and related operations. They are widely used in image processing, mainly as two-dimensional convolutional neural networks (Convolution2d, 2DCNN), which are applied to image classification, object detection, image segmentation, and other tasks. However, the dimensionality of 2DCNN input differs from that of a time series, so 2DCNNs are not well suited to time-series forecasting. To solve this problem, one-dimensional convolutional neural networks (Convolution1d, 1DCNN) are used to mine time-series data, extracting local features and improving the accuracy of prediction models [32].
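To make the 1DCNN idea concrete, the sketch below applies one convolution kernel, a ReLU, and max pooling to a short series in plain NumPy. The kernel values, the toy series, and the helper names are hypothetical; in a trained network the kernel weights are learned rather than fixed, and, as in most DL libraries, the "convolution" is computed as a cross-correlation.

```python
import numpy as np


def conv1d(x: np.ndarray, kernel: np.ndarray, bias: float = 0.0, stride: int = 1) -> np.ndarray:
    """Valid (no padding) 1D convolution, computed as cross-correlation like a Conv1d layer."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return np.array([np.dot(x[i * stride:i * stride + k], kernel) + bias for i in range(n_out)])


def max_pool1d(x: np.ndarray, size: int = 2, stride: int = 2) -> np.ndarray:
    """Max pooling: keep the largest value in each window."""
    n_out = (len(x) - size) // stride + 1
    return np.array([x[i * stride:i * stride + size].max() for i in range(n_out)])


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


# Toy EGT-like series with a step change; a difference kernel responds to the local jump
series = np.array([400.0, 401.0, 400.0, 401.0, 410.0, 411.0, 410.0, 411.0])
features = relu(conv1d(series, kernel=np.array([-1.0, 0.0, 1.0])))
pooled = max_pool1d(features, size=2, stride=2)
print(features)
print(pooled)
```

The difference kernel responds only where the series jumps, and pooling keeps the strongest local response while halving the length, which is the kind of local feature a CNN stage can pass on to recurrent layers.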

2.2. Long Short-Term Memory Neural Network (LSTM)

LSTM is a variant of the recurrent neural network (RNN) proposed to overcome the short-term memory problem of RNNs when processing sequential data [33]. Compared with traditional RNNs, LSTM has stronger memory and a better ability to model long-term dependencies. The key idea of LSTM is a structure called a “memory cell”, which stores and accesses information across the time steps of a sequence [34]. The memory cell is controlled by a forget gate, an input gate, and an output gate, which regulate the flow of information through a series of mathematical operations [35]. The forget gate determines how much of the previous time step’s memory state is retained at the current time step. The input gate determines which parts of the current input are stored at the current time step: its output is multiplied by the candidate state computed from the current input, and the product is added to the memory state. The output gate determines which part of the memory state is emitted as the hidden state. This gating mechanism allows the LSTM to selectively remember and forget information, so it handles long-term dependencies better. LSTM has performed well in many natural language processing tasks [36].

At time t, the LSTM model consists of the input xt, the cell state Ct, the temporary (candidate) cell state Dt, the hidden state ht, the forget gate ft, the input (memory) gate it, and the output gate Ot. The cell state carries information forward: by forgetting old information and memorizing new information, it passes on what is useful for later computation and discards what is not, while the hidden state ht is output at every time step.

The input xt of the current time step is first concatenated with the hidden state ht−1 of the previous time step to obtain [xt, ht−1], which is passed through a sigmoid function to obtain the forget gate value ft; the sigmoid function regulates the values flowing through the network. The input gate determines what information from the current input is added to the cell state. The formula for the input gate value it is almost identical to that of the forget gate; the only difference is the quantity it subsequently acts on. The second formula of the input gate gives the candidate cell state Dt, computed with a tanh function. The input gate multiplies the sigmoid output by the tanh output and adds the result to the cell state. The cell update therefore multiplies the new forget gate value ft by the cell state Ct−1 from the previous time step and adds the product of the input gate value it and the candidate state Dt of the current time step; the resulting Ct is passed on as part of the input to the next time step. The output gate value Ot is calculated in the same way as the forget and input gates, and the output gate produces the hidden state ht by multiplying Ot by the tanh of the updated cell state Ct.

Figure 2 illustrates the structure of the LSTM network, and the calculation is given in Equation (2).

In the formula, σ is the sigmoid function; Dt is the candidate cell state; bf, bi, bc, and bo are the bias terms of the forget gate, memory (input) gate, temporary cell, and output gate, respectively; Wf, Wi, Wc, and Wo are the corresponding weight matrices; Ct−1 is the cell state at time t−1; ht−1 is the output at time t−1; xt is the input at the current time; ht is the output; and Ct is the cell state.

The LSTM is a powerful recurrent neural network structure that can effectively handle long-term dependencies in sequential data by introducing memory units and gating mechanisms [37].
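Equation (2) is not reproduced in this text. Assuming it follows the standard LSTM formulation in the notation above, namely ft = σ(Wf·[ht−1, xt] + bf), it = σ(Wi·[ht−1, xt] + bi), Dt = tanh(Wc·[ht−1, xt] + bc), Ct = ft ⊙ Ct−1 + it ⊙ Dt, Ot = σ(Wo·[ht−1, xt] + bo), and ht = Ot ⊙ tanh(Ct), one time step can be sketched in NumPy as follows. The weights are random placeholders, and the dictionary keys and sizes are illustrative.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step (standard formulation, assumed to match Equation (2)).

    W[g] has shape (hidden, hidden + input) and b[g] shape (hidden,) for g in
    'f' (forget), 'i' (input/memory), 'c' (candidate D_t), 'o' (output).
    """
    z = np.concatenate([h_prev, x_t])      # [h_{t-1}, x_t]; the order only changes W's layout
    f_t = sigmoid(W["f"] @ z + b["f"])     # forget gate
    i_t = sigmoid(W["i"] @ z + b["i"])     # input (memory) gate
    d_t = np.tanh(W["c"] @ z + b["c"])     # candidate cell state D_t
    c_t = f_t * c_prev + i_t * d_t         # cell state update
    o_t = sigmoid(W["o"] @ z + b["o"])     # output gate
    h_t = o_t * np.tanh(c_t)               # hidden state
    return h_t, c_t


def lstm_forward(xs, hidden, W, b):
    """Run the cell over a sequence xs of shape (T, input); return all hidden states."""
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    outputs = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, b)
        outputs.append(h)
    return np.stack(outputs)


rng = np.random.default_rng(0)
n_in, n_hidden, T = 5, 4, 10               # 5 inputs as in Section 3.5; other sizes are illustrative
W = {g: rng.normal(scale=0.1, size=(n_hidden, n_hidden + n_in)) for g in "fico"}
b = {g: np.zeros(n_hidden) for g in "fico"}
hs = lstm_forward(rng.normal(size=(T, n_in)), n_hidden, W, b)
print(hs.shape)  # (10, 4)
```

Because ht is a sigmoid output times a tanh, every hidden-state entry stays strictly between −1 and 1.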

2.3. Bidirectional Long Short-Term Memory Neural Network (BiLSTM)

In predicting the EGT of a ship’s diesel engine, the engine’s operating state is influenced not only by information from past moments but also by information from future moments. However, a conventional LSTM can only use information from past moments to predict the state at future moments; it cannot encode information from back to front. To solve this problem, the bidirectional long short-term memory network (BiLSTM) was proposed [38]. BiLSTM combines a forward LSTM and a backward LSTM and can use information from both the past and the future to predict the operating state of the diesel engine at the current moment [39]. By processing the sequence in both directions, BiLSTM captures bidirectional dependencies more comprehensively, resulting in a better understanding of temporal data [40]. As a result, BiLSTM offers better feature representation, stronger modeling capability, and better prediction performance for marine diesel engine EGT prediction, making it an effective model for improving the accuracy of such predictions.

As shown in Figure 3, the BiLSTM model feeds the same input sequence into two LSTMs, producing the forward LSTM hidden state hft and the backward LSTM hidden state hbt; it then concatenates the two hidden states and passes them to the output layer to obtain the BiLSTM output ht. The formula is given in (3).

In the formula, w1 is the weight from the input layer to the forward LSTM, w2 is the weight between forward LSTM cells, w3 is the weight from the input layer to the backward LSTM, w5 is the weight between backward LSTM cells, w4 is the weight from the forward LSTM to the output layer, w6 is the weight from the backward LSTM to the output layer, and bft, bbt, and bot are the bias terms of the respective parts.
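Equation (3) is not reproduced here. The w1 to w6 naming matches a common simplified formulation: hft = f(w1·xt + w2·hf(t−1) + bft), hbt = f(w3·xt + w5·hb(t+1) + bbt), and output = g(w4·hft + w6·hbt + bot). The sketch below assumes f = tanh, g = identity, and plain recurrent cells in place of full LSTM cells so that the bidirectional structure stays visible; it is an illustration under those assumptions, not the paper’s implementation.

```python
import numpy as np


def bidirectional_pass(xs, w1, w2, w3, w5, w4, w6, b_f, b_b, b_o):
    """Bidirectional recurrence in the w1..w6 notation (simplified tanh cells, assumed).

    xs: (T, n_in). w1/w3: input -> forward/backward, w2/w5: recurrent weights,
    w4/w6: forward/backward -> output. Returns outputs of shape (T, n_out).
    """
    T = xs.shape[0]
    hidden = w2.shape[0]
    h_f = np.zeros((T, hidden))
    h_b = np.zeros((T, hidden))
    prev = np.zeros(hidden)
    for t in range(T):                      # forward direction: t = 0 .. T-1
        prev = np.tanh(w1 @ xs[t] + w2 @ prev + b_f)
        h_f[t] = prev
    nxt = np.zeros(hidden)
    for t in reversed(range(T)):            # backward direction: t = T-1 .. 0
        nxt = np.tanh(w3 @ xs[t] + w5 @ nxt + b_b)
        h_b[t] = nxt
    # Output layer combines both directions (equivalent to a linear layer on [h_f, h_b])
    return h_f @ w4.T + h_b @ w6.T + b_o


rng = np.random.default_rng(1)
n_in, hidden, n_out, T = 5, 3, 1, 6
shapes = {"w1": (hidden, n_in), "w2": (hidden, hidden), "w3": (hidden, n_in),
          "w5": (hidden, hidden), "w4": (n_out, hidden), "w6": (n_out, hidden)}
params = {k: rng.normal(scale=0.5, size=s) for k, s in shapes.items()}
biases = {"b_f": np.zeros(hidden), "b_b": np.zeros(hidden), "b_o": np.zeros(n_out)}
xs = rng.normal(size=(T, n_in))
y = bidirectional_pass(xs, **params, **biases)
print(y.shape)  # (6, 1)
```

Because the backward pass runs from the last step to the first, the output at the first time step already depends on the last input; a forward-only network would not have this property.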

2.4. Attention Mechanism (Attention)

Attention is a mechanism that lets a model weight different parts of its input differently, and it is used in many DL domains [41]. It computes a relevance score for each element of the input sequence with respect to the current moment and then weights the input sequence by these scores, producing a representation that focuses on the most important elements [42]. The model can thus selectively focus on the parts relevant to the current task, improving its expressiveness and generalization ability. Adding an attention mechanism to the BiLSTM network can help address problems such as long-range dependencies [43]. In summary, Attention dynamically weights the input sequence so that the model focuses on key information, thereby improving its predictive power.

As shown in Figure 4, the input sequence is xk, the hidden state corresponding to the input sequence is ak, each historical hidden state is assigned an attention weight with respect to the current input, and the hidden state value of the final output node is Ak. See (4) for the formula.

The final feature vector Ak is the hidden vector of the last node. See (5) for the formula.

In these formulas, the score term is a matrix measuring the degree of influence between data points, the attention weights are the resulting weight data, and C is the weighted result.
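The symbols in Equations (4) and (5) did not survive extraction. A common additive form of the same idea computes a score ek = vᵀ tanh(W·ak + b) for each hidden state, normalizes the scores with softmax into weights αk, and returns the weighted sum C = Σ αk·ak. The sketch below assumes this form; W, b, and v are hypothetical learned parameters, drawn at random here.

```python
import numpy as np


def attention_pool(a: np.ndarray, W: np.ndarray, b: np.ndarray, v: np.ndarray):
    """Additive attention over hidden states a of shape (T, d) (assumed form).

    Returns (weights, context): the weights sum to 1 over the T steps, and the
    context vector is the attention-weighted sum of the hidden states.
    """
    scores = np.tanh(a @ W.T + b) @ v            # relevance score e_k for each step
    scores = scores - scores.max()               # shift for numerical stability of softmax
    weights = np.exp(scores) / np.exp(scores).sum()
    context = weights @ a                        # C = sum_k alpha_k * a_k
    return weights, context


rng = np.random.default_rng(2)
T, d, d_att = 8, 6, 4
a = rng.normal(size=(T, d))                      # e.g., BiLSTM hidden states for 8 time steps
weights, context = attention_pool(a, rng.normal(size=(d_att, d)), np.zeros(d_att), rng.normal(size=d_att))
print(weights.round(3), context.shape)
```

Subtracting the maximum score before exponentiating does not change the softmax result but prevents overflow for large scores.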

2.5. CNN-BiLSTM-Attention Prediction Model

Because a single traditional neural network can no longer meet the complex and diverse requirements of contemporary marine diesel engine fault warning, this article proposes a hybrid neural network (HNN) to predict failures of a ship’s diesel engine. The hybrid model combines CNN, BiLSTM, and Attention. The CNN extracts local features of the data through convolution. BiLSTM captures long-range dependencies in the sequences, so the CNN-BiLSTM combination can make full use of both local and global information for prediction. Attention automatically learns association scores for different positions in the input sequence, further improving the model’s expressiveness and generalization ability. The CNN-BiLSTM-Attention model can thus reduce its sensitivity to noise and irrelevant information, improving robustness and stability. The bidirectional LSTM also handles dependencies in long sequences that a unidirectional LSTM cannot, increasing the precision and validity of the model.

Figure 5 illustrates a CNN-BiLSTM-Attention prediction model.

3. Forecasting Process

3.1. Data Processing

This paper uses actual operating data collected from a 6L34DF dual-fuel diesel generator engine installed on an LNG carrier. The data were originally recorded once per second. To reduce computation time, speed up processing, and reduce the influence of noise, one monitoring point was kept every 6 s, giving a total of 7200 monitoring points, i.e., twelve hours of operating parameters (7200 × 6 s = 43,200 s). Eight operating parameters were selected for this diesel engine model, as listed in Table 1.
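The 6 s selection can be sketched as simple decimation of the 1 Hz record. Taking the first sample of each 6 s window is an assumption; the text does not say whether values were averaged within each window. The helper name `downsample` is illustrative, and the array of zeros stands in for the real data.

```python
import numpy as np

SAMPLE_INTERVAL_S = 6   # keep one record every 6 s, as described above
RAW_RATE_HZ = 1         # raw data recorded once per second


def downsample(raw: np.ndarray, interval_s: int = SAMPLE_INTERVAL_S) -> np.ndarray:
    """Keep every interval_s-th row of a 1 Hz record of shape (n_seconds, n_params)."""
    step = interval_s * RAW_RATE_HZ
    return raw[::step]


hours = 12
raw = np.zeros((hours * 3600 * RAW_RATE_HZ, 8))   # placeholder for 12 h of 8 parameters
sampled = downsample(raw)
print(sampled.shape)  # (7200, 8)
```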

The data were collected while the ship’s diesel engine was running under stable operating conditions with only small fluctuations. The selected thermal parameters contain a great deal of information about the condition of the diesel engine. The EGT reflects combustion efficiency and engine performance; an excessively high EGT may indicate incomplete combustion or other engine problems. The air cooler outlet temperature reflects whether the engine cooling water circulation is normal and whether the engine load is too high. The compressor speed reflects the load of the main engine. The gas inlet pressure reflects the working condition of the gas supply system and the stability of the gas quality. The EGT at the compressor outlet reflects the combustion efficiency of the main engine and the working condition of the compressor. The inlet pressure of the cylinder liner high-temperature water reflects the working condition of the ship’s cooling system, and the cylinder high-temperature water outlet temperature reflects the thermal load of the engine.

The collected data were used to build a marine diesel engine EGT prediction data set, with 70% used for training and 30% for testing, in order to predict the EGT of the ship’s diesel engine and validate the model’s effectiveness. To eliminate the influence of differing magnitudes and scales among the predictor parameters, the sample data were normalized. The formula is shown in (6).

In this equation, Xnorm is the normalized data, X is the raw sample data, and Xmax and Xmin are the maximum and minimum values of the sample data set, respectively.
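The definitions above correspond to Xnorm = (X − Xmin)/(Xmax − Xmin). A minimal sketch applying it per parameter, together with a 70/30 split, is shown below. Two assumptions: the split is chronological (training on the earlier 70% of time steps), which the text does not state, and the helper names and toy values are hypothetical.

```python
import numpy as np


def min_max_normalize(X: np.ndarray):
    """Column-wise X_norm = (X - X_min) / (X_max - X_min); returns data and the (min, max) used."""
    x_min = X.min(axis=0)
    x_max = X.max(axis=0)
    span = np.where(x_max > x_min, x_max - x_min, 1.0)   # guard against constant columns
    return (X - x_min) / span, (x_min, x_max)


def denormalize(X_norm: np.ndarray, x_min: np.ndarray, x_max: np.ndarray) -> np.ndarray:
    """Invert the scaling, e.g., to report predicted EGT in its original units."""
    return X_norm * (x_max - x_min) + x_min


def chronological_split(X: np.ndarray, train_frac: float = 0.7):
    """First 70% of the time steps for training, the remaining 30% for testing (assumed)."""
    n_train = int(round(len(X) * train_frac))
    return X[:n_train], X[n_train:]


X = np.array([[380.0, 50.0], [400.0, 60.0], [420.0, 55.0], [410.0, 52.0]])   # toy values
X_norm, (lo, hi) = min_max_normalize(X)
train, test = chronological_split(X_norm)
```

Computing Xmin and Xmax from the training portion only, and reusing them for the test portion, would avoid leaking test-set information into the scaling; the text takes them from the whole sample data set.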

3.2. Principal Component Analysis (PCA)

PCA is one of the most popular methods for reducing high-dimensional data to a lower dimension. It reduces the dimensionality of the data while retaining its main information, so the reduced data set is easier to visualize, process, and analyze, at a lower computational cost. However, some information is inevitably lost during dimensionality reduction, so the trade-off between reducing dimensions and preserving information must be considered.

Because the data set acquired for the ship diesel engine under normal operation is a multidimensional feature data set, PCA can simplify the model structure and improve both the convergence rate and the computational efficiency of the model. PCA was applied in Python to the eight preselected thermal parameters of the collected samples to reduce their dimensionality and better understand the nature of the data. The formula is shown in (7).

In the formula, Fi is the score of the i-th principal component, Xj is the j-th sample parameter, and Wij is the weight of each variable in the principal component, derived from the coefficient of that variable in the component matrix.

According to the PCA results for the eight parameters of the original sample, the KMO and Bartlett’s tests gave a KMO value of 0.786 and a significance (Sig.) of 0.000, indicating that the sample parameters are sufficiently correlated to meet the requirements of PCA. The contribution and cumulative contribution of the principal components are plotted in Figure 6.

Principal components with eigenvalues greater than 0.5 were extracted, targeting a cumulative variance contribution rate of approximately 95%. As illustrated in Figure 6, the cumulative variance contribution rate of the first five principal components was 94.145%, indicating that these five components represent 94.145% of the information in the original eight operating parameters and can represent the selected sample data as a whole. Therefore, this paper uses the EGT Tp, the high-temperature water air cooler outlet temperature T1, the supercharger speed N, the engine load L, and the high-temperature water cylinder liner outlet temperature T3 for the marine diesel engine EGT prediction study, which reduces data redundancy and improves the diagnostic efficiency of the model.
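The text states that PCA was run in Python but does not name the library. The sketch below is an assumption: it standardizes the parameters, eigen-decomposes their correlation matrix with NumPy, and picks the smallest number of components whose cumulative contribution reaches a target such as 95%. The data are synthetic (eight correlated columns driven by three latent factors), not the engine data.

```python
import numpy as np


def pca_explained_variance(X: np.ndarray):
    """Eigen-decomposition PCA on standardized data.

    Returns eigenvalues (descending), explained variance ratios, and loadings
    (columns are principal component directions).
    """
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)    # standardize each parameter
    cov = np.cov(Z, rowvar=False)                        # equals the correlation matrix of X
    eigvals, eigvecs = np.linalg.eigh(cov)               # symmetric matrix, ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return eigvals, eigvals / eigvals.sum(), eigvecs


def n_components_for(ratios: np.ndarray, target: float = 0.95) -> int:
    """Smallest number of components whose cumulative contribution reaches target."""
    cumulative = np.cumsum(ratios)
    return min(int(np.searchsorted(cumulative, target)) + 1, len(ratios))


rng = np.random.default_rng(3)
base = rng.normal(size=(7200, 3))
mixed = base @ rng.normal(size=(3, 5)) + 0.05 * rng.normal(size=(7200, 5))
X = np.column_stack([base, mixed])                       # 8 correlated synthetic columns
eigvals, ratios, loadings = pca_explained_variance(X)
k = n_components_for(ratios, 0.95)
print(np.cumsum(ratios).round(4), k)
```

An eigenvalue cutoff (such as the 0.5 used above) can be applied to `eigvals` in the same way as the cumulative-contribution rule.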

3.3. Mahalanobis Distance Screening for Outliers

The Mahalanobis distance measures the distance between multivariate data points while taking into account the correlation between dimensions. In this experiment, it was used to filter outliers, i.e., data points that differ significantly from the others. For the data after PCA dimensionality reduction, the Mahalanobis distance between the EGT and the other four characteristic parameters was calculated. The formula is shown in (8).

In the formula, DMH is the computed Mahalanobis distance, xi and yi are column vectors, and S−1 is the inverse of the covariance matrix.

Then, a chi-square test was used to filter EGT outliers: with 4 degrees of freedom for the sample data set, the critical value of the Mahalanobis distance was determined at a significance level of 0.005. The formula is shown in (9).

In the formula, the quantities are the number of degrees of freedom, the observed value of each cell, and the hypothesized (expected) probability of each cell.

From the chi-square distribution with 4 degrees of freedom, the critical value was calculated to be 14.86026, so points with a Mahalanobis distance greater than 14.86026 were considered outliers. Screening with the Mahalanobis distance removed a total of 80 outliers from the sample data, eliminating inaccurate and unrealistic samples caused by the ship’s particular working environment and improving the authenticity of the failure prediction.
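The screening can be sketched as below. Two assumptions are made: the distance is measured from each sample to the sample mean (a common outlier-screening choice), and the squared distance is compared with the χ² critical value, since it is the squared Mahalanobis distance of multivariate normal data that follows a χ² distribution with 4 degrees of freedom. The critical value 14.86026 is hard-coded from the text; with SciPy, `scipy.stats.chi2.ppf(0.995, 4)` returns the same value. The data are synthetic.

```python
import numpy as np

CHI2_CRITICAL_DF4_P005 = 14.86026   # chi-square critical value, 4 degrees of freedom, alpha = 0.005


def mahalanobis_squared(X: np.ndarray) -> np.ndarray:
    """Squared Mahalanobis distance of each row of X from the sample mean."""
    diff = X - X.mean(axis=0)
    S_inv = np.linalg.inv(np.cov(X, rowvar=False))      # inverse covariance matrix S^-1
    return np.einsum("ij,jk,ik->i", diff, S_inv, diff)   # (x - mu)^T S^-1 (x - mu) per row


def screen_outliers(X: np.ndarray, critical: float = CHI2_CRITICAL_DF4_P005) -> np.ndarray:
    """Boolean mask: True where the squared distance exceeds the chi-square critical value."""
    return mahalanobis_squared(X) > critical


rng = np.random.default_rng(4)
X = rng.normal(size=(7200, 4))          # 4 features -> 4 degrees of freedom
X[10] = [8.0, -8.0, 8.0, -8.0]          # an injected gross outlier
mask = screen_outliers(X)
print(mask.sum(), mask[10])
```

For Gaussian data, roughly 0.5% of the rows are expected to exceed the critical value by chance, so the mask flags the injected outlier plus a small number of tail points.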

To address the discontinuity in the time series after outlier removal, the removed points were filled using multi-order Lagrange interpolation. The formula is shown in (10).

In the formula, xt is the missing value at time t, n1 is the number of preceding (forward) steps used, and n2 is the number of following (backward) steps used.
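Equation (10) is not reproduced here. As a sketch, assuming the standard Lagrange interpolating polynomial through the n1 valid samples before and the n2 valid samples after each removed point, the gap filling can be written as follows; `fill_missing`, `lagrange_fill`, and their defaults are hypothetical.

```python
import numpy as np


def lagrange_fill(times, values, t: float) -> float:
    """Evaluate the Lagrange polynomial through (times, values) at t."""
    total = 0.0
    for j, (tj, yj) in enumerate(zip(times, values)):
        basis = 1.0
        for m, tm in enumerate(times):
            if m != j:
                basis *= (t - tm) / (tj - tm)
        total += yj * basis
    return total


def fill_missing(series: np.ndarray, n1: int = 2, n2: int = 2) -> np.ndarray:
    """Replace NaNs using up to n1 valid points before and n2 valid points after each gap."""
    filled = series.astype(float).copy()
    valid = np.flatnonzero(~np.isnan(series))
    for t in np.flatnonzero(np.isnan(series)):
        before = valid[valid < t][-n1:]
        after = valid[valid > t][:n2]
        nodes = np.concatenate([before, after])
        if len(nodes) == 0:
            continue                             # nothing to interpolate from
        filled[t] = lagrange_fill(nodes, series[nodes], t)
    return filled


egt = np.array([400.0, 402.0, np.nan, 406.0, 408.0])   # one removed outlier at t = 2
print(fill_missing(egt))                                # linear trend, so the gap is filled with about 404
```

Keeping n1 and n2 small matters: high-order Lagrange polynomials through many points can oscillate strongly between nodes (Runge’s phenomenon).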

3.4. Indicators for Model Evaluation

Evaluating the model helps determine its accuracy and effectiveness. First, evaluation metrics allow the generalization of different models to be compared to determine which performs better; second, they guide the progressive improvement of model performance. This paper selects four prediction evaluation metrics commonly used in machine learning: mean squared error (MSE), root-mean-squared error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE). The formulas are shown in (11).

In the formulas, the quantities compared are the predicted value and the actual value of each sample.

MSE measures the average squared difference between the predicted and actual values. RMSE is the square root of MSE, so it is expressed in the same units as the data. MAE is the average absolute difference between the predicted and actual values; because it uses absolute values, positive and negative errors cannot cancel each other out, so it accurately measures the size of the true prediction error. MAPE expresses the error relative to the actual values, which is why it is widely used as a statistical indicator of forecast accuracy, for example in time-series forecasting.
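Formula (11) is not reproduced here. Using the standard definitions of the four metrics, a sketch is shown below; reporting MAPE as a percentage is an assumption, and the function name and toy values are illustrative.

```python
import numpy as np


def evaluate(y_true, y_pred) -> dict:
    """MSE, RMSE, MAE, and MAPE (in percent) between actual and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = np.mean(err ** 2)
    return {
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "MAE": np.mean(np.abs(err)),
        "MAPE": 100.0 * np.mean(np.abs(err / y_true)),   # undefined if any actual value is 0
    }


scores = evaluate([400.0, 410.0, 420.0], [402.0, 408.0, 420.0])
print(scores)
```

Because EGT values are far from zero, MAPE is well defined here; for quantities that can approach zero it becomes unstable.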

3.5. Hybrid Neural Network Prediction Models

The size of the HNN input layer depends on the number of input parameters; with five input parameters, the input layer has five neurons. The model predicts only the EGT, so the output layer has one neuron. The HNN consists of a CNN module, a BiLSTM module, and an Attention module: the main hyperparameters of the CNN are those of its convolutional and pooling layers; the main hyperparameters of the BiLSTM are num layers, input size, seq len, batch size, epochs, learning rate, loss, and optimizer; and the main hyperparameter of the Attention layer is dropout.
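The text lists seq len and input size among the BiLSTM hyperparameters but does not describe how training samples are built. A common approach, assumed here, is to slide a window of seq len consecutive time steps over the five input parameters and use the EGT at the next step as the target. The helper name, the window length of 10, and the placement of EGT in column 0 are hypothetical.

```python
import numpy as np


def make_windows(data: np.ndarray, seq_len: int, target_col: int = 0):
    """Turn a (T, n_features) series into supervised samples.

    X has shape (T - seq_len, seq_len, n_features): each sample is seq_len consecutive steps.
    y has shape (T - seq_len,): the target column at the step right after each window.
    """
    T = data.shape[0]
    X = np.stack([data[i:i + seq_len] for i in range(T - seq_len)])
    y = data[seq_len:, target_col]
    return X, y


series = np.arange(7200 * 5, dtype=float).reshape(7200, 5)   # placeholder columns: Tp, T1, N, L, T3
X, y = make_windows(series, seq_len=10)
print(X.shape, y.shape)  # (7190, 10, 5) (7190,)
```

The last axis of X (size 5) is what the input size hyperparameter refers to in this layout, and the middle axis is the sequence length.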

These parameters were tuned over multiple runs and selected using the control variable method, changing one parameter at a time. MAE, RMSE, MSE, and MAPE were used as the evaluation indicators for the combined prediction model, the optimal parameters were selected as listed in Table 2, and the optimal CNN-BiLSTM-Attention model was trained.

3.6. Analysing the Predicted Results

The processed sample data were input into the trained prediction model, and the prediction results are shown in Figure 7.

As shown in Figure 7, the diesel engine operates fairly stably over this period: the predicted and actual EGT differ little, the fluctuations stay within the engine’s normal operating range, and the predicted and actual curves agree well and largely overlap. The evaluation indicators for the prediction results are presented in Table 3. The results show that the proposed CNN-BiLSTM-Attention prediction model achieves high prediction accuracy.

To verify that the proposed HNN combined prediction model is effective and reliable in practical parameter prediction, this paper compares it experimentally with five prediction models: RNN, LSTM, BiLSTM, CNN-LSTM, and CNN-BiLSTM. Before the comparison, every model was given the same processed data set, and the hyperparameters of the other models were set to the same values as those of the CNN-BiLSTM-Attention model. The input parameters and experimental conditions were kept as consistent as possible so that prediction accuracy, training speed, and other properties could be compared fairly. Comparing the models under different evaluation metrics reveals their strengths and weaknesses in actual prediction work. A comparison of the predicted results of the different models is shown in Figure 8.

The indicators used to evaluate the different forecasting models are presented in Table 4.

As shown in Figure 8, the CNN-BiLSTM-Attention HNN prediction model fits the actual sample data better and more closely than the other prediction models. As Table 4 shows, the CNN-BiLSTM-Attention model has the lowest errors among the compared models, indicating a small discrepancy between its predictions and the actual values. In summary, the CNN-BiLSTM-Attention model has high prediction accuracy and can meet the basic requirements for predicting the EGT time series of marine diesel engines.

4. Fault Early Warning Research

4.1. Setting of the Monitoring Index

The EGT of a ship’s diesel engine depends on several factors. In this paper, feature selection identifies the five characteristic parameters most correlated with the EGT, which are used as the inputs of the HNN to obtain the predicted EGT. A real-time EGT monitoring method is proposed to rapidly assess the integrity, stability, and accuracy of the ship’s diesel engine operation and to enable fault warning. The proposed EGT fault warning method is based on a function-mapping metric of the Mahalanobis distance: it first quantifies the similarity between the predicted and actual values by calculating the Mahalanobis distance between them. The formula is given in Equation (12).

In the formula, DMH is the computed Mahalanobis distance, xi and yi are column vectors, and S−1 is the inverse of the covariance matrix.

Because the magnitude of the Mahalanobis distance is highly uncertain, a function mapping is used to bound it within a fixed range. This paper constructs a monitoring indicator function MF, as in Equation (13), that maps the Mahalanobis distance to the interval [0, 1], so that the diesel engine operating condition can be monitored accurately and intuitively. Based on the monitoring indicator MF, the operating condition of the diesel engine can be monitored more intuitively and effectively by setting an alarm value and a threshold for the EGT: the alarm value provides a reference for possible abnormal operation of the ship’s diesel engine, and the threshold provides a reference for its failure.

In the formula, the adjustment factor is calculated according to Equation (14).

In Equation (14), the adjustment factor is determined from the mean Mahalanobis distance and MFQ, the confidence factor for normal EGT; in this paper, MFQ = 0.95.

According to Equation (13), the Mahalanobis distance calculated between the predicted and actual EGT values serves as the input, with domain $[0, +\infty)$, while MF takes values in [0, 1]. The output MF is positively correlated with the health status of the monitored EGT, so this mathematical conversion allows changes in the EGT state to be monitored consistently and accurately.
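Equations (13) and (14) are not reproduced in this text, so the sketch below assumes one plausible mapping with the properties described: an exponential decay MF = exp(-αD), with the adjustment factor α chosen so that the mean normal-operation distance maps exactly to $MF_Q$. The functional form, the symbol α, and the helper names are assumptions for illustration, not the paper’s equations.

```python
import math


def adjustment_factor(mean_distance, mf_q=0.95):
    """Hypothetical adjustment factor alpha, chosen so that MF(mean_distance) == mf_q."""
    if mean_distance <= 0 or not 0.0 < mf_q < 1.0:
        raise ValueError("need mean_distance > 0 and 0 < mf_q < 1")
    return -math.log(mf_q) / mean_distance


def monitoring_index(distance, alpha):
    """Assumed mapping MF = exp(-alpha * D).

    Maps D in [0, inf) into (0, 1]: MF = 1 at D = 0 and decreases as the predicted
    and actual EGT drift apart, so a larger MF means a healthier state.
    """
    if distance < 0:
        raise ValueError("a Mahalanobis distance cannot be negative")
    return math.exp(-alpha * distance)


alpha = adjustment_factor(mean_distance=1.8)   # 1.8 is illustrative, not from the paper
for d in (0.0, 1.8, 4.0, 8.0):
    print(d, round(monitoring_index(d, alpha), 3))
```

Calibrating α on the mean distance ties the index scale to the data: a typical normal-operation deviation always reads as $MF_Q$, regardless of the raw magnitude of the distances.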

After obtaining the monitoring index MF of the EGT, this paper characterizes the health of the marine diesel engine EGT by setting a warning value and a threshold for the monitoring index: the warning value serves as a reference for a possibly abnormal EGT, and the threshold serves as the criterion for an abnormal EGT. Both were set using a statistical criterion based on the mean and standard deviation of the Mahalanobis distance. First, the distances $D_{MH}$ calculated under normal operation of the marine diesel engine were tested for normality. As shown in Figure 9, the Mahalanobis distance between the predicted and actual values lies between 0 and 8, and most values lie between 0 and 4.

Then, the warning value and the threshold of the Mahalanobis distance are determined using Equation (15), which is based on the mean and the standard deviation of the Mahalanobis distance.

Finally, the warning value $MF_w$ and the threshold $MF_f$ of the monitoring index are calculated from the Mahalanobis-distance warning value and threshold using Equation (16).
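Because the exact criterion in Equation (15) is not legible in this text, the sketch below assumes distance limits of the form mean + n·σ with hypothetical multipliers (n = 2 for the warning value, n = 3 for the threshold), and then applies an assumed exponential mapping MF = exp(-αD) to turn those limits into $MF_w$ and $MF_f$ in the spirit of Equation (16). Neither the multipliers nor the mapping are taken from the paper, and the input distances are synthetic.

```python
import numpy as np


def distance_limits(normal_distances, n_warn=2.0, n_fail=3.0):
    """Warning and failure limits on D_MH as mean + n * std (n_warn, n_fail are hypothetical)."""
    d = np.asarray(normal_distances, dtype=float)
    mu, sigma = d.mean(), d.std(ddof=1)   # sample mean and sample standard deviation
    return float(mu + n_warn * sigma), float(mu + n_fail * sigma)


def to_index(distance, mean_distance, mf_q=0.95):
    """Assumed mapping MF = exp(-alpha * D), with alpha = -ln(mf_q) / mean_distance."""
    alpha = -np.log(mf_q) / mean_distance
    return float(np.exp(-alpha * distance))


# Synthetic stand-in for D_MH under normal operation (not the paper's data).
rng = np.random.default_rng(1)
normal_d = np.abs(rng.normal(1.5, 1.0, size=2000))
d_w, d_f = distance_limits(normal_d)
mean_d = float(normal_d.mean())
mf_w, mf_f = to_index(d_w, mean_d), to_index(d_f, mean_d)
print(f"D_w={d_w:.2f}  D_f={d_f:.2f}  MF_w={mf_w:.3f}  MF_f={mf_f:.3f}")
```

Since MF decreases as the distance grows, the larger distance limit produces the smaller index value, so $MF_f < MF_w$ and a developing fault shows up as the index falling through the warning line before the threshold line.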

The warning value and threshold of the monitoring index obtained by this function construction method allow a clear distinction to be made between the normal and abnormal operating states of the ship’s diesel engine EGT.

Using the method described above, the EGT values predicted by the CNN-BiLSTM-Attention HNN in this article were compared with the actual sample values to evaluate the monitoring index status. The monitoring indices of the sample data and the EGT warning value and threshold under healthy operating conditions were calculated; the warning value and threshold are shown in Table 5.

A graph of the EGT monitoring index for the diesel engine in normal operation is shown in Figure 10.

Figure 10 shows that, under normal working conditions, the monitoring index computed from the EGT predictions remains above the monitoring index threshold throughout. Although the monitoring index crossed the warning value at some points in time, it never crossed the threshold, so no marine diesel engine failure alarm occurred. During the monitoring interval shown in the figure, the monitoring index fluctuated smoothly within the normal range most of the time, indicating that the vessel was in a normal sailing condition. This shows that the proposed fault prediction and early warning method can monitor the operating state of the ship’s diesel engine EGT.

4.2. Experimental Verification of the Fault Warning Function

The model data are actual operating data of the ship’s diesel engine, and fault data are difficult to obtain during the actual operation of marine diesel engines. Therefore, to validate the fault warning approach presented in this article, the data set was manually adjusted in a linear manner to simulate the state data of a ship’s diesel engine under a fault. The EGT of a ship’s diesel engine is mainly affected by combustion efficiency, load, intake air temperature, and the effectiveness of the cooling system. This paper simulates an abnormally high EGT caused by clogging of the water pipe in the high-temperature water air cooler, which prevents the air cooler from dissipating heat effectively and reduces its cooling effect. The monitoring index diagram for the abnormal increase in EGT is shown in Figure 11.
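A minimal sketch of such a manual linear adjustment, assuming it adds a linear ramp to the recorded EGT from a chosen onset sample onward. The ramp shape, the slope, the synthetic EGT series, and the name `inject_linear_drift` are assumptions; only the onset at sample 1100 follows the description of Figure 11.

```python
import numpy as np


def inject_linear_drift(egt, onset, slope):
    """Return a copy of `egt` with a linear ramp added from sample index `onset` onward.

    `slope` is the hypothetical EGT rise per sample (deg C); samples before `onset`
    are left unchanged, and the input array is not modified.
    """
    faulty = np.asarray(egt, dtype=float).copy()
    n_after = len(faulty) - onset
    if n_after > 0:
        faulty[onset:] += slope * np.arange(1, n_after + 1)
    return faulty


# Illustrative use: synthetic "normal" EGT around 380 deg C, drift injected after sample 1100.
rng = np.random.default_rng(2)
normal_egt = 380.0 + rng.normal(scale=2.0, size=1400)
faulty_egt = inject_linear_drift(normal_egt, onset=1100, slope=0.2)
print(faulty_egt[1099] - normal_egt[1099], faulty_egt[-1] - normal_egt[-1])
```

Feeding the adjusted series as the "actual" EGT while the model keeps predicting normal behavior makes the residual, and hence the Mahalanobis distance, grow steadily after the onset.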

As shown in Figure 11, the high-temperature water air cooler blockage simulated by manually adjusting the data set causes the EGT to rise abnormally until the diesel engine overheats and a shutdown fault occurs. Before the fault is introduced (sample sequence before 1100), the ship’s diesel engine EGT is in a relatively stable operating condition. The monitoring index fluctuates over sequence points 0–50, but the fluctuations stay above the monitoring index threshold and, after a short time, return above the warning value, so no fault alarm occurs during this period. From 50 to 1100, the monitoring index fluctuates steadily and rarely crosses the warning value, indicating that the ship’s diesel engine is operating stably.

After the fault is artificially introduced (sample sequence after 1100), the monitoring index suddenly fluctuates strongly and becomes highly unstable. After crossing the warning value, it does not return to the normal working range but falls directly below the monitoring index threshold, fluctuates below the threshold for some time, and then drops sharply, corresponding to the shutdown of the diesel engine due to excessive temperature.

If the monitoring index crosses the warning value line but not the threshold line, an early failure warning for the diesel engine is generated. If the monitoring index remains below the threshold line, an exhaust temperature abnormality fault alarm is generated. These results show that the proposed fault warning method can monitor and evaluate the exhaust temperature of the marine diesel engine in real time and can also issue an early warning of an exhaust temperature abnormality before it fully develops. The method shows high precision and sensitivity and can largely meet the needs of modern marine diesel engine exhaust temperature fault warning.
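The two alarm rules above can be sketched as a per-sample classifier over the monitoring index. The limit values in the example are illustrative (the paper’s values are listed in Table 5), and the `persist` parameter is a hypothetical debounce reflecting the requirement that the index *remain* below the threshold before a fault alarm is raised.

```python
NORMAL, EARLY_WARNING, FAULT_ALARM = "normal", "early warning", "fault alarm"


def classify(mf_series, mf_warn, mf_fail, persist=1):
    """Label each monitoring-index sample, given MF_w (mf_warn) and MF_f (mf_fail).

    MF >= mf_warn                      -> normal
    mf_fail <= MF < mf_warn            -> early failure warning
    MF < mf_fail for `persist` samples -> EGT abnormality fault alarm
    Samples below mf_fail before `persist` consecutive samples have accumulated are
    labeled as early warnings. `persist` is a hypothetical debounce parameter.
    """
    if not mf_fail < mf_warn:
        raise ValueError("expected mf_fail < mf_warn (MF falls as health worsens)")
    if persist < 1:
        raise ValueError("persist must be at least 1")
    labels, run = [], 0
    for mf in mf_series:
        run = run + 1 if mf < mf_fail else 0   # consecutive samples below the threshold
        if run >= persist:
            labels.append(FAULT_ALARM)
        elif mf < mf_warn:
            labels.append(EARLY_WARNING)
        else:
            labels.append(NORMAL)
    return labels


# Illustrative limits only; the paper's values are listed in Table 5.
print(classify([0.97, 0.90, 0.80, 0.80, 0.96], mf_warn=0.93, mf_fail=0.85, persist=2))
```

A larger `persist` suppresses alarms from brief dips such as those over sequence points 0–50, at the cost of a slightly later fault alarm.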

5. Conclusions

This article presents an approach to the prediction of EGT in marine diesel engines using a CNN-BiLSTM-Attention prediction model. The main conclusions are as follows:

Normalization is used in data processing to eliminate the influence of the differing units and orders of magnitude of the prediction parameters. PCA is used to extract the characteristic parameters that most strongly influence the diesel EGT, which simplifies the model structure and increases the convergence rate and computational efficiency of the model. The Mahalanobis distance outlier screening method takes multidimensional data into account, identifies outliers adaptively, and improves the precision of outlier detection, thereby improving the quality of the sample data.

The CNN-BiLSTM-Attention prediction model proposed in this article combines a CNN, a BiLSTM, and an attention mechanism. The CNN extracts features from the time-series data; the BiLSTM learns the temporal dependencies of the extracted features; and the attention mechanism assigns weights to the time-series features, which helps capture fault-related features in the sequence. This combination overcomes the limitations of a single neural network, extracts the temporal and spatial features of the ship’s diesel engine EGT more comprehensively, and improves prediction accuracy.

In model comparison experiments, the proposed prediction model was compared with RNN, LSTM, BiLSTM, CNN-LSTM, and CNN-BiLSTM prediction models. According to the prediction evaluation indices, the proposed CNN-BiLSTM-Attention model achieves higher prediction accuracy, indicating that the proposed HNN has certain advantages in time-series prediction.

To address early fault warning, this paper constructs the EGT monitoring index of a ship’s diesel engine by mapping the Mahalanobis distance through a mathematical function, and sets the warning value and threshold of the EGT monitoring index using a statistical criterion based on the mean and standard deviation of the Mahalanobis distance; the warning value is used for early fault warning and the threshold for fault alarms. Fault simulation experiments verified that the proposed method can continuously monitor the operating status of diesel engines and provide a timely warning when abnormal operation occurs, which meets the health management needs of modern marine diesel engines.

To summarize, the CNN-BiLSTM-Attention-based marine diesel engine fault warning model combines high prediction accuracy with an early warning lead time and can provide interpretable fault prediction results. The model captures characteristic patterns and issues a warning before the fault occurs, which makes it possible to arrange repairs or prepare spare parts in advance, reducing downtime and maintenance costs. It therefore has important application value for fault prevention and the health management of marine diesel engines.

In future work, we will not limit our research to a single monitoring parameter but will also study other monitoring parameters, such as the turbocharger EGT, to obtain more complete prediction results for the diesel engine. The choice of prediction method and the length of the prediction horizon also merit further study, with a focus on improving prediction capability for long time series. We will also extend the model with a fault classification function built on fault prediction, so that the fault category is reported together with the fault warning to facilitate subsequent repair and maintenance.