A High-Precision Detection Model of Small Objects in Maritime UAV Perspective Based on Improved YOLOv5

Abstract

1. Introduction

2. Related Work

2.1. Feature Fusion Structure

2.2. Attention Mechanism Module

2.3. Spatial Pyramid Pooling Structure

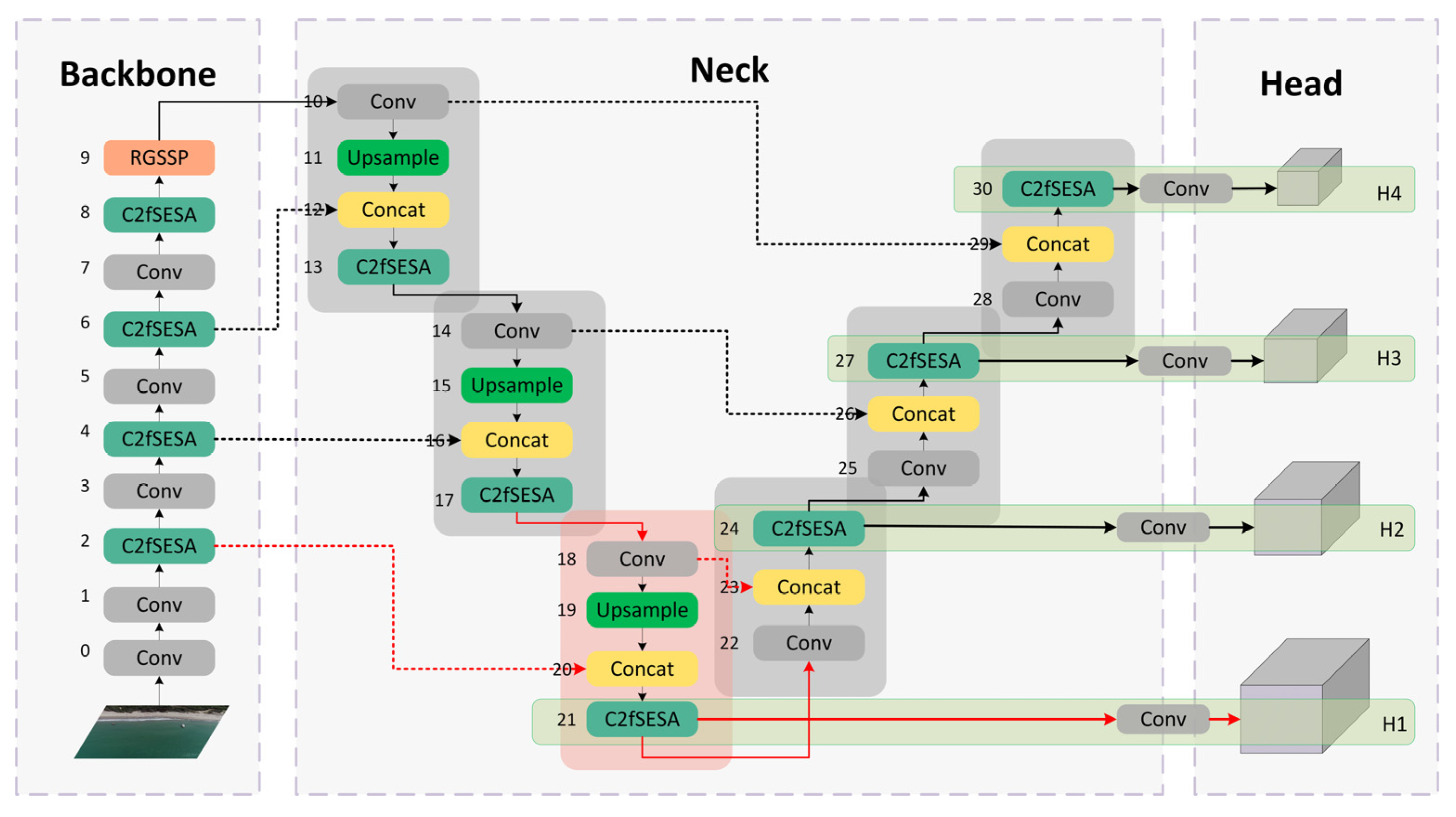

3. Methods: The YOLO-BEV Model

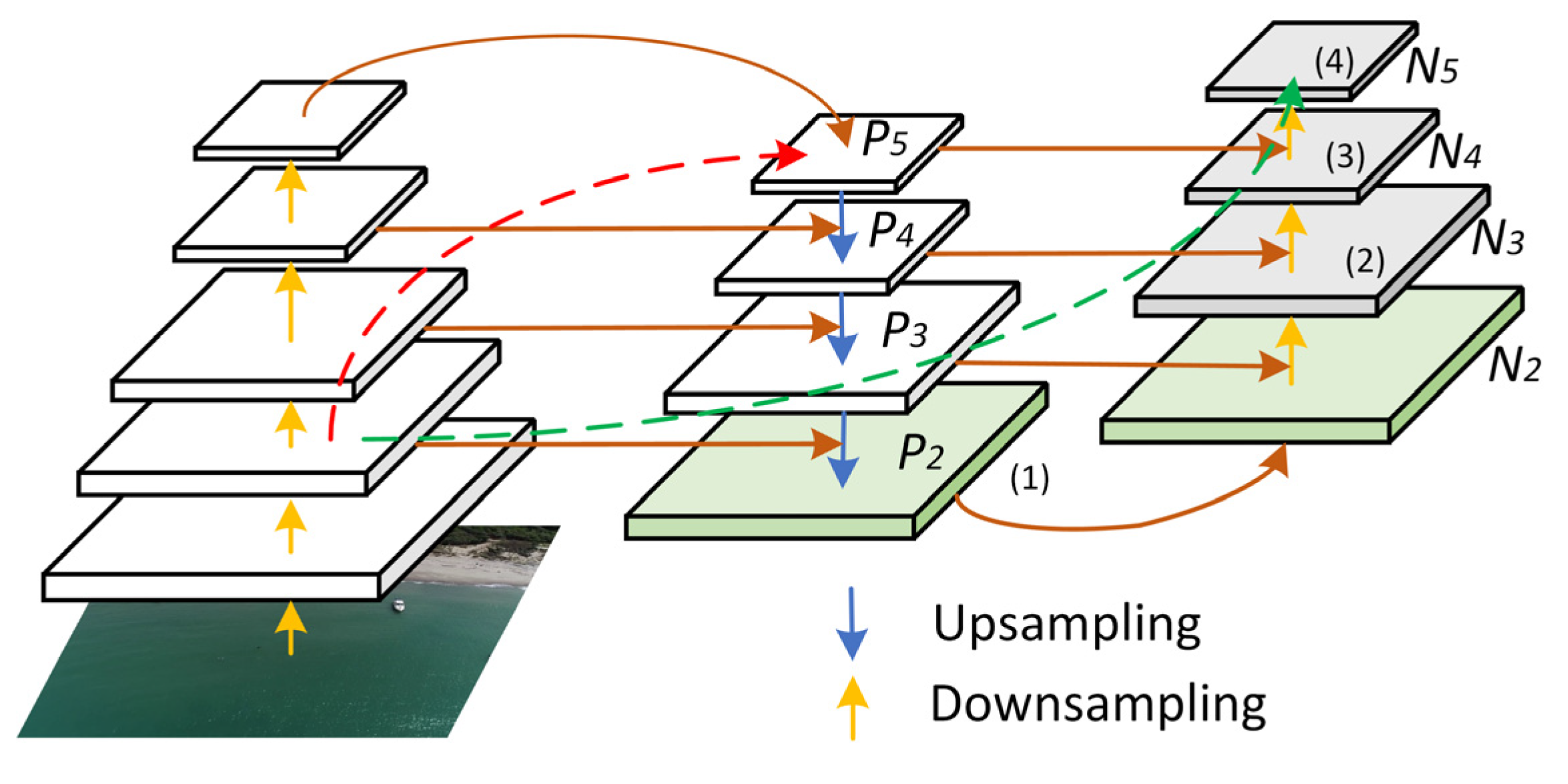

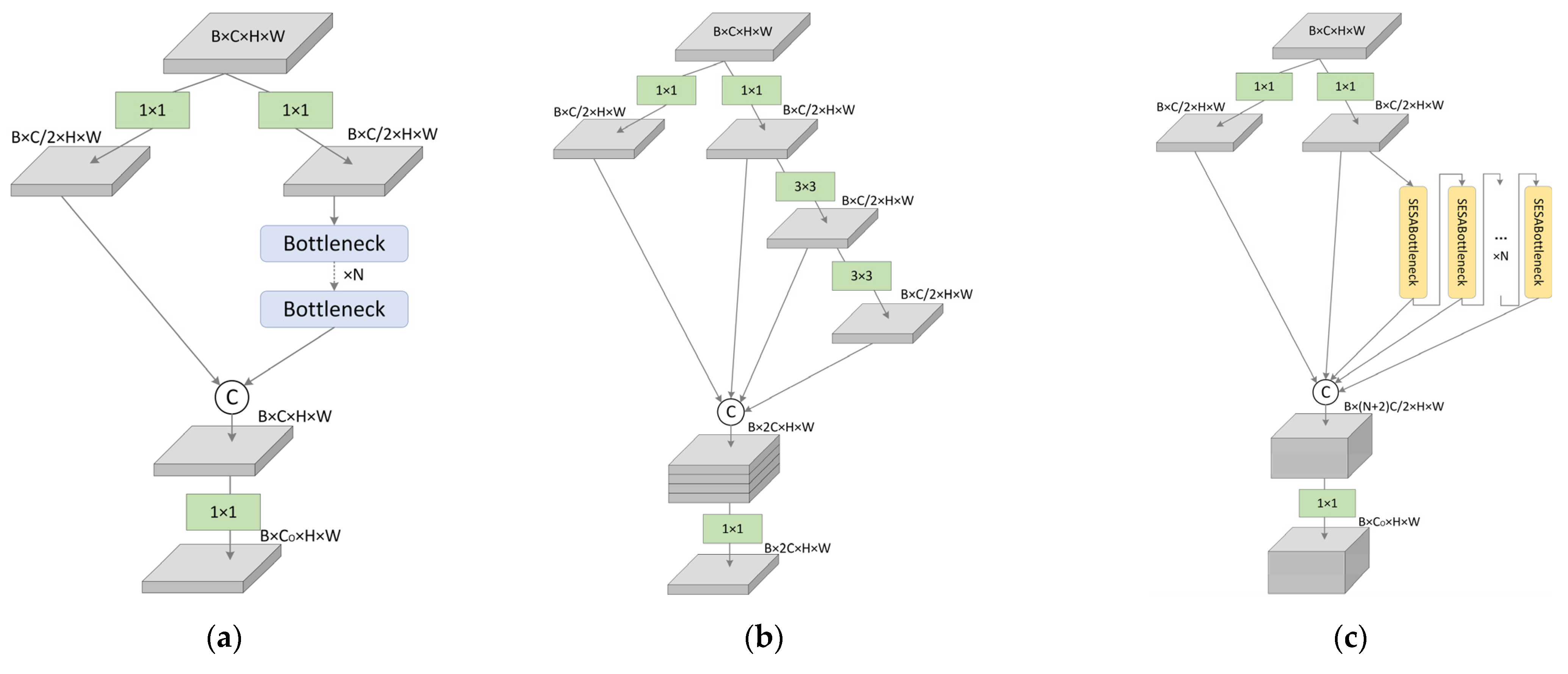

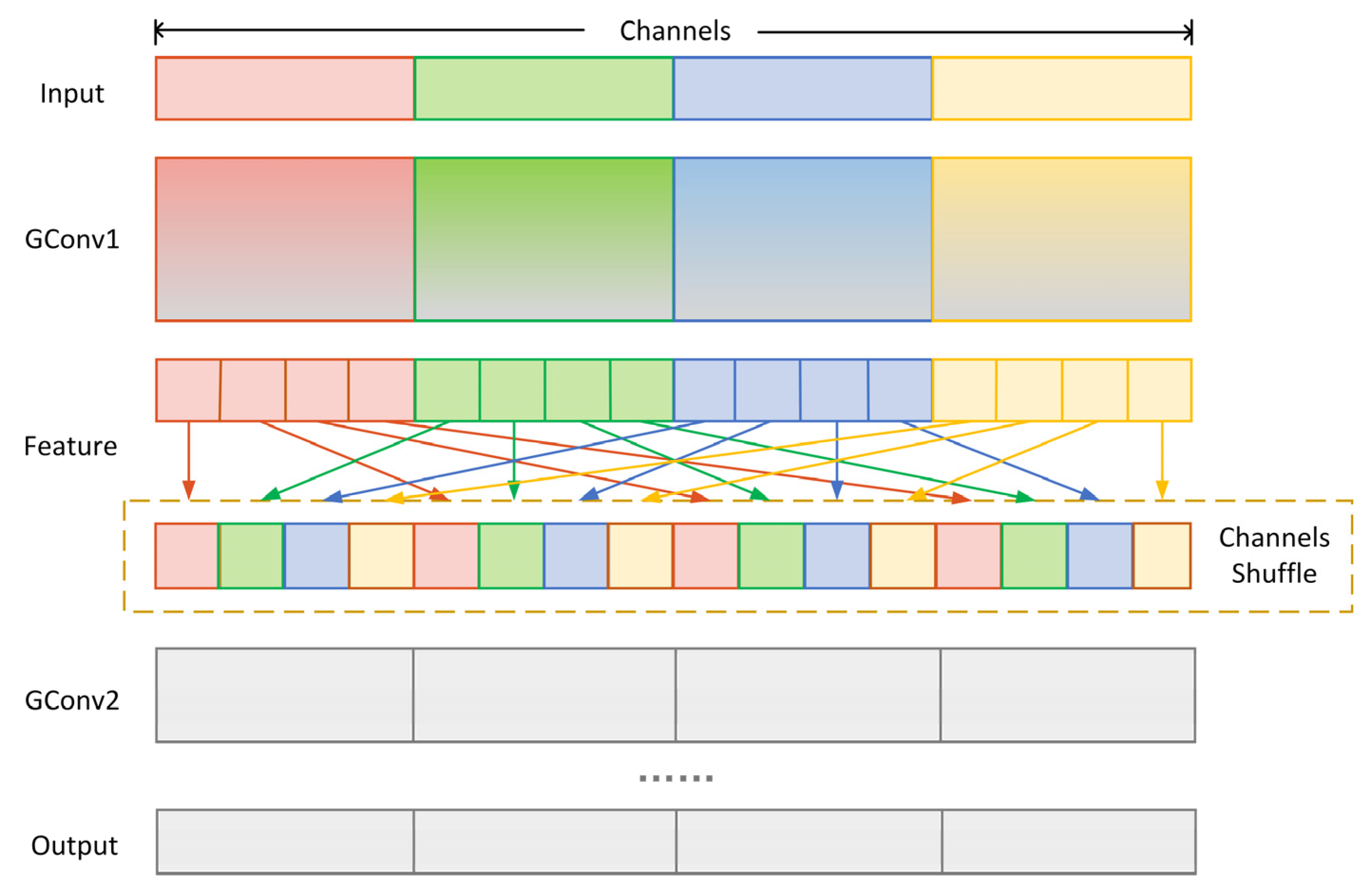

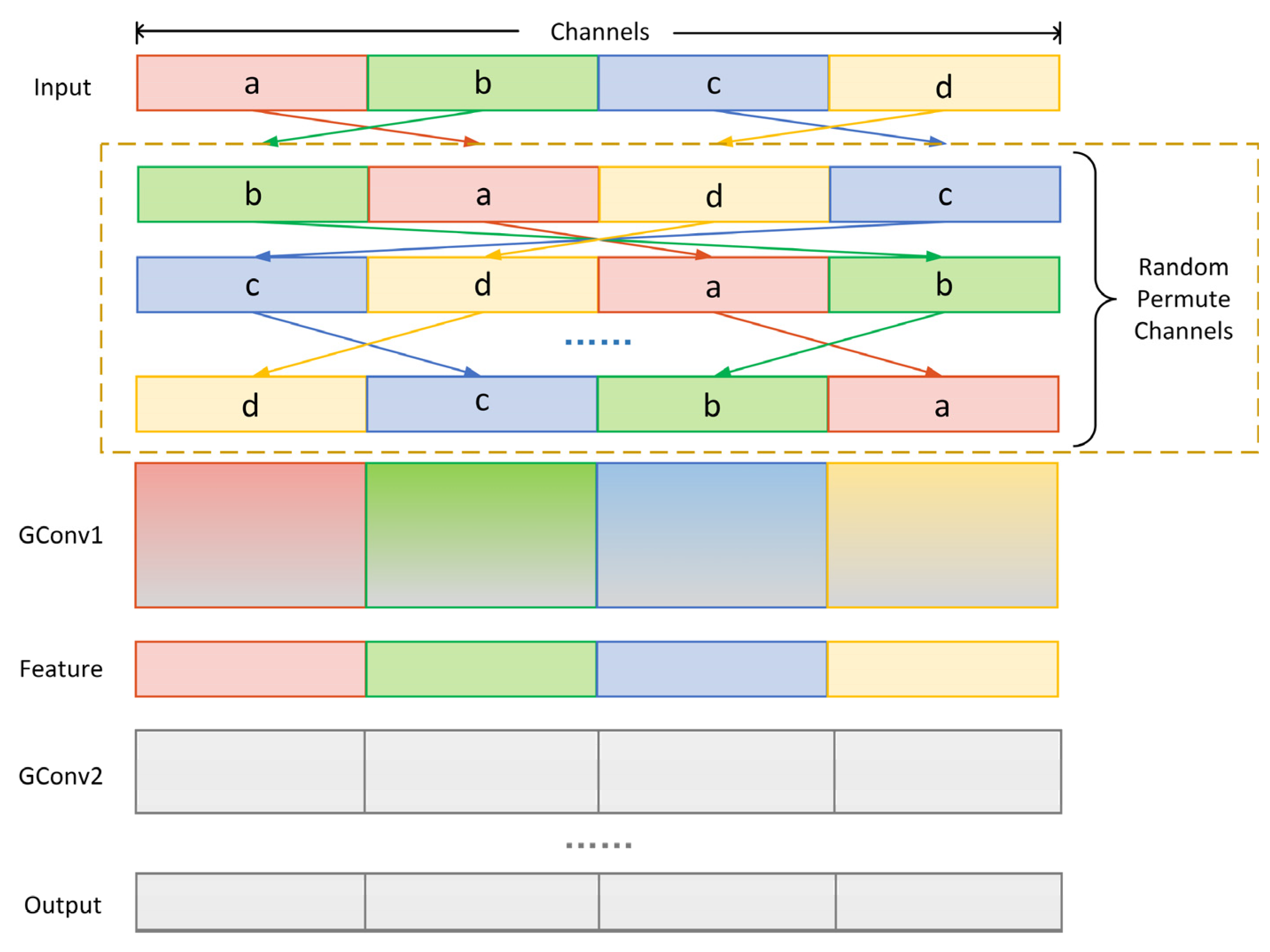

3.1. PAN+ Module

3.2. C2fSESA Module

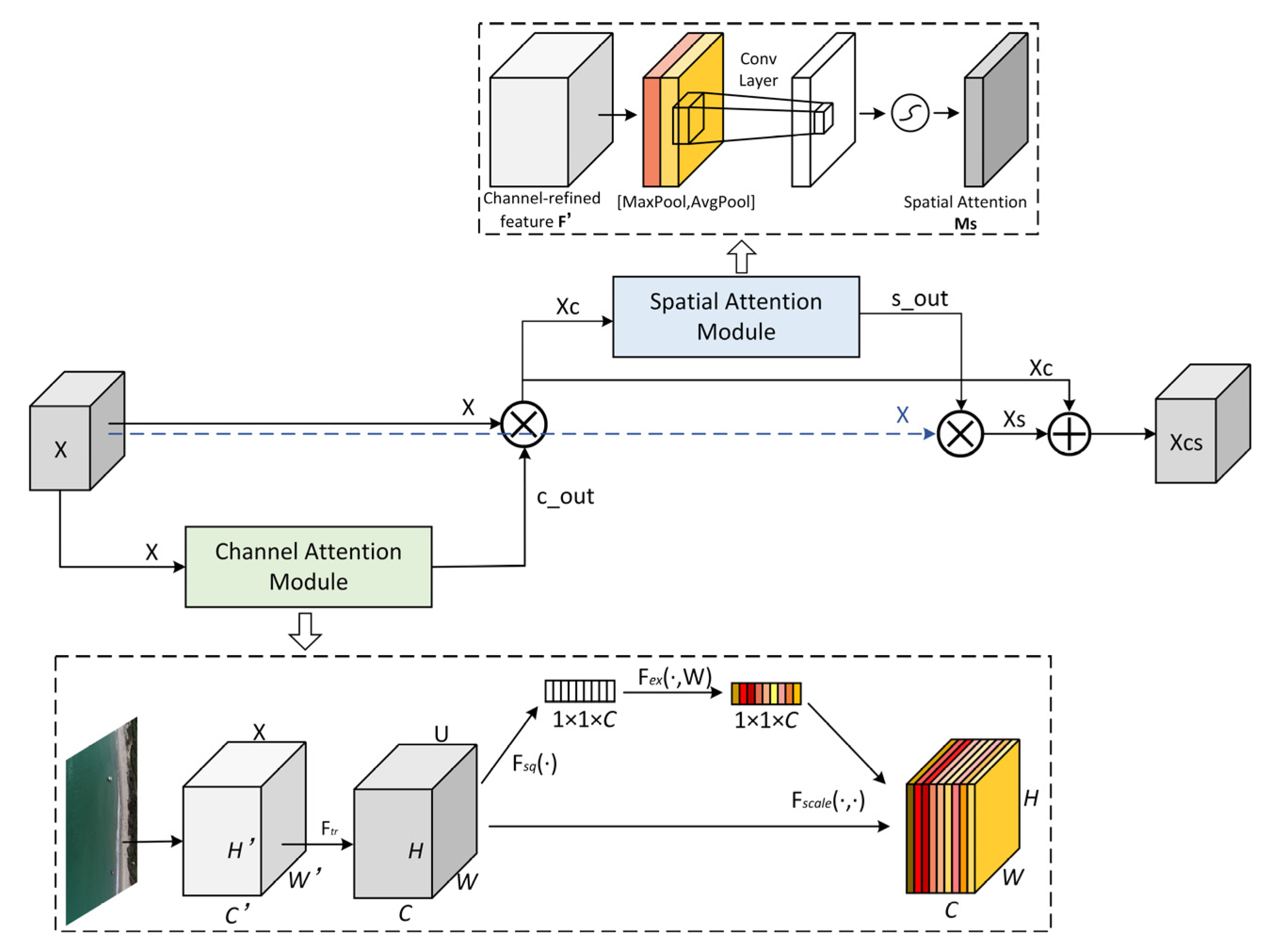

3.2.1. The SESA Attention Mechanism

3.2.2. The C2fSESA Module

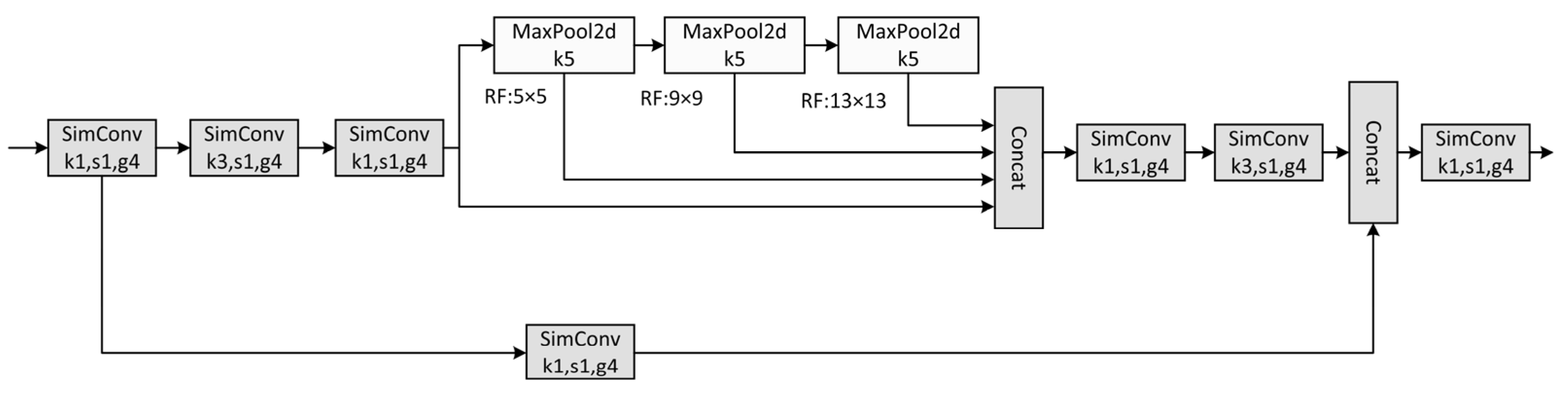

3.3. RGSPP Structure

4. Experiments and Results

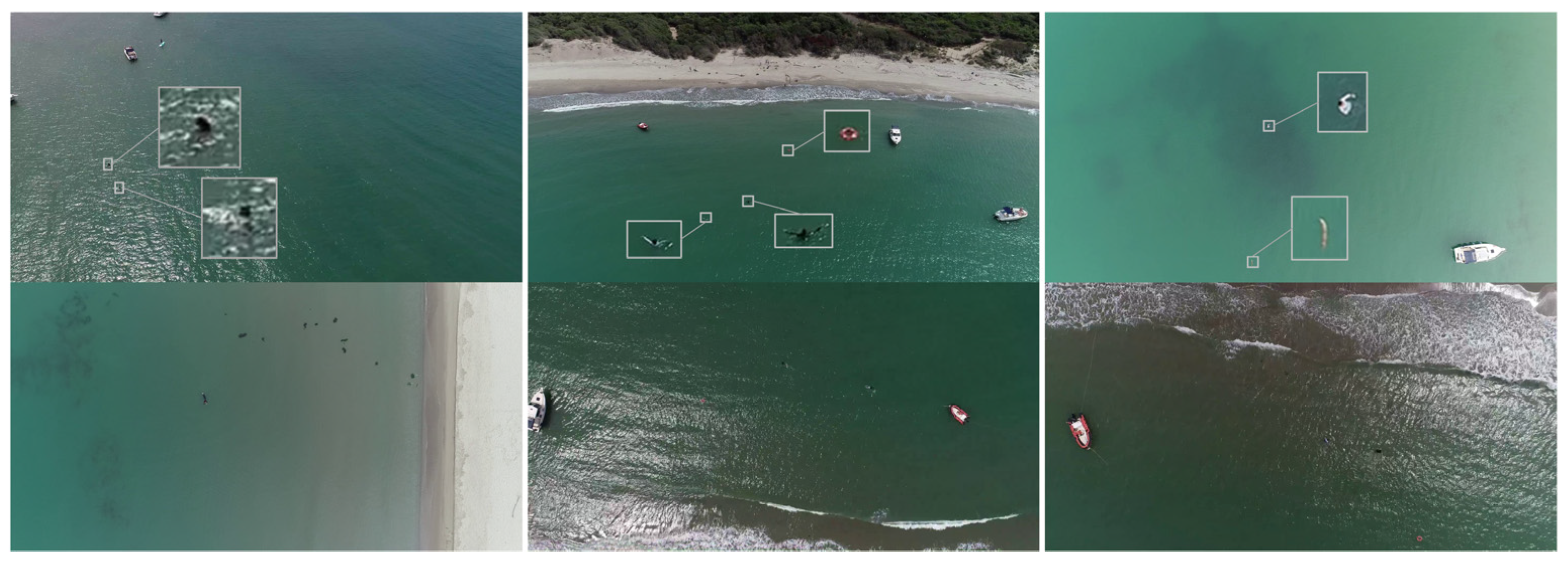

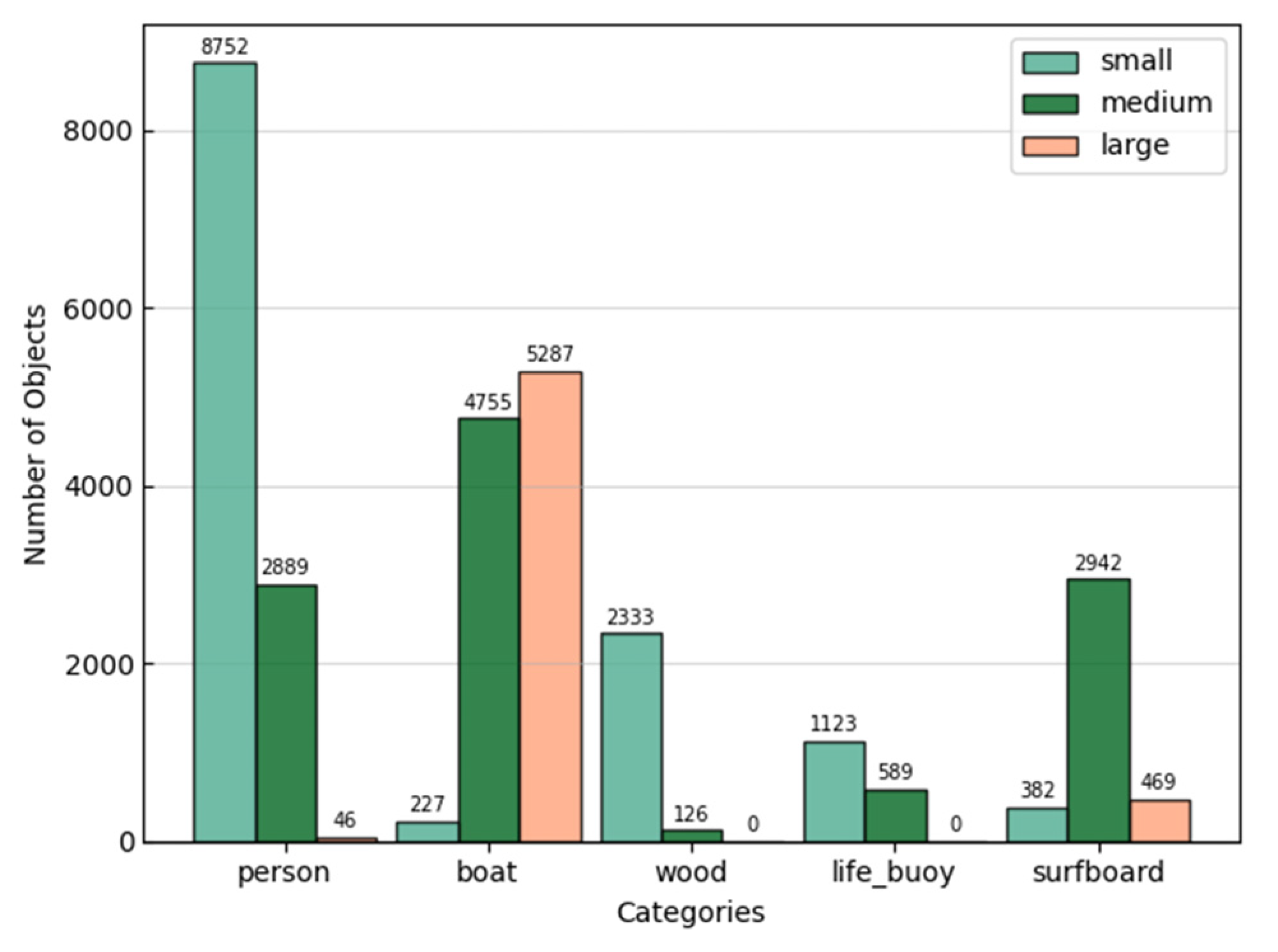

4.1. MOBDrone Dataset Based on an EO Sensor

4.2. The Evaluation Index

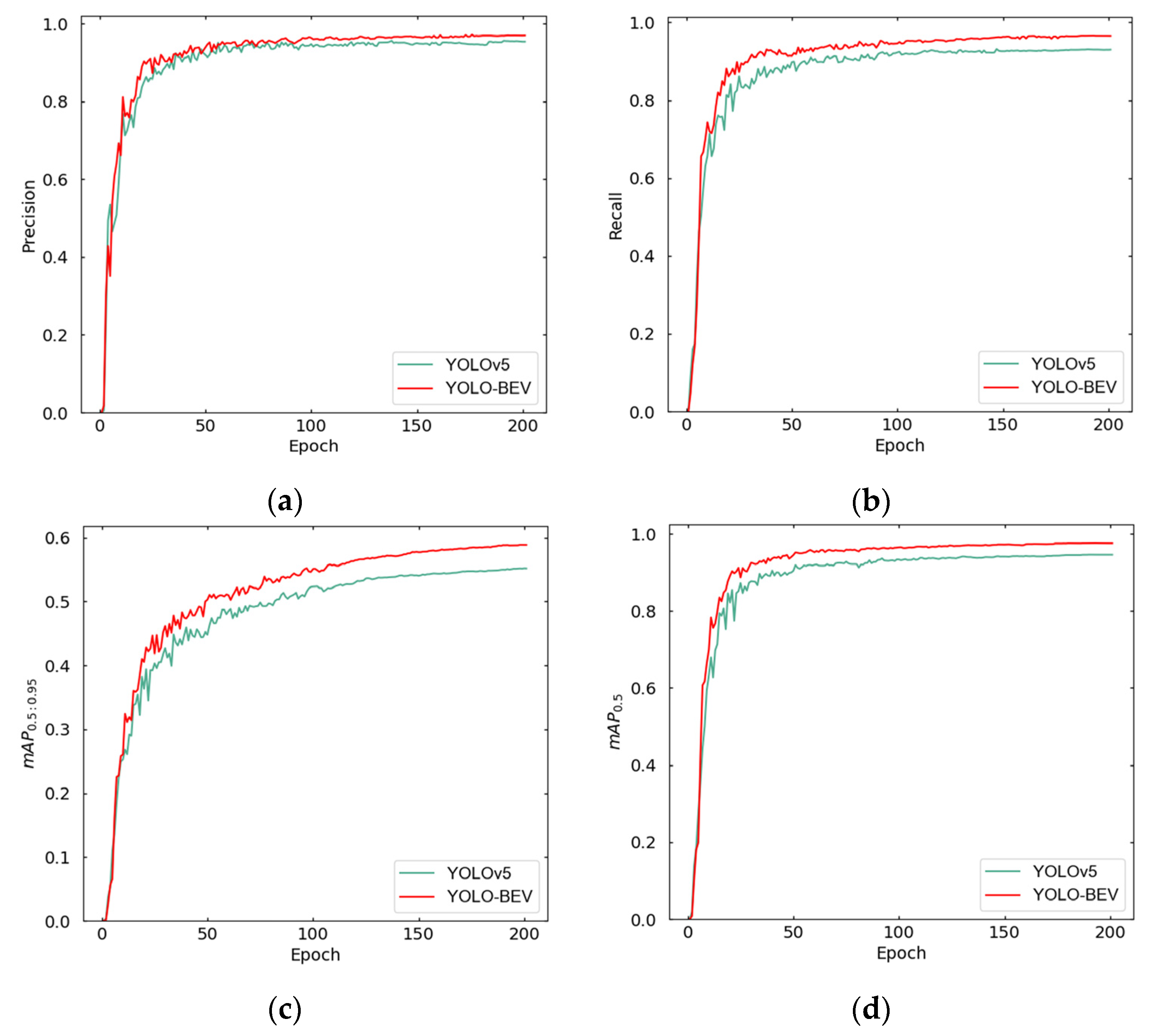

4.3. Performance of the Object-Detection Algorithm

4.3.1. Ablation Experiment

4.3.2. Comparisons of the YOLO-BEV Algorithm with Other Object-Detection Algorithms

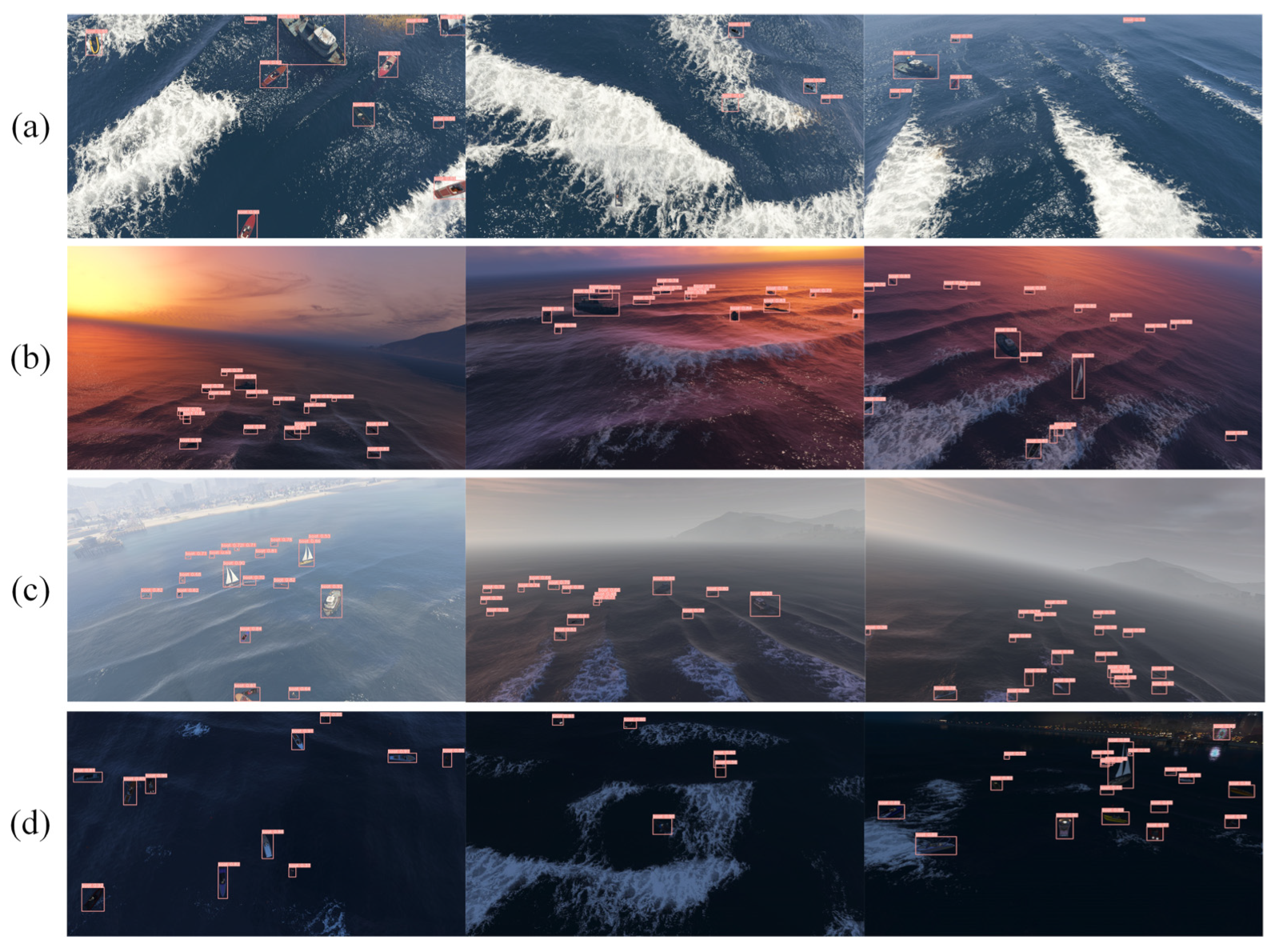

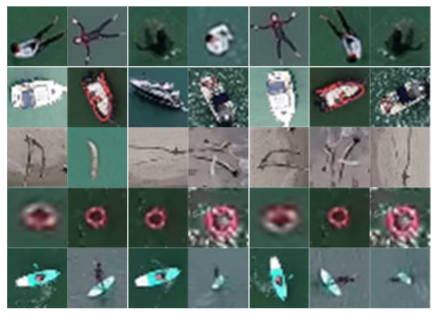

4.4. Visualization of Some Detection Results

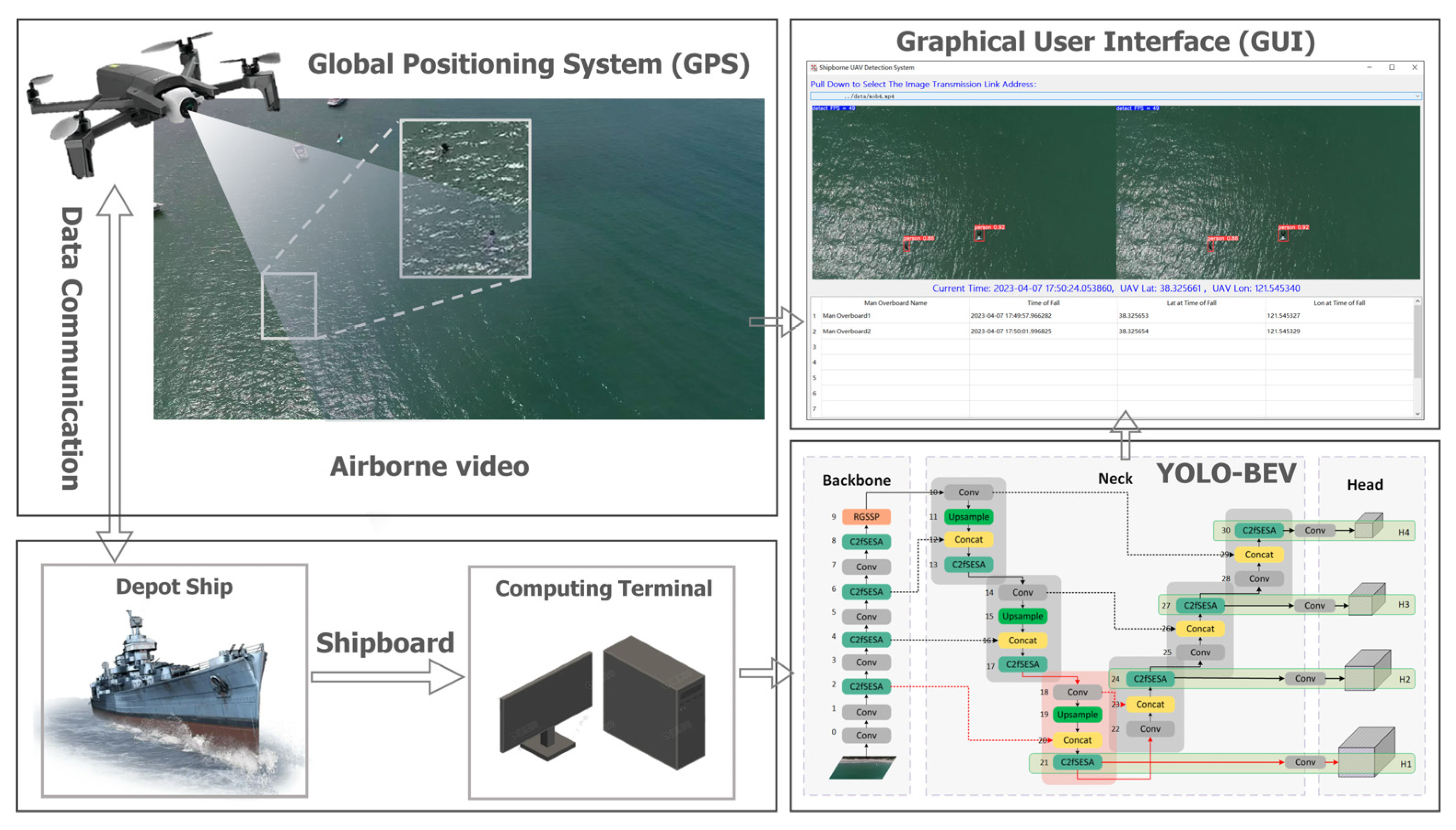

4.5. Architecture of the System

5. Discussion

5.1. Discussion of Results

5.2. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Leira, F.S.; Helgesen, H.H.; Johansen, T.A.; Fossen, T.I. Object Detection, Recognition, and Tracking from UAVs Using a Thermal Camera. J. Field Robot. 2021, 38, 242–267.

2. Chen, X.; Li, Z.; Yang, Y.; Qi, L.; Ke, R. High-Resolution Vehicle Trajectory Extraction and Denoising from Aerial Videos. IEEE Trans. Intell. Transport. Syst. 2021, 22, 3190–3202.

3. Guo, Q.; Liu, J.; Kaliuzhnyi, M. YOLOX-SAR: High-Precision Object Detection System Based on Visible and Infrared Sensors for SAR Remote Sensing. IEEE Sens. J. 2022, 22, 17243–17253.

4. Tan, Y.; Li, G.; Cai, R.; Ma, J.; Wang, M. Mapping and Modelling Defect Data from UAV Captured Images to BIM for Building External Wall Inspection. Autom. Constr. 2022, 139, 104284.

5. Gonçalves, J.A.; Henriques, R. UAV Photogrammetry for Topographic Monitoring of Coastal Areas. ISPRS J. Photogramm. Remote Sens. 2015, 104, 101–111.

6. Lyu, H.; Shao, Z.; Cheng, T.; Yin, Y.; Gao, X. Sea-Surface Object Detection Based on Electro-Optical Sensors: A Review. IEEE Intell. Transport. Syst. Mag. 2023, 15, 190–216.

7. Stojnić, V.; Risojević, V.; Muštra, M.; Jovanović, V.; Filipi, J.; Kezić, N.; Babić, Z. A Method for Detection of Small Moving Objects in UAV Videos. Remote Sens. 2021, 13, 653.

8. Wang, S.; Han, Y.; Chen, J.; He, X.; Zhang, Z.; Liu, X.; Zhang, K. Weed Density Extraction Based on Few-Shot Learning Through UAV Remote Sensing RGB and Multispectral Images in Ecological Irrigation Area. Front. Plant Sci. 2022, 12, 735230.

9. Yahyanejad, S.; Rinner, B. A Fast and Mobile System for Registration of Low-Altitude Visual and Thermal Aerial Images Using Multiple Small-Scale UAVs. ISPRS J. Photogramm. Remote Sens. 2015, 104, 189–202.

10. Kaljahi, M.A.; Shivakumara, P.; Idris, M.Y.I.; Anisi, M.H.; Lu, T.; Blumenstein, M.; Noor, N.M. An Automatic Zone Detection System for Safe Landing of UAVs. Expert Syst. Appl. 2019, 122, 319–333.

11. Harel, J.; Koch, C.; Perona, P. Graph-Based Visual Saliency. In Advances in Neural Information Processing Systems 19; Schölkopf, B., Platt, J., Hofmann, T., Eds.; The MIT Press: Cambridge, MA, USA, 2007; pp. 545–552. ISBN 978-0-262-25691-9.

12. Duan, H.; Xu, X.; Deng, Y.; Zeng, Z. Unmanned Aerial Vehicle Recognition of Maritime Small-Target Based on Biological Eagle-Eye Vision Adaptation Mechanism. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 3368–3382.

13. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

14. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

15. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

16. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

17. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

18. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

19. Zhang, H.; Wang, Y.; Dayoub, F.; Sunderhauf, N. VarifocalNet: An IoU-Aware Dense Object Detector. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8510–8519.

20. Yang, J.; Xie, X.; Shi, G.; Yang, W. A Feature-Enhanced Anchor-Free Network for UAV Vehicle Detection. Remote Sens. 2020, 12, 2729.

21. Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small Object Detection on Unmanned Aerial Vehicle Perspective. Sensors 2020, 20, 2238.

22. Ye, T.; Qin, W.; Li, Y.; Wang, S.; Zhang, J.; Zhao, Z. Dense and Small Object Detection in UAV-Vision Based on a Global-Local Feature Enhanced Network. IEEE Trans. Instrum. Meas. 2022, 71, 1–13.

23. Wang, C.; Shi, Z.; Meng, L.; Wang, J.; Wang, T.; Gao, Q.; Wang, E. Anti-Occlusion UAV Tracking Algorithm with a Low-Altitude Complex Background by Integrating Attention Mechanism. Drones 2022, 6, 149.

24. Chen, M.; Sun, J.; Aida, K.; Takefusa, A. Weather-Aware Object Detection Method for Maritime Surveillance Systems. Available online: https://ssrn.com/abstract=4482179 (accessed on 1 August 2023).

25. Ye, T.; Qin, W.; Zhao, Z.; Gao, X.; Deng, X.; Ouyang, Y. Real-Time Object Detection Network in UAV-Vision Based on CNN and Transformer. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

26. Sharafaldeen, J.; Rizk, M.; Heller, D.; Baghdadi, A.; Diguet, J.-P. Marine Object Detection Based on Top-View Scenes Using Deep Learning on Edge Devices. In Proceedings of the 2022 International Conference on Smart Systems and Power Management (IC2SPM), Beirut, Lebanon, 10 November 2022; pp. 35–40.

27. Cai, Y.; Luan, T.; Gao, H.; Wang, H.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. YOLOv4-5D: An Effective and Efficient Object Detector for Autonomous Driving. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

28. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

29. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

30. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

31. Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

32. Ghiasi, G.; Lin, T.-Y.; Le, Q.V. NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15 June 2019; pp. 7029–7038.

33. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

34. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.

35. Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

36. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

37. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

38. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 13708–13717.

39. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11211, pp. 3–19. ISBN 978-3-030-01233-5.

40. Zhou, K.; Tong, Y.; Li, X.; Wei, X.; Huang, H.; Song, K.; Chen, X. Exploring Global Attention Mechanism on Fault Detection and Diagnosis for Complex Engineering Processes. Process Saf. Environ. Prot. 2023, 170, 660–669.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

42. Qiu, M.; Huang, L.; Tang, B.-H. ASFF-YOLOv5: Multielement Detection Method for Road Traffic in UAV Images Based on Multiscale Feature Fusion. Remote Sens. 2022, 14, 3498.

43. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

44. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

45. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

46. Cafarelli, D.; Ciampi, L.; Vadicamo, L.; Gennaro, C.; Berton, A.; Paterni, M.; Benvenuti, C.; Passera, M.; Falchi, F. MOBDrone: A Drone Video Dataset for Man OverBoard Rescue. In Image Analysis and Processing—ICIAP 2022; Sclaroff, S., Distante, C., Leo, M., Farinella, G.M., Tombari, F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2022; Volume 13232, pp. 633–644. ISBN 978-3-031-06429-6.

47. Kiefer, B.; Ott, D.; Zell, A. Leveraging Synthetic Data in Object Detection on Unmanned Aerial Vehicles. In Proceedings of the 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2021.

| Model | Parameters | GFLOPS |
|---|---|---|
| SPPF | 7,033,114 | 16.0 |
| SimSPPF | 7,033,114 | 16.0 |
| SPPFCSPC | 13,461,274 | 21.1 |
| RGSPP | 6,888,218 | 15.9 |

| Class | Annotations | Samples |
|---|---|---|
| Person | 11,687 | |
| Boat | 10,269 | |
| Wood | 2,459 | |
| Lifebuoy | 1,712 | |
| Surfboard | 3,793 | |

| Objects | Metric (Square Pixels) |
|---|---|
| Small | Area < 32² |
| Medium | 32² < area < 96² |
| Large | Area > 96² |
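As a minimal sketch of how these size buckets could be applied, the function below classifies a bounding box by its pixel area, reading the thresholds as 32² and 96² square pixels (the COCO convention). The function name `size_category` is hypothetical, and assigning a box that lands exactly on a threshold to the larger bucket is an assumption, since the table leaves the boundaries open.

```python
SMALL_MAX_AREA = 32 ** 2   # 1,024 square pixels
MEDIUM_MAX_AREA = 96 ** 2  # 9,216 square pixels


def size_category(width: float, height: float) -> str:
    """Bucket a bounding box as small, medium, or large by pixel area.

    Assumption: a box exactly on a threshold goes to the larger bucket.
    """
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


if __name__ == "__main__":
    # A 20 x 15 px swimmer seen from altitude falls in the small bucket.
    print(size_category(20, 15))
    # A 120 x 90 px boat falls in the large bucket.
    print(size_category(120, 90))
```

Metrics such as APS and ARS are then computed only over ground-truth boxes that fall in the small bucket.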

| Methods | PAN+ (P) | C2fSESA (C) | RGSPP (R) | mAP0.5:0.95 | mAP0.5 | mAP0.75 | APS | ARS |
|---|---|---|---|---|---|---|---|---|
| YOLOv5 | × | × | × | 0.526 | 0.931 | 0.483 | 0.333 | 0.431 |
| MI | √ | × | × | 0.547 | 0.964 | 0.510 | 0.373 | 0.492 |
| MII | √ | √ | × | 0.557 | 0.969 | 0.513 | 0.390 | 0.498 |
| MIII | √ | √ | √ | 0.564 | 0.971 | 0.536 | 0.407 | 0.513 |

| Methods | mAP0.5:0.95 | mAP0.5 | mAP0.75 | APS | ARS |
|---|---|---|---|---|---|
| YOLOv5 | 0.526 | 0.931 | 0.483 | 0.333 | 0.431 |
| YOLO-BEV | 0.564 | 0.971 | 0.536 | 0.407 | 0.513 |
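The gap between the YOLOv5 and YOLO-BEV rows can be made concrete with a small calculation. The sketch below copies the five values per model from the table and reports the absolute and relative gain for each metric; the names `METRICS`, `YOLOV5`, `YOLO_BEV`, and `improvements` are illustrative only and do not come from the paper.

```python
# Values copied from the YOLOv5 and YOLO-BEV rows of the comparison table.
METRICS = ["mAP0.5:0.95", "mAP0.5", "mAP0.75", "APS", "ARS"]
YOLOV5 = [0.526, 0.931, 0.483, 0.333, 0.431]
YOLO_BEV = [0.564, 0.971, 0.536, 0.407, 0.513]


def improvements(baseline, improved):
    """Return (absolute gain, relative gain in percent) for each metric."""
    return [
        (new - old, 100.0 * (new - old) / old)
        for old, new in zip(baseline, improved)
    ]


if __name__ == "__main__":
    gains = improvements(YOLOV5, YOLO_BEV)
    for name, (abs_gain, rel_gain) in zip(METRICS, gains):
        print(f"{name:>12}: +{abs_gain:.3f} absolute, +{rel_gain:.1f}% relative")
```

On these numbers the largest relative gains are on the small-object metrics: roughly 22% for APS and 19% for ARS, against roughly 7% for mAP0.5:0.95.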

| Methods | Person | Boat | Wood | Surfboard | Life_Buoy |
|---|---|---|---|---|---|
| YOLOv5 (AP0.5:0.95) | 0.387 | 0.838 | 0.598 | 0.506 | 0.435 |
| YOLO-BEV (AP0.5:0.95) | 0.473 | 0.835 | 0.645 | 0.539 | 0.460 |

| Detection | Backbone | mAP0.5:0.95 | mAP0.5 | mAP0.75 | APS | ARS | FPS |
|---|---|---|---|---|---|---|---|
| SSD | (1) | 0.455 | 0.897 | 0.418 | 0.227 | 0.360 | 22 |
| RetinaNet | (2) | 0.549 | 0.939 | 0.522 | 0.346 | 0.437 | 26 |
| Faster R-CNN | (2) | 0.554 | 0.953 | 0.495 | 0.366 | 0.470 | 11 |
| VarifocalNet | (2) | 0.560 | 0.960 | 0.529 | 0.397 | 0.494 | 15 |
| YOLOv3 | (3) | 0.544 | 0.936 | 0.519 | 0.353 | 0.450 | 32 |
| YOLOv5 | (4) | 0.526 | 0.931 | 0.483 | 0.333 | 0.431 | 53 |
| YOLOv7 | (4) | 0.551 | 0.944 | 0.528 | 0.359 | 0.465 | 37 |
| [24] | (5) | 0.551 | 0.957 | 0.522 | 0.361 | 0.474 | 23 |
| [26] | (3) | 0.547 | 0.940 | 0.491 | 0.350 | 0.463 | 40 |
| YOLO-BEV | (4) | 0.564 | 0.971 | 0.536 | 0.407 | 0.513 | 48 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Z.; Yin, Y.; Jing, Q.; Shao, Z. A High-Precision Detection Model of Small Objects in Maritime UAV Perspective Based on Improved YOLOv5. J. Mar. Sci. Eng. 2023, 11, 1680. https://doi.org/10.3390/jmse11091680
