Wave Height and Period Estimation from X-Band Marine Radar Images Using Convolutional Neural Network

Abstract

1. Introduction

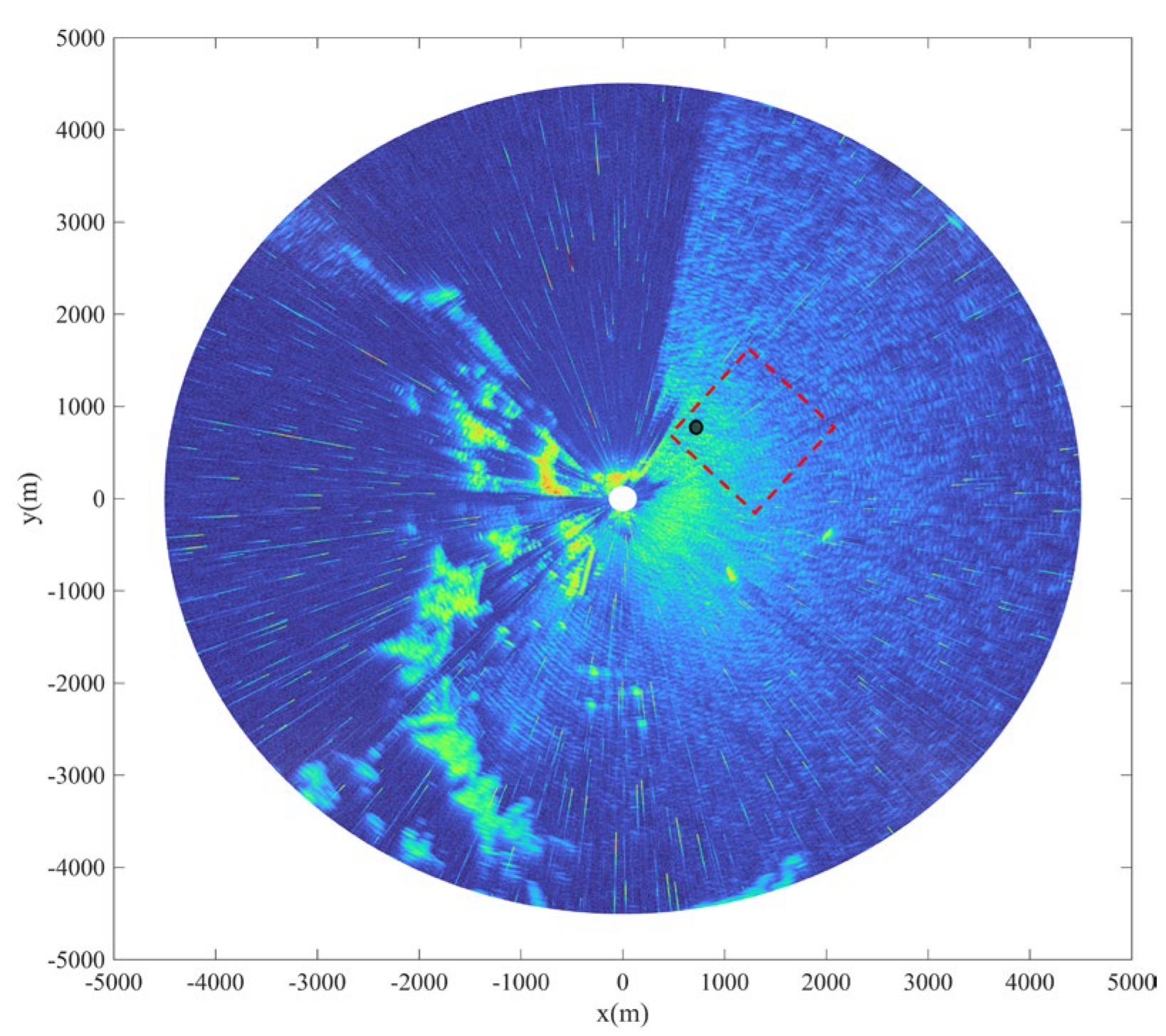

2. Data Pre-Processing

2.1. Median Filtering Based on the Two-Layer Decision

2.2. Adaptive Region Growing Repair Method

3. The CNNSA-Based Estimation Model

3.1. CNN

- (1) Convolutional and pooling layers. These extract the basic features of the image, such as edges and texture.

- (2) Inception modules. Each Inception module contains multiple parallel convolutional kernels and pooling operations that capture features at different scales and levels. The outputs of these parallel operations are concatenated to form the module output, with the primary goal of improving the feature representation of the model without adding too many parameters.

- (3) Global average pooling layer. This layer averages the values of each channel of the feature map to generate a fixed-size feature vector. This reduces the input dimensionality of the fully connected layer and helps reduce overfitting.

- (4) Fully connected layer. This layer integrates information from different features to capture complex relationships in the data. In this study, the fully connected layer performs the regression that estimates the wave height (HS) and wave period (TS) from the radar image.
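
The last three components can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the toy feature maps, the function names, and the weights of the regression head are all hypothetical, and the parallel branches are represented only by their outputs. The sketch shows how branch outputs are joined along the channel axis, how global average pooling turns each channel into one number, and how a linear layer maps the resulting vector to two outputs (standing in for HS and TS).

```python
from typing import List

# A feature map is indexed as [channel][row][col].
FeatureMap = List[List[List[float]]]


def concat_channels(branches: List[FeatureMap]) -> FeatureMap:
    """Join parallel branch outputs along the channel axis (Inception-style)."""
    height, width = len(branches[0][0]), len(branches[0][0][0])
    for branch in branches:
        for channel in branch:
            if len(channel) != height or any(len(row) != width for row in channel):
                raise ValueError("all branches must share the same spatial size")
    return [channel for branch in branches for channel in branch]


def global_average_pool(fmap: FeatureMap) -> List[float]:
    """Average every channel over its spatial extent: one value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def linear_head(features: List[float],
                weights: List[List[float]],
                biases: List[float]) -> List[float]:
    """Fully connected regression layer: one output per row of weights."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]


# Toy outputs of two hypothetical parallel branches on a 2 x 2 map.
branch_a = [[[1.0, 1.0], [1.0, 1.0]]]                              # 1 channel
branch_b = [[[0.0, 2.0], [2.0, 4.0]], [[3.0, 3.0], [3.0, 3.0]]]    # 2 channels

fmap = concat_channels([branch_a, branch_b])   # 3 channels in total
features = global_average_pool(fmap)           # fixed-size vector of length 3

# Hypothetical weights and biases; a trained model would learn these.
weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
biases = [0.5, 0.0]
hs, ts = linear_head(features, weights, biases)
print(features, hs, ts)
```

Because the pooled vector has one entry per channel regardless of the spatial size, the regression head needs only as many weights per output as there are channels, which is the dimensionality reduction described in item (3).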

3.2. Self-Attention

3.3. CNN-SA Model

4. Results

4.1. Data Overview

4.2. Model Training

4.3. Result Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Type | Input Size | Output Size | Patch Size/Stride | Filters |
|---|---|---|---|---|
| Input | 256 × 256 | 256 × 256 × 1 | | |
| Convolution | 256 × 256 × 1 | 128 × 128 × 64 | 7 × 7/2 | 64 |
| Max pool | 128 × 128 × 64 | 64 × 64 × 64 | 3 × 3/2 | |
| Convolution | 64 × 64 × 64 | 64 × 64 × 64 | 1 × 1/1 | 64 |
| Convolution | 64 × 64 × 64 | 64 × 64 × 192 | 3 × 3/1 | 192 |
| 9 × Inception | 64 × 64 × 192 | 8 × 8 × 1024 | | |
| Self-attention | 8 × 8 × 1024 | 8 × 8 × 1024 | | |
| Average pool | 8 × 8 × 1024 | 1 × 1 × 1024 | 7 × 7/1 | |
| Linear | | 1 × 1 × 2 | | |
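
The spatial sizes in the first rows of this table can be checked with the standard output-size formula for convolution and pooling, floor((n + 2p − k) / s) + 1. The sketch below is only a consistency check: the table does not list padding, so the padding values used here are assumptions chosen to reproduce the tabulated sizes, and `conv_output_size` is a hypothetical helper name.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (layer, kernel, stride, assumed padding); padding is NOT given in the table.
stem = [
    ("Convolution 7x7/2", 7, 2, 3),
    ("Max pool 3x3/2", 3, 2, 1),
    ("Convolution 1x1/1", 1, 1, 0),
    ("Convolution 3x3/1", 3, 1, 1),
]

size = 256  # input image is 256 x 256
sizes = []
for name, kernel, stride, padding in stem:
    size = conv_output_size(size, kernel, stride, padding)
    sizes.append(size)
    print(f"{name}: {size} x {size}")

# Average pooling over the 8 x 8 map, without padding.
pool_7 = conv_output_size(8, kernel=7, stride=1, padding=0)
pool_8 = conv_output_size(8, kernel=8, stride=1, padding=0)
print(pool_7, pool_8)
```

Under these assumptions the stem reproduces the 128, 64, 64, 64 sequence of the table. For the average pool, a window covering the whole 8 × 8 map yields the tabulated 1 × 1 output, whereas a 7 × 7/1 window without padding would yield 2 × 2, so the tabulated output is consistent with a global average over the feature map.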

| Parameter | Value |
|---|---|
| Transmit frequency | 9.41 GHz |
| Polarization | Horizontal |
| Antenna rotation speed | 22 r/min |
| Range resolution | 7.5 m |
| Antenna height | 45 m |
| Horizontal beam width | 2° |
| Azimuth coverage | 360° |
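
Two derived quantities follow directly from these parameters and may help when reading the rest of the paper: the radar wavelength and the time taken for one antenna revolution. The short calculation below uses only the tabulated transmit frequency and rotation speed; the variable names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

transmit_frequency_hz = 9.41e9   # 9.41 GHz, from the table
rotation_speed_rpm = 22.0        # 22 r/min, from the table

# Electromagnetic wavelength of the transmitted signal.
wavelength_m = SPEED_OF_LIGHT_M_S / transmit_frequency_hz

# Time for one full 360 degree sweep of the antenna.
rotation_period_s = 60.0 / rotation_speed_rpm

print(f"wavelength: {wavelength_m * 100:.2f} cm")
print(f"rotation period: {rotation_period_s:.2f} s")
```

This gives a wavelength of roughly 3.2 cm, which lies in the X band, and a rotation period of roughly 2.7 s.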

| Method | RMSD (m), without averaging | CC, without averaging | Bias, without averaging | RMSD (m), with averaging | CC, with averaging | Bias, with averaging |
|---|---|---|---|---|---|---|
| SNR | 0.56 | 0.64 | −0.20 | 0.54 | 0.65 | −0.20 |
| CNN | 0.45 | 0.76 | −0.04 | 0.41 | 0.77 | −0.04 |
| CNNSA | 0.35 | 0.85 | −0.03 | 0.30 | 0.86 | 0.04 |

| Method | RMSD (m), without averaging | CC, without averaging | Bias, without averaging | RMSD (m), with averaging | CC, with averaging | Bias, with averaging |
|---|---|---|---|---|---|---|
| SNR | 0.60 | 0.65 | 0.12 | 0.57 | 0.65 | 0.11 |
| CNN | 0.46 | 0.74 | −0.10 | 0.35 | 0.77 | −0.1 |
| CNNSA | 0.37 | 0.89 | 0.03 | 0.27 | 0.91 | 0.03 |
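
The three statistics reported in the result tables (RMSD, CC, and bias) can be computed as in the sketch below. Several details are assumptions: bias is taken as the mean of estimate minus reference, CC as the Pearson correlation coefficient, and the "with averaging" variant is approximated by a trailing moving average whose window length is hypothetical, since the exact definitions are not given in this section. The sample values are made up for illustration and are not data from the study.

```python
import math
from typing import List, Sequence, Tuple


def evaluate(estimated: Sequence[float],
             reference: Sequence[float]) -> Tuple[float, float, float]:
    """Return (RMSD, CC, bias) of estimates against reference values."""
    n = len(estimated)
    if n != len(reference) or n < 2:
        raise ValueError("need two equally long series with at least 2 samples")
    diffs = [e - r for e, r in zip(estimated, reference)]
    bias = sum(diffs) / n                      # assumed sign: estimate - reference
    rmsd = math.sqrt(sum(d * d for d in diffs) / n)
    mean_e, mean_r = sum(estimated) / n, sum(reference) / n
    cov = sum((e - mean_e) * (r - mean_r) for e, r in zip(estimated, reference))
    var_e = sum((e - mean_e) ** 2 for e in estimated)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    cc = cov / math.sqrt(var_e * var_r)        # Pearson correlation coefficient
    return rmsd, cc, bias


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Trailing moving average; the window length is a hypothetical choice."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


# Illustrative values only, not measurements from the paper.
reference = [1.0, 2.0, 3.0, 4.0]
estimated = [1.5, 2.5, 3.5, 4.5]

rmsd, cc, bias = evaluate(estimated, reference)
smoothed = moving_average(estimated, window=2)
print(rmsd, cc, bias, smoothed)
```

In this toy case every estimate is 0.5 above its reference, so RMSD and bias are both 0.5 while CC is 1, which shows that a high correlation does not by itself rule out a systematic offset.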

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zuo, S.; Wang, D.; Wang, X.; Suo, L.; Liu, S.; Zhao, Y.; Liu, D. Wave Height and Period Estimation from X-Band Marine Radar Images Using Convolutional Neural Network. J. Mar. Sci. Eng. 2024, 12, 311. https://doi.org/10.3390/jmse12020311
