Abstract

Underwater images often suffer from attenuation, color distortion, and noise caused by artificial lighting sources. These imperfections not only degrade image quality but also constrain downstream application tasks, so improving underwater image quality is crucial for underwater activities. However, obtaining clear underwater images remains challenging, because scattering and blur prevent true underwater colors from being rendered, which reduces the accuracy of underwater exploration. Therefore, this paper proposes a new deep network model for single underwater image enhancement. More specifically, our framework includes a light field module (LFM) and a sketch module: the LFM generates a light field map of the target image to improve color representation, and the sketch module provides contour information to preserve the details of the original image. The restored underwater image is then gradually enhanced under the guidance of the light field map. Experimental results show that, compared with state-of-the-art approaches, the proposed method achieves better image restoration both quantitatively and qualitatively, at a lower or comparable computational cost.

1. Introduction

In recent years, the exploration of the underwater environment has attracted significant attention, driven by the growing scarcity of natural resources and the expansion of the global economy. In ocean engineering, a variety of applications increasingly depend on underwater images acquired by autonomous underwater vehicles (AUVs), which are used to explore, understand, and interact with marine environments [1]. However, underwater images frequently suffer from attenuation, color distortion, reduced contrast, and noise from artificial lighting sources, owing to variations in depth, lighting conditions, and water type, as well as suspended particles or floating debris in the water. The restoration of underwater images therefore deserves in-depth investigation [2,3].

In addition, owing to the physical properties of light in water, red wavelengths are absorbed first as water depth increases, followed in turn by orange and yellow wavelengths. Green and blue light, which have relatively short wavelengths, travel the longest distances in water [4]. Consequently, underwater images are dominated by green or blue hues, which causes color deviation [5] and severely limits underwater vision-based tasks such as classification, tracking, and detection.

Image restoration is inherently challenging because it is an ill-posed problem. Many restoration techniques in the literature rely on prior knowledge or assumptions, along with learning strategies. They include non-physical model-based methods [6,7,8], physical model-based methods [4,9,10], and deep learning-based methods [1,11,12]. Non-physical model-based methods mainly use basic image-processing techniques to modify pixel values, adjusting properties such as contrast, brightness, and saturation to improve the visual quality of underwater images. However, their effectiveness is limited because they do not consider the physical degradation processes underwater. Physical model-based methods mainly focus on accurately estimating imaging parameters, such as the medium transmittance and the background light field, or other key underwater imaging parameters, and obtain clear images by inverting the physical model of underwater imaging. However, these methods are not easily adaptable and may not suit complex and diverse real underwater scenes, because their assumptions about the underwater environment do not always hold and it is difficult to estimate multiple parameters simultaneously.

Building on the rapid development of deep learning and its great success in numerous perceptual tasks, several deep learning-based frameworks for single underwater image restoration have been presented. However, most existing deep learning-based methods ignore the domain knowledge of underwater imaging and simply perform end-to-end training on synthetic databases. The GAN (Generative Adversarial Network) architecture and its extension CycleGAN (Cycle-Consistent Adversarial Network) have been applied to underwater image restoration, but they may generate unrealistic images that contain less content information than the originals. Moreover, whether a CNN-based or a GAN-based model is trained, artificially generated underwater images may not reflect the characteristics of real underwater images, which leads to inadequate reconstruction of real scenes.

In summary, existing approaches for single underwater image restoration usually suffer from three shortcomings: insufficient color representation, incomplete reconstruction of image details, and high computational complexity. To address these challenges, this paper introduces a lightweight end-to-end deep model that accounts for the impact of light fields in single underwater image restoration. To our knowledge, this is the first attempt to introduce a background light field into the color enhancement of underwater images. In addition, this simple and effective shading network processes images quickly and has few parameters, without requiring manual adjustment of pixel values or hand-designed prior assumptions. We use a carefully designed objective function to preserve image contour details and prevent distortion in the output image. Experimental results show that our architecture achieves high restoration performance in both qualitative and quantitative evaluations, compared with state-of-the-art underwater image enhancement algorithms.

The rest of the paper is organized as follows. Section 2 reviews related work on underwater image restoration. Section 3 describes the proposed light field-domain learning framework for underwater image restoration, including the loss function used to train the network. Section 4 presents the training settings and the experimental results. Finally, Section 5 concludes the paper.

2. Related Work

Image enhancement is a well-studied problem in computer vision and signal processing, and exploring the underwater world has become an active research topic in recent years [5,13]. Underwater image enhancement, an essential means of improving the visual clarity of underwater images, has attracted much attention. Existing techniques can be divided into three categories: non-physical model-based methods, physical model-based methods, and deep learning-based methods.

2.1. Methods Based on Non-Physical Models

Methods based on non-physical models enhance visual quality by directly adjusting image pixel values. Iqbal et al. applied pixel range stretching in the RGB and HSV color spaces to enhance the contrast and saturation of underwater images [14]. Fu et al. proposed a two-step method for underwater image enhancement, consisting of a color correction algorithm and a contrast enhancement algorithm [6]. Other studies enhance underwater images based on the Retinex model. Fu et al. [15] proposed a Retinex-based underwater image enhancement method comprising color correction, layer decomposition, and enhancement, and Zhang et al. [16] extended the Retinex-based approach to a multi-scale underwater image enhancement framework.

2.2. Methods Based on Physical Models

Traditional physics-based methods use atmospheric dehazing models to estimate the transmission and ambient light in the scene and thereby restore pixel intensities [17]. Methods based on physical models treat underwater image improvement as an inverse problem, in which the latent parameters of the image formation model are estimated from a given image. Most of these techniques follow the same procedure: (1) construct a physical degradation model; (2) estimate the unknown model parameters; and (3) solve the model equations with the estimated parameters. One research direction adapts the dark channel prior (DCP) algorithm [18] to underwater images. In [19], DCP is combined with a wavelength-dependent compensation algorithm to recover underwater images. More recently, Akkaynak et al. [20] proposed an improved imaging model that accounts for the distortions specific to underwater light propagation. This enables more accurate color reconstruction and generally provides a better approximation of the ill-posed underwater image enhancement problem. However, such methods still require measurements of scene depth and of the optical properties of the water body, which are characteristic of underwater optical imaging. Carlevaris-Bianco et al. introduced a prior that exploits the differences in attenuation among the three RGB channels to estimate the transmission map of underwater scenes [21]; it rests on the observation that red light is attenuated more rapidly than green and blue light underwater. Galdran et al. introduced a red-channel method that recovers the lost contrast of underwater images by restoring the colors associated with short wavelengths [22]. Zhao et al. found that the background color of underwater images is related to the inherent optical properties of the water medium, and they improved degraded underwater images by deducing these properties from the background color [23]. However, inaccurate estimation of the physical model parameters makes it difficult to achieve the desired underwater image enhancement.

2.3. Deep Learning-Based Methods

In recent years, deep learning has made significant progress on low-level vision problems. Such techniques can be trained on synthetic pairs of degraded images and their high-quality counterparts. However, underwater imaging depends heavily on the scene and lighting conditions, as well as on factors such as temperature and turbidity. Existing deep learning-based techniques fall into two main categories: (1) end-to-end networks; and (2) networks that directly predict physical parameters, with images then recovered through a degradation model. Li et al. proposed WaterGAN [12], which synthesizes underwater images from in-air images and depth pairs in an unsupervised pipeline; the authors used this synthetic training data to train a two-stage network for restoring underwater images, with a special focus on removing color bias. Li et al. proposed UWCNN [24], an underwater image enhancement model trained on synthetic images of ten underwater types, which are generated using a refined underwater imaging model and the corresponding underwater scene parameters. Zhu et al. proposed a weakly supervised underwater color transfer model based on cycle-consistent adversarial networks [25]. However, GAN-based methods such as these tend to suffer from unstable training and are prone to mode collapse. CNN-based models have also been proposed to address low-light underwater conditions. Xie et al. introduced a model in which a decomposition network separates a low-light underwater image into an illumination map and a reflectance map, which are then fed into a restoration network; this network uses a normal-light underwater image without scattering as a reference to produce the restored images [26]. Meanwhile, Zhou et al. developed an underwater image restoration technique that integrates the Comprehensive Imaging Formation Model (CIFM) with both prior knowledge and unsupervised methods; it estimates the depth map using the Channel Intensity Prior (CIP) and employs Penetration Adaptation Dark Pixels (ADP) to reduce backscatter [27]. Both approaches focus on the challenges posed by insufficient lighting in underwater environments. Ye et al. proposed an underwater neural rendering method [28] that automatically learns inherent degradation models from real underwater images, which helps create comprehensive underwater datasets covering various water quality conditions. Fabbri et al. proposed a GAN-based technique (UGAN) that improves the quality of visual underwater scenes and the vision-driven performance of autonomous underwater robots [29]. Li et al. established the widely used UIEB (Underwater Image Enhancement Benchmark) dataset and proposed WaterNet, an underwater image enhancement network trained on it [30]. Islam et al. presented a conditional GAN-based model (FUnIE) for real-time underwater image enhancement [1]. Deep SESR (simultaneous enhancement and super-resolution) [31] is a residual network-based generative model that learns to restore perceptual image quality at a higher spatial resolution. Naik et al. proposed Shallow-UWnet [32], a shallow neural network that maintains performance with fewer parameters. Wang et al. proposed a transformer-based block called URTB [33], combined with convolutional layers for underwater image processing, and added a frequency-domain loss (FDL) to the overall loss to account for frequency information. Cong et al. combined the advantages of GAN-based and physical model-based methods in PUGAN [34], a physical model-guided GAN for underwater image processing.
Inspired by [28], our proposed framework is an end-to-end deep network that incorporates the Retinex model to account for the impact of light fields. We also integrate a contour sketch module designed to improve the network's performance in severely degraded regions, thereby improving visual quality. Our network retains the advantages of convolutional network training: it converges quickly, avoids the training instability and mode collapse of the GAN-based methods discussed above, and achieves better performance.

3. Proposed Framework

Retinex theory addresses the removal of undesirable illumination effects from an image. The Retinex algorithm separates illumination from reflectance in a captured image, which makes it suitable for handling illumination-related degradation in underwater imaging. According to Retinex theory [35], an underwater image can be decomposed as

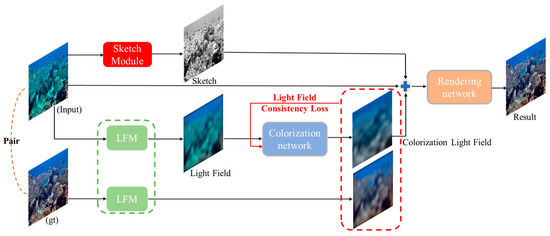

where represents the input underwater image, is the component of the illumination light, and R is the reflection component of the target object carrying the image detail information. Our method aims to improve the representation of underwater images by transforming the color of the underwater background light field, while preserving contour details. We present an overview architecture for underwater image enhancement in Figure 1. This architecture consists of four key modules: the sketch module, the light field detection module, the colorization network, and the rendering network. The input underwater image is processed through these modules to increase color representation and improve feature representation. The underwater image first passes through the sketch module and the light field detection module to generate three paths: the detail path, the original underwater image path, and the light field path. The light field path is then fed into the colorization network to obtain a restored color background light field. Also, image information is enhanced by tightly connecting the sketch path with the input image path and light field path. The combined images are then fed into the rendering network to obtain true-color underwater images. We will further explain the proposed method in the following subsections, including Section 3.1 (the light field detection module), Section 3.2 (the sketch module), Section 3.3 (the rendering network), and Section 3.4 (the loss function).
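As a minimal illustration of the Retinex decomposition in Equation (1), the NumPy sketch below composes a toy image from an illumination map and a reflectance map and then recovers the reflectance by element-wise division. The array values and the `eps` guard are illustrative assumptions; in the proposed framework the illumination is not given but must be estimated, which is the role of the light field detection module.

```python
import numpy as np

def compose(illumination: np.ndarray, reflectance: np.ndarray) -> np.ndarray:
    """Retinex image formation: element-wise product of illumination and reflectance."""
    return illumination * reflectance

def recover_reflectance(image: np.ndarray, illumination: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Invert the model for a given illumination estimate; eps guards against division by zero."""
    return image / (illumination + eps)

# Toy single-channel example: one reflectance pattern under two illumination levels.
L = np.array([[0.2, 0.2],
              [0.8, 0.8]])      # dim top row, bright bottom row (illustrative values)
R = np.array([[0.5, 1.0],
              [0.5, 1.0]])      # identical surface pattern in both rows
I = compose(L, R)               # observed pixel values differ by row
R_hat = recover_reflectance(I, L)
```

The same surface produces different observed values in the two rows purely because of illumination; dividing out a good illumination estimate recovers the shared reflectance.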

Figure 1.

Illustration of the proposed framework.

3.1. Light Field Detection Module

Following [36], the underwater imaging model can be expressed as

$$ I(x) = J(x)\, e^{-\beta d(x)} + B\left(1 - e^{-\beta d(x)}\right) \qquad (2) $$

where $I$ and $J$ are the underwater image and the clean image, respectively; $d$ is the scene depth, $\beta$ is the scattering coefficient, and $B$ is the background light. In this model, the physical estimation hinges on two key parameters, $B$ and $\beta$. Owing to light scattering, the optical properties of in-air scenes and underwater scenes differ greatly, and these parameters vary randomly, which makes them difficult to formulate accurately with traditional physical models. To solve this problem, we propose a light field detection module that extracts the background light field of complex underwater scenes as completely as possible. Let $I$ denote an underwater image in the dataset. By Equation (1), $I$ can be decomposed into two components, $L$ and $R$, which represent the illumination component of the underwater scene and the reflection detail component of the object, respectively. We apply a multi-scale log-domain Gaussian low-pass filter to $I$ to obtain an underwater background light field map:

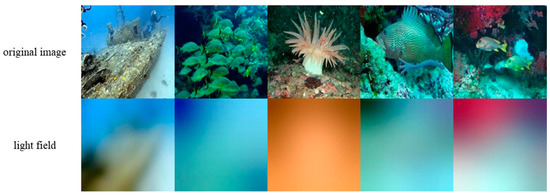

where $G_{\sigma}$ represents the Gaussian blur function with scale parameter $\sigma$. Following the multi-scale Retinex (MSR) method of Rahman et al. [37], we empirically set $\sigma$ to 20, 60, and 90. To overcome the deficiencies of the single-scale model, MSR linearly combines the outputs enhanced at different scales to estimate the result. Adopting this idea, we use three values of $\sigma$ to apply Gaussian blurs of different strengths to the input underwater image, which yields a better background light field map. This multi-scale design handles different types of underwater images better and improves the generalization of the network. Figure 2 shows the background light field map obtained from a real underwater image: the map filters out irrelevant object information while retaining the overall appearance of the underwater scene.
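The NumPy sketch below shows one way to compute a multi-scale log-domain Gaussian low-pass map with σ = 20, 60, and 90. It assumes, as in MSR, equal weights of 1/3 across scales (an average of log-blurred images, i.e., a per-pixel geometric mean), replicate (edge) padding, and kernels truncated at 3σ. These choices and the helper names `gaussian_blur` and `light_field_map` are hypothetical and are not taken from the paper's Equation (3).

```python
import numpy as np

def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array; edge padding keeps the output size."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    padded = np.pad(channel, r, mode="edge")
    blur_1d = lambda v: np.convolve(v, k, mode="valid")
    rows = np.apply_along_axis(blur_1d, 1, padded)   # blur along the width
    return np.apply_along_axis(blur_1d, 0, rows)     # blur along the height

def light_field_map(image: np.ndarray, sigmas=(20, 60, 90), eps: float = 1e-6) -> np.ndarray:
    """Equal-weight average of log-blurred copies over several scales, mapped back with exp.

    image: (H, W, 3) float array in [0, 1]. Returns an array of the same shape.
    """
    log_sum = np.zeros(image.shape, dtype=np.float64)
    for sigma in sigmas:
        for c in range(image.shape[2]):
            log_sum[..., c] += np.log(gaussian_blur(image[..., c], sigma) + eps)
    return np.exp(log_sum / len(sigmas))
```

Because every scale is a wide blur relative to the object structures in a typical patch, the output keeps the slowly varying color cast and discards object detail, which is the behavior described for Figure 2.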

Figure 2.

Illustration of the output from the light field module.

The background light field map primarily captures the inherent stylistic features of diverse underwater scenes, but it does not contain the detailed structural information of the original underwater image. Theoretically, the background light field map can be regarded as encoding the two key parameters $B$ and $\beta$ of the underwater imaging model, which provide important information for underwater feature transfer; however, previous work [38] ignored the importance of the $\beta$ parameter in underwater imaging. By modeling the background light field explicitly, our model can capture the real underwater background light field, which benefits restoration performance.
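To make the roles of B and β concrete, the sketch below synthesizes two underwater pixels with the widely used simplified formation model I = J·t + B·(1 − t), where t = exp(−β·d). The per-channel values of β and B are hypothetical, chosen only so that red attenuates fastest as described in the Introduction; the paper does not give numeric values, and a per-channel β is an assumption.

```python
import numpy as np

def underwater_formation(J, depth, beta, B):
    """Simplified formation model: I = J * t + B * (1 - t), with t = exp(-beta * d).

    J:     clean image, shape (H, W, 3), values in [0, 1]
    depth: scene depth map, shape (H, W)
    beta:  per-channel coefficient, shape (3,)  (hypothetical values below)
    B:     per-channel background light, shape (3,)
    """
    t = np.exp(-depth[..., None] * beta)    # transmission, shape (H, W, 3)
    return J * t + B * (1.0 - t)

J = np.full((1, 2, 3), 0.8)                 # uniform grey patch
depth = np.array([[1.0, 10.0]])             # a near pixel and a far pixel
beta = np.array([0.5, 0.1, 0.05])           # hypothetical: red (index 0) attenuates fastest
B = np.array([0.05, 0.4, 0.5])              # hypothetical blue-green background light
I = underwater_formation(J, depth, beta, B)
```

The far pixel is pulled toward B and loses most of its red component, so a map of the background light field jointly reflects the background light and how quickly each channel decays.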

3.2. Sketch Module

In our method, underwater images are processed by the light field detection module to extract information about the background light field. However, preserving the contour details of an image requires accurate contours obtained from a clean reference image. To meet this requirement, we follow [39] and propose a sketch module that extracts image contours and feeds them into the rendering network, enabling the network to learn and exploit detailed contour information. Dense semantic mapping maps pixels in the sketch to the reference image according to their semantics, so that elements with similar semantics (such as the outlines of underwater objects) in the sketch and the reference image are matched. This is achieved with a deep neural network that learns to identify and align similar features between sketch and reference images. The sketch information, also called the detail map, guides the restoration network toward better structural representations of underwater images. A significant advantage of this approach is that it mitigates the distortion introduced by the rendering process: by integrating the estimated detail map into the rendering network, we improve the network's ability to reproduce fine details in the restored images. Consequently, the proposed method better preserves the contour details of underwater images and reduces the distortion that may occur during rendering.
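The sketch module itself follows [39] and is learned. As a much simpler, swapped-in stand-in, the NumPy sketch below computes a classical Sobel gradient-magnitude contour map normalized to [0, 1]. It only illustrates what a detail map looks like (high values on object outlines, near zero in flat regions) and does not reproduce the module; the function names are hypothetical.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(gray: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 cross-correlation with edge padding; output has the same size as the input."""
    p = np.pad(gray, 1, mode="edge")
    h, w = gray.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def contour_map(rgb: np.ndarray) -> np.ndarray:
    """Gradient magnitude of the grey-level image, scaled so the strongest edge is 1."""
    gray = rgb.mean(axis=2)
    mag = np.hypot(filter3x3(gray, SOBEL_X), filter3x3(gray, SOBEL_Y))
    return mag / mag.max() if mag.max() > 0 else mag
```

For an image whose left half is black and right half is white, the map is 1 on the two columns adjacent to the boundary and 0 elsewhere.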

3.3. Colorization and Rendering Networks

The colorization network adopts the U-Net architecture. Its skip connections concatenate multi-scale feature maps, which preserves image details and spatial hierarchies and allows context and texture information to propagate efficiently across network layers; this makes U-Net particularly suitable for tasks such as image colorization. Specifically, the input grayscale image is downsampled by a factor of 2 in each sub-layer, followed by LReLU activation and batch normalization, and the latent features are then upsampled to produce the output target image. The colorization network aims to recover the light field of the underwater image, and we expect the restored light field to guide the rendering network toward a better color representation of the degraded image.
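The encoder-decoder pattern described above can be sketched as a toy NumPy forward pass. Everything here is a hypothetical placeholder: random untrained 1 × 1 channel-mixing weights stand in for learned convolutions, 2 × 2 average pooling stands in for the factor-2 downsampling, nearest-neighbour upsampling stands in for learned upsampling, and the channel widths (8, 16, 32) are arbitrary. The point is the shape bookkeeping: each level halves the resolution, and each skip connection concatenates encoder features with upsampled decoder features at the same resolution.

```python
import numpy as np

rng = np.random.default_rng(0)

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def batch_norm(x, eps=1e-5):
    """Normalize each channel over batch and spatial dims (no learned scale/shift)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def down2(x):
    """Halve H and W with 2x2 average pooling (stand-in for a stride-2 convolution)."""
    n, c, h, w = x.shape
    return x.reshape(n, c, h // 2, 2, w // 2, 2).mean(axis=(3, 5))

def up2(x):
    """Double H and W with nearest-neighbour upsampling."""
    return x.repeat(2, axis=2).repeat(2, axis=3)

def mix_channels(x, out_ch):
    """Random 1x1 convolution (channel mixing); the weights are untrained placeholders."""
    w = rng.standard_normal((out_ch, x.shape[1])) / np.sqrt(x.shape[1])
    return np.einsum("oc,nchw->nohw", w, x)

def toy_unet(x, depth=3, base=8):
    """x: (N, 1, H, W) grayscale input with H, W divisible by 2**depth -> (N, 3, H, W)."""
    skips, h = [], x
    for level in range(depth):
        h = batch_norm(leaky_relu(mix_channels(h, base * 2 ** level)))
        skips.append(h)                            # saved for the skip connection
        h = down2(h)
    for skip in reversed(skips):
        h = np.concatenate([up2(h), skip], axis=1)  # skip connection by concatenation
        h = leaky_relu(mix_channels(h, skip.shape[1]))
    return mix_channels(h, 3)                      # 3-channel color output
```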

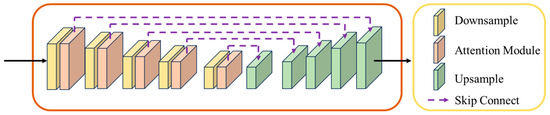

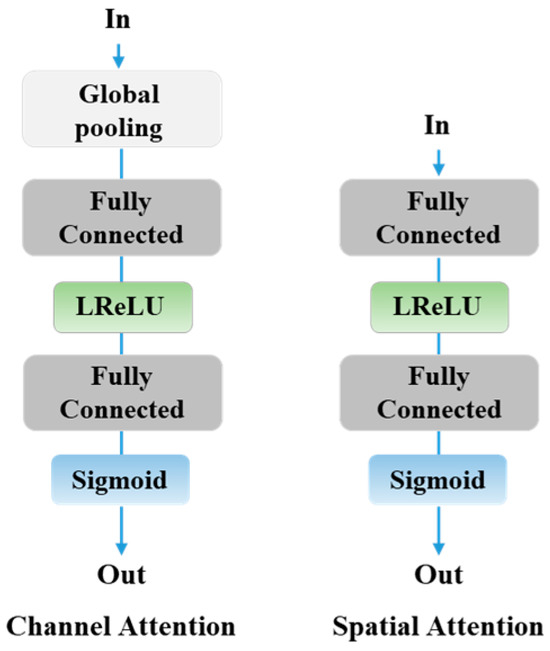

To cope with the limited size of paired underwater datasets, we also design the rendering network based on U-Net, as shown in Figure 3, since U-Net can be trained effectively with a small amount of data. To further improve performance, we integrate several attention modules. Considering the complex and diverse characteristics of underwater scenes, we first apply convolutional layers with 1 × 1 and 3 × 3 kernels, whose different receptive fields yield multi-scale features for fusion while accounting for different spatial structures and color stabilization effects. We also add a channel attention module and a spatial attention module, as shown in Figure 4. The channel attention module emphasizes significant feature channels, while the spatial attention module lets the network focus on image regions with complex light field distributions. Residual connections are used to mitigate the vanishing gradient problem and improve the overall performance of the model.
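Figure 4 is not reproduced in this text, so the NumPy sketch below assumes a CBAM-style design: channel attention from a shared two-layer MLP over average- and max-pooled channel descriptors, followed by spatial attention from channel-wise average and max maps. To keep it short, the learned convolution usually used for spatial attention is replaced by a hypothetical weighted sum with scalars `a` and `b`, and the result is wrapped in a residual connection as described above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """x: (C, H, W). Shared MLP (w1: (C/r, C), w2: (C, C/r)) over avg- and max-pooled descriptors."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)
    weights = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))   # (C,)
    return x * weights[:, None, None]

def spatial_attention(x, a=1.0, b=1.0):
    """Pool across channels and combine with scalars a, b (stand-in for a learned conv)."""
    weights = sigmoid(a * x.mean(axis=0) + b * x.max(axis=0))               # (H, W)
    return x * weights[None, :, :]

def attention_block(x, w1, w2):
    """Channel attention, then spatial attention, inside a residual connection."""
    return x + spatial_attention(channel_attention(x, w1, w2))
```

Both attention maps lie in (0, 1), so each module can only rescale features down; the residual path keeps the original signal available to later layers.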

Figure 3.

Illustration of the proposed rendering network.

Figure 4.

Illustration of channel attention and spatial attention modules.

3.4. Loss Function

To ensure that the restored image is close to the ground truth image, we adopt the L1 loss as our basic reconstruction loss:

$$ \mathcal{L}_{1} = \lVert \hat{J} - J \rVert_{1} $$

where $\hat{J}$ is the restored underwater image and $J$ is the ground truth image. To respect underwater imaging characteristics, we also integrate an underwater dark channel (UDC) loss, which applies the dark channel prior [18] to enforce consistency between the rendered image and the original image in the dark channel. The underwater dark channel loss is defined as follows:

To preserve the light field characteristics of real underwater images, we introduce a light field consistency loss based on the light field map. The light field map is generated with Equation (3), and the light field consistency loss is defined as follows:

Here, a light field capture operation is applied to the restored image, and the result is compared with the ground truth light field. To preserve the perceptual and semantic content of the image, we also introduce a perceptual loss, which measures the difference between high-level features of two images extracted from a pre-trained CNN [40]. The perceptual loss is computed as the distance between the feature representations of the restored image and the ground truth clean image, as follows:

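Here, the features are extracted from a specified layer of the loss network. The final loss of the proposed network is a weighted sum of the four losses:

$$ \mathcal{L}_{total} = \lambda_{1}\mathcal{L}_{1} + \lambda_{2}\mathcal{L}_{udc} + \lambda_{3}\mathcal{L}_{lf} + \lambda_{4}\mathcal{L}_{per} $$

where $\mathcal{L}_{1}$, $\mathcal{L}_{udc}$, $\mathcal{L}_{lf}$, and $\mathcal{L}_{per}$ are the L1 reconstruction loss, the underwater dark channel loss, the light field consistency loss, and the perceptual loss, respectively, and $\lambda_{1}$, $\lambda_{2}$, $\lambda_{3}$, and $\lambda_{4}$ denote the tradeoff weights.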
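The exact dark channel, light field, and perceptual loss equations are not reproduced in this text, so the NumPy sketch below is only an illustration under stated assumptions: an L1 reconstruction loss; an underwater dark channel computed from the green and blue channels only (a common underwater convention, since red is attenuated fastest) followed by a local minimum filter; an L1 consistency loss between dark channels; and a weighted total. The patch size, channel choice, L1 distance, and any weight values are assumptions, and the function names are hypothetical. The light field and perceptual terms would enter `total_loss` in the same way.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def l1_loss(pred: np.ndarray, target: np.ndarray) -> float:
    return float(np.mean(np.abs(pred - target)))

def underwater_dark_channel(img: np.ndarray, patch: int = 15, channels=(1, 2)) -> np.ndarray:
    """Per-pixel min over the chosen channels (G, B by default), then a patch x patch min filter.

    img: (H, W, 3) array; patch must be odd. Returns an (H, W) array.
    """
    per_pixel_min = img[..., list(channels)].min(axis=2)
    r = patch // 2
    padded = np.pad(per_pixel_min, r, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))   # (H, W, patch, patch)
    return windows.min(axis=(2, 3))

def udc_loss(restored: np.ndarray, reference: np.ndarray, patch: int = 15) -> float:
    """L1 distance between the underwater dark channels of two images."""
    return l1_loss(underwater_dark_channel(restored, patch), underwater_dark_channel(reference, patch))

def total_loss(terms: dict, weights: dict) -> float:
    """Weighted sum of named loss terms, e.g. keys 'l1', 'udc', 'lf', 'per'."""
    return sum(weights[name] * value for name, value in terms.items())
```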

4. Experimental Results

To evaluate the proposed method, we use three well-known underwater image enhancement datasets: UFO-120 [31], EUVP [1], and UIEB [30]. Because it is virtually impossible to capture a real underwater scene and its corresponding ground truth simultaneously across different water types, these datasets generate the ground truth in different ways, including with human participants. UFO-120 [31] is a relatively recent underwater image dataset for model training, whose ground truth is generated by style transfer; 1500 images are used for training and 120 for testing. EUVP [1] contains underwater imagery collected with seven different cameras, together with some images taken from YouTube videos; its ground truth is generated by a trained CycleGAN, with 2185 images for training and 130 for validation. UIEB [30] is a real-world underwater dataset of 890 image pairs captured under different lighting conditions, with different color gamuts and contrasts. Three well-known quantitative metrics, UIQM (Underwater Image Quality Measure) [41], PSNR (Peak Signal-to-Noise Ratio), and SSIM (Structural Similarity Index), are used to evaluate color restoration performance. UIQM combines several factors that affect underwater image quality through the Underwater Image Colorfulness Measure (UICM), the Underwater Image Sharpness Measure (UISM), and the Underwater Image Contrast Measure (UIConM) [41].
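Of the three metrics, PSNR has the shortest closed form, PSNR = 10·log10(MAX² / MSE). The sketch below assumes images scaled to [0, 1] (so MAX = 1; use 255 for 8-bit images) and averages the squared error over all pixels and channels. SSIM and UIQM involve more machinery, and the paper does not state which implementations were used, so they are not sketched.

```python
import numpy as np

def psnr(restored, reference, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; returns inf for identical images."""
    diff = np.asarray(restored, dtype=np.float64) - np.asarray(reference, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For example, comparing a constant image of 0.1 against an all-zero reference gives MSE = 0.01 and a PSNR of 20 dB.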

For comparison, we use seven state-of-the-art deep learning-based underwater enhancement methods: UGAN [29], WaterNet [30], FUnIE [1], Deep SESR [31], Shallow-UWnet [32], URTB [33], and PUGAN [34]. In addition to the quantitative comparison, we qualitatively compare our results with those of these seven methods.

4.1. Network Training and Parameter Setting

The proposed method was implemented in PyTorch 2.0 (Python) on a PC equipped with an Intel® Core™ i7-8700K processor @ 3.70 GHz, 32 GB of memory, and an NVIDIA RTX 3090 GPU. The Adam optimizer [42] is used with an initial learning rate of 0.0002. After 100 epochs, the learning rate is decayed linearly to avoid oscillations in the later stages of training. The training input patch size is 256 × 256, and the network is trained for 400 epochs.
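The learning rate schedule described above can be sketched as a plain function of the epoch. The paper states only that linear decay starts after epoch 100 within a 400-epoch run; this sketch assumes the rate reaches zero at epoch 400, and the function name is hypothetical.

```python
def learning_rate(epoch: int, base_lr: float = 2e-4,
                  constant_epochs: int = 100, total_epochs: int = 400) -> float:
    """Constant rate for the first `constant_epochs`, then linear decay to zero at `total_epochs`."""
    if epoch < constant_epochs:
        return base_lr
    remaining = total_epochs - constant_epochs
    return base_lr * max(0.0, (total_epochs - epoch) / remaining)
```

In PyTorch, the same shape could be obtained with `torch.optim.lr_scheduler.LambdaLR`, which expects a multiplicative factor, for example `lambda e: learning_rate(e) / 2e-4`.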

4.2. Performance Evaluation

Table 1 reports the quantitative evaluation on the UFO-120, EUVP, and UIEB datasets in terms of average PSNR, SSIM, and UIQM for UGAN [29], WaterNet [30], FUnIE [1], Deep SESR [31], Shallow-UWnet [32], URTB [33], PUGAN [34], and the proposed method. As Table 1 shows, among the seven state-of-the-art deep learning-based methods and ours, the proposed method achieves the best PSNR and SSIM, although not the best UIQM. A higher PSNR indicates that the restored result is closer in content to the clean image, while a higher SSIM indicates that the restored result has a more similar structure and texture to the clean image. For UIQM, higher values indicate better perceived visual quality. Figure 5 shows the qualitative results of all methods on the UFO-120 dataset; the proposed method produces better image quality than the state-of-the-art methods, especially in image detail and color representation. Furthermore, as Table 2 shows, the proposed architecture achieves this performance with a comparable number of parameters and computational cost.

Table 1.

Quantitative performance evaluations on UFO-120, EUVP, and UIEB datasets.

Figure 5.

Qualitative evaluation results on the UFO-120 dataset.

Table 2.

Complexity evaluations for different methods.

4.3. Ablation Study

To verify the effectiveness of each component of the proposed network, we perform an ablation study in which either the LFM or the sketch module is removed. The results are shown in Table 3. Removing either component degrades image quality, causing blurring, structure loss, and color deviation, and the complete method achieves the best restoration performance both quantitatively and qualitatively.

Table 3.

Quantitative results of ablation studies.

5. Conclusions

This paper proposes a simple yet effective underwater image enhancement method consisting of the following steps: image contour extraction (sketch module), light field extraction (light field module), light field restoration (colorization network), and underwater image restoration (rendering network). The main contribution of the proposed model is that it learns light field representations of different underwater scenes and efficiently restores their color representation through a colorization network. Furthermore, a contour texture map is used as an attention weight to integrate detailed knowledge into the rendering network and ensure the fidelity of the rendered image. Comprehensive quantitative and qualitative evaluations show that the proposed model outperforms state-of-the-art deep learning-based methods while requiring comparable or fewer parameters and computations.

Author Contributions

Conceptualization, C.-H.Y., M.-J.C. and C.-C.W.; Methodology, C.-H.Y.; Software, Y.-W.L. and Y.-Y.L.; Validation, Y.-Y.L.; Formal analysis, C.-H.Y.; Investigation, Y.-W.L.; Data curation, Y.-W.L.; Writing—original draft, Y.-W.L.; Writing—review & editing, C.-H.Y. and M.-J.C.; Visualization, Y.-Y.L.; Supervision, C.-H.Y.; Project administration, C.-H.Y.; Funding acquisition, C.-C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council, grant number MOST 110-2221-E-003-005-MY3.

Data Availability Statement

Data derived from public domain resources.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234. [Google Scholar] [CrossRef]

- Yang, M.; Hu, J.; Li, C.; Rohde, G.; Du, Y.; Hu, K. An in-depth survey of underwater image enhancement and restoration. IEEE Access 2019, 7, 123638–123657. [Google Scholar] [CrossRef]

- Sahu, P.; Gupta, N.; Sharma, N. A survey on underwater image enhancement techniques. Int. J. Comput. Appl. 2014, 87, 13. [Google Scholar] [CrossRef]

- Berman, D.; Treibitz, T.; Avidan, S. Diving into haze-lines: Color restoration of underwater images. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017; Volume 1. [Google Scholar]

- Schettini, R.; Corchs, S. Underwater image processing: State of the art of restoration and image enhancement methods. EURASIP J. Adv. Signal Process. 2010, 2010, 746052. [Google Scholar] [CrossRef]

- Fu, X.; Fan, Z.; Ling, M.; Huang, Y.; Ding, X. Two-step approach for single underwater image enhancement. In Proceedings of the International Symposium on Intelligent Signal Processing and Communication Systems, Xiamen, China, 6–9 November 2017; pp. 789–794. [Google Scholar]

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

- Ghani, A.S.A.; Isa, N.A.M. Underwater image quality enhancement through composition of dual-intensity images and Rayleigh-stretching. SpringerPlus 2014, 3, 757.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- Li, C.; Guo, J.; Chen, S.; Tang, Y.; Pang, Y.; Wang, J. Underwater image restoration based on minimum information loss principle and optical properties of underwater imaging. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 1993–1997.

- Li, C.; Guo, J.; Guo, C. Emerging from water: Underwater image color correction based on weakly supervised color transfer. IEEE Signal Process. Lett. 2018, 25, 323–327.

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

- Cui, R.; Chen, L.; Yang, C.; Chen, M. Extended state observer-based integral sliding mode control for an underwater robot with unknown disturbances and uncertain nonlinearities. IEEE Trans. Ind. Electron. 2017, 64, 6785–6795.

- Iqbal, K.; Odetayo, M.; James, A. Enhancing the low-quality images using unsupervised colour correction method. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Istanbul, Turkey, 10–13 October 2010; pp. 1703–1709.

- Fu, X.; Zhuang, P.; Huang, Y.; Liao, Y.; Zhang, X.-P.; Ding, X. A retinex-based enhancing approach for single underwater image. In Proceedings of the IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014; pp. 4572–4576.

- Zhang, S.; Wang, T.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 2017, 245, 1–9.

- Yeh, C.-H.; Huang, C.H.; Kang, L.-W. Multi-scale deep residual learning-based single image haze removal via image decomposition. IEEE Trans. Image Process. 2019, 29, 3153–3167.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Chiang, J.; Chen, Y. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2012, 21, 1756–1769.

- Akkaynak, D.; Treibitz, T. Sea-thru: A method for removing water from underwater images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 1682–1691.

- Carlevaris-Bianco, N.; Mohan, A.; Eustice, R. Initial results in underwater single image dehazing. In Proceedings of the OCEANS 2010 MTS/IEEE Seattle, Seattle, WA, USA, 20–23 September 2010; pp. 1–8.

- Galdran, A.; Pardo, D.; Picón, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

- Zhao, X.; Jin, T.; Qu, S. Deriving inherent optical properties from background color and underwater image enhancement. Ocean Eng. 2015, 94, 163–172.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Xie, Y.; Yu, Z.; Yu, X.; Zheng, B. Lighting the darkness in the sea: A deep learning model for underwater image enhancement. Front. Mar. Sci. 2022, 9, 921492.

- Zhou, J.; Liu, Q.; Jiang, Q.; Ren, W.; Lam, K.-M.; Zhang, W. Underwater camera: Improving visual perception via adaptive dark pixel prior and color correction. Int. J. Comput. Vis. 2023, 1–19.

- Ye, T.; Chen, S.; Liu, Y.; Ye, Y.; Chen, E.; Li, Y. Underwater light field retention: Neural rendering for underwater imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 488–497.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Islam, M.J.; Luo, P.; Sattar, J. Simultaneous enhancement and super-resolution of underwater imagery for improved visual perception. arXiv 2020, arXiv:2002.01155.

- Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed model for underwater image enhancement (student abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 19–21 May 2021.

- Wang, D.; Sun, Z. Frequency domain based learning with transformer for underwater image restoration. In Proceedings of the Pacific Rim International Conference on Artificial Intelligence, Shanghai, China, 10–13 November 2022; pp. 218–232.

- Cong, R.; Yang, W.; Zhang, W.; Li, C.; Guo, C.-L.; Huang, Q.; Kwong, S. PUGAN: Physical model-guided underwater image enhancement using GAN with dual-discriminators. IEEE Trans. Image Process. 2023, 32, 4472–4485.

- Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–129.

- Akkaynak, D.; Treibitz, T. A revised underwater image formation model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6723–6732.

- Rahman, Z.; Jobson, D.J.; Woodell, G.A. Multi-scale retinex for color image enhancement. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 16–19 September 1996; Volume 3, pp. 1003–1006.

- Wang, N.; Zhou, Y.; Han, F.; Zhu, H.; Yao, J. UWGAN: Underwater GAN for real-world underwater color restoration and dehazing. arXiv 2021, arXiv:1912.10269.

- Lee, J.; Kim, E.; Lee, Y.; Kim, D.; Chang, J.; Choo, J. Reference-based sketch image colorization using augmented-self reference and dense semantic correspondence. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13 June 2020; pp. 5801–5810.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).