Abstract

In the field of Autonomous Surface Vehicles (ASVs), research on advanced perception technologies is crucial for enhancing vehicle intelligence and autonomy. In particular, laser point cloud registration serves as a foundation for improving the navigation accuracy and environmental awareness of ASVs in complex environments. Existing laser point cloud registration techniques for ASVs suffer from low computational efficiency, insufficient robustness, and incompatibility with low-power devices. To address these issues, a novel point cloud matching method is proposed. The method comprises laser point cloud data processing, feature extraction based on an improved Fast Point Feature Histogram (FPFH), and a two-step registration process using SAC-IA (Sample Consensus Initial Alignment) and Small_GICP (Small Generalized Iterative Closest Point). Registration experiments on the KITTI benchmark dataset and the Pohang Canal dataset show that the proposed method achieves a relative translation error (RTE) of 16.41 cm, comparable to that of current state-of-the-art point cloud registration algorithms. Furthermore, deployment experiments on multiple low-power computing devices demonstrate the performance of the proposed method under limited computational capability, providing reference metrics for engineering applications in ASV autonomous navigation and perception research.

1. Introduction

With the rapid development of Autonomous Surface Vehicle (ASV) technology, its applications in marine surveys, environmental monitoring, and waterway transportation are becoming increasingly widespread [1,2,3]. In the field of ocean engineering, three-dimensional point cloud registration technology provides vital support for a range of core tasks. For example, during the inspection of ports and waterways, as well as the maintenance and monitoring of large offshore structures such as wind farms, multi-temporal and multi-view point cloud registration enables intelligent assessment of structural health. Additionally, in scenarios such as floating debris monitoring, point cloud registration serves as the technical foundation for ASVs to track and locate target objects in real time and with high efficiency, thereby significantly enhancing the intelligence and automation of ocean engineering operations.

Efficient and reliable environmental perception is fundamental to achieving autonomous navigation and safe operation of ASVs [4]. Among various sensing technologies, Light Detection and Ranging (LiDAR) has become one of the core components of ASV perception systems due to its high accuracy, all-weather capability, and three-dimensional spatial sensing [5]. LiDAR not only enables target detection and tracking in complex marine environments [6,7,8] and three-dimensional environment mapping [9], but is also widely used in multi-sensor fusion [10] to enhance the robustness and intelligence of the system.

In recent years, significant progress has been made in the application of LiDAR in autonomous vehicles, with related algorithms and systems being widely adopted in road environments. However, the marine environment differs considerably from terrestrial traffic settings, exhibiting challenges such as dynamic water surface changes, sparse target features, and high background noise. These factors make it difficult to directly apply three-dimensional point cloud registration algorithms commonly used in autonomous driving to practical ASV applications. High-quality point cloud registration is essential for tasks such as multi-frame fusion, environmental mapping, and dynamic perception in ASVs [9]. Therefore, developing efficient, low-power, and highly adaptive point cloud registration methods for ASV scenarios holds significant theoretical and practical value.

Point cloud registration is a fundamental step for effectively integrating ASV data acquired at different times, from different viewpoints, and by different sensors, and it plays an indispensable role in core tasks such as dynamic environment mapping, high-precision localization, and real-time target detection. For example, during ASV navigation, vessel motion causes spatiotemporal discrepancies between sequential LiDAR point clouds. Only through precise point cloud registration can a complete and consistent three-dimensional representation of the environment be obtained, which is crucial for subsequent path planning and obstacle avoidance. Furthermore, in complex scenarios involving multi-sensor collaborative perception, point cloud registration is also a key step for achieving data complementation and enhancement [10].

In typical ASV perception systems, point cloud registration is employed as a front-end module immediately following raw data acquisition, providing high-quality spatial data for higher-level tasks such as environmental mapping, semantic segmentation, and multi-object tracking [6]. The accuracy and efficiency of registration directly affect the ASV's ability to understand dynamic and complex marine environments, thereby influencing the overall safety and robustness of the system.

Three-dimensional LiDAR point cloud registration is one of the key technologies for achieving efficient navigation and environmental perception of ASVs [11,12]. However, traditional point cloud registration methods primarily target autonomous vehicles in road environments [13], so applying them directly to ASVs yields unsatisfactory results. In road environments, vehicle pose variations mainly involve longitudinal and lateral translational movements. In contrast, ASVs navigating on water move with six degrees of freedom (three translational and three rotational): surge, sway, heave, roll, pitch, and yaw. This complexity considerably increases the computational difficulty of LiDAR point cloud registration on ASVs. Given that ASVs are often equipped with low-power devices of limited computational capability, it is particularly important to develop a fast, stable, low-power point cloud registration method to enhance the autonomous navigation and perception capabilities of ASVs in complex environments.

In recent years, numerous studies have focused on the development of point cloud registration technology. Existing methods primarily concentrate on optimizing registration algorithms and feature-matching processes, such as the Iterative Closest Point (ICP) algorithm and its improved variants [14,15]. These methods perform well in environments such as roads, tunnels, and indoor settings. However, when faced with rapidly changing water surfaces and the complex six-degree-of-freedom motion of ASVs, they often suffer from low computational efficiency and poor robustness. For ASV applications in particular, current research frequently fails to account adequately for the unique characteristics of the marine environment, leading to significant performance bottlenecks in practice [16]. The dynamic nature of water surfaces, combined with the inherent motion complexity of ASVs, calls for point cloud registration approaches tailored to these challenges.

Despite the various optimization solutions proposed in existing research to improve the computational efficiency and robustness of point cloud registration, several significant shortcomings remain. First, many studies have not targeted low-power computing devices, which hinders real-time application on resource-constrained platforms such as small robots, small drones, and ASVs. Second, research specifically addressing LiDAR point cloud registration for ASVs is scarce, and existing methods often fail to account for the unique characteristics of the marine environment during ASV navigation. These shortcomings reduce the accuracy and robustness of point cloud registration for ASVs and can cause critical missions to fail, resulting in economic losses and safety hazards. To mitigate these issues, it is essential to develop tailored methods that consider the specific operating conditions faced by ASVs, ensuring reliable performance in diverse marine scenarios [17].

The primary objective of this paper is to propose a fast and stable method for scan-level point cloud registration on low-power ASV platforms. The original point cloud is first preprocessed with three-dimensional laser point cloud conditional filtering and point cloud compression to reduce the number of points. An improved Fast Point Feature Histogram (FPFH) algorithm is then used to extract features from the point cloud, after which the Sample Consensus Initial Alignment (SAC-IA) algorithm computes potential correspondences and an initial alignment. Finally, fine registration is performed using Small_GICP. To evaluate the speed, robustness, and scalability of the proposed method, experiments on both scan-level and map-level point cloud registration are designed. Ablation studies are conducted to validate the contribution of each component to the robustness and runtime of registration. In addition, the proposed method is deployed on several commercially available low-power computing devices to assess its registration success rate and runtime.

The structure of this paper is arranged as follows: First, we review the relevant literature and discuss existing point cloud registration techniques and their limitations. Then, we provide a detailed introduction to the proposed method, including point cloud filtering and compression, improved FPFH feature extraction, and point cloud precise registration based on SAC-IA and Small_GICP. Next, we present the experimental setup and results analysis, with a focus on evaluating the performance of the algorithm in scan-level and map-level point cloud registration, as well as its performance on low-power computing devices. Finally, we discuss the research findings and summarize the main contributions of the study along with future research directions.

2. Related Work

In current research, point cloud registration techniques can generally be divided into two categories: optimization-based methods and feature-based methods. Optimization-based methods, such as the ICP algorithm [18,19] and its improved versions, achieve precise registration by iteratively minimizing the distance error between the two point clouds [20,21]. However, ICP requires a good initial estimate and is prone to becoming trapped in local optima. Magnusson [22] proposed the 3D Normal Distributions Transform (3D-NDT) algorithm, which characterizes local point clouds through their statistical properties by representing each equally sized grid cell with a normal distribution, and registers scans by optimizing their alignment against these distributions. Its advantage is that it abstracts point sets without searching for corresponding points one by one; its drawback is its susceptibility to local minima. Despite this progress, practical challenges remain in real-world applications. Optimization-based methods such as ICP and its variants can achieve accurate alignment, but their sensitivity to initialization and tendency to converge to local optima limit their applicability in large-scale scenes and complex environments. Some improved algorithms attempt to overcome these limitations by introducing statistical modeling or global search strategies; however, these approaches significantly increase computational complexity, making it difficult to meet the stringent real-time requirements of many engineering applications.
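
To make the iterative scheme concrete, a minimal point-to-point ICP can be sketched in Python with NumPy. It alternates closest-point matching with the closed-form SVD (Kabsch) alignment. The brute-force nearest-neighbor search, the function names, and the stopping tolerance are illustrative assumptions, not the formulation of [18,19].

```python
import numpy as np


def best_fit_transform(src, dst):
    """Closed-form (R, t) minimizing sum_i ||R @ src[i] + t - dst[i]||^2 (Kabsch / SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)            # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # d = -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, mu_d - R @ mu_s


def icp(src, dst, R=None, t=None, max_iter=50, tol=1e-12):
    """Point-to-point ICP starting from the initial guess (R, t)."""
    R = np.eye(3) if R is None else R
    t = np.zeros(3) if t is None else t
    prev_err = np.inf
    for _ in range(max_iter):
        moved = src @ R.T + t
        # Closest points by brute force; a k-d tree would be used on real scans.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = d2[np.arange(len(src)), nn].mean()  # mean squared closest-point distance
        if prev_err - err < tol:                  # error stopped decreasing: converged
            break
        prev_err = err
        R, t = best_fit_transform(src, dst[nn])   # re-fit to the new correspondences
    return R, t
```

Started from the identity, the loop recovers a small rotation and translation on noise-free data; with a large initial offset the closest-point matches become wrong and the loop can stall in a local optimum, which is the initialization sensitivity described above.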

Feature-based registration methods, such as FPFH [23] combined with the Random Sample Consensus (RANSAC) algorithm, use feature matching to find corresponding feature points between two frames of point cloud data and compute the transformation between them to achieve registration [24,25,26]. In recent years, learning-based methods have attracted increasing attention in this field. However, owing to the lack of large-scale LiDAR datasets, their generalization ability remains questionable [27]. Moreover, such approaches demand substantial computational resources, typically requiring Graphics Processing Unit (GPU) acceleration, which makes them unsuitable for the low-power computing devices carried by marine ASVs. Therefore, this paper does not consider learning-based methods. Both optimization-based and feature-based registration methods have advantages and disadvantages [28,29]. To reduce computational load and increase registration speed, this study focuses primarily on the latter category, as feature-based methods are generally faster than optimization-based methods.

Feature-based registration algorithms first extract feature points from two frames of point cloud data and then find corresponding feature points between the two frames, establishing initial correspondences [30,31,32]. Finally, the initial transformation between the two frames is computed from the matched feature points. Commonly used feature extraction methods include FPFH [23], Rotational Projection Statistics (RoPS) [33], Signature of Histograms of Orientations (SHOT), Intrinsic Shape Signatures (ISS) [34], and the Scale-Invariant Feature Transform (SIFT) [35]. The Point Feature Histogram (PFH), proposed by Rusu et al. [36], uses the distribution statistics of the query point and its nearest neighbors to fill a multidimensional histogram, which incurs a high computational cost. FPFH improves on PFH by simplifying the computation of the neighborhood statistics, thereby addressing its efficiency problem. The Viewpoint Feature Histogram (VFH), introduced by Bradski et al. [37], extends FPFH by enlarging the computation scope and adding statistical information, using the point pairs between the center of the point cloud and all other points on its surface as computational units. Feature descriptors offer strong descriptive power and invariance to rigid-body transformations. They retain a large amount of useful information from the point cloud, are robust to noise to some extent, and thus help stabilize registration. Feature-based methods have demonstrated superior speed and robustness to initialization, making them a focus of recent research. However, the stability of feature extraction and matching depends heavily on the quality of the point cloud data: when the point cloud contains significant noise, occlusion, or outliers, the stability of features extracted by traditional descriptors (such as FPFH and SHOT) declines markedly, and registration accuracy drops significantly.

Most of these feature-based registration algorithms are insensitive to the initial pose, making them suitable for real-time registration and processing of large-scale point cloud data. However, they often exhibit poor robustness to noise and outliers and may even fail in certain scenarios [33,38]. In recent years, several studies have shown that outlier-robust registration methods can achieve sufficiently fast scan-level registration in the presence of noise and outliers [39,40,41,42,43,44]. However, these methods focus primarily on the solver stage of registration and have only been tested on object-level or scan-level problems. When they are applied to submap-level or map-level registration, their speed decreases significantly [45].

Guaranteed outlier removal strategies focus on eliminating erroneous matches among correspondences. A major example is Guaranteed Outlier Removal (GORE), which applies deterministic and fast geometric operations to reduce the input correspondences to a smaller set while guaranteeing that the discarded matches are inconsistent with the globally optimal solution [46]. GORE effectively reduces outliers and preserves the globally optimal solution; however, its computational complexity compromises efficiency. Cai et al. [47] used fast pruning techniques that preserve the optimal solution of the registration objective, after which their Branch-and-Bound (BnB) search quickly identifies the optimal registration parameters on the pruned set. Yang et al. [39] reformulated registration using truncated least squares as a cost function robust to large fractions of outliers, and used graph theory to decouple the estimation of scale, rotation, and translation; outliers are eliminated by finding the Maximum Clique (MC) of a consistency graph. Because correspondences can be modeled as graph nodes, with edges encoding pairwise geometric consistency between matches, graph-based outlier removal holds significant research value. Zhang et al. [48] designed a compatibility graph to describe the affinity between initial matches and searched this graph for maximum cliques to determine the transformation parameters. The MC method uses Singular Value Decomposition (SVD) to generate transformation hypotheses from the selected cliques, resulting in high registration accuracy. However, as the number of correspondences grows, efficiency decreases because of the cost of constructing the compatibility graph and searching for cliques.
Although outlier-robust registration methods improve stability in the presence of noise and outliers, their computational efficiency is closely related to the scale of the problem. In particular, for map-level large-scale point cloud registration, the consumption of computational resources remains a major bottleneck restricting their engineering applications.
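
The graph-based idea can be illustrated with a short Python/NumPy sketch: two correspondences are linked when they preserve the distance between their endpoints, as any rigid transform must, so inliers form a large clique. The threshold eps and the greedy clique search are assumptions for illustration; exact maximum-clique search is NP-hard, and this is not the solver of [39] or [48].

```python
import numpy as np


def compatibility_matrix(src, dst, eps=0.05):
    """Boolean adjacency: correspondences i and j are compatible when
    | ||src_i - src_j|| - ||dst_i - dst_j|| | < eps (rigid motions preserve distances)."""
    ds = np.linalg.norm(src[:, None, :] - src[None, :, :], axis=2)
    dd = np.linalg.norm(dst[:, None, :] - dst[None, :, :], axis=2)
    C = np.abs(ds - dd) < eps
    np.fill_diagonal(C, False)
    return C


def greedy_clique(C):
    """Greedy clique heuristic (NOT an exact maximum-clique solver): visit vertices by
    decreasing degree and keep each one compatible with every vertex kept so far."""
    order = np.argsort(-C.sum(axis=1), kind="stable")
    clique = []
    for v in order:
        if all(C[v, u] for u in clique):
            clique.append(int(v))
    return sorted(clique)
```

The pairwise test costs O(n^2) time and memory, which mirrors the efficiency loss noted above as the number of correspondences grows.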

In summary, the limitations of current outlier rejection methods are evident when dealing with data that has a high proportion of outliers. In such cases, these algorithms demonstrate reduced efficiency and accuracy in registration tasks. Finding the optimal balance between efficiency and accuracy is a complex challenge [49].

3. Methods

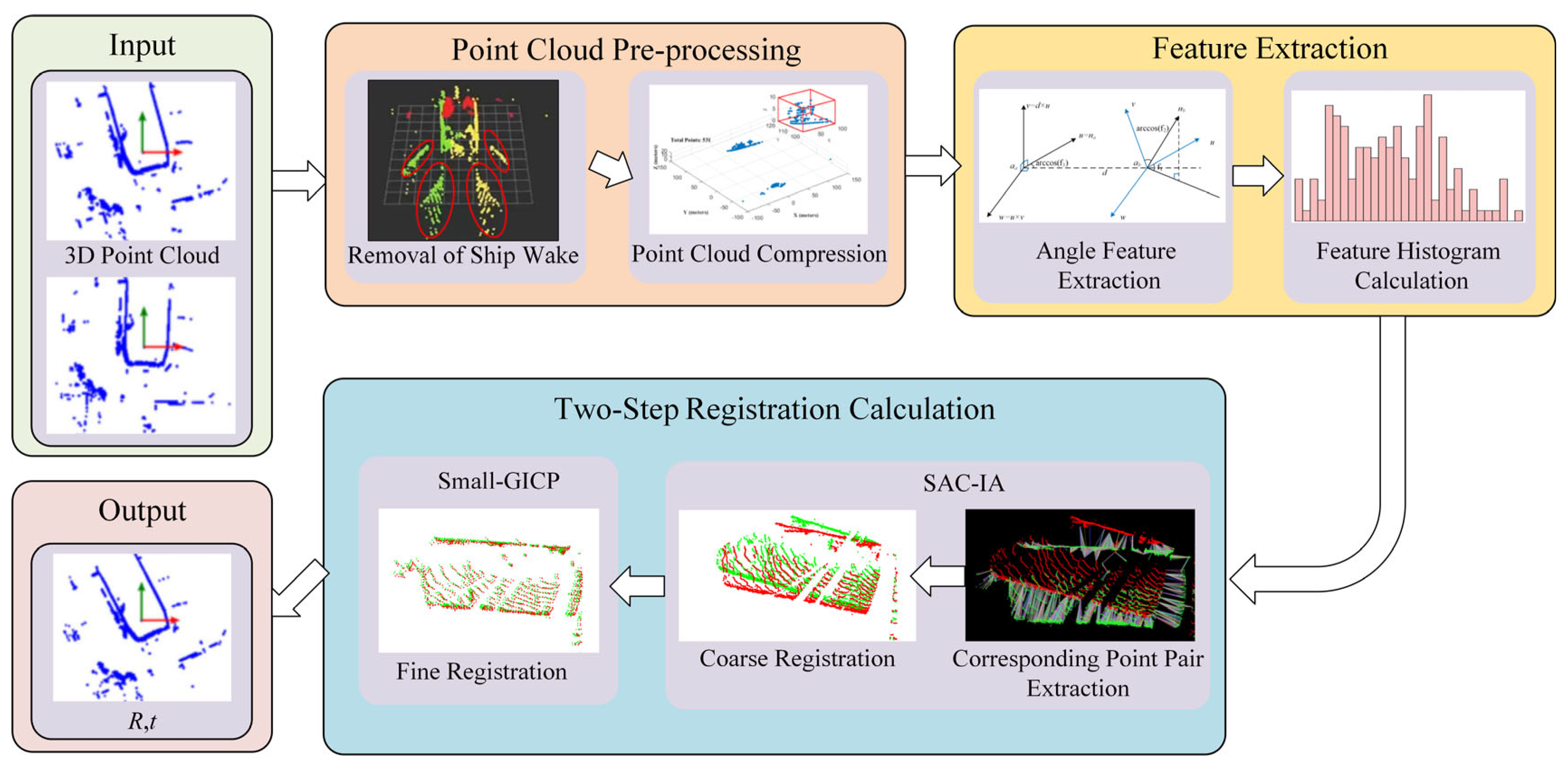

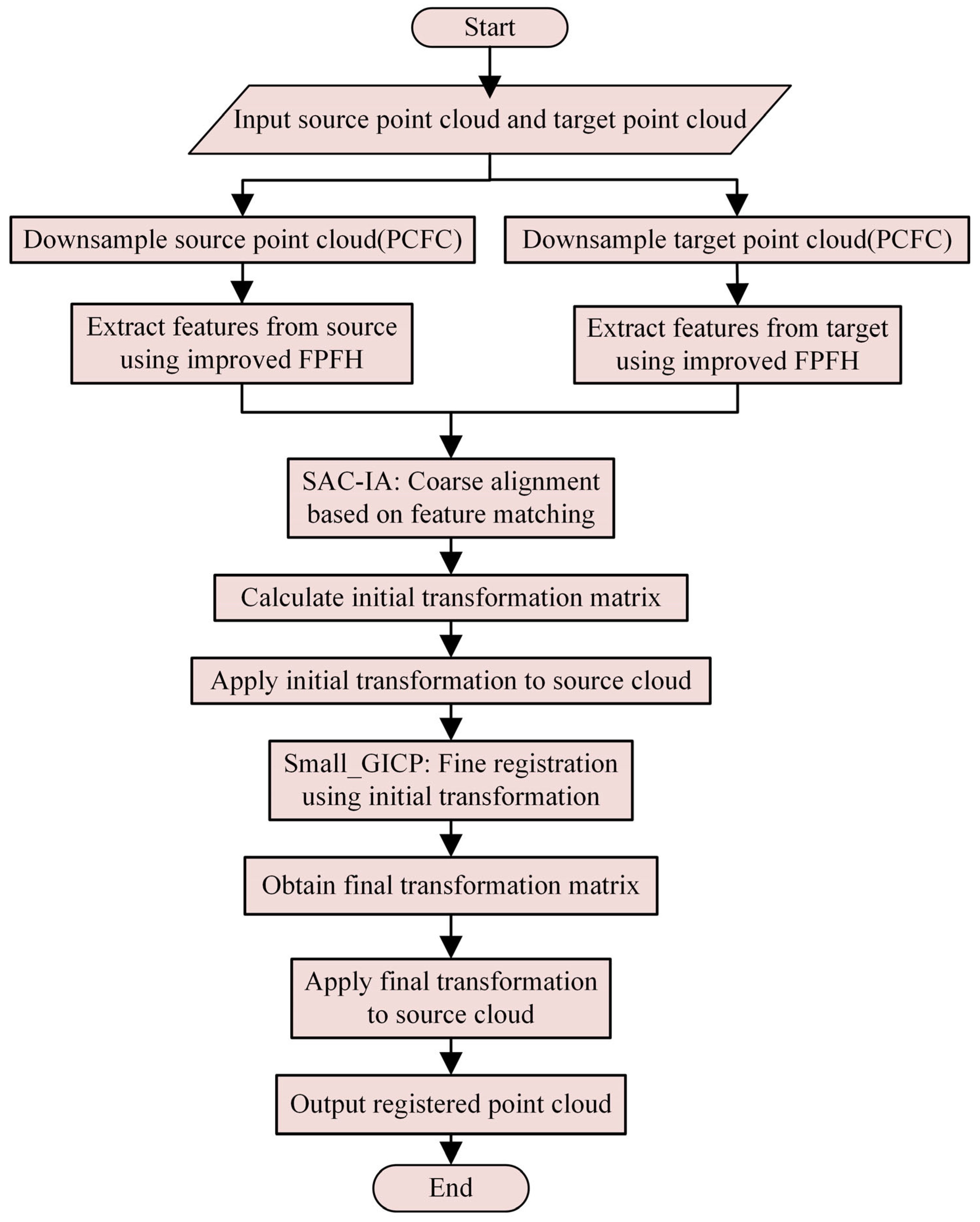

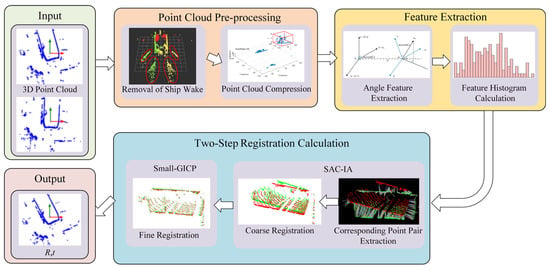

The overall framework of the proposed laser point cloud registration method based on improved FPFH feature extraction is shown in Figure 1.

Figure 1.

Framework for laser point cloud registration based on feature extraction.

This method consists of three parts: (a) point cloud preprocessing, which includes point cloud filtering and compression; (b) feature extraction based on improved FPFH; and (c) two-step registration computation based on SAC-IA and Small_GICP.

3.1. Point Cloud Filtering and Compression

In the processing of three-dimensional LiDAR point cloud data, point cloud filtering is one of the key steps to improve data quality and computational efficiency. The filtering process mainly involves the removal of redundant points and noise to effectively extract the areas of interest (such as point cloud data of ships). The goal of point cloud filtering is to minimize redundant information and noise interference while preserving environmental features, thereby providing a more efficient and streamlined dataset for subsequent point cloud processing and registration calculations.

Point cloud noise removal addresses interference common in marine environments, such as noise caused by reflections from water surface ripples. A conditional filter that removes points with reflectivity below 10% effectively eliminates noise in low-reflectivity areas such as water surface ripples while retaining the valid points above the water surface. This operation not only reduces interference but also aids the extraction of point cloud features [50].
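
The reflectivity condition amounts to a simple mask. The sketch below assumes each point is stored as x, y, z, reflectivity with reflectivity normalized to [0, 1], so the 10% threshold becomes 0.10; the function name and array layout are hypothetical.

```python
import numpy as np


def reflectivity_filter(cloud, min_reflectivity=0.10):
    """Conditional filter: drop returns whose reflectivity is below min_reflectivity.

    cloud is assumed to be an (N, 4) array of x, y, z, reflectivity, with the
    reflectivity already normalized to [0, 1]; sensors that report raw intensity
    would need rescaling first."""
    return cloud[cloud[:, 3] >= min_reflectivity]
```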

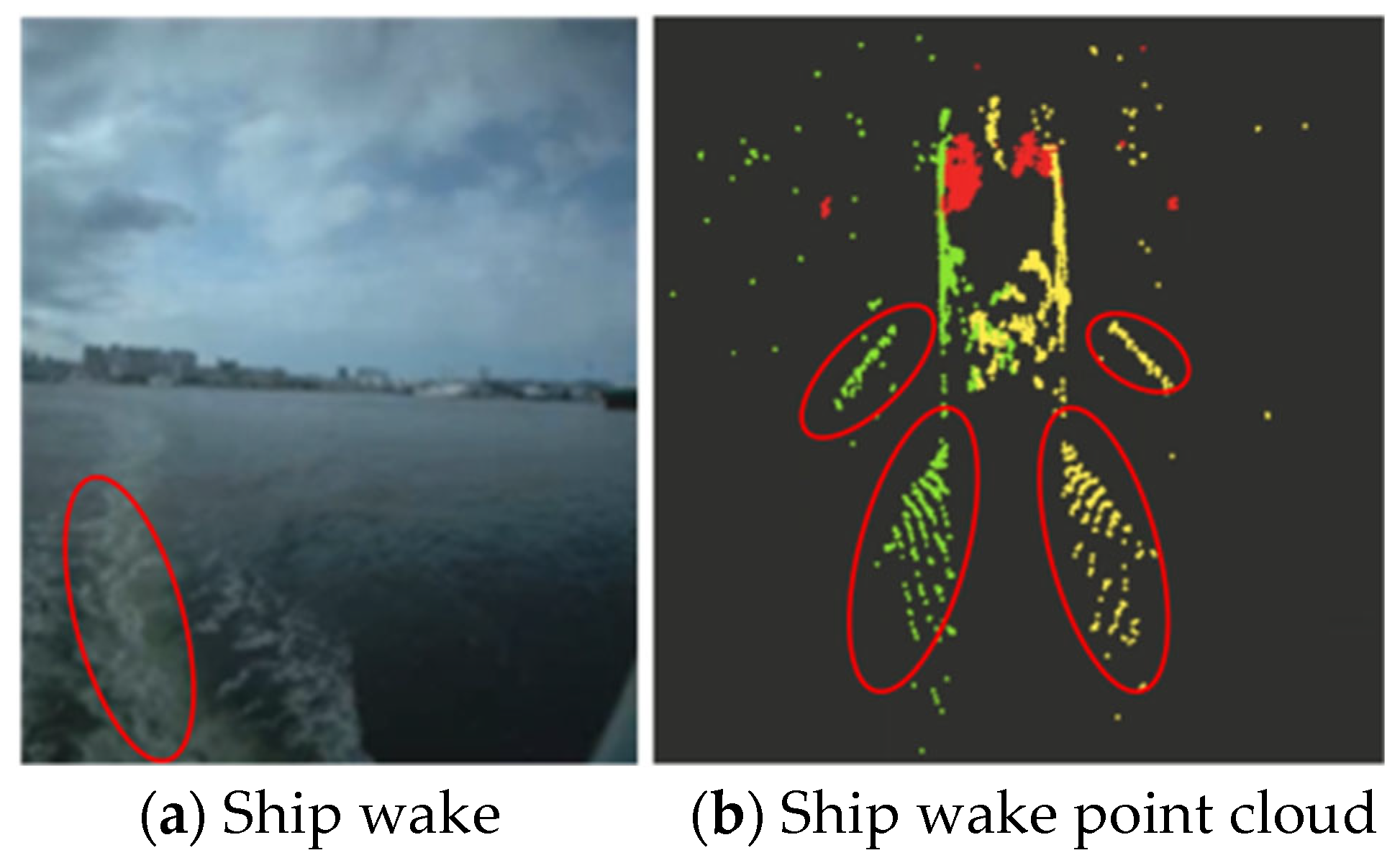

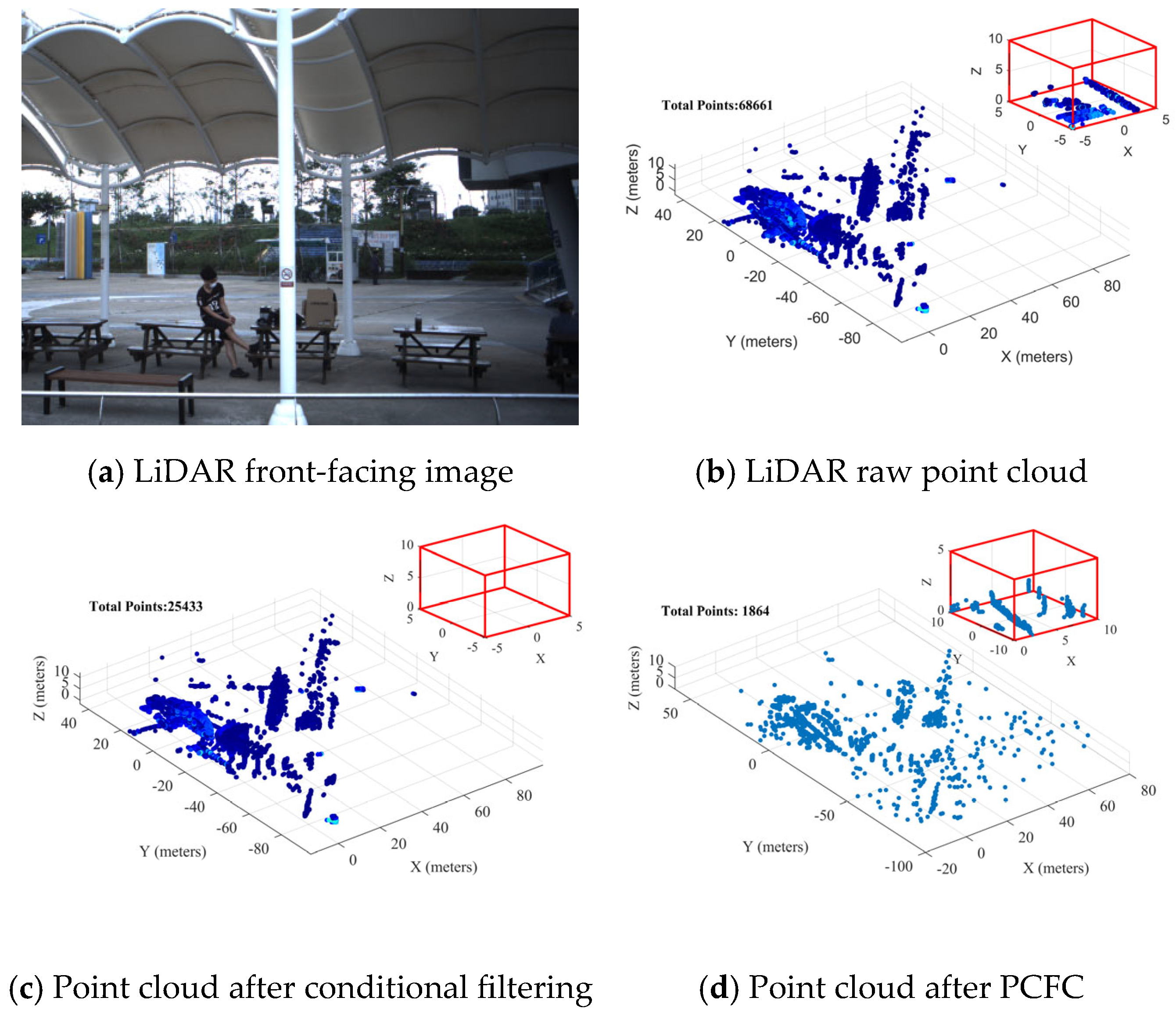

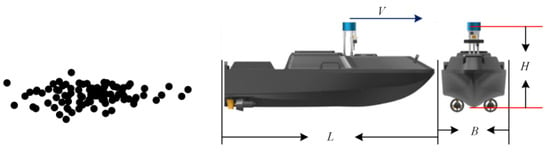

It is worth noting that during ASV navigation, pronounced wake waves form around the hull, particularly along the sides and at the stern of the vessel. As shown in Figure 2, even after points with reflectivity below 10% are removed, the wake points and the vessel's own hull points remain. Because the hull points are stationary relative to the LiDAR, they carry no information about the vessel's motion and do not contribute to registration, so they must be removed to improve registration efficiency. This paper therefore proposes a Point Cloud Filtering and Compression (PCFC) method tailored to marine environments, which reduces the number of points while preserving features as fully as possible.

Figure 2.

Ship wake and its point cloud.

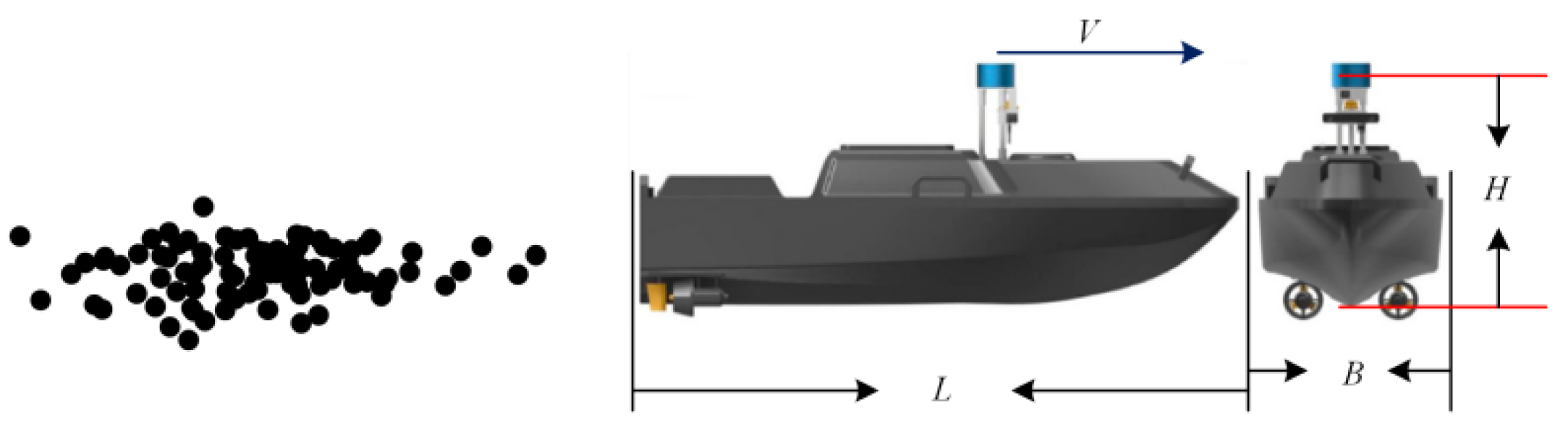

Since wake points are distributed along both sides and at the stern of the vessel and are rare at the bow, a conditional filter based on the ASV's hull dimensions and speed is proposed. As shown in Figure 3, when the ASV moves forward, wake waves appear on both sides of the stern. The procedure is as follows: let L, B, and V denote the length, width, and speed of the vessel, H the LiDAR installation height, and k1, k2, and k3 proportional coefficients. Taking the LiDAR origin as the reference point, a bounding box covering the hull and wake is generated with a length of k1VL, a width of k2VB, and a height of k3VH, which defines the three-dimensional coordinate range of the points to be removed. The speed-dependent coefficients k1, k2, and k3 can be fitted from collected wake point clouds or roughly estimated using empirical formulas. In simple terms, they scale the box to the approximate extent of the hull and wake given the vessel's speed and direction of travel.
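
A possible implementation of the hull-and-wake box removal is sketched below. The extents follow the formulas above; the axis-aligned box, the LiDAR frame convention (x forward, y left, z up), and the hypothetical `center` offset used to place the box relative to the LiDAR origin are assumptions, and the coefficient values used in the test are arbitrary.

```python
import numpy as np


def wake_box_mask(xyz, L, B, H, V, k1, k2, k3, center=(0.0, 0.0, 0.0)):
    """Boolean mask of points inside the hull/wake bounding box.

    Extents follow the text: length k1*V*L, width k2*V*B, height k3*V*H.
    The box is axis-aligned in the LiDAR frame and centered at `center`
    (both the frame convention and the centering are assumptions)."""
    half = 0.5 * np.array([k1 * V * L, k2 * V * B, k3 * V * H])
    c = np.asarray(center, dtype=float)
    return np.all(np.abs(xyz - c) <= half, axis=1)


def remove_wake(xyz, **box):
    """Drop every point inside the hull/wake box; all other points are kept."""
    return xyz[~wake_box_mask(xyz, **box)]
```

Because the extents are products with V, the coefficients absorb the units of speed; shifting `center` toward the stern is one way to cover the wake behind the vessel.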

Figure 3.

Schematic diagram of the bounding box of the ship wake based on hull dimensions and ship speed.

The removal of redundant point clouds primarily employs point cloud compression technology. To reduce the computational burden during the point cloud registration process, this paper utilizes the point cloud compression method proposed by Cao et al. [51], which iteratively fits point cloud surfaces with similar distance values and eliminates redundancy based on their spatial relationships. This method achieves a compression ratio of 40 to 80 times while maintaining high precision for downstream applications, making it very suitable for the point cloud registration calculations of resource-constrained ASV studied in this paper.

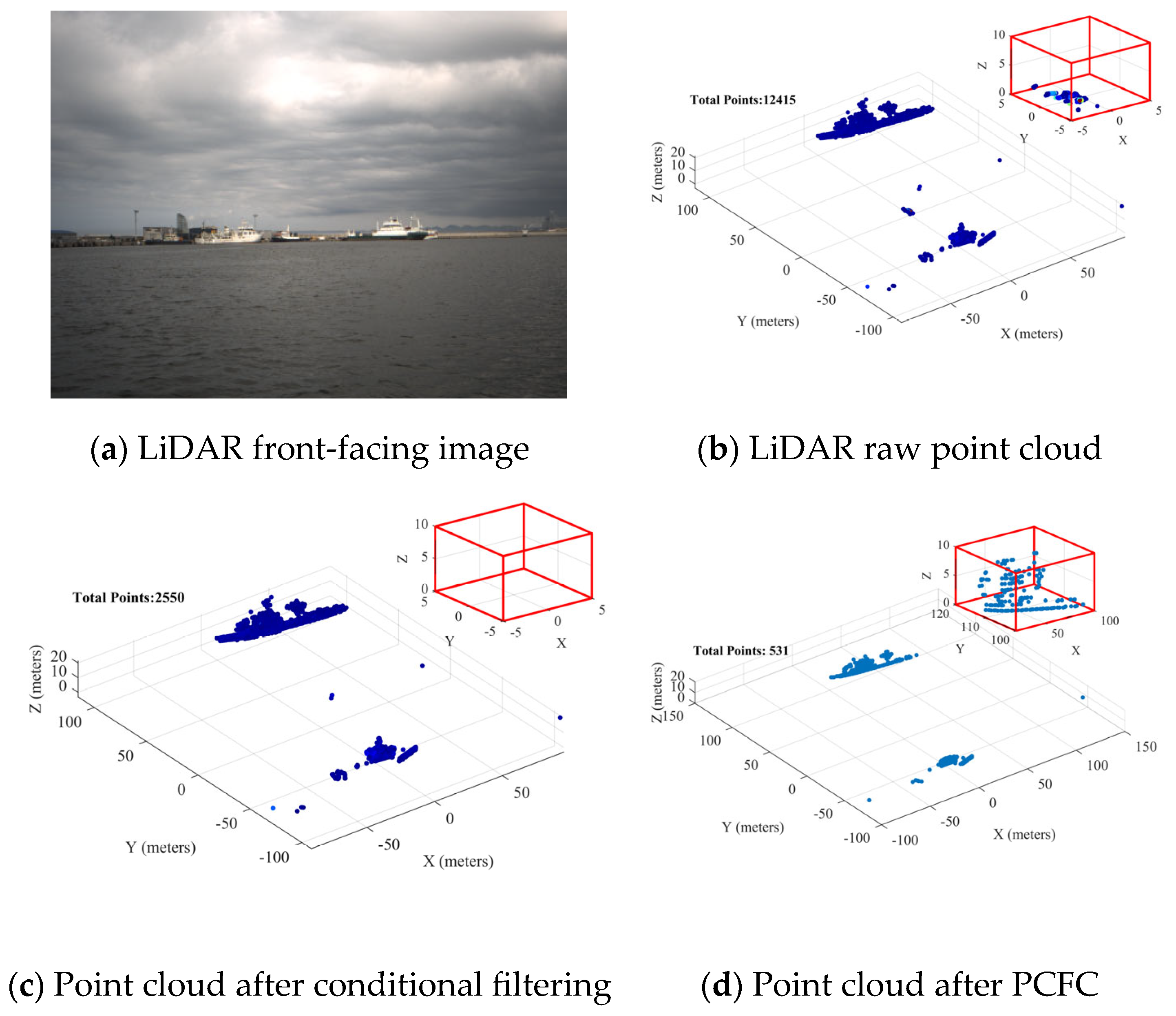

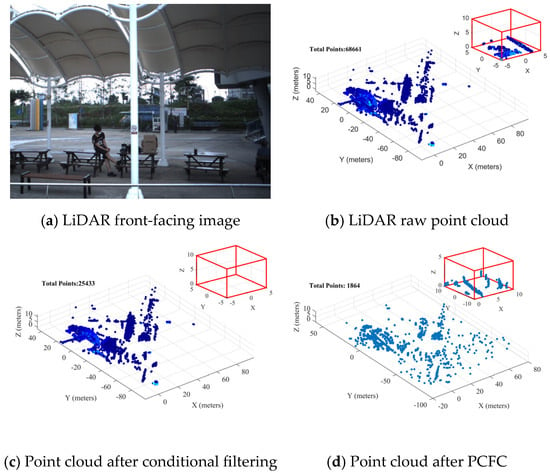

Figures 4 and 5 show the effects of point cloud filtering on sparse and dense point clouds from the Pohang dataset, respectively. By combining conditional filtering and point cloud compression, the method adapts to different navigation environments, removing noise efficiently while preserving important point cloud information in complex settings. As Figure 4 shows, the point cloud is relatively sparse in open water, and conventional filtering methods (such as statistical filtering) can easily eliminate isolated ship points in the environment, whereas conditional filtering combined with point cloud compression removes most redundant points while retaining environmental features. Figure 4 also shows that conditional filtering effectively removes the wake points around the vessel and the redundant points of the vessel itself. Because the point cloud is sparse, only 2550 points remain after conditional filtering, and point cloud compression reduces this to 531. For dense point clouds, conditional filtering followed by point cloud compression likewise removes redundant points effectively: as shown in Figure 5, 25,433 points remain after conditional filtering, and after voxel filtering further removes redundant points, the final point count is 1864.
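
The compression method of Cao et al. [51] is not reproduced here. As a simple baseline for the voxel filtering mentioned above, a voxel-grid downsampler that replaces all points in an occupied voxel by their centroid can be sketched as follows; the voxel size is a hypothetical tuning parameter.

```python
import numpy as np


def voxel_downsample(xyz, voxel_size):
    """Replace all points falling in the same cubic voxel by their centroid."""
    keys = np.floor(xyz / voxel_size).astype(np.int64)        # integer voxel coordinates
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)          # keep the inverse 1-D across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, xyz)                              # accumulate per-voxel sums
    return sums / counts[:, None]
```

Larger voxels give stronger reduction at the cost of geometric detail, which is the same trade-off as choosing a compression ratio.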

Figure 4.

Filtering effect of sparse point clouds in open water scenarios.

Figure 5.

Filtering effect of dense point clouds in port waterway scenarios.

To ensure that the geometric features of the point cloud can be restored as fully as possible after compression, this paper compresses the filtered point cloud with a low compression ratio and high-precision parameters. Figure 4d shows that in the sparse scenario, the points of distant ships remain closely arranged after compression; Figure 5d shows that in the dense scenario, the point cloud remains evenly distributed and densely packed after low-ratio compression, providing accurate features for downstream point cloud registration and object recognition. The enlarged box highlights the dock edge and the columns behind it. The local enlarged views in Figures 4 and 5 show that at compression ratios of 5 to 10 times, the point cloud still preserves its main geometric features.

In summary, whether in sparse or dense point cloud scenarios, the proposed PCFC method can simplify the original complex point cloud data while preserving key environmental features, making subsequent processing and analysis more convenient and efficient.

3.2. Improved FPFH Feature Extraction

After the point cloud data are processed with the PCFC method, the point cloud in the sparse scenario already exhibits distinct feature points, while in the dense scenario many small objects' points are retained because no statistical filtering is applied. Next, the improved FPFH is used to extract feature points from the point cloud while removing most of the less significant points.

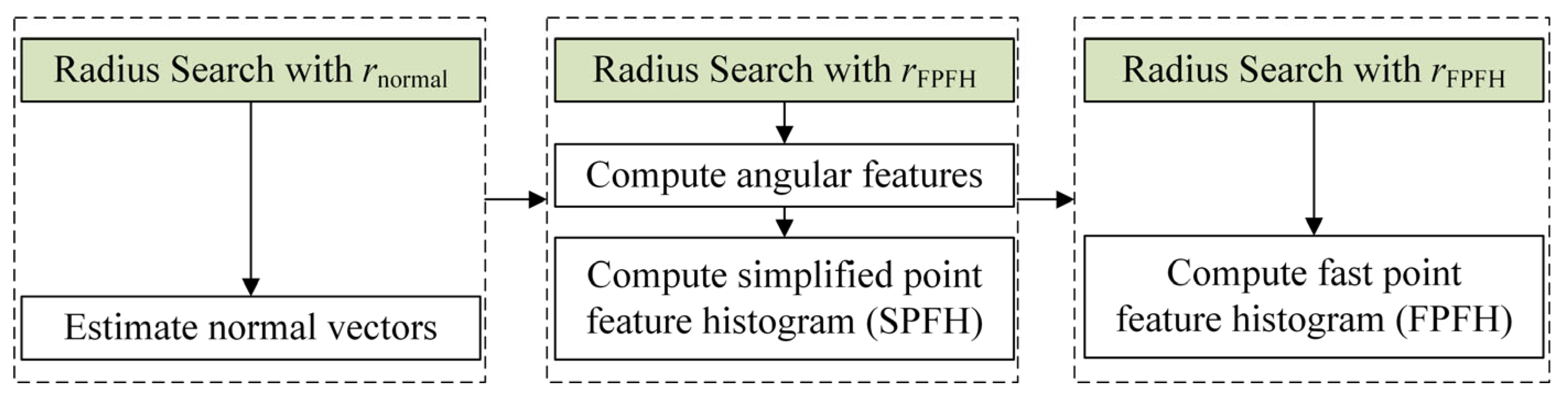

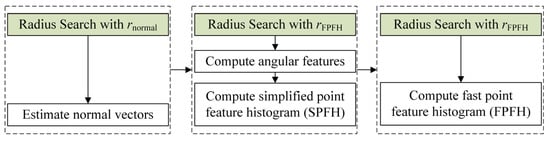

As shown in Figure 6, the process of traditional FPFH computation mainly follows three steps: (1) estimating the normal vectors for each point using neighboring points within a radius; (2) extracting angular features between the query point and its neighboring points within the radius, and then calculating the Simplified Point Feature Histograms (SPFH) based on the distribution of the angular features; (3) computing the FPFH by taking the weighted average of the SPFH of the neighboring points [23].

Figure 6.

Traditional FPFH framework.

However, traditional FPFH may not be suitable for real-time processing of onboard LiDAR data in water scenarios. For example, computing FPFH for point clouds from a 64-line LiDAR sensor, with 30 K to 80 K points per scan, takes more than 0.3 s even with multithreading on consumer-grade laptops, and most ASVs are equipped with low-power devices of limited computational capability [45]. An analysis of previous studies on FPFH for laser point cloud registration reveals two computational bottlenecks in traditional FPFH. First, the radius search for neighboring points reduces computational efficiency (green section of Figure 6). Second, because points are not checked for reliability, SPFH and FPFH are computed unnecessarily for unreliable points, which both degrades the expressiveness of the FPFH features of neighboring points and reduces computational efficiency.

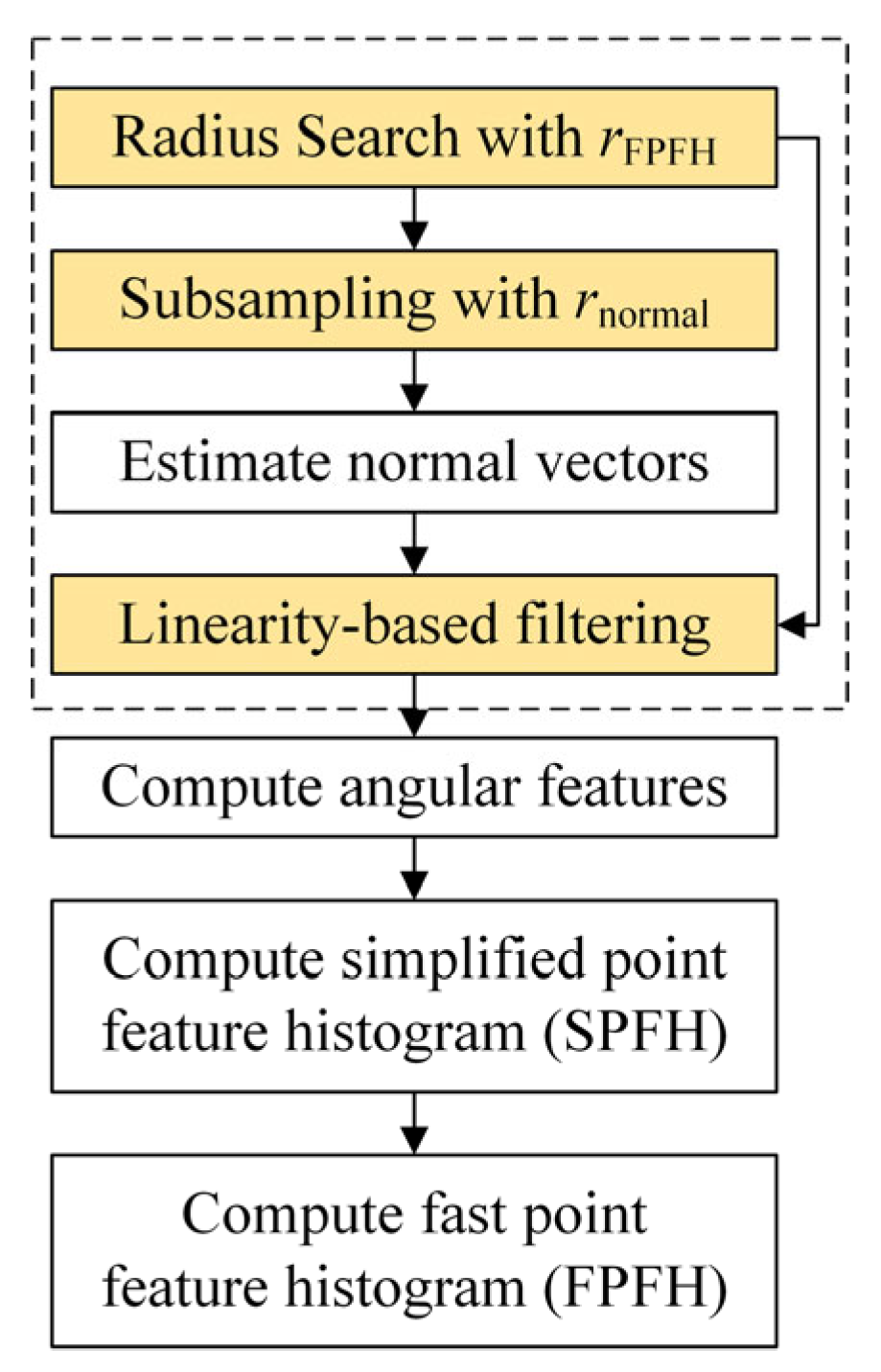

To address these two computational bottlenecks, this paper improves the radius search strategy and applies linearity filtering based on Principal Component Analysis (PCA) to enhance computational efficiency. The improved FPFH framework is shown in Figure 7. First, to avoid unnecessary computation, a radius search is performed only once for each point using the radius $r_{\text{FPFH}}$, and the results are reused. The neighboring points used for normal estimation are then subsampled from the output of this search with a radius $r_{\text{normal}}$ satisfying $r_{\text{normal}} < r_{\text{FPFH}}$ (i.e., $\mathcal{N}^{\text{normal}}_q \subseteq \mathcal{N}^{\text{FPFH}}_q$). Second, a reliability check is conducted for each point: if the cardinality of $\mathcal{N}^{\text{normal}}_q$ is less than $\tau_{\text{num}}$ or the linearity exceeds $\tau_{\text{lin}}$, the point is excluded from the computation of angular features, SPFH, and FPFH. Here, $\tau_{\text{num}}$ and $\tau_{\text{lin}}$ are user-defined thresholds.
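
The neighborhood reuse can be sketched as follows: the radius search runs once with r_fpfh, and the normal-estimation neighborhood is taken as the subset of that result within r_normal. Brute-force distances stand in for the K-d tree, and the function names are hypothetical.

```python
import numpy as np


def radius_search(P, q, r):
    """Indices of all points of P within distance r of P[q] (brute force in place of a k-d tree)."""
    return np.flatnonzero(np.linalg.norm(P - P[q], axis=1) <= r)


def shared_neighborhoods(P, q, r_fpfh, r_normal):
    """One radius search with r_fpfh; the normal-estimation neighborhood is the subset
    of that result within r_normal, so no second search over P is needed."""
    if not r_normal < r_fpfh:
        raise ValueError("r_normal must be smaller than r_fpfh")
    I_fpfh = radius_search(P, q, r_fpfh)
    dist = np.linalg.norm(P[I_fpfh] - P[q], axis=1)
    I_normal = I_fpfh[dist <= r_normal]     # subset of I_fpfh by construction
    return I_fpfh, I_normal
```

The second step only touches the few points already returned by the first search, which is where the saving over a separate search comes from.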

Figure 7.

Improved FPFH framework.

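Specifically, the radius search function $\mathcal{T}(\cdot)$ is defined using a K-d tree built on $\mathcal{P}$; it outputs the indices $\mathcal{I}$ of the neighboring points as follows:

$$\mathcal{I} = \mathcal{T}(\mathbf{p}_q, r_{\text{FPFH}}) = \{\, s \mid \|\mathbf{p}_s - \mathbf{p}_q\|_2 \le r_{\text{FPFH}},\ \mathbf{p}_s \in \mathcal{P} \,\} \tag{1}$$

where $\mathbf{p}_q$ is the query point, $r_{\text{FPFH}}$ is the radius for FPFH feature computation, $\mathcal{P}$ is the source or target point cloud, and $s$ denotes the index of a point in $\mathcal{P}$. Using Equation (1), the neighboring points corresponding to the indices in $\mathcal{I}$, $\mathcal{N}^{\text{FPFH}}_q = \{\mathbf{p}_s \mid s \in \mathcal{I}\}$, are then obtained.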

The radius search aims to exploit the geometric relationship between the query point and its neighbors to build feature descriptors. However, if there are too few neighbors around the query point, the normal vector of $\mathbf{p}_q$ is likely to be inaccurate, which ultimately degrades the quality of the feature descriptor. Therefore, if $|\mathcal{N}^{\text{FPFH}}_q| < \tau_{\text{num}}$, normal computation is skipped and the query point is excluded from subsequent calculations. Otherwise, $\mathcal{N}^{\text{normal}}_q = \{\mathbf{p}_s \in \mathcal{N}^{\text{FPFH}}_q \mid \|\mathbf{p}_s - \mathbf{p}_q\|_2 \le r_{\text{normal}}\}$ is subsampled from $\mathcal{N}^{\text{FPFH}}_q$.

If $|\mathcal{N}^{\text{normal}}_q| \ge \tau_{\text{num}}$, the normal vector $\mathbf{n}_q$ and the linearity $\lambda_q$ of $\mathcal{N}^{\text{normal}}_q$ are estimated using PCA. A large $\lambda_q$ indicates that $\mathcal{N}^{\text{normal}}_q$ follows a linear or point-like distribution, which makes the normal ambiguous, because the direction orthogonal to a line is not uniquely determined. For this reason, $\mathbf{p}_q$, $\mathbf{n}_q$, and $\mathcal{I}$ are retained only when $\lambda_q \le \tau_{\text{lin}}$. This improvement corresponds to the linearity-based feature point filtering shown in Figure 7.
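
The PCA-based reliability check can be sketched as below. Since the exact linearity formula is not stated, the common definition (l1 - l2) / l1, with l1 >= l2 >= l3 the covariance eigenvalues, is assumed; tau_num and tau_lin play the role of the user-defined thresholds, and the function names are hypothetical.

```python
import numpy as np


def pca_normal_linearity(neighbors):
    """PCA on an (N, 3) neighborhood. The normal is the eigenvector of the smallest
    covariance eigenvalue; linearity = (l1 - l2) / l1 with l1 >= l2 >= l3 (assumed definition)."""
    X = neighbors - neighbors.mean(axis=0)
    evals, evecs = np.linalg.eigh(X.T @ X / len(neighbors))   # ascending eigenvalues
    l3, l2, l1 = evals
    normal = evecs[:, 0]
    linearity = (l1 - l2) / l1 if l1 > 0 else 1.0            # coincident points: degenerate
    return normal, linearity


def reliable_normal(neighbors, tau_num, tau_lin):
    """Return the normal if the neighborhood passes both reliability checks, else None."""
    if len(neighbors) < tau_num:
        return None
    normal, linearity = pca_normal_linearity(neighbors)
    return normal if linearity <= tau_lin else None
```

A planar patch gives linearity near 0 and a well-defined normal, while points along a line give linearity near 1 and are rejected.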

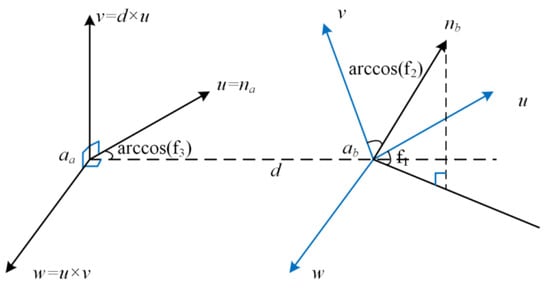

During feature point filtering, some indices in $\mathcal{I}$ are not assigned a normal vector. These typically correspond to points at the boundary of the point cloud, which lack enough neighbors for normal estimation but are nevertheless included in the neighborhoods of other points, as shown in Figure 8. These indices are therefore removed, and $\mathcal{I}$ is updated to $\mathcal{I}' = \{\, s \in \mathcal{I} \mid \mathbf{n}_s \text{ has been assigned} \,\}$. Consequently, only the indices $q$ whose updated neighbor sets satisfy $|\mathcal{I}'_q| \ge \tau_{\text{num}}$ form the final valid query index set $\mathcal{J}$.
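
The index update can be expressed with plain Python containers. In this sketch, neighbor_idx maps each query index to its neighbor indices and has_normal holds the indices whose normals passed the reliability checks; both names, and the requirement that a query also have its own normal, are assumptions for illustration.

```python
def prune_and_select(neighbor_idx, has_normal, tau_num):
    """Drop neighbor indices that never received a normal (typically boundary points),
    then keep as valid queries only points that have a normal themselves and still
    have at least tau_num neighbors.

    neighbor_idx: dict mapping query index q -> list of neighbor indices
    has_normal:   set of indices whose normal passed the reliability checks"""
    pruned = {q: [s for s in idx if s in has_normal] for q, idx in neighbor_idx.items()}
    J = sorted(q for q, idx in pruned.items() if q in has_normal and len(idx) >= tau_num)
    return pruned, J
```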

Figure 8.

Schematic diagram of the selection of boundary points in the point cloud.

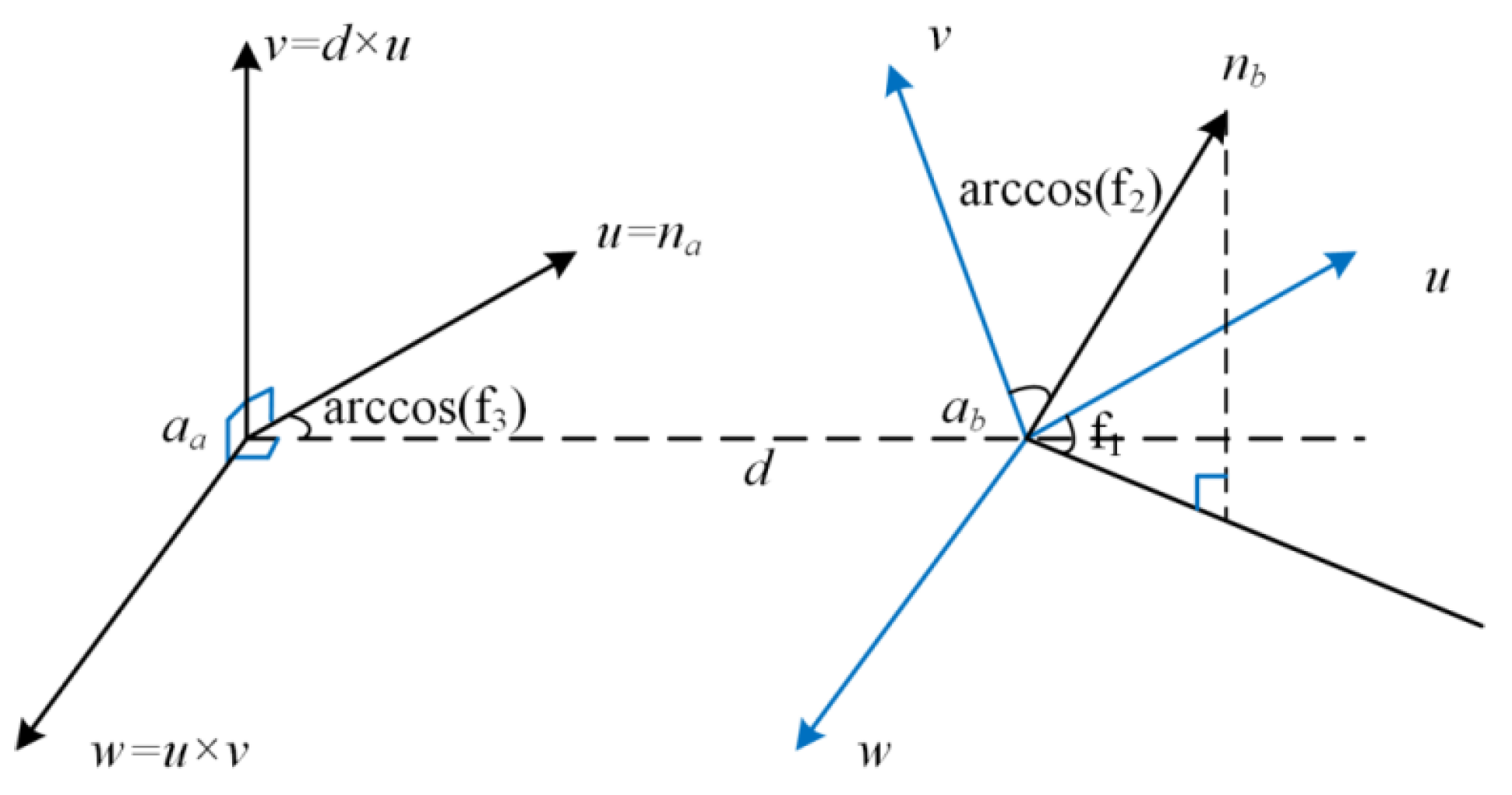

Finally, the Simplified Point Feature Histogram (SPFH) and Fast Point Feature Histogram (FPFH) features are computed for the query points (i.e., the indices in $\mathcal{J}$). Let the neighboring points of the query point $\mathbf{p}_q$ be $\{\mathbf{p}_k \mid k \in \mathcal{K}_q\}$, where $\mathcal{K}_q$ is the index set. For each pair of points, the point whose estimated normal makes the smaller angle with the connecting vector (i.e., the vector pointing toward the other point) is designated as the source point $\mathbf{p}_s$, and the other as the target point $\mathbf{p}_t$; a local coordinate system $(\mathbf{u}, \mathbf{v}, \mathbf{w})$ is then established for $\mathbf{p}_s$ and $\mathbf{p}_t$, as shown in Figure 9.

Figure 9.

Local coordinate systems of $\mathbf{p}_s$ and $\mathbf{p}_t$.

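Next, let the normal vectors of $\mathbf{p}_s$ and $\mathbf{p}_t$ be $\mathbf{n}_s$ and $\mathbf{n}_t$, respectively. The direction vector between $\mathbf{p}_s$ and $\mathbf{p}_t$ is defined as $\mathbf{d} = (\mathbf{p}_t - \mathbf{p}_s)/\|\mathbf{p}_t - \mathbf{p}_s\|_2$, where $\|\cdot\|_2$ denotes the L2-norm. Based on the Darboux uvw frame [23], the three angle features $f_1$, $f_2$, and $f_3$ are defined as follows:

$$f_1 = \langle \mathbf{v}, \mathbf{n}_t \rangle, \qquad f_2 = \langle \mathbf{u}, \mathbf{d} \rangle, \qquad f_3 = \arctan\big(\langle \mathbf{w}, \mathbf{n}_t \rangle, \langle \mathbf{u}, \mathbf{n}_t \rangle\big) \tag{2}$$

where $\mathbf{u} = \mathbf{n}_s$, $\mathbf{v} = \mathbf{u} \times \mathbf{d}$, $\mathbf{w} = \mathbf{u} \times \mathbf{v}$, $\langle \cdot, \cdot \rangle$ denotes the inner product, and $\times$ denotes the cross product.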
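
The source/target selection and the three angle features can be sketched with NumPy as follows. Normalizing v so that (u, v, w) is orthonormal, and returning None when the source normal is parallel to the connecting line, are implementation choices assumed here; the function name is hypothetical.

```python
import numpy as np


def darboux_features(p_a, n_a, p_b, n_b):
    """Angle features (f1, f2, f3) of a point pair in the Darboux uvw frame.

    Returns None when the frame is undefined (source normal parallel to the connecting line)."""
    d = (p_b - p_a) / np.linalg.norm(p_b - p_a)
    # Source = point whose normal makes the smaller angle with the vector toward the other point.
    if np.dot(n_a, d) >= np.dot(n_b, -d):
        n_s, n_t = n_a, n_b
    else:
        n_s, n_t, d = n_b, n_a, -d          # swap roles; d again points from source to target
    u = n_s
    v = np.cross(u, d)
    norm_v = np.linalg.norm(v)
    if norm_v < 1e-12:
        return None
    v = v / norm_v                           # normalized so that (u, v, w) is orthonormal
    w = np.cross(u, v)
    f1 = np.dot(v, n_t)
    f2 = np.dot(u, d)
    f3 = np.arctan2(np.dot(w, n_t), np.dot(u, n_t))
    return f1, f2, f3
```

For two coplanar points with parallel normals all three features are zero; tilting the target normal about v by an angle a gives f3 = -a in this convention.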

Next, define a mapping function that maps the l-th angle feature fl (where l∈{1, 2, 3}) to the index of the l-th histogram, as follows:

where denotes the floor function, H represents the size of the histogram, and is a small positive number (to prevent erroneously returning H + 1 when fl equals fl,max); fl,min and fl,max denote the minimum and maximum values of fl, respectively. Then, the SPFH feature of is defined as , with each element defined as follows:

where the indicator function outputs 1 if its condition is satisfied and 0 otherwise.
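The bin mapping and SPFH accumulation can be sketched in C++ as below. The sketch assumes that the three per-feature histograms are concatenated into one vector of length 3H and that raw counts are kept (some implementations, such as PCL's, normalize each sub-histogram). The text implies 1-based bin indices 1..H; the sketch uses 0-based indices and additionally clamps values that fall just outside [fl,min, fl,max] through rounding. `binIndex` and `computeSPFH` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// 0-based bin index: floor(H * (f - fmin) / (fmax - fmin + eps)), clamped to [0, H - 1].
int binIndex(double f, double fmin, double fmax, int H, double eps = 1e-6) {
    assert(H > 0 && fmax > fmin);
    const int idx = static_cast<int>(std::floor(H * (f - fmin) / (fmax - fmin + eps)));
    return std::max(0, std::min(idx, H - 1));  // guards against rounding outside the range
}

using Triplet = std::array<double, 3>;  // (f1, f2, f3) for one neighbor pair

// SPFH as three H-bin histograms concatenated into a vector of length 3H;
// each pair adds 1 to one bin per feature (the indicator-function sum).
std::vector<double> computeSPFH(const std::vector<Triplet>& pairFeatures,
                                const Triplet& fmin, const Triplet& fmax, int H) {
    std::vector<double> hist(3 * H, 0.0);
    for (const Triplet& f : pairFeatures)
        for (int l = 0; l < 3; ++l)
            hist[l * H + binIndex(f[l], fmin[l], fmax[l], H)] += 1.0;
    return hist;
}
```

With H = 5 and the range [−1, 1], a feature value of exactly 1 lands in the last bin (index 4) instead of overflowing, which is the role of ε described above.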

Finally, the FPFH feature of the query point is defined as follows:

In this equation, the weighting term represents the distance between the query point and each of its neighboring points.
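The FPFH combination can be sketched as follows, assuming the standard weighting of Rusu et al., FPFH(q) = SPFH(q) + (1/k) Σ SPFH(pi)/ωi, where ωi is the distance from the query point q to its i-th neighbor pi; the paper's Equation (5) may differ in normalization, and `computeFPFH` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// FPFH(q) = SPFH(q) + (1/k) * sum_i SPFH(p_i) / w_i, where w_i = distance(q, p_i).
std::vector<double> computeFPFH(const std::vector<double>& spfhQuery,
                                const std::vector<std::vector<double>>& spfhNeighbors,
                                const std::vector<double>& distances) {
    assert(spfhNeighbors.size() == distances.size());
    std::vector<double> fpfh = spfhQuery;
    const std::size_t k = spfhNeighbors.size();
    if (k == 0) return fpfh;  // isolated point: FPFH reduces to its own SPFH
    for (std::size_t i = 0; i < k; ++i) {
        assert(distances[i] > 0.0);  // the query point itself must not be in the list
        assert(spfhNeighbors[i].size() == fpfh.size());
        const double weight = 1.0 / (static_cast<double>(k) * distances[i]);
        for (std::size_t b = 0; b < fpfh.size(); ++b)
            fpfh[b] += weight * spfhNeighbors[i][b];
    }
    return fpfh;
}
```

For example, with SPFH(q) = {1, 0}, neighbor histograms {2, 0} and {0, 4}, and distances 1 and 2, the result is {2, 1}: closer neighbors contribute more.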

In summary, removing redundant points and filtering out unreliable feature points in advance improves computation speed to a certain extent. It also improves feature quality: unreliable normal vectors lead to inaccurate angular features in Equation (2), which in turn degrade the SPFH and FPFH computed by Equations (3) to (5).

3.3. Two-Step Registration Based on SAC-IA and Small_GICP

The SAC-IA algorithm is a registration algorithm based on sample consensus. Unlike traditional registration algorithms that follow a greedy strategy, SAC-IA repeatedly samples points from the source point cloud and, for each sampled point, finds points with similar FPFH features in the target point cloud. This establishes correspondences between the two point cloud frames and quickly yields a good initial transformation. Figure 10 shows the resulting two-step registration workflow as a UML activity diagram: SAC-IA performs the initial coarse registration, and Small_GICP then performs the fine registration.

Figure 10.

Two-step point cloud registration workflow based on SAC-IA and Small_GICP.

Although relatively reliable feature points have been extracted from the point cloud, an excessive number of correspondences still reduces computational efficiency. To address this, this paper accelerates SAC-IA by ranking correspondences according to feature similarity. The algorithm proceeds as follows:

Step 1: Select n (n > 3) sampled points from the source point cloud P, ensuring that the distance between any two sampled points is greater than a predetermined threshold dmin.

Step 2: For each sampled point, search the target point cloud Q for points with similar FPFH features and take the point with the highest FPFH feature similarity as its correspondence. Cosine similarity is used to score these sampled point pairs, and the pairs with the highest similarity among the n sampled pairs are used to compute the initial transformation matrix. Through this feature ranking, points with low feature significance are filtered out in advance so that the number of correspondences does not exceed a preset maximum.

Step 3: Calculate the initial rigid transformation matrix for the corresponding point pairs using SVD.

Based on this transformation matrix, the sum of the distance errors of the transformed points is computed using the Huber penalty function. Steps 1 to 3 are then repeated, and the rigid transformation that yields the minimum error is selected as the optimal transformation for the initial registration.
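The sampling, ranking, and scoring parts of Steps 1 to 3 can be sketched in C++ as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: nearest neighbors are found by brute force instead of a k-d tree, samples are taken greedily from a caller-supplied candidate order (SAC-IA draws them randomly), the Huber penalty uses the common piecewise form with threshold δ, and the SVD-based rigid fit of Step 3 is omitted (any Kabsch or Umeyama solver can fill that role). All names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;
using Feature = std::vector<double>;

double sqDist(const Vec3& a, const Vec3& b) {
    double s = 0.0;
    for (int i = 0; i < 3; ++i) s += (a[i] - b[i]) * (a[i] - b[i]);
    return s;
}

// Step 1: pick up to n source indices, in candidate order, pairwise more than dMin apart.
std::vector<int> sampleSpread(const std::vector<Vec3>& P, const std::vector<int>& candidates,
                              std::size_t n, double dMin) {
    std::vector<int> picked;
    for (int c : candidates) {
        if (picked.size() == n) break;
        const bool farEnough = std::all_of(picked.begin(), picked.end(),
            [&](int p) { return sqDist(P[c], P[p]) > dMin * dMin; });
        if (farEnough) picked.push_back(c);
    }
    return picked;
}

double cosineSimilarity(const Feature& a, const Feature& b) {
    assert(a.size() == b.size());
    double ab = 0.0, aa = 0.0, bb = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        ab += a[i] * b[i]; aa += a[i] * a[i]; bb += b[i] * b[i];
    }
    return (aa > 0.0 && bb > 0.0) ? ab / std::sqrt(aa * bb) : 0.0;
}

struct Correspondence { int src; int tgt; double score; };

// Step 2: most similar target feature per sample, ranked by cosine similarity, capped at maxCorr.
std::vector<Correspondence> rankedCorrespondences(const std::vector<int>& samples,
                                                  const std::vector<Feature>& srcFeat,
                                                  const std::vector<Feature>& tgtFeat,
                                                  std::size_t maxCorr) {
    std::vector<Correspondence> out;
    for (int s : samples) {
        Correspondence best{s, -1, -std::numeric_limits<double>::infinity()};
        for (std::size_t t = 0; t < tgtFeat.size(); ++t) {
            const double c = cosineSimilarity(srcFeat[s], tgtFeat[t]);
            if (c > best.score) best = {s, static_cast<int>(t), c};
        }
        if (best.tgt >= 0) out.push_back(best);
    }
    std::sort(out.begin(), out.end(),
              [](const Correspondence& a, const Correspondence& b) { return a.score > b.score; });
    if (out.size() > maxCorr) out.resize(maxCorr);
    return out;
}

// Huber penalty with threshold delta: quadratic for small errors, linear for large ones.
double huber(double e, double delta) {
    e = std::fabs(e);
    return e <= delta ? 0.5 * e * e : delta * (e - 0.5 * delta);
}

// Score of a candidate transform (R, t): sum of Huber-penalized nearest-neighbor distances.
double transformError(const std::vector<Vec3>& src, const std::vector<Vec3>& tgt,
                      const Mat3& R, const Vec3& t, double delta) {
    double total = 0.0;
    for (const Vec3& p : src) {
        Vec3 q{};
        for (int r = 0; r < 3; ++r) q[r] = R[r][0] * p[0] + R[r][1] * p[1] + R[r][2] * p[2] + t[r];
        double best = std::numeric_limits<double>::infinity();
        for (const Vec3& g : tgt) best = std::min(best, sqDist(q, g));  // brute-force nearest neighbor
        total += huber(std::sqrt(best), delta);
    }
    return total;
}
```

The Huber penalty keeps a few gross outliers (for example, residual wave points) from dominating the score, while capping the ranked correspondences bounds the cost of each iteration.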

Because the initial registration produced by SAC-IA is not sufficiently accurate, this paper uses Small_GICP to refine the registration, taking the coarse alignment as the initial guess. Small_GICP is an optimized reimplementation of fast_gicp that provides efficient parallel computation for fine point cloud registration; its core registration algorithm achieves up to a 2× speedup over fast_gicp. It also supports OpenMP and Intel TBB as parallel backends, which makes it feasible to deploy the proposed method on low-power x86 and Arm platforms.
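For reference, assuming Small_GICP is run with its generalized-ICP cost, the fine registration minimizes the GICP objective of Segal et al. (2009) over the rigid transform (R, t). Here pi ∈ P is a source point, qi ∈ Q is its corresponding target point under the current estimate, and Ci^P and Ci^Q are local covariances estimated from neighboring points; this notation is ours, not the paper's.

```latex
(R^{*}, \mathbf{t}^{*}) = \arg\min_{R,\,\mathbf{t}} \sum_{i} \mathbf{d}_i^{\top}
\left( C_i^{Q} + R\, C_i^{P} R^{\top} \right)^{-1} \mathbf{d}_i,
\qquad
\mathbf{d}_i = \mathbf{q}_i - \left( R\,\mathbf{p}_i + \mathbf{t} \right)
```

The SAC-IA result supplies the initial (R, t) from which this nonlinear least-squares problem is solved iteratively, which is why a reasonable coarse alignment matters for convergence.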

4. Experimental Validation

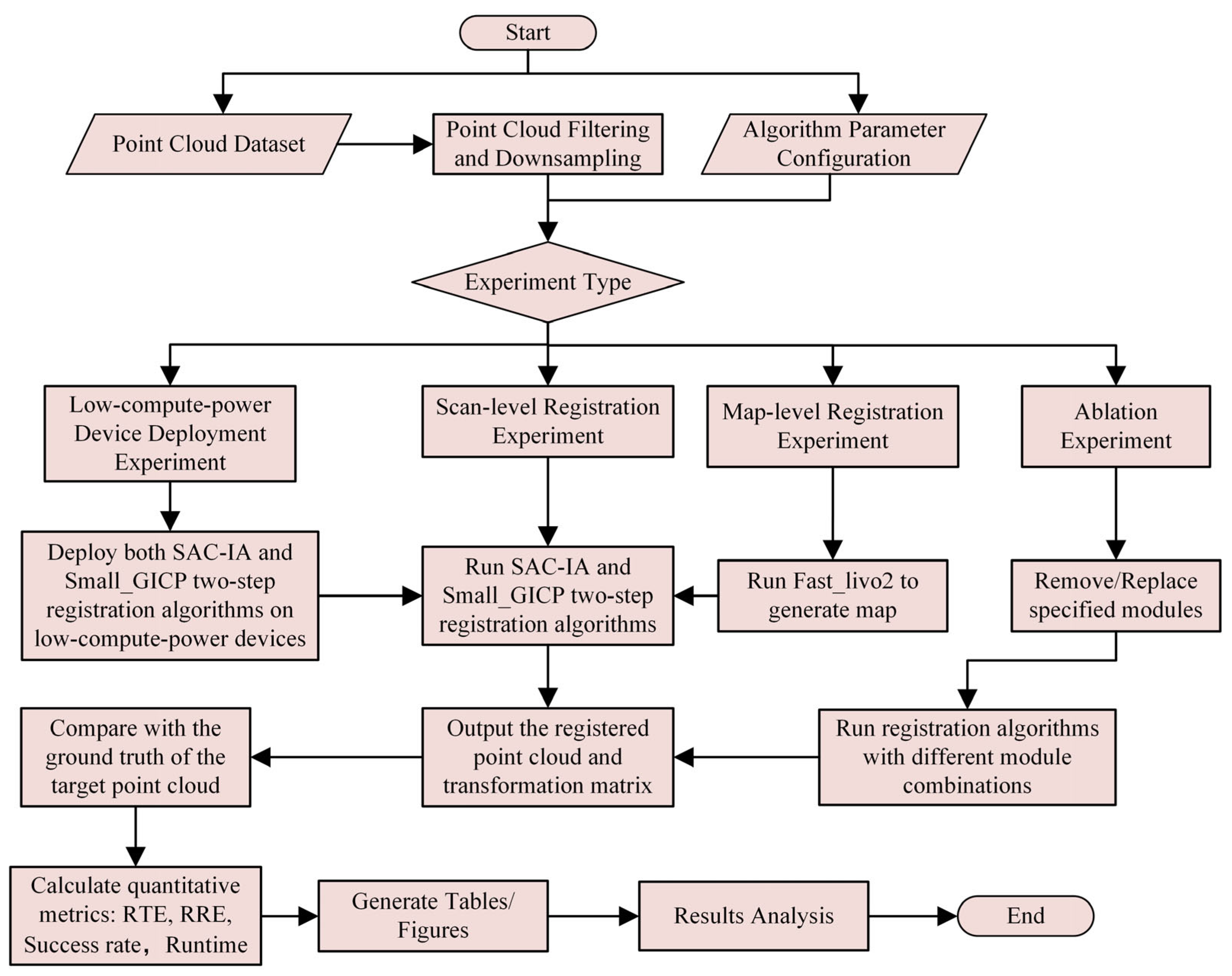

This paper evaluates the proposed point cloud registration method on the KITTI benchmark and the Pohang Canal dataset, from the scan level to the map level. First, an overview of the Pohang dataset is given, and the similarities and differences between LiDAR data from waterway and road traffic scenarios are briefly analyzed using the Pohang dataset as an example. The experimental setup and evaluation metrics are then described. The results show that the proposed method performs comparably to or better than state-of-the-art point cloud registration methods at the scan level and also extends well to map-level registration and to low-power computing platforms. Figure 11 gives an overview of the experimental validation workflow.

Figure 11.

Experimental validation workflow diagram.

4.1. Dataset

Pohang Canal Dataset: This dataset contains measurements from various perception and navigation sensors, such as stereo cameras, infrared cameras, omnidirectional cameras, three LiDARs, a maritime radar, a global positioning system, and an attitude and heading reference system [52]. The data were collected along a 7.5-kilometer waterway in Pohang, South Korea (Figure 12) that includes narrow canals, inner and outer ports, and coastal areas, under diverse weather and visual conditions.

Figure 12.

Vessel trajectories and dataset scenes.

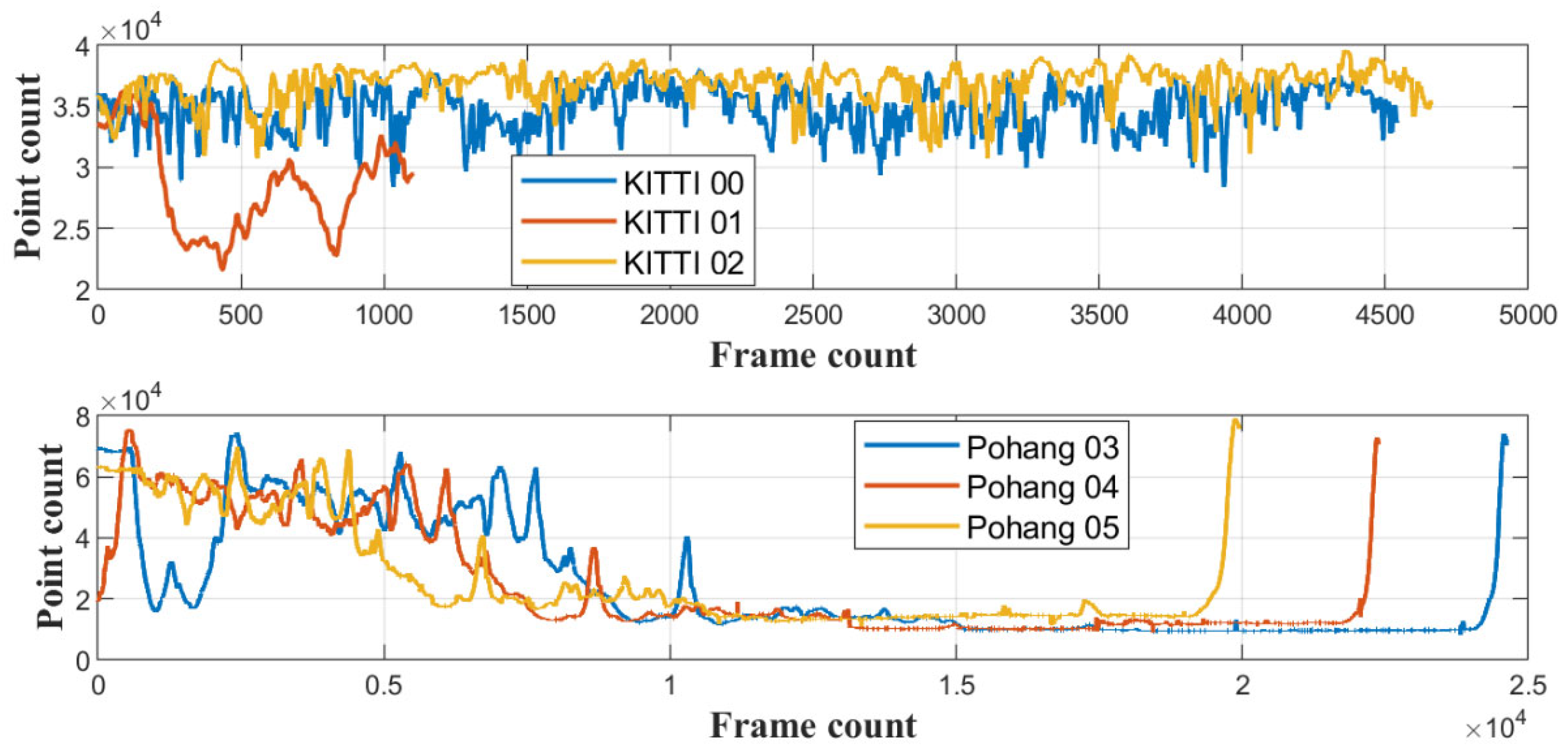

The KITTI dataset is a popular benchmark in the field of autonomous driving. This paper compares the number of frames and the number of points per frame of KITTI sequences 00, 01, and 02 with those of Pohang sequences 03, 04, and 05. Note that the KITTI data were collected by a single 64-line LiDAR, whereas the Pohang data were collected by three LiDARs; only the point clouds from the 64-line primary LiDAR are compared here. As shown in Figure 13, the number of points per frame in KITTI fluctuates little, whereas it varies significantly between frames in the Pohang dataset. Combined with Figure 12, the number of points per frame is generally greater than 40,000 in the narrow canal (Region 1 in Figure 12) and the inner port (Region 2), between 20,000 and 40,000 in the outer port (Region 3), and only about 10,000 in coastal waters (Region 4). For reference, this LiDAR model can capture up to about 130,000 points per frame.

Figure 13.

Comparison of point cloud quantities between the primary LiDAR of the Pohang dataset and the LiDAR of the KITTI dataset.

4.2. Experimental Setup and Evaluation Metrics

In the experiments, the KITTI benchmark dataset and sequence 03 of the Pohang dataset were used to evaluate scan-level registration between a source point cloud and a target point cloud. To test the scalability of the proposed method, map-level registration was also evaluated. For map-level point clouds, W denotes the submap window size; for example, W = 3 means that three scan-level point clouds are combined to construct the map point cloud. The map-level point clouds were taken from the output of the state-of-the-art SLAM system Fast_livo2 [53].
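A submap with window W can be assembled as sketched below, assuming that each scan comes with a pose (rotation R and translation t) mapping it into a common map frame, as a SLAM system such as Fast_livo2 provides. The sketch only concatenates transformed points; any downsampling or compression applied to the maps is not shown, and `Pose` and `buildSubmap` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;
struct Pose { Mat3 R; Vec3 t; };  // maps scan coordinates into the map frame

// Concatenates the W scans ending at index `last` (fewer at the start of the
// sequence) after transforming each scan into the map frame with its pose.
std::vector<Vec3> buildSubmap(const std::vector<std::vector<Vec3>>& scans,
                              const std::vector<Pose>& poses, std::size_t last, std::size_t W) {
    assert(scans.size() == poses.size() && last < scans.size() && W >= 1);
    const std::size_t first = (last + 1 >= W) ? last + 1 - W : 0;
    std::vector<Vec3> submap;
    for (std::size_t k = first; k <= last; ++k) {
        const Pose& T = poses[k];
        for (const Vec3& p : scans[k]) {
            Vec3 q{};
            for (int r = 0; r < 3; ++r)
                q[r] = T.R[r][0] * p[0] + T.R[r][1] * p[1] + T.R[r][2] * p[2] + T.t[r];
            submap.push_back(q);
        }
    }
    return submap;
}
```

With W = 3, calling `buildSubmap(scans, poses, k, 3)` yields frames k−2, k−1, and k in one point cloud, which then plays the role of a single "map-level" source or target.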

This paper uses the success rate as a quantitative metric of registration robustness [40]. A registration is considered successful if the translation error is below 2 m and the rotation error is below 5° [54]. Since common mechanical LiDARs publish point clouds at 10 Hz, a runtime below 100 ms is regarded as real-time. For successful registrations, accuracy is evaluated with two metrics: Relative Translation Error (RTE) and Relative Rotation Error (RRE). RTE and RRE measure the difference between the estimated pose transformation and the ground-truth pose change between two frames, reflecting the accuracy and stability of the algorithm in updating position and orientation. They are defined as follows:

In the equations, tn,GT and Rn,GT represent the ground truth of the n-th translation and rotation, respectively; Nsuccess denotes the number of successful registration results.
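The metrics can be computed as sketched below, assuming the definitions commonly used on KITTI, RTE = ‖tn − tn,GT‖2 and RRE = arccos((tr(RnᵀRn,GT) − 1)/2), averaged over the Nsuccess successful pairs, with success meaning RTE < 2 m and RRE < 5°. The type and function names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// One registration result: estimated (R, t) and ground truth (R_gt, t_gt).
struct Estimate { Mat3 R; Vec3 t; Mat3 R_gt; Vec3 t_gt; };

// RTE in meters: Euclidean distance between estimated and ground-truth translations.
double rteMeters(const Vec3& t, const Vec3& tGt) {
    double s = 0.0;
    for (int i = 0; i < 3; ++i) s += (t[i] - tGt[i]) * (t[i] - tGt[i]);
    return std::sqrt(s);
}

// RRE in degrees: rotation angle of R^T * R_gt, using trace(R^T R_gt) = sum_ij R[i][j] * R_gt[i][j].
double rreDegrees(const Mat3& R, const Mat3& RGt) {
    double trace = 0.0;
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) trace += R[i][j] * RGt[i][j];
    const double c = std::max(-1.0, std::min(1.0, (trace - 1.0) / 2.0));  // keep acos in domain
    return std::acos(c) * 180.0 / std::acos(-1.0);
}

struct Summary { double successRate; double meanRte; double meanRre; };

// A pair succeeds if RTE < 2 m and RRE < 5 deg; mean RTE/RRE use successful pairs only.
Summary evaluate(const std::vector<Estimate>& results) {
    int nSuccess = 0;
    double sumRte = 0.0, sumRre = 0.0;
    for (const Estimate& e : results) {
        const double rte = rteMeters(e.t, e.t_gt);
        const double rre = rreDegrees(e.R, e.R_gt);
        if (rte < 2.0 && rre < 5.0) { ++nSuccess; sumRte += rte; sumRre += rre; }
    }
    if (nSuccess == 0) return {0.0, 0.0, 0.0};
    return {static_cast<double>(nSuccess) / results.size(), sumRte / nSuccess, sumRre / nSuccess};
}
```

Averaging only over successful pairs means RTE and RRE describe accuracy, while failures are captured separately by the success rate.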

4.3. Key Parameter Settings

Because maritime traffic scenes differ from road traffic scenes, certain key parameters of the proposed registration algorithm should be adjusted to the specific application. For point cloud filtering and compression, the vessel length is L = 7.9 m, the vessel width is B = 2.6 m, the vessel speed V is derived from the dataset timestamps, the LiDAR mounting height is H = 2.1 m, and the scaling factors are k1 = 20, k2 = 8, and k3 = 1 (strictly speaking, k1, k2, and k3 should be functions of speed, but they are set as constants here for simplicity). The compression level is set to 0, i.e., the lowest compression ratio and the highest precision. For the improved FPFH, the parameters are set as rFPFH = 0.5 m, rnormal = 0.3 m, = 10 and = 0.99. For the SAC-IA algorithm, the distance threshold is set to dmin = 1 m, = 100.
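The named parameter values above can be collected in a configuration structure such as the following C++ sketch. The struct and field names are hypothetical, the parameters whose symbols are not given in the text are omitted, and computing V by finite differences of timestamped positions is our assumption about how the speed is obtained from the dataset.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Parameter values reported in Section 4.3 (struct and field names are hypothetical).
struct RegistrationParams {
    double vesselLength = 7.9;             // L [m]
    double vesselWidth = 2.6;              // B [m]
    double lidarHeight = 2.1;              // H [m], LiDAR mounting height
    double k1 = 20.0, k2 = 8.0, k3 = 1.0;  // wake scaling factors, constant here
    int compressionLevel = 0;              // lowest compression ratio, highest precision
    double rFpfh = 0.5;                    // FPFH search radius [m]
    double rNormal = 0.3;                  // normal-estimation radius [m]
    double dMin = 1.0;                     // SAC-IA minimum sample spacing [m]
};

// Vessel speed V [m/s] from two timestamped positions (assumed finite-difference estimate).
double speedFromTimestamps(const std::array<double, 3>& p0, double t0,
                           const std::array<double, 3>& p1, double t1) {
    assert(t1 > t0);
    const double dx = p1[0] - p0[0], dy = p1[1] - p0[1], dz = p1[2] - p0[2];
    return std::sqrt(dx * dx + dy * dy + dz * dz) / (t1 - t0);
}
```

Grouping the values this way makes the platform-specific ones (L, B, H, k1 to k3) easy to change when the method is moved to a different ASV.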

Additionally, the computer used for the scan-level and map-level registration experiments is equipped with an AMD Ryzen 5 5500 CPU (six cores, 12 threads, 3.6 GHz base frequency), 32 GB of memory, and an NVIDIA GeForce RTX 4060 Ti GPU with 16 GB of VRAM; the experiments are implemented primarily in C++.

4.4. Evaluation of Scan-Level Point Cloud Registration

This experiment evaluates the proposed method on scan-level point cloud registration. To allow a clear comparison of success rates and registration errors with other advanced methods, the point cloud conditional filtering module (ship wake removal) of the proposed method is disabled, since the KITTI benchmark contains no ship wakes. On the KITTI dataset, the proposed method is compared with learning-based methods [54,55,56,57,58], traditional point cloud registration methods [38,39,45,59], and the pose estimation module of STD, a classic loop closure detection method [60].

As shown in Table 1, the scan-level registration success rate demonstrates that the proposed method provides pose estimates robustly. Even with the point cloud conditional filtering module disabled, the proposed method is more robust than the learning-based approaches and achieves registration performance comparable to state-of-the-art methods, indicating that it can match learning-based methods without any training. The proposed method also outperforms the STD pose estimation module [60], which suggests that it could improve the overall performance of a SLAM system when used as front-end odometry for pose estimation.

Table 1.

The RTE, RRE, and success rates of various algorithms on the KITTI dataset.

To compare learning-based and traditional registration methods on water scene data, the two best-performing methods in each category in Table 1 were compared with the proposed method on the Pohang dataset. For the learning-based methods, the authors’ publicly available models pre-trained on KITTI were used for inference on the Pohang dataset. For the traditional methods, the authors’ publicly available source code was compiled and run on the Pohang dataset. Table 2 reports the registration performance of the learning-based methods Predator and D3Feat and the traditional methods FPFH + FGR and FPFH + TEASER, together with the proposed method, on the Pohang dataset.

Table 2.

The RTE, RRE, and success rates of various algorithms on the Pohang dataset.

From Table 2, it can be observed that although there are some similarities between the KITTI dataset and the Pohang dataset (both containing street buildings and trees), the registration performance of the models trained on the KITTI dataset decreases when applied to the Pohang dataset. The registration success rates of Predator and D3Feat on the Pohang dataset drop significantly, and the registration errors among successfully registered results also increase considerably. This poor generalization performance of learning-based methods is why this paper opts for optimization based on traditional methods. The two traditional registration methods, FPFH + FGR and FPFH + TEASER, also see a significant decrease in registration success rates when applied to the Pohang dataset, but the registration errors among successful results do not increase significantly. The proposed method incorporates a vessel wave removal module, which provides better adaptability to water scenes, resulting in a slight improvement in registration performance compared to that on the KITTI dataset.

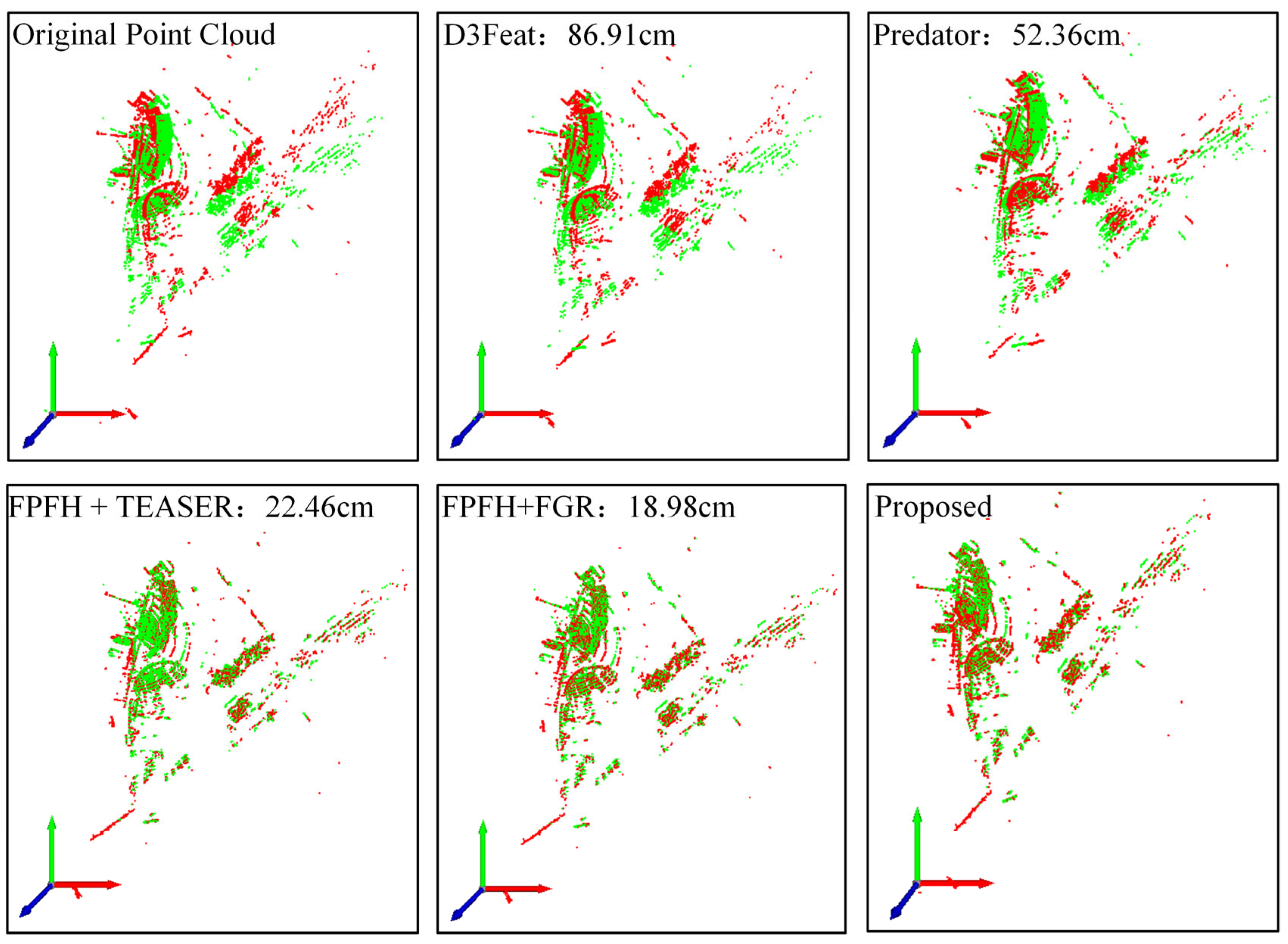

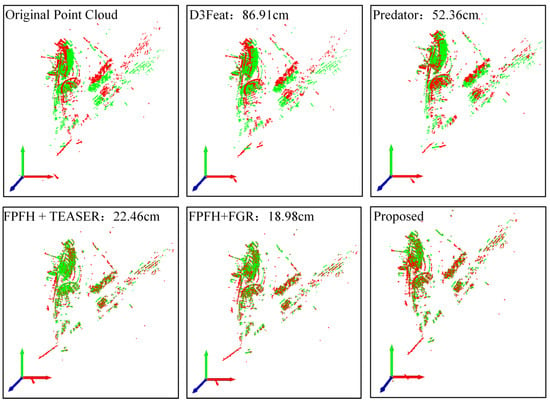

The five methods in Table 2 are also compared visually. Because the ASV in the Pohang dataset navigates a canal with few turns, two frames with a large rotation, captured during substantial steering while leaving the berth, were selected to test the robustness of the proposed method. After invalid laser points are removed, each frame retains approximately 70,000 points. The poses of the two frames are listed in Table 3. According to Table 3, over 20 s the ASV moves approximately 1.2 m, 0.13 m, and 0.0057 m in the x, y, and z directions, respectively (the table values are rounded to six decimal places), and rotates by approximately 13.6 degrees about the z-axis. Because the change along the z-axis is small, the top view of the point clouds is well suited to inspecting the registration results.
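The heading change quoted above can be checked from the two poses with a sketch like the following. It assumes, since this is not stated in the text, that Table 3 stores orientations as unit quaternions (w, x, y, z) and that yaw is extracted with the ZYX (yaw-pitch-roll) convention; `Quat`, `yawDeg`, and `yawChangeDeg` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Quat { double w, x, y, z; };  // unit quaternion

// Yaw (rotation about z) in degrees, ZYX convention.
double yawDeg(const Quat& q) {
    const double pi = std::acos(-1.0);
    return std::atan2(2.0 * (q.w * q.z + q.x * q.y),
                      1.0 - 2.0 * (q.y * q.y + q.z * q.z)) * 180.0 / pi;
}

// Heading change from pose q0 to pose q1, wrapped to (-180, 180].
double yawChangeDeg(const Quat& q0, const Quat& q1) {
    double d = yawDeg(q1) - yawDeg(q0);
    while (d > 180.0) d -= 360.0;
    while (d <= -180.0) d += 360.0;
    return d;
}
```

The wrap-around step matters when a heading crosses ±180°: a turn from 170° to −170° is a 20° change, not −340°.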

Table 3.

Pose data of the source point cloud and the target point cloud.

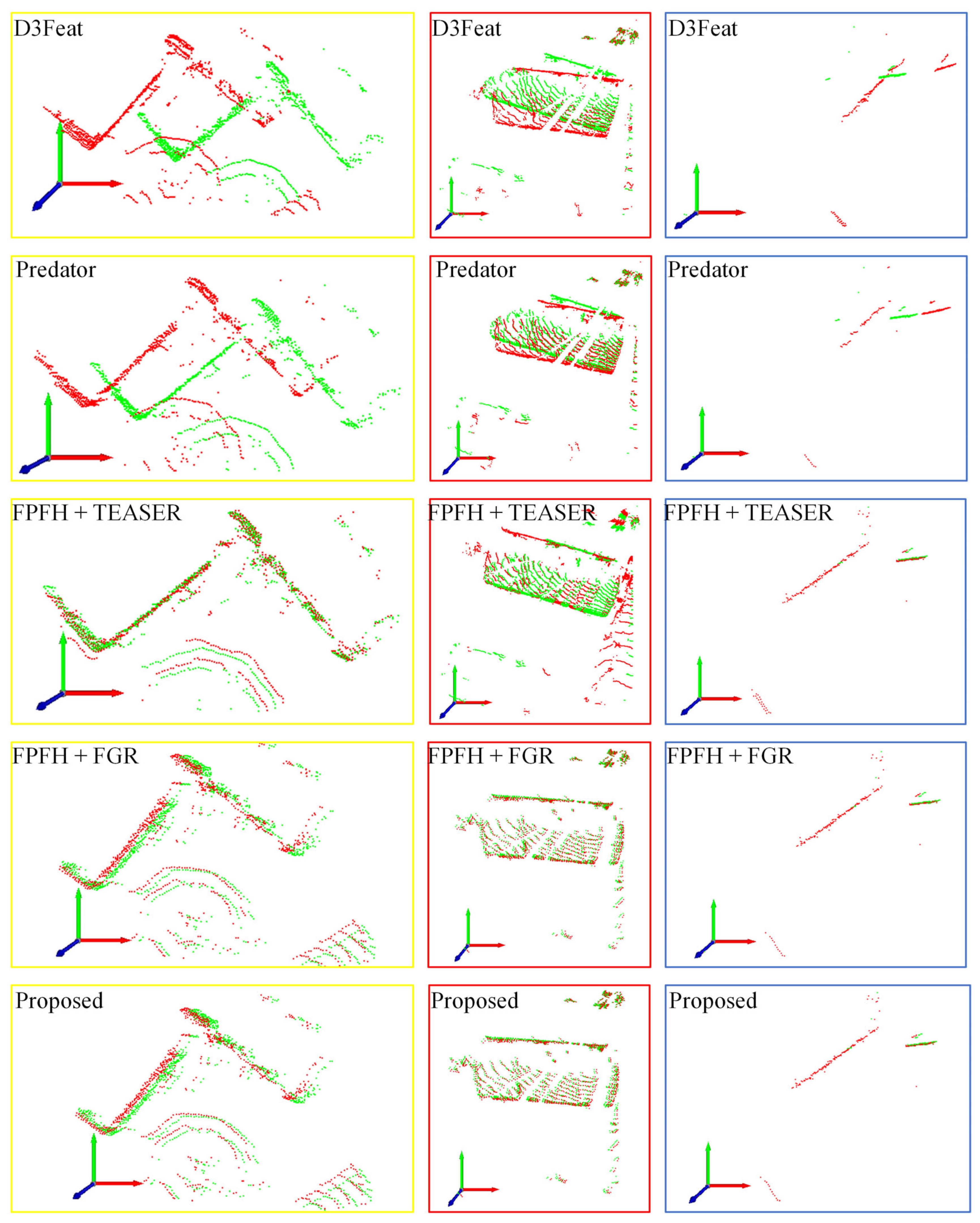

As shown in Figure 14, on this dataset, on which they were not trained, Predator and D3Feat fail to recover both the translation and the rotation between the point clouds, whereas the traditional methods FPFH + FGR and FPFH + TEASER register the point clouds well. Figure 14 annotates each method with its resulting RTE; the proposed method achieves the lowest RTE in this example.

Figure 14.

Comparison of point cloud registration results (the annotations in the figure indicate the methods used and the RTE).

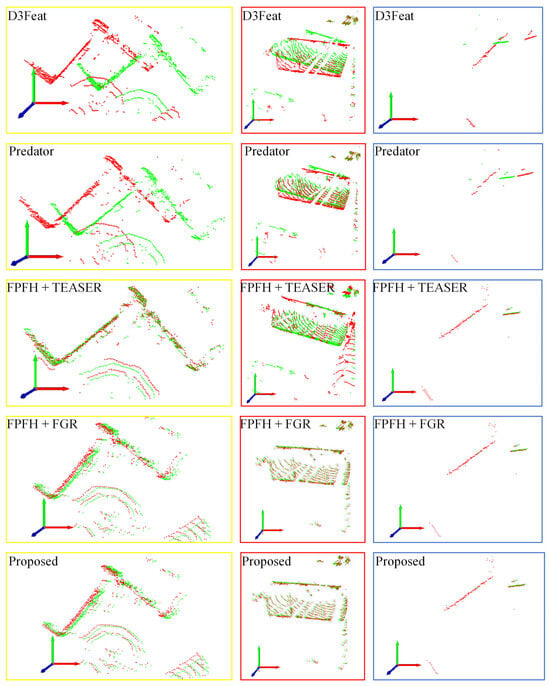

To examine the registration details of the five methods more closely, three detail regions of the registered point clouds in Figure 14 are shown. The region from (0, 20, 0) to (20, 50, 50) covers the coastal buildings in the upper left of Figure 14; the region from (−10, −10, −10) to (0, 0, 10) covers the moored vessels in the center; and the region from (−50, −180, −50) to (20, −60, 10) covers the breakwater in the lower left. These three regions correspond to the panels with yellow, red, and blue borders in Figure 15, respectively.

Figure 15.

Comparison of the detailed results of the five point cloud registration methods (yellow borders indicate coastal buildings, red indicates vessels, and blue indicates the breakwater).

The top views of the registration details in Figure 15 show that the pre-trained Predator and D3Feat models register the two example frames poorly, as expected. In contrast, the two traditional methods, FPFH + FGR and FPFH + TEASER, register the two frames successfully, with accuracy comparable to that of the proposed method. Thus, for scan-level registration of dense, noisy point cloud frames, deep learning methods that have not been trained on the target data perform poorly, whereas traditional methods can still register successfully. The next subsection turns to map-level point cloud registration.

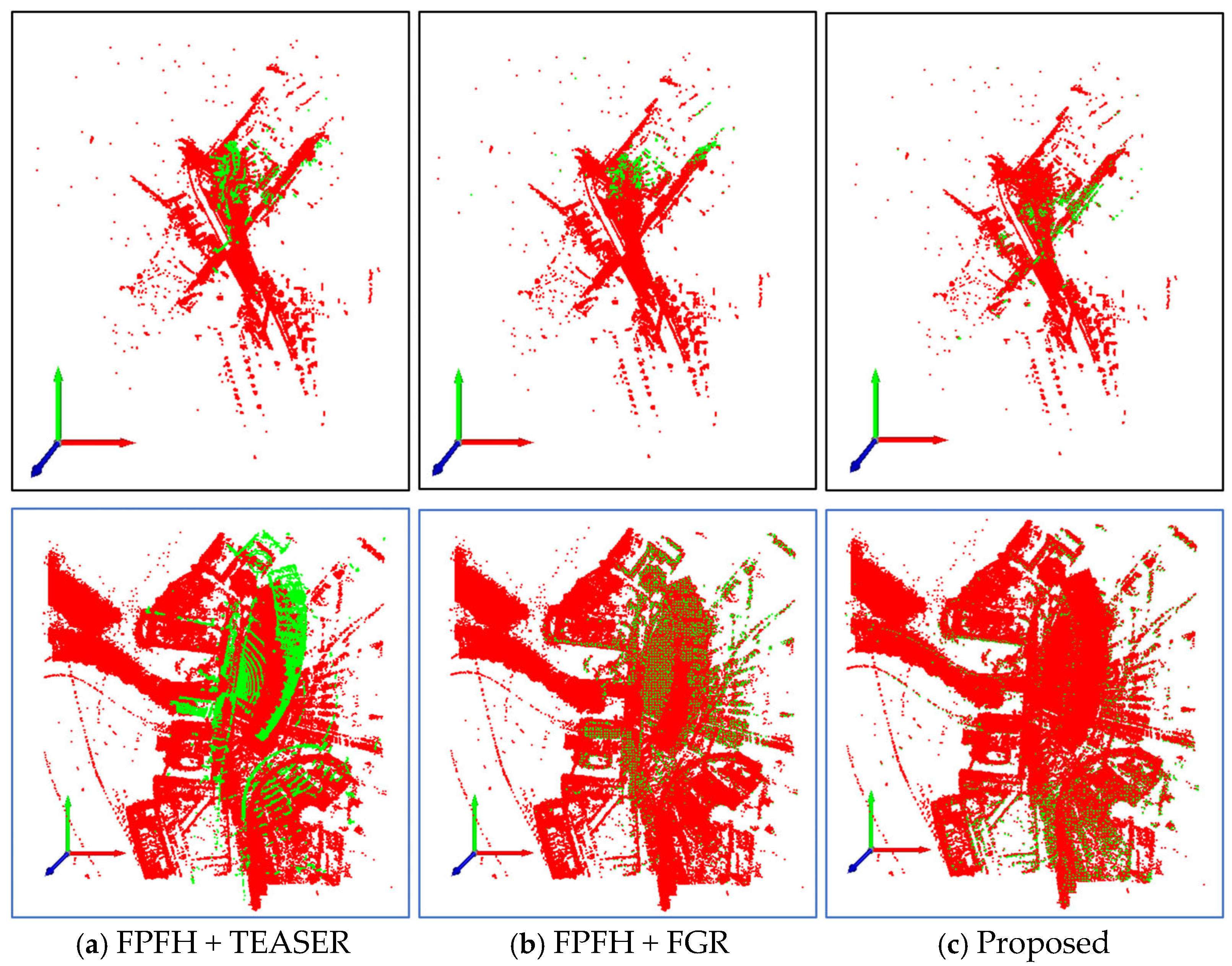

4.5. Evaluation of Map-Level Point Cloud Registration

Learning-based approaches outperform traditional registration methods in the KITTI driving scenes of Table 1, but their performance may degrade on datasets they were not trained on (Table 2). In contrast, the proposed method is learning-free and therefore less affected by dataset differences, which is why it achieves higher registration accuracy than Predator and D3Feat on the Pohang dataset. To further validate its scalability, map-level point cloud registration was set up using the point cloud maps output by Fast_livo2 from the first 100 frames and the first 1000 frames of the dataset. The map built from the first 100 frames serves as the source point cloud and contains 15,375 points after compression, while the map built from the first 1000 frames serves as the target point cloud and contains 4,344,516 points after compression.

In the map-level registration shown in Figure 16, FPFH + TEASER performs worst: the green source point cloud floats above the target point cloud and is not aligned to the target coordinate system, indicating that the translation and rotation estimated by FPFH + TEASER are inaccurate. FPFH + FGR aligns the source point cloud to the target point cloud better, but its rotation error is still evidently large. The proposed method performs best in this experiment. Notably, although FGR is designed for global point cloud registration, FPFH + FGR performs poorly at the map level in the complex offshore port environment. By contrast, the proposed method extends readily from scan-level to map-level registration, showing better scalability than the other methods.

Figure 16.

Submap-level point cloud registration of the Pohang03 dataset.

4.6. Ablation Experiments and Runtime Analysis

Finally, an ablation experiment was designed to investigate the role of each module of the proposed method, and the run time of scan-level registration was measured on low-power platforms. First, scan-level registration was performed on sequence 03 of the Pohang dataset on the authors’ laptop. In each configuration, one module was removed or replaced, and the average RTE and run time were computed. Table 4 compares four configurations with the proposed method, where FPFH + FGR denotes the registration results of reference [38]. Comparing improved FPFH + FGR with FPFH + FGR shows that the improved FPFH, despite performing only one radius search, causes no significant loss of registration accuracy and reduces the run time by about 14%. Comparing PCFC + improved FPFH + FGR with improved FPFH + FGR shows the role of the PCFC module: by removing redundant points and noise, PCFC improves registration accuracy and reduces run time, although the run time still does not reach real-time registration. Comparing PCFC + improved FPFH + SAC-IA with PCFC + improved FPFH + FGR shows that introducing SAC-IA lowers accuracy but, because fewer correspondences enter the computation, also reduces run time. Comparing PCFC + improved FPFH + SAC-IA with PCFC + improved FPFH + SAC-IA + Small_GICP (the proposed method) shows that adding the Small_GICP fine registration module improves accuracy by approximately 52% over the initial registration and shortens the run time by 42%, thereby achieving real-time registration of laser point clouds.

Table 4.

Results of the ablation experiments.

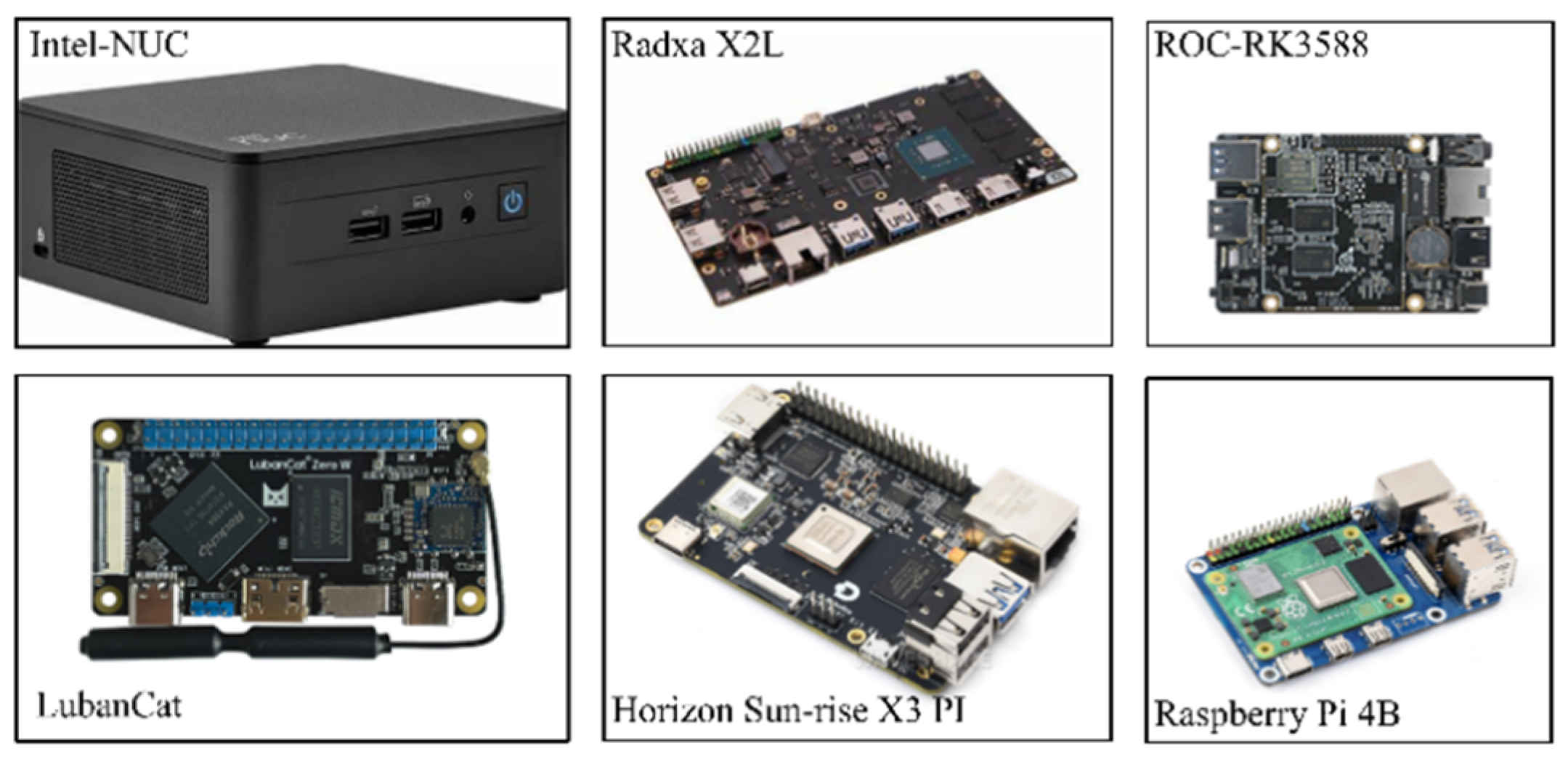

The ablation experiments above demonstrate the performance and efficiency of the proposed method. Next, the method is evaluated on low-power platforms to verify that it suits the limited onboard computing resources of an ASV. Six common low-power devices available on the market (Figure 17) were selected and installed with Ubuntu 20.04. On each device, 100 scan-level registrations were performed on point clouds randomly selected from Pohang sequence 03, and the success rate and average run time were recorded, as shown in Table 5. In the table, the CPU model is separated by “@”: the model name precedes it, and the clock frequency and thread count follow it. Except for the Intel-NUC (maximum power consumption of 120 W), the devices consume between 10 and 20 W. A “-” in the table indicates that the registration success rate is below 50%, in which case the results are not listed.

Figure 17.

Six common low-computing-power computing devices.

Table 5.

Point cloud registration performance on several low-computing-power devices.

All modules of the proposed method are implemented in C++, allowing deployment on computers with different architectures. Table 5 also includes kiss-matcher, a recent open-source point cloud registration method, for comparison on low-power platforms. Because both kiss-matcher and the proposed method rely on parallel computing, their performance depends on the number of available threads: the success rate of kiss-matcher is below 50% on the 4-thread Radxa X2L, Sunxi, and Raspberry Pi 4B, and the proposed method also fails to reach 50% on Sunxi. The best performance was obtained on the Intel-NUC, which has the strongest CPU. Among the four Arm devices, ROC-RK3588 performed best, achieving a success rate of 75% with a run time close to real time.

5. Discussion and Prospects

5.1. Advantages of the Proposed Method

This paper analyzes the differences between shipborne and vehicle-mounted LiDAR datasets. For the water environment, a conditional point cloud filtering method is proposed to remove the numerous noise points caused by laser reflections from ship wakes during ASV navigation. Based on an analysis of the number of points per frame in the KITTI dataset (vehicle-mounted LiDAR) and the Pohang dataset (shipborne LiDAR), and considering that water scenes can contain a large number of points, a recent point cloud compression technique is used to reduce the number of points while preserving the original features. This lays the foundation for feature point extraction and registration.

The proposed method is specifically optimized for the challenges of practical marine engineering applications, such as point cloud noise induced by ship waves, the need for real-time performance and robustness in highly dynamic environments, large attitude variations of ASV platforms, and limited onboard computational resources. For instance, the proposed point cloud conditional filtering effectively removes ship wave-induced noise from the point clouds, improving the robustness and speed of registration. The overall algorithmic structure is also optimized for efficient real-time registration under limited computational power, meeting the demands of ASV autonomous navigation and intelligent obstacle avoidance in dynamic marine conditions. These improvements enhance the applicability and reliability of point cloud registration in practical marine engineering tasks and provide a foundation for applications such as structural inspection, emergency monitoring, and maritime transportation.

Compared with traditional point cloud registration methods, the proposed method is fast, for three reasons. First, point cloud compression reduces the number of points involved in the registration. Second, the improved FPFH algorithm reduces the number of radius searches for computing point features from three to one, greatly improving the efficiency of feature computation. Third, the Small_GICP method used for fine registration further optimizes the fast_gicp approach, achieving a speedup of up to 2× [62]. Together, these improvements accelerate the data processing, feature extraction, and registration steps.

While achieving fast computation, the proposed method also ensures the robustness and accuracy of point cloud registration. In the data processing phase, conditional filtering removes wave points on the water surface, which improves the quality of feature extraction and hence the accuracy and robustness of registration. In the feature extraction phase, a PCA-based feature point validation module computes the linearity of each feature point's neighborhood, and points whose linearity exceeds a specified threshold are excluded from further feature computation, further improving feature quality. With these high-quality features, two-step registration with SAC-IA and Small_GICP ensures registration accuracy. This study primarily compares the proposed method with purely classical and purely learning-based approaches. Combining deep learning-based feature extraction with robust classical registration could further improve performance; such hybrid strategies will be investigated and validated systematically in future work.
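The PCA-based linearity check can be sketched in C++ as follows, assuming the common eigenvalue-based definition linearity = (λ1 − λ2)/λ1, with λ1 ≥ λ2 ≥ λ3 the eigenvalues of the neighborhood covariance; the paper's exact definition and threshold handling may differ. The eigenvalues are obtained with the closed-form trigonometric solution for symmetric 3 × 3 matrices, and all names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Eigenvalues of a symmetric 3x3 matrix in descending order (closed-form trigonometric method).
std::array<double, 3> symEigenvalues(const Mat3& A) {
    const double pi = std::acos(-1.0);
    const double p1 = A[0][1] * A[0][1] + A[0][2] * A[0][2] + A[1][2] * A[1][2];
    if (p1 == 0.0) {  // already diagonal
        std::array<double, 3> e = {A[0][0], A[1][1], A[2][2]};
        std::sort(e.begin(), e.end(), std::greater<double>());
        return e;
    }
    const double q = (A[0][0] + A[1][1] + A[2][2]) / 3.0;
    const double p2 = (A[0][0] - q) * (A[0][0] - q) + (A[1][1] - q) * (A[1][1] - q)
                    + (A[2][2] - q) * (A[2][2] - q) + 2.0 * p1;
    const double p = std::sqrt(p2 / 6.0);
    Mat3 B{};  // B = (A - q I) / p
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) B[i][j] = (A[i][j] - (i == j ? q : 0.0)) / p;
    const double detB = B[0][0] * (B[1][1] * B[2][2] - B[1][2] * B[2][1])
                      - B[0][1] * (B[1][0] * B[2][2] - B[1][2] * B[2][0])
                      + B[0][2] * (B[1][0] * B[2][1] - B[1][1] * B[2][0]);
    const double r = std::max(-1.0, std::min(1.0, detB / 2.0));  // clamp rounding errors
    const double phi = std::acos(r) / 3.0;
    const double e1 = q + 2.0 * p * std::cos(phi);
    const double e3 = q + 2.0 * p * std::cos(phi + 2.0 * pi / 3.0);
    return {e1, 3.0 * q - e1 - e3, e3};  // trace identity gives the middle eigenvalue
}

// Linearity (l1 - l2) / l1 of a neighborhood covariance; near 1 means line-like.
double linearity(const std::vector<Vec3>& pts) {
    assert(pts.size() >= 3);
    Vec3 mean{0.0, 0.0, 0.0};
    for (const Vec3& p : pts)
        for (int i = 0; i < 3; ++i) mean[i] += p[i] / pts.size();
    Mat3 C{};
    for (const Vec3& p : pts)
        for (int i = 0; i < 3; ++i)
            for (int j = 0; j < 3; ++j)
                C[i][j] += (p[i] - mean[i]) * (p[j] - mean[j]) / pts.size();
    const std::array<double, 3> e = symEigenvalues(C);
    return e[0] > 0.0 ? (e[0] - e[1]) / e[0] : 0.0;
}
```

Neighborhoods sampled along a line (for example, a single scan ring crossing a thin structure) give values near 1 and would be discarded by a high threshold, while planar neighborhoods give values near 0.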

5.2. Limitations Analysis and Future Work

Although the proposed conditional filtering method reduces the number of points and improves the quality of point cloud features, the parameters used to compute ship wake positions in this study come from a simple linear fit between vessel speed and the positions of wake noise points in the Pohang LiDAR data. This method for calculating wake position parameters therefore cannot be applied directly to other types of vessels. A more rigorous model or a data-driven algorithm (based on hydrodynamic principles or machine learning) would better predict the size and shape of the splash zone. We plan to address this limitation in future research by developing adaptive parameter selection methods and exploring machine learning or hydrodynamics-based predictive models. In addition, the proposed PCFC method applies point cloud compression for downsampling after removing ship-generated waves but does not address noise points caused by distant waves. Eliminating such noise could further improve the accuracy and efficiency of point cloud registration, and we plan to explore adaptive point cloud clustering and related techniques for handling wave-induced noise.

Further research is needed to explore the relationship between ship wakes, vessel types, and speeds, to seek a more generic method for calculating ship wake positions.

In addition, after obtaining the FPFH features, the proposed method uses SAC-IA to find corresponding points between two point cloud frames and ranks them by cosine feature similarity, selecting a subset of correspondences with high similarity for the subsequent coarse registration. Because this ranking already quantifies the feature similarity between frames, future research could reuse it in a loop closure detection module that declares a loop closure when the feature similarity between the current frame and an earlier frame reaches a specified threshold.

The current experiments are primarily based on the publicly available Pohang dataset, which validates the registration accuracy and real-time performance of the algorithm under conventional conditions. However, due to the lack of publicly available datasets containing extremely complex marine scenarios—such as high-speed moving objects, highly variable sea states, and severe environmental disturbances—we have not yet been able to evaluate the algorithm in these representative real-world environments. Based on the algorithm design and existing experimental results, we infer that there may still be room for improvement in terms of robustness and accuracy when facing dynamic objects, large pose variations, and strong interferences.

In addition, regarding the adaptability to different types of LiDAR, the experiments in this paper were conducted exclusively with 64-line LiDAR data, and have not yet been thoroughly tested on 32-line LiDAR data. Our preliminary analysis suggests that a reduction in the number of LiDAR channels and point cloud density may affect the accuracy and robustness of feature extraction and registration. Therefore, it is necessary to conduct further experiments to systematically evaluate the applicability of the proposed algorithm under different LiDAR configurations. In future work, we plan to collect or construct datasets that better represent real-world applications, including high-speed dynamic targets, varying sea states, strong environmental interference, and multiple LiDAR configurations. This will allow for a more comprehensive evaluation and optimization of the proposed algorithm’s performance in complex real marine environments, thereby enhancing its engineering practicality and application value.

During the implementation of our method, some key parameters (such as parameters k1, k2, k3 for the wake bounding box) are currently set empirically. We recognize that different parameter values may affect the accuracy and robustness of point cloud registration, and these effects could be more pronounced in various types of ASV platforms and complex, dynamic marine environments. To further enhance the generality and practical value of our approach, we plan to conduct systematic sensitivity experiments on the main parameters in future work. This will allow us to comprehensively evaluate their impact on registration accuracy and robustness. Additionally, we will explore adaptive parameter optimization strategies to better accommodate different ASV platforms and the diverse requirements of marine environments.

In this study, the computational time of the proposed algorithm was measured under ideal experimental conditions; it therefore reflects the execution time of the registration task alone, without integration into a real-time sensor data stream. In practical deployment, point cloud acquisition, parsing, and downstream navigation must run concurrently with registration. All of these processes consume system resources and add to the overall processing delay. Therefore, although our experimental results demonstrate relatively high efficiency, achieving strict real-time performance in a fully integrated system remains challenging. To address this issue, in future work we will further optimize the algorithm structure and introduce targeted techniques such as parallel computing and hardware acceleration to meet the stricter real-time requirements of practical engineering applications.
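A simple budget calculation illustrates why integration matters. Assume a spinning LiDAR at 10 Hz (the scan rate of the Velodyne HDL-64E used in KITTI; the rate of the Pohang sensor is not restated here), so that each frame must be fully processed within one scan period. Taking the 86.243 ms registration time reported for the low-power devices as T_reg:

$$
T_{\text{scan}} = \frac{1}{10\ \text{Hz}} = 100\ \text{ms}, \qquad
T_{\text{slack}} = T_{\text{scan}} - T_{\text{reg}} = 100\ \text{ms} - 86.243\ \text{ms} = 13.757\ \text{ms}
$$

Under this assumption, roughly 13.8 ms per frame would remain for acquisition, parsing, and downstream navigation, which is consistent with the observation that strict real-time operation in a fully integrated system remains challenging.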

6. Conclusions

This paper proposes a three-dimensional laser point cloud registration method for computationally constrained ASVs navigating inland rivers and port areas. The method accounts for the large number of noise points produced by laser reflections from ship wakes during ASV navigation, and combines point cloud conditional filtering with point cloud compression to reduce the number of points and improve computational efficiency. Ablation results indicate that incorporating the proposed PCFC point cloud processing method reduces the runtime of the registration algorithm by 98.74 ms, significantly improving computational efficiency. To further reduce the computational burden, the improved FPFH performs a single radius search and reuses its results. In addition, a PCA-based feature point verification module filters out unreliable points by assessing the linearity of their neighborhoods, thereby improving the quality of the FPFH features. Ablation experiments demonstrate that the improved FPFH enhances both the quality and the speed of registration. Finally, an accurate point cloud transformation matrix is obtained through a two-step registration strategy based on SAC-IA and Small_GICP. Scan-level registration experiments on the KITTI benchmark dataset and the Pohang Canal dataset show that the proposed method achieves a 100% registration success rate without significantly compromising accuracy. Map-level registration experiments further validate the importance of the PCFC method and the robustness of the proposed approach. Experiments on six low-power devices indicate that the registration time on the Intel NUC (x86 architecture, 2.8 GHz, eight threads) and the ARM-based device (2.4 GHz, eight threads) is 86.243 ms, suggesting that the proposed LiDAR point cloud registration method is promising for real-time operation.
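The single-search reuse and the PCA linearity check summarized above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: we assume the one search is performed at the larger radius r_FPFH and that the r_normal neighbourhood is obtained by filtering those results by distance; we assume the common eigenvalue-based definition of linearity, (λ1 - λ2)/λ1 with λ1 ≥ λ2 ≥ λ3; and a brute-force search stands in for the KD-tree a real implementation would use. The function names and the thresholds min_pts and max_linearity are hypothetical.

```python
import numpy as np


def radius_search_once(points, r_fpfh):
    """One radius search at r_fpfh (brute force for clarity).

    Returns each point's neighbour indices and the distance matrix, so that
    smaller neighbourhoods can be derived without searching again.
    """
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    neighbours = [np.flatnonzero(row <= r_fpfh) for row in dist]
    return neighbours, dist


def pca_normal_and_linearity(neigh_pts):
    """Normal (smallest-eigenvalue eigenvector) and linearity of a neighbourhood."""
    centred = neigh_pts - neigh_pts.mean(axis=0)
    cov = centred.T @ centred / len(neigh_pts)
    evals, evecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    l1, l2 = evals[2], evals[1]
    linearity = (l1 - l2) / l1 if l1 > 0 else 0.0
    return evecs[:, 0], linearity


def select_reliable_points(points, r_normal, r_fpfh, min_pts, max_linearity):
    """Keep points whose neighbourhood is dense enough and not line-like."""
    assert r_normal <= r_fpfh, "the single search must use the larger radius"
    neighbours, dist = radius_search_once(points, r_fpfh)
    kept, normals, fpfh_neighbours = [], [], []
    for i, nb in enumerate(neighbours):
        nb_normal = nb[dist[i, nb] <= r_normal]  # reuse, no second search
        if len(nb) < min_pts or len(nb_normal) < 3:
            continue
        normal, linearity = pca_normal_and_linearity(points[nb_normal])
        if linearity > max_linearity:
            continue  # line-like neighbourhood: unreliable for FPFH angles
        kept.append(i)
        normals.append(normal)
        fpfh_neighbours.append(nb)  # handed on to the FPFH histogram step
    return np.array(kept), np.array(normals), fpfh_neighbours
```

On a small plane plus a straight line of points, this sketch keeps the planar points (whose normals point along z) and rejects the line, whose neighbourhoods have linearity close to 1.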
Future research will focus on optimizing the accuracy and robustness of point cloud registration on low-power devices while ensuring real-time performance.
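The two-step strategy of a sample-consensus coarse alignment followed by GICP refinement can be illustrated with a compact, self-contained sketch. It is a simplification under stated assumptions, not the authors' pipeline. The coarse step draws three putative correspondences (in practice produced by matching FPFH descriptors, as in SAC-IA), fits a rigid transform with the SVD-based Kabsch method, and keeps the hypothesis with the most inliers; this is RANSAC-style inlier counting rather than SAC-IA's own error metric. The fine step is plain point-to-point ICP standing in for Small_GICP, which additionally models local covariances. All function names and default thresholds are hypothetical.

```python
import numpy as np


def kabsch(src, dst):
    """Least-squares rigid transform (R, t) such that R @ src_i + t ~= dst_i."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # no reflections
    R = Vt.T @ D @ U.T
    return R, cd - R @ cs


def coarse_align(src, dst, corr, iters=200, inlier_thr=0.2, seed=0):
    """Sample-consensus coarse alignment from putative (src_idx, dst_idx) pairs."""
    rng = np.random.default_rng(seed)

    def residuals(R, t):
        return np.linalg.norm(src[corr[:, 0]] @ R.T + t - dst[corr[:, 1]], axis=1)

    best, best_count = (np.eye(3), np.zeros(3)), -1
    for _ in range(iters):
        s = corr[rng.choice(len(corr), 3, replace=False)]
        R, t = kabsch(src[s[:, 0]], dst[s[:, 1]])
        count = int((residuals(R, t) < inlier_thr).sum())
        if count > best_count:
            best, best_count = (R, t), count
    inliers = corr[residuals(*best) < inlier_thr]  # refit on all inliers
    return kabsch(src[inliers[:, 0]], dst[inliers[:, 1]])


def icp_refine(src, dst, R, t, iters=30):
    """Point-to-point ICP (a stand-in for GICP) starting from (R, t)."""
    for _ in range(iters):
        moved = src @ R.T + t
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        R, t = kabsch(src, dst[d2.argmin(axis=1)])  # re-solve from nearest neighbours
    return R, t
```

The coarse step only needs to land inside the convergence basin of the local method; the fine step then removes the residual misalignment using dense nearest-neighbour correspondences instead of sparse feature matches.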

Author Contributions

D.G.: writing—original draft, conceptualization, software. Y.Y.: writing—review and editing, methodology, visualization, supervision. H.X.: formal analysis, software, validation. Q.J.: writing—review and editing, conceptualization, methodology, validation, funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Natural Science Foundation of Liaoning Province of China under Grant 2024-BS-013/84240088, in part by the Dalian City Science and Technology Plan (Key) Project (2024JB11PT007), in part by the Fundamental Research Funds for the Central Universities (3132025138), and in part by the Maritime Safety Administration of Liaoning Province Fund (80825013).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study were derived from publicly available resources (the KITTI benchmark dataset and the Pohang Canal dataset).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Symbol | Description |
|---|---|
| RTE | Relative Translation Error |
| RRE | Relative Rotation Error |
| $t_{n,\mathrm{GT}}$ | Ground truth of the n-th translation |
| $R_{n,\mathrm{GT}}$ | Ground truth of the n-th rotation |
| $N_{\mathrm{success}}$ | Number of successful registration results |
| $L$ | Length of the vessel |
| $B$ | Width of the vessel |
| $V$ | Speed of the vessel |
| $H$ | Installation height of the LiDAR |
| $k_1, k_2, k_3$ | Scaling factors; in principle functions of speed, but set as constants here for simplicity |
| $r_{\mathrm{FPFH}}$ | Search radius for calculating angular features |
| $r_{\mathrm{normal}}$ | Search radius for calculating the normal vectors of neighboring points |
|  | Point number threshold for calculating angular features |
|  | Linearity threshold |
| $d_{\min}$ | Pairwise distance threshold between sampling points |
|  | Sampling point count threshold |
| $q_x, q_y, q_z, q_w, x, y, z$ | Quaternion components and X, Y, Z coordinates in the dataset |
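The error metrics listed in the table are commonly computed as RTE = ||t_est - t_GT||₂ and RRE = arccos((tr(R_GTᵀ R_est) - 1)/2), with the success rate given by N_success / N. The sketch below assumes these standard definitions (the paper's own equations are not restated in this section), converts the dataset's (q_x, q_y, q_z, q_w) quaternions to rotation matrices, and uses success thresholds of 2 m and 5°, a convention from several KITTI registration benchmarks that is an assumption here. The function names are hypothetical.

```python
import numpy as np


def quat_to_rot(qx, qy, qz, qw):
    """Rotation matrix from a (qx, qy, qz, qw) quaternion, normalised first."""
    x, y, z, w = np.array([qx, qy, qz, qw], dtype=float) / np.linalg.norm([qx, qy, qz, qw])
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])


def rte(t_est, t_gt):
    """Relative translation error: Euclidean distance between translations."""
    return float(np.linalg.norm(np.asarray(t_est, float) - np.asarray(t_gt, float)))


def rre_deg(R_est, R_gt):
    """Relative rotation error: rotation angle of R_gt^T R_est, in degrees."""
    c = (np.trace(R_gt.T @ R_est) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(c, -1.0, 1.0))))  # clip guards rounding


def success_rate(errors, rte_thr_m=2.0, rre_thr_deg=5.0):
    """N_success / N over (rte, rre) pairs; the thresholds are assumed defaults."""
    n_success = sum(1 for e_t, e_r in errors if e_t < rte_thr_m and e_r < rre_thr_deg)
    return n_success / len(errors)
```

Because RTE is reported in centimetres in this paper, values computed in metres by this sketch would need to be multiplied by 100 for direct comparison.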

References

- Shen, Y.; Man, X.; Wang, J.; Zhang, Y.; Mi, C. Truck Lifting Accident Detection Method Based on Improved PointNet++ for Container Terminals. J. Mar. Sci. Eng. 2025, 13, 256.

- Ponzini, F.; Fruzzetti, C.; Sabatino, N. Real-Time Critical Marine Infrastructure Multi-Sensor Surveillance via a Constrained Stochastic Coverage Algorithm. In Proceedings of the International Ship Control Systems Symposium (iSCSS), Liverpool, UK, 5–7 November 2024.

- Chen, X.; Liu, S.; Liu, R.W.; Wu, H.; Han, B.; Zhao, J. Quantifying Arctic Oil Spilling Event Risk by Integrating an Analytic Network Process and a Fuzzy Comprehensive Evaluation Model. Ocean Coast. Manage. 2022, 228, 106326.

- Chen, X.; Wu, H.; Han, B.; Liu, W.; Montewka, J.; Liu, R.W. Orientation-Aware Ship Detection via a Rotation Feature Decoupling Supported Deep Learning Approach. Eng. Appl. Artif. Intell. 2023, 125, 106686.

- Xie, Y.; Nanlal, C.; Liu, Y. Reliable LiDAR-Based Ship Detection and Tracking for Autonomous Surface Vehicles in Busy Maritime Environments. Ocean Eng. 2024, 312, 119288.

- Deng, L.; Guo, T.; Wang, H.; Chi, Z.; Wu, Z.; Yuan, R. Obstacle Detection of Unmanned Surface Vehicle Based on Lidar Point Cloud Data. In Proceedings of the OCEANS 2022, Hampton Roads, VA, USA, 17–20 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–8.

- Eustache, Y.; Seguin, C.; Pecout, A.; Foucher, A.; Laurent, J.; Heller, D. Marine Object Detection Using LiDAR on an Unmanned Surface Vehicle. IEEE Access 2025, 13, 121658–121669.

- Ponzini, F.; Zaccone, R.; Martelli, M. LiDAR target detection and classification for ship situational awareness: A hybrid learning approach. Appl. Ocean Res. 2025, 158, 104552.

- Sun, C.-Z.; Zhang, B.; Wang, J.-K.; Zhang, C.-S. A Review of Visual SLAM Based on Unmanned Systems. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Education (ICAIE), Dali, China, 18–20 June 2021; pp. 226–234.

- Helgesen, Ø.K.; Vasstein, K.; Brekke, E.F.; Stahl, A. Heterogeneous multi-sensor tracking for an autonomous surface vehicle in a littoral environment. Ocean Eng. 2022, 252, 111168.

- Wang, H.; Yin, Y.; Jing, Q.; Cao, Z.; Shao, Z.; Guo, D. Berthing assistance system for autonomous surface vehicles based on 3D LiDAR. Ocean Eng. 2024, 291, 116444.

- Li, Y.; Wang, T.-Q. Spatial State Analysis of Ship During Berthing and Unberthing Process Utilizing Incomplete 3D LiDAR Point Cloud Data. J. Mar. Sci. Eng. 2025, 13, 347.

- Liu, G.; Pan, S.; Gao, W.; Yu, B.; Ma, C. LiDAR mini-matching positioning method based on constraint of lightweight point cloud feature map. Measurement 2024, 225, 113913.

- Koide, K.; Yokozuka, M.; Oishi, S.; Banno, A. Voxelized GICP for Fast and Accurate 3D Point Cloud Registration. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 11054–11059.

- Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. KISS-ICP: In Defense of Point-to-Point ICP—Simple, Accurate, and Robust Registration If Done the Right Way. IEEE Robot. Autom. Lett. 2023, 8, 1029–1036.

- Lu, X.; Li, Y. Motion pose estimation of inshore ships based on point cloud. Measurement 2022, 205, 112189.

- Lu, X.; Li, Y.; Xie, M. Preliminary study for motion pose of inshore ships based on point cloud: Estimation of ship berthing angle. Measurement 2023, 214, 112836.

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Wang, H.; Yin, Y.; Jing, Q. Comparative Analysis of 3D LiDAR Scan-Matching Methods for State Estimation of Autonomous Surface Vessel. J. Mar. Sci. Eng. 2023, 11, 840.

- Wu, Y.; Gong, P.; Gong, M.; Ding, H.; Tang, Z.; Liu, Y.; Ma, W.; Miao, Q. Evolutionary Multitasking With Solution Space Cutting for Point Cloud Registration. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 110–125.

- Lu, F.; Chen, G.; Liu, Y.; Zhan, Y.; Li, Z.; Tao, D.; Jiang, C. Sparse-to-Dense Matching Network for Large-Scale LiDAR Point Cloud Registration. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11270–11282.

- Magnusson, M. The Three-Dimensional Normal-Distributions Transform: An Efficient Representation for Registration, Surface Analysis, and Loop Detection. Ph.D. Thesis, Örebro Universitet, Örebro, Sweden, 2009.

- Rusu, R.B.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D Registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 3212–3217.

- Chen, G.; Wang, M.; Yue, Y.; Zhang, Q.; Yuan, L.; Yue, Y. Full Transformer Framework for Robust Point Cloud Registration with Deep Information Interaction. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 13368–13382.

- Chen, Z.; Sun, K.; Yang, F.; Tao, W. SC2-PCR: A Second Order Spatial Compatibility for Efficient and Robust Point Cloud Registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13221–13231.

- Lv, C.; Lin, W.; Zhao, B. KSS-ICP: Point Cloud Registration Based on Kendall Shape Space. IEEE Trans. Image Process. 2023, 32, 1681–1693.

- He, L.; Li, W.; Guan, Y.; Zhang, H. IGICP: Intensity and Geometry Enhanced LiDAR Odometry. IEEE Trans. Intell. Veh. 2024, 9, 541–554.

- Qin, Z.; Yu, H.; Wang, C.; Guo, Y.; Peng, Y.; Ilic, S.; Hu, D.; Xu, K. GeoTransformer: Fast and Robust Point Cloud Registration With Geometric Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9806–9821.

- Wang, H.; Liu, Y.; Hu, Q.; Wang, B.; Chen, J.; Dong, Z.; Guo, Y.; Wang, W.; Yang, B. RoReg: Pairwise Point Cloud Registration With Oriented Descriptors and Local Rotations. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10376–10393.

- Tan, B.; Qin, H.; Zhang, X.; Wang, Y.; Xiang, T.; Chen, B. Using Multi-Level Consistency Learning for Partial-to-Partial Point Cloud Registration. IEEE Trans. Vis. Comput. Graph. 2024, 30, 4881–4894.

- Wu, H.; Yan, L.; Xie, H.; Wei, P.; Dai, J. A hierarchical multiview registration framework of TLS point clouds based on loop constraint. ISPRS J. Photogramm. Remote Sens. 2023, 195, 65–76.

- Wu, Y.; Zhang, Y.; Ma, W.; Gong, M.; Fan, X.; Zhang, M.; Qin, A.K.; Miao, Q. RORNet: Partial-to-Partial Registration Network With Reliable Overlapping Representations. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 15453–15466.

- Guo, Y.; Sohel, F.; Bennamoun, M.; Lu, M.; Wan, J. Rotational Projection Statistics for 3D Local Surface Description and Object Recognition. Int. J. Comput. Vis. 2013, 105, 63–86.

- Zhong, Y. Intrinsic Shape Signatures: A Shape Descriptor for 3D Object Recognition. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 689–696.

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–25 September 1999; Volume 2, pp. 1150–1157.

- Drost, B.; Ulrich, M.; Navab, N.; Ilic, S. Model Globally, Match Locally: Efficient and Robust 3D Object Recognition. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 998–1005.

- Aldoma, A.; Vincze, M.; Blodow, N.; Gossow, D.; Gedikli, S.; Rusu, R.B.; Bradski, G. CAD-Model Recognition and 6DOF Pose Estimation Using 3D Cues. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops, Barcelona, Spain, 6–13 November 2011; pp. 585–592.

- Zhou, Q.-Y.; Park, J.; Koltun, V. Fast Global Registration. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 766–782.

- Yang, H.; Shi, J.; Carlone, L. TEASER: Fast and Certifiable Point Cloud Registration. IEEE Trans. Robot. 2021, 37, 314–333.

- Lim, H.; Kim, B.; Kim, D.; Mason Lee, E.; Myung, H. Quatro++: Robust global registration exploiting ground segmentation for loop closing in LiDAR SLAM. Int. J. Robot. Res. 2024, 43, 685–715.

- Yang, H.; Antonante, P.; Tzoumas, V.; Carlone, L. Graduated Non-Convexity for Robust Spatial Perception: From Non-Minimal Solvers to Global Outlier Rejection. IEEE Robot. Autom. Lett. 2020, 5, 1127–1134.