Towards Real-Time Reinforcement Learning Control of a Wave Energy Converter

Abstract

1. Introduction

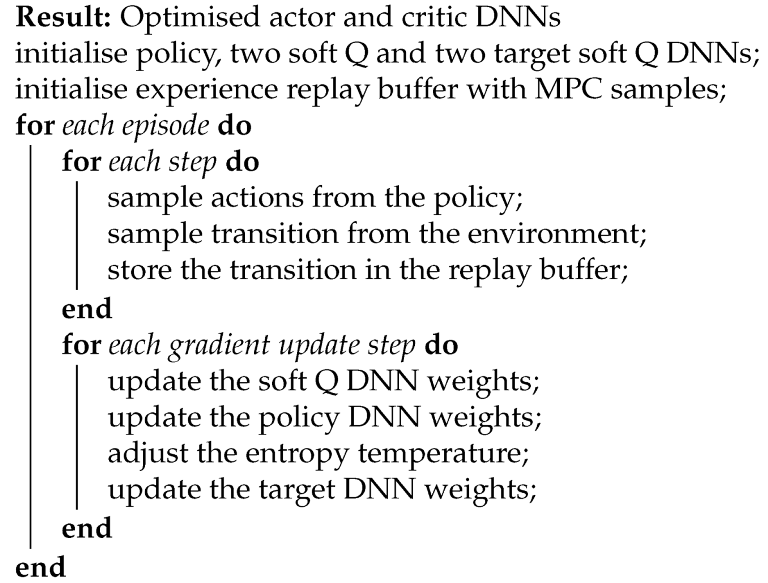

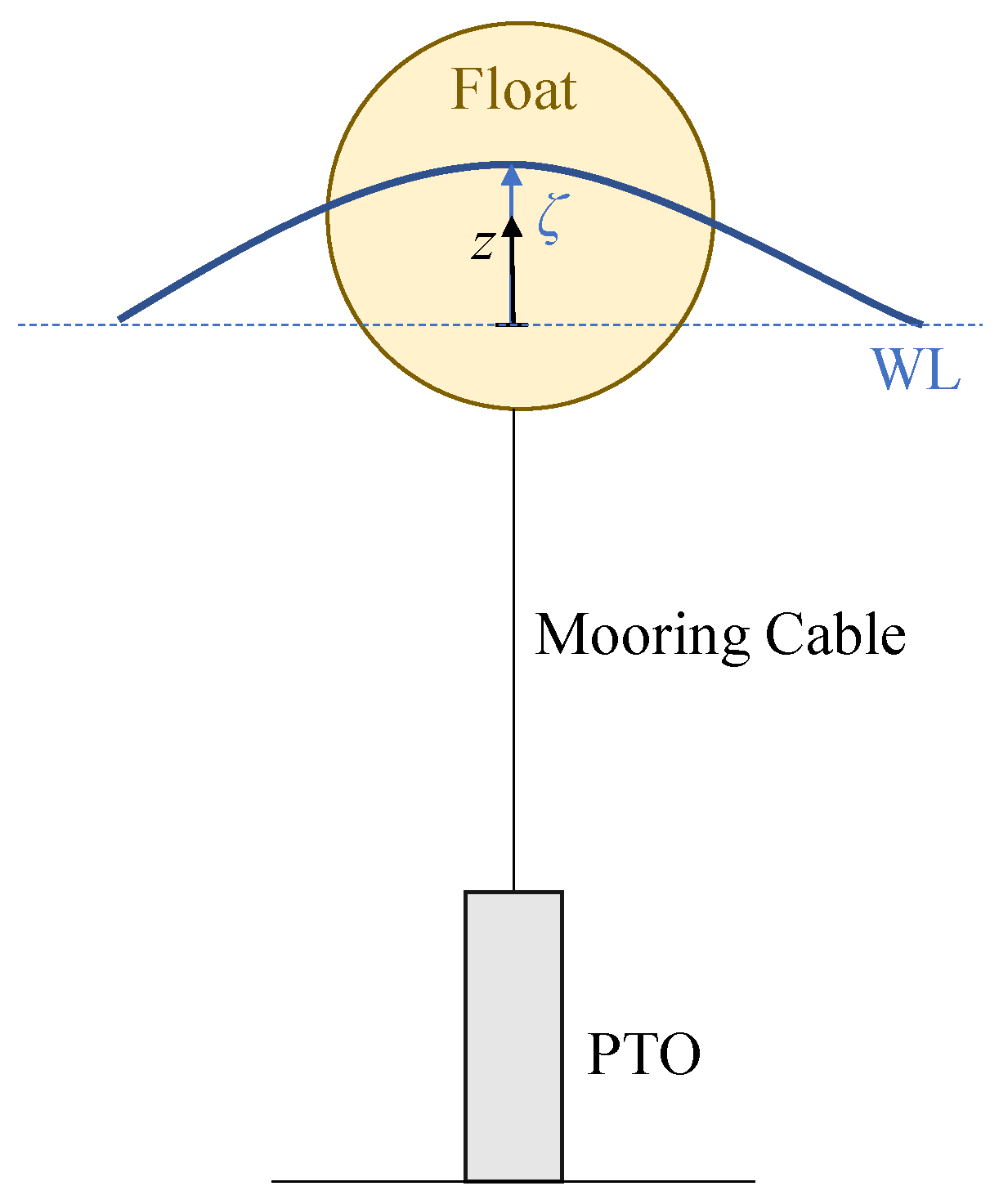

2. Linear Model of a Heaving Point Absorber

3. Real-Time Reinforcement Learning Control of a Wave Energy Converter

3.1. Problem Formulation

3.2. RL Real-Time WEC Control Framework

4. Results and Discussion

4.1. Case Study

4.2. Results in Irregular Waves

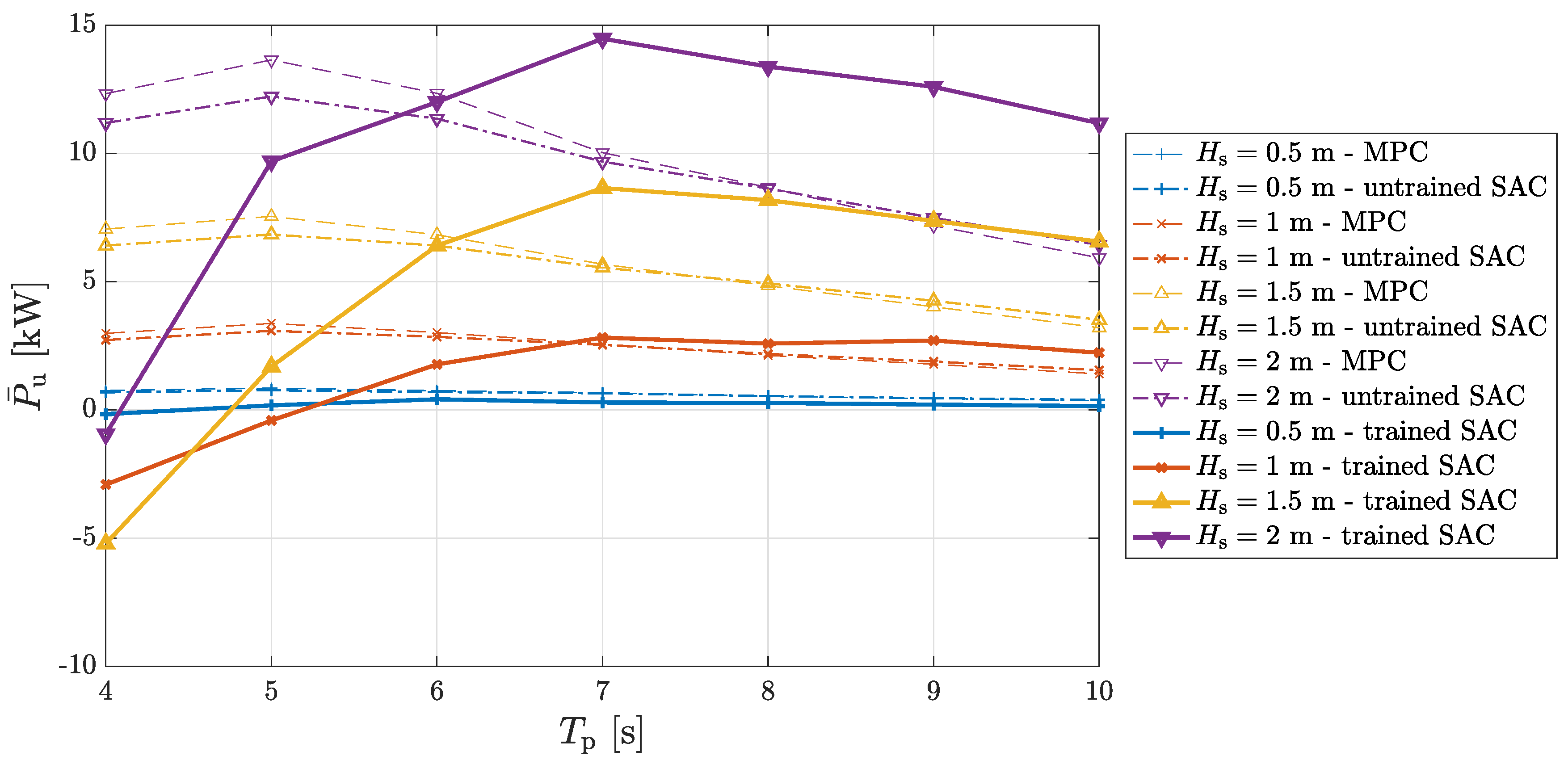

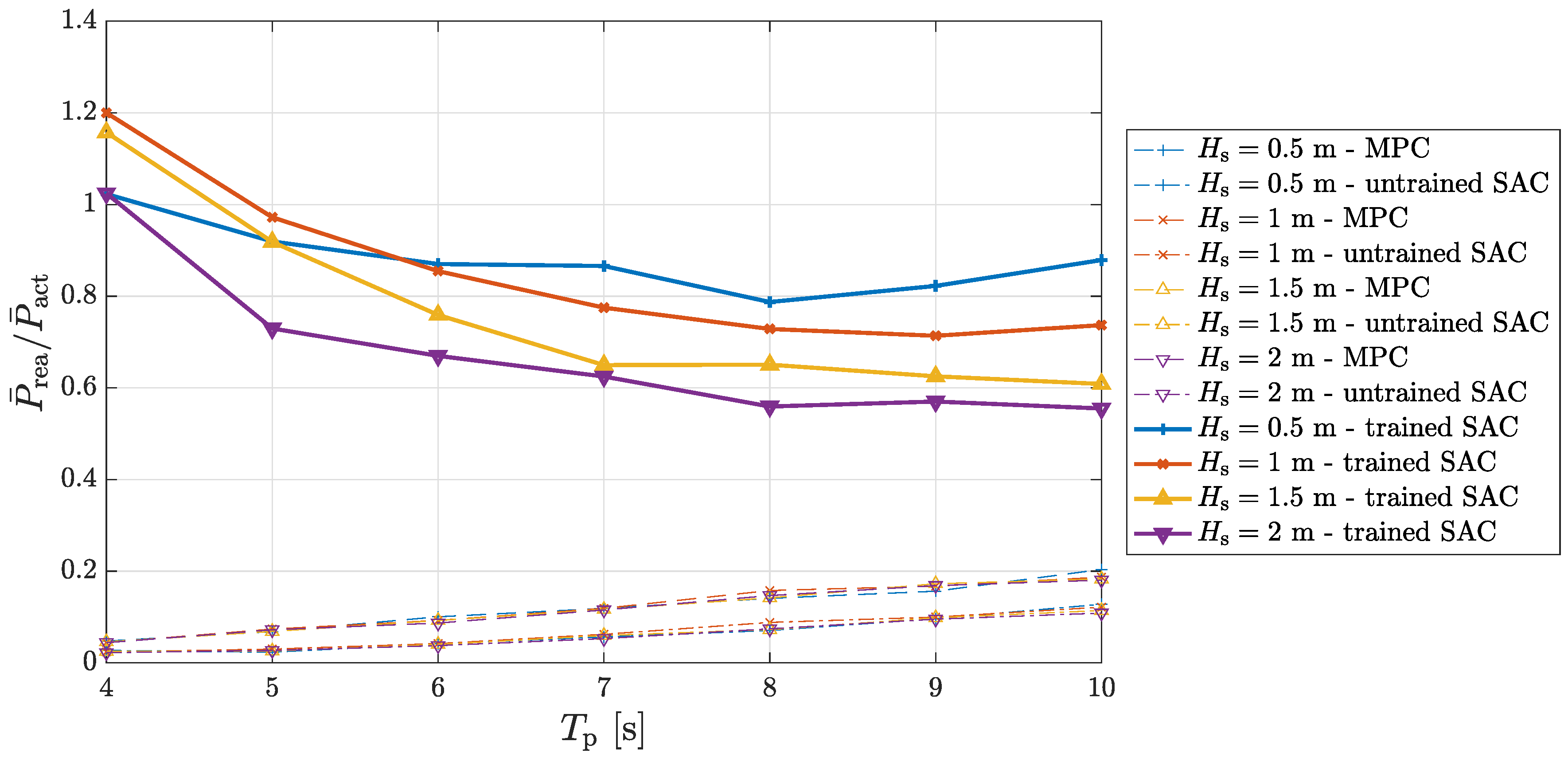

4.2.1. Training

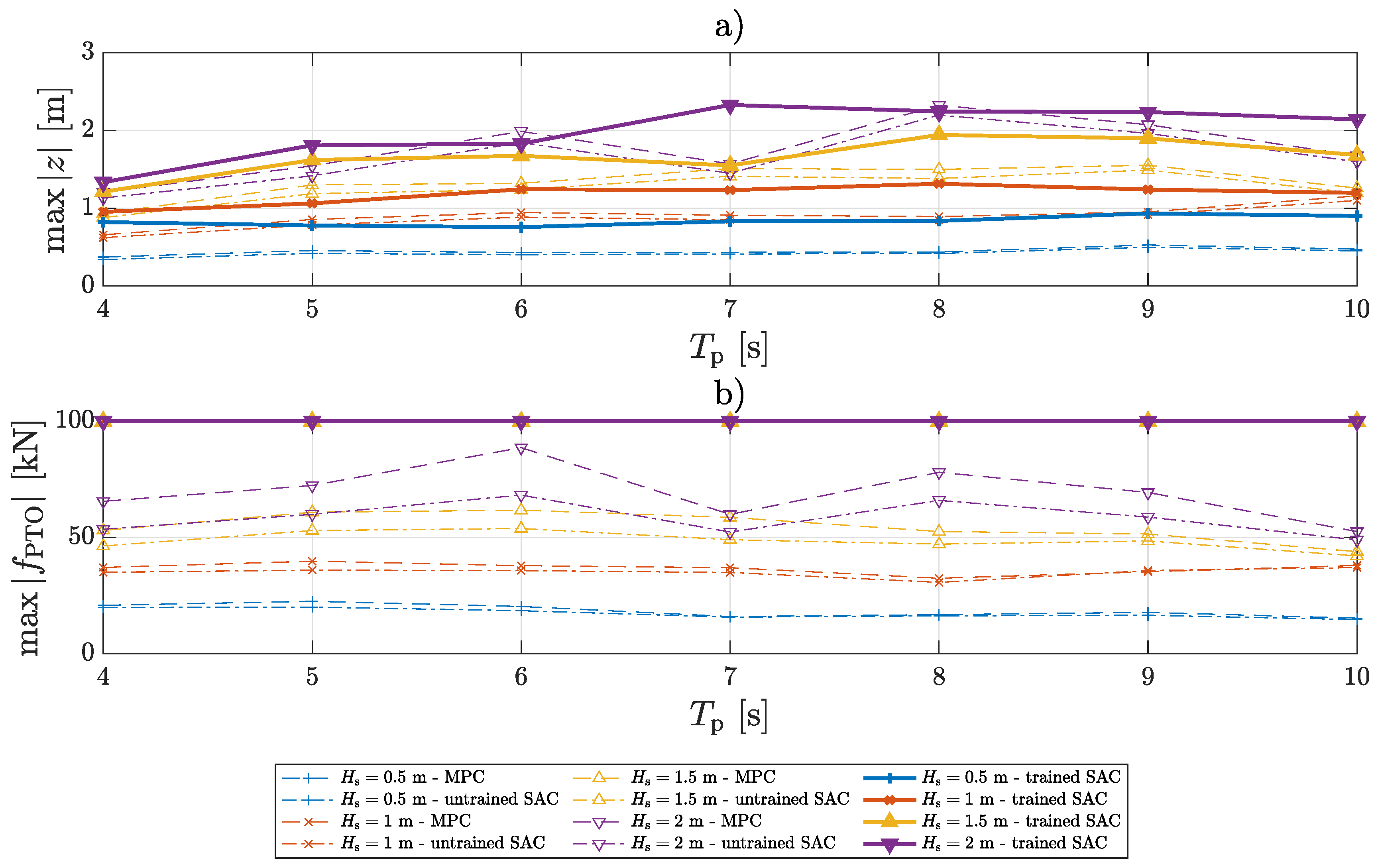

4.2.2. Comparison between SAC and MPC

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DNN | Deep Neural Network |
| DRL | Deep Reinforcement Learning |
| LCoE | Levellised Cost of Energy |
| MPC | Model Predictive Control |
| PTO | Power Take-Off |
| RL | Reinforcement Learning |
| SAC | Soft Actor-Critic |
| WEC | Wave Energy Converter |


| Property | Value |
|---|---|
| Buoy diameter [m] | 5 |
| Buoy mass [kg] | 32.725 |
| Buoy resonant period [s] | 3.17 |
| Water depth [m] | ∞ |
| ρ [kg/m³] | 1000 |
| g [m/s²] | 9.81 |
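The tabulated buoy properties can be cross-checked with a short calculation. The sketch below is not taken from the paper: it assumes the buoy is a half-submerged sphere, that its heave added mass is half the displaced mass (a common high-frequency approximation for a floating hemisphere), and that the tabulated mass is to be read as roughly 32,725 kg. The function names are hypothetical.

```python
import math

RHO = 1000.0    # water density [kg/m^3], from the table
G = 9.81        # gravitational acceleration [m/s^2], from the table
DIAMETER = 5.0  # buoy diameter [m], from the table


def hemisphere_displaced_mass(diameter: float, rho: float = RHO) -> float:
    """Mass of water displaced by a half-submerged sphere (assumed geometry)."""
    radius = diameter / 2.0
    return rho * (2.0 / 3.0) * math.pi * radius ** 3


def heave_resonant_period(
    diameter: float,
    added_mass_ratio: float = 0.5,  # assumption: added mass = 0.5 * displaced mass
    rho: float = RHO,
    g: float = G,
) -> float:
    """Undamped heave period T = 2*pi*sqrt((m + m_added) / k)."""
    radius = diameter / 2.0
    mass = hemisphere_displaced_mass(diameter, rho)
    stiffness = rho * g * math.pi * radius ** 2  # hydrostatic stiffness [N/m]
    return 2.0 * math.pi * math.sqrt((1.0 + added_mass_ratio) * mass / stiffness)


mass = hemisphere_displaced_mass(DIAMETER)
period = heave_resonant_period(DIAMETER)
print(f"displaced mass = {mass:.0f} kg, resonant period = {period:.2f} s")
```

Under these assumptions the period comes out close to the tabulated 3.17 s, which suggests the table entries are mutually consistent for a hemispherical float.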

| Parameter | Value |
|---|---|
| optimizer | Adam |
| learning rate | |
| discount factor | 0.99 |
| replay buffer size | |
| number of hidden layers (all networks) | 2 |
| number of hidden units per layer | 256 |
| number of samples per minibatch | 256 |
| entropy target | −1 |
| activation function | ReLU |
| target smoothing coefficient | 0.005 |
| target update interval | 2 |
| gradient steps | 1 |
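As an illustration, the hyperparameters above could be collected in a small configuration object. This is a minimal sketch rather than the authors' code: the class and field names are hypothetical, the learning rate and replay buffer size are left as `None` because their values are missing from the table, and `soft_update` shows the standard Polyak averaging that a target smoothing coefficient usually controls in SAC implementations.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SACConfig:
    """Hypothetical container for the tabulated SAC hyperparameters."""
    optimizer: str = "Adam"
    learning_rate: Optional[float] = None     # value missing from the table
    discount_factor: float = 0.99
    replay_buffer_size: Optional[int] = None  # value missing from the table
    hidden_layers: int = 2
    hidden_units: int = 256
    minibatch_size: int = 256
    entropy_target: float = -1.0
    activation: str = "ReLU"
    target_smoothing_coefficient: float = 0.005
    target_update_interval: int = 2
    gradient_steps: int = 1


def soft_update(target: List[float], online: List[float], tau: float) -> List[float]:
    """Polyak averaging: new_target = (1 - tau) * target + tau * online."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]


config = SACConfig()
# Toy weights: the target moves a fraction tau of the way towards the online values.
new_target = soft_update([0.0, 1.0], [1.0, 1.0], config.target_smoothing_coefficient)
print(new_target)
```

With a coefficient of 0.005 the target networks track the online networks slowly, which is the usual reason for choosing a small value.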

| Scheme | Training Time [s] | Total Simulation Time [s] | Time Per Control Time Step [s] |
|---|---|---|---|
| MPC | - | 11.798 | |
| SAC | 869 | 2.607 | |
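The timing figures can be turned into a speed-up ratio with simple arithmetic. The sketch below assumes that 2.607 s is the SAC total simulation time (the column next to the 869 s training time) and that both schemes simulated the same scenario. The break-even count is only an illustrative extrapolation and is not a result reported in the paper; the helper names are hypothetical.

```python
import math

MPC_TOTAL_S = 11.798     # MPC total simulation time [s], from the table
SAC_TOTAL_S = 2.607      # SAC total simulation time [s], assumed column
SAC_TRAINING_S = 869.0   # SAC training time [s], from the table


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate runs than the baseline."""
    return baseline_s / candidate_s


def break_even_runs(training_s: float, baseline_s: float, candidate_s: float) -> int:
    """Smallest number of runs after which the per-run saving covers training."""
    saving = baseline_s - candidate_s
    if saving <= 0:
        raise ValueError("candidate must be faster than baseline")
    return math.ceil(training_s / saving)


ratio = speedup(MPC_TOTAL_S, SAC_TOTAL_S)
runs = break_even_runs(SAC_TRAINING_S, MPC_TOTAL_S, SAC_TOTAL_S)
print(f"SAC runs about {ratio:.2f}x faster; training pays off after {runs} runs")
```

On these numbers the trained SAC policy runs roughly 4.5 times faster than MPC over the same simulation, with the one-off training cost amortised over repeated use.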

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Anderlini, E.; Husain, S.; Parker, G.G.; Abusara, M.; Thomas, G. Towards Real-Time Reinforcement Learning Control of a Wave Energy Converter. J. Mar. Sci. Eng. 2020, 8, 845. https://doi.org/10.3390/jmse8110845