Human-Centered Explainable Artificial Intelligence for Marine Autonomous Surface Vehicles

Abstract

1. Introduction

2. Method

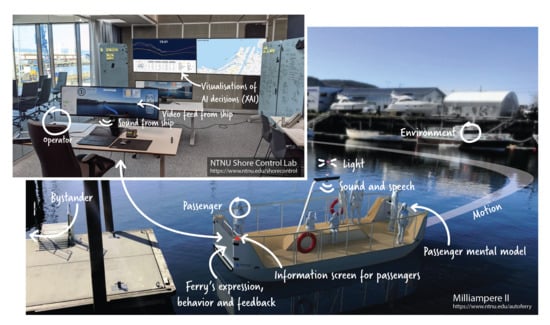

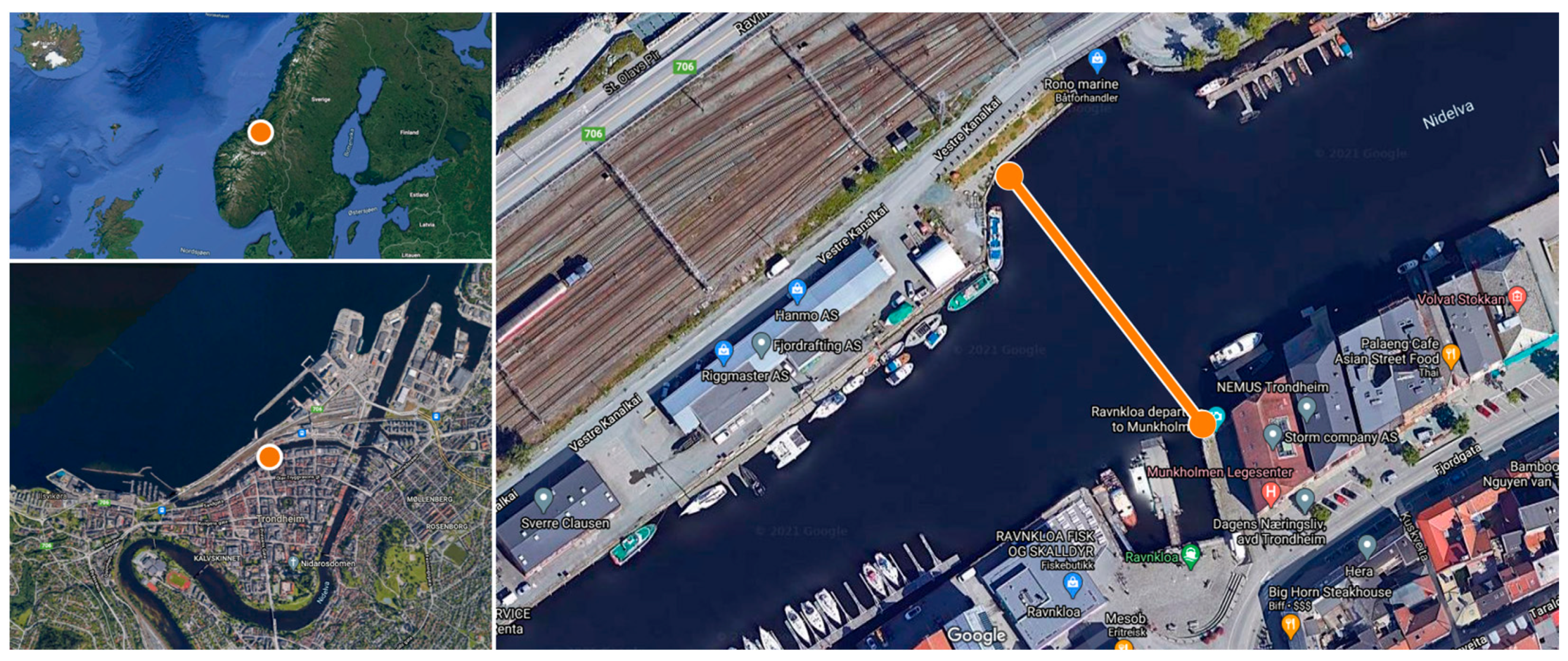

2.1. The milliAmpere2 Autonomous Passenger Ferry

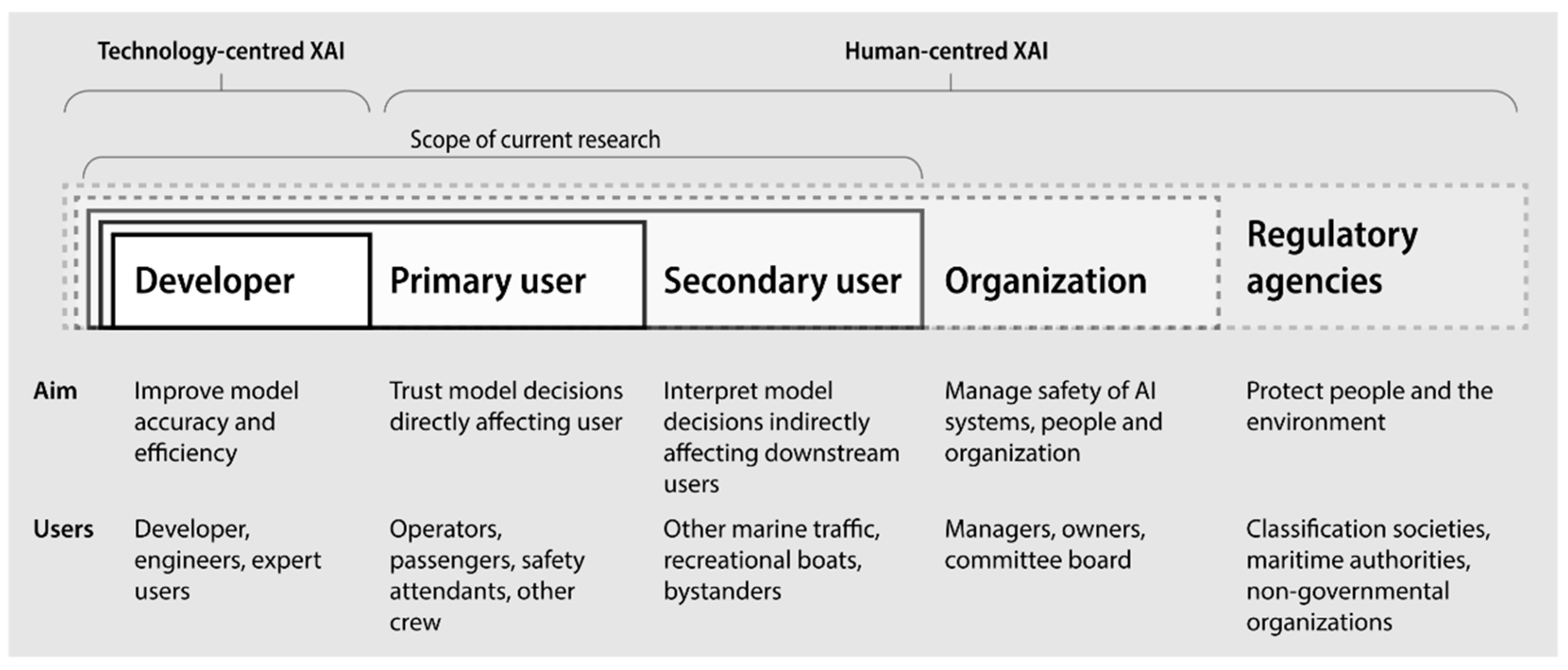

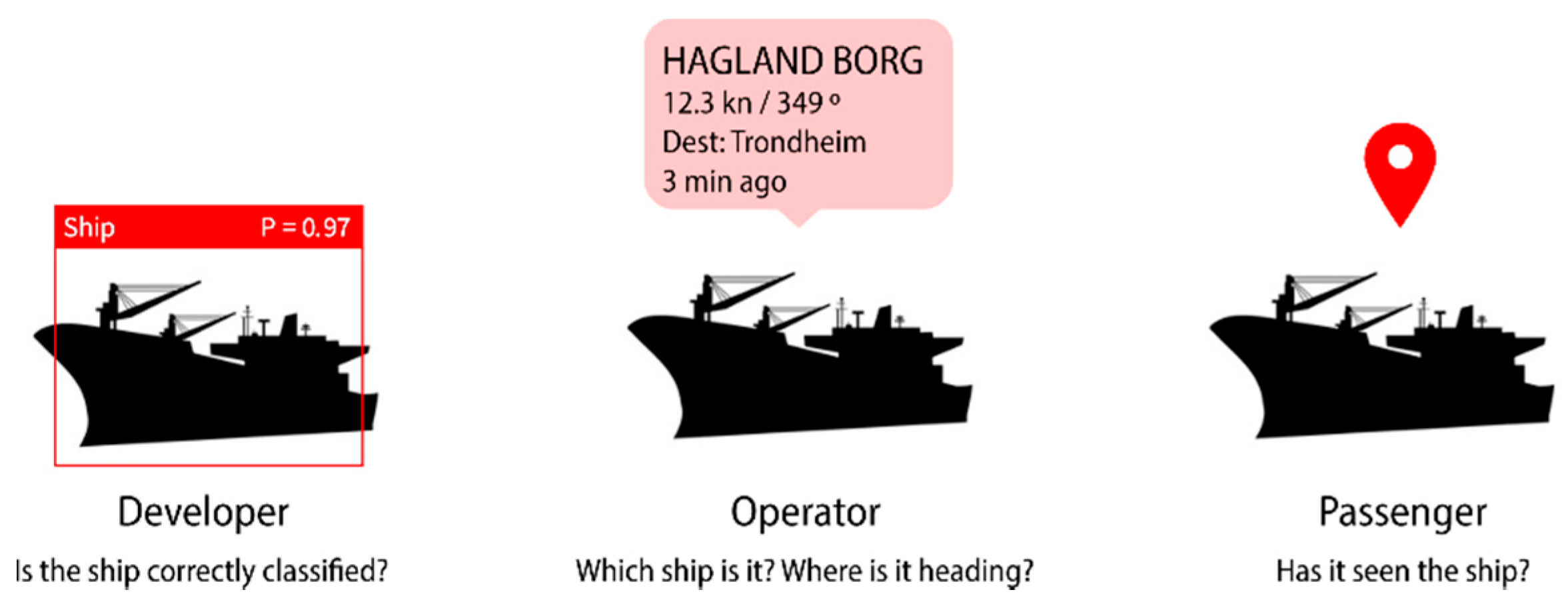

2.2. XAI Audience and Scope

2.3. Methodological Considerations

3. Results

- Analogy (representation of an unfamiliar concept in terms of a familiar one);

- Visualization (representation of internal states through external imagistic processes);

- Mental simulation (representation of behavior through ‘mental models’ and thought experiments, including numerical simulation).

3.1. Analogy

‘World’s first driverless passenger ferry. The service is free and open for everyone. The ferry works like an elevator. You press the Call button, and it calls the ferry. You can take aboard everything from your pets to your bike and stroller. The ferry goes every day from 07:00 to 22:00. The ferry crosses between Ravnkloa and Venstre Kanalkai.’
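The elevator analogy maps the ferry onto an interaction pattern most passengers already know: a call button summons the vehicle, and one action carries you to the only other stop. As an illustration only, that pattern can be sketched as a two-stop state machine. The dock names and service hours come from the quoted text. The class `CallButtonFerry`, its methods, and the simplification that a call instantly relocates the ferry are hypothetical and do not describe milliAmpere2's actual control software.

```python
from dataclasses import dataclass
from datetime import time

# Dock names and service hours come from the quoted on-board text; the class,
# its methods, and the instant "arrival" on call are hypothetical simplifications.
DOCKS = ("Ravnkloa", "Venstre Kanalkai")
SERVICE_START = time(7, 0)
SERVICE_END = time(22, 0)


@dataclass
class CallButtonFerry:
    """Elevator-style ferry: a Call button summons it to the caller's dock."""

    position: str = DOCKS[0]

    def in_service(self, now: time) -> bool:
        return SERVICE_START <= now <= SERVICE_END

    def call(self, dock: str, now: time) -> str:
        """Handle a Call button press at `dock` and return a passenger-facing status."""
        if dock not in DOCKS:
            raise ValueError(f"unknown dock: {dock}")
        if not self.in_service(now):
            return "Out of service (07:00-22:00)"
        if self.position == dock:
            return "Ferry is at the dock; please board"
        self.position = dock  # simplification: the crossing itself is not modeled
        return f"Ferry on its way to {dock}"

    def cross(self) -> str:
        """Carry passengers to the opposite dock (the only other 'floor')."""
        self.position = DOCKS[1] if self.position == DOCKS[0] else DOCKS[0]
        return f"Arrived at {self.position}"
```

For example, pressing Call at Venstre Kanalkai at 08:30 returns `"Ferry on its way to Venstre Kanalkai"`, and a subsequent `cross()` brings passengers back to Ravnkloa. As with an elevator, the passenger's whole interface reduces to two actions (call and ride), while navigation and collision avoidance remain out of view.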

3.2. Visualization

3.2.1. User Displays

3.2.2. Design, Form and Aesthetic

3.2.3. Sensor Data

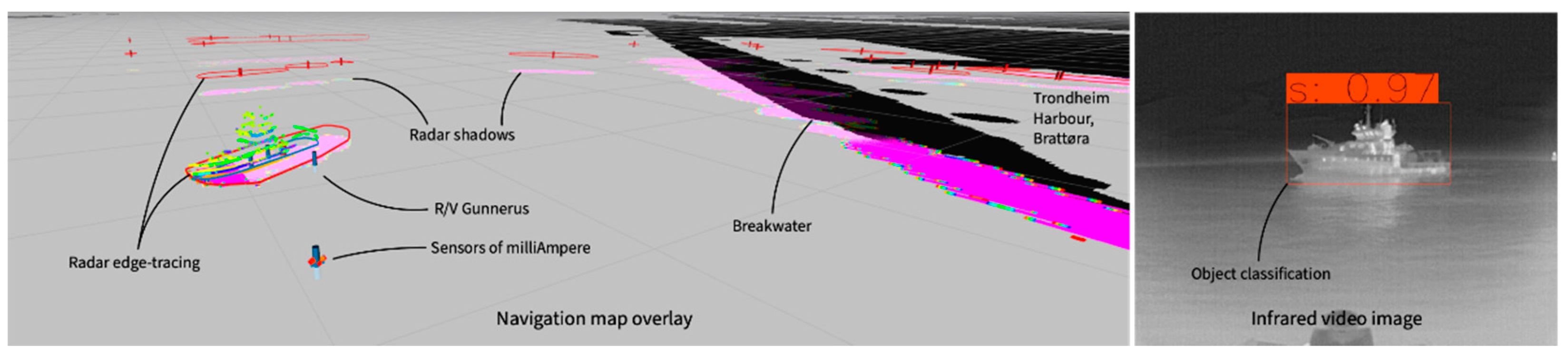

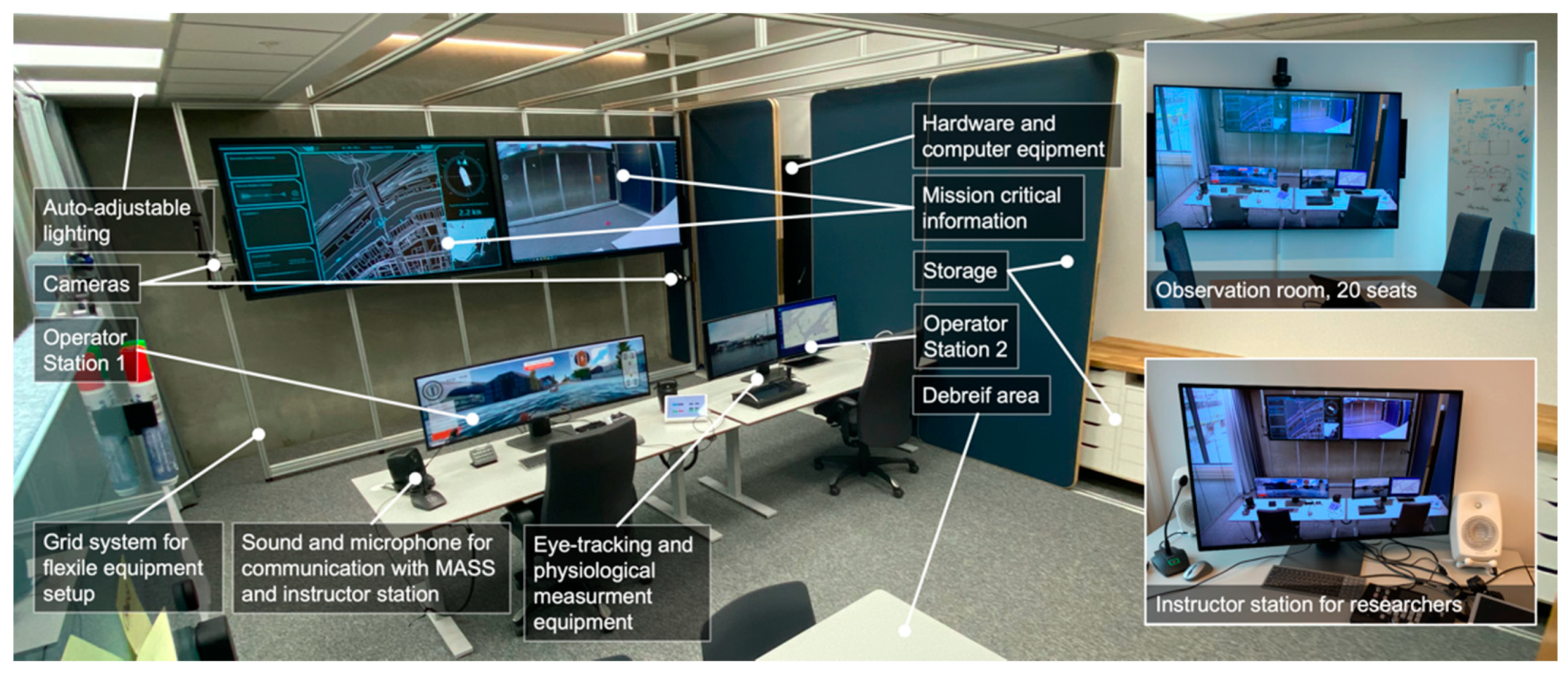

3.2.4. Data Visualization for Shore Control Center Operators

3.2.5. Visual Signals to Predict Future States

3.3. Mental Simulation

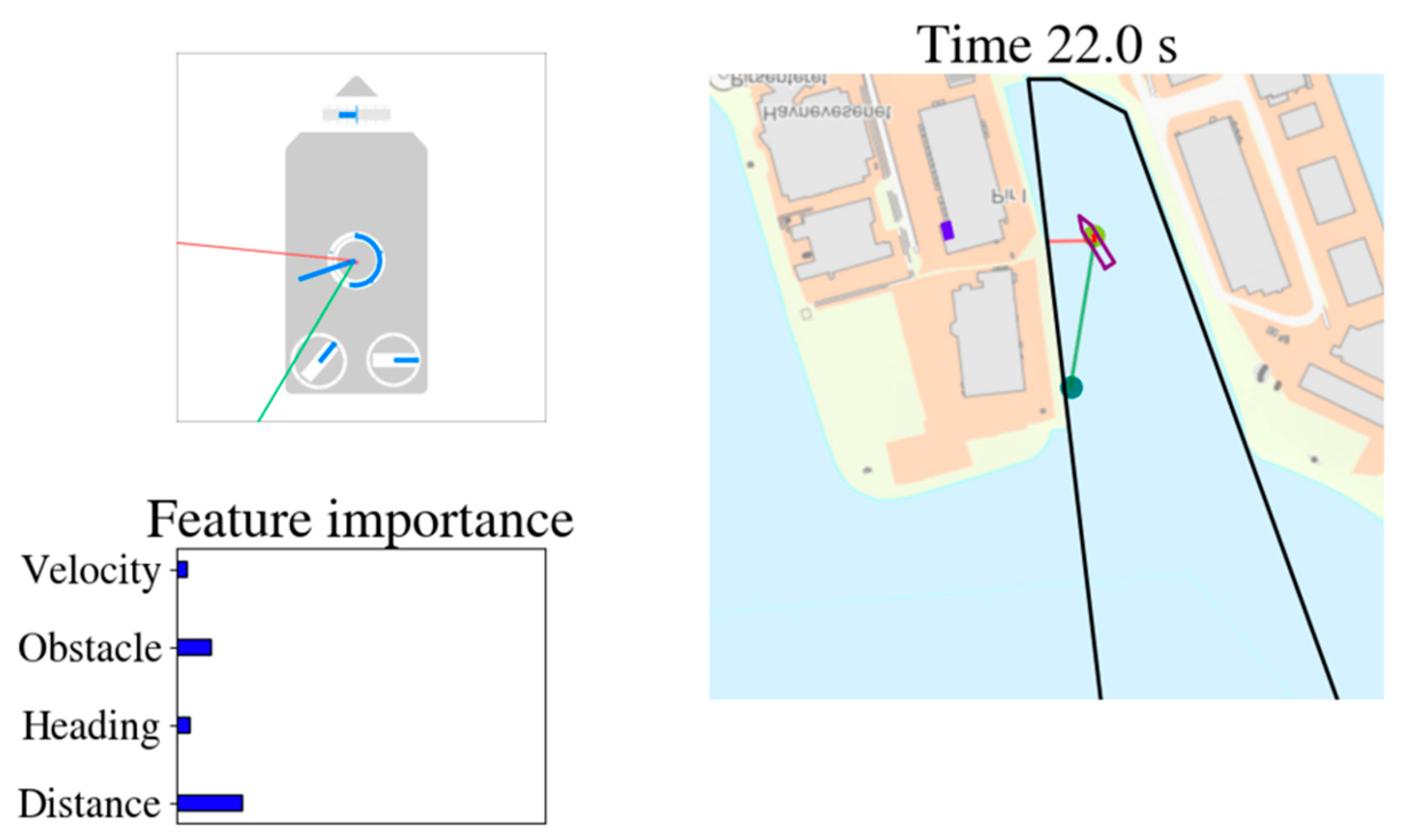

3.3.1. Path Planning

3.3.2. Trustworthiness

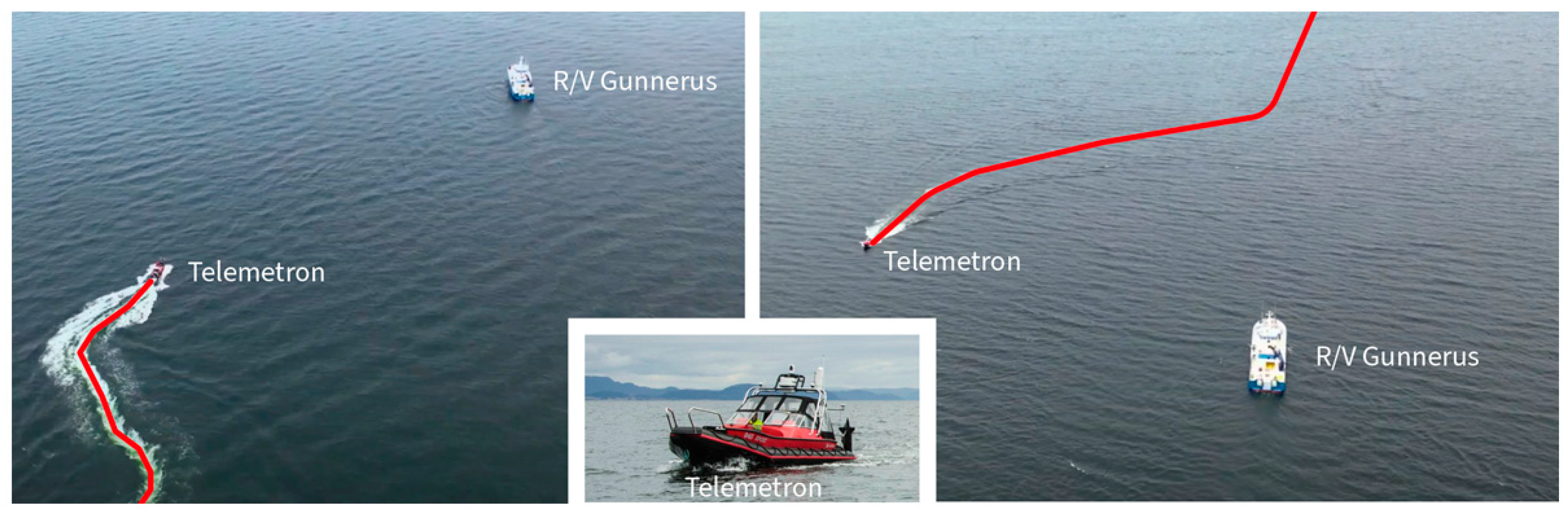

3.3.3. Human-AI Collaboration

3.4. Summary

4. Discussion

4.1. Explainability Needs for Different End User Interactions

- Developers (researchers, engineers);

- Primary users (passengers, operators);

- Secondary users (other vessels, bystanders).

4.2. XAI to Establish Interaction Relationships and Build User Trust

4.3. XAI by Encoding Abstract Concepts as Mental Models to Support User Interaction

4.4. XAI That Frames Human Autonomy Independent of Machine Autonomy

‘The ship [or ASV] can operate fully automatic in most situations and has a predefined selection of options for solving commonly encountered problems... It will call on human operators to intervene if the problems cannot be solved within these constraints. The SCC or bridge personnel continuously supervises the operations and will take immediate control when requested to by the system. Otherwise, the system will be expected to operate safely by itself.’ [55] (pp. 11–12)
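Read as a control scheme, the quoted definition describes a simple handover protocol. The system first tries its predefined options and escalates to a human supervisor only when none of them works. The supervisor then takes control on request. The sketch below is minimal and assumes a hypothetical `SupervisedAutonomy` class in which each predefined option is a callable that reports whether it solved the problem. The names, the three modes, and the deferral of new problems while a request is pending are illustrative assumptions. They are not part of the cited definition [55] or of any milliAmpere2 implementation.

```python
from enum import Enum, auto
from typing import Callable, Iterable

# Hypothetical sketch of the quoted supervisory scheme; not an NFAS or
# milliAmpere2 API. A "solver" is one predefined option for a commonly
# encountered problem and returns True if it resolved that problem.
Solver = Callable[[str], bool]


class Mode(Enum):
    AUTONOMOUS = auto()              # system operates safely by itself
    INTERVENTION_REQUESTED = auto()  # system has called on the human operator
    HUMAN_CONTROL = auto()           # SCC or bridge personnel have taken control


class SupervisedAutonomy:
    """Try predefined options first; escalate to a human only when all fail."""

    def __init__(self, options: Iterable[Solver]) -> None:
        self.options = list(options)
        self.mode = Mode.AUTONOMOUS
        self.log: list[str] = []

    def handle(self, problem: str) -> Mode:
        if self.mode is not Mode.AUTONOMOUS:
            # A request is pending or a human is in control: leave it to the operator.
            self.log.append(f"queued for operator: {problem}")
            return self.mode
        for option in self.options:
            if option(problem):
                self.log.append(f"solved automatically: {problem}")
                return self.mode
        # No predefined option solved the problem within the system's constraints.
        self.mode = Mode.INTERVENTION_REQUESTED
        self.log.append(f"operator intervention requested: {problem}")
        return self.mode

    def operator_take_control(self) -> Mode:
        # The supervisor takes immediate control when the system requests it.
        if self.mode is Mode.INTERVENTION_REQUESTED:
            self.mode = Mode.HUMAN_CONTROL
        return self.mode

    def operator_release_control(self) -> Mode:
        # Hand the vessel back to the system once the problem has been dealt with.
        if self.mode is Mode.HUMAN_CONTROL:
            self.mode = Mode.AUTONOMOUS
        return self.mode
```

In this framing, the operator's role is defined by *when* the system hands over control, not by how much of the task the system performs. Machine autonomy can therefore grow without a corresponding reduction in human autonomy.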

4.5. XAI That Accepts That Users Do Not Need to Know Inner Workings of AI to Use It Successfully

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Dunbabin, M.; Grinham, A.; Udy, J. An Autonomous Surface Vehicle for Water Quality Monitoring; Australian Robotics and Automation Association: Sydney, Australia, 2 December 2009; pp. 1–6.

2. Kimball, P.; Bailey, J.; Das, S.; Geyer, R.; Harrison, T.; Kunz, C.; Manganini, K.; Mankoff, K.; Samuelson, K.; Sayre-McCord, T.; et al. The WHOI Jetyak: An Autonomous Surface Vehicle for Oceanographic Research in Shallow or Dangerous Waters. In Proceedings of the 2014 IEEE/OES Autonomous Underwater Vehicles (AUV), Oxford, MS, USA, 6–9 October 2014; pp. 1–7.

3. Williams, G.; Maksym, T.; Wilkinson, J.; Kunz, C.; Murphy, C.; Kimball, P.; Singh, H. Thick and Deformed Antarctic Sea Ice Mapped with Autonomous Underwater Vehicles. Nat. Geosci. 2015, 8, 61–67.

4. MIT Roboat Project. Available online: http://www.roboat.org (accessed on 19 November 2020).

5. Reddy, N.P.; Zadeh, M.K.; Thieme, C.A.; Skjetne, R.; Sorensen, A.J.; Aanondsen, S.A.; Breivik, M.; Eide, E. Zero-Emission Autonomous Ferries for Urban Water Transport: Cheaper, Cleaner Alternative to Bridges and Manned Vessels. IEEE Electrif. Mag. 2019, 7, 32–45.

6. Wang, J.; Xiao, Y.; Li, T.; Chen, C.P. A Survey of Technologies for Unmanned Merchant Ships. IEEE Access 2020, 8, 224461–224486.

7. IMO. Outcome of the Regulatory Scoping Exercise for the Use of Maritime Autonomous Surface Ships (MASS); IMO: London, UK, 2021.

8. Burmeister, H.-C.; Bruhn, W.; Rødseth, Ø.J.; Porathe, T. Autonomous Unmanned Merchant Vessel and Its Contribution towards the E-Navigation Implementation: The MUNIN Perspective. Int. J. e-Navig. Marit. Econ. 2014, 1, 1–13.

9. Peeters, G.; Yayla, G.; Catoor, T.; Van Baelen, S.; Afzal, M.R.; Christofakis, C.; Storms, S.; Boonen, R.; Slaets, P. An Inland Shore Control Centre for Monitoring or Controlling Unmanned Inland Cargo Vessels. J. Mar. Sci. Eng. 2020, 8, 758.

10. Kongsberg. Kongsberg Maritime and Massterly to Equip and Operate Two Zero-Emission Autonomous Vessels for ASKO. Available online: https://www.kongsberg.com/maritime/about-us/news-and-media/news-archive/2020/zero-emission-autonomous-vessels/ (accessed on 29 September 2021).

11. Rolls-Royce Press Releases. Available online: https://www.rolls-royce.com/media/press-releases.aspx (accessed on 18 April 2021).

12. Kongsberg. First Adaptive Transit on Bastøfosen VI. Available online: https://www.kongsberg.com/maritime/about-us/news-and-media/news-archive/2020/first-adaptive-transit-on-bastofosen-vi/ (accessed on 29 September 2021).

13. Gunning, D.; Aha, D. DARPA’s Explainable Artificial Intelligence (XAI) Program. AI Mag. 2019, 40, 44–58.

14. Horvitz, E. Principles of Mixed-Initiative User Interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, PA, USA, 15–20 May 1999; Association for Computing Machinery: New York, NY, USA; pp. 159–166.

15. Höök, K. Steps to Take before Intelligent User Interfaces Become Real. Interact. Comput. 2000, 12, 409–426.

16. Shneiderman, B. Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy. Int. J. Hum. Comput. Interact. 2020, 36, 495–504.

17. Cui, H.; Zhang, H.; Ganger, G.R.; Gibbons, P.B.; Xing, E.P. GeePS: Scalable Deep Learning on Distributed GPUs with a GPU-Specialized Parameter Server. In Proceedings of the Eleventh European Conference on Computer Systems, London, UK, 18–21 April 2016; Association for Computing Machinery: New York, NY, USA.

18. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

19. Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges toward Responsible AI. Inf. Fusion 2020, 58, 82–115.

20. Voosen, P. The AI Detectives. Science 2017, 357, 22–27.

21. Došilović, F.K.; Brčić, M.; Hlupić, N. Explainable Artificial Intelligence: A Survey. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; pp. 210–215.

22. Poursabzi-Sangdeh, F.; Goldstein, D.G.; Hofman, J.M.; Wortman Vaughan, J.W.; Wallach, H. Manipulating and Measuring Model Interpretability. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021.

23. Christian, B. The Alignment Problem: Machine Learning and Human Values; WW Norton & Company: New York, NY, USA, 2020.

24. Kleinberg, J.; Lakkaraju, H.; Leskovec, J.; Ludwig, J.; Mullainathan, S. Human Decisions and Machine Predictions. Q. J. Econ. 2018, 133, 237–293.

25. Shirado, H.; Christakis, N.A. Locally Noisy Autonomous Agents Improve Global Human Coordination in Network Experiments. Nature 2017, 545, 370–374.

26. Hansen, H.L.; Nielsen, D.; Frydenberg, M. Occupational Accidents Aboard Merchant Ships. Occup. Environ. Med. 2002, 59, 85–91.

27. Hetherington, C.; Flin, R.; Mearns, K. Safety in Shipping: The Human Element. J. Saf. Res. 2006, 37, 401–411.

28. IMO. Maritime Safety. Available online: https://www.imo.org/en/OurWork/Safety/Pages/default.aspx (accessed on 27 April 2021).

29. Goerlandt, F.; Pulsifer, K. An Exploratory Investigation of Public Perceptions towards Autonomous Urban Ferries. Saf. Sci. 2022, 145, 105496.

30. Nersessian, N.J. Creating Scientific Concepts; MIT Press: Cambridge, MA, USA, 2010.

31. Weber, M. Science as a Vocation. In From Max Weber; Gerth, H.H., Mills, C.W., Eds. and Translators; Oxford University Press: New York, NY, USA, 1946; pp. 129–156.

32. Weber, M. Roscher and Knies: The Logical Problems of Historical Economics; Oakes, G., Translator; Free Press: New York, NY, USA, 1975.

33. Swedberg, R. The Art of Social Theory; Princeton University Press: Princeton, NJ, USA, 2014.

34. NTNU. Autoferry—NTNU. Available online: https://www.ntnu.edu/autoferry (accessed on 1 October 2020).

35. Bitar, G.; Martinsen, A.B.; Lekkas, A.M.; Breivik, M. Trajectory Planning and Control for Automatic Docking of ASVs with Full-Scale Experiments. IFAC-PapersOnLine 2020, 53, 14488–14494.

36. Thyri, E.H.; Breivik, M.; Lekkas, A.M. A Path-Velocity Decomposition Approach to Collision Avoidance for Autonomous Passenger Ferries in Confined Waters. IFAC-PapersOnLine 2020, 53, 14628–14635.

37. Paavola, S. On the Origin of Ideas: An Abductivist Approach to Discovery. Ph.D. Thesis, University of Helsinki, Helsinki, Finland, 2006.

38. Wittgenstein, L. The Blue and the Brown Book; Harper: New York, NY, USA, 1958.

39. Rips, L.J. Mental muddles. In The Representation of Knowledge and Belief; Arizona Colloquium in Cognition; The University of Arizona Press: Tucson, AZ, USA, 1986; pp. 258–286.

40. Mustvedt, P. Autonom Ferge Designet for å Frakte 12 Passasjerer Trygt over Nidelven. Master’s Thesis, Norwegian University of Science and Technology (NTNU), Trondheim, Norway, 2019.

41. Glesaaen, P.K.; Ellingsen, H.M. Design av Brukerreise og Brygger til Autonom Passasjerferge. Master’s Thesis, Norwegian University of Science and Technology (NTNU), Trondheim, Norway, 2020.

42. Gjærum, V.B.; Strümke, I.; Alsos, O.A.; Lekkas, A.M. Explaining a Deep Reinforcement Learning Docking Agent Using Linear Model Trees with User Adapted Visualization. J. Mar. Sci. Eng. 2021, 9, 1178.

43. NTNU. NTNU Shore Control Lab. Available online: https://www.ntnu.edu/shorecontrol (accessed on 30 September 2021).

44. Veitch, E.A.; Kaland, T.; Alsos, O.A. Design for Resilient Human-System Interaction in Autonomy: The Case of a Shore Control Centre for Unmanned Ships. Proc. Des. Soc. 2021, 1, 1023–1032.

45. Brekke, E.F.; Wilthil, E.F.; Eriksen, B.-O.H.; Kufoalor, D.K.M.; Helgesen, Ø.K.; Hagen, I.B.; Breivik, M.; Johansen, T.A. The Autosea Project: Developing Closed-Loop Target Tracking and Collision Avoidance Systems. J. Phys. Conf. Ser. 2019, 1357, 012020.

46. Vasstein, K.; Brekke, E.F.; Mester, R.; Eide, E. Autoferry Gemini: A Real-Time Simulation Platform for Electromagnetic Radiation Sensors on Autonomous Ships. IOP Conf. Ser. Mater. Sci. Eng. 2020, 929, 012032.

47. VTS Manual 2021-Edition 8. IALA: Zeebrugge, Belgium. Available online: https://www.iala-aism.org/product/iala-vts-manual-2021/ (accessed on 5 November 2021).

48. Kim, B.; Wattenberg, M.; Gilmer, J.; Cai, C.; Wexler, J.; Viegas, F.; Sayres, R. Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV). In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; ICML: San Diego, CA, USA.

49. Kahneman, D.; Tversky, A. On the Psychology of Prediction. Psychol. Rev. 1973, 80, 237–251.

50. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature 2017, 542, 115–118.

51. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 818–833. Available online: https://arxiv.org/abs/1311.2901 (accessed on 3 November 2021).

52. Heath, C.; Luff, P. Collaboration and Control: Crisis Management and Multimedia Technology in London Underground Line Control Rooms. Comput. Supported Coop. Work. 1992, 1, 69–94.

53. Alsos, O.A.; Das, A.; Svanæs, D. Mobile Health IT: The Effect of User Interface and Form Factor on Doctor–Patient Communication. Int. J. Med. Inform. 2012, 81, 12–28.

54. Lakoff, G.; Johnson, M. Metaphors We Live by; University of Chicago Press: London, UK, 2003.

55. Rødseth, Ø.J. Definitions for Autonomous Merchant Ships; NFAS: Trondheim, Norway, 2017.

56. Vagia, M.; Rødseth, Ø.J. A Taxonomy for Autonomous Vehicles for Different Transportation Modes. J. Phys. Conf. Ser. 2019, 1357, 012022.

57. Utne, I.B.; Sørensen, A.J.; Schjølberg, I. Risk Management of Autonomous Marine Systems and Operations. In Proceedings of the ASME 2017 36th International Conference on Ocean, Offshore and Arctic Engineering, Trondheim, Norway, 25–30 June 2017; Volume 3B: Structures, Safety and Reliability. ASME: New York, NY, USA, 2017.

58. Stone, P.; Brooks, R.; Brynjolfsson, E.; Calo, R.; Etzioni, O.; Hager, G.; Hirschberg, J.; Kalyanakrishnan, S.; Kamar, E.; Kraus, S. Artificial Intelligence and Life in 2030: The One Hundred Year Study on Artificial Intelligence; Stanford University: Stanford, CA, USA, 2016.

59. Heyerdahl, T. The Voyage of the Raft Kon-Tiki. Geogr. J. 1950, 115, 20–41.

60. Skjetne, R.; Sørensen, M.E.N.; Breivik, M.; Værnø, S.A.T.; Brodtkorb, A.H.; Sørensen, A.J.; Kjerstad, Ø.K.; Calabrò, V.; Vinje, B.O. AMOS DP Research Cruise 2016: Academic Full-Scale Testing of Experimental Dynamic Positioning Control Algorithms Onboard R/V Gunnerus; Volume 1: Offshore Technology; ASME: Trondheim, Norway, 2017.

61. Maritime Robotics Otter. Available online: https://www.maritimerobotics.com/otter (accessed on 1 October 2021).

62. Dallolio, A.; Agdal, B.; Zolich, A.; Alfredsen, J.A.; Johansen, T.A. Long-Endurance Green Energy Autonomous Surface Vehicle Control Architecture. In Proceedings of the OCEANS 2019 MTS/IEEE SEATTLE, Seattle, WA, USA, 27–31 October 2019; pp. 1–10.

63. Norman, D. The Design of Everyday Things: Revised and Expanded Edition; Basic Books: New York, NY, USA, 2013.

64. Wiener, N. Some Moral and Technical Consequences of Automation. Science 1960, 131, 1355–1358.

65. Allen, C.; Smit, I.; Wallach, W. Artificial Morality: Top-down, Bottom-up, and Hybrid Approaches. Ethics Inf. Technol. 2005, 7, 149–155.

66. Gabriel, I. Artificial Intelligence, Values, and Alignment. Minds Mach. 2020, 30, 411–437.

67. Dawes, R.M. The Robust Beauty of Improper Linear Models in Decision Making. Am. Psychol. 1979, 34, 571–582.

| Model-Based Reasoning Elements / XAI Audience | Developers | Passengers | Operators | Other Vessels |
|---|---|---|---|---|
| Analogy | Represents ASV as a familiar concept to explain AI interaction (enhances usability) | | | |
| Visualization | Represents ASV sensory perception and numerical models visually to explain AI decision-making (enhances trust) | Represents ASV functionality and affordances through user displays, design, form, and aesthetics to explain AI interaction (enhances usability and trust) | Represents ASV system constraints visually to explain human-AI collaboration (enhances usability and safety) | Represents ASV behavior through visual signals to explain AI decision-making (enhances safety) |
| Mental simulation | Represents ASV behavior in terms of ‘mental models’ (often synthesized as numerical simulations) to explain AI decision-making (enhances trust) | Represents ASV behavior using ‘mental model’ of kinesthetic experience to explain AI decision-making (enhances trust) | Represents ASV behavior in terms of ‘mental models’ (often synthesized as immersive virtual simulations) to explain human-AI collaboration (enhances safety) | Represents ASV behavior in terms of ‘mental models’ of motion characteristics to explain AI decision-making (enhances safety) |

| | Technology-Centered XAI | Human-Centered XAI |
|---|---|---|
| 1. | Mission is to ensure and improve model accuracy and efficiency | Mission is to establish and maintain an interaction relationship |
| 2. | Provides data-driven visualizations of models based on mathematics | Uses mental models based on analogy-making to explain technology use |
| 3. | Frames increases in machine autonomy as subsequent reductions in human autonomy | Frames increases in machine autonomy as independent of human autonomy |
| 4. | Considers the ‘black box’ interpretability problem a barrier to AI development | Accepts that users do not need to know the inner workings of AI to use it successfully |
| 5. | Considers humans a source of error in overall system safety | Considers humans a source of resilience in overall system safety |
| 6. | Often assigns AI human-like qualities, leading to overselling and unmet user expectations | Explains limitations to users and actively manages expectations |
| 7. | Seeks to improve the performance of human tasks by prioritizing computation | Seeks to enhance the performance of human tasks by prioritizing collaboration |

| 7. | Seeks to improve the performance of human task by prioritizing computation | Seeks to enhance the performance of human tasks by prioritizing collaboration |

| Characteristics | Developers | Primary Users: Operators | Primary Users: Passengers | Secondary Users: Not AIS-Equipped | Secondary Users: AIS-Equipped |
|---|---|---|---|---|---|
| Users | Developers, engineers, expert users | Operators, safety attendants, crew | Passengers | Sailboats, leisure boats, kayaks (not AIS-equipped); bystanders | Fishing boats, ferries, cruise ships, other marine traffic (AIS-equipped) |
| Background knowledge | Trained developers; strong technical and analytical skills | Trained mariners; strong safety culture | Little or no understanding of navigation or of onboard safety systems | Varying navigation skills and safety culture | Trained mariners; strong safety culture |
| Context of use | Primarily office work; methodical testing of simulation-based scenarios; indirect consequences for crew and environment | Primarily field work; time-critical decision-making; direct consequences for crew and environment | Present onboard the vessel for safe, comfortable, enjoyable, and timely transportation | Shared traffic, particularly in confined waterways (especially during holidays); unreliable radio communication and VTS detection; bystanders may become future passengers | Shared traffic, particularly in marine traffic lanes; reliable radio communication and VTS detection |
| Interaction with AI | Improve ASV model accuracy and efficiency; train models with data | Directly affected by ASV model decisions | Directly affected by ASV model decisions | Indirectly affected by the ASV state and intention | Indirectly affected by the ASV state and intention |
| XAI needs | Visualizations of ASV models; real-time visualization and processing of sensor data; description of training data | Current state and intention of ASV models; definition of AI-human control boundary; understanding of when to intervene | Confirmation that the ASV ‘sees’ and avoids collisions with other objects | Confirmation that the ASV ‘sees’ them, to avoid collisions, dangerous situations, and ‘deadlocks’ | Clear information about ASV intentions for avoiding collisions and ‘deadlocks’; traffic flow maintained |

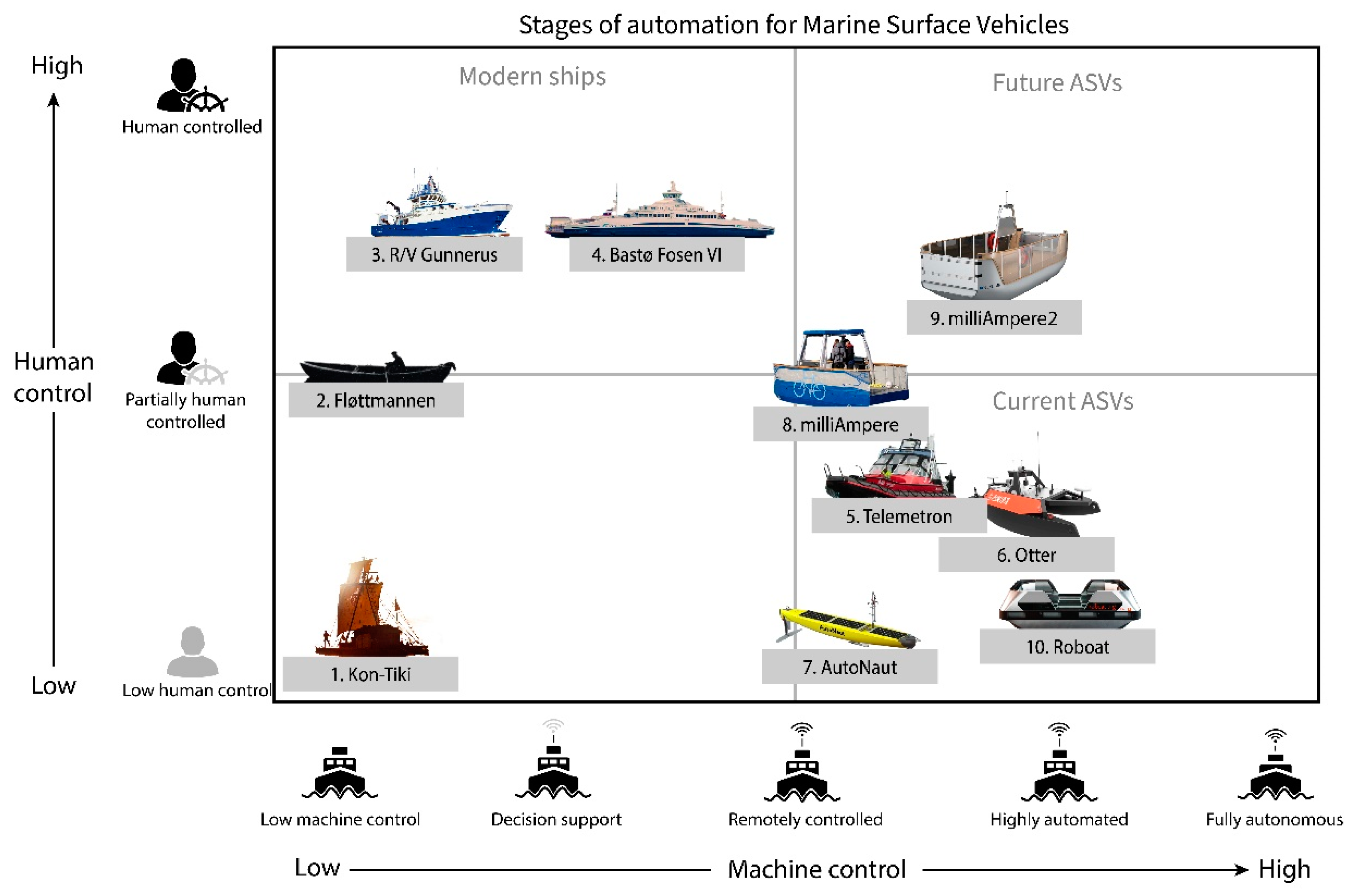

| ID | Vessel/Project | Description | Autonomous Control | Human Control |
|---|---|---|---|---|
| 1. | Kon-Tiki [59] | Balsa wood raft used in Thor Heyerdahl’s 1947 expedition in the South Pacific Ocean | None | Very low; poorly maneuverable raft relying on wind and current |
| 2. | Fløttmannen | Passenger rowboat crossing a 100 m canal in Trondheim (the same location where the milliAmpere ferries are planned to operate) | None | Medium; maneuverable in urban canals; actions visible to passengers and other vessels |
| 3. | R/V Gunnerus [60] | Research vessel owned and operated by NTNU | Low; Dynamic Positioning (DP) system for automatic station-keeping | High; navigation wheelhouse with crew and complement of four |
| 4. | Bastø Fosen VI [12] | Roll-on-roll-off car ferry (length overall 140 m) operating on the Oslofjord’s Horten–Moss crossing, the busiest crossing in Norway | Medium; auto-crossing and auto-docking; DP system | High; navigation wheelhouse with two deck officers, total crew of five |
| 5. | Telemetron [45] | Converted sport vessel with autonomous functionality; used for field testing of collision avoidance | Automated navigation including collision avoidance | Original steering and controls onboard; vehicle control station onboard for control of autonomous system |
| 6. | Otter [61] | Portable research and data-acquisition ASV powered by battery packs | Medium; autonomously follows motion trajectory defined by user | Medium; remote control with joystick; set trajectories via user-friendly software interface and mobile app |
| 7. | AutoNaut [62] | Long-range research and data-acquisition ASV propelled by ocean waves | Medium; drifts with the current when there are no waves | Low; remote control of passive maneuvering system; waypoint navigation in custom user interface |
| 8. | milliAmpere [34] | Electric autonomous passenger ferry (length overall 5 m) crossing a 100 m canal in Trondheim; designed for research purposes only | Medium; automated crossing, docking, undocking, and collision avoidance | Medium; remote control console, emergency stop, oars |
| 9. | milliAmpere2 [34] | Electric autonomous passenger ferry (length overall 8 m) crossing a 100 m canal in Trondheim; designed for real-world operation and field research | Automated crossing, docking, undocking, and collision avoidance; Dynamic Positioning (DP) | Medium; collaborative control with Shore Control Centre; direct remote motion control via DP system; passenger user interface and communication link |
| 10. | Roboat [4] | On-demand multipurpose autonomous platforms (floating bridges and stages, waste collection, goods delivery, urban transportation, data collection) | High; automated motion planning, obstacle avoidance, predictive trajectory tracking | Low; indirect control only, no direct remote motion control or collaborative control infrastructure |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Veitch, E.; Alsos, O.A. Human-Centered Explainable Artificial Intelligence for Marine Autonomous Surface Vehicles. J. Mar. Sci. Eng. 2021, 9, 1227. https://doi.org/10.3390/jmse9111227
