1. Introduction

Automatic identification system (AIS) data are a primary source for maritime supervision and for the analysis of ship behavior. They are also important for research on waterway traffic patterns and trends, and their accuracy and reliability directly affect the results of such analyses. However, raw AIS data usually suffer from quality problems such as invalid data, errors, missing values, abnormal values and duplicate records. These problems are caused by the communication link, channel interference and human tampering with AIS equipment, and such data are also called “dirty” data. Their presence significantly affects maritime supervision, ship navigation safety and the understanding of water traffic. For example, when multiple ships share one Maritime Mobile Service Identity (MMSI) number, normal trajectories are hard to discern among the mixed trajectories, which can negatively influence the assessment of safety situations and the related decision-making. Dirty AIS data must therefore be handled to improve data quality before further analysis.

AIS data quality management includes data profiling, cleaning and transformation; it helps clean up dirty data and convert the data format to meet given requirements. In particular, inferring whether abnormal AIS data represent actual data errors requires a professional understanding gained from AIS data profiling. Quality problems in AIS data are usually detected automatically by building mathematical models and applying rules and thresholds. However, these methods easily misjudge AIS data that are uncommon yet consistent with the actual situation at the time. When AIS data are visualized, their quality problems can be identified quickly and accurately through reasoning and judgment supported by human visual thinking. For example, by drawing a ship’s trajectory from AIS data, the shape and density of the trajectory can be used to quickly determine whether the data contain offsets, gaps or other quality problems.

To clean AIS data, raw data are usually filtered according to certain rules or combinations of rules, and data that do not meet the requirements are then directly removed or repaired [1,2]. The evidential reasoning (ER) method was used to filter abnormal AIS data and restore the filtered data in combination with ship dynamics [3]. In such approaches, abnormal and missing data are filtered out entirely. Existing methods for cleaning dirty AIS data thus aim to eliminate the dirty data or make them “better”, without regard to the value of the dirty data or to their exploration.

Andrienko [4,5] and Hammond and Peters [6] pointed out that the information hidden in dirty data should be fully understood. For example, missing AIS data may indicate that a ship is located in a signal blind area. More attention should therefore be paid to dirty AIS data: if their information and characteristics are effectively mined and used, they can provide vital support for AIS data maintenance and management. Moreover, cleaning and exploring dirty data is a cyclic, repeated process. Visual analysis combines the powerful cognitive ability of humans with the efficient computing ability of machines to explore and mine big data through human-computer interaction. Integrated with a visual model, it can be used repeatedly to explore different types of quality problems in AIS data and to analyze and understand their causes and distribution. A combination of human judgment and human-computer interaction can therefore play a leading role in AIS data quality assessment.

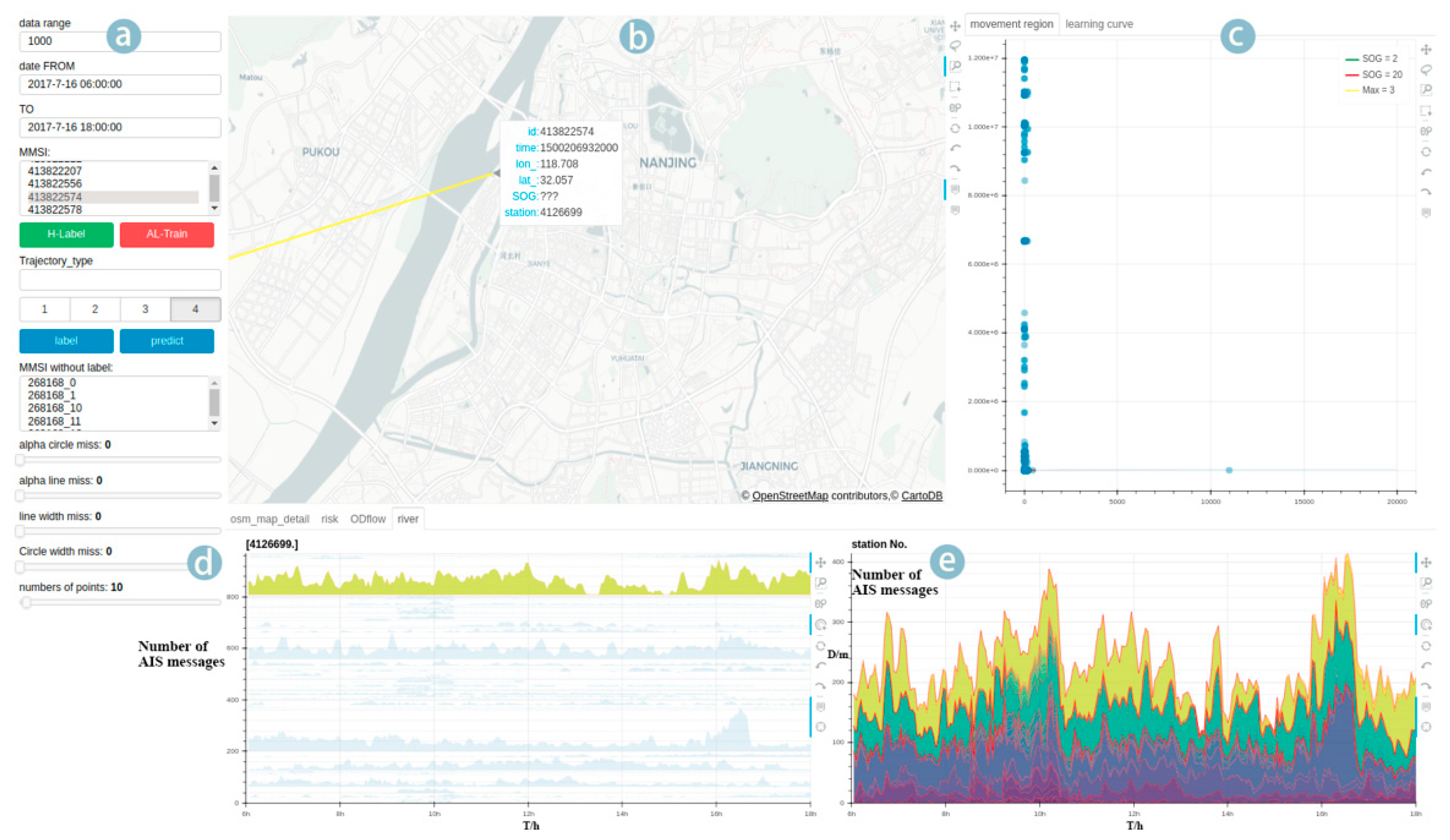

In this study, a visual analytics method was proposed and a visual analytics system was developed to analyze AIS data quality problems. Our main contributions are as follows:

1. A visual analytics method was presented to identify and evaluate the quality of AIS data;

2. A novel visualization model of AIS dirty data was proposed to visually analyze the base station information in AIS data;

3. AIS data with quality problems were explored and discussed through examples to uncover their hidden significance.

The main part of this study is divided into five sections as follows:

Section 4: AIS Data Quality visualization (ADQvis);

2. Related Work

AIS data quality is a broad research topic that primarily covers AIS data profiling, cleaning and transformation. This review focuses on data cleaning and on the exploration of dirty AIS data, especially the exploration and analysis of missing, abnormal and duplicate data. In previous studies, the preprocessing of dirty AIS data has mainly focused on data filtering and repairing.

AIS data filtering is primarily used to find and remove data that deviate greatly from the bulk of AIS data, such as outliers and redundant values in AIS data sources. Most researchers have adopted mature data cleaning techniques from database research. However, these techniques target quality problems in tabular data and are not fully applicable to trajectory data. Building on them, some researchers have formulated filtering rules for ship attributes, set thresholds for certain attributes and filtered out dirty AIS data accordingly. For example, valid ranges are set for MMSI and International Maritime Organization (IMO) numbers, together with a maximum average speed or acceleration for a ship, and records exceeding these limits are removed as abnormal; however, there has been no rigorous discussion of how to determine appropriate thresholds. In studies [3,7,8], speed, heading and position data are transformed into evidence credibility values between 0 and 1 by setting simple filtering thresholds, and evidential reasoning (ER) rules are then used to combine the evidence and to identify and clean abnormal data with high recognition accuracy. Drawing AIS trajectories during preprocessing can reveal drifting trajectory points where different ships share the same MMSI [9]. That study chose a simple method that removed such data directly to keep the dataset clean. This method clearly results in the loss and waste of data resources; moreover, ships engaged in abnormal behavior often conceal it by tampering with their own MMSI. In [10], trajectories were first segmented using a time threshold and abnormal data in mixed trajectories were filtered using a speed threshold; the processed data were finally used for ship classification, and the clustering results were displayed on an electronic chart. Gao [11] suggested that the trajectories of different vessels sharing the same MMSI are often mistaken for noise because of their typical zigzag shapes, so median, mean, Kalman and particle filtering methods are usually used to remove these offset points during preprocessing. However, these methods preserve the integrity of only one trajectory instead of extracting the multiple trajectories, so the data of the other trajectories are lost. In all of the above methods, abnormal values in raw data are detected and eliminated using artificial intelligence or filtering rules, and only the data conforming to the rules are retained as input for further analysis. As a result, the hidden meaning of abnormal data cannot be understood, and AIS data resources are lost and wasted.
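The threshold rules described above can be illustrated with a minimal Python sketch. The record fields (`mmsi`, `lat`, `lon`, `sog`), the simplified nine-digit MMSI check and the 30-knot speed ceiling are illustrative assumptions rather than values taken from the cited studies. Unlike the removal-based methods reviewed here, the sketch keeps flagged records together with the rules they break, so they remain available for exploration.

```python
from dataclasses import dataclass


@dataclass
class AisRecord:
    mmsi: int
    lat: float  # degrees; AIS uses 91 to mean "not available"
    lon: float  # degrees; AIS uses 181 to mean "not available"
    sog: float  # speed over ground, knots


# Hypothetical threshold; a real study would need to justify this value.
MAX_SOG_KNOTS = 30.0


def violated_rules(rec: AisRecord, max_sog: float = MAX_SOG_KNOTS) -> list[str]:
    """Return the names of the range rules that a record breaks."""
    problems = []
    # Simplified assumption: treat any nine-digit integer as a plausible MMSI.
    if not 100_000_000 <= rec.mmsi <= 999_999_999:
        problems.append("mmsi")
    if not -90.0 <= rec.lat <= 90.0:
        problems.append("lat")
    if not -180.0 <= rec.lon <= 180.0:
        problems.append("lon")
    if not 0.0 <= rec.sog <= max_sog:
        problems.append("sog")
    return problems


def split_by_rules(records):
    """Separate clean records from flagged ones instead of discarding the latter."""
    clean, flagged = [], []
    for rec in records:
        problems = violated_rules(rec)
        if problems:
            flagged.append((rec, problems))
        else:
            clean.append(rec)
    return clean, flagged
```

For instance, a record with MMSI `12345` and latitude `91.0` is returned in `flagged` with the rule names `["mmsi", "lat"]` rather than silently dropped.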

AIS data repairing replaces abnormal data with plausible trajectory data; ship maneuverability models and trajectory prediction methods are mainly adopted to restore problematic trajectories. When packet loss during transmission or the filtering of abnormal data leaves the data incomplete, the trajectory must be reconstructed to repair AIS data [12]. Linear interpolation, one of the fastest and simplest algorithms, is commonly applied in short-distance trajectory reconstruction [13]. In [7], the ship’s maneuverability is taken into account and the trajectory is repaired by dividing it into three types of segments (straight, curve and rotation). Unfortunately, this approach is not suitable for long-distance trajectory repair. A data-driven method was applied to predict a ship’s trajectory and fill in missing data based on its historical trajectory data [14], and experiments showed that this method can effectively recover trajectories that are missing over long periods. The above methods are primarily used to cover or fill in missing data and filtered abnormal data in raw AIS data so that complete trajectories can be reconstructed for subsequent analysis. However, missing AIS data should not be ignored, as they may also carry critical information. Analysis results would therefore be one-sided if missing AIS data were not taken into account.
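Linear interpolation for short gaps can be sketched as follows. Latitude and longitude are interpolated independently, which is only a reasonable approximation over short distances; the `(t_seconds, lat, lon)` tuple layout and the fixed resampling step are assumptions made for illustration, not details of the cited method.

```python
from bisect import bisect_left


def interpolate_position(track, t):
    """Linearly interpolate (lat, lon) at time t from a time-sorted track.

    track: list of (t_seconds, lat, lon) tuples sorted by strictly increasing time.
    Raises ValueError if t lies outside the track's time span.
    """
    times = [p[0] for p in track]
    if not times or t < times[0] or t > times[-1]:
        raise ValueError("t is outside the observed time range")
    i = bisect_left(times, t)  # index of the first report at or after t
    if times[i] == t:
        return track[i][1], track[i][2]
    t0, lat0, lon0 = track[i - 1]
    t1, lat1, lon1 = track[i]
    w = (t - t0) / (t1 - t0)  # fraction of the way from report i-1 to report i
    return lat0 + w * (lat1 - lat0), lon0 + w * (lon1 - lon0)


def resample_track(track, step):
    """Resample the track at a fixed time step (seconds), filling gaps linearly."""
    start, end = track[0][0], track[-1][0]
    filled = []
    t = start
    while t <= end:
        lat, lon = interpolate_position(track, t)
        filled.append((t, lat, lon))
        t += step
    return filled
```

Because each missing position depends only on the two neighboring reports, the error grows with the gap length, which is consistent with the observation above that such reconstruction suits short distances only.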

Data quality analysis is an iterative process that must integrate automatic computation with human experience and expertise to reach a comprehensive judgment. Combining data visualization technology with human visual cognition through human-computer interaction is therefore well suited to this task. Visual analysis has recently become popular for data cleaning [15]. Studies on data quality management and visual analysis, especially those focusing on data cleaning, were summarized and discussed in [16], and an iterative, progressive visual analysis framework was then proposed based on the data cleaning process designed by Van den Broeck. Data quality problems can still exist after data cleaning. A visual analysis method was presented in [17] that not only deals with data quality problems remaining after preprocessing but also supports data analysis; a case study of Bogota’s public transport system showed that the method can support analyses such as data quality assessment.

Visual analysis is applied to data quality analysis in many fields. In [18], linked business data were taken as the research object: a visual analysis framework integrating previous empirical rules was proposed, and a dashboard was designed to evaluate the quality of shared business data. Stacked charts and text displays were used in [19] to explore the quality of open data and help select high-quality data versions for analysis. In [20], quality metrics were applied to evaluate the quality of tabular data; different visual representations were designed for the various metrics, and the MetricDoc system was developed to support the exploration of quality problems through interaction with data quality metrics. An analysis of open network test data indicated that the system could accurately reveal data quality problems. Shortcomings in the design of visual analyses of data quality were discussed in [21]: the impact of various visualization models on the detection of data quality problems was demonstrated through cases, and suggestions were provided for designing visual analyses of data quality. To address the inability of previous data profiling methods to support time series data cleaning, a two-dimensional heat map, a table display and human-computer interaction were integrated in [22] into a visual analytics system that supports understanding of quality issues in time series data.

As spatiotemporal data, AIS data differ from tabular data in that they have both time series characteristics and spatial distribution characteristics. The aforementioned methods therefore cannot fully display AIS data quality problems, and dirty AIS data, which mainly consist of missing and abnormal data, cannot be explored comprehensively with them. The visualization of missing and abnormal data is reviewed below.

Missing data are often overlooked: even when they are crucial, some visual analysis methods use only the remaining data and draw conclusions from them. Missing data have been visualized as polylines with gaps [23]. In [24], missing data were displayed by lowering the hue while keeping the outline smooth and bright. The possible range of missing data was calculated with statistical methods, and the uncertainty of the missing data was visualized with boxplots [25]. Vacancy, ambiguity and vacancy annotation were applied as visualization models in [26], and a practical analysis showed that vacancy annotation helps users understand missing data better. Andrienko [5] performed a visual analysis that discovered the locations of tunnels in a city by showing the absence of vehicle movement data. A set of processes and methods was proposed to understand data quality through visual analysis, and different visualization models were applied to display and analyze movement data. The results showed that missing data could be found effectively and the causes of their occurrence explained clearly.

Overall, various visual models can be applied to display missing data, but their effects differ, and inappropriate models can even mislead analysts. The problem of missing data in time series was discussed in [27], which studied how visualization models and data repair methods affect analysts’ judgment of raw data quality; design suggestions were then presented for the visual analysis of missing data. In [28], missing data were regarded as one form of data quality uncertainty, uncertainty visualization methods for cultural collection data were discussed, and the feasibility of various visualization models was analyzed. However, none of these studies explored missing data to reveal their hidden patterns or the characteristics of the surrounding environment.

Regarding abnormal data visualization, abnormal AIS data are data that deviate significantly from the majority of the data. In [29], road network information was used to analyze abnormal trajectories, which were visualized by projection onto a two-dimensional map. Because parallel coordinates can reveal hidden relationships among the dimensions of high-dimensional data, they were applied to network security anomaly detection [30], and the results indicated that the method could detect network risks in time. An anomaly visualization model based on a sphere in three-dimensional space was proposed in [31], and the results showed that it can represent network activities and support network security supervision. A semi-supervised active learning method combined with trajectory feature visualization was used to mine anomalies in taxi data and evaluate data quality [31,32]. Visualization and human-computer interaction were combined in [33] to select abnormal data from raw positioning data, machine learning was then used to discover further outliers, and data quality was detected and evaluated. So far, however, the distribution patterns of the detected abnormal data have rarely been studied further.

The above review of AIS data quality research and of data quality analysis applications shows that current AIS data quality analysis focuses mainly on filtering out or cleaning dirty data. However, dirty data carry inherent value and should not be ignored. A visual analytics system was therefore designed here to overcome this shortcoming by exploring and reusing the dirty data in massive AIS datasets. Its specific requirements are described in detail in the next section.

3. Requirements

To fill the aforementioned gaps, a visual analytics approach was designed and developed to explore and analyze dirty data. Based on the discussion and review in Section 2, the tasks for exploring AIS data quality were defined as follows:

T1: Identifying and filtering out various kinds of data quality issues;

T2: Exploring the spatiotemporal distribution of dirty data;

T3: Exploring the relationship between dirty data and environmental factors;

T4: Analyzing causes of data quality problems.

To implement an environment that supports these analysis and exploration tasks, the following requirements were specified before developing our ADQvis system:

R1: Data quality identification. The visual analytics (VA) approach should help users quickly identify data quality issues through an appropriate visualization model. Interactions with the visualization model should also be supported so that users can select specific dirty data.

R2: Dirty data distribution. A visual overview of the dirty data distribution should be provided, and users should be able to inspect details of the overall distribution and explore them further through appropriate human-computer interactions.

R3: Dirty data relevance exploration. A customized visualization model should let users intuitively discover the relevance between dirty data and other factors, such as the navigation environment and static vessel features. Users should be able to interactively explore the associations among different attributes.

R4: Interactive data filtering. Users should be able to filter and explore data interactively using time-range tools such as a calendar and spatial brushing tools (area selection). Moreover, human-computer interactions should be designed to transform and interact with the data underlying the visualization model.
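The interactive filtering in R4 can be illustrated by a minimal function of the kind that a calendar widget and a rectangular brush might both call. The `Point` record layout, the half-open time window and the rectangular shape of the brushed area are assumptions for illustration; this is not the ADQvis implementation.

```python
from datetime import datetime
from typing import NamedTuple


class Point(NamedTuple):
    mmsi: int
    time: datetime
    lat: float
    lon: float


def filter_points(points, start, end, bbox):
    """Keep points whose time is in [start, end) and that lie inside bbox.

    bbox = (min_lat, min_lon, max_lat, max_lon), i.e. a rectangular brush.
    """
    min_lat, min_lon, max_lat, max_lon = bbox
    return [
        p for p in points
        if start <= p.time < end  # time window chosen on the calendar
        and min_lat <= p.lat <= max_lat  # spatial brush, latitude extent
        and min_lon <= p.lon <= max_lon  # spatial brush, longitude extent
    ]
```

Applying both constraints in one pass keeps the temporal and spatial selections consistent with each other, so the views linked to the brushed region always show the same subset.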

6. Conclusions and Prospects

In this study, a visual analytics approach for exploring dirty AIS data was established based on an OSM, in which a scatter plot displaying the spatiotemporal distances of AIS data is divided into regions and dirty AIS data are selected according to the differential distances between trajectory points in spatiotemporal space. Moreover, the relevance between dirty data and environmental factors was explored through interactions with our four-quadrant stack graph. The main conclusions of this study are as follows:

1. Compared with traditional AIS data quality analysis methods, our visual analytics approach offers intuitive displays and flexible interactions that allow AIS data quality issues to be identified and explored rapidly.

2. In an empirical study of AIS data from the Wuhan Section, our ADQvis system was applied to two typical kinds of dirty data, namely abnormal and missing data. The distribution of base station blind areas in the Wuhan Section and the causes of abnormal AIS data were identified, showing that our approach to data quality analysis can be put into practical use.
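The idea of selecting dirty data from the differential distances between consecutive trajectory points, summarized at the start of this section, can be sketched in Python. This is not a reproduction of the OSM: we simply assume that each pair of consecutive reports from one MMSI yields a point (time interval, great-circle distance) on the scatter plot, and that the plot is cut into regions by two hypothetical thresholds, `MAX_GAP_S` (longest acceptable reporting interval) and `MAX_SPEED_MPS` (highest plausible implied speed).

```python
import math

EARTH_RADIUS_M = 6_371_000.0

# Hypothetical thresholds for cutting the (interval, distance) plane into regions.
MAX_GAP_S = 600.0  # longer silences are treated as missing data
MAX_SPEED_MPS = 15.0  # faster implied movement is treated as abnormal


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres, assuming a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def classify_steps(track, max_gap_s=MAX_GAP_S, max_speed_mps=MAX_SPEED_MPS):
    """Label each consecutive pair of (t_seconds, lat, lon) reports of one ship.

    Returns one (dt, distance_m, label) tuple per step, where label is
    "missing", "abnormal" or "normal".
    """
    steps = []
    for (t0, lat0, lon0), (t1, lat1, lon1) in zip(track, track[1:]):
        dt = t1 - t0
        dist = haversine_m(lat0, lon0, lat1, lon1)
        if dt > max_gap_s:
            label = "missing"
        elif dt <= 0 or dist / dt > max_speed_mps:
            label = "abnormal"  # repeated timestamp or implausible jump
        else:
            label = "normal"
        steps.append((dt, dist, label))
    return steps
```

Plotting the `(dt, distance_m)` pairs and coloring them by label gives a rough analogue of the region-based selection; in an interactive system the two thresholds would be adjusted by the analyst rather than fixed in code.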

Further research should be conducted from the following aspects:

1. The scalability of the system needs to be improved to support visual analysis of massive historical AIS datasets, so that the visual model can be generated and interacted with smoothly and quickly when large volumes of data are loaded.

2. This study focuses on data quality analysis and the exploration of abnormal and missing data. However, AIS data quality issues are broad and also include invalid, redundant and duplicate data. Future work will focus on applying our visual analysis approach to these data.