1. Introduction

In recent years, autonomous underwater vehicles (AUVs) have attracted wide attention because they have revolutionized oceanic research, with applications in numerous fields such as marine geoscience, submarine oil exploration, submarine salvage, submarine pipeline repair, and archeology [1,2,3]. Among all AUV functions, autonomous obstacle avoidance is the most important, because obstacles in the underwater environment are usually unknown to the AUV; the vehicle can therefore easily run into them, which may cause it to malfunction or even be damaged [4].

Several autonomous navigation methods for obstacle avoidance have been reported in the literature. Lozano et al. [5,6] proposed the visibility graph algorithm. In this algorithm, the AUV is regarded as a point and obstacles are treated as planar polygons. The starting point, the goal point, and the vertices of each polygonal obstacle are connected, and every connecting line that does not pass through an obstacle is considered a collision-free path segment. Finally, a visibility graph is formed, and a search algorithm is used to find the optimal path. The principle of this method is simple and easy to implement. Takahashi et al. [7] proposed the Voronoi diagram method, which keeps a certain distance between the planned path and obstacles so that factors such as safety can be taken into account. The planning time varies with the density of obstacles. Although the shortest path can be determined by this method, it lacks flexibility. In Takahashi et al. [8,9], an exact grid algorithm divided free space into non-overlapping grid cells. Grid cells are assigned according to the obstacles, and a series of parallel lines is drawn through each obstacle vertex; the lines stop where they meet obstacle edges or the boundary of the planning environment. The space is thereby decomposed into a series of trapezoidal regions in which obstacle avoidance is realized. In [10,11], quad-tree and octree decomposition methods were used to establish the planar sea-area obstacle model and the submarine terrain model, respectively. In addition, the current velocity and direction could be stored as grid attribute information to establish the current model.

A* and D* are widely used path search algorithms. The A* algorithm selects the optimal path node by calculating the evaluation function of all candidate nodes with respect to the target point, and is suitable for static path planning [12]. In the bionic fish path planning problem, Qiang et al. [13] adopted the deployable point method to reduce the number of search nodes and improve search efficiency; however, environmental factors were not considered. The D* algorithm [14] is a dynamic version of A* that is suitable for dynamic path planning problems because it detects changes in the previous or nearby nodes of the shortest path.

The artificial potential field method is a virtual-force method proposed by Khatib et al. [15]. It is widely used in path planning, and its concept is to construct various virtual potential fields for the path planning of the AUV [16]. Warren [17] used the artificial potential field method to carry out path planning for underwater robots and realized global path planning of an AUV in two-dimensional (2D) and three-dimensional (3D) spaces, reducing local minima through heuristic knowledge. Chao [18] adopted optimization theory, combined the artificial potential field with obstacle constraints, and transformed the path planning problem into one of solving constrained and semi-constrained problems. Cheng et al. [19] used a velocity vector synthesis algorithm so that the resultant of the ocean current velocity and the AUV velocity points toward the target, thereby minimizing resource consumption. Ferrari et al. [20] addressed the collaborative planning of multiple AUVs that must avoid a network of multiple detection platforms: each detection platform was treated as a virtual obstacle, and by modifying the fitness function the planning result could determine the non-collision path with the minimum exposure probability.
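The evaluation-function idea behind A* can be illustrated with a minimal sketch on a 2D occupancy grid. The grid encoding, the 4-connected unit-cost moves, the Manhattan heuristic, and the function name `a_star` are all assumptions chosen for illustration; none of them is taken from the cited works.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest 4-connected path on a grid (0 = free, 1 = obstacle), or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry; a cheaper route to this cell was found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # the goal is unreachable
```

Because the whole grid must be known before the search starts, a sketch like this also shows why such planners suit static, fully mapped environments.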

With the progress of computer technology, artificial intelligence has received extensive attention in various fields. Artificial intelligence-based path planning aims to turn the behavior and reasoning of certain animals into algorithms that can be used in the path planning of mobile devices. Artificial intelligence algorithms such as particle swarm optimization, ant colony optimization, evolutionary computing [21], genetic algorithms, and self-organizing neural networks [22] have emerged and are widely applied. Xu et al. [23] used a hybrid genetic algorithm and particle swarm optimization (GA-PSO) planning algorithm to realize AUV global path planning under ocean current conditions. Wang et al. [24] designed cutting and handicap operators to solve the ant colony path planning problem and realized AUV global path planning in a 2D grid environment model. In [25], particle swarm optimization (PSO) was used to solve the path planning problem in a dynamic environment, and the speed and heading information of the robot were introduced into the objective function. The results verify that PSO has good real-time performance in solving path planning problems. Xin et al. [26] improved ant colony path planning, designed cutting and handicap operators, and realized AUV global path planning in a 2D grid environment model. These methods require knowledge of the global environment and do not have the ability to learn and explore when planning paths in an unknown environment.

The AUV is one of the most important means of exploring the deep sea. The deep ocean is a complex and changeable environment in which undersea mountains of various kinds are distributed. When an AUV reaches the deep sea, it faces underwater canyons of many sizes, whose hard valley walls, among other hazards, seriously threaten the safety of the vehicle [27]. Path planning and obstacle avoidance are therefore important components of autonomous navigation for AUVs. The goal is to find a collision-free path from the start to the end in a complex underwater environment. Algorithm design is the core of path planning. Many researchers regard learning algorithms as the future of artificial intelligence, and neural networks are an important part of machine learning. In recent work on the path planning of underwater robots, many scholars have used sensor data as the network input and behaviors and actions as the network output, obtaining the network model through training [28,29]. In [30], a 2D environment traversal path planning method based on biologically inspired neural networks was proposed. A recurrent neural network with convolution was developed in [31] to improve the autonomy and intelligence of obstacle avoidance planning. Zhu et al. [32] focused on suddenly appearing obstacles and used environmental changes to cause variations in neuron excitation and activity output values, thereby outputting collision-free path points.

Reinforcement learning (RL) is an artificial intelligence approach that does not require prior knowledge: it interacts with the environment directly through trial and error and uses the resulting feedback to optimize its strategy. It is therefore widely used in mobile robot path planning in complex environments [33,34]. In [35], an adaptive neural network obstacle control method for AUVs with control input nonlinearities was studied using RL. In addition to improving accuracy, RL can learn control strategies from data, which avoids cumbersome manual parameter tuning [36,37]. In 1989, Watkins [38] proposed a typical model-free RL algorithm called Q-learning, which is one of the most widely used algorithms in RL solutions [39]. Because the Q-learning algorithm [40] can guarantee convergence without knowing the model and can obtain good path planning when the state space is small, some scholars [41,42] have applied it to robot path planning. However, underwater robot path planning and obstacle avoidance involve high dimensionality and a large state space, for which solving the optimal policy with Q-learning is difficult. Mnih et al. [43] proposed a deep reinforcement learning (DRL) algorithm based on the Deep Q Network (DQN). The performance of this algorithm in many Atari games has reached the same level as that of humans; however, it cannot be applied directly to control problems with high-dimensional continuous action spaces. Cheng et al. [44] proposed a DRL-based obstacle avoidance planning algorithm for underwater robots, in which two convolutional layers extract features from the input state. Its reward function has four terms, covering the distance to the target point, the distance to obstacles, the speed near the endpoint, and drift (R_distance, R_collisions, R_end, and R_drift). However, no obvious advantage over traditional path planning algorithms was noted. Moreover, most researchers have only considered the avoidance of static obstacles, and some other works [45,46] presented applications where depth exploration in semi-static conditions could be improved; real-time avoidance of dynamic obstacles has seldom been studied.
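The difficulty with large state spaces is easier to see from the tabular form of Q-learning, which stores one value per state-action pair. The following is a minimal sketch of a single update step; the function name `q_learning_update`, the learning rate `alpha`, and the discount factor `gamma` are illustrative assumptions rather than values from the cited works.

```python
import numpy as np


def q_learning_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the TD error."""
    # Bootstrapped target: immediate reward plus discounted best next-state value.
    td_target = reward + gamma * np.max(q_table[next_state])
    td_error = td_target - q_table[state, action]
    q_table[state, action] += alpha * td_error
    return td_error
```

The table needs one row per discrete state and one column per discrete action, so it grows quickly once sonar readings, positions, and velocities are discretized, and it cannot represent continuous actions at all.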

Lillicrap et al. [47] proposed the deep deterministic policy gradient (DDPG) algorithm, which builds on DQN and the deterministic policy gradient (DPG). This algorithm shows strong robustness and stability and performs well on control tasks with high-dimensional continuous action spaces. It has been demonstrated on more than 20 complex control tasks, but it has not been applied to AUV path planning and obstacle avoidance. Therefore, this paper studies path planning and obstacle avoidance for AUVs in unknown underwater canyons based on the DDPG algorithm.

The remainder of this paper is organized as follows: In Section 2, four mathematical models required for AUV navigation in unknown underwater canyons are established. In Section 3, the path planning and obstacle avoidance algorithm is designed. In Section 4, simulation tests of path planning and obstacle avoidance in unknown canyons are discussed. In Section 5, the study is concluded.

3. SumTree-DDPG Algorithm

This study uses an RL method based on DDPG. Unlike traditional value-based RL, this method searches for the policy directly and can therefore be applied to a continuous, high-dimensional action space. DDPG is an actor-critic algorithm. This section introduces four aspects, namely the critic, the actor, the reward function, and the replay memory, and proposes an improved DDPG algorithm (SumTree-DDPG) for AUV path planning and obstacle avoidance.

3.1. Critic

The critic is used to fit the state-action value function. It comprises a target Q network and an online Q network, and the two networks are updated alternately. The initial parameters of the two networks are equal. After a mini-batch of data is randomly sampled from the experience buffer pool, the online Q network is updated by minimizing the loss value, which is calculated as shown in Equation (16). In Equation (16), Target Q refers to the target Q value, as shown in Equation (17).

Unlike the online Q network, which is updated in real time, the target Q network is updated at intervals, and its update method is shown in Equation (18), in which the update coefficient is a preset constant.
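The critic update can be sketched in a few lines of NumPy. The sketch follows the standard DDPG formulation (squared-error loss against a bootstrapped target, and a soft target update with a small coefficient) and is an assumption about the form of Equations (16) to (18), not a transcription of them. The names `critic_targets`, `critic_loss`, `soft_update`, the terminal flag `dones`, and the default values of `gamma` and `tau` are hypothetical.

```python
import numpy as np


def critic_targets(rewards, next_q_target, dones, gamma=0.99):
    """Target Q value: reward plus the discounted target-network estimate.

    next_q_target holds the target Q network's value of the target policy's
    action in the next state; dones (assumed) switches off bootstrapping
    at terminal steps.
    """
    return rewards + gamma * (1.0 - dones) * next_q_target


def critic_loss(q_online, targets):
    """Mean squared error between the target Q values and the online Q estimates."""
    return float(np.mean((targets - q_online) ** 2))


def soft_update(target_params, online_params, tau=0.001):
    """Move each target parameter a fraction tau toward the online parameter."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online_params, target_params)]
```

Keeping `tau` small makes the target network change slowly, which is what stabilizes the bootstrapped target used in the loss.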

3.2. Actor

In the DDPG algorithm, a parameterized policy network is used to represent the deterministic policy. The actor is used to fit the policy function; its main task is to output a deterministic action for each input state. The update of the online policy network parameters is shown in Equation (19).

In Equation (19), the state follows a given state distribution, and the parameter being updated is that of the online policy network. The target policy network is updated in the same way as the target Q network, at intervals, as shown in Equation (20), in which the update coefficient is a preset constant.
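The actor update can be illustrated with a deliberately small case. The sketch below assumes the usual sampled deterministic policy gradient (the action-gradient of the critic chained with the parameter-gradient of the policy) and a linear scalar policy, which is far simpler than the networks used in this paper. The names `actor_gradient` and `actor_step` and the learning rate `lr` are hypothetical.

```python
import numpy as np


def actor_gradient(states, dq_da):
    """Sampled policy gradient for a linear scalar policy mu(s) = theta . s.

    states: (N, d) batch of states; dq_da: (N,) critic gradients dQ/da
    evaluated at a = mu(s). Because d mu / d theta = s, the chain rule
    gives the batch mean of dq_da[i] * states[i].
    """
    return (dq_da[:, None] * states).mean(axis=0)


def actor_step(theta, states, dq_da, lr=1e-3):
    """One gradient-ascent step that raises the critic's value of the policy's actions."""
    return theta + lr * actor_gradient(states, dq_da)
```

With neural networks the same chain rule is applied by automatic differentiation; only the policy parameterization changes.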

3.3. Reward Function Design

The reward function plays an important role in RL tasks because it determines the direction in which the network parameters of the actor and the critic are updated [50]. The reward function in this study is designed mainly according to the large-scale continuous static obstacle avoidance strategy in Section 2.2.1 and the multiple dynamic obstacle avoidance strategy in Section 2.2.2. For the AUV obstacle avoidance problem, this paper designs a reward function that considers three aspects: goal, safety, and stability.

The AUV's tendency to move toward the target is reflected in the reward value of the target module, which is the first component of the reward value. This study uses the attractive (gravitational) potential field function in Section 2.2.2 to set it. The target module reward value function is given in Equation (21), which is defined in terms of the coordinates of the center of the target area and the coordinates of the center of the AUV at the current time, both in the Cartesian coordinate system.

When the AUV reaches the target area, the reward value of the target module is updated as in Equation (22), in which the reward is a positive constant.
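One plausible shape for a target module reward of this kind is sketched below. The exact functional form is an assumption: the sketch uses a penalty proportional to the Euclidean distance to the target center, in the spirit of an attractive potential, and a fixed positive reward once the target area is reached. The names `target_reward`, `goal_radius`, `k_att`, and `goal_bonus` and their default values are hypothetical.

```python
import math


def target_reward(auv_xy, goal_xy, goal_radius=5.0, k_att=0.01, goal_bonus=10.0):
    """Assumed target-module reward: distance penalty outside the goal, bonus inside it."""
    dist = math.hypot(goal_xy[0] - auv_xy[0], goal_xy[1] - auv_xy[1])
    if dist <= goal_radius:
        return goal_bonus   # positive constant once the target area is reached
    return -k_att * dist    # less negative as the AUV approaches the target
```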

The AUV’s obstacle avoidance behavior is set as the safety module reward value

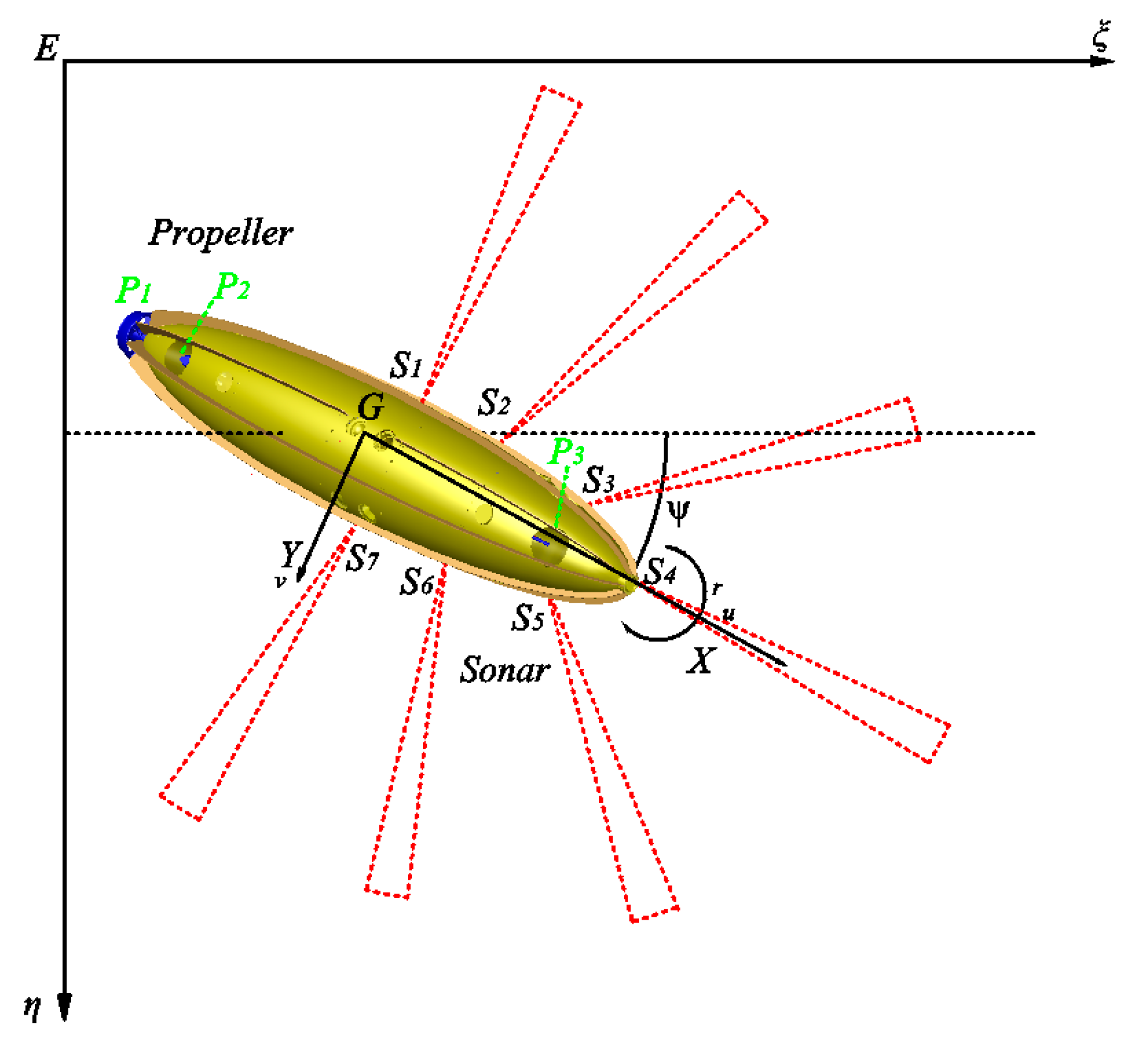

. The obstacles considered in this study include large-scale continuous static obstacle and multiple dynamic obstacles. According to

Section 2.2.1, it is proposed that the distance between the seven sonars controlling AUV and the large-scale continuous static obstacles detected is always greater than or equal to the safe radius of AUV, so that the large-scale continuous static obstacles can be avoided. According to

Section 2.2.2, the method of setting the scope of repulsion potential field of AUV is proposed to avoid collision with dynamic obstacles. Combined with the two obstacle avoidance strategies, the second component of the safety module reward value

is shown in the Equation (23):

where

is the reward value of the safety module; and

is the first component of the safety module

, which is used to avoid large-scale continuous static obstacles.

is the second component of safety module

, which is used to avoid small dynamic obstacles.

The first component of the safety module is set as follows. When the minimum detection distance of the AUV's seven sonar probes at the current time step is more than twice the safe distance, the AUV is safe and the reward value is 0. When the minimum detection distance is no longer above that threshold but is still not less than the safe distance, the AUV is about to collide with the large-scale continuous static obstacle and receives a continuous negative reward. When the minimum detection distance is less than the safe distance, the AUV has collided with the large-scale continuous static obstacle and receives a fixed negative reward. The resulting expression is given in Equation (24), which is defined in terms of the minimum detection distance between the AUV's seven sonar probes and the large-scale continuous static obstacle at the current time step, the set safety margin, and a positive constant.
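The three cases for static obstacles map directly onto a piecewise function. In the sketch below the zero-reward threshold (twice the safe distance) and the fixed collision penalty follow the description, while the linear ramp used for the continuous negative reward is an assumption, and the safety margin is omitted for brevity. The names `static_safety_reward`, `d_safe`, and `collision_penalty` are hypothetical.

```python
def static_safety_reward(sonar_ranges, d_safe, collision_penalty=10.0):
    """Assumed static-obstacle reward computed from the closest sonar return."""
    d_min = min(sonar_ranges)
    if d_min < d_safe:
        return -collision_penalty   # collision with the static obstacle
    if d_min > 2.0 * d_safe:
        return 0.0                  # safe: no penalty
    # About to collide: ramp linearly from 0 (at 2 * d_safe) down to -1 (at d_safe).
    return -(2.0 * d_safe - d_min) / d_safe
```

Using the minimum over all seven readings means a single close wall on either side is enough to trigger the penalty.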

The second component of the safety module is set as follows. When the AUV's seven sonars detect that the dynamic obstacle has not entered the repulsion area of the AUV, the AUV is safe and the reward value is 0. When the dynamic obstacle enters the repulsion area, the AUV receives a continuous negative reward, and the closer the obstacle is to the AUV, the more negative the reward. If the dynamic obstacle finally reaches the safe radius of the AUV, the two collide and a fixed negative reward is obtained. The resulting expression is given in Equation (25), which is defined in terms of the position coordinates of the AUV and of the dynamic obstacle in the Cartesian coordinate system at the current time, the lengths of the long axis and the short axis obtained after expanding the AUV into an ellipsoid, the set safety margin, and a positive constant.
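A simplified version of the dynamic-obstacle component is sketched below. For brevity it assumes circular safe and repulsion regions instead of the ellipsoid described in the text, and a linear ramp for the continuous negative reward; both are assumptions. The names `dynamic_safety_reward`, `safe_radius`, `repulsion_radius`, and `collision_penalty` are hypothetical.

```python
import math


def dynamic_safety_reward(auv_xy, obs_xy, safe_radius, repulsion_radius, collision_penalty=10.0):
    """Assumed dynamic-obstacle reward with circular safe and repulsion regions."""
    dist = math.hypot(obs_xy[0] - auv_xy[0], obs_xy[1] - auv_xy[1])
    if dist <= safe_radius:
        return -collision_penalty   # obstacle reached the safe radius: collision
    if dist >= repulsion_radius:
        return 0.0                  # obstacle is outside the repulsion area
    # Inside the repulsion area: the closer the obstacle, the more negative the reward.
    return -(repulsion_radius - dist) / (repulsion_radius - safe_radius)
```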

To improve the robustness of the AUV obstacle avoidance method and enhance the ability of the AUV to maintain its heading and speed when it is in a safe local area and approaching the target point, this paper designs the stability reward value function in Equation (26). It gives the stability module reward value under current interference, which is a component of the total reward, at time step t. The two yaw angular velocity terms in Equation (26) are the AUV's yaw angular velocity at the current moment and at the next moment, and the two speed terms are the AUV's speed at the current moment and at the next moment.
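A stability term built from these four quantities can be sketched as a penalty on how much the yaw angular velocity and the speed change between consecutive moments. The absolute-difference form and the gains `k_yaw` and `k_speed` are assumptions made for illustration, and the function name `stability_reward` is hypothetical.

```python
def stability_reward(yaw_rate_now, yaw_rate_next, speed_now, speed_next, k_yaw=1.0, k_speed=1.0):
    """Assumed stability reward: penalize step-to-step changes in yaw rate and speed."""
    yaw_change = abs(yaw_rate_next - yaw_rate_now)
    speed_change = abs(speed_next - speed_now)
    return -(k_yaw * yaw_change + k_speed * speed_change)
```

A reward of this shape is zero when heading rate and speed are held steady, so it only influences the policy when the AUV manoeuvres.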

In this paper, the reward value function used for AUV path planning and obstacle avoidance is shown in Equation (27), in which three coefficients are the weights of the target, safety, and stability factors.

The larger a weight, the more the trained model focuses on the corresponding factor. The specific values need to be set according to the environment and requirements. The pseudocode of the reward function for AUV obstacle avoidance is shown in Algorithm 1.

| Algorithm 1. Reward Algorithm for AUV Obstacle Avoidance |
| --- |
| 1: Initialize the reward value |
| 2: Take the action and observe the new state |
| 3: Get the stability reward value from the stability reward value function |
| 4: if transition from safe region to safe region |
| 5: then compute the reward value of the target module from the coordinates of the center of the target area and the coordinates of the center of the AUV at the current time |
| 6: else if transition from safe region to unsafe region |
| 7: if AUV encounters large-scale continuous obstacle |
| 8: then compute the first component of the safety module reward value |
| 9: else if AUV encounters multi-dynamic obstacle |
| 10: then compute the second component of the safety module reward value |
| 11: else if transition from unsafe region to obstacle region |
| 12: then assign the collision reward value and restart the exploration |
| 13: else if transition from unsafe region to safe region |
| 14: then |
| 15: if transition from safe region to goal region |
| 16: then set the reward value of the target module to the positive constant |
| 17: Compute the total reward as the weighted sum of the module reward values, where the three coefficients are the weights of the various factors |
| 18: end |
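The final weighting step can be sketched as a plain weighted sum of the three module rewards. The function name `total_reward` and the default weights are illustrative assumptions; as stated above, suitable weights depend on the environment and requirements.

```python
def total_reward(r_target, r_safety, r_stability, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the target, safety, and stability module rewards."""
    w_target, w_safety, w_stability = weights
    return w_target * r_target + w_safety * r_safety + w_stability * r_stability
```

Raising the safety weight relative to the others, for example, makes collisions dominate the return, so the learned policy keeps a wider clearance at the cost of a longer path.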

3.4. Replay Memory

The DDPG algorithm uses experience replay: the experience samples generated by the agent's interaction with the environment are stored in the experience buffer pool, and samples are drawn from it at random to train the network. Random sampling considers neither the differing importance of the data nor the diversity of the samples drawn, which slows model convergence. To solve this problem, the sample storage and extraction strategy in this paper draws samples by priority, according to the importance of the data, which improves the convergence speed of the model.

In this article, mini-batches are therefore sampled not at random but according to the sample priority in the memory bank, so that the samples most in need of learning are found more effectively. In the DDPG algorithm, the parameters of the policy network depend on the value network, and the parameters of the value network are determined by its loss function. The sample priority can therefore be defined by the expectation of the difference between the target Q value and the actual Q value. The greater this difference, the lower the prediction accuracy of the current network parameters on that sample, that is, the more the sample needs to be learned and the higher its priority. Given the priorities, this article uses the SumTree method to sample efficiently. The SumTree method does not sort the stored samples, which reduces the computational cost compared with a sorting-based approach.

SumTree is a tree structure (Figure 5). The priority of each sample is stored in a leaf node, and each leaf node corresponds to an index value through which the corresponding sample can be accessed. Every two leaf nodes share a parent node on the level above, and the priority of a parent node equals the sum of the priorities of its left and right child nodes. Thus, the root node at the top of the SumTree holds the sum of the priorities of all samples.

When sampling, this study first divides the priority of the root node (the sum of the priorities of all leaf nodes) by the number of samples N, which splits the range from 0 to the total priority into N equal intervals. A number is then drawn at random from each interval. Because a leaf with higher priority occupies a longer portion of the range, it is more likely to be drawn, which achieves priority sampling. Each time a leaf node is drawn, its priority and the corresponding sample pool data are returned. In this way, N samples are collected from the SumTree, and the sampling probability and weight of each sample are given by Equations (28) and (29), respectively.
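The storage and interval-based sampling described here can be sketched with an array-backed binary tree. The class below is a minimal illustration, not the implementation used in this study. The two helper functions assume the commonly used prioritized-replay forms (probability proportional to priority, and a weight that is a negative power of buffer size times probability), which may differ from Equations (28) and (29); the exponent `beta` is likewise an assumption.

```python
import random


class SumTree:
    """Binary tree whose leaves hold priorities and whose internal nodes hold sums."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.tree = [0.0] * (2 * capacity - 1)  # internal nodes first, then leaves
        self.data = [None] * capacity
        self.write = 0                           # next leaf slot to overwrite

    def total(self):
        return self.tree[0]                      # root = sum of all priorities

    def update(self, leaf, priority):
        idx = leaf + self.capacity - 1
        change = priority - self.tree[idx]
        self.tree[idx] = priority
        while idx > 0:                           # propagate the change up to the root
            idx = (idx - 1) // 2
            self.tree[idx] += change

    def add(self, priority, sample):
        self.data[self.write] = sample
        self.update(self.write, priority)
        self.write = (self.write + 1) % self.capacity  # overwrite oldest when full

    def get(self, value):
        idx = 0
        while idx < self.capacity - 1:           # descend while idx is an internal node
            left = 2 * idx + 1
            if value <= self.tree[left]:
                idx = left
            else:
                value -= self.tree[left]
                idx = left + 1
        leaf = idx - (self.capacity - 1)
        return leaf, self.tree[idx], self.data[leaf]

    def sample(self, n):
        segment = self.total() / n               # one random draw per equal interval
        return [self.get(random.uniform(i * segment, (i + 1) * segment)) for i in range(n)]


def sampling_probability(priority, total_priority):
    """Assumed form: probability proportional to the sample's priority."""
    return priority / total_priority


def importance_weight(prob, buffer_size, beta=0.4):
    """Assumed form: weight that compensates for non-uniform sampling."""
    return (buffer_size * prob) ** (-beta)
```

Both `update` and `get` touch only one root-to-leaf path, so their cost grows with the logarithm of the buffer size, which is the saving over keeping the buffer sorted.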

By improving the DDPG experience replay in this way and combining it with Algorithm 1, the algorithm for AUV path planning and obstacle avoidance is obtained, which we call SumTree-DDPG (Algorithm 2).

| Algorithm 2. SumTree-DDPG Algorithm |
| --- |
| 1: Randomly initialize the critic network and the actor network with their weights |
| 2: Initialize the target critic and target actor networks with the same weights |
| 3: Initialize the SumTree and define its capacity |
| 4: for each episode do |
| 5: Initialize a random process for action exploration |
| 6: Receive the initial observation state |
| 7: for each time step do |
| 8: Select an action according to the current policy and exploration noise |
| 9: Take the action and observe the new state |
| 10: Determine the reward using Algorithm 1 |
| 11: Store the transition in the SumTree |
| 12: Sample a minibatch of transitions from the SumTree, drawing each transition according to its sampling probability and assigning it its importance-sampling weight, until the minibatch is complete |
| 13: Set the target Q value |
| 14: Update the critic by minimizing the loss |
| 15: Update the actor policy using the sampled policy gradient |
| 16: Update the target networks |
| 17: Update the transition priorities |
| 18: end for |
| 19: end for |
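The two steps of Algorithm 2 that differ most from plain DDPG, the critic loss over a prioritized minibatch and the priority update, can be sketched as follows. Scaling each squared error by its importance-sampling weight and setting the new priority to the absolute TD error plus a small constant are common choices in prioritized replay and are assumptions here. The names `weighted_critic_loss`, `new_priorities`, and `epsilon` are hypothetical.

```python
import numpy as np


def weighted_critic_loss(q_online, targets, is_weights):
    """Importance-weighted mean squared TD error; also returns the TD errors."""
    td_errors = targets - q_online
    loss = float(np.mean(is_weights * td_errors ** 2))
    return loss, td_errors


def new_priorities(td_errors, epsilon=1e-2):
    """Assumed priority rule: larger TD error means higher priority; epsilon keeps it positive."""
    return np.abs(td_errors) + epsilon
```

The returned priorities would then be written back to the leaves of the SumTree for the sampled transitions, so that poorly predicted transitions are drawn more often in later minibatches.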

In addition, the structure diagram of the SumTree-DDPG algorithm applied to AUV online path planning is shown in Figure 6.

[Figure legend: walls of the unknown underwater canyon; target area; dynamic obstacle (where present); AUV.]