Autonomous Underwater Vehicles and Field of View in Underwater Operations

Abstract

1. Introduction

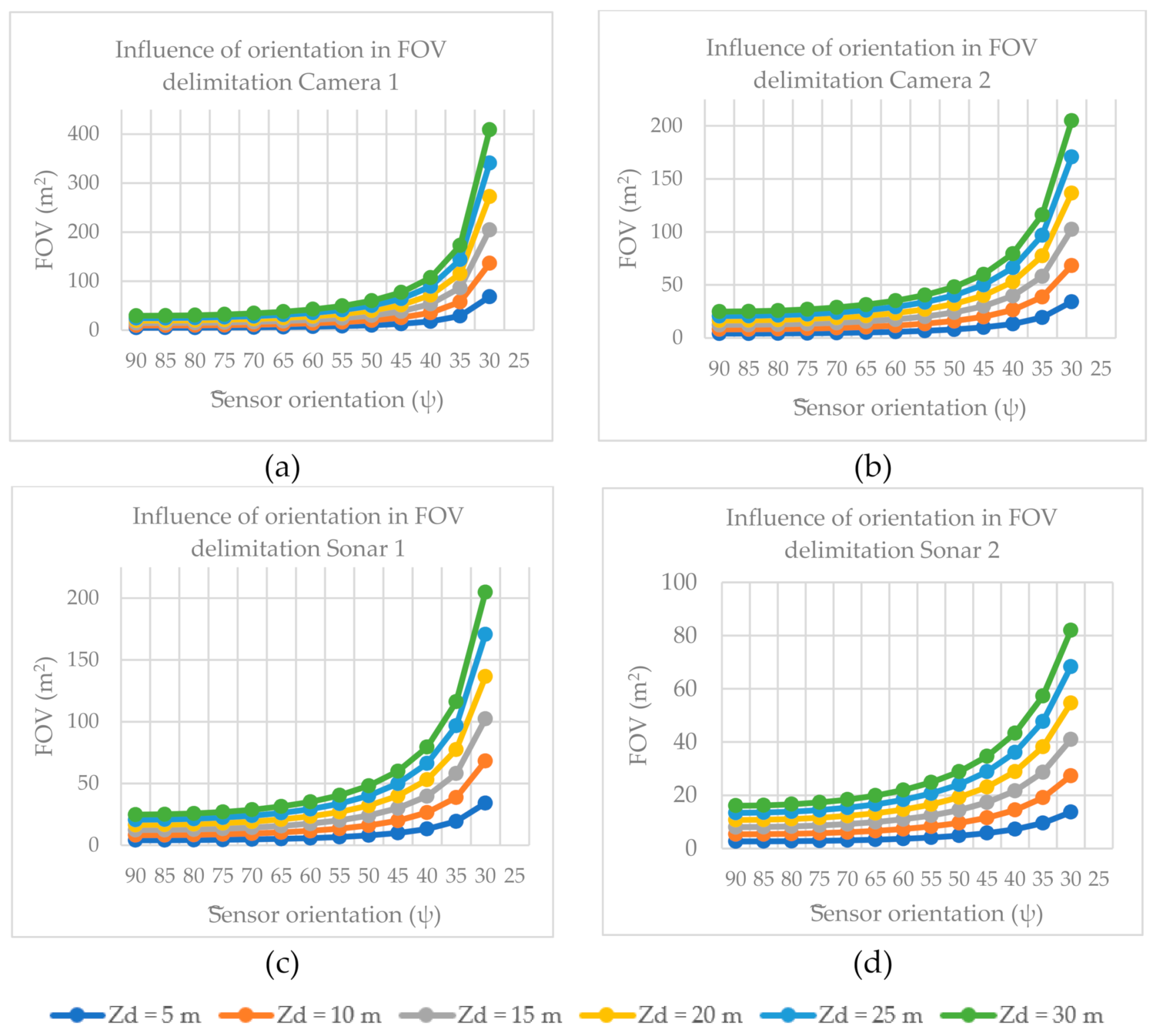

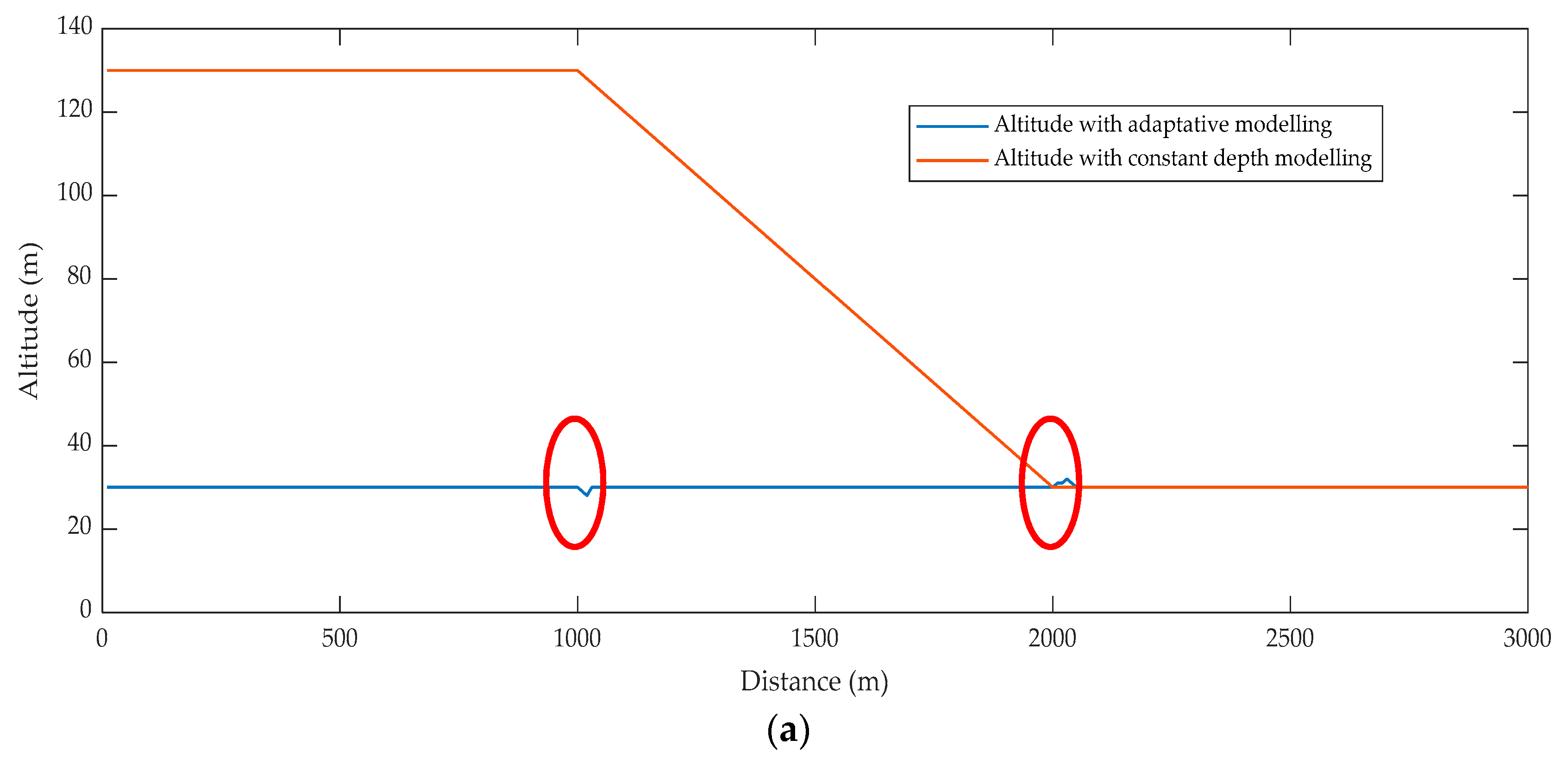

2. Approach and FOV Definition

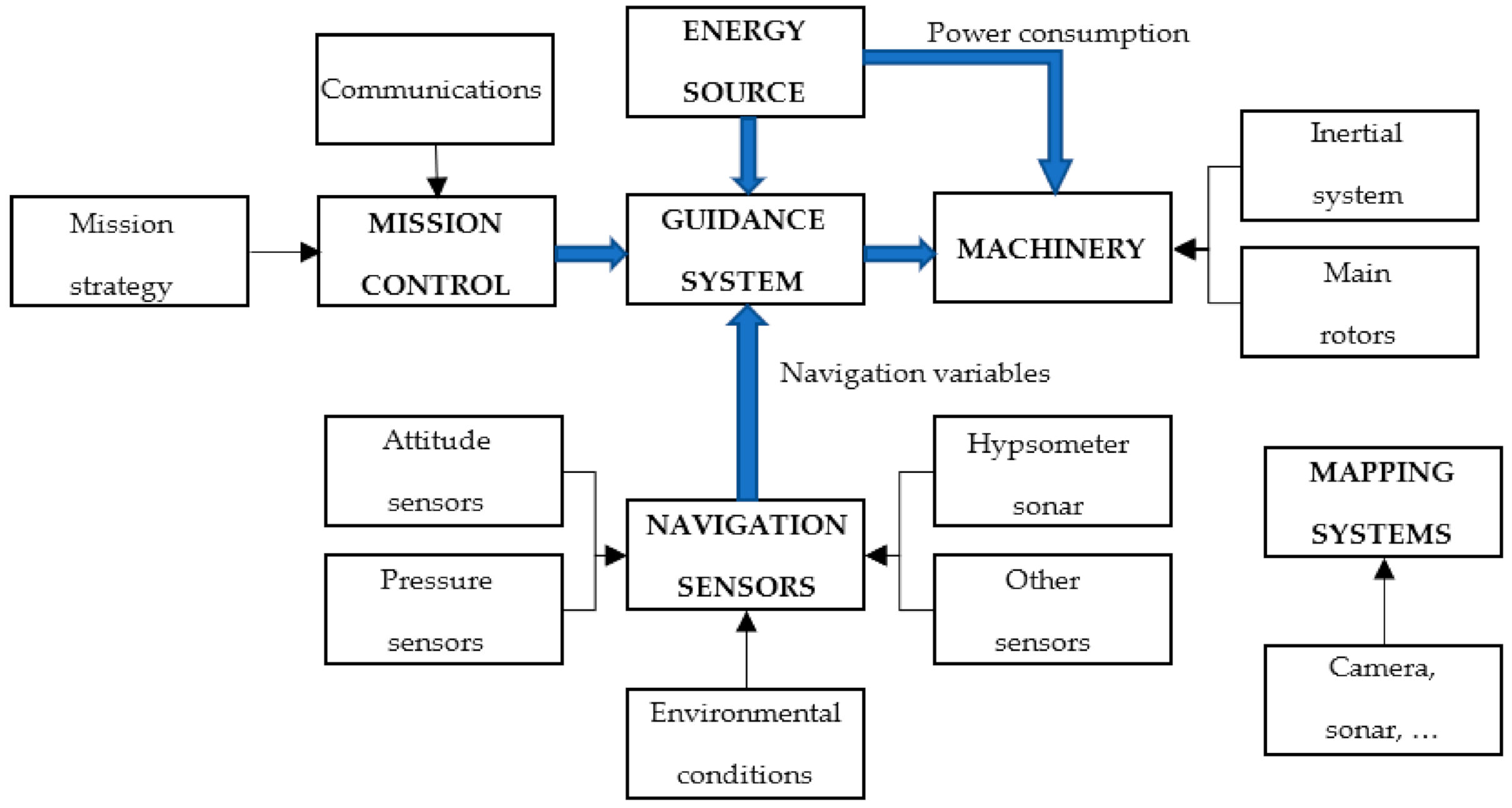

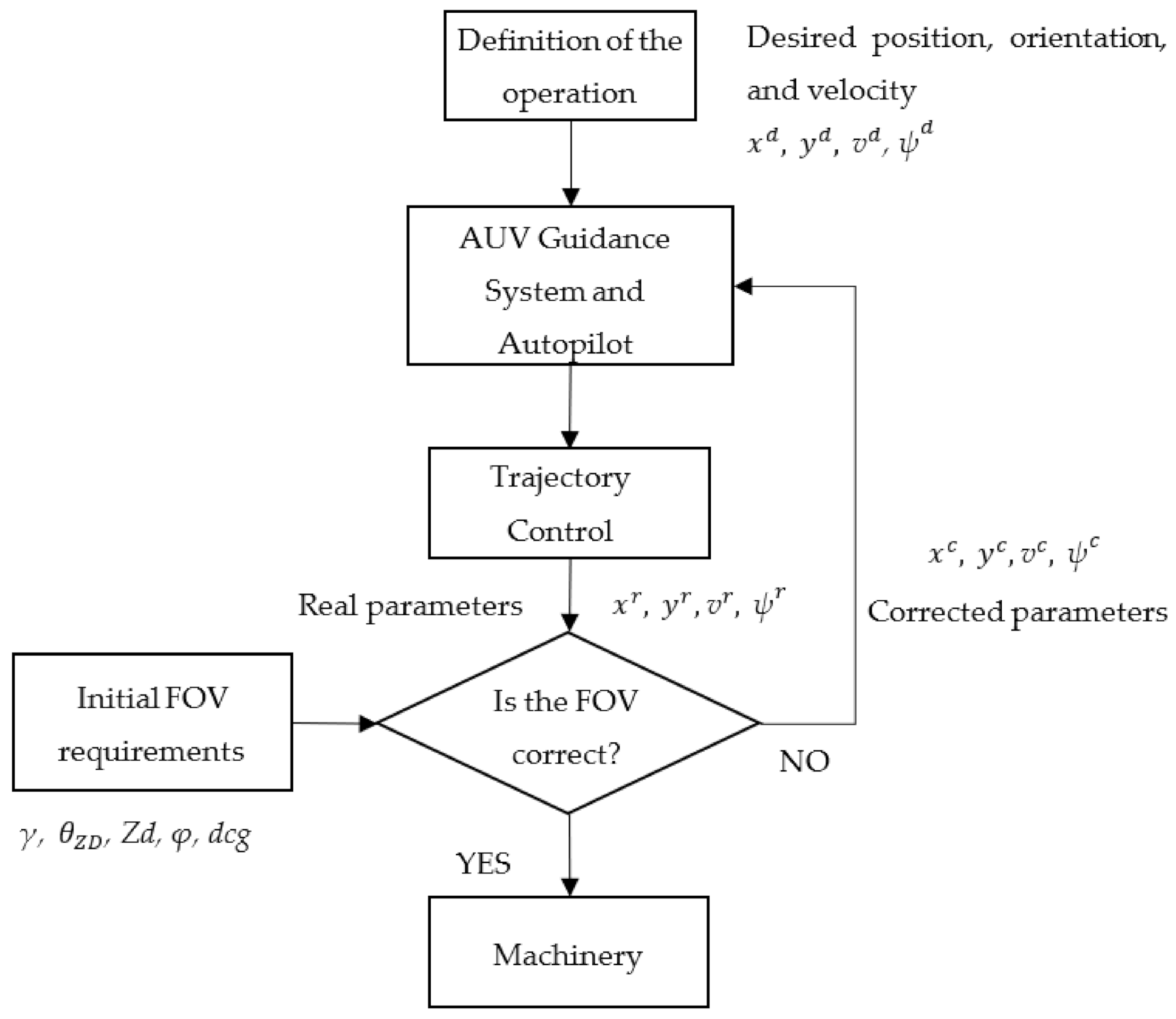

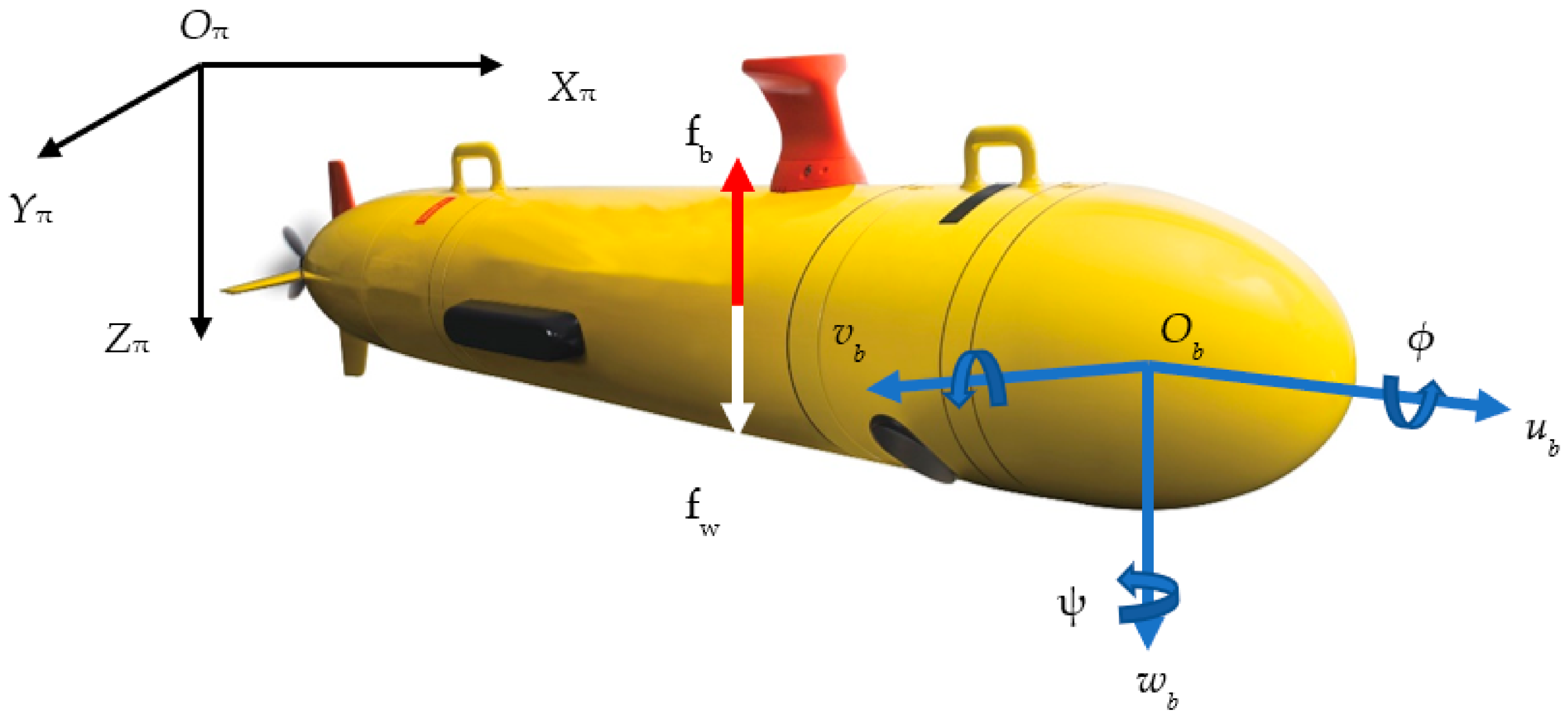

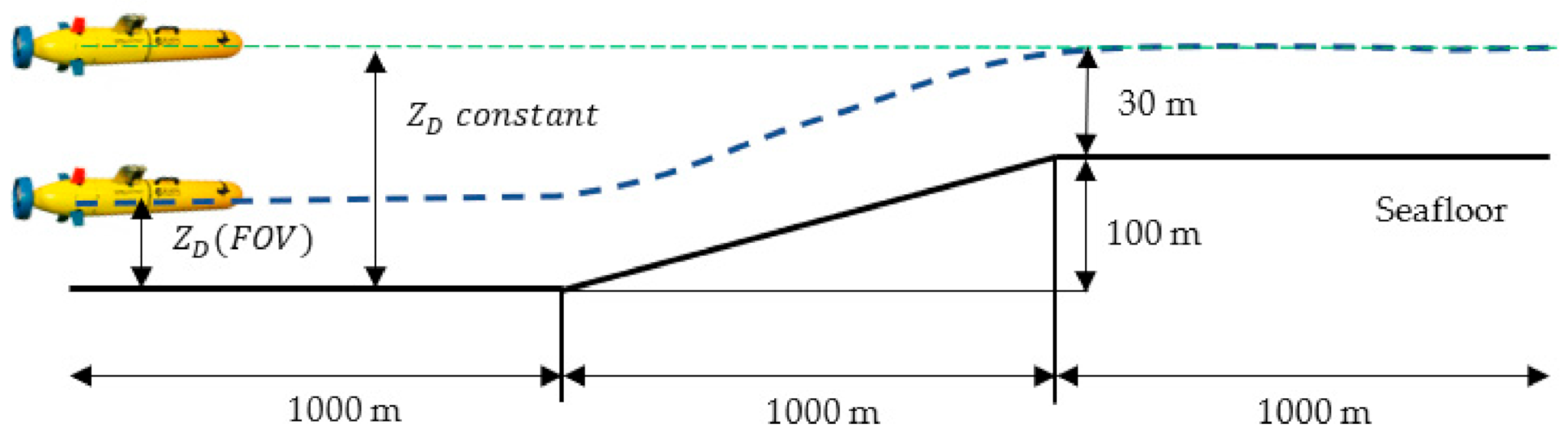

2.1. AUV Guidance System

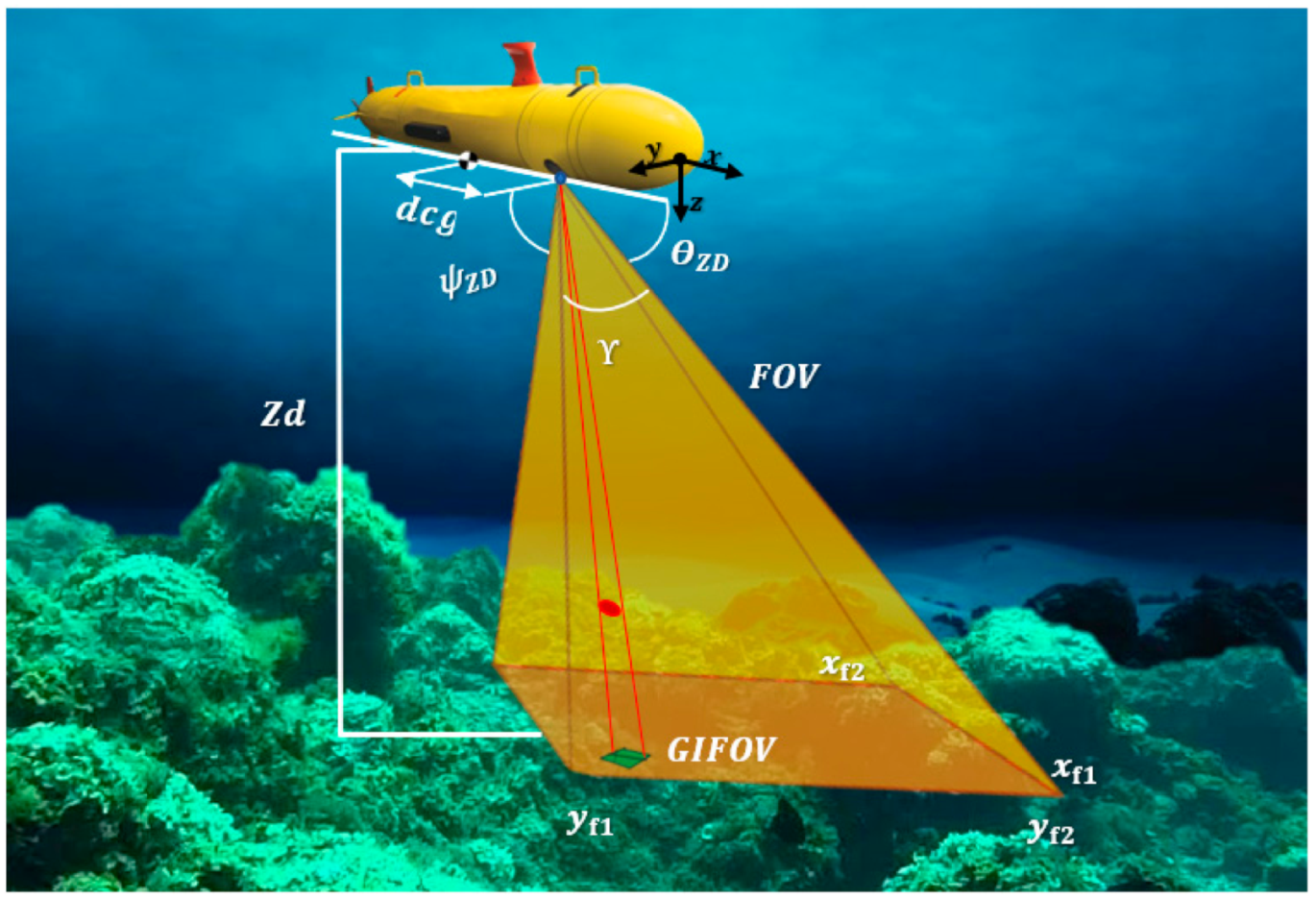

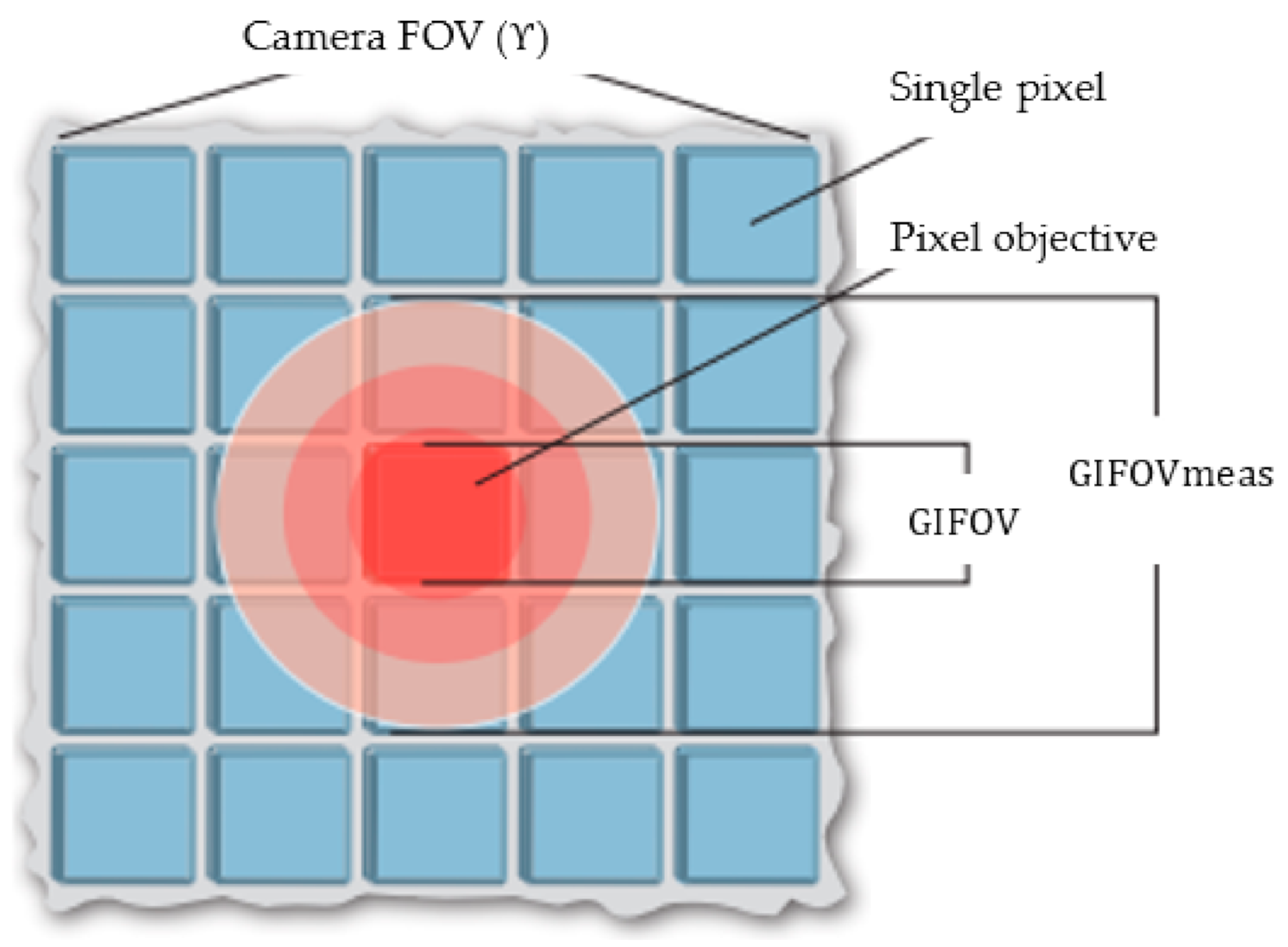

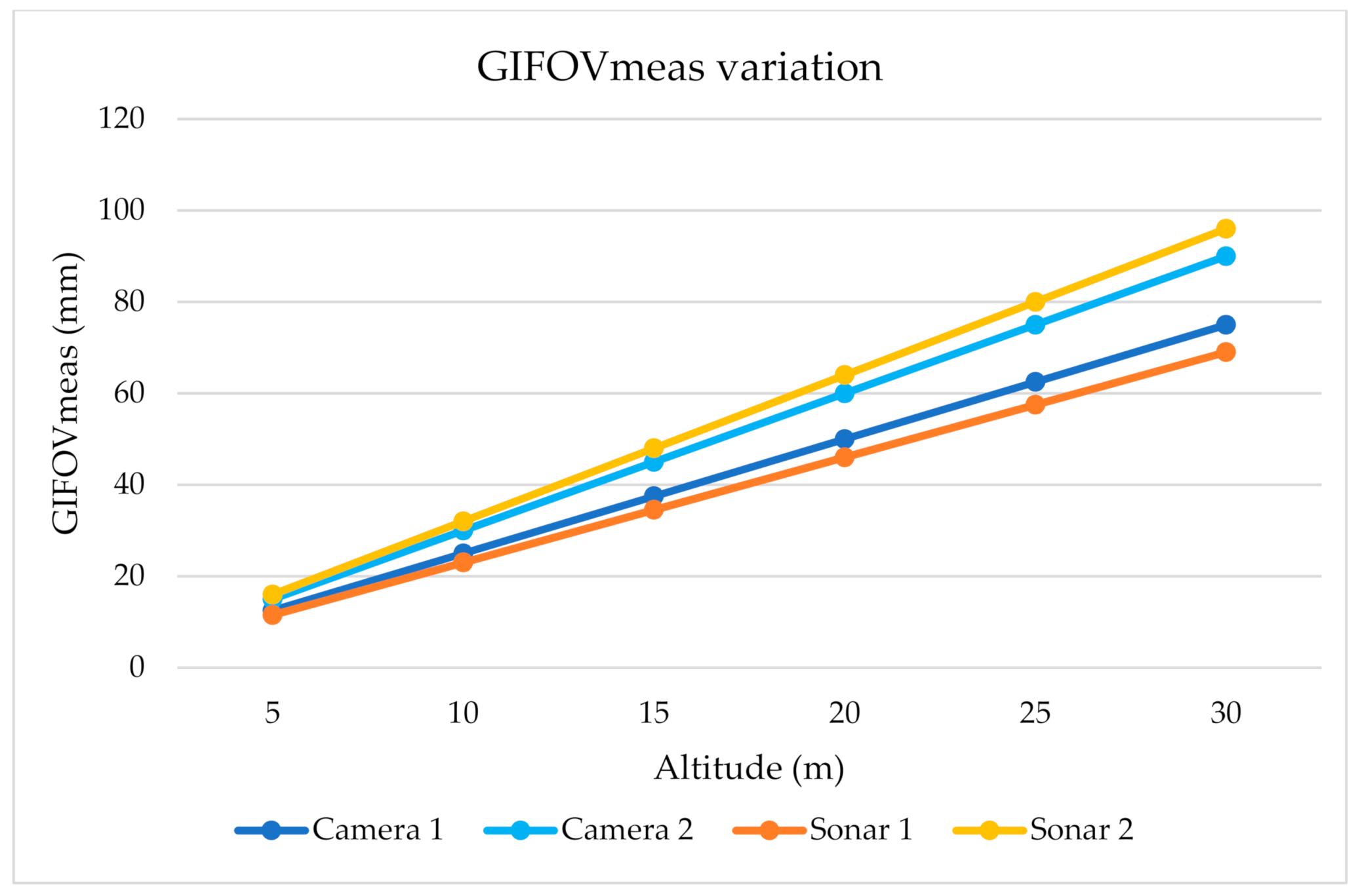

2.2. Determination of Underwater FOV

3. Case Studies and Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Year | Data Acquisition System | Model | Ground Resolution (mm) | Sensor FOV (γ) |
|---|---|---|---|---|
| 2014 | Sonar 1 | BlueView P900-45 (Sonar) | 2, 3 | 45° |
| 2010 | Camera 1 | AVT Prosilica (Camera) | 2, 5 | 52° |
| 2014 | Sonar 2 | ARIS Explorer 3000 (Sonar) | 3, 2 | 30° |
| 2015 | Camera 2 | AVT Prosilica GC 1380 (Camera) | 3 | 45° |
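
To give a feel for what the sensor FOV values in the table mean in practice, the following minimal sketch converts an FOV angle into the width of seabed covered at a given altitude. It uses the standard flat-ground relation w = 2·h·tan(γ/2) and is not necessarily the formulation used in the article. It assumes a flat seabed, a sensor pointing straight down, and γ interpreted as the full opening angle; the function names and the 5 m altitude in the usage note are hypothetical and chosen only for illustration.

```python
import math

# Sensor FOV angles in degrees, copied from the table above.
SENSOR_FOV_DEG = {
    "Sonar 1": 45.0,
    "Camera 1": 52.0,
    "Sonar 2": 30.0,
    "Camera 2": 45.0,
}


def footprint_width(altitude_m: float, fov_deg: float) -> float:
    """Return the seabed width (m) seen by a downward-looking sensor.

    Assumes a flat seabed and that fov_deg is the full opening angle,
    so the footprint is w = 2 * h * tan(fov / 2).
    """
    if altitude_m < 0:
        raise ValueError("altitude must be non-negative")
    if not 0.0 < fov_deg < 180.0:
        raise ValueError("FOV must lie strictly between 0 and 180 degrees")
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def footprints_at(altitude_m: float) -> dict[str, float]:
    """Footprint width for every sensor in the table at one altitude."""
    return {
        name: footprint_width(altitude_m, fov)
        for name, fov in SENSOR_FOV_DEG.items()
    }


if __name__ == "__main__":
    # Hypothetical altitude of 5 m above the seabed, for illustration only.
    for name, width in footprints_at(5.0).items():
        print(f"{name}: {width:.2f} m")
```

Under these assumptions the footprint grows linearly with altitude, so a wider FOV or a higher pass covers more seabed per track, typically at the cost of coarser ground resolution.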

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ramírez, I.S.; Bernalte Sánchez, P.J.; Papaelias, M.; Márquez, F.P.G. Autonomous Underwater Vehicles and Field of View in Underwater Operations. J. Mar. Sci. Eng. 2021, 9, 277. https://doi.org/10.3390/jmse9030277
