An Anomaly Detection Model for Oil and Gas Pipelines Using Machine Learning

Abstract

:1. Introduction

- An automated system is developed to identify anomalies in the oil and gas pipeline;

- A comparison of five ML algorithms to detect pipeline leakage using industrial datasets is performed;

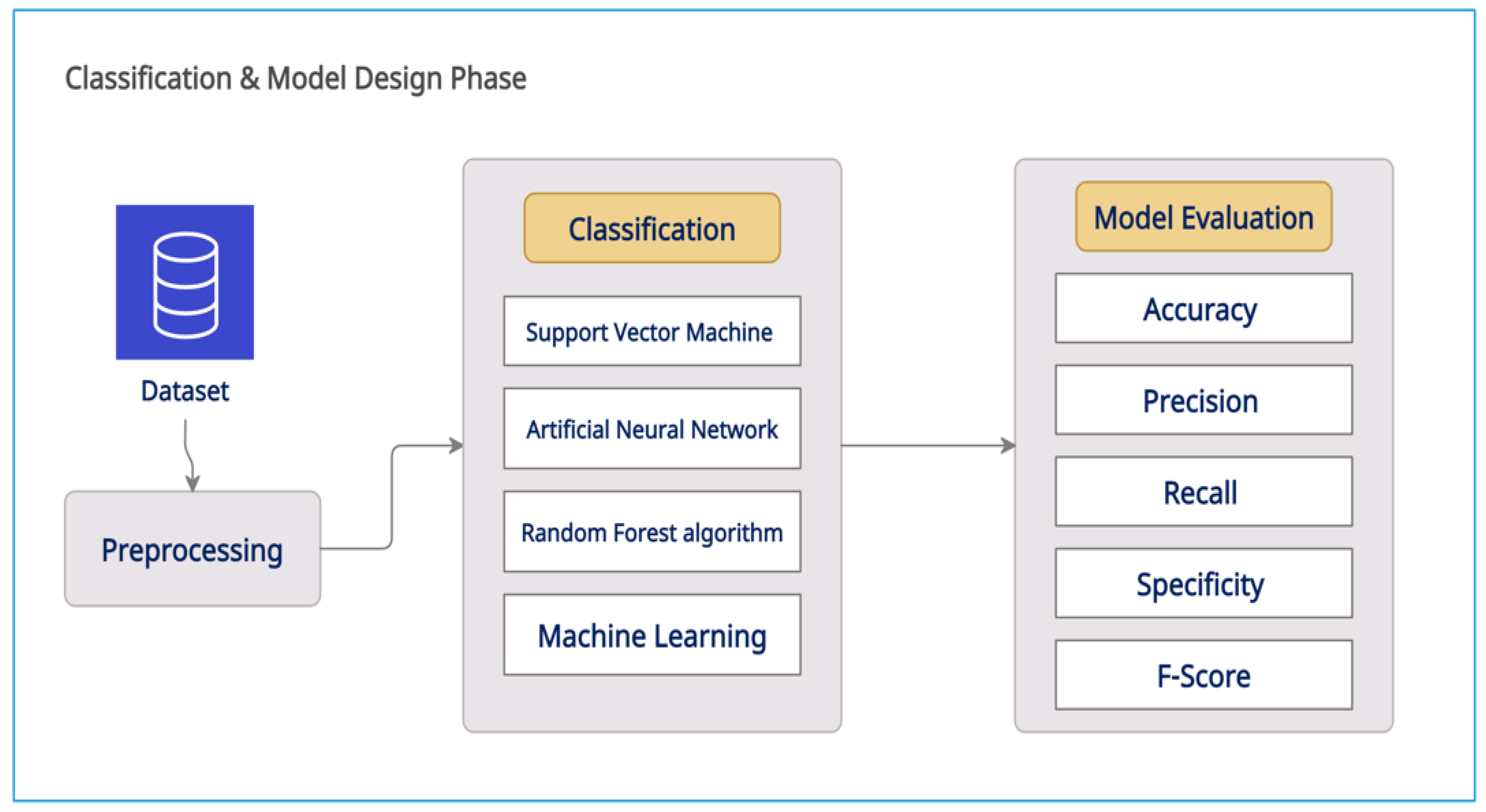

- Evaluation methodology in terms of accuracy, precision, recall, F1-score, accuracy, and ROC-AUC is proposed;

- An optimisation technique is used to increase the performance of the proposed models.

2. Related Work

3. Methodology

3.1. Data Collection

3.2. Data Preprocessing

3.2.1. Label Binarizing

3.2.2. Features Scaling

3.3. Classification and Model Design

3.3.1. Support Vector Machine

3.3.2. Decision Tree

3.3.3. Random Forest

3.3.4. K-Nearest Neighbour

3.3.5. Gradient Boosting

3.4. Parameter Tuning

Grid Search

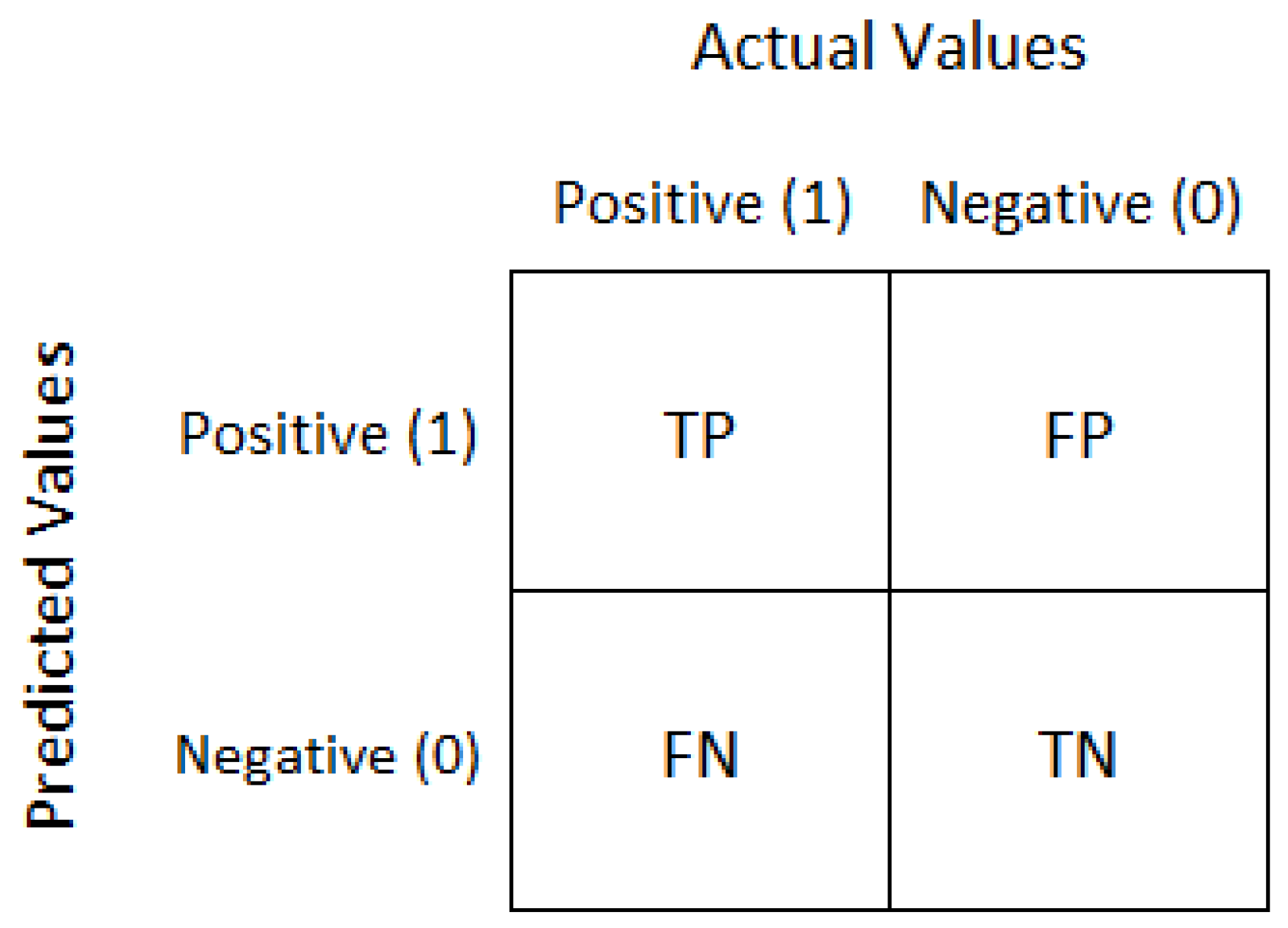

3.5. Evaluation Metrics

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Nooralishahi, P.; López, F.; Maldague, X. A Drone-Enabled Approach for Gas Leak Detection Using Optical Flow Analysis. Appl. Sci. 2021, 11, 1412. [Google Scholar] [CrossRef]

- Meribout, M.; Khezzar, L.; Azzi, A.; Ghendour, N. Leak detection systems in oil and gas fields: Present trends and future prospects. Flow Meas. Instrum. 2020, 75, 101772. [Google Scholar] [CrossRef]

- What Is Artificial Intelligence (AI)? Oracle Saudi Arabia. Available online: https://www.oracle.com/sa/artificial-intelligence/what-is-ai/ (accessed on 3 June 2022).

- Wang, F.; Liu, Z.; Zhou, X.; Li, S.; Yuan, X.; Zhang, Y.; Shao, L.; Zhang, X. (INVITED)Oil and Gas Pipeline Leakage Recognition Based on Distributed Vibration and Temperature Information Fusion. Results Opt. 2021, 5, 100131. [Google Scholar] [CrossRef]

- Xiao, R.; Hu, Q.; Li, J. Leak detection of gas pipelines using acoustic signals based on wavelet transform and Support Vector Machine. Measurement 2019, 146, 479–489. [Google Scholar] [CrossRef]

- A Convolutional Neural Network Based Solution for Pipeline Leak Detection (PDF). Available online: https://www.researchgate.net/publication/337060339_A_Convolutional_Neural_Network_Based_Solution_for_Pipeline_Leak_Detection (accessed on 30 March 2022).

- De Kerf, T.; Gladines, J.; Sels, S.; Vanlanduit, S. Oil Spill Detection Using Machine Learning and Infrared Images. Remote Sens. 2020, 12, 4090. [Google Scholar] [CrossRef]

- IEEE Xplore Full-Text PDF. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=9226415 (accessed on 30 March 2022).

- Lu, J.; Yue, J.; Jiang, C.; Liang, H.; Zhu, L. Feature extraction based on variational mode decomposition and support vector machine for natural gas pipeline leakage. Trans. Inst. Meas. Control 2020, 42, 759–769. [Google Scholar] [CrossRef]

- Melo, R.O.; Costa, M.G.F.; Costa Filho, C.F.F. Applying convolutional neural networks to detect natural gas leaks in wellhead images. IEEE Access 2020, 8, 191775–191784. [Google Scholar] [CrossRef]

- Abimbola-Ai/Oil-and-Gas-Pipeline-Leakage. Available online: https://github.com/Abimbola-ai/Oil-and-gas-pipeline-leakage (accessed on 21 November 2021).

- Kotsiantis, S.B.; Kanellopoulos, D. Data preprocessing for supervised leaning. Int. J. 2011, 60, 143–151. [Google Scholar] [CrossRef]

- Binarize Label Hivemall User Manual. Available online: https://hivemall.apache.org/userguide/ft_engineering/binarize.html (accessed on 2 March 2022).

- Machine Learning: When to Perform a Feature Scaling—Atoti. Available online: https://www.atoti.io/when-to-perform-a-feature-scaling/ (accessed on 21 November 2021).

- Feature Scaling Standardization vs. Normalization. Available online: https://www.analyticsvidhya.com/blog/2020/04/feature-scaling-machine-learning-normalization-standardization/ (accessed on 21 November 2021).

- Splitting a Dataset. Here I Explain How to Split Your Data… by Nischal Madiraju towards Data Science. Available online: https://towardsdatascience.com/splitting-a-dataset-e328dab2760a (accessed on 19 November 2021).

- Train-Test Split for Evaluating Machine Learning Algorithms. Available online: https://machinelearningmastery.com/train-test-split-for-evaluating-machine-learning-algorithms/ (accessed on 19 November 2021).

- OpenML. Available online: https://www.openml.org/a/estimation-procedures/1 (accessed on 19 November 2021).

- Jakkula, V. Tutorial on Support Vector Machine (SVM). Available online: https://course.ccs.neu.edu/cs5100f11/resources/jakkula.pdf (accessed on 1 July 2022).

- Support Vector Machine—Introduction to Machine Learning Algorithms by Rohith Gandhi Towards Data Science. Available online: https://towardsdatascience.com/support-vector-machine-introduction-to-machine-learning-algorithms-934a444fca47 (accessed on 23 November 2021).

- Negoita, M.; Reusch, B. (Eds.) Real World Applications of Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2005; Volume 179. [Google Scholar] [CrossRef]

- A Quick Introduction to Neural Networks—The Data Science Blog. Available online: https://ujjwalkarn.me/2016/08/09/quick-intro-neural-networks/ (accessed on 22 November 2021).

- Decision Tree Algorithm, Explained—KDnuggets. Available online: https://www.kdnuggets.com/2020/01/decision-tree-algorithm-explained.html (accessed on 2 March 2022).

- So, A.; Hooshyar, D.; Park, K.W.; Lim, H.S. Early Diagnosis of Dementia from Clinical Data by Machine Learning Techniques. Appl. Sci. 2017, 7, 651. [Google Scholar] [CrossRef]

- Visualization of a Random Forest Model Making a Prediction Download Scientific Diagram. Available online: https://www.researchgate.net/figure/21-Visualization-of-a-random-forest-model-making-a-prediction_fig20_341794164 (accessed on 11 April 2022).

- Understanding Random Forest. How the Algorithm Works and Why It Is… by Tony Yiu towards Data Science. Available online: https://towardsdatascience.com/understanding-random-forest-58381e0602d2 (accessed on 21 November 2021).

- Random Forest—Wikipedia. Available online: https://en.wikipedia.org/wiki/Random_forest (accessed on 21 November 2021).

- Random Forest Algorithms: A Complete Guide Built in. Available online: https://builtin.com/data-science/random-forest-algorithm (accessed on 21 November 2021).

- K-Nearest Neighbor Algorithm in Java GridDB: Open Source Time Series Database for IoT by Israel Imru GridDB Medium. Available online: https://medium.com/griddb/k-nearest-neighbor-algorithm-in-java-griddb-open-source-time-series-database-for-iot-6bf934eb8c05 (accessed on 2 March 2022).

- K-Nearest Neighbor (KNN) Algorithm for Machine Learning—Javatpoint. Available online: https://www.javatpoint.com/k-nearest-neighbor-algorithm-for-machine-learning (accessed on 2 March 2022).

- A Beginner’s Guide to Supervised Machine Learning Algorithms by Soner Yıldırım Towards Data Science. Available online: https://towardsdatascience.com/a-beginners-guide-to-supervised-machine-learning-algorithms-6e7cd9f177d5 (accessed on 2 March 2022).

- Hyperparameter Tuning for Machine Learning Models. Available online: https://www.jeremyjordan.me/hyperparameter-tuning/ (accessed on 2 March 2022).

- An Introduction to Grid Search CV What Is Grid Search. Available online: https://www.mygreatlearning.com/blog/gridsearchcv/ (accessed on 2 March 2022).

- Performance Metrics in Machine Learning [Complete Guide]—Neptune.ai. Available online: https://neptune.ai/blog/performance-metrics-in-machine-learning-complete-guide (accessed on 19 November 2021).

- Understanding Confusion Matrix by Sarang Narkhede towards Data Science. Available online: https://towardsdatascience.com/understanding-confusion-matrix-a9ad42dcfd62 (accessed on 20 November 2021).

- Confusion Matrix: Let’s Clear This Confusion by Aatish Kayyath Medium. Available online: https://medium.com/@aatish_kayyath/confusion-matrix-lets-clear-this-confusion-4b0bc5a5983c (accessed on 11 May 2022).

- Performance Metrics for Classification Problems in Machine Learning by Mohammed Sunasra Medium. Available online: https://medium.com/@MohammedS/performance-metrics-for-classification-problems-in-machine-learning-part-i-b085d432082b (accessed on 19 November 2021).

- Classification: ROC Curve and AUC Machine Learning Crash Course Google Developers. Available online: https://developers.google.com/machine-learning/crash-course/classification/roc-and-auc (accessed on 28 February 2022).

| Features | Description |

|---|---|

| Wellhead temp. (°C) | The temperature of the wellhead |

| Wellhead press (psi) | The pressure of the wellhead |

| MMCFD gas | Million standard cubic feet per day of gas |

| BOPD | Barrel of oil produced per day |

| BWPD | Barrel of water produced per day |

| BSW | Basic solid and water |

| CO2 mol. | Molecular mass of CO2 |

| Gas Grav. | Gas gravity |

| CR | Corrosion defect |

| Model | Parameter | Optimal Value |

|---|---|---|

| Random forest | bootstrap | True |

| Max_depth | Not specified | |

| Max_features | All | |

| N_estimators | 100 | |

| Support vector machine | C | 1000 |

| Gamma | 0.1 | |

| Kernal | Rbf | |

| K-nearest Neighbour | N_neighbors | 21 |

| Gradient boosting | Max_depth | Not specified |

| Max_features | Log2 | |

| N_estimators | 15 | |

| Decision tree | Criterion | Entropy |

| Max_depth | 150 |

| Classifier | Precision | Recall | F1-Score | Accuracy | ROC-AUC |

|---|---|---|---|---|---|

| RF | 0.92 | 0.92 | 0.92 | 91.56% | 0.91 |

| SVM | 0.96 | 0.96 | 0.96 | 96.1% | 0.96 |

| KNN | 0.87 | 0.87 | 0.87 | 87.13% | 0.87 |

| GB | 0.87 | 0.87 | 0.87 | 87.39% | 0.87 |

| DT | 0.84 | 0.84 | 0.84 | 83.65% | 0.84 |

| Classifier | Precision | Recall | F1-Score | Accuracy | ROC-AUC |

|---|---|---|---|---|---|

| RF | 0.92 | 0.92 | 0.92 | 91.81% | 0.92 |

| SVM | 0.97 | 0.97 | 0.97 | 97.43% | 0.97 |

| KNN | 0.89 | 0.89 | 0.89 | 89.37% | 0.89 |

| GB | 0.90 | 0.90 | 0.90 | 90.25% | 0.90 |

| DT | 0.85 | 0.85 | 0.85 | 84.97% | 0.85 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aljameel, S.S.; Alomari, D.M.; Alismail, S.; Khawaher, F.; Alkhudhair, A.A.; Aljubran, F.; Alzannan, R.M. An Anomaly Detection Model for Oil and Gas Pipelines Using Machine Learning. Computation 2022, 10, 138. https://doi.org/10.3390/computation10080138

Aljameel SS, Alomari DM, Alismail S, Khawaher F, Alkhudhair AA, Aljubran F, Alzannan RM. An Anomaly Detection Model for Oil and Gas Pipelines Using Machine Learning. Computation. 2022; 10(8):138. https://doi.org/10.3390/computation10080138

Chicago/Turabian StyleAljameel, Sumayh S., Dorieh M. Alomari, Shatha Alismail, Fatimah Khawaher, Aljawharah A. Alkhudhair, Fatimah Aljubran, and Razan M. Alzannan. 2022. "An Anomaly Detection Model for Oil and Gas Pipelines Using Machine Learning" Computation 10, no. 8: 138. https://doi.org/10.3390/computation10080138

APA StyleAljameel, S. S., Alomari, D. M., Alismail, S., Khawaher, F., Alkhudhair, A. A., Aljubran, F., & Alzannan, R. M. (2022). An Anomaly Detection Model for Oil and Gas Pipelines Using Machine Learning. Computation, 10(8), 138. https://doi.org/10.3390/computation10080138