1. Introduction

Breast cancer is one of the most common malignancies among women worldwide. Early diagnosis, prognosis, and correct treatment of breast cancer can improve patients’ life expectancy. According to a fact sheet by the World Health Organization (WHO), around 2.3 million women were diagnosed with breast cancer in 2020, and there were approximately 685,000 deaths globally due to this disease. It accounted for around 10.7% of deaths among all major cancer types in 2020 [1].

Due to digitalization, there has been tremendous advancement in the modalities used for breast cancer screening. The most common modalities are mammography, magnetic resonance imaging (MRI), breast ultrasound, positron emission tomography (PET), and histopathological analysis of breast tissue [2]. Mammography is X-ray imaging of the breasts and is usually prescribed when a physical examination reveals a suspicious mass. Breast ultrasound is a non-invasive screening method that is usually helpful in the case of dense breast tissue. Breast MRI also takes several images and is usually prescribed along with other diagnostic tests such as mammography or ultrasound. Breast cancer is also detected by PET, an imaging test that uses a radioactive substance to detect and localize cancerous cell growth. As an initial screening method, mammography helps to find breast cancer at an early stage [3,4]. MRI is another important screening modality for early detection and shows higher sensitivity for breast cancer in women with a genetic predisposition [5].

Even though mammography is the most common technique for the initial screening of breast cancer, it has certain drawbacks such as low sensitivity for dense breasts and low specificity [6]. Hence, morphological analysis of hematoxylin and eosin-stained (H&E-stained) breast tissue is the gold standard for breast malignancy detection with a very high confidence level [2]. Breast cancer arises from the uncontrolled growth of breast cells. It starts when ductal–lobular cells inside the breast glands grow abnormally. There are two basic types of breast cancer: ductal carcinoma in situ (DCIS) and invasive carcinoma. When the cancerous growth is confined within the ductal–lobular structure of the breast, it is called DCIS. When these cells break through the ductal–lobular system and invade the rest of the breast parenchyma, it is called invasive breast cancer.

Machine learning-based computer-aided diagnosis is becoming popular in breast cancer screening, as these techniques promise new insights with near-human performance. Diagnostic systems built on traditional machine learning classifiers comprise several stages: data preprocessing, image enhancement, segmentation, feature engineering, and classification. With the advances in deep learning and computer vision, neural network-based systems now predominate in diagnostic workflows. In a deep learning model, the tedious task of feature engineering is performed automatically by the neural network architecture. Within deep learning, transfer learning has become a dominant trend in artificial intelligence (AI) research: pre-trained architectures are reused to save training time and design cost. Breast cancer biopsy images contain many essential features that expert histopathologists use as a basis for diagnosis. These features include nuclear pleomorphism (i.e., how markedly the shapes and sizes of nuclei differ), tubule formation, and metastasis information. In supervised machine learning, a labeled dataset is shown to the model during training, and the learning algorithm adjusts the model’s parameters so that it learns from the dataset. Once training is completed, previously unseen test data is shown to the model, which is expected to classify it correctly. AI-based studies that aim to support breast cancer diagnostic workflows cover not only malignancy detection but also detection of breast cancer grade, intrinsic molecular subtype, lymph node status, and metastasis occurrence. Breast cancer has various subtypes according to its site of origin. The grade of breast cancer reflects the aggressiveness of the disease. The intrinsic molecular subtype depends on the presence of hormones or proteins on the cancer cell surface. Intrinsic molecular subtype identification is a crucial prognostic evaluator in cancer treatment [7].

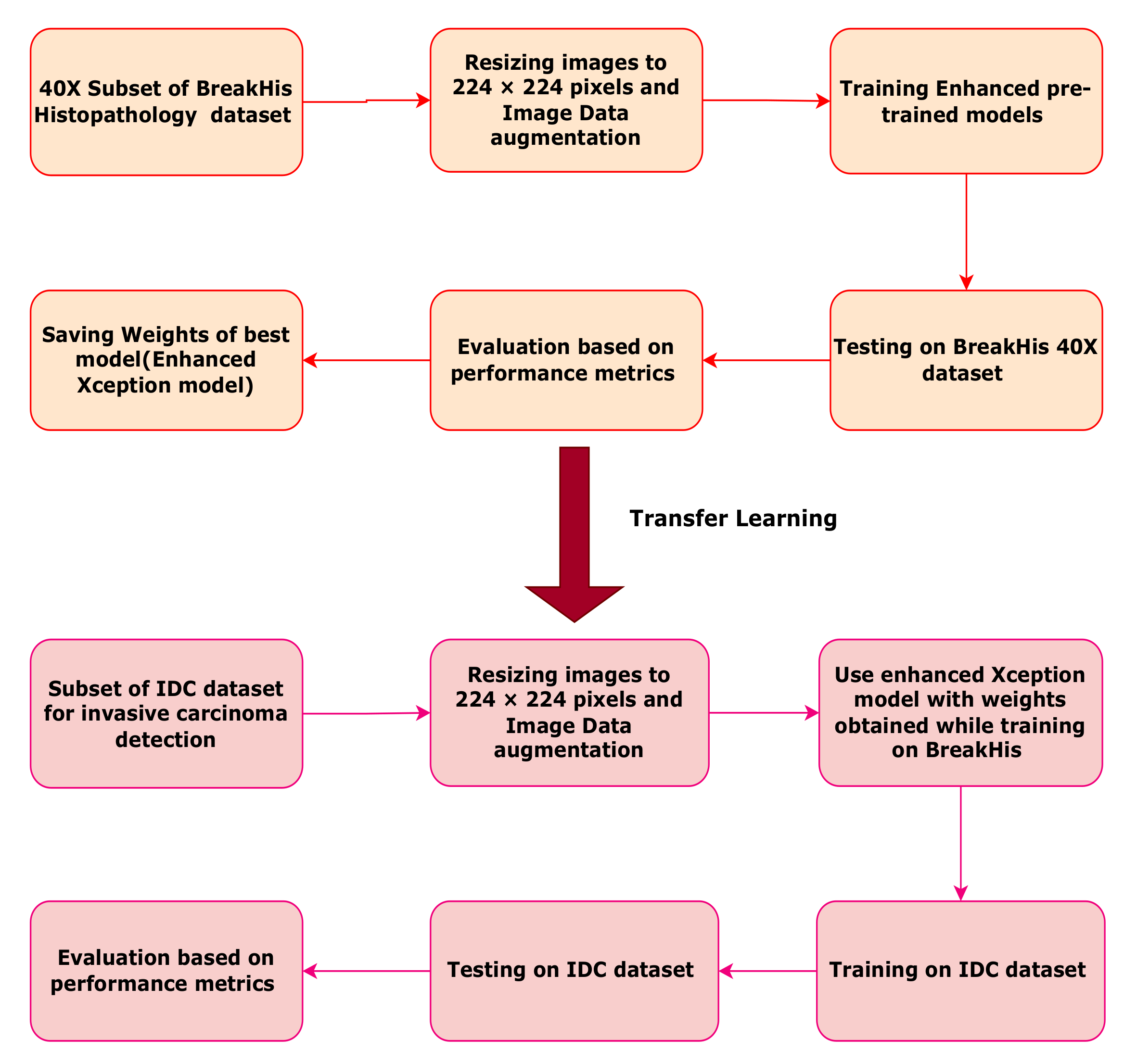

This work compares the performance of deep convolutional neural network models that use pre-trained architectures as the backbone. Binary classification is conducted on histopathological images with a magnification factor of 40×. These biopsy images are taken from the publicly available BreakHis breast cancer dataset and the IDC (invasive ductal carcinoma) dataset. A comparative analysis of three classification models is presented, and the model with the best evaluation parameters is chosen as the final classification model. Models pre-trained on the ImageNet challenge dataset serve as the backbone of the deep convolutional neural network, and custom layers are added to them. The backbone network finds important lower-order features in the histopathology images, while the custom layers find higher-order abstract features for the binary classification task. As these histopathology images are rotation and scale invariant, data augmentation is used to increase the size of the dataset. The flowchart of the proposed work is given in Figure 1. As evident from the flowchart, the BreakHis 40× image dataset is first augmented using several image transforms such as rotation, flipping, and scaling. The enhanced models are trained, validated, and tested on the BreakHis dataset. Based on the best accuracy, the enhanced customized pre-trained Xception model is selected for the classification task. The weights learned on BreakHis are stored for the Xception model, and the same enhanced Xception model with BreakHis weights is then fine-tuned on the IDC dataset.

The contributions of this study are summarized below.

Rescaling and augmentation of the BreakHis 40× dataset using Keras functionality.

Customization of pre-trained models, namely EfficientNetB0, ResNet50, and Xception, by removing the last fully connected layers and appending a series of convolution and max pooling layers along with flattening and dense connections.

Experimenting on the BreakHis 40× dataset by using the enhanced pre-trained models for the binary classification task of cancer detection.

Reusing the weights that the best model (customized Xception) learned on the BreakHis dataset to train, validate, and test on a subset of the IDC dataset. This tests the potential of the Xception model trained on the BreakHis dataset to perform the similar task of invasive carcinoma detection.

Demonstrating, through this import of weights, the ability of the enhanced Xception model to transfer what it learned on the BreakHis dataset to the IDC dataset.

The rest of the article is arranged as follows. Section 2 describes related work on breast cancer diagnosis using publicly available datasets. The methodology of the convolutional deep learning technique used for this binary classification problem is discussed in Section 3. In Section 4, experiments, results, and analyses are presented. Finally, the paper is concluded in Section 5.

2. Related Work

Deep learning systems ease the diagnostic classification task, as deep neural networks automatically find features and patterns in the given dataset. Along with the availability of capable hardware such as graphics processing units (GPUs) and tensor processing units (TPUs), open-source programming frameworks such as TensorFlow-enabled Keras and PyTorch have contributed to the trend of using deep learning for classification problems. In addition to these frameworks, pre-trained deep neural network architectures such as VGG (Visual Geometry Group), AlexNet, Inception, ResNet, Xception, and several others are available for use in deep computer vision models.

A recurrent residual neural network was used for semantic segmentation of medical images [8]. In one study, an improved U-Net-based architecture called IRU-Net was used to segment images of patients’ tissue slides. This IRU-Net method, which was designed to detect the presence of bacteria and immune cells in tissue images, used several scaled layers of residual blocks, inception blocks, and skip connections [9]. A multilevel semantic adaptation method was used across diverse modalities for few-shot segmentation of cardiac image sequences. The method proved effective even with limited labels for the dataset [10].

A wearable system for MRI and near-infrared spectral tomography was designed and developed in one research study [11]. In dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI), various features were extracted using different machine learning methods in several research studies [12].

In 2016, Spanhol et al. constructed a benchmark dataset of hematoxylin and eosin (H&E)-stained breast cancer histopathological images, which were extracted using the fine needle aspiration cytology (FNAC) procedure. Various feature extractors such as local phase quantization (LPQ), local binary pattern (LBP), and completed local binary pattern (CLBP) were used to obtain textural and morphometric features from the H&E-stained images, and the performance of various classifiers was compared for this task [13]. Classification of H&E-stained histopathological images was performed with features extracted from the graph run length matrix (GRLM) and gray level co-occurrence matrix (GLCM), and a predictive breast cancer diagnostic model based on a modified weight assignment technique was developed [14]. The contrast limited adaptive histogram equalization method was used for contrast improvement along with several classifiers on the BreakHis dataset [15]. DNA repair deficiency (DRD) status was determined from histopathology images using deep learning [16]. One study used several pre-trained convolutional neural network architectures in parallel and aggregated their results to detect breast cancer in histopathology images, with the Vahadane transform for stain normalization [17]. In one magnification-dependent binary classification study, transfer learning with AlexNet was used [18]. An anomaly detection mechanism based on a generative adversarial network was used to find mislabeled patches in the BreakHis dataset, after which DenseNet121 was used for binary classification [19]. Deep convolutional neural network (CNN) models were used in [20,21,22] to classify histopathology images. Color normalization and data augmentation techniques were applied to H&E-stained histopathology slides to perform breast cancer classification, using fully trained and fine-tuned versions of VGG architectures [23]. Tumor and healthy regions were segmented in one study, which used a CNN for an area-based annotation procedure on histopathology images [24]. Deep convolutional neural networks were also designed for finding the status of cancer metastasis in lymph nodes [25,26]. Breast cancer was detected using dual-modality images, namely ultrasound and histopathology, in one study [27]. Using transfer-learned multi-head self-attention, one study performed multi-class classification on the BreakHis dataset [28]. Breast cancer histopathology images were classified into four classes (normal, benign, in situ carcinoma, and invasive carcinoma) in one study using a parallel combination of a convolutional neural network (CNN) and a recurrent neural network (RNN) [29]. A GoogLeNet-based hybrid CNN module was used with a bagging strategy to classify breast cancer [30]. DenseNet-161 and ResNet-50 models were used for invasive carcinoma detection in the IDC (invasive ductal carcinoma) dataset along with validation on the BreakHis dataset [31]. A deep learning architecture named DKS-DoubleU-Net was used to segment tubules in H&E-stained breast tissue images on the BRACS dataset [32]. In another work, binary and multiclass classification on the BreakHis dataset was performed using customized pre-trained DenseNet and ResNet models; the researchers attained a maximum accuracy of 100 percent for the binary classification task at the 40× magnification factor [33].

In the proposed work, the accuracy obtained is 93.33% on the BreakHis 40× dataset. However, the proposed model is additionally trained on a second dataset, IDC, whose content differs from that of BreakHis because it focuses on invasive ductal carcinoma cases. The model is fine-tuned on the IDC dataset starting from the weights obtained during BreakHis training: instead of random weights or ImageNet weights, the previously trained BreakHis weights are used. The proposed model is thus also able to learn new patterns in a different dataset, which is an application of the transfer learning concept. This transfer learning approach reduces training and testing time and supports the generalization and robustness of the CNN model.

A deep CNN using the ResNet model was used for the binary classification of breast histopathology images. This method also used wavelet packet decomposition and histograms of oriented gradients [34]. Several multiple instance learning algorithms with deep convolutional neural networks were evaluated on the BreakHis dataset [35]. A fusion of different deep learning models was tested on the BreakHis and ICIAR 2018 datasets [36]. A fully automated pipeline using a deep learning architecture for breast cancer analysis was presented in [37]. A transfer learning-based deep neural network was used for the breast cancer classification task in another study [38]. Spatial features were extracted using CapsuleNet for the breast cancer classification task in [39,40]. Color deconvolution and a transformer architecture were used for histopathological image analysis in [41]. A pure transformer was used as a backbone to extract global features for histopathological image classification [42].

Apart from binary or multi-class classification of breast cancer according to its subtypes, deep learning techniques are also used in grade detection and intrinsic subtype classification of breast cancer. In one recent study, a deep learning model named DeepGrade was used to determine the grade of breast cancer from whole slide images. The prognostic evaluation of patients depending on their histology grades was also carried out [43]. In another study, a faster region-based convolutional neural network and a deep convolutional neural network were used for mitotic activity detection [44]. Intrinsic molecular subtypes of breast cancer are leading diagnostic factors for precision therapy. Depending on whether breast cancer cells show the presence of estrogen hormone, progesterone hormone, or HER2 (human epidermal growth factor receptor 2) protein, there are various subtypes of breast cancer. These are called intrinsic or molecular subtypes of breast cancer. The hormonal status was determined from histopathology images in one study, which applied deep learning techniques to different datasets [45]. A generative adversarial network for stain normalization and a deep learning framework for intrinsic molecular subtyping of breast tissue were used in another study [46]. One group of researchers used a deep CNN and region of interest-based annotations on whole slide images to predict HER2 positivity in breast cancer tissue [47]. Deep learning was used in one work to find the status of various genomic biomarkers [48]. A deep learning image-based classifier was developed to predict intrinsic subtypes of breast cancer, and survival analysis was also conducted [49]. Even though there is much research on breast cancer detection, more studies on multi-centric data are needed. Evaluation on multi-centric data, drawn from different institutions or different datasets, helps to establish how well the underlying architectures generalize and whether they are suitable for real-life use in improving clinical outcomes. Hence, this study applies network models to two different datasets for a similar type of breast cancer classification task.

3. Materials and Methods: For Breast Cancer Detection

The proposed classification algorithm uses the Keras library on top of the TensorFlow environment. A tensor processing unit (TPU) with extended RAM from the Google Colab Pro environment is used. In the proposed work, the Xception model, pre-trained on the ImageNet dataset, is used for low-level deep feature extraction on H&E-stained breast histopathology images with a magnification factor of 40×. The other two pre-trained models are used for performance comparison. The output of the backbone Xception network is connected to custom CNN layers, which are responsible for higher-level feature abstraction and classification. The motivation for using pre-trained models as a backbone is that they performed successfully in the ImageNet challenge. These models have already been trained on millions of images and hence carry weights learned for an image-related computer vision problem. The bottom layers of the pre-trained network are used as they are, and the top layers are changed: the last fully connected layers of the pre-trained CNN, which are specific to the ImageNet classification task, are removed, and custom CNN layers are appended to the pre-trained model.

3.1. Datasets

Two different datasets, namely the BreakHis and IDC (invasive ductal carcinoma) were used for experimental analysis in this study. The final network trained on the BreakHis dataset with 40× zoom images also exhibited transfer learning capabilities, wherein the same model was used for fine-tuning and testing on a different IDC dataset. In a real-life scenario, for histopathologists analyzing H&E-stained slides on the computer screen, the magnification option proves beneficial as they can zoom in or out of the digitized slides to make inferences about the disease. However, for neural networks, we can provide slides of the same magnification factor as micro-level features can easily be extracted by controlling kernel sizes in the convolution process.

3.1.1. BreakHis Histopathological Image Dataset

The BreakHis dataset consists of a total of 7909 breast histopathology images, which are patches extracted from whole slide tissue images. The dataset contains tissue images at four different magnification factors: 40×, 100×, 200×, and 400×. In this study, the 40× images are used for evaluating the CNN architecture. Besides labeling images as benign or malignant, the dataset also gives information about the histological subtypes of breast cancer. A class imbalance problem is seen in the BreakHis dataset, where samples of one class outnumber those of the other. For the 40× magnification factor, there are 652 benign and 1370 malignant samples in the BreakHis dataset [13].

3.1.2. IDC Breast Histopathological Image Dataset

The invasive ductal carcinoma (IDC) dataset has breast cancer histopathology images in the form of patches of dimension 50 × 50 pixels. These patches fall into two categories, namely IDC positive images and IDC negative images. There are a total of 277,524 images, out of which 78,786 images are IDC positive and 198,738 images are IDC negative. All these patches are of 40× magnification factor. As this is a huge dataset exhibiting class imbalance, a subset of 10,000 samples of each category is used in the proposed work [50].
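The balanced subsampling described above can be sketched as follows. This is only an illustration under stated assumptions, not the authors' code: the function name `balanced_subset`, the random seed, and the toy label list are hypothetical, and only the idea of drawing an equal number of samples per class (10,000 in the study) comes from the text.

```python
import random


def balanced_subset(labels, per_class, seed=0):
    """Return sorted indices holding `per_class` randomly chosen samples of each label."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    chosen = []
    for label, indices in by_class.items():
        if len(indices) < per_class:
            raise ValueError(f"class {label!r} has only {len(indices)} samples")
        # Sample without replacement so no patch is picked twice.
        chosen.extend(rng.sample(indices, per_class))
    return sorted(chosen)


# Toy stand-in for the IDC labels (1 = IDC positive, 0 = IDC negative);
# the imbalance loosely mimics the real dataset but the sizes are made up.
toy_labels = [1] * 30 + [0] * 70
subset = balanced_subset(toy_labels, per_class=10)
```

With the real dataset, `per_class` would be 10,000 and `labels` would hold one entry per patch.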

3.2. Image Rescaling and Augmentation

The original size of the histopathology images in the BreakHis dataset is 700 × 460 pixels. All images with the 40× magnification are resized to 224 × 224 pixels. Most medical datasets consisting of histopathology images suffer from a limited number of training samples. To strengthen the dataset, more images are added to the 40× BreakHis dataset using various image transforms. Histopathology images, being tissue images, are rotation invariant and shift invariant. The image transform operations include horizontal and vertical flips, rotation, zooming, etc. Augmented images are generated on the fly during training. Hence, no separate storage is required for augmented images, resulting in better memory management. Different image transforms for the data augmentation method are shown in Figure 2.
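The label-preserving transforms mentioned above can be illustrated with a small, dependency-free sketch. It is a simplification and an assumption on our part: the study generates augmentations with Keras functionality (including arbitrary rotation and zoom), whereas the hypothetical helpers below (`hflip`, `vflip`, `rot90`, `random_augment`) only cover flips and 90-degree rotations on an image stored as a list of rows.

```python
import random


def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror the image top to bottom."""
    return img[::-1]


def rot90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def random_augment(img, rng):
    """Apply a random combination of flips and quarter-turn rotations.

    Called once per sample per epoch, this yields augmented images on the
    fly, so no augmented copy has to be stored.
    """
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    return img


patch = [[1, 2],
         [3, 4]]
augmented = random_augment(patch, random.Random(0))
```

Because tissue has no canonical orientation, each transformed patch keeps the class label of the original patch.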

3.3. Architecture of Proposed CNN Model

In this work, experimentation is carried out with three different pre-trained deep models as the backbone. Out of EfficientNetB0, ResNet50, and Xception, the Xception model gave the best accuracy. To increase the model’s accuracy and extract higher-level features from histopathological images, custom layers in the form of three convolutional and three max-pooling layers are added to the pre-trained framework. The Xception model’s weights are initialized to the ImageNet weights. The custom convolutional layers have a uniform kernel size of 3 × 3 with ReLU activation. The ReLU activation function is chosen because it provides a constant gradient for positive inputs, which mitigates the vanishing gradient problem. The flattening operation converts the obtained feature map into a one-dimensional vector. Dropout is used to reduce overfitting, and batch normalization is used as a regularization technique. The final sigmoid activation function gives the output as a class probability in the range from 0 to 1. The whole CNN architecture, consisting of the pre-trained model (except its top fully connected layers) and the custom layers, is then trained on the augmented BreakHis dataset with the 40× magnification factor. Thus, the custom CNN layers sit on top of the backbone Xception model, which works as a feature extractor. The proposed architecture is given in Figure 3.

The loss (objective) function used in this case is the binary cross-entropy loss. During the backward (backpropagation) pass of the neural network, the derivative of the loss with respect to each weight is found using the chain rule. The trainable parameters of the network, i.e., the weights and biases, are updated during backpropagation.
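As a worked illustration of this loss and of the first chain-rule step, the sketch below computes the mean binary cross-entropy over a minibatch and the derivative of the per-sample loss with respect to the pre-sigmoid output. The function names and the clipping constant `eps` are illustrative assumptions; the study relies on the Keras implementation rather than on this code.

```python
import math


def sigmoid(z):
    """Map a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy over a minibatch.

    Each sample contributes two terms: y*log(p) for the positive class
    and (1 - y)*log(1 - p) for the negative class.
    """
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def bce_grad_wrt_logit(y, z):
    """dL/dz for one sample with p = sigmoid(z).

    Chaining dL/dp with dp/dz = p * (1 - p) simplifies to p - y.
    """
    return sigmoid(z) - y


loss = binary_cross_entropy([1, 0], [0.5, 0.5])  # equals ln(2) for maximally unsure predictions
```

The gradient `p - y` is what backpropagation then carries further back through the dense and convolutional layers.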

In CNNs, an optimizer is used to adjust the trainable parameters of the network so as to minimize the cost function. In this study, the Adam optimizer is used, as it gave better accuracy than alternatives such as stochastic gradient descent, RMSProp, and AdaDelta. The learning rate influences the convergence time of the model. Two learning rates, 0.001 and 0.0001, were tried, and 0.0001 was retained as the better suited. The binary cross-entropy cost has two main terms, each combining the actual labels with the network predictions, and is computed over a minibatch of the dataset.
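For readers unfamiliar with Adam, a single-parameter update can be sketched as below. Only the learning rate of 0.0001 comes from the text; the values of `beta1`, `beta2`, and `eps` are the commonly used defaults and are assumed here, and the function name `adam_step` is hypothetical.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """Perform one Adam update for a scalar parameter.

    m and v are running averages of the gradient and the squared gradient;
    t is the 1-based step count used for bias correction.
    """
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1 ** t)  # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)  # bias-corrected second moment
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# One step on the toy objective f(w) = (w - 3)^2, starting from w = 0.
w0 = 0.0
grad0 = 2.0 * (w0 - 3.0)
w1, m1, v1 = adam_step(w0, grad0, m=0.0, v=0.0, t=1)
```

On the first step the bias-corrected ratio has magnitude close to one, so the parameter moves by roughly the learning rate regardless of the gradient's scale; this is one reason the learning rate has such a direct effect on convergence time.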

3.4. Enhanced Pre-Trained Models

EfficientNet models are networks scaled in depth, width, and resolution. This scaling is performed to obtain more detailed feature extraction, and all three dimensions are scaled up with a uniform ratio. EfficientNet is a family of models ranging from the baseline EfficientNetB0 to several scaled-up versions, so it offers multiple scaling levels for improving accuracy [51]. ResNet50 (Residual Network 50) is a model that has also been validated on the ImageNet large-scale visual recognition challenge dataset. For ease of optimization and better performance, residual building blocks are included in ResNet models [52]. The ResNet50 architecture is 50 layers deep. EfficientNet, ResNet, and Xception models are convolutional neural networks with properties such as parameter (weight) sharing and location invariance, which make them efficient for computer vision applications. Depth-wise separable convolution is the prominent feature of the Xception architecture, which is an updated version of the Inception architecture. In the Inception architecture, convolutions are performed spatially and over the input depth of the image. To reduce dimensions, the Xception architecture runs 1 × 1 convolution blocks across the depth. In a standard convolution, filters are applied across all input channels (corresponding to colors) and the resulting values are combined in a single step. Depth-wise separable convolution divides this process into two parts: depth-wise (channel-wise) convolution and point-wise convolution. In the depth-wise step, a convolution filter is applied to a single input channel at a time. The outputs of these per-channel kernels are stacked together, and point-wise convolution is then performed on the stacked outputs [53].
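The saving obtained by splitting a convolution into depth-wise and point-wise steps can be made concrete by counting weights. The sketch below ignores bias terms, and the layer sizes in the example are arbitrary illustrative values rather than actual Xception layer dimensions.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution: every output filter spans all input channels."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Weights in a depth-wise separable convolution.

    Depth-wise step: one k x k filter per input channel.
    Point-wise step: a 1 x 1 convolution mixing c_in channels into c_out.
    """
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernels, 128 input channels, 256 output channels.
standard = standard_conv_params(3, 128, 256)    # 294,912 weights
separable = separable_conv_params(3, 128, 256)  # 33,920 weights
```

For this example layer the separable form needs roughly one ninth of the weights of the standard convolution.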

3.5. Classifier Details

The classifier proposed in this work for the breast cancer classification task is an enhanced pre-trained Xception CNN. The Xception model trained on the ImageNet dataset is used without its final fully connected layers, and its weights are initialized from the ImageNet weights only. After the last layers are removed, additional convolutional and max pooling layers are added to the network, along with flattening and dense layers. Since pre-trained models are generally trained on larger, general-purpose datasets, adding custom layers helps the modified models adapt to specific tasks and also helps them train faster. Images are rescaled to 224 × 224 pixels. For the enhanced pre-trained Xception model, dropout and batch normalization are used as regularization techniques. Batch normalization rescales the feature values passed to the next layer to a standard scale. It reduces internal covariate shift to a significant extent and also helps the weights converge faster.

3.6. Training and Testing of Model

The time required for training the enhanced Xception model on the IDC dataset is 1 h 50 min, while the time taken for testing on the IDC dataset is 67 s.

The CNN architecture, consisting of a pre-trained model stacked with custom CNN layers, is trained on the 40× images of the BreakHis dataset. A training, validation, and testing split of 70%, 15%, and 15% is maintained. The model is trained for 50 epochs with a batch size of 16 images, and augmented images obtained from image transforms are used for training. Overfitting (high variance) can easily creep in for such deep architectures, and neural networks trained on small datasets generally tend to overfit. Techniques that address overfitting are called regularization techniques. To mitigate overfitting, dropout can be inserted after any hidden layer, each with its own dropout factor. A dropout ratio of 0.2 is used in this work, applied after the flatten layer. Dropout sets some of the activation outputs of hidden neurons to zero. The neurons to deactivate are chosen at random in every training cycle, but the dropout rate remains the same. Dropout helps to prevent neurons from learning minute, redundant details of the training samples and thus enhances the generalization capability of the network.
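The behaviour of dropout with a rate of 0.2 can be sketched in a few lines. The version below is the "inverted" form, in which the surviving activations are rescaled during training so that nothing needs to change at inference time; that this matches the internals of the Keras layer used in the study is our understanding and should be treated as an assumption. The function name and the input vector are illustrative.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout on a flat list of activations.

    During training each activation is zeroed with probability `rate`
    and the survivors are scaled by 1 / (1 - rate). At inference the
    input is returned unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
flat = [1.0] * 10000                         # stand-in for the flattened feature vector
dropped = dropout(flat, rate=0.2, rng=rng)   # a fresh random mask on every call
zero_fraction = dropped.count(0.0) / len(dropped)
```

A different random mask is drawn on each call, which mirrors the description above: the deactivated neurons change every training cycle while the expected fraction dropped stays at 20%.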

To speed up the training process and to increase its performance and stability, regularization in terms of batch normalization is used. The batch normalization process also stabilizes the weight parameters of the network. In this process, the normalized output of the previous layer is fed to the next layer. It is called batch normalization because, during the training process, the layer’s inputs are normalized by using the current batch’s variance and mean values. A batch normalization layer is added before the final dense layer.
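The normalization step can be sketched for a single feature across one minibatch as follows. The scale `gamma`, shift `beta`, and stabilizer `eps` are standard ingredients of batch normalization but their values here are assumed defaults; the sketch covers only the training-time computation with batch statistics, not the running averages used at inference.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a minibatch using the batch mean and variance."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


normalized = batch_norm([2.0, 4.0, 6.0, 8.0])
```

After this step the feature has approximately zero mean and unit variance within the batch, which keeps the inputs to the following dense layer on a stable scale.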

Once the enhanced pre-trained Xception model has been trained on the BreakHis dataset, the same model is fine-tuned on another dataset, IDC. A subset of the IDC dataset is used for this comparative analysis. The network is tuned and tested on IDC with the weights carried over from training on the BreakHis dataset. This new dataset comprises 10,000 samples of the IDC positive class and 10,000 samples of the IDC negative class.

4. Experiments and Results

Among all the architectures, the Xception pre-trained model stacked with custom CNN layers exhibited the highest accuracy of 93.33% on the augmented BreakHis dataset for the 40× magnification factor. The same Xception pre-trained model stacked with custom CNN layers gave an accuracy of 88.08% on the IDC dataset.

Deep CNN models are frequently evaluated using parameters such as accuracy, recall, precision, and F1-score. The confusion matrix gives the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). True positives are outputs that the classifier predicts as positive and that are actually positive. In breast cancer classification, these are patients who have breast cancer and are correctly diagnosed as cancer-positive. False positives are outputs predicted by the classifier as positive that are actually negative. In the context of breast cancer diagnosis, these are patients wrongly classified as cancer-positive who are, in reality, cancer-free. True negatives are outputs classified by the deep learning framework as negative that are actually negative, i.e., correctly diagnosed cancer-free patients. False negatives are outputs classified by the network as negative that are positive in reality. For breast cancer diagnosis, this count is critical, because a false negative represents a patient who has breast cancer but is diagnosed as cancer-free. The choice of deep neural network architecture therefore depends largely on which network yields the fewest false negatives.
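The four counts can be obtained directly from paired labels and predictions, as in the following sketch. The function name and the toy label vectors are illustrative assumptions, with 1 standing for the malignant (positive) class.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, and FN for a binary classifier."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1  # cancer present, predicted cancer
        elif predicted == positive:
            fp += 1  # cancer-free, predicted cancer
        elif actual == positive:
            fn += 1  # cancer present, predicted cancer-free (the costly error)
        else:
            tn += 1  # cancer-free, predicted cancer-free
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}


# Toy example with eight patches (made-up values).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
counts = confusion_counts(y_true, y_pred)
```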

Accuracy is a critical performance evaluator. It is the ratio of the number of correctly predicted outputs of a deep neural network to the total number of samples. For balanced datasets, accuracy provides a good measure of the performance of a deep CNN. The accuracy of a deep CNN is given in Equation (1).

Equation (2) represents precision, which is the ratio of the correct positive predictions of the network to its total positive predictions.

The recall parameter is given in Equation (3). In deep learning-assisted diagnostic tools, recall is a particularly important evaluation measure because it increases as the number of false negative cases decreases.

The F1-score is given in Equation (4). The F1-score, the harmonic mean of recall and precision, gives a single measure of how well the deep CNN model performs on the given dataset.
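In terms of the confusion-matrix counts TP, TN, FP, and FN, the standard definitions of these four measures, to which Equations (1) to (4) are taken to correspond, can be written as follows.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1}\\[4pt]
\text{Precision} &= \frac{TP}{TP + FP} \tag{2}\\[4pt]
\text{Recall}    &= \frac{TP}{TP + FN} \tag{3}\\[4pt]
\text{F1-score}  &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}
\end{align}
```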

The comparative results of performance parameters for different models are presented in Table 1.

The classification output is 0 or 1 for benign and malignant patches, respectively, of the BreakHis 40× dataset. For the IDC dataset, the classification result is an IDC or non-IDC patch label. The result of classification on the BreakHis dataset is shown in Figure 4.

4.1. Confusion Matrices

The confusion matrix summarizes the performance of a machine learning classification model. It tabulates the number of predicted output values against the corresponding actual class labels. The heat-map representations of the confusion matrices are shown in Figure 5. As is evident from the confusion matrices, the maximum true positive value is given by the CNN with the Xception pre-trained model. In cancer diagnostics, the model with the minimum number of false negative cases is considered more satisfactory, since false negatives are patients who have breast cancer but are diagnosed as cancer-free. This false negative value is lowest for the Xception-based CNN model. Thus, the CNN model with Xception as a backbone network performs better in the context of cancer diagnostics.
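To illustrate why the false negative count is treated as a selection criterion alongside accuracy, the sketch below computes the four measures for two hypothetical models. The counts are invented for illustration and are not results from this study; the function name `metrics_from_counts` is likewise an assumption. Both models have the same accuracy, but the one with fewer false negatives has the higher recall.

```python
def metrics_from_counts(tp, tn, fp, fn):
    """Return accuracy, precision, recall and F1-score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts on 200 samples (100 malignant, 100 benign); not from the paper.
model_a = metrics_from_counts(tp=90, tn=85, fp=15, fn=10)  # fewer missed cancers
model_b = metrics_from_counts(tp=80, tn=95, fp=5, fn=20)   # fewer false alarms

for name, m in (("A", model_a), ("B", model_b)):
    print(name, {k: round(v, 4) for k, v in m.items()})
```

Under these assumed counts, accuracy alone cannot separate the two models, whereas recall favors model A, which misses fewer cancer-positive patients.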

Confusion Matrix for Evaluation on IDC Dataset

The enhanced pre-trained Xception model was also trained and tested on a subset of the IDC (invasive ductal carcinoma) dataset. The subset consisted of 10,000 samples of each category, namely benign (non-IDC) and malignant (IDC). The confusion matrix of the CNN with Xception as a backbone for the IDC dataset is given in Figure 6.

4.2. Receiver Operating Characteristics

The performance of the classification model can also be evaluated using the area under the receiver operating characteristic curve (AUC-ROC). The ROC curve plots the false positive rate on the X-axis against the true positive rate on the Y-axis. Using this curve, the model’s performance can be evaluated over all possible threshold values. The ROC curve for the CNN using Xception as the pre-trained model is depicted in Figure 7. The curve encloses a large area and lies close to the true positive rate axis. The AUC value obtained for the proposed model is 0.9211.
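The construction of the ROC curve and its area can be sketched in a few lines of plain Python. This is an illustrative implementation under stated assumptions, not the code used in the study: the function names `roc_points` and `auc_trapezoid` and the toy scores are hypothetical, label 1 is assumed to be the positive class, and both classes must be present in the labels.

```python
def roc_points(y_true, scores):
    """Sweep the decision threshold from high to low and collect (FPR, TPR) points."""
    n_pos = sum(1 for y in y_true if y == 1)
    n_neg = len(y_true) - n_pos
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    i = 0
    while i < len(order):
        threshold = scores[order[i]]
        # Samples sharing the same score cross the threshold together.
        while i < len(order) and scores[order[i]] == threshold:
            if y_true[order[i]] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        fpr.append(fp / n_neg)
        tpr.append(tp / n_pos)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2
               for k in range(1, len(fpr)))


# Toy example for illustration only: predicted malignancy scores for four patches.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
fpr, tpr = roc_points(y_true, scores)
print(auc_trapezoid(fpr, tpr))
```

An AUC of 1.0 corresponds to a classifier that ranks every positive sample above every negative one, while 0.5 corresponds to random ranking.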

The ROC curve for the IDC dataset is plotted in Figure 8. Again, the CNN using the Xception model as a backbone is used for classification on the IDC dataset. The AUC value obtained in this case is 88.07949% (that is, approximately 0.8808 on the 0 to 1 scale).

4.3. Comparison with Other State-of-the-Art Techniques

Other established methods are compared in Table 2 based on the accuracy obtained on 40× magnification factor images of the BreakHis dataset. The study proposed in this article addresses magnification-dependent breast cancer detection, applied to a magnification factor of 40× for breast histopathological images. Hence, the comparative analysis considers other studies based on their accuracy at the 40× magnification factor.

4.4. Discussion

All the hyperparameters of the models are tuned manually based on the accuracy criterion. The numbers of kernels in the three custom convolutional layers are 16, 32, and 64, respectively, with a filter size of 3 × 3. All custom convolutional layers use the ReLU activation function. For all three CNN models, 50 epochs are used to fit the model, with 50 steps per epoch on augmented training data. Adam is used as the optimizer with a learning rate of 0.0001. The image batch size is kept at 16. The three enhanced pre-trained models are compared based on accuracy for malignancy classification on the BreakHis dataset. The enhanced Xception pre-trained model obtained the highest accuracy of 93.33%, compared with the other two models, on the BreakHis malignancy detection task. Further, the same model with BreakHis weights is used for binary classification on the IDC dataset, where it achieved an accuracy of 88.08%.
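The training settings listed above can be collected in a small configuration object, which also makes the implied data throughput explicit. This is a sketch only: the class name `TrainingConfig` and its field names are hypothetical, and the throughput figures assume that each training step consumes exactly one full batch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters as stated in the text; field names are illustrative."""
    conv_filters: tuple = (16, 32, 64)   # kernels in the three custom conv layers
    kernel_size: tuple = (3, 3)
    activation: str = "relu"
    optimizer: str = "adam"
    learning_rate: float = 1e-4
    batch_size: int = 16
    epochs: int = 50
    steps_per_epoch: int = 50

    @property
    def images_per_epoch(self) -> int:
        # Assumes one full batch per step.
        return self.steps_per_epoch * self.batch_size

    @property
    def total_images_seen(self) -> int:
        return self.epochs * self.images_per_epoch


config = TrainingConfig()
print(config.images_per_epoch, config.total_images_seen)
```

Under that assumption, each epoch draws 800 augmented images and the full run processes 40,000 augmented images in total.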