An Adversarial Learning Approach for Super-Resolution Enhancement Based on AgCl@Ag Nanoparticles in Scanning Electron Microscopy Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Raw Data

2.2. Datasets

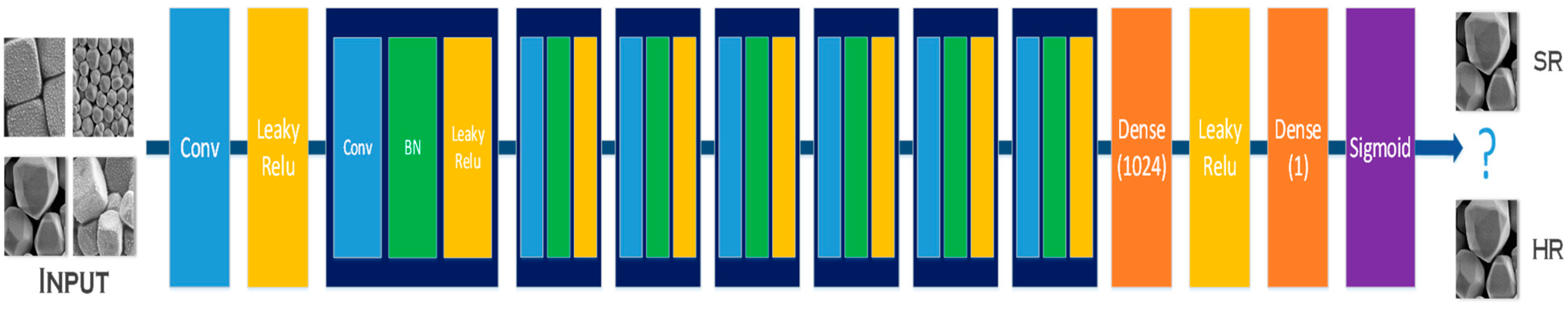

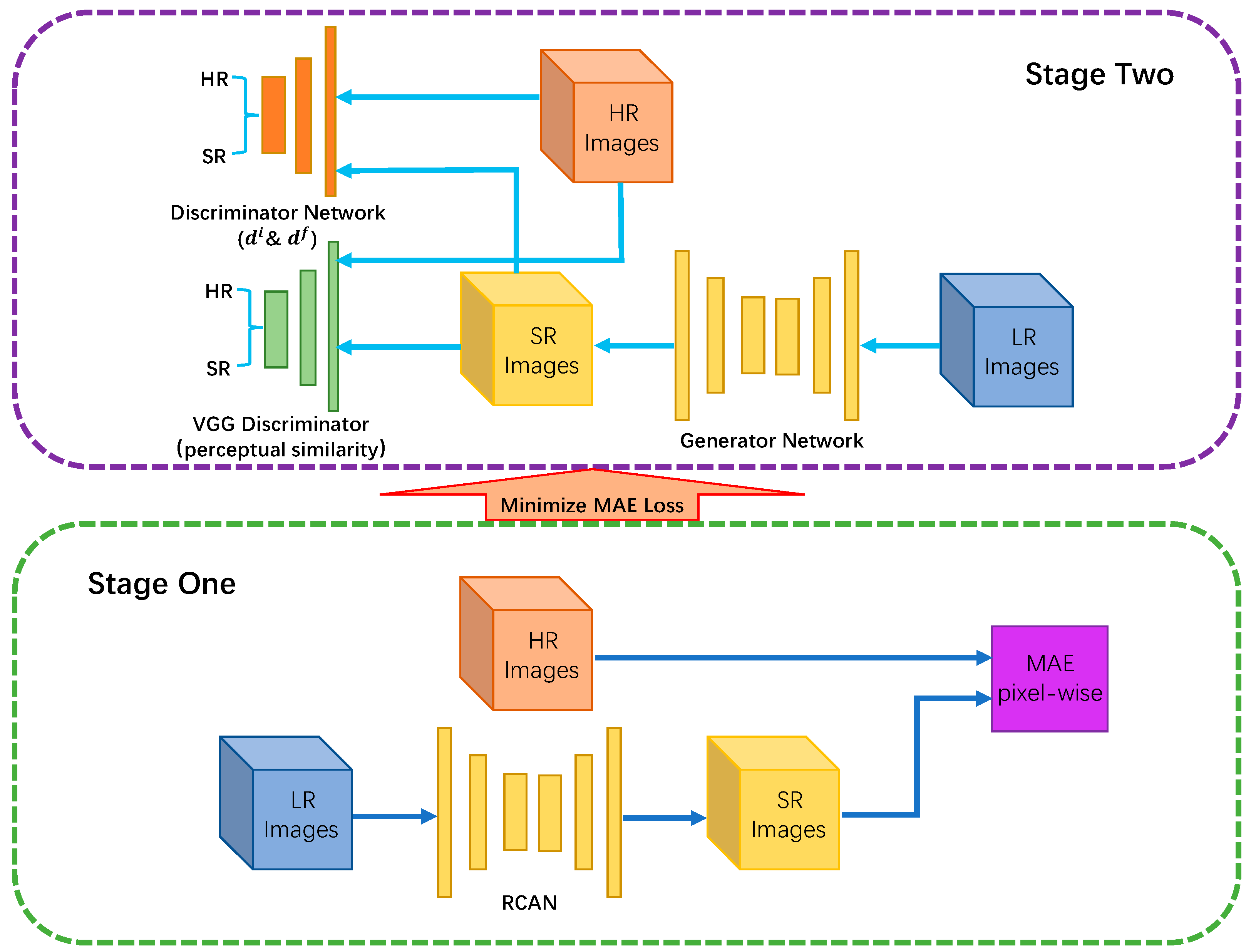

2.3. Neural Networks

2.4. Training

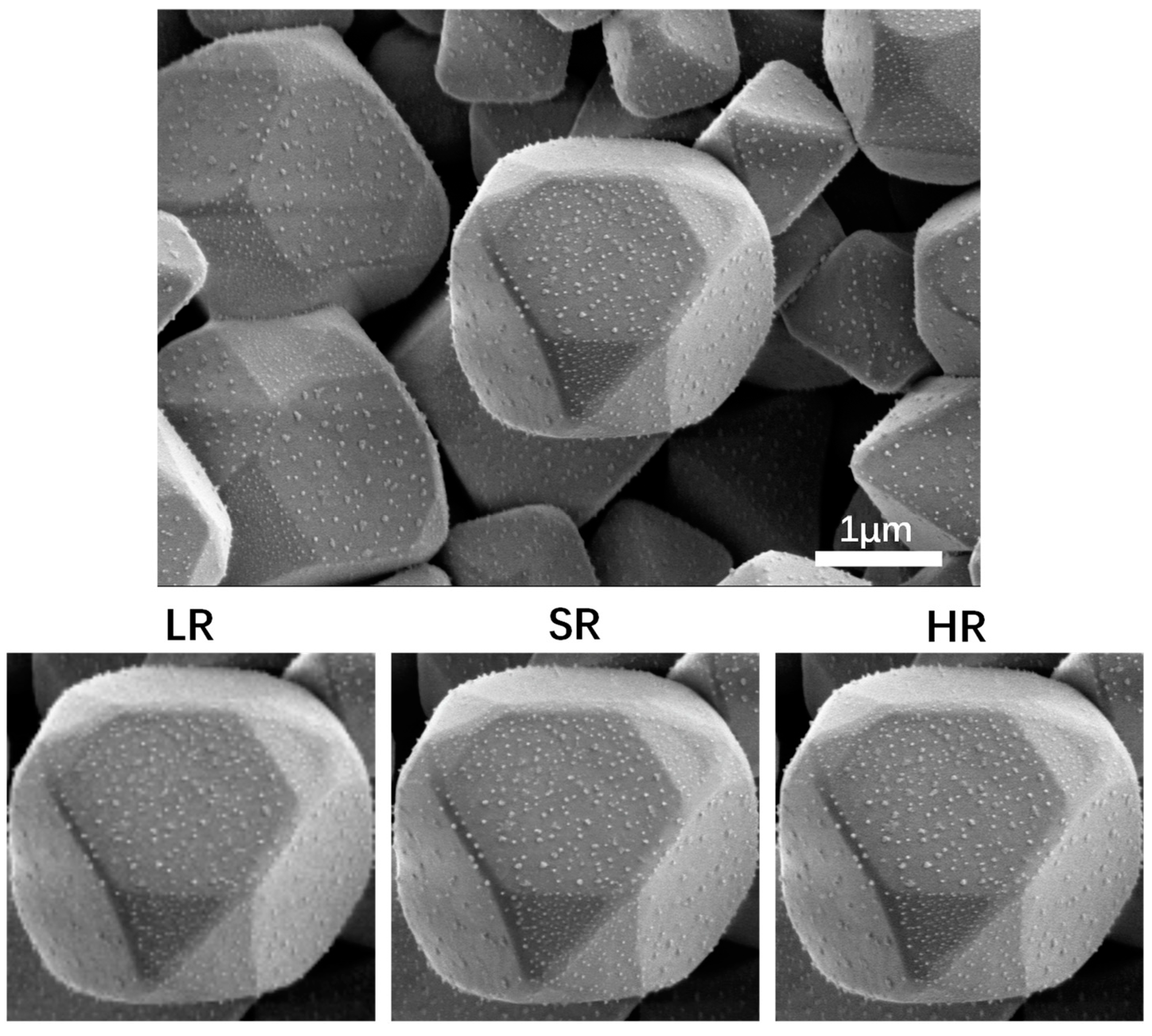

3. Results

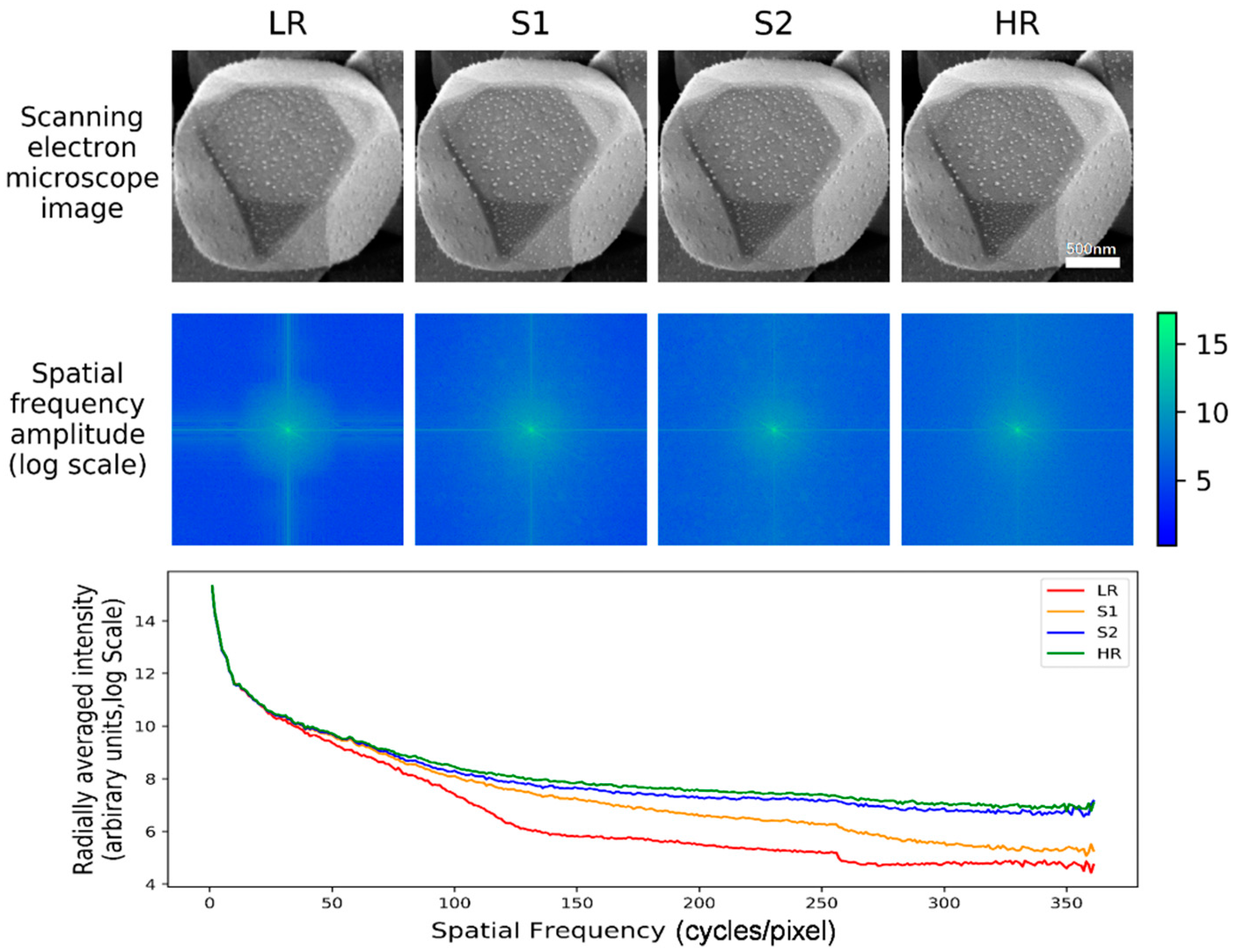

3.1. Spatial Frequency Analysis

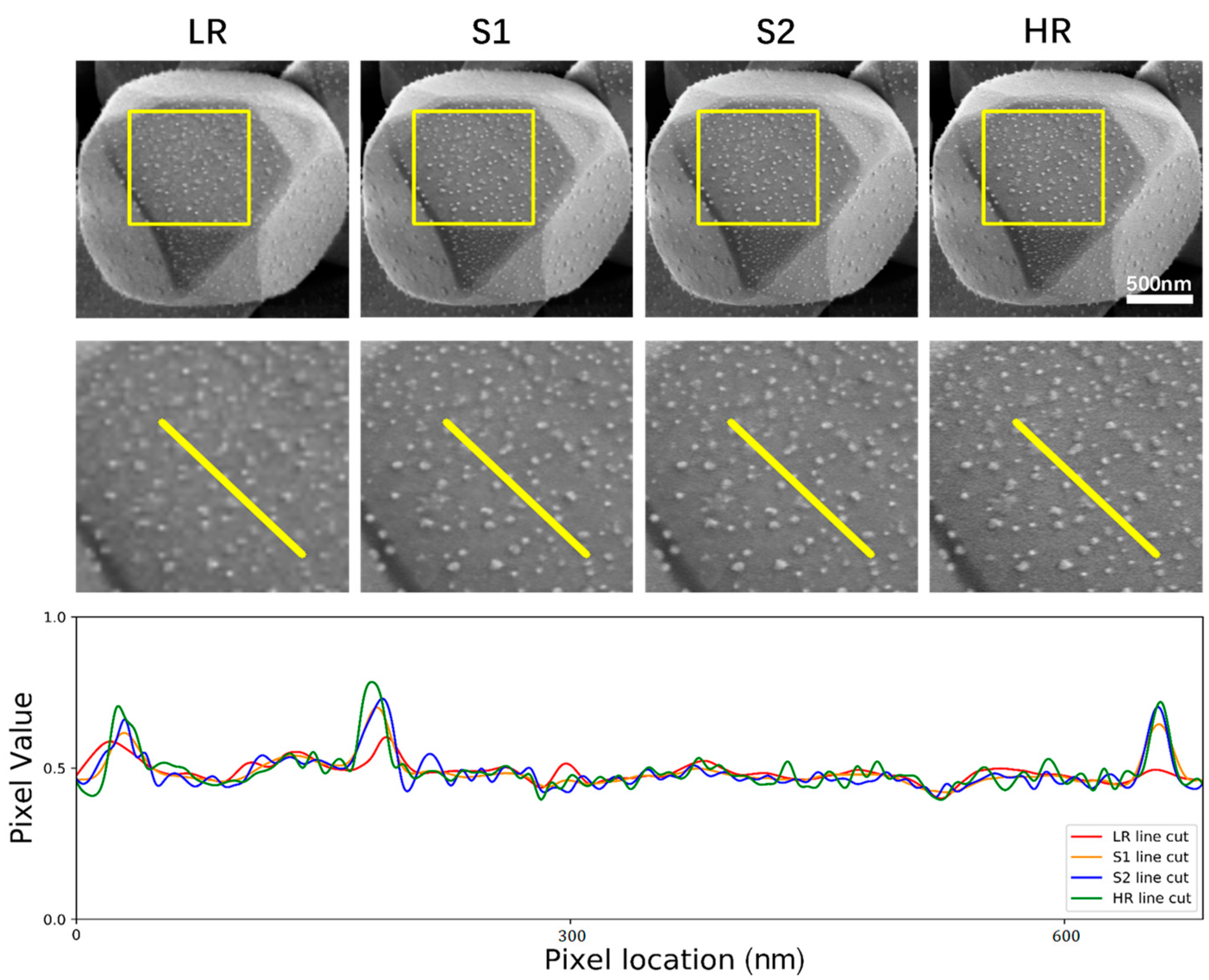

3.2. Pixel Precise Performance Analysis

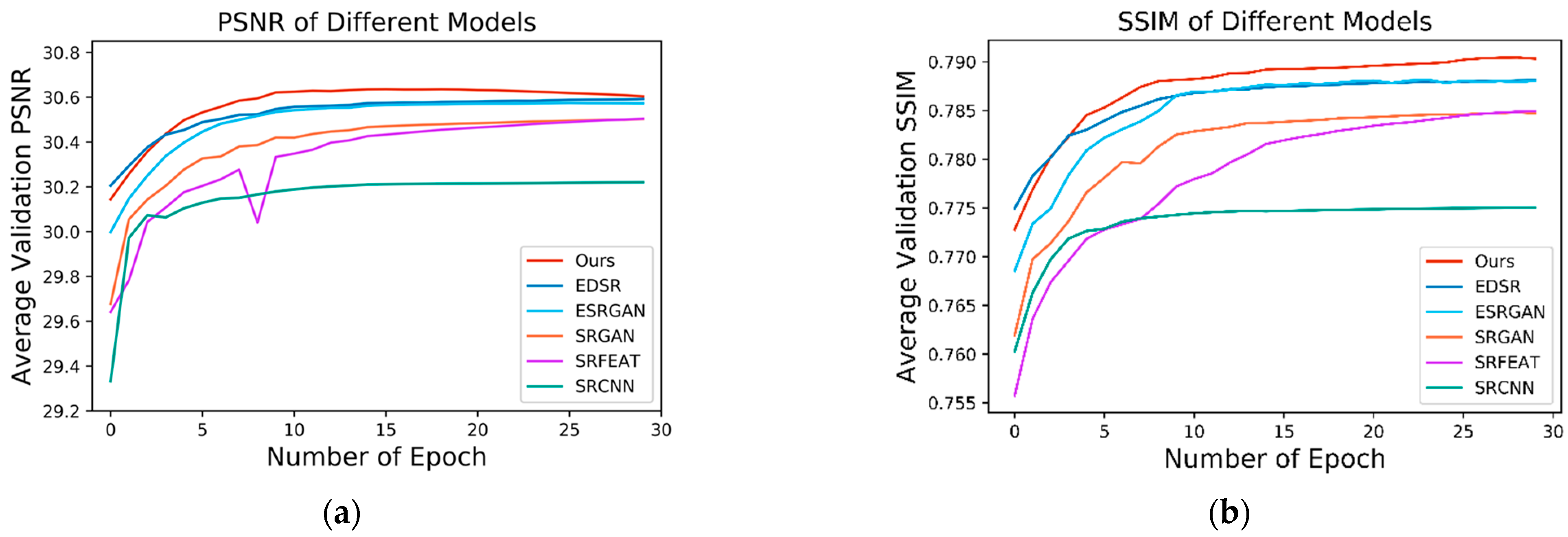

3.3. Model Performance Quantitative Metrics

3.4. Effectiveness Verification

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SEM | Scanning Electron Microscopy |
| RCAN | Residual Channel Attention Network |
| MAE | Mean Absolute Error |
| PSNR | Peak Signal-to-Noise Ratio |
| GAN | Generative Adversarial Network |
| SSIM | Structural Similarity |
| RCAB | Residual Channel Attention Block |
| RIR | Residual In Residual |
| LSC | Long Skip Connection |
| SSC | Short Skip Connection |
| CA | Channel Attention |
| RG | Residual Group |
| IFC | Information Fidelity Criterion |


| Model | PSNR (Validation) | PSNR (Test) | SSIM (Validation) | SSIM (Test) | IFC (Validation) | IFC (Test) |
|---|---|---|---|---|---|---|
| Bicubic | 28.8893 | 32.7277 | 0.7237 | 0.7986 | 2.2080 | 2.6611 |
| SRCNN | 30.1828 | 34.3070 | 0.7741 | 0.8308 | 2.8027 | 3.4853 |
| SRFEAT | 30.4520 | 34.5693 | 0.7844 | 0.8370 | 2.9933 | 3.7132 |
| SRGAN | 30.4613 | 34.6050 | 0.7844 | 0.8374 | 3.0142 | 3.7318 |
| ESRGAN | 30.5191 | 34.6965 | 0.7876 | 0.8395 | 3.0389 | 3.8046 |
| EDSR | 30.5335 | 34.6844 | 0.7880 | 0.8393 | 3.0543 | 3.8150 |
| OURS | 30.5446 | 34.7389 | 0.7903 | 0.8425 | 3.0772 | 3.8374 |
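For readers unfamiliar with the PSNR column in the table above and the MAE entry in the abbreviations list, the following is a minimal sketch of how these two metrics are commonly computed. It is not the authors' evaluation code: the function names `mae` and `psnr`, the 8-bit peak value of 255, and the tiny example patches are all assumptions made for illustration.

```python
import math


def mae(reference, estimate):
    """Mean absolute error between two equally sized pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(r - e) for r, e in zip(reference, estimate)) / len(reference)


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB.

    max_value is the assumed peak intensity (255 for 8-bit images);
    the source does not state which peak value was used.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 2x2 grayscale patches, flattened row by row.
hr_patch = [52, 55, 61, 59]  # stand-in for a high-resolution reference
sr_patch = [50, 56, 60, 62]  # stand-in for a super-resolved estimate

print(f"MAE:  {mae(hr_patch, sr_patch):.2f}")
print(f"PSNR: {psnr(hr_patch, sr_patch):.2f} dB")
```

Higher PSNR indicates a smaller mean squared error relative to the peak intensity, which is why larger values in the table correspond to reconstructions closer to the reference image.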

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fan, L.; Wang, Z.; Lu, Y.; Zhou, J. An Adversarial Learning Approach for Super-Resolution Enhancement Based on AgCl@Ag Nanoparticles in Scanning Electron Microscopy Images. Nanomaterials 2021, 11, 3305. https://doi.org/10.3390/nano11123305
