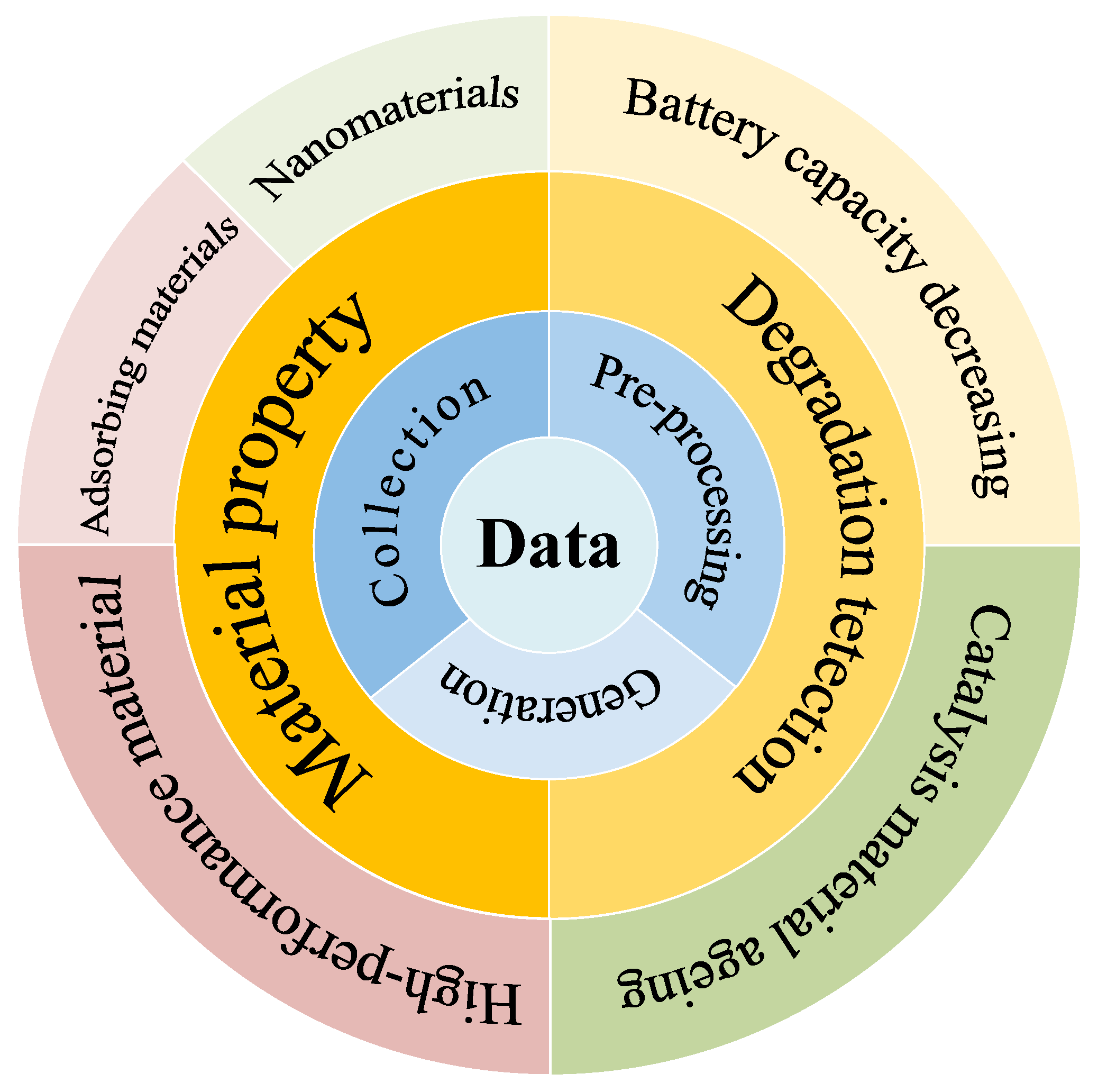

A Review of Performance Prediction Based on Machine Learning in Materials Science

Abstract

1. Introduction

2. Data

2.1. Data Collection and Generation

2.2. Data Preprocessing

3. Performance Prediction

3.1. Material Properties

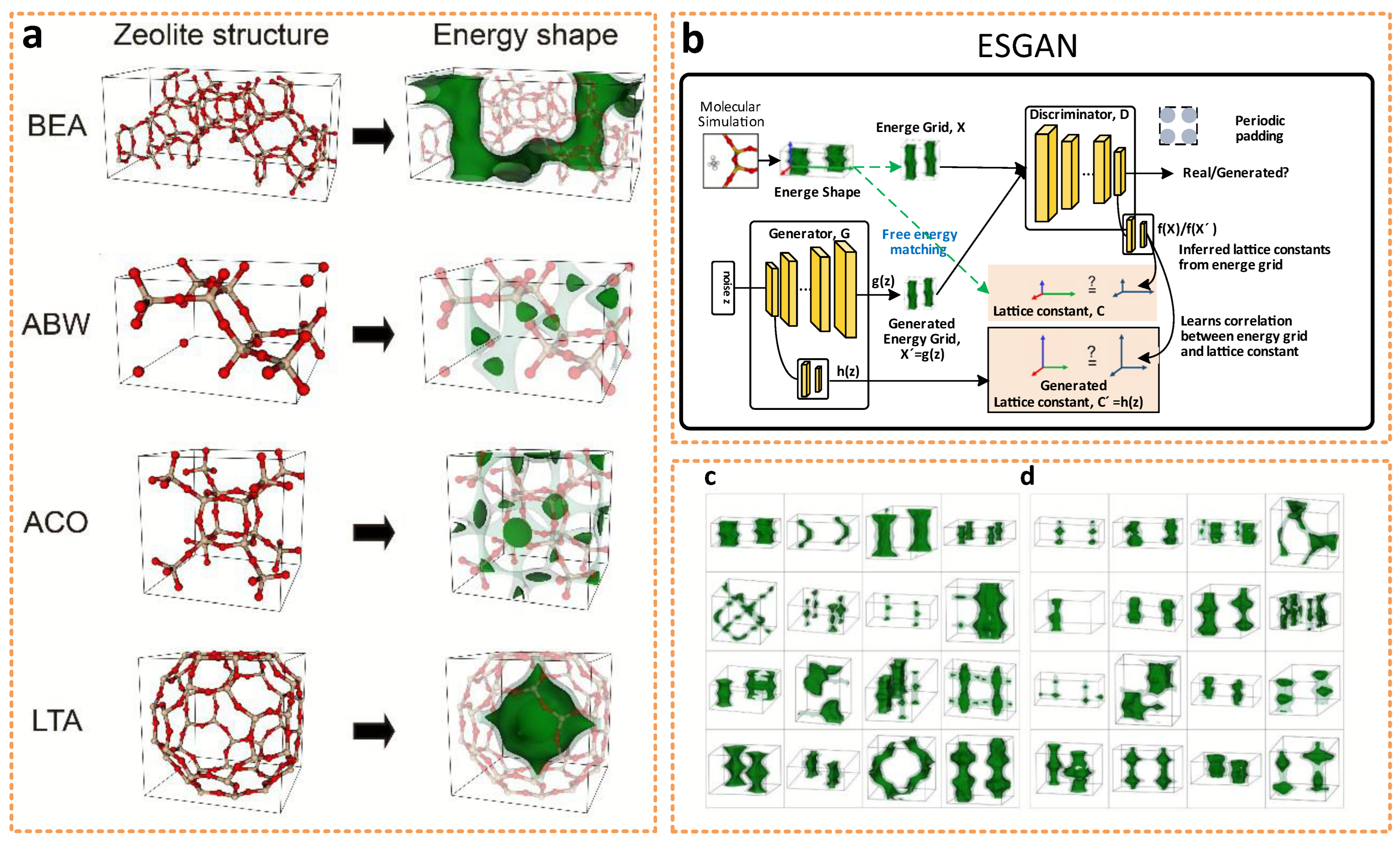

3.1.1. Nanomaterials

3.1.2. Adsorbing Materials

3.1.3. High-Performance Materials

3.2. Degradation Detection

4. Outlook and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chen, X.; Fan, K.; Liu, Y.; Li, Y.; Liu, X.; Feng, W.; Wang, X. Recent Advances in Fluorinated Graphene from Synthesis to Applications: Critical Review on Functional Chemistry and Structure Engineering. Adv. Mater. 2022, 34, e2101665. [Google Scholar] [CrossRef] [PubMed]

- Nimbalkar, A.; Kim, H. Opportunities and Challenges in Twisted Bilayer Graphene: A Review. Nanomicro Lett. 2020, 12, 126. [Google Scholar] [CrossRef] [PubMed]

- Wei, T.; Hauke, F.; Hirsch, A. Evolution of Graphene Patterning: From Dimension Regulation to Molecular Engineering. Adv. Mater. 2021, 33, e2104060. [Google Scholar] [CrossRef]

- Houtsma, R.S.K.; de la Rie, J.; Stohr, M. Atomically precise graphene nanoribbons: Interplay of structural and electronic properties. Chem. Soc. Rev. 2021, 50, 6541–6568. [Google Scholar] [CrossRef] [PubMed]

- Carvalho, A.F.; Kulyk, B.; Fernandes, A.J.S.; Fortunato, E.; Costa, F.M. A Review on the Applications of Graphene in Mechanical Transduction. Adv. Mater. 2022, 34, e2101326. [Google Scholar] [CrossRef]

- Zhang, Y.; Ma, Y.; Wang, Y.; Zhang, X.; Zuo, C.; Shen, L.; Ding, L. Lead-Free Perovskite Photodetectors: Progress, Challenges, and Opportunities. Adv. Mater. 2021, 33, e2006691. [Google Scholar] [CrossRef]

- Younis, A.; Lin, C.H.; Guan, X.; Shahrokhi, S.; Huang, C.Y.; Wang, Y.; He, T.; Singh, S.; Hu, L.; Retamal, J.R.D.; et al. Halide Perovskites: A New Era of Solution-Processed Electronics. Adv. Mater. 2021, 33, e2005000. [Google Scholar] [CrossRef]

- Xiang, W.; Liu, S.; Tress, W. A review on the stability of inorganic metal halide perovskites: Challenges and opportunities for stable solar cells. Energy Environ. Sci. 2021, 14, 2090–2113. [Google Scholar] [CrossRef]

- Ricciardulli, A.G.; Yang, S.; Smet, J.H.; Saliba, M. Emerging perovskite monolayers. Nat. Mater. 2021, 20, 1325–1336. [Google Scholar] [CrossRef]

- Mai, H.; Chen, D.; Tachibana, Y.; Suzuki, H.; Abe, R.; Caruso, R.A. Developing sustainable, high-performance perovskites in photocatalysis: Design strategies and applications. Chem. Soc. Rev. 2021, 50, 13692–13729. [Google Scholar] [CrossRef]

- Li, S.; Gao, Y.; Li, N.; Ge, L.; Bu, X.; Feng, P. Transition metal-based bimetallic MOFs and MOF-derived catalysts for electrochemical oxygen evolution reaction. Energy Environ. Sci. 2021, 14, 1897–1927. [Google Scholar] [CrossRef]

- Teo, W.L.; Zhou, W.; Qian, C.; Zhao, Y. Industrializing metal–organic frameworks: Scalable synthetic means and their transformation into functional materials. Mater. Today 2021, 47, 170–186. [Google Scholar] [CrossRef]

- Doustkhah, E.; Hassandoost, R.; Khataee, A.; Luque, R.; Assadi, M.H.N. Hard-templated metal-organic frameworks for advanced applications. Chem. Soc. Rev. 2021, 50, 2927–2953. [Google Scholar] [CrossRef] [PubMed]

- Qian, Y.; Zhang, F.; Pang, H. A Review of MOFs and Their Composites-Based Photocatalysts: Synthesis and Applications. Adv. Funct. Mater. 2021, 31, 34. [Google Scholar] [CrossRef]

- Huang, Z.; Yu, H.; Wang, L.; Liu, X.; Lin, T.; Haq, F.; Vatsadze, S.Z.; Lemenovskiy, D.A. Ferrocene-contained metal organic frameworks: From synthesis to applications. Coord. Chem. Rev. 2021, 430, 213737. [Google Scholar] [CrossRef]

- Sahoo, R.; Mondal, S.; Pal, S.C.; Mukherjee, D.; Das, M.C. Covalent-Organic Frameworks (COFs) as Proton Conductors. Adv. Energy Mater. 2021, 11, 2102300. [Google Scholar] [CrossRef]

- Meng, Z.; Mirica, K.A. Covalent organic frameworks as multifunctional materials for chemical detection. Chem. Soc. Rev. 2021, 50, 13498–13558. [Google Scholar] [CrossRef]

- She, P.; Qin, Y.; Wang, X.; Zhang, Q. Recent Progress in External-Stimulus-Responsive 2D Covalent Organic Frameworks. Adv. Mater. 2022, 34, e2101175. [Google Scholar] [CrossRef]

- Zhou, L.; Jo, S.; Park, M.; Fang, L.; Zhang, K.; Fan, Y.; Hao, Z.; Kang, Y.M. Structural Engineering of Covalent Organic Frameworks for Rechargeable Batteries. Adv. Energy Mater. 2021, 11, 2003054. [Google Scholar] [CrossRef]

- Zhao, X.; Pachfule, P.; Thomas, A. Covalent organic frameworks (COFs) for electrochemical applications. Chem. Soc. Rev. 2021, 50, 6871–6913. [Google Scholar] [CrossRef]

- Gomes, S.I.L.; Scott-Fordsmand, J.J.; Amorim, M.J.B. Alternative test methods for (nano)materials hazards assessment: Challenges and recommendations for regulatory preparedness. Nano Today 2021, 40, 101242. [Google Scholar] [CrossRef]

- Burden, N.; Clift, M.J.D.; Jenkins, G.J.S.; Labram, B.; Sewell, F. Opportunities and Challenges for Integrating New In Vitro Methodologies in Hazard Testing and Risk Assessment. Small 2021, 17, e2006298. [Google Scholar] [CrossRef] [PubMed]

- Groh, K.J.; Muncke, J. In Vitro Toxicity Testing of Food Contact Materials: State-of-the-Art and Future Challenges. Compr. Rev. Food Sci. Food Saf. 2017, 16, 1123–1150. [Google Scholar] [CrossRef] [PubMed]

- Guo, K.; Yang, Z.; Yu, C.H.; Buehler, M.J. Artificial intelligence and machine learning in design of mechanical materials. Mater. Horiz. 2021, 8, 1153–1172. [Google Scholar] [CrossRef]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Liu, Y.; Esan, O.C.; Pan, Z.; An, L. Machine learning for advanced energy materials. Energy AI 2021, 3, 100049. [Google Scholar] [CrossRef]

- Zhou, J.; Wang, Z.; Jiao, Y.; Nie, C. Material Discrimination Algorithm Based on Hyperspectral Image. Sci. Program. 2021, 2021, 8329974. [Google Scholar] [CrossRef]

- Di, D.; Shi, F.; Yan, F.; Xia, L.; Mo, Z.; Ding, Z.; Shan, F.; Song, B.; Li, S.; Wei, Y. Hypergraph learning for identification of COVID-19 with CT imaging. Med. Image Anal. 2021, 68, 101910. [Google Scholar] [CrossRef]

- Wang, H.; Li, C. Quality guided image recognition towards industrial materials diffusion. J. Vis. Commun. Image Represent. 2019, 64, 102608. [Google Scholar] [CrossRef]

- Moosavi, S.M.; Jablonka, K.M.; Smit, B. The Role of Machine Learning in the Understanding and Design of Materials. J. Am. Chem. Soc. 2020, 48, 20273–20287. [Google Scholar] [CrossRef]

- Tohidi, S.; Sharifi, Y. Load-carrying capacity of locally corroded steel plate girder ends using artificial neural network. Thin-Walled Struct. 2016, 100, 48–61. [Google Scholar] [CrossRef]

- Bhaduri, A.; Gupta, A.; Graham-Brady, L. Stress field prediction in fiber-reinforced composite materials using a deep learning approach. Compos. Part B Eng. 2022, 238, 109879. [Google Scholar] [CrossRef]

- Fang, Z.; Roy, K.; Mares, J.; Sham, C.-W.; Chen, B.; Lim, J.B.P. Deep learning-based axial capacity prediction for cold-formed steel channel sections using Deep Belief Network. Structures 2021, 33, 2792–2802. [Google Scholar] [CrossRef]

- Fang, Z.; Roy, K.; Chen, B.; Sham, C.-W.; Hajirasouliha, I.; Lim, J.B.P. Deep learning-based procedure for structural design of cold-formed steel channel sections with edge-stiffened and un-stiffened holes under axial compression. Thin-Walled Struct. 2021, 166, 108076. [Google Scholar] [CrossRef]

- Zhou, S.; Liu, S.; Chen, W.; Cheng, Y.; Fan, J.; Zhao, L.; Xiao, X.; Chen, Y.H.; Luo, C.X.; Wang, M.S.; et al. A “Biconcave-Alleviated” Strategy to Construct Aspergillus niger-Derived Carbon/MoS2 for Ultrastable Sodium Ion Storage. ACS Nano 2021, 15, 13814–13825. [Google Scholar] [CrossRef]

- Huang, X.L.; Wang, Y.-X.; Chou, S.-L.; Dou, S.X.; Wang, Z.M. Materials engineering for adsorption and catalysis in room-temperature Na–S batteries. Energy Environ. Sci. 2021, 14, 3757–3795. [Google Scholar] [CrossRef]

- Li, H.; Zhang, W.; Sun, K.; Guo, J.; Yuan, K.; Fu, J.; Zhang, T.; Zhang, X.; Long, H.; Zhang, Z.; et al. Manganese-Based Materials for Rechargeable Batteries beyond Lithium–Ion. Adv. Energy Mater. 2021, 11, 2100867. [Google Scholar] [CrossRef]

- Gao, Y.; Guo, Q.; Zhang, Q.; Cui, Y.; Zheng, Z. Fibrous Materials for Flexible Li–S Battery. Adv. Energy Mater. 2020, 11, 2002580. [Google Scholar] [CrossRef]

- Jin, C.; Nai, J.; Sheng, O.; Yuan, H.; Zhang, W.; Tao, X.; Lou, X.W. Biomass-based materials for green lithium secondary batteries. Energy Environ. Sci. 2021, 14, 1326–1379. [Google Scholar] [CrossRef]

- Ma, Q.; Zheng, Y.; Luo, D.; Or, T.; Liu, Y.; Yang, L.; Dou, H.; Liang, J.; Nie, Y.; Wang, X.; et al. 2D Materials for All-Solid-State Lithium Batteries. Adv. Mater. 2022, 34, e2108079. [Google Scholar] [CrossRef]

- Jin, H.; Sun, Y.; Sun, Z.; Yang, M.; Gui, R. Zero-dimensional sulfur nanomaterials: Synthesis, modifications and applications. Coord. Chem. Rev. 2021, 438, 213913. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, B.; Sun, J.; Hu, W.; Wang, H. Recent advances in porous nanostructures for cancer theranostics. Nano Today 2021, 38, 101146. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Yang, Y.; Xiong, Y.; Zhang, L.; Xu, W.; Duan, G.; Mei, C.; Jiang, S.; Rui, Z.; Zhang, K. Porous aerogel and sponge composites: Assisted by novel nanomaterials for electromagnetic interference shielding. Nano Today 2021, 38, 101204. [Google Scholar] [CrossRef]

- Ajdary, R.; Tardy, B.L.; Mattos, B.D.; Bai, L.; Rojas, O.J. Plant Nanomaterials and Inspiration from Nature: Water Interactions and Hierarchically Structured Hydrogels. Adv. Mater. 2021, 33, e2001085. [Google Scholar] [CrossRef] [PubMed]

- Glowniak, S.; Szczesniak, B.; Choma, J.; Jaroniec, M. Advances in Microwave Synthesis of Nanoporous Materials. Adv. Mater. 2021, 33, e2103477. [Google Scholar] [CrossRef]

- Sharma, S.; Sahu, B.K.; Cao, L.; Bindra, P.; Kaur, K.; Chandel, M.; Koratkar, N.; Huang, Q.; Shanmugam, V. Porous nanomaterials: Main vein of agricultural nanotechnology. Prog. Mater. Sci. 2021, 121, 100812. [Google Scholar] [CrossRef]

- John, R. What is material informatics. In Materials Informatics-Effective Data Management for New Materials Discovery; Knowledge Press: Boston, MA, USA, 1999. [Google Scholar]

- Curtarolo, S.; Hart, G.L.; Nardelli, M.B.; Mingo, N.; Sanvito, S.; Levy, O. The high-throughput highway to computational materials design. Nat. Mater. 2013, 12, 191–201. [Google Scholar] [CrossRef]

- Earl, D.J.; Deem, M.W. Toward a database of hypothetical zeolite structures. Ind. Eng. Chem. Res. 2006, 45, 5449–5454. [Google Scholar] [CrossRef]

- Yang, Z.; Li, X.; Catherine Brinson, L.; Choudhary, A.N.; Chen, W.; Agrawal, A. Microstructural materials design via deep adversarial learning methodology. J. Mech. Des. 2018, 140, 111416. [Google Scholar] [CrossRef]

- Wang, Z.; Smith, D.E. Finite element modelling of fully-coupled flow/fiber-orientation effects in polymer composite deposition additive manufacturing nozzle-extrudate flow. Compos. Part B Eng. 2021, 219, 108811. [Google Scholar] [CrossRef]

- Yu, C.-H.; Qin, Z.; Buehler, M.J. Artificial intelligence design algorithm for nanocomposites optimized for shear crack resistance. Nano Futures 2019, 3, 035001. [Google Scholar] [CrossRef]

- Gu, G.X.; Chen, C.-T.; Richmond, D.J.; Buehler, M.J. Bioinspired hierarchical composite design using machine learning: Simulation, additive manufacturing, and experiment. Mater. Horiz. 2018, 5, 939–945. [Google Scholar] [CrossRef]

- Gu, G.X.; Chen, C.-T.; Buehler, M.J. De novo composite design based on machine learning algorithm. Extrem. Mech. Lett. 2018, 18, 19–28. [Google Scholar] [CrossRef]

- Lv, Z.; Yue, M.; Ling, M.; Zhang, H.; Yan, J.; Zheng, Q.; Li, X. Controllable Design Coupled with Finite Element Analysis of Low-Tortuosity Electrode Architecture for Advanced Sodium-Ion Batteries with Ultra-High Mass Loading. Adv. Energy Mater. 2021, 11, 2003725. [Google Scholar] [CrossRef]

- Haq, M.R.u.; Nazir, A.; Jeng, J.-Y. Design for additive manufacturing of variable dimension wave springs analyzed using experimental and finite element methods. Addit. Manuf. 2021, 44, 102032. [Google Scholar] [CrossRef]

- Song, S.; Zhu, M.; Xiong, Y.; Wen, Y.; Nie, M.; Meng, X.; Zheng, A.; Yang, Y.; Dai, Y.; Sun, L.; et al. Mechanical Failure Mechanism of Silicon-Based Composite Anodes under Overdischarging Conditions Based on Finite Element Analysis. ACS Appl. Mater. Interfaces 2021, 13, 34157–34167. [Google Scholar] [CrossRef]

- Jin, C.; Zhang, W.; Liu, P.; Yang, X.; Oeser, M. Morphological simplification of asphaltic mixture components for micromechanical simulation using finite element method. Comput. Aided Civ. Infrastruct. Eng. 2021, 36, 1435–1452. [Google Scholar] [CrossRef]

- Lyngdoh, G.A.; Kelter, N.-K.; Doner, S.; Krishnan, N.M.A.; Das, S. Elucidating the auxetic behavior of cementitious cellular composites using finite element analysis and interpretable machine learning. Mater. Des. 2022, 213, 110341. [Google Scholar] [CrossRef]

- Cao, Y.; Lin, X.; Kang, N.; Ma, L.; Wei, L.; Zheng, M.; Yu, J.; Peng, D.; Huang, W. A novel high-efficient finite element analysis method of powder bed fusion additive manufacturing. Addit. Manuf. 2021, 46, 102187. [Google Scholar] [CrossRef]

- Gholami, M.; Afrasiab, H.; Baghestani, A.M.; Fathi, A. A novel multiscale parallel finite element method for the study of the hygrothermal aging effect on the composite materials. Compos. Sci. Technol. 2022, 217, 109120. [Google Scholar] [CrossRef]

- Nivelle, P.; Tsanakas, J.A.; Poortmans, J.; Daenen, M. Stress and strain within photovoltaic modules using the finite element method: A critical review. Renew. Sustain. Energy Rev. 2021, 145, 111022. [Google Scholar] [CrossRef]

- Yang, Y.; Kingan, M.J.; Mace, B.R. A wave and finite element method for calculating sound transmission through rectangular panels. Mech. Syst. Signal. Process. 2021, 151, 107357. [Google Scholar] [CrossRef]

- Wang, S.; Celebi, M.E.; Zhang, Y.-D.; Yu, X.; Lu, S.; Yao, X.; Zhou, Q.; Miguel, M.-G.; Tian, Y.; Gorriz, J.M. Advances in data preprocessing for biomedical data fusion: An overview of the methods, challenges, and prospects. Inf. Fusion 2021, 76, 376–421. [Google Scholar] [CrossRef]

- Pedroni, A.; Bahreini, A.; Langer, N. Automagic: Standardized preprocessing of big EEG data. NeuroImage 2019, 200, 460–473. [Google Scholar] [CrossRef]

- Liu, W.; Ford, P.; Uvegi, H.; Margarido, F.; Santos, E.; Ferrão, P.; Olivetti, E. Economics of materials in mobile phone preprocessing, focus on non-printed circuit board materials. Waste Manag. 2019, 87, 78–85. [Google Scholar] [CrossRef]

- Mishra, P.; Biancolillo, A.; Roger, J.M.; Marini, F.; Rutledge, D.N. New data preprocessing trends based on ensemble of multiple preprocessing techniques. TrAC Trends Anal. Chem. 2020, 132, 116045. [Google Scholar] [CrossRef]

- Kojima, T.; Washio, T.; Hara, S.; Koishi, M. Synthesis of computer simulation and machine learning for achieving the best material properties of filled rubber. Sci. Rep. 2020, 10, 18127. [Google Scholar] [CrossRef]

- Baysal, M.; Günay, M.E.; Yıldırım, R. Decision tree analysis of past publications on catalytic steam reforming to develop heuristics for high performance: A statistical review. Int. J. Hydrogen Energy 2017, 42, 243–254. [Google Scholar] [CrossRef]

- Dunn, A.; Wang, Q.; Ganose, A.; Dopp, D.; Jain, A. Benchmarking materials property prediction methods: The Matbench test set and Automatminer reference algorithm. Npj Comput. Mater. 2020, 6, 138. [Google Scholar] [CrossRef]

- Carleo, G.; Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 2017, 355, 602–606. [Google Scholar] [CrossRef]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489. [Google Scholar] [CrossRef]

- Zou, X.; Pan, J.; Sun, Z.; Wang, B.; Jin, Z.; Xu, G.; Yan, F. Machine learning analysis and prediction models of alkaline anion exchange membranes for fuel cells. Energy Environ. Sci. 2021, 14, 3965–3975. [Google Scholar] [CrossRef]

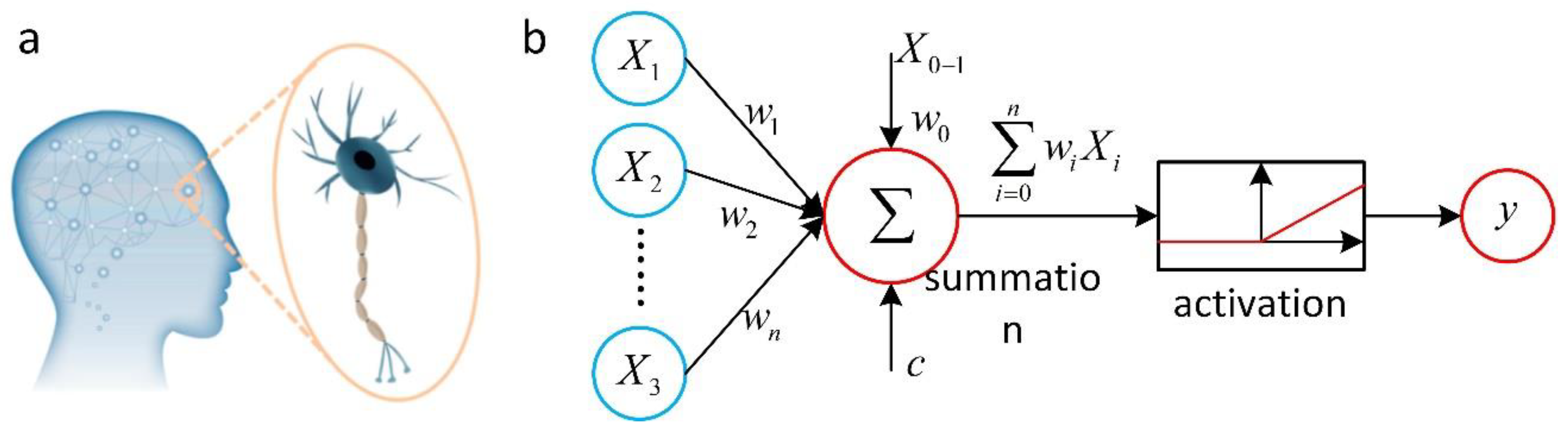

- Hong, Y.; Hou, B.; Jiang, H.; Zhang, J. Machine learning and artificial neural network accelerated computational discoveries in materials science. WIREs Comput. Mol. Sci. 2019, 10, e1450. [Google Scholar] [CrossRef]

- Shi, L.; Zhang, S.; Arshad, A.; Hu, Y.; He, Y.; Yan, Y. Thermo-physical properties prediction of carbon-based magnetic nanofluids based on an artificial neural network. Renew. Sustain. Energy Rev. 2021, 149, 111341. [Google Scholar] [CrossRef]

- Kim, C.; Lee, J.-Y.; Kim, M. Prediction of the Dynamic Stiffness of Resilient Materials using Artificial Neural Network (ANN) Technique. Appl. Sci. 2019, 9, 1088. [Google Scholar] [CrossRef]

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural. Comput. 2019, 31, 1235–1270. [Google Scholar] [CrossRef]

- Tran, T.T.K.; Bateni, S.M.; Ki, S.J.; Vosoughifar, H. A review of neural networks for air temperature forecasting. Water 2021, 13, 1294. [Google Scholar] [CrossRef]

- Chen, L.; Li, S.; Bai, Q.; Yang, J.; Jiang, S.; Miao, Y. Review of image classification algorithms based on convolutional neural networks. Remote Sens. 2021, 13, 4712. [Google Scholar] [CrossRef]

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Umar, A.M.; Linus, O.U.; Arshad, H.; Kazaure, A.A.; Gana, U.; Kiru, M.U. Comprehensive review of artificial neural network applications to pattern recognition. IEEE Access 2019, 7, 158820–158846. [Google Scholar] [CrossRef]

- Vargas-Hákim, G.A.; Mezura-Montes, E.; Acosta-Mesa, H.-G. A Review on Convolutional Neural Networks Encodings for Neuroevolution. IEEE Trans. Evol. Comput. 2021, 26, 12–27. [Google Scholar] [CrossRef]

- Lee, S.; Kim, B.; Kim, J. Predicting performance limits of methane gas storage in zeolites with an artificial neural network. J. Mater. Chem. A 2019, 7, 2709–2716. [Google Scholar] [CrossRef]

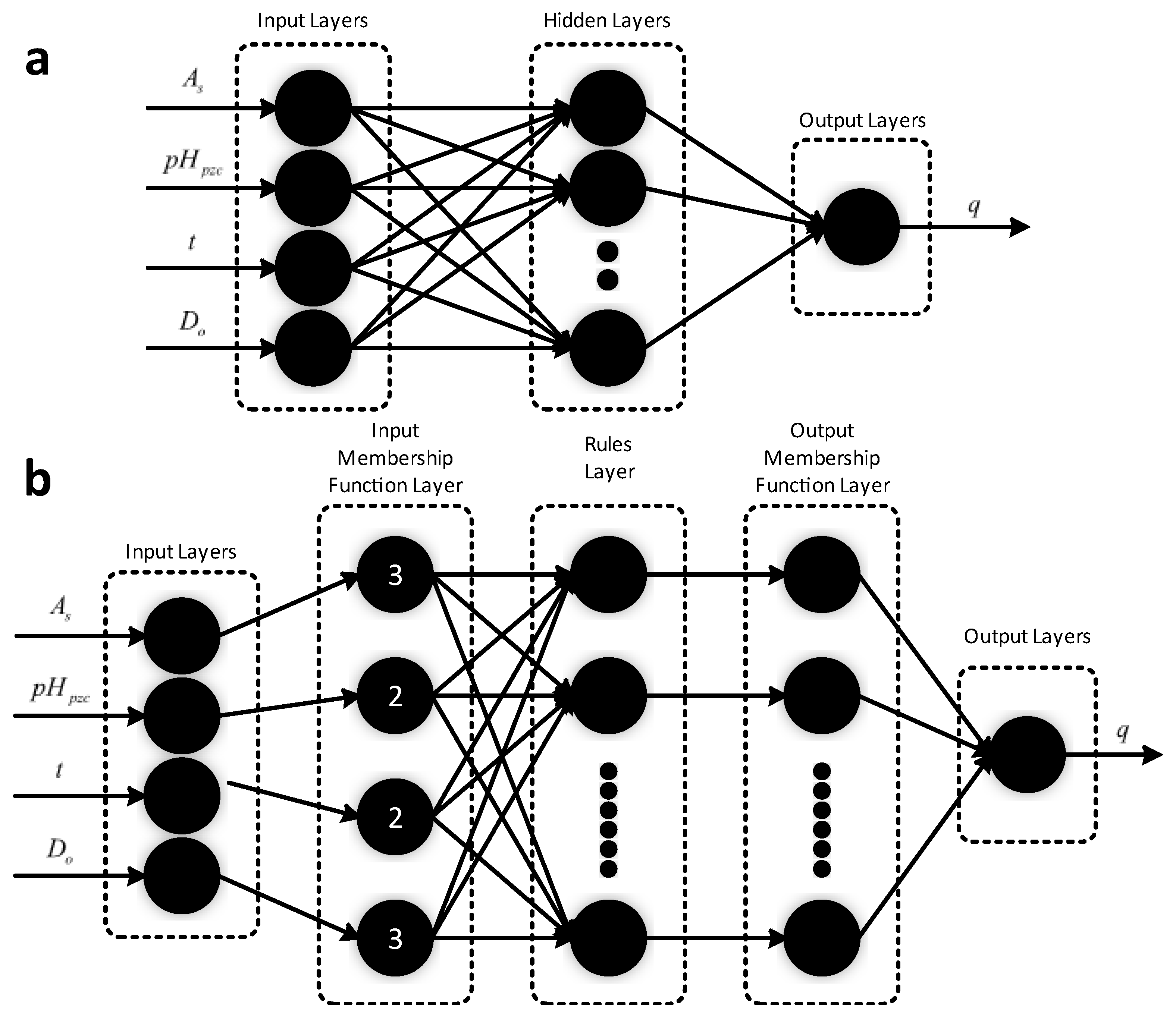

- Franco, D.S.P.; Duarte, F.A.; Salau, N.P.G.; Dotto, G.L. Analysis of indium (III) adsorption from leachates of LCD screens using artificial neural networks (ANN) and adaptive neuro-fuzzy inference systems (ANIFS). J. Hazard. Mater. 2020, 384, 121137. [Google Scholar] [CrossRef] [PubMed]

- Franco, D.S.; Duarte, F.A.; Salau, N.P.G.; Dotto, G.L. Adaptive neuro-fuzzy inference system (ANIFS) and artificial neural network (ANN) applied for indium (III) adsorption on carbonaceous materials. Chem. Eng. Commun. 2019, 206, 1452–1462. [Google Scholar] [CrossRef]

- Zhang, L.; Wei, Z.; Yao, S.; Gao, Y.; Jin, X.; Chen, G.; Shen, Z.; Du, F. Polymorph Engineering for Boosted Volumetric Na-Ion and Li-Ion Storage. Adv. Mater. 2021, 33, e2100210. [Google Scholar] [CrossRef]

- Wei, Y.; ZhaoPing, L.; WeiKang, B.; Hui, X.; ZeSheng, Y.; HaiJun, J. Light, strong, and stable nanoporous aluminum with native oxide shell. Sci. Adv. 2021, 28, eabb9471. [Google Scholar] [CrossRef]

- Zhang, X.; Qiao, J.; Jiang, Y.; Wang, F.; Tian, X.; Wang, Z.; Wu, L.; Liu, W.; Liu, J. Carbon-Based MOF Derivatives: Emerging Efficient Electromagnetic Wave Absorption Agents. Nanomicro Lett. 2021, 13, 135. [Google Scholar] [CrossRef]

- Kim, Y.R.; Lee, T.W.; Park, S.; Jang, J.; Ahn, C.W.; Choi, J.J.; Hahn, B.D.; Choi, J.H.; Yoon, W.H.; Bae, S.H.; et al. Supraparticle Engineering for Highly Dense Microspheres: Yttria-Stabilized Zirconia with Adjustable Micromechanical Properties. ACS Nano 2021, 15, 10264–10274. [Google Scholar] [CrossRef]

- Li, X.; Yu, X.; Chua, J.W.; Lee, H.P.; Ding, J.; Zhai, W. Microlattice Metamaterials with Simultaneous Superior Acoustic and Mechanical Energy Absorption. Small 2021, 17, e2100336. [Google Scholar] [CrossRef]

- Leiping, L.; Degang, J.; Kun, Z.; Maozhuang, Z.; Jingquan, L. Industry-Scale and Environmentally Stable Ti3C2Tx MXene Based Film for Flexible Energy Storage Devices. Adv. Funct. Mater. 2021, 35, 2103960. [Google Scholar] [CrossRef]

- Hu, L.; Zhong, Y.; Wu, S.; Wei, P.; Huang, J.; Xu, D.; Zhang, L.; Ye, Q.; Cai, J. Biocompatible and biodegradable super-toughness regenerated cellulose via water molecule-assisted molding. Chem. Eng. J. 2021, 417, 129229. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, Y.; Anankanbil, S.; Guo, Z. Applications of nanocellulosic products in food: Manufacturing processes, structural features and multifaceted functionalities. Trends Food Sci. Technol. 2021, 113, 277–300. [Google Scholar] [CrossRef]

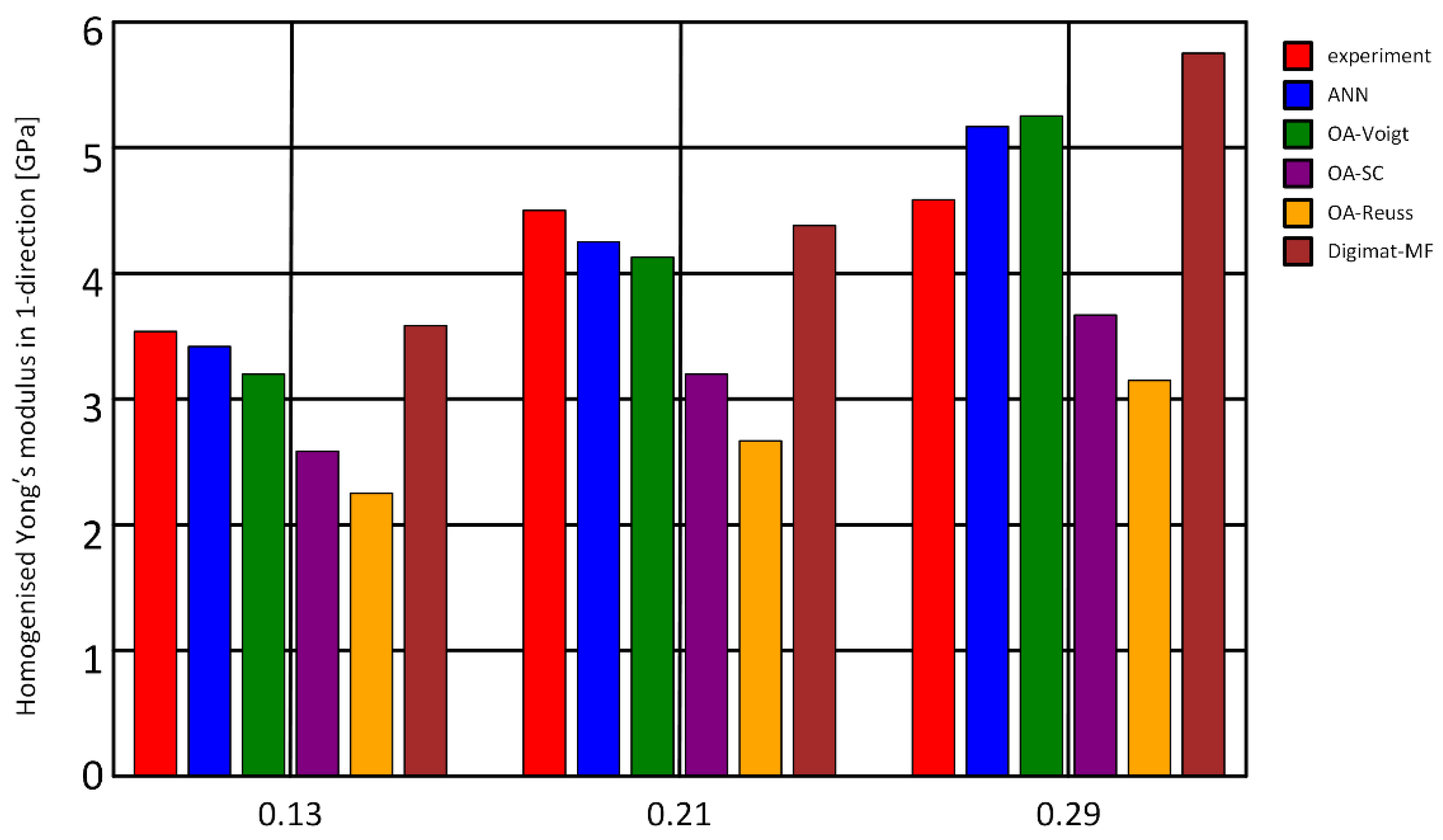

- Mentges, N.; Dashtbozorg, B.; Mirkhalaf, S.M. A micromechanics-based artificial neural networks model for elastic properties of short fiber composites. Compos. Part B Eng. 2021, 213, 108736. [Google Scholar] [CrossRef]

- Mirkhalaf, S.; Eggels, E.; van Beurden, T.; Larsson, F.; Fagerström, M. A finite element based orientation averaging method for predicting elastic properties of short fiber reinforced composites. Compos. Part B Eng. 2020, 202, 108388. [Google Scholar] [CrossRef]

- Aycan Dumenci, N.; Cagcag Yolcu, O.; Aydin Temel, F.; Turan, N.G. Identifying the maturity of co-compost of olive mill waste and natural mineral materials: Modelling via ANN and multi-objective optimization. Bioresour. Technol. 2021, 338, 125516. [Google Scholar] [CrossRef]

- Devaraj, T.; Aathika, S.; Mani, Y.; Jagadiswary, D.; Evangeline, S.J.; Dhanasekaran, A.; Palaniyandi, S.; Subramanian, S. Application of Artificial Neural Network as a nonhazardous alternative on kinetic analysis and modeling for green synthesis of cobalt nanocatalyst from Ocimum tenuiflorum. J. Hazard. Mater. 2021, 416, 125720. [Google Scholar] [CrossRef]

- Zhang, Y.; Mesaros, A.; Fujita, K.; Edkins, S.D.; Hamidian, M.H.; Ch’ng, K.; Eisaki, H.; Uchida, S.; Davis, J.C.S.; Khatami, E.; et al. Machine learning in electronic-quantum-matter imaging experiments. Nature 2019, 570, 484–490. [Google Scholar] [CrossRef]

- Liu, B.; Vu-Bac, N.; Zhuang, X.; Fu, X.; Rabczuk, T. Stochastic integrated machine learning based multiscale approach for the prediction of the thermal conductivity in carbon nanotube reinforced polymeric composites. Compos. Sci. Technol. 2022, 224, 109425. [Google Scholar] [CrossRef]

- Xu, D.; Hui, Z.; Liu, Y.; Chen, G. Morphing control of a new bionic morphing UAV with deep reinforcement learning. Aerosp. Sci. Technol. 2019, 92, 232–243. [Google Scholar] [CrossRef]

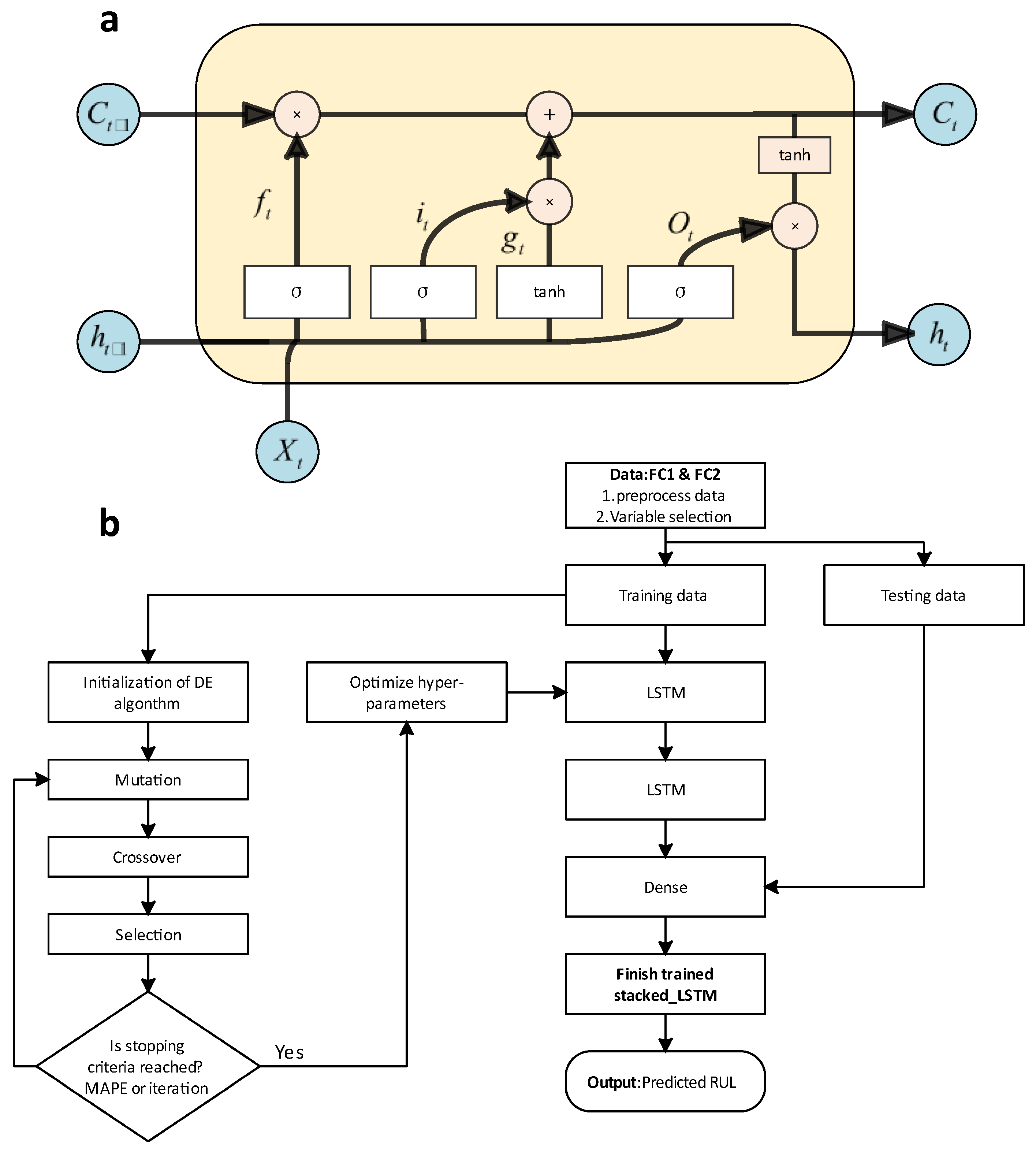

- Wang, F.-K.; Cheng, X.-B.; Hsiao, K.-C. Stacked long short-term memory model for proton exchange membrane fuel cell systems degradation. J. Power Sources 2020, 448, 227591. [Google Scholar] [CrossRef]

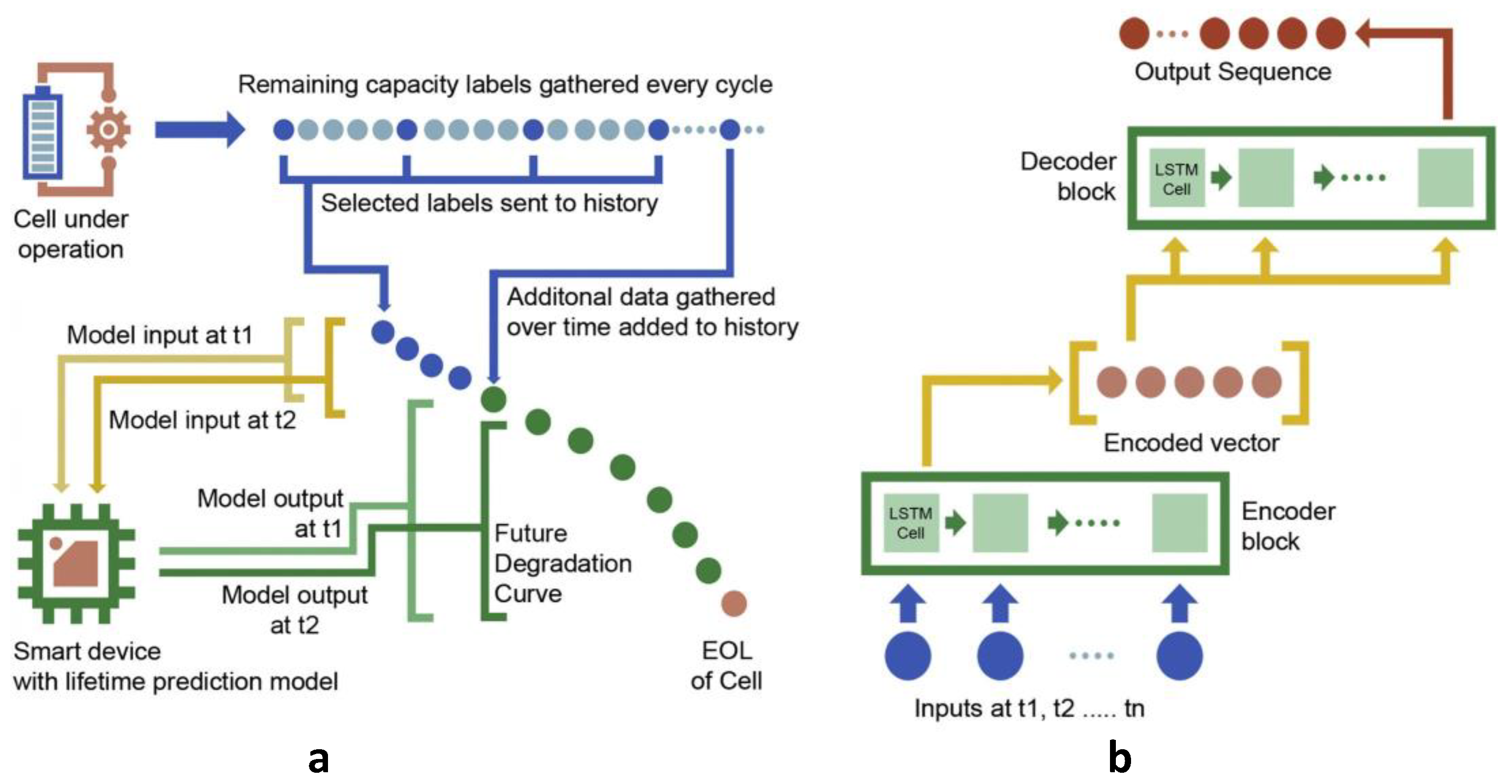

- Li, W.; Sengupta, N.; Dechent, P.; Howey, D.; Annaswamy, A.; Sauer, D.U. One-shot battery degradation trajectory prediction with deep learning. J. Power Sources 2021, 506, 230024. [Google Scholar] [CrossRef]

- Navidpour, A.H.; Hosseinzadeh, A.; Huang, Z.; Li, D.; Zhou, J.L. Application of machine learning algorithms in predicting the photocatalytic degradation of perfluorooctanoic acid. Catal. Rev. 2022, 64, 1–26. [Google Scholar] [CrossRef]

- Thike, P.H.; Zhao, Z.; Shi, P.; Jin, Y. Significance of artificial neural network analytical models in materials’ performance prediction. Bull. Mater. Sci. 2020, 43, 211. [Google Scholar] [CrossRef]

- Yang, L.; Xu, M.; Guo, Y.; Deng, X.; Gao, F.; Guan, Z. Hierarchical Bayesian LSTM for Head Trajectory Prediction on Omnidirectional Images. IEEE Trans. Pattern Anal. Mach. Intell. 2021. [Google Scholar] [CrossRef] [PubMed]

- Zhang, P.; Xue, J.; Zhang, P.; Zheng, N.; Ouyang, W. Social-aware pedestrian trajectory prediction via states refinement LSTM. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2742–2759. [Google Scholar] [CrossRef]

- Ma, Y.; Guo, Z.; Xia, B.; Zhang, Y.; Liu, X.; Yu, Y.; Tang, N.; Tong, X.; Wang, M.; Ye, X. Identification of antimicrobial peptides from the human gut microbiome using deep learning. Nat. Biotechnol. 2022, 40, 921–931. [Google Scholar] [CrossRef]

- Zhang, T.; Zheng, W.; Cui, Z.; Zong, Y.; Li, Y. Spatial–temporal recurrent neural network for emotion recognition. IEEE Trans. Cybern. 2018, 49, 839–847. [Google Scholar] [CrossRef]

- Ma, Q.; Li, S.; Cottrell, G. Adversarial joint-learning recurrent neural network for incomplete time series classification. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1765–1776. [Google Scholar] [CrossRef]

- Shu, X.; Zhang, L.; Qi, G.-J.; Liu, W.; Tang, J. Spatiotemporal co-attention recurrent neural networks for human-skeleton motion prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3300–3315. [Google Scholar] [CrossRef]

- Pu, G.; Men, Y.; Mao, Y.; Jiang, Y.; Ma, W.-Y.; Lian, Z. Controllable Image Synthesis with Attribute-Decomposed GAN. IEEE Trans. Pattern Anal. Mach. Intell. 2022. [Google Scholar] [CrossRef]

- Tang, H.; Shao, L.; Torr, P.H.; Sebe, N. Local and Global GANs with Semantic-Aware Upsampling for Image Generation. IEEE Trans. Pattern Anal. Mach. Intell. 2022. [Google Scholar] [CrossRef] [PubMed]

- Zhu, J.; Zhao, D.; Zhang, B.; Zhou, B. Disentangled inference for gans with latently invertible autoencoder. Int. J. Comput. Vis. 2022, 130, 1259–1276. [Google Scholar] [CrossRef]

- Nelson, T.R.; White, A.J.; Bjorgaard, J.A.; Sifain, A.E.; Zhang, Y.; Nebgen, B.; Fernandez-Alberti, S.; Mozyrsky, D.; Roitberg, A.E.; Tretiak, S. Non-adiabatic Excited-State Molecular Dynamics: Theory and Applications for Modeling Photophysics in Extended Molecular Materials. Chem. Rev. 2020, 120, 2215–2287. [Google Scholar] [CrossRef]

- Wang, J.; Arantes, P.R.; Bhattarai, A.; Hsu, R.V.; Pawnikar, S.; Huang, Y.M.; Palermo, G.; Miao, Y. Gaussian accelerated molecular dynamics (GaMD): Principles and applications. Wiley Interdiscip. Rev. Comput. Mol. Sci. 2021, 11, e1521. [Google Scholar] [CrossRef]

- Damjanovic, J.; Miao, J.; Huang, H.; Lin, Y.S. Elucidating Solution Structures of Cyclic Peptides Using Molecular Dynamics Simulations. Chem. Rev. 2021, 121, 2292–2324. [Google Scholar] [CrossRef] [PubMed]

- Sun, Y.; Yang, T.; Ji, H.; Zhou, J.; Wang, Z.; Qian, T.; Yan, C. Boosting the Optimization of Lithium Metal Batteries by Molecular Dynamics Simulations: A Perspective. Adv. Energy Mater. 2020, 10, 2002373. [Google Scholar] [CrossRef]

- Janesko, B.G. Replacing hybrid density functional theory: Motivation and recent advances. Chem. Soc. Rev. 2021, 50, 8470–8495. [Google Scholar] [CrossRef]

- Zhang, I.Y.; Xu, X. On the top rung of Jacob’s ladder of density functional theory: Toward resolving the dilemma of SIE and NCE. WIREs Comput. Mol. Sci. 2020, 11, e1490. [Google Scholar] [CrossRef]

- Wang, Z.; Wu, C.; Liu, W. NAC-TDDFT: Time-Dependent Density Functional Theory for Nonadiabatic Couplings. Acc. Chem. Res. 2021, 54, 3288–3297. [Google Scholar] [CrossRef]

- Liao, X.; Lu, R.; Xia, L.; Liu, Q.; Wang, H.; Zhao, K.; Wang, Z.; Zhao, Y. Density Functional Theory for Electrocatalysis. Energy Environ. Mater. 2021, 5, 157–185. [Google Scholar] [CrossRef]

- Hammes-Schiffer, S. A conundrum for density functional theory. Science 2017, 6320, 28–29. [Google Scholar] [CrossRef]

- Zhu, J.; Ge, Z.; Song, Z.; Gao, F. Review and big data perspectives on robust data mining approaches for industrial process modeling with outliers and missing data. Annu. Rev. Control 2018, 46, 107–133. [Google Scholar] [CrossRef]

- Fu, J.; Zhang, Y.; Liu, J.; Lian, X.; Tang, J.; Zhu, F. Pharmacometabonomics: Data processing and statistical analysis. Brief. Bioinform. 2021, 22, bbab138. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Huang, G.; Song, S.; Pan, X.; Xia, Y.; Wu, C. Regularizing deep networks with semantic data augmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3733–3748. [Google Scholar] [CrossRef] [PubMed]

| Database | Material Categories | Available Data | URL |
|---|---|---|---|
| MatWeb | Metals, plastics, ceramics and composites | Tensile strength, breaking strength, Vicat softening point, etc. | https://www.matweb.com/search/PropertySearch.aspx (accessed on 13 July 2022) |
| NIST | Metals, polymers, etc. | Thermochemical, thermophysical and ion energetics data | http://webbook.nist.gov/chemistry/name-ser.html (accessed on 13 July 2022) |
| AZO Materials | Alloys, rubber, plastics, etc. | Mechanical strength, elements, molecular weight, etc. | https://www.azom.com/materials-engineering-directory.aspx (accessed on 13 July 2022) |
| M-Base Company | Polymers | Tensile modulus, yield stress and strain, density, molding shrinkage, etc. | https://www.materialdatacenter.com/mb/ (accessed on 13 July 2022) |
| Ceramic Industry | Ceramics | Forming method, sintering process/temperature, tensile strength, bulk resistivity, dielectric strength, elastic modulus, etc. | http://ceramicindustry.com/directories/2718-ceramic-components-directory/ (accessed on 13 July 2022) |
| NIST Material Measurement Laboratory | Materials | Phase diagrams, thermodynamic and kinetic parameters, atomic spectra, physical parameters, etc. | https://www.nist.gov/mml (accessed on 13 July 2022) |
| PoLyInfo | Polymers | Chemical formula, material type, physical properties | http://polymer.nims.go.jp/ (accessed on 13 July 2022) |
| CAMPUS | Plastics | Molding shrinkage, breaking strength, Vicat softening point, structure, etc. | http://www.campusplastics.com/ (accessed on 13 July 2022) |
| Cole-Parmer | Materials | Chemical compatibility | http://www.coleparmer.com/techinfo/chemcomp.asp (accessed on 13 July 2022) |
| The Materials Project | Materials | Chemical formula, material type, physical properties, etc. | https://materialsproject.org/ (accessed on 13 July 2022) |
| crystalstar | Crystals | Crystal structures of organic, inorganic and metal-organic compounds and minerals | http://www.crystalstar.org/ (accessed on 13 July 2022) |
| FactSage Database | Materials | Phase diagrams, thermodynamic and kinetic parameters | http://www.crct.polymtl.ca/ (accessed on 13 July 2022) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fu, Z.; Liu, W.; Huang, C.; Mei, T. A Review of Performance Prediction Based on Machine Learning in Materials Science. Nanomaterials 2022, 12, 2957. https://doi.org/10.3390/nano12172957