2.1. Conceptual Architecture

The MyOGC integrated system provides an end-to-end automated solution for detecting Verticillium wilt from aerial multispectral images. The basic user requirement for the MyOGC platform is to support different methods of data insertion: manually by the user or directly from the multispectral sensor mounted on the drone. Thus, it combines cloud and edge computing technologies, providing an efficient and scalable system for demanding data processing in the cloud, together with the execution of adapted AI prediction models on an embedded platform in the user’s edge devices. The functional modules of the MyOGC platform are depicted in Figure 2.

The MyOGC system consists of four main subsystems: (a) the core, which has a coordination role: it provides the interfaces to the users and edge devices, and it accepts and schedules data processing requests for execution in the other subsystems; (b) the data storage, which combines a classical relational database management system (RDBMS) and a file system to store metadata, multispectral images and results; (c) the containers execution engine, which initiates containers that execute specific data processing tasks during a data processing pipeline; and (d) the drone, which hosts the edge device, a Coral Edge TPU device from Google, deployed for executing region-of-interest detection and classification tasks.

In the core subsystem, the process orchestrator is the module that receives input data and requests for processing. A request can be either to process multispectral images of an olive field and predict the spread of the disease in it, or to use the stored data to train the AI prediction models (both cloud and embedded). According to the request, the orchestrator selects the appropriate analysis workflow, calculates the required resources and creates the execution plan. The plan contains the data processing microservices that must be used and a workflow that defines the execution order of the analysis tasks. The process orchestrator coordinates and monitors the analysis workflow, initiating each step and passing the intermediate results between the tasks.
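The orchestration logic described above can be sketched roughly as follows. This is a minimal illustration, not the MyOGC implementation: the request types, the step names, the order of the steps and the callable-based service interface are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical workflows: the source names four microservices but does not
# specify which ones each request type uses, or in what order.
WORKFLOWS: Dict[str, List[str]] = {
    "predict": ["crown_detection", "vegetation_indices", "disease_prediction"],
    "train": ["crown_detection", "vegetation_indices", "model_training"],
}


@dataclass
class ExecutionPlan:
    """Ordered list of microservice steps selected for one request."""
    request_type: str
    steps: List[str]


def create_plan(request_type: str) -> ExecutionPlan:
    """Select the workflow that matches the request type."""
    if request_type not in WORKFLOWS:
        raise ValueError(f"unknown request type: {request_type!r}")
    return ExecutionPlan(request_type, list(WORKFLOWS[request_type]))


def run_plan(plan: ExecutionPlan,
             services: Dict[str, Callable[[Any], Any]],
             payload: Any) -> Any:
    """Run each step in order, passing the intermediate result to the next."""
    result = payload
    for step in plan.steps:
        result = services[step](result)
    return result
```

In this sketch each microservice is modelled as a plain Python callable; in a deployment such as the one described, each call would instead be a request to a containerized service.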

The two interfaces of the core subsystem are a graphical user interface (GUI) and an HTTP-based application programming interface (API). The GUI is the users’ point of interaction with the system. It is implemented using the Python Django framework, with the AngularJS library for the frontend. The user can define fields, upload new multispectral images of a field and request processing, while the results are displayed on an interactive geographic map. The HTTP API is mainly used for interoperability between the cloud platform and the edge device embedded in the drone. It uses the GET and POST methods to invoke operations that support various tasks, such as image uploading, downloading newly trained AI models, image processing execution and prediction uploading.

The data storage, as mentioned before, is the centralized subsystem responsible for securely storing all the data of the MyOGC integrated system. An RDBMS is used for storing user and field metadata, pre-processed data, device connection information, prediction results, etc. The file system, on the other hand, is used to save binary files, such as the input and processed images and the trained AI prediction models.

The containers execution environment takes advantage of virtual container technology, providing on-demand data processing functionality in a cloud infrastructure. Each container is independent in terms of computational resources and performs a specific data analysis task, following the microservices architectural model [28]. There are four microservices in the MyOGC architecture: (a) the tree crown detection, (b) the vegetation indices calculation, (c) the AI prediction of the presence and spread of Verticillium wilt and (d) the AI prediction model training. These microservices run independently and execute specific tasks, which are invoked as services by the process orchestrator. The container orchestrator’s main role is to instantiate the appropriate containers so that they are available for the execution of an analysis task. It executes a credit-based algorithm [29] for scheduling the instantiation of the containers according to the number of user requests and the available computational resources of the cloud infrastructure. This approach ensures both the scalability and the reuse of the cloud resources, so that on-demand user requests are served efficiently.

Finally, the drone subsystem aims to bring the intelligence provided by the AI prediction models close to the user’s main device. In MyOGC, a drone with a multispectral camera is used to capture the aerial image datasets. These datasets contain overlapping images that can be merged to create a reflectance map, i.e., a mosaic of the area of interest in which each pixel represents the actual reflectance of the imaged object; this map is used for plant health analysis and the detection of Verticillium wilt in olive trees. The classic procedure is to upload the images to the MyOGC platform for further processing and algorithmic analysis. The MyOGC system provides an additional feature: an embedded board with GPU capabilities is installed alongside the camera on the drone. A compact version of the AI prediction models is installed on the embedded board, which is able to perform the data analysis on the spot. The results are sent to the MyOGC platform for presentation to the user.

2.2. Multimodal Processing Approaches

Plant leaves contain information which is highly associated with their health. Optical leaf properties such as reflectance and transmittance are useful in remote sensing techniques for disease detection. They allow early detection of disease, well before symptoms can be perceived by the human eye, in a non-invasive manner.

In assessing a plant’s health, the most basic and common metric used is the reflectance of vegetation, i.e., the ratio of the reflected radiation to the incident radiation. The assumption is that the reflectance of vegetation at a certain electromagnetic wavelength, or spectral reflectance, depends on properties of the vegetation such as the type of each plant, its water content, its chlorophyll content and its morphology [30]. However, there may be a need to compare measurements that are more closely related to biophysical variables than to the spectral reflectance itself. For this reason, vegetation indices are often calculated. A vegetation index is obtained by combining two or more wavelength bands in an equation. In addition, vegetation indices can help minimize problems related to reflectance data, such as changes in viewing angles, atmospheric distortions and shadows, especially as most vegetation indices are calculated as ratios of two or more wavelength bands [30,31]. Different vegetation indices use different wavelength bands and provide information on different biophysical variables [32]. For example, one of the most commonly used indices is the Normalized Difference Vegetation Index (NDVI) [6,33]. NDVI uses the wavelength band corresponding to red (RED, 670 nm), which is absorbed to a very large extent by the chlorophyll in the foliage of the plants, and the wavelength band corresponding to the near-infrared (NIR, 800 nm), in which the chlorophyll shows the most intense reflection (Equation (1)). NDVI values range from −1 to 1, with the values closest to 1 corresponding to healthier and denser vegetation. NDVI can be calculated using reflectance or non-physical measurements for the wavelength bands.
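As an illustration of the pixel-by-pixel calculation, the standard NDVI definition, (NIR − RED) / (NIR + RED), can be sketched with NumPy as below. The function name, the `eps` parameter and the choice to return 0 where both bands are zero are assumptions of this example, not details taken from MyOGC.

```python
import numpy as np


def ndvi(nir, red, eps=1e-12):
    """Per-pixel NDVI = (NIR - RED) / (NIR + RED).

    Pixels where NIR + RED is (numerically) zero are undefined; this sketch
    returns 0 for them, which is an arbitrary convention.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    undefined = np.abs(denom) < eps
    safe_denom = np.where(undefined, 1.0, denom)  # avoid division by zero
    return np.where(undefined, 0.0, (nir - red) / safe_denom)
```

For non-negative band values the result always lies in the range from −1 to 1, in agreement with the range stated above.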

Other examples of vegetation indices that can provide biophysical information are the normalized difference red edge (NDRE) index, which is calculated using the near-infrared and red-edge (REG, 750 nm) wavelength bands, and the green exceedance index (GEI), which is calculated using the red, blue and green wavelength bands. Research on GEI showed that the measured gross primary productivity of a deciduous forest was significantly correlated with GEI. Thus, a specialized vegetation index can be used as a proxy for measurable biophysical variables that are important when evaluating the phenology of a particular site or plant.
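The two indices can be sketched in the same way. The formulas are not given in the text, so the commonly cited forms are assumed here: NDRE = (NIR − REG) / (NIR + REG) and GEI = 2·GREEN − (RED + BLUE). The function names and the zero-denominator convention are choices of this example.

```python
import numpy as np


def ndre(nir, reg, eps=1e-12):
    """Per-pixel NDRE = (NIR - REG) / (NIR + REG); returns 0 where undefined."""
    nir = np.asarray(nir, dtype=float)
    reg = np.asarray(reg, dtype=float)
    denom = nir + reg
    undefined = np.abs(denom) < eps
    safe_denom = np.where(undefined, 1.0, denom)  # avoid division by zero
    return np.where(undefined, 0.0, (nir - reg) / safe_denom)


def gei(red, green, blue):
    """Per-pixel GEI in its commonly cited form: 2*GREEN - (RED + BLUE)."""
    red = np.asarray(red, dtype=float)
    green = np.asarray(green, dtype=float)
    blue = np.asarray(blue, dtype=float)
    return 2.0 * green - (red + blue)
```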

Calculation of vegetation indices is usually done on a pixel-by-pixel basis and is, therefore, very sensitive to even slight image distortions. In order to measure the real changes in biochemical and physiological parameters of vegetation, the collected multispectral data have to be geometrically and radiometrically aligned, calibrated and corrected, so as to ensure that corresponding pixels in two images represent the same soil characteristics and the same soil point. Thus, a crucial part of MyOGC is the correct design and implementation of appropriate geometric transformations and spatio-temporal image filters, which typically include algorithms for image registration and alignment, image stitching, creation of orthomosaics with photogrammetry techniques, spectral and luminosity corrections and noise filtering. Classical computer vision techniques are, in most cases, adequate for the implementation of these processes. Image registration, though, usually requires many resources, especially when, as in our case, there are significant differences between the input images. To align them efficiently and accurately, we process them successively in pairs, based on the similarity of the spectral response of each band: RED and GRE; GRE and REG; and finally, REG and NIR. We then combine the successive pairs with motion homography. This yields satisfactory results. In our case, this process was found to be much quicker and more accurate than the techniques employed by the other tools that were tried (Table 4), but a detailed analysis of its performance is needed to confirm any benefits.
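The idea of combining successive band pairs can be illustrated by chaining pairwise homographies. The sketch below assumes that a 3×3 homography has already been estimated for each pair (RED to GRE, GRE to REG, REG to NIR); how MyOGC estimates them is not described here, and the function names are hypothetical.

```python
import numpy as np


def compose_homographies(pairwise):
    """Chain 3x3 homographies [H_a->b, H_b->c, ...] into a single H_a->last."""
    total = np.eye(3)
    for h in pairwise:
        # A later mapping is applied after the earlier ones, so it multiplies
        # from the left.
        total = np.asarray(h, dtype=float) @ total
    return total / total[2, 2]  # normalize the projective scale


def apply_homography(h, points):
    """Map an (N, 2) array of pixel coordinates through the homography h."""
    pts = np.asarray(points, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homogeneous @ np.asarray(h, dtype=float).T
    return mapped[:, :2] / mapped[:, 2:3]
```

With the composed matrix, a pixel of the first band can be mapped directly into the frame of the last band, instead of registering those two dissimilar bands against each other.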

Another class of processing algorithms relates to removing the image noise introduced during data acquisition and enhancing the distinction between the objects to be detected (i.e., tree crowns) and the background (i.e., shaded areas). To remove unneeded details and smooth out the detection of relevant characteristics, a median filter with a disc-shaped kernel is applied. The kernel size is correlated with the expected or desired minimum radius of tree features. After experimentation with kernel sizes, a kernel corresponding to a real length of about 50 cm was found to be adequate.
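A median filter with a disc-shaped kernel can be sketched with NumPy alone, as below. Two points are assumptions of this example: the 50 cm length is interpreted as the kernel diameter, and the ground sampling distance (metres per pixel) passed to `kernel_radius_px` is a hypothetical input that depends on the flight altitude and the camera.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def disc_footprint(radius_px):
    """Boolean disc of the given radius, shaped (2r + 1, 2r + 1)."""
    r = int(radius_px)
    y, x = np.ogrid[-r:r + 1, -r:r + 1]
    return (x * x + y * y) <= r * r


def median_filter_disc(image, radius_px):
    """Median of each pixel's disc-shaped neighbourhood (reflect padding)."""
    footprint = disc_footprint(radius_px)
    r = footprint.shape[0] // 2
    padded = np.pad(np.asarray(image, dtype=float), r, mode="reflect")
    windows = sliding_window_view(padded, footprint.shape)  # (H, W, k, k)
    # Keep only the window entries that fall inside the disc.
    return np.median(windows[..., footprint], axis=-1)


def kernel_radius_px(length_m, gsd_m_per_px):
    """Radius in pixels of a kernel whose diameter spans length_m metres."""
    return max(1, round(length_m / (2 * gsd_m_per_px)))
```

For example, with a ground sampling distance of 5 cm per pixel, `kernel_radius_px(0.5, 0.05)` gives a radius of 5 pixels.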

The next stage in the processing of the multispectral data concerns the extraction of useful macroscopic characteristics of the grove on an individual-tree basis. A key part of this process is the detection of individual olive trees and the delineation of their crowns. This is achieved by using state-of-the-art classical computer vision techniques [10,18] (Table 5) and techniques developed specifically for MyOGC. Though the state-of-the-art techniques are usually adequate for this task, we developed a modified version that is appropriate for on-the-fly execution on an embedded device mounted on a UAV, thus facilitating the real-time monitoring process. More specifically, MyOGC employs a distance-based algorithm, in the four-dimensional multimodal space, that estimates the probability that an image region belongs to a tree. The distance is calculated with reference to a point (RP) in the multispectral intensity space that shows maximum correlation with the spectral response of the trees to be delineated. The rationale is that regions that contain a point very close to the RP have a very high probability of belonging to a tree as a whole, rather than as individual pixels. Therefore, roughly, the probability that the center pixel of a disc-shaped region A belongs to a tree is inversely proportional to the minimum distance of the pixels of the disc to the reference point RP. That is, we employ a minimum local filter with respect to the distance of each pixel in the image to the reference point. This is expressed by an equation of the form of Equation (2). The denominator of this expression varies between two limiting values; the value of 1 is achieved when the minimum distance is 0, meaning that there is a point in the disc that coincides with the reference point.
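The minimum local filter can be sketched as follows. Since Equation (2) is not reproduced here, this example makes its own assumptions: the distance is Euclidean, and the probability is taken as 1 / (1 + d_min), a form chosen only because it equals 1 when the minimum distance is 0. The function name and parameters are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def tree_probability(bands, reference_point, radius_px):
    """Probability-like score that each pixel's disc neighbourhood is tree.

    bands: (H, W, B) array of band intensities (B = 4 in the text).
    reference_point: length-B vector, the RP in spectral space.
    """
    bands = np.asarray(bands, dtype=float)
    rp = np.asarray(reference_point, dtype=float)
    # Distance of every pixel to the RP (Euclidean, an assumption).
    dist = np.linalg.norm(bands - rp, axis=-1)
    # Disc-shaped neighbourhood around each pixel.
    r = int(radius_px)
    y, x = np.ogrid[-r:r + 1, -r:r + 1]
    disc = (x * x + y * y) <= r * r
    padded = np.pad(dist, r, mode="edge")
    windows = sliding_window_view(padded, disc.shape)  # (H, W, k, k)
    # Minimum local filter: smallest distance found inside the disc.
    min_dist = windows[..., disc].min(axis=-1)
    # Assumed mapping: equals 1 when a pixel in the disc coincides with the RP.
    return 1.0 / (1.0 + min_dist)
```

A crown mask could then be obtained by thresholding the returned array; the threshold would have to be tuned on the data.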

The RP can be extracted from spectral data available in the literature, or estimated experimentally from the input data. Using this algorithm, MyOGC achieves fast and accurate crown delineation (Figure 3). Furthermore, this algorithm was used to generate ground truth data to train a type of neural network that can be executed on embedded devices (Section 2.4). This allowed for real-time crown delineation using only one-band images.

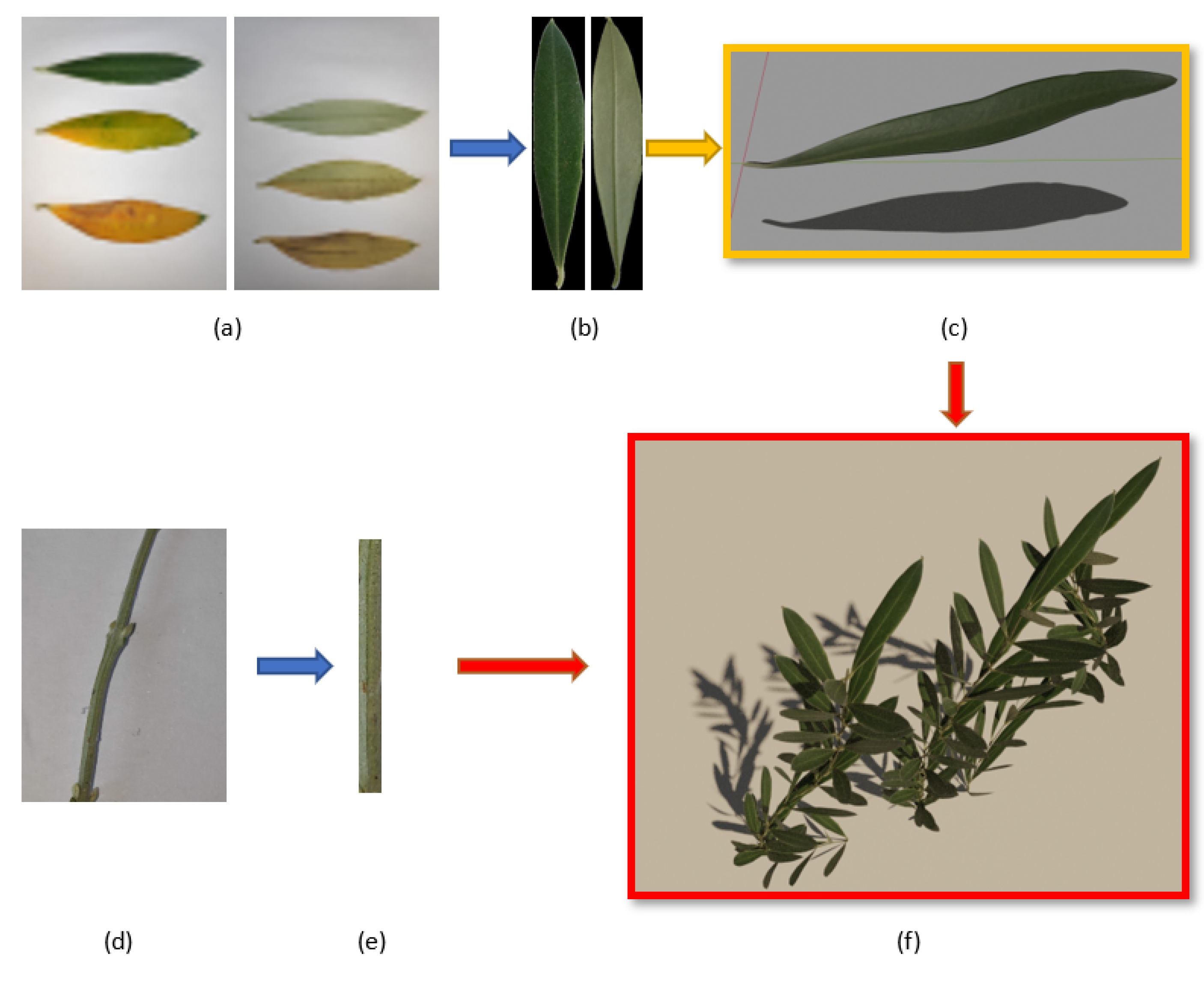

2.3. Annotation of Data

The synergy of the UAV multispectral camera and the portable spectrophotometer serves the purpose of early diagnosis of Verticillium wilt in olive trees [2,6]. The next step of the multimodal processing is thus the characterization of the input data. MyOGC employs two methods of annotation: (i) using spectral data from the laboratory analysis and (ii) using the NDVI and NDRE indices. For annotation using the laboratory data, a procedure similar to that of the crown delineation is followed, extending the categorization from two levels (ground/tree) to four (or more) stress levels. Specifically, each pixel of the image is assigned to one of four categories, based on its spectral proximity to a characteristic reference spectrum. The reference spectrum of each category is obtained from the laboratory analysis, by averaging the spectral responses of individual leaves that are in different stages of the 11-point scale (Table 2).
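The categorization by spectral proximity can be sketched as a nearest-reference classification. The Euclidean distance and the array layout are assumptions of this example; the reference spectra themselves would come from the laboratory analysis and are not reproduced here.

```python
import numpy as np


def classify_by_reference(bands, reference_spectra):
    """Assign each pixel the index of its closest reference spectrum.

    bands: (H, W, B) array of band intensities.
    reference_spectra: (K, B) array, one averaged spectrum per category.
    Returns an (H, W) integer array with values in 0..K-1.
    """
    bands = np.asarray(bands, dtype=float)
    refs = np.asarray(reference_spectra, dtype=float)
    # Broadcast to (H, W, K, B), then reduce to (H, W, K) distances.
    dist = np.linalg.norm(bands[..., None, :] - refs, axis=-1)
    return dist.argmin(axis=-1)
```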

For annotation using the NDVI and NDRE indices, the crown delineation algorithm is first applied to isolate the areas of interest and enhance the resolution of the indices. Then, a statistical analysis of the distributions of the NDVI and NDRE (Figure 4) provides threshold values that divide the areas of interest into categories. The fusion of the categories obtained for each vegetation index gives the final annotation (Figure 5). Previous results have shown that the NDVI and NDRE are highly sensitive to Verticillium wilt symptoms in olive trees [2]. In our case, though, a full comparison with state-of-the-art methods and an assessment of the advantages of our modified methods could not be made, as the occurrence of Verticillium wilt in the groves under study was very low. We should note, however, that the success of MyOGC does not depend on the details of the prediction algorithm, as this is an easily upgradable component.
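A rough sketch of threshold-based annotation and fusion is given below. The text does not state how the thresholds are derived from the distributions or how the categories are fused, so both are assumptions here: thresholds are taken as percentiles of the index values inside the crowns, and fusion keeps the lower (more stressed) of the two categories.

```python
import numpy as np


def categorize(index, crown_mask, percentiles=(33, 66)):
    """Split crown pixels into categories using percentile thresholds.

    Returns (categories, thresholds). Category 0 holds the lowest index
    values; pixels outside the crown mask are marked with -1.
    """
    index = np.asarray(index, dtype=float)
    crown_mask = np.asarray(crown_mask, dtype=bool)
    thresholds = np.percentile(index[crown_mask], percentiles)
    categories = np.digitize(index, thresholds)
    return np.where(crown_mask, categories, -1), thresholds


def fuse(categories_a, categories_b):
    """Conservative fusion: keep the lower category of the two indices."""
    return np.minimum(categories_a, categories_b)
```

Both category maps are expected to share the same crown mask, so that the −1 marker for non-crown pixels is preserved by the fusion.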

2.4. Real Time Processing on Edge Devices

A second method is used for the same multimodal processing purposes but targets a different platform: an embeddable device tuned for running machine learning (ML) applications. This device can be mounted on the UAV and connected to the multispectral sensors, allowing real-time processing of the captured multispectral images. To make on-the-fly processing of incomplete and noisy data possible, we use a convolutional neural network (CNN), a class of neural networks well suited to tasks involving image segmentation and classification, trained on ground truth data that are automatically generated from classically processed multispectral images. In the CNN architecture (Figure 6), the input data are fed to successive down-scaling layers (left branch), which reduce the spatial resolution of the feature maps, and then to corresponding up-scaling layers (right branch), which increase the spatial resolution of the feature maps. The CNN is trained to classify either multimodal or unimodal data, balancing the needs for accuracy and speed. The results of the classification by the CNN can be seen in Figure 7.

The main trade-offs between the simple computer vision (CV) and the CNN methods are in implementation complexity, accuracy and efficiency. On the one hand, the CV approach is much simpler to implement and shows high and consistent accuracy, but it is not efficient enough and is therefore not a good choice for embedded devices. The CNN approach, on the other hand, is significantly more complex and requires much more work to reach satisfactory results; furthermore, its segmentation accuracy is not as consistent as in the CV case, and the CNN may need some fine-tuning and readjustment between runs or between fields. The deciding advantage of the CNN method, though, is that it gives very good results when using data from fewer bands or even one band, eliminating the preprocessing overhead (Table 6) and making the method suitable for low-power and low-memory embedded platforms, especially ML-tuned devices that further enhance the efficiency benefits of the method. To the authors’ knowledge, automatically training and accurately calculating tree crowns in real time from one-band data is a new contribution to the literature. Details and elaboration on the method have been presented in [34].

2.5. My Olive Grove Coach Platforms

The MyOGC system consists of two basic platforms: (a) the cloud platform, which contains most of the MyOGC subsystems, and (b) the edge platform, which is an embedded board (Coral’s Dev Board) capable of executing complex AI and image processing techniques. Their roles and interconnections are presented in Section 2 of the current article.

The cloud platform’s GUI is the users’ main access point to the MyOGC system. It provides the basic authorization and authentication mechanism and the forms for managing field-related metadata, such as location, photography sessions, owner and prediction results.

Regarding the prediction results, in order to show farmers the condition of their fields, the platform generates multiple colored layers, which are presented as overlays on the original map of the field. When the end-user chooses to view a field, the platform redirects to an interactive map screen where the preprocessed orthomosaic is presented in three basic colors (red, yellow, green). Green represents healthy trees without signs of phytopathological stress; yellow represents stress, quantified by reduced photosynthetic activity of the affected plant’s canopy, and therefore the possible onset of disease symptoms; and finally, red indicates sick trees and/or ground. The end-user can zoom in and out of the map in order to inspect every single tree in great detail.
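The construction of such a colored layer can be sketched as a mapping from category labels to a semi-transparent RGBA image. The integer encoding of the categories (0 for red, 1 for yellow, 2 for green), the exact color values and the default opacity are assumptions of this example.

```python
import numpy as np

# Assumed encoding: 0 = sick (red), 1 = stressed (yellow), 2 = healthy (green).
PALETTE = np.array([
    [255, 0, 0],
    [255, 255, 0],
    [0, 128, 0],
], dtype=np.uint8)


def categories_to_rgba(categories, alpha=160):
    """Turn an (H, W) integer category map into an (H, W, 4) RGBA overlay.

    Pixels whose label is outside the palette stay fully transparent.
    """
    cats = np.asarray(categories, dtype=int)
    rgba = np.zeros(cats.shape + (4,), dtype=np.uint8)
    valid = (cats >= 0) & (cats < len(PALETTE))
    rgba[valid, :3] = PALETTE[cats[valid]]
    rgba[valid, 3] = alpha
    return rgba
```

The resulting array can be saved as a PNG image and placed over the map tiles at the orthomosaic’s coordinates.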

For the map’s representation, the Leaflet library was used with Google Maps satellite image tiles. The overlay is a preprocessed orthomosaic that was constructed with open source photogrammetry software (the “OpenSfM” and “GDAL” libraries), preserving the accuracy of the spectral reflectance (reflectance map) and the exact geographical coordinates of the original multispectral images. The image is rendered with a level of transparency, and the map is initialized based on the orthomosaic’s coordinates. In this manner, only the farmers’ fields are visualized, and they scale with the zoom level of the map (Figure 8).

The edge platform used in MyOGC is the “Dev Board” by Coral, a development board for prototyping on-device ML products. The device’s Edge TPU is ideal for running embedded ML applications. In this project, a Dev Board is mounted on the drone in order to assist and assess the data collection procedure in real time, bypassing the CPU-intensive and time-consuming step of uploading images to the server and processing them there, at least for preliminary data analysis. More specifically, algorithms that delineate the olive trees and provide preliminary information on their health status are run on the Dev Board.