Multispectral Cameras and Machine Learning Integrated into Portable Devices as Clay Prediction Technology

Abstract

1. Introduction

2. Background

2.1. Optics

2.2. Soil Science

1. Hue: the dominant color, which in soils is usually red or yellow;

2. Value: the lightness of the color; the darker the soil, the closer the value is to zero;

3. Chroma: the intensity (purity) of the color, with zero corresponding to neutral gray [29].
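Soil colors are conventionally written in Munsell notation as the hue followed by value/chroma. For example, 10YR 4/3 denotes hue 10YR, value 4 and chroma 3. The short sketch below is not part of the original work. It assumes that textual format and splits a notation string into the three components described above. The names `MunsellColor` and `parse_munsell` are hypothetical.

```python
import re
from dataclasses import dataclass

# Hue = optional step number (e.g. 2.5, 5, 7.5, 10) + hue family; "N" marks neutral grays.
# Neutral colors are written without chroma (e.g. "N 5/"), which this sketch reads as chroma 0.
_MUNSELL = re.compile(
    r"^\s*(?P<hue>(?:\d+(?:\.\d+)?)?(?:R|YR|Y|GY|G|BG|B|PB|P|RP|N))\s+"
    r"(?P<value>\d+(?:\.\d+)?)\s*/\s*(?P<chroma>\d+(?:\.\d+)?)?\s*$"
)


@dataclass(frozen=True)
class MunsellColor:
    hue: str       # e.g. "10YR"; soils mostly fall in the red-to-yellow hues
    value: float   # lightness: the darker the soil, the closer to 0
    chroma: float  # color intensity; 0 is a neutral gray


def parse_munsell(text: str) -> MunsellColor:
    """Parse a Munsell notation string such as '10YR 4/3' (hypothetical helper)."""
    match = _MUNSELL.match(text)
    if match is None:
        raise ValueError(f"not a Munsell notation: {text!r}")
    chroma = match.group("chroma")
    return MunsellColor(
        hue=match.group("hue"),
        value=float(match.group("value")),
        chroma=float(chroma) if chroma is not None else 0.0,
    )


print(parse_munsell("10YR 4/3"))  # MunsellColor(hue='10YR', value=4.0, chroma=3.0)
```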

1. Dark brown surface layer: indicates abundant organic matter, good aggregation and a good supply of nutrients;

2. Light yellow and red in the subsoil: indicates high concentrations of iron oxides and good drainage; iron oxides also promote soil aggregation, which retains the air and water needed for root development.

1. Mottled or stained with dull yellow and orange, bluish gray or olive green: indicates permanent flooding of the soil and a lack of oxygenation and aeration;

2. Rust colors (ferrihydrite): indicate constant flooding;

3. Whitish and pale colors: indicate the presence of a water layer above the clay [31].

2.3. Computer Vision

2.4. Machine Learning


3. Related Work

1. Sensors: the sensors used in each related study;

2. Analysis: the tool used for data analysis, that is, how the results are generated to support decision making or to inform users;

3. Spectral range: the type of spectral image employed (multispectral or hyperspectral);

4. Application: the object, material or scene analyzed.

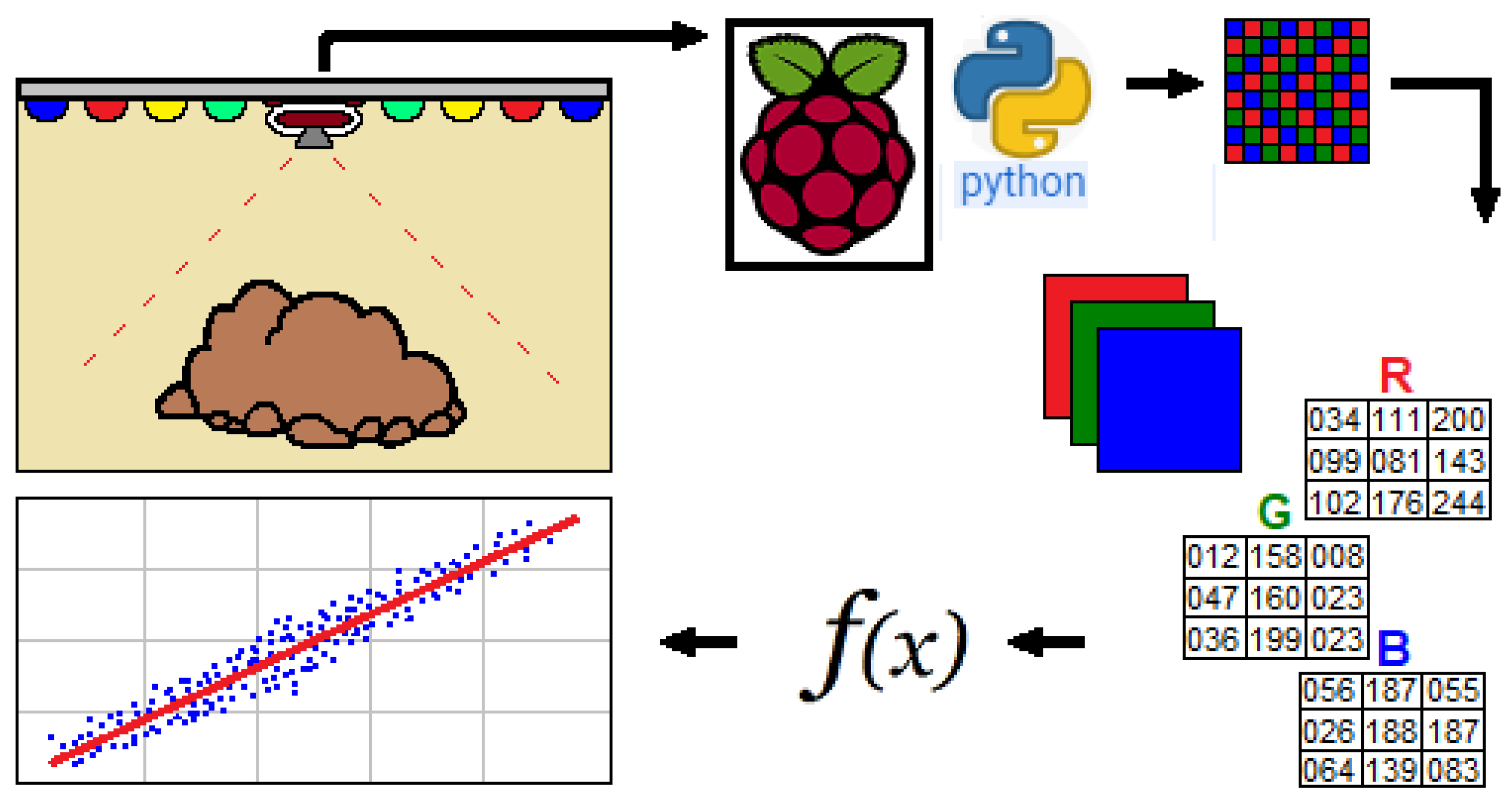

4. Materials and Methods

1. Generation of image histograms for each light spectrum;

2. Use of these histograms to train a machine learning algorithm;

3. Presentation of all results obtained and their comparison with existing methods.

1. Capture of the image under illumination by a given LED color;

2. Decomposition of the image into three histograms, one per RGB channel;

3. Concatenation of the three histograms, and then of the histograms obtained under every LED color;

4. Generation of a CSV (Comma Separated Values) file containing all histograms for all LED colors, as illustrated in Figure 4.
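The four steps above can be sketched in Python with NumPy. This is a minimal illustration, not the authors' code. It assumes 8-bit RGB images already cropped to the region of interest and one 256-bin histogram per channel, which is consistent with the 768 variables per LED and 3840 variables for the joined histogram in the results tables. It also assumes the LED order green, red, white, yellow, blue. Images loaded with OpenCV are in BGR order and would need converting first. The function names are hypothetical.

```python
import csv
import io

import numpy as np

# Assumed LED order: the column order used in the results tables.
LED_COLORS = ["green", "red", "white", "yellow", "blue"]
BINS = 256  # one bin per 8-bit intensity level


def channel_histograms(image: np.ndarray) -> np.ndarray:
    """Concatenate the R, G and B histograms of an 8-bit RGB image (3 x 256 = 768 values)."""
    if image.ndim != 3 or image.shape[2] != 3 or image.dtype != np.uint8:
        raise ValueError("expected an H x W x 3 uint8 RGB image")
    # np.bincount with minlength=256 counts how many pixels take each level 0..255.
    return np.concatenate(
        [np.bincount(image[:, :, c].ravel(), minlength=BINS) for c in range(3)]
    )


def sample_features(images_by_led: dict) -> np.ndarray:
    """Join one sample's histograms across all LED colors (5 x 768 = 3840 values)."""
    return np.concatenate([channel_histograms(images_by_led[led]) for led in LED_COLORS])


def write_csv(out, sample_ids, feature_rows) -> None:
    """Write a header plus one row per sample: its id followed by every histogram bin."""
    writer = csv.writer(out)
    writer.writerow(
        ["sample"]
        + [f"{led}_{ch}_{b}" for led in LED_COLORS for ch in "RGB" for b in range(BINS)]
    )
    for sample_id, row in zip(sample_ids, feature_rows):
        writer.writerow([sample_id] + [int(v) for v in row])


# Usage with synthetic images standing in for ROI crops captured under each LED.
rng = np.random.default_rng(0)
images = {
    led: rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8) for led in LED_COLORS
}
features = sample_features(images)
print(features.shape)  # (3840,)

buffer = io.StringIO()  # a file opened with open(path, "w", newline="") works the same way
write_csv(buffer, ["sample-1"], [features])
```

Keeping the header names in LED, channel, bin order mirrors the concatenation order, so each CSV column can be traced back to a specific LED and channel.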

Algorithm 1. Procedure for predicting clay from image histograms.

Input: image parameters (region of interest, ROI) and number of PLSR factors.
Output: prediction charts and report data.
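Algorithm 1 takes the number of PLSR factors as an input. The sketch below shows one way to fit a single-response PLS regression with that many factors on histogram features and to compute RMSEC (on the calibration set) and RMSECV. It is an illustration under stated assumptions, not the authors' implementation. It uses a NumPy NIPALS PLS1 with mean-centering, leave-one-out as the cross-validation scheme, the plain n denominator for both errors and synthetic data. `pls1_fit`, `pls1_predict`, `rmse` and `calibrate` are hypothetical names; a library routine such as scikit-learn's `PLSRegression` could be used instead.

```python
import numpy as np


def pls1_fit(X, y, n_factors):
    """Fit a single-response PLS model (NIPALS) with up to n_factors latent variables."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xr, yr = X - x_mean, y - y_mean  # residuals, deflated after each factor
    W, P, q = [], [], []
    for _ in range(n_factors):
        w = Xr.T @ yr
        norm = np.linalg.norm(w)
        if norm < 1e-12:  # y is already fully explained
            break
        w = w / norm                 # weights
        t = Xr @ w                   # scores
        tt = t @ t
        p = Xr.T @ t / tt            # X loadings
        q_a = (yr @ t) / tt          # y loading
        Xr = Xr - np.outer(t, p)
        yr = yr - q_a * t
        W.append(w)
        P.append(p)
        q.append(q_a)
    if not W:
        return x_mean, y_mean, np.zeros(X.shape[1])
    W, P, q = np.column_stack(W), np.column_stack(P), np.array(q)
    coef = W @ np.linalg.solve(P.T @ W, q)  # B = W (P^T W)^-1 q
    return x_mean, y_mean, coef


def pls1_predict(model, X):
    x_mean, y_mean, coef = model
    return (np.asarray(X, dtype=float) - x_mean) @ coef + y_mean


def rmse(pred, ref):
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def calibrate(X, y, n_factors):
    """Return (RMSEC, RMSECV); RMSECV uses leave-one-out cross-validation."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    rmsec = rmse(pls1_predict(pls1_fit(X, y, n_factors), X), y)
    cv_pred = np.empty_like(y)
    for i in range(len(y)):
        keep = np.arange(len(y)) != i  # leave sample i out of the model
        model = pls1_fit(X[keep], y[keep], n_factors)
        cv_pred[i] = pls1_predict(model, X[i : i + 1])[0]
    return rmsec, rmse(cv_pred, y)


# Synthetic stand-in for 34 samples x 3840 joined-histogram variables.
rng = np.random.default_rng(1)
X = rng.normal(size=(34, 3840))
y = 35 + 10 * X[:, :5].mean(axis=1) + rng.normal(scale=1.0, size=34)
rmsec, rmsecv = calibrate(X, y, n_factors=5)
print(f"RMSEC = {rmsec:.2f}, RMSECV = {rmsecv:.2f}")
```

With far more variables than samples, a calibration error well below the cross-validation error is expected, which is why RMSECV is the more informative figure when choosing the number of factors.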

5. Results and Discussions

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| CPU | Central Processing Unit |
| CSI | Camera Serial Interface |
| CSV | Comma Separated Values |
| DT | Decision Trees |
| GPIO | General Purpose Input/Output |
| IoT | Internet of Things |
| LED | Light Emitting Diode |
| LVs | Latent Variables |
| ML | Machine Learning |
| MMA | Methods of Multivariate Analysis |
| MIPI | Mobile Industry Processor Interface |
| N/A | Not Available |
| NoIR | No Infra-Red |
| OpenCV | Open Source Computer Vision Library |
| PLS | Partial Least Squares |
| PNG | Portable Network Graphics |
| R2 | Coefficient of Determination |
| RAM | Random Access Memory |
| Ref | Reference sample |
| RGB | Red Green Blue |
| RMSEC | Root Mean Square Error of Calibration |
| RMSECV | Root Mean Square Error of Cross Validation |
| SVM | Support Vector Machines |

| Symbol | Definition |
|---|---|
| n | Number of samples |
| x | Predicted clay concentration |
| y | Reference clay concentration |
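With the symbols defined above, the error statistics in the results tables are commonly computed as follows. RMSEC takes each $x_i$ from the calibration model. RMSECV takes $x_i$ from a model built without sample $i$ (or without its cross-validation segment). These are the usual textbook definitions, assumed rather than stated in this section. Some chemometrics software divides RMSEC by $n - A - 1$, with $A$ the number of PLS factors, so the authors' exact denominator is an assumption. Because clay content is expressed in %, the errors are in percentage points.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(x_i - y_i\right)^2},
\qquad
R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - x_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2},
\qquad
\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i
```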

References

1. Fiehn, H.B.; Schiebel, L.; Avila, A.F.; Miller, B.; Mickelson, A. Smart Agriculture System Based on Deep Learning. In Proceedings of the 2nd International Conference on Smart Digital Environment (ICSDE’18), Rabat, Morocco, 18–20 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 158–165.

2. Lytos, A.; Lagkas, T.; Sarigiannidis, P.; Zervakis, M.; Livanos, G. Towards smart farming: Systems, frameworks and exploitation of multiple sources. Comput. Netw. 2020, 172, 107147.

3. Hochman, Z.; Carberry, P.; Robertson, M.; Gaydon, D.; Bell, L.; McIntosh, P. Prospects for ecological intensification of Australian agriculture. Eur. J. Agron. 2013, 44, 109–123.

4. Saiz-Rubio, V.; Rovira-Más, F. From Smart Farming towards Agriculture 5.0: A Review on Crop Data Management. Agronomy 2020, 10, 207.

5. Wolfert, S.; Ge, L.; Verdouw, C.; Bogaardt, M.J. Big Data in Smart Farming—A review. Agric. Syst. 2017, 153, 69–80.

6. Demattê, J.A.M.; Dotto, A.C.; Bedin, L.G.; Sayão, V.M.; e Souza, A.B. Soil analytical quality control by traditional and spectroscopy techniques: Constructing the future of a hybrid laboratory for low environmental impact. Geoderma 2019, 337, 111–121.

7. Bolfe, É.L.; de Castro Jorge, L.A.; Sanches, I.D.; Júnior, A.L.; da Costa, C.C.; de Castro Victoria, D.; Inamasu, R.Y.; Grego, C.R.; Ferreira, V.R.; Ramirez, A.R. Precision and Digital Agriculture: Adoption of Technologies and Perception of Brazilian Farmers. Agriculture 2020, 10, 653.

8. Tümsavaş, Z.; Tekin, Y.; Ulusoy, Y.; Mouazen, A.M. Prediction and mapping of soil clay and sand contents using visible and near-infrared spectroscopy. Biosyst. Eng. 2019, 177, 90–100.

9. Griebeler, G.; da Silva, L.S.; Cargnelutti Filho, A.; Santos, L.d.S. Avaliação de um programa interlaboratorial de controle de qualidade de resultados de análise de solo. Rev. Ceres 2016, 63, 371–379.

10. Demattê, J.A.M.; Alves, M.R.; da Silva Terra, F.; Bosquilia, R.W.D.; Fongaro, C.T.; da Silva Barros, P.P. Is It Possible to Classify Topsoil Texture Using a Sensor Located 800 km Away from the Surface? Rev. Bras. Ciência Solo 2016, 40.

11. Nanni, M.R.; Demattê, J.A.M.; Rodrigues, M.; dos Santos, G.L.A.A.; Reis, A.S.; de Oliveira, K.M.; Cezar, E.; Furlanetto, R.H.; Crusiol, L.G.T.; Sun, L. Mapping Particle Size and Soil Organic Matter in Tropical Soil Based on Hyperspectral Imaging and Non-Imaging Sensors. Remote Sens. 2021, 13, 1782.

12. Guo, Y.; Chen, S.; Wu, Z.; Wang, S.; Bryant, C.R.; Senthilnath, J.; Cunha, M.; Fu, Y.H. Integrating Spectral and Textural Information for Monitoring the Growth of Pear Trees Using Optical Images from the UAV Platform. Remote Sens. 2021, 13, 1795.

13. Crucil, G.; Oost, K.V. Towards Mapping of Soil Crust Using Multispectral Imaging. Sensors 2021, 21, 1850.

14. Garini, Y.; Young, I.T.; McNamara, G. Spectral imaging: Principles and applications. Cytom. Part A 2006, 69A, 735–747.

15. Amigo, J.M.; Grassi, S. Configuration of hyperspectral and multispectral imaging systems. In Data Handling in Science and Technology; Elsevier: Amsterdam, The Netherlands, 2020; pp. 17–34.

16. Ambrož, M. Raspberry Pi as a low-cost data acquisition system for human powered vehicles. Measurement 2017, 100, 7–18.

17. Lucca, A.V.; Sborz, G.M.; Leithardt, V.; Beko, M.; Zeferino, C.A.; Parreira, W. A Review of Techniques for Implementing Elliptic Curve Point Multiplication on Hardware. J. Sens. Actuator Netw. 2020, 10, 3.

18. Leithardt, V.; Santos, D.; Silva, L.; Viel, F.; Zeferino, C.; Silva, J. A Solution for Dynamic Management of User Profiles in IoT Environments. IEEE Lat. Am. Trans. 2020, 18, 1193–1199.

19. Viel, F.; Silva, L.A.; Leithardt, V.R.Q.; Santana, J.F.D.P.; Teive, R.C.G.; Zeferino, C.A. An Efficient Interface for the Integration of IoT Devices with Smart Grids. Sensors 2020, 20, 2849.

20. Helfer, G.A.; Barbosa, J.L.V.; dos Santos, R.; da Costa, A.B. A computational model for soil fertility prediction in ubiquitous agriculture. Comput. Electron. Agric. 2020, 175, 105602.

21. Da Costa, A.; Helfer, G.; Barbosa, J.; Teixeira, I.; Santos, R.; dos Santos, R.; Voss, M.; Schlessner, S.; Barin, J. PhotoMetrix UVC: A New Smartphone-Based Device for Digital Image Colorimetric Analysis Using PLS Regression. J. Braz. Chem. Soc. 2021.

22. Martini, B.G.; Helfer, G.A.; Barbosa, J.L.V.; Modolo, R.C.E.; da Silva, M.R.; de Figueiredo, R.M.; Mendes, A.S.; Silva, L.A.; Leithardt, V.R.Q. IndoorPlant: A Model for Intelligent Services in Indoor Agriculture Based on Context Histories. Sensors 2021, 21, 1631.

23. Baumann, L.; Librelotto, M.; Pappis, C.; Helfer, G.A.; Santos, R.O.; Santos, R.B.; Costa, A.B. NanoMetrix: An app for chemometric analysis from near infrared spectra. J. Chemom. 2020, 34.

24. Pozo, S.D.; Rodríguez-Gonzálvez, P.; Sánchez-Aparicio, L.J.; Muñoz-Nieto, A.; Hernández-López, D.; Felipe-García, B.; González-Aguilera, D. Multispectral Imaging in Cultural Heritage Conservation. ISPRS Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2017, 42, 155–162.

25. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 2nd ed.; Addison-Wesley Longman Publishing Co., Inc.: New York, NY, USA, 2001.

26. Cao, A.; Pang, H.; Zhang, M.; Shi, L.; Deng, Q.; Hu, S. Design and Fabrication of an Artificial Compound Eye for Multi-Spectral Imaging. Micromachines 2019, 10, 208.

27. Carstensen, J.M. LED spectral imaging with food and agricultural applications. In Image Sensing Technologies: Materials, Devices, Systems, and Applications V; Dhar, N.K., Dutta, A.K., Eds.; SPIE: Orlando, FL, USA, 2018.

28. Dagar, R.; Som, S.; Khatri, S.K. Smart Farming—IoT in Agriculture. In Proceedings of the IEEE 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 11–12 July 2018.

29. Han, P.; Dong, D.; Zhao, X.; Jiao, L.; Lang, Y. A smartphone-based soil color sensor: For soil type classification. Comput. Electron. Agric. 2016, 123, 232–241.

30. Brady, N.C.; Weil, R.R. The Nature and Properties of Soils, 14th ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2008.

31. Peverill, K.I. (Ed.) Soil Analysis: An Interpretation Manual, Reprinted ed.; CSIRO Publ: Melbourne, Australia, 2005.

32. Elias, R. Digital Media: A Problem-Solving Approach for Computer Graphics, 1st ed.; Springer: Cham, Switzerland, 2014.

33. Gerlach, J.B. Digital Nature Photography, 2nd ed.; Routledge: Oxford, UK, 2015.

34. Domínguez, C.; Heras, J.; Pascual, V. IJ-OpenCV: Combining ImageJ and OpenCV for processing images in biomedicine. Comput. Biol. Med. 2017, 84, 189–194.

35. Mehmood, T.; Liland, K.H.; Snipen, L.; Sæbø, S. A review of variable selection methods in Partial Least Squares Regression. Chemom. Intell. Lab. Syst. 2012, 118, 62–69.

36. Wang, J.; Tiyip, T.; Ding, J.; Zhang, D.; Liu, W.; Wang, F.; Tashpolat, N. Desert soil clay content estimation using reflectance spectroscopy preprocessed by fractional derivative. PLoS ONE 2017, 12, e0184836.

37. Nawar, S.; Mouazen, A.M. Optimal sample selection for measurement of soil organic carbon using on-line vis-NIR spectroscopy. Comput. Electron. Agric. 2018, 151, 469–477.

38. Svensgaard, J.; Roitsch, T.; Christensen, S. Development of a Mobile Multispectral Imaging Platform for Precise Field Phenotyping. Agronomy 2014, 4, 322–336.

39. Hassan-Esfahani, L.; Torres-Rua, A.; Jensen, A.; McKee, M. Assessment of Surface Soil Moisture Using High-Resolution Multi-Spectral Imagery and Artificial Neural Networks. Remote Sens. 2015, 7, 2627–2646.

40. Treboux, J.; Genoud, D. Improved Machine Learning Methodology for High Precision Agriculture. In Proceedings of the IEEE 2018 Global Internet of Things Summit (GIoTS), Bilbao, Spain, 4–7 June 2018.

41. Žížala, D.; Minařík, R.; Zádorová, T. Soil Organic Carbon Mapping Using Multispectral Remote Sensing Data: Prediction Ability of Data with Different Spatial and Spectral Resolutions. Remote Sens. 2019, 11, 2947.

42. Lopez-Ruiz, N.; Granados-Ortega, F.; Carvajal, M.A.; Martinez-Olmos, A. Portable multispectral imaging system based on Raspberry Pi. Sens. Rev. 2017, 37, 322–329.

43. OmniVision Technologies Inc. OV5647 Sensor Datasheet. Available online: https://cdn.sparkfun.com/datasheets/Dev/RaspberryPi/ov5647_full.pdf (accessed on 8 June 2021).

44. Raspberry Pi Foundation. FAQs—Raspberry Pi Documentation. Available online: https://www.raspberrypi.org/documentation/faqs/ (accessed on 8 June 2021).

45. Park, J.I.; Lee, M.H.; Grossberg, M.D.; Nayar, S.K. Multispectral Imaging Using Multiplexed Illumination. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007.

46. Pelliccia, D. Partial Least Squares Regression in Python. 2019. Available online: https://nirpyresearch.com/partial-least-squares-regression-python/ (accessed on 3 October 2020).

47. Wetterlind, J.; Piikki, K.; Stenberg, B.; Söderström, M. Exploring the predictability of soil texture and organic matter content with a commercial integrated soil profiling tool. Eur. J. Soil Sci. 2015, 66, 631–638.

48. Aranda, J.A.S.; Bavaresco, R.S.; de Carvalho, J.V.; Yamin, A.C.; Tavares, M.C.; Barbosa, J.L.V. A computational model for adaptive recording of vital signs through context histories. J. Ambient. Intell. Humaniz. Comput. 2021.

49. Rosa, J.H.; Barbosa, J.L.V.; Kich, M.; Brito, L. A Multi-Temporal Context-aware System for Competences Management. Int. J. Artif. Intell. Educ. 2015, 25, 455–492.

50. Dupont, D.; Barbosa, J.L.V.; Alves, B.M. CHSPAM: A multi-domain model for sequential pattern discovery and monitoring in contexts histories. Pattern Anal. Appl. 2019, 23, 725–734.

51. Da Rosa, J.H.; Barbosa, J.L.; Ribeiro, G.D. ORACON: An adaptive model for context prediction. Expert Syst. Appl. 2016, 45, 56–70.

52. Filippetto, A.S.; Lima, R.; Barbosa, J.L.V. A risk prediction model for software project management based on similarity analysis of context histories. Inf. Softw. Technol. 2021, 131, 106497.

| Criterion | [38] | [39] | [40] | [41] | [42] | This Work |
|---|---|---|---|---|---|---|
| Sensors | Camera | Satellite | Camera | Satellite | Camera | Camera |
| Analysis | MMA | ANN | DT | SVM | N/A | ML |
| Spectral range | Multi | Multi | Hyper | Multi | Multi | Multi |
| Application | Wheat | Soil | Vineyard | Soil | Fruit | Soil |

| LED | Wavelength (nm) | Size (mm) | Voltage (V) |
|---|---|---|---|
| White | 500–620 | 5 | 3.0–3.2 |
| Yellow | 580–590 | 5 | 2.8–3.1 |
| Red | 620–630 | 5 | 2.8–3.1 |
| Green | 570–573 | 5 | 3.0–3.4 |
| Blue | 460–470 | 5 | 3.0–3.4 |

| LED | Green | Red | White | Yellow | Blue |
|---|---|---|---|---|---|
| Variables | 768 | 768 | 768 | 768 | 768 |
| Factors | 6 | 10 | 8 | 9 | 6 |
| R2 | 0.82 | 0.607 | 0.857 | 0.839 | 0.806 |
| RMSEC (%) | 7.93 | 11.74 | 7.06 | 7.51 | 8.24 |
| RMSECV (%) | 19.36 | 23.89 | 13.66 | 13.59 | 26.35 |

| LED | Green | Red | White | Yellow | Blue | Histogram channel |
|---|---|---|---|---|---|---|
| Variables | 256 | 256 | 256 | 256 | 256 | - |
| Factors | 10 | 10 | 10 | 10 | 10 | - |
| R2 | 0.507 | 0.586 | 0.838 | 0.614 | 0.236 | Red |
| RMSEC (%) | 13.13 | 12.03 | 7.56 | 11.61 | 16.34 | Red |
| RMSECV (%) | 17.35 | 24.21 | 20.86 | 18.73 | 20.33 | Red |
| R2 | 0.705 | 0.243 | 0.751 | 0.590 | 0.378 | Green |
| RMSEC (%) | 12.16 | 16.27 | 9.33 | 11.97 | 14.74 | Green |
| RMSECV (%) | 23.64 | 25.72 | 21.61 | 22.14 | 22.61 | Green |
| R2 | 0.552 | 0.151 | 0.818 | 0.221 | 0.799 | Blue |
| RMSEC (%) | 12.51 | 17.23 | 7.98 | 16.50 | 8.37 | Blue |
| RMSECV (%) | 18.06 | 48.31 | 22.29 | 19.67 | 32.56 | Blue |

| LED | Joined Histogram |
|---|---|
| Variables | 3840 |
| Factors | 5 |
| R2 | 0.962 |
| RMSEC (%) | 3.66 |
| RMSECV (%) | 16.87 |

| Sample | Reference clay (%) | LED-predicted clay (%) | Sample | Reference clay (%) | LED-predicted clay (%) |
|---|---|---|---|---|---|
| 55121 | 4 | 6.12 | 55830 | 36 | 35.51 |
| 55051 | 6 | 5.34 | 55892 | 37 | 37.51 |
| 55066 | 7 | 12.14 | 53981 | 39 | 40.20 |
| 55129 | 10 | 1.83 | 56181 | 40 | 35.84 |
| 55049 | 11 | 11.04 | 55433 | 41 | 49.42 |
| 55446 | 15 | 22.31 | 53982 | 44 | 40.73 |
| 55478 | 19 | 19.59 | 55375 | 45 | 37.17 |
| 56145 | 20 | 22.84 | 56005 | 46 | 46.96 |
| 55469 | 24 | 27.21 | 55406 | 47 | 41.86 |
| 56148 | 25 | 23.78 | 55360 | 49 | 50.63 |
| 56103 | 26 | 24.95 | 60231 | 51 | 50.92 |
| 53977 | 27 | 28.50 | 56479 | 58 | 54.81 |
| 55988 | 29 | 33.24 | 56259 | 61 | 64.97 |
| 56105 | 31 | 28.64 | 55189 | 64 | 62.73 |
| 55962 | 32 | 35.45 | 60199 | 68 | 70.11 |
| 55437 | 34 | 29.86 | 60172 | 71 | 67.03 |
| 54015 | 35 | 34.20 | 60182 | 72 | 70.54 |
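The reference and LED-predicted clay contents in the table above can be compared directly using the usual RMSE and R² definitions. The pure-Python sketch below does this for the 34 listed samples. It only recomputes summary statistics from the tabulated values. The values it gives lie close to the RMSEC and R2 reported for the joined histogram. This suggests, though the section does not say so explicitly, that the table lists the calibration samples.

```python
import math

# (sample, reference clay %, LED-predicted clay %), transcribed from the table above.
SAMPLES = [
    ("55121", 4, 6.12), ("55051", 6, 5.34), ("55066", 7, 12.14),
    ("55129", 10, 1.83), ("55049", 11, 11.04), ("55446", 15, 22.31),
    ("55478", 19, 19.59), ("56145", 20, 22.84), ("55469", 24, 27.21),
    ("56148", 25, 23.78), ("56103", 26, 24.95), ("53977", 27, 28.50),
    ("55988", 29, 33.24), ("56105", 31, 28.64), ("55962", 32, 35.45),
    ("55437", 34, 29.86), ("54015", 35, 34.20), ("55830", 36, 35.51),
    ("55892", 37, 37.51), ("53981", 39, 40.20), ("56181", 40, 35.84),
    ("55433", 41, 49.42), ("53982", 44, 40.73), ("55375", 45, 37.17),
    ("56005", 46, 46.96), ("55406", 47, 41.86), ("55360", 49, 50.63),
    ("60231", 51, 50.92), ("56479", 58, 54.81), ("56259", 61, 64.97),
    ("55189", 64, 62.73), ("60199", 68, 70.11), ("60172", 71, 67.03),
    ("60182", 72, 70.54),
]


def rmse(pred, ref):
    """Root mean square error with the plain n denominator."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def r_squared(pred, ref):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_ref = sum(ref) / len(ref)
    ss_res = sum((r - p) ** 2 for p, r in zip(pred, ref))
    ss_tot = sum((r - mean_ref) ** 2 for r in ref)
    return 1.0 - ss_res / ss_tot


ref = [r for _, r, _ in SAMPLES]
pred = [p for _, _, p in SAMPLES]
print(f"n = {len(SAMPLES)}, RMSE = {rmse(pred, ref):.2f} %, R2 = {r_squared(pred, ref):.3f}")
```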

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Helfer, G.A.; Barbosa, J.L.V.; Alves, D.; da Costa, A.B.; Beko, M.; Leithardt, V.R.Q. Multispectral Cameras and Machine Learning Integrated into Portable Devices as Clay Prediction Technology. J. Sens. Actuator Netw. 2021, 10, 40. https://doi.org/10.3390/jsan10030040

Helfer GA, Barbosa JLV, Alves D, da Costa AB, Beko M, Leithardt VRQ. Multispectral Cameras and Machine Learning Integrated into Portable Devices as Clay Prediction Technology. Journal of Sensor and Actuator Networks. 2021; 10(3):40. https://doi.org/10.3390/jsan10030040

Chicago/Turabian Style: Helfer, Gilson Augusto, Jorge Luis Victória Barbosa, Douglas Alves, Adilson Ben da Costa, Marko Beko, and Valderi Reis Quietinho Leithardt. 2021. "Multispectral Cameras and Machine Learning Integrated into Portable Devices as Clay Prediction Technology" Journal of Sensor and Actuator Networks 10, no. 3: 40. https://doi.org/10.3390/jsan10030040

APA Style: Helfer, G. A., Barbosa, J. L. V., Alves, D., da Costa, A. B., Beko, M., & Leithardt, V. R. Q. (2021). Multispectral Cameras and Machine Learning Integrated into Portable Devices as Clay Prediction Technology. Journal of Sensor and Actuator Networks, 10(3), 40. https://doi.org/10.3390/jsan10030040