Knowledge-Based Approach for the Perception Enhancement of a Vehicle †

Abstract

1. Introduction

2. Related Works

2.1. Vehicular Perception

- Radars have been used for decades in vehicular applications [25,26]. This technology has proven effective for mid-to-long-range measurement, offers high accuracy, and performs well in poor weather [27]. It is still widely used in vehicles, but it has a narrow Field Of View (FOV) and performs poorly in near-distance measurement and static object detection. Radar is also susceptible to interference from other sources or vehicles.

- Cameras have shown interesting potential in both monocular and stereo vision. Relative to the perception quality they deliver, they are the least expensive sensor available [24]. They allow quick classification of obstacles and potential 3D mapping of the area. Stereoscopy in particular gives very good results in detecting shapes, depth, colors and velocity, although it requires substantial computational power [28]. The most advanced models can also be used for precise long-range detection, but at a higher cost [29]. However, camera performance depends heavily on weather and brightness [27].

- LIDAR technology measures the reflection of laser light to infer the distance to a target. It has been studied since at least the 1970s [30], but only in the early 2000s did it find its way into vehicular applications [31,32]. It is a useful tool for 3D mapping and localization and can cover a wide FOV [27], but it depends heavily on good weather conditions and is ineffective outside its operating range.
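The weather-robustness comparison above (also summarized in Table A1 of Appendix A) can be encoded as a simple lookup that a reasoner could query. The sketch below is a minimal Python illustration, assuming a binary Good/Poor rating taken from the "Poor weather performance" row of Table A1 and reusing the sensor names of Table A3; the names `Rating`, `POOR_WEATHER_RATING` and `robust_sensors` are hypothetical and are not part of the authors' implementation.

```python
from enum import Enum
from typing import List


class Rating(Enum):
    GOOD = "good"
    POOR = "poor"


# Hypothetical encoding of the "Poor weather performance" row of Table A1.
# Keys reuse the sensor names of Table A3.
POOR_WEATHER_RATING = {
    "cameraMono": Rating.POOR,
    "cameraStereo": Rating.POOR,
    "cameraInfra": Rating.GOOD,  # thermal camera
    "lidar": Rating.POOR,
    "radar": Rating.GOOD,
    "sonar": Rating.GOOD,  # ultrasound
}


def robust_sensors(available: List[str]) -> List[str]:
    """Keep the available sensors rated GOOD in poor weather; unknown names are dropped."""
    return [s for s in available if POOR_WEATHER_RATING.get(s) is Rating.GOOD]


if __name__ == "__main__":
    print(robust_sensors(["cameraMono", "lidar", "radar"]))  # ['radar']
```

A two-level rating is a deliberate simplification: Table A1 also records nuances (for example, the human eye being poor only in heavy rain) that a richer ontology would keep as separate properties.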

2.2. UAV for Vehicular Applications

2.3. Data Fusion

2.4. Secured Communication

- Inclement weather or poor illumination reduces visibility, which is a major factor in car crashes;

- In an increasingly connected environment, intelligent tools such as UAVs can be requested to provide additional data that improve perception and visibility;

- Having data from various sensors raises the question of how to fuse them. Knowledge-based approaches, especially ontologies, have shown great potential for multi-sensor management;

- When an external entity is used, the communication must be secure. In that regard, VLC technology offers strong potential.

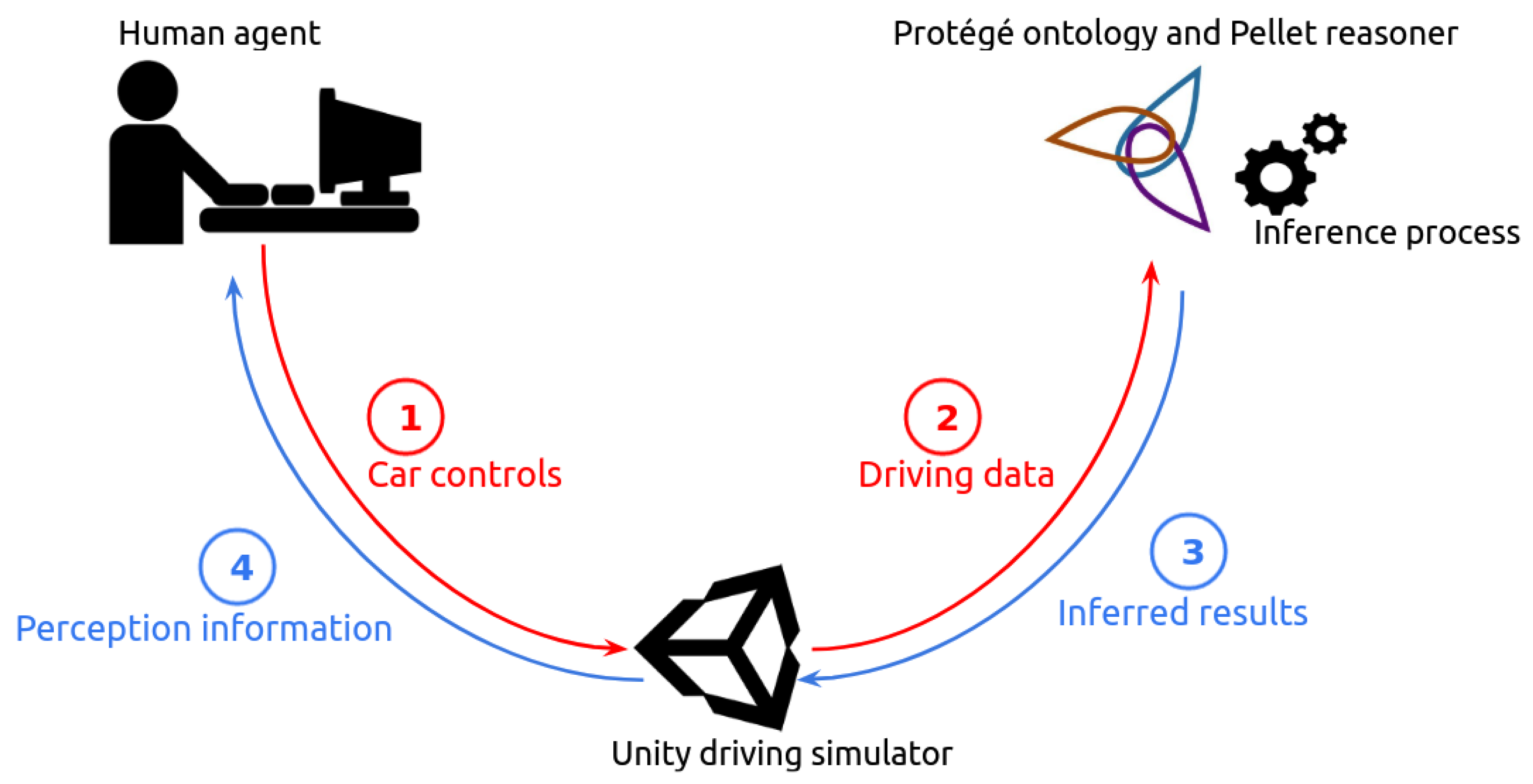

3. Proposed Methodology

3.1. Knowledge Base

- Vehicle represents the different vehicles detected in the environment. The class encompasses both the Car and the UAV entities;

- Weather lists all the types of weather that can be encountered. In this case, it covers [Sunny, Fog, Rain, Snow];

- Environment describes the context in which the vehicle operates, one among [NormalEnv, DarkEnv, BadWeatherEnv, UnusualEnv];

- Sensors covers the sensors used for perception on a vehicle, as detailed in [22]. The main ones are [cameraMono, cameraStereo, cameraInfra, Lidar, Radar, Sonar]. In addition, environmental sensors are used to determine the environment status: [rainSensor, brightnessSensor, fogSensor]. This class is illustrated in Figure 2 and detailed in Table A2 in Appendix A.
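To make the vocabulary of classes, individuals and properties concrete, the sketch below mimics a small part of the knowledge base with plain Python classes: `Car` and `UAV` are sub-classes of `Vehicle`, each object is an individual, and a `sensors` set stands in for the hasSensor property. This is only an illustrative analogy under that assumption; the actual knowledge base is an ontology, and the names `individuals_with_sensor`, `ego` and `uav1` are hypothetical.

```python
from typing import Iterable, List, Set, Type


class Vehicle:
    """Top class: a vehicle detected in the environment (stand-in for the ontology class)."""

    def __init__(self, name: str, sensors: Set[str]):
        self.name = name        # identifies the individual
        self.sensors = sensors  # plays the role of the hasSensor property


class Car(Vehicle):
    """Sub-class of Vehicle."""


class UAV(Vehicle):
    """Sub-class of Vehicle."""


def individuals_with_sensor(individuals: Iterable[Vehicle], sensor: str,
                            cls: Type[Vehicle] = Vehicle) -> List[str]:
    """Names of individuals of `cls` (sub-classes included) whose hasSensor contains `sensor`."""
    return [v.name for v in individuals if isinstance(v, cls) and sensor in v.sensors]


if __name__ == "__main__":
    kb = [Car("ego", {"cameraMono", "fogSensor"}), UAV("uav1", {"cameraInfra", "radar"})]
    print(individuals_with_sensor(kb, "radar", UAV))  # ['uav1']
    print(individuals_with_sensor(kb, "cameraMono"))  # ['ego']
```

Querying with `cls=Vehicle` returns matches from both sub-classes, which mirrors how a query on a top class in an ontology also retrieves the individuals of its sub-classes.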

3.2. VLC Communication

- Radiofrequency (RF): DSRC (Dedicated Short-Range Communications) [64] is the communication protocol designated for automotive V2X use. It is the standard protocol, but its signal strength depends on the terrain, and it is sensitive to electromagnetic interference.

- VLC: The VLC protocol performs poorly in some weather conditions, but it can also improve lighting in darker areas, which makes it an interesting choice.

- Hybrid RF/VLC: This protocol allows a better Quality of Service via the redundancy of information. If the context allows it, this should be the preferred choice.

- Intelligent Transport Systems can natively communicate with their surroundings [65].

- VLC relies on light, so it also makes the environment brighter.

- The redundancy of information allows for more secure communication and a more robust system.
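The trade-offs between the three protocols described above can be read as a selection policy. The sketch below is one hypothetical ordering of those criteria, not the authors' rule set: RF alone in fog (where VLC performs poorly [79]), Hybrid whenever both ends support it, VLC in dark conditions, then whatever remains. The function name `choose_protocol` and its inputs are assumptions for illustration.

```python
from typing import Optional, Set


def choose_protocol(supported: Set[str], weather: str, dark: bool) -> Optional[str]:
    """Pick one of "RF", "VLC" or "Hybrid" from the protocols both ends support."""
    if weather == "Fog":
        # VLC performs poorly in heavy fog, so rely on RF alone.
        return "RF" if "RF" in supported else None
    if "Hybrid" in supported:
        # Redundancy over both channels: preferred when the context allows it.
        return "Hybrid"
    if dark and "VLC" in supported:
        # VLC also adds light in darker areas.
        return "VLC"
    if "RF" in supported:
        return "RF"
    return "VLC" if "VLC" in supported else None


if __name__ == "__main__":
    print(choose_protocol({"RF", "VLC", "Hybrid"}, "Fog", False))  # RF
    print(choose_protocol({"RF", "VLC"}, "Sunny", True))           # VLC
```

Returning `None` when no suitable protocol exists lets the caller decide whether to skip the data request rather than silently falling back to a degraded channel.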

3.2.1. Hashing Algorithms

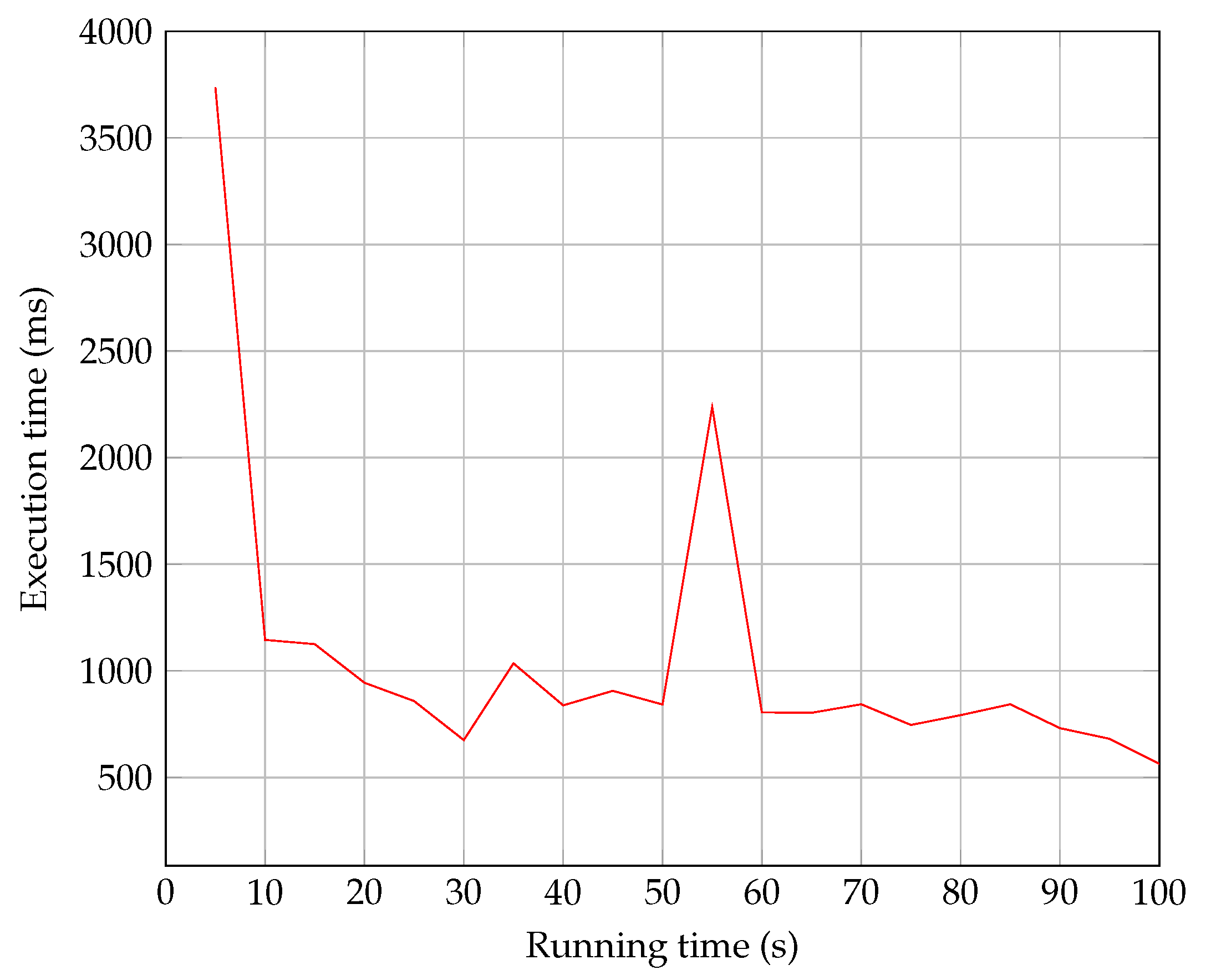
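Section 3.2 argues that sending the same information over both RF and VLC makes the communication more robust and more secure. The sketch below shows one way hashing could support that check, assuming (hypothetically) that the car and the UAV share a secret key and that each frame carries an HMAC-SHA256 tag; this is not necessarily the scheme evaluated in the paper. A plain unkeyed hash such as MD5 [66] or SHA-256 only detects accidental corruption, since anyone able to alter the payload can also recompute the hash, and MD5 collisions have been demonstrated [47]. The names `tag`, `accept_redundant` and `Frame` are illustrative.

```python
import hashlib
import hmac
from typing import Optional, Tuple

Frame = Tuple[bytes, bytes]  # (payload, tag) as received on one channel


def tag(key: bytes, payload: bytes) -> bytes:
    """HMAC-SHA256 tag computed by the sender over the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def accept_redundant(key: bytes, rf: Optional[Frame], vlc: Optional[Frame]) -> Optional[bytes]:
    """Return the payload of the first channel whose tag verifies, or None if none does."""
    for frame in (rf, vlc):
        if frame is None:
            continue  # nothing received on this channel (e.g. VLC degraded by fog)
        payload, received = frame
        # compare_digest avoids leaking information through timing differences
        if hmac.compare_digest(tag(key, payload), received):
            return payload
    return None


if __name__ == "__main__":
    key = b"shared-key"  # hypothetical pre-shared key
    msg = b"obstacle ahead"
    print(accept_redundant(key, (b"tampered", tag(key, msg)), (msg, tag(key, msg))))
```

With redundant channels, a frame that was corrupted or tampered with on one link can be rejected while the intact copy from the other link is still accepted.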

3.2.2. VLC Transmission Speed

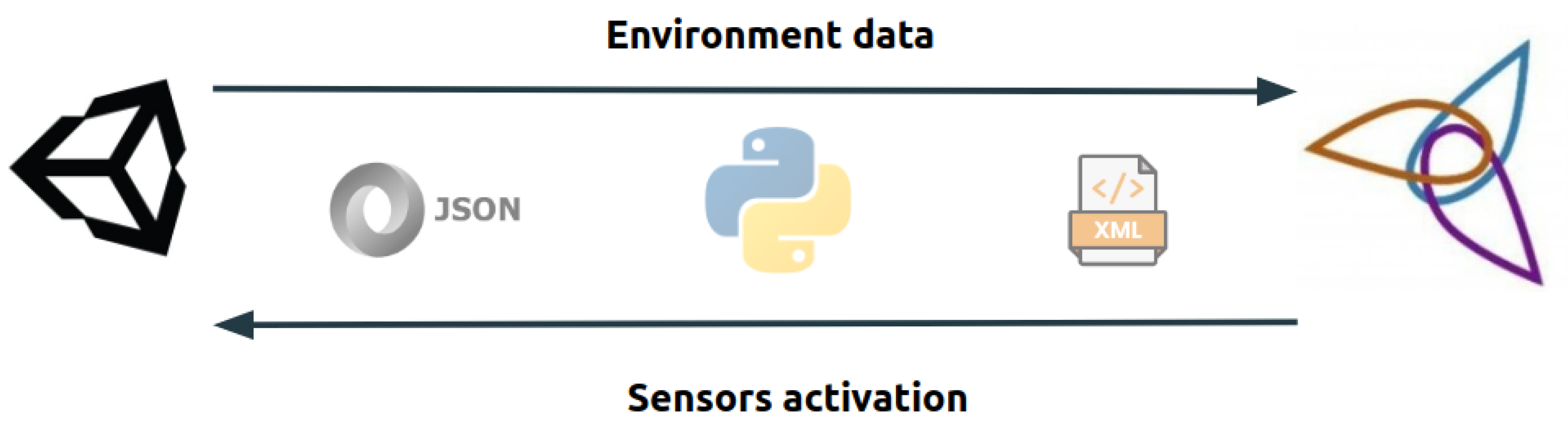

4. Simulation and Results

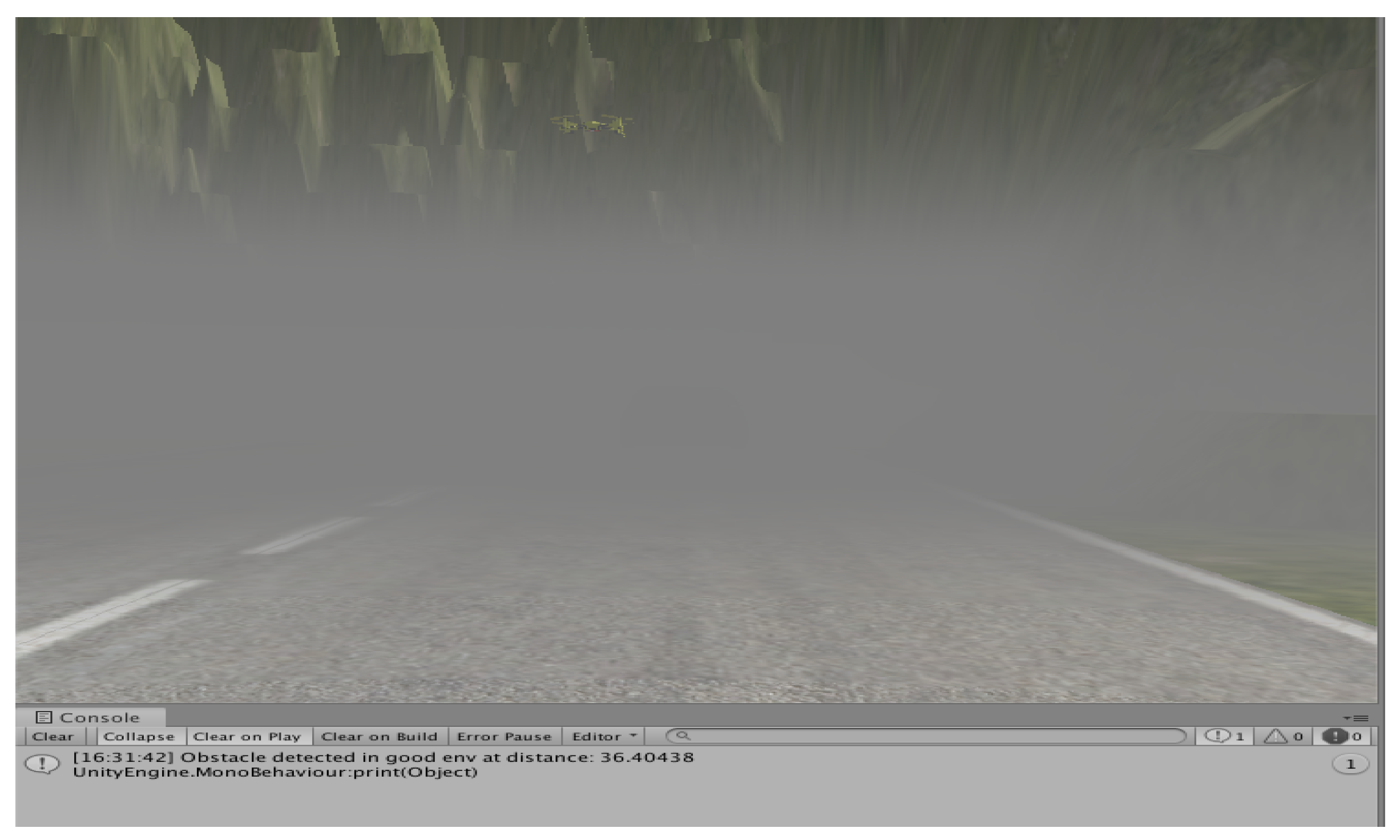

4.1. Simulated Environment

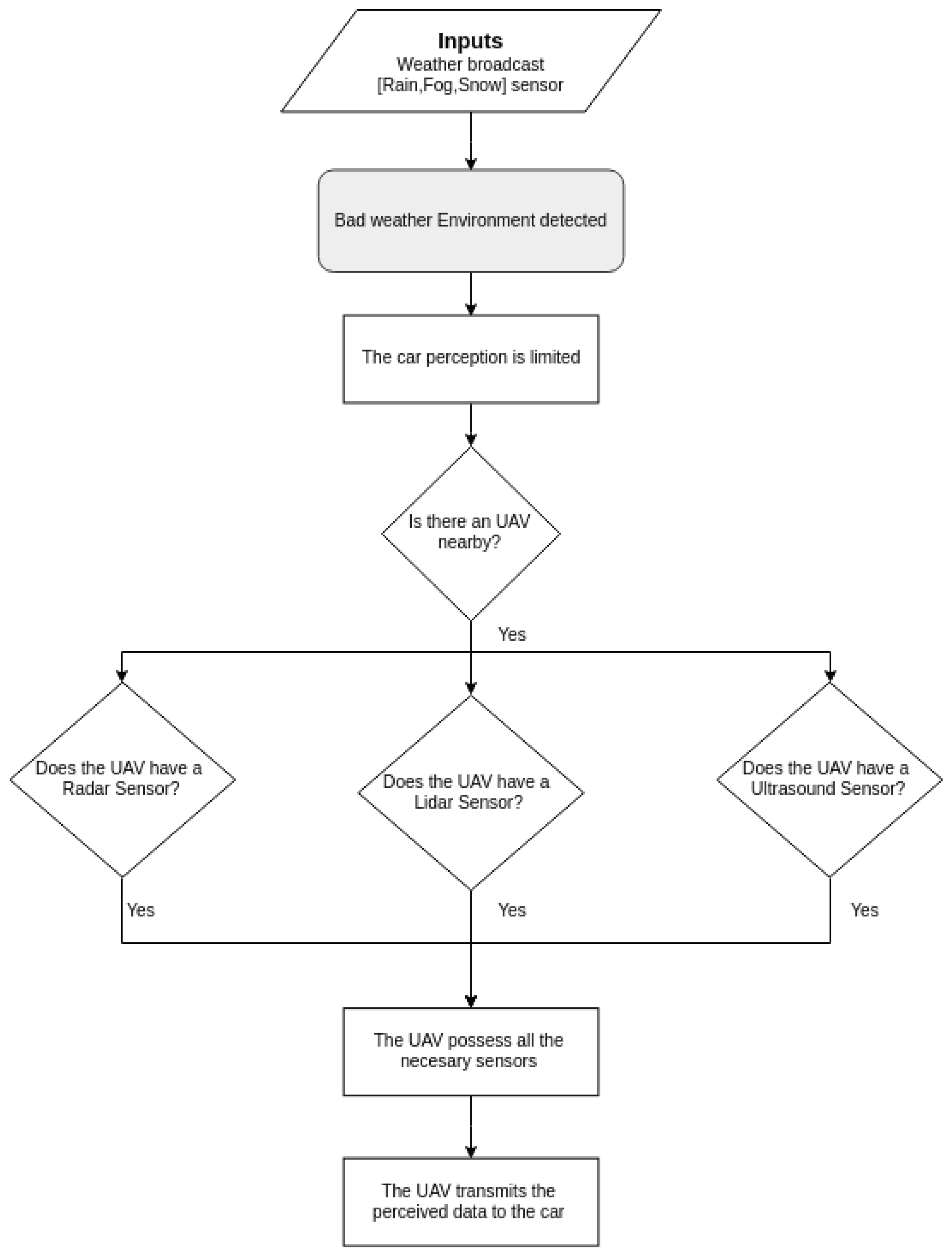

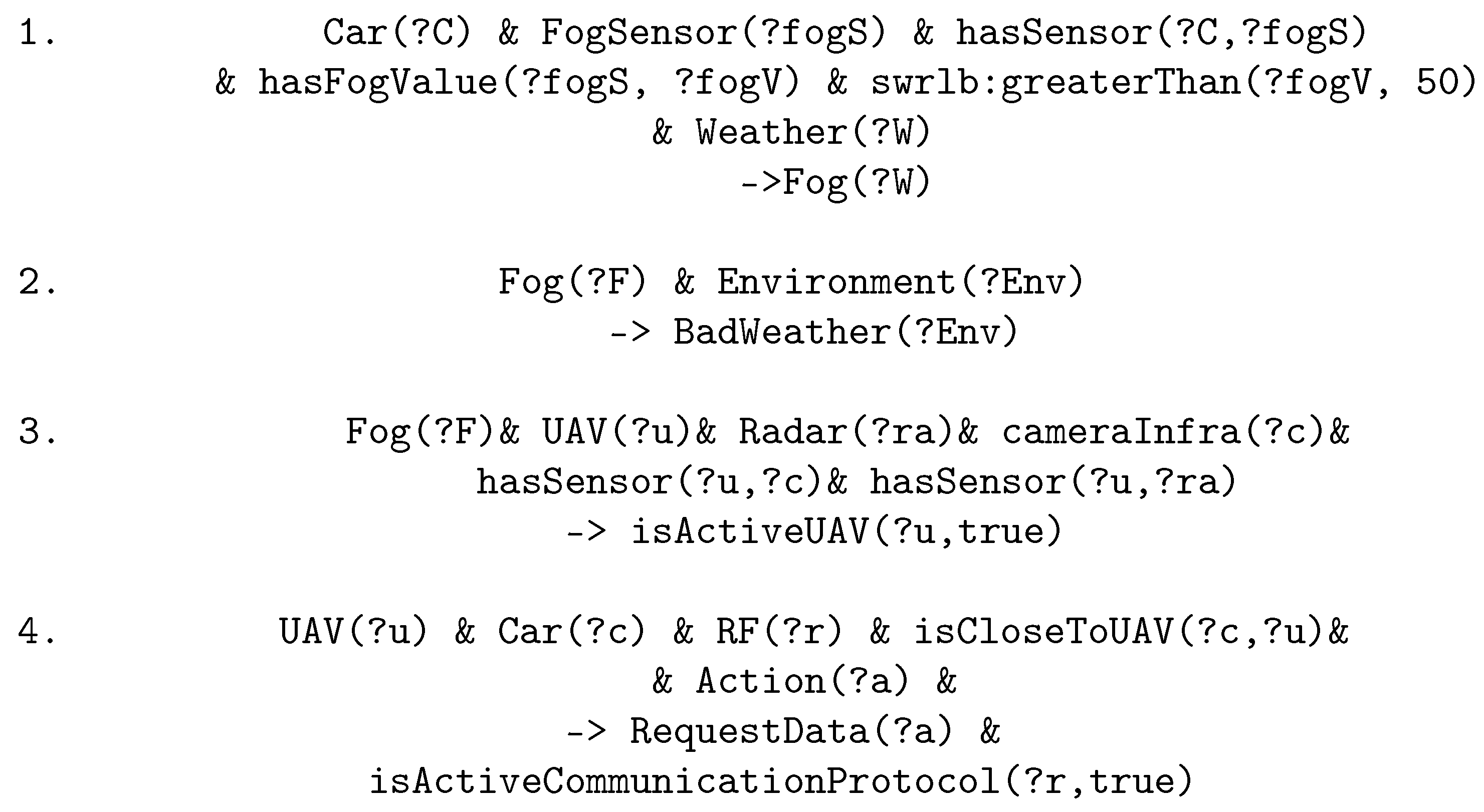

4.2. Logical Rules

1. The environmental sensors embedded on the main vehicle send back data. If the fog sensor value is above a certain threshold, the environment is inferred as Foggy;

2. A Foggy environment is considered a Bad Weather environment, as are Rainy and Snowy environments;

3. The model looks for a UAV that is within reach and carries sensors that work well in this environment. If such a UAV is deemed acceptable, it is considered for potential data transmission;

4. If the proximity condition is fulfilled, a data request is made. Because VLC performs poorly in heavy fog [79], an RF communication protocol is chosen.
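The four rules above form a forward-chaining inference. The sketch below reproduces that chain procedurally in Python rather than as ontology rules, under several stated assumptions: the fog threshold (`FOG_THRESHOLD = 50`) and the reach limit (`max_range_m = 200.0`) are hypothetical values not given in the paper, the "within reach" and "proximity" conditions of rules 3 and 4 are collapsed into one distance check, and "works well in this environment" is approximated by the sensors rated Good in poor weather in Table A1. All function and class names are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

FOG_THRESHOLD = 50  # hypothetical: the paper does not give the threshold value
BAD_WEATHER = {"Fog", "Rain", "Snow"}
# Sensors rated "Good" in poor weather in Table A1 (thermal camera, radar, ultrasound).
ROBUST_SENSORS = {"cameraInfra", "radar", "sonar"}


@dataclass
class UavInfo:
    name: str
    sensors: Set[str]
    distance_m: float  # hypothetical distance between the UAV and the car


def infer_weather(fog_sensor_value: int) -> str:
    """Rule 1: a fog sensor reading above the threshold means Fog, else the default Sun."""
    return "Fog" if fog_sensor_value > FOG_THRESHOLD else "Sun"


def infer_environment(weather: str) -> str:
    """Rule 2: Fog, Rain and Snow all map to a BadWeather environment."""
    return "BadWeather" if weather in BAD_WEATHER else "Normal"


def select_uav(uavs: List[UavInfo], max_range_m: float) -> Optional[UavInfo]:
    """Rule 3: first UAV that carries a weather-robust sensor and is within reach."""
    for uav in uavs:
        if uav.sensors & ROBUST_SENSORS and uav.distance_m <= max_range_m:
            return uav
    return None


def plan_request(fog_sensor_value: int, uavs: List[UavInfo],
                 max_range_m: float = 200.0) -> Optional[Tuple[str, str]]:
    """Chain rules 1-4; return (UAV name, protocol) or None if no request is made."""
    weather = infer_weather(fog_sensor_value)
    if infer_environment(weather) != "BadWeather":
        return None  # visibility is not degraded, so no UAV data is requested
    uav = select_uav(uavs, max_range_m)
    if uav is None:
        return None
    # Rule 4: VLC performs poorly in heavy fog, so RF is used.
    return uav.name, "RF"


if __name__ == "__main__":
    fleet = [UavInfo("uav_far", {"radar"}, 500.0),
             UavInfo("uav_cam", {"cameraMono"}, 50.0),
             UavInfo("uav_ok", {"cameraInfra"}, 120.0)]
    print(plan_request(80, fleet))  # ('uav_ok', 'RF')
    print(plan_request(10, fleet))  # None
```

In the example, the first UAV is rejected as out of reach and the second because a monoscopic camera performs poorly in fog, so only the third satisfies rule 3.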

4.3. Experimentation Description

- The driver needs to turn at an intersection with limited visibility.

- The driver goes through a foggy area.

- The driver needs to drive through an area where one of the buildings is on fire.

4.4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A

| Parameter | Human Eye | Monoscopic Camera | Stereoscopic Camera | Thermal Camera |
|---|---|---|---|---|
| Object detection | Very Good | Good | Very Good | Good for shape detection |
| Object recognition | Very Good | Good | Good | Poor |
| Range detection | Up to 300 m | Poor | Good | Poor |
| Poor weather performance | Poor in snow, fog and heavy rain | Poor in snow, fog and rain | Poor in snow, fog and rain | Good in snow, fog and rain |
| Poor illumination performance | Poor | Poor | Poor | Good |

| Parameter | Human Eye | Lidar | Radar | Ultrasound |
|---|---|---|---|---|
| Object detection | Very Good | Very Good | Very Good for distance measurement | Very Good |
| Object recognition | Very Good | Good | Poor | Poor |
| Range detection | Up to 300 m | Up to 200 m | Up to 200 m | Very Good |
| Poor weather performance | Poor in snow, fog and heavy rain | Poor in snow, fog and rain | Good | Good |
| Poor illumination performance | Poor | Good | Independent of illumination | Independent of illumination |

| Class | Sensor | Description |
|---|---|---|
| Active Sensors | Lidar | Uses a laser to map the surroundings |
| | Radar | Uses electromagnetic waves to determine a distance |
| | Ultrasound | Uses ultrasonic waves to determine a distance |
| Passive Sensors | Monoscopic Camera | Captures a continuous set of images that can be processed |
| | Stereoscopic Camera | Two different cameras allowing depth to be considered in image processing |
| | Thermal Camera | Captures infrared and thermal emissions. Works in harsher conditions, but the results are hard to process |
| Environmental Sensors | Rain Sensor | Determines the rain situation |
| | Fog Sensor | Determines the fog situation |
| | Brightness Sensor | Determines the brightness value (darkness or overbright situation) |

| Variable | Ontology Class Values and/or Linking Property | Associated Simulator Value | Comment |
|---|---|---|---|
| Vehicle Speed | hasSpeed [NoSpeed, ExtraLowSpeed, LowSpeed, NormalSpeed, HighSpeed, Overspeed] | (float) VehicleSpeed | |
| Position of the vehicle | isOnRoad [Roads] | (string) Name of the Road where the vehicle is | |
| Distance to Obstacle | hasDistanceFromVehicle [FarDistance, MediumDistance, NearDistance] | (float) DistanceToVehicle | |
| Weather status | [Fog, Sun] | (int) FogSensorValue | Default value “Sun” |
| Brightness status | [Dark, Normal, Overbright] | (int) brightnessValue | |
| Environmental Status | [Normal, Dark, BadWeather, Hazardous] | - | Inferred from other elements |
| Hazard | [FireHazard] | (int, int) X & Y Position of the hazard | Not declared if there is no Hazard |
| Sensors available | hasSensor [cameraMono, cameraStereo, cameraInfra, fogSensor, brightSensor, lidar, radar, sonar] | (string) Names of the sensors on the vehicle | For both the car and the UAV |
| Communication protocols | hasCommunicationProtocol [RF, VLC, Hybrid] | (string) Name of the communication protocol | |
| UAV data | isActiveUAV [true, false] | - | Inferred from other elements |
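The table maps continuous simulator values (such as VehicleSpeed and DistanceToVehicle) onto discrete ontology classes. The sketch below shows one way to perform that discretization with Python's standard `bisect` module. The class boundaries, and the assumption that VehicleSpeed is in km/h and DistanceToVehicle in metres, are hypothetical: the paper does not publish them. The helpers `discretize`, `has_speed` and `has_distance_from_vehicle` are illustrative names.

```python
import bisect
from typing import List

# Hypothetical class boundaries (inclusive upper limits); the paper does not publish them.
SPEED_BOUNDS_KMH = [0.0, 10.0, 30.0, 50.0, 90.0]
SPEED_CLASSES = ["NoSpeed", "ExtraLowSpeed", "LowSpeed", "NormalSpeed", "HighSpeed", "Overspeed"]
DISTANCE_BOUNDS_M = [10.0, 30.0]
DISTANCE_CLASSES = ["NearDistance", "MediumDistance", "FarDistance"]


def discretize(value: float, bounds: List[float], labels: List[str]) -> str:
    """Return the label of the first interval whose inclusive upper bound is >= value."""
    if len(labels) != len(bounds) + 1:
        raise ValueError("need exactly one more label than bounds")
    return labels[bisect.bisect_left(bounds, value)]


def has_speed(vehicle_speed_kmh: float) -> str:
    """Map the simulator's VehicleSpeed to a hasSpeed class."""
    return discretize(vehicle_speed_kmh, SPEED_BOUNDS_KMH, SPEED_CLASSES)


def has_distance_from_vehicle(distance_m: float) -> str:
    """Map the simulator's DistanceToVehicle to a hasDistanceFromVehicle class."""
    return discretize(distance_m, DISTANCE_BOUNDS_M, DISTANCE_CLASSES)


if __name__ == "__main__":
    print(has_speed(27.0), has_distance_from_vehicle(20.0))  # LowSpeed MediumDistance
```

Keeping the boundaries in data rather than in code makes it easy to tune them without touching the reasoning rules that consume the resulting classes.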

References

1. New Jersey Bill A2757. Senate and General Assembly of the State of New Jersey. 2012. Available online: https://www.njleg.state.nj.us/bills/BillView.asp?BillNumber=A2757 (accessed on 8 November 2021).

2. Oklahoma Bill HB3007. Oklahoma House of Congress. 2012. Available online: http://www.oklegislature.gov/BillInfo.aspx?Bill=hb3007&Session=1800 (accessed on 8 November 2021).

3. Su, K.; Li, J.; Fu, H. Smart city and the applications. In Proceedings of the 2011 International Conference on Electronics, Communications and Control (ICECC), Ningbo, China, 9–11 September 2011; pp. 1028–1031.

4. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

5. Minkoff, A.S. A Markov decision model and decomposition heuristic for dynamic vehicle dispatching. Oper. Res. 1993, 41, 77–90.

6. Khezaz, A.; Hina, M.D.; Guan, H.; Ramdane-Cherif, A. Driving Context Detection and Validation using Knowledge-based Reasoning. In Proceedings of the 12th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management, Budapest, Hungary, 2–4 November 2020; Volume 2.

7. Fuchs, S.; Rass, S.; Kyamakya, K. Integration of Ontological Scene Representation and Logic-Based Reasoning for Context-Aware Driver Assistance Systems. Electron. Commun. EASST 2008, 11, 1–12.

8. Endsley, M.R. Toward a theory of situation awareness in dynamic systems. In Situational Awareness; Routledge: London, UK, 2017; pp. 9–42.

9. Baumann, M.R.; Petzoldt, T.; Krems, J.F. Situation Awareness beim Autofahren als Verstehensprozess. In MMI Interaktiv-Aufmerksamkeit und Situationawareness beim Autofahren; Gesellschaft für Informatik e.V.: Bonn, Germany, 2006; Volume 1.

10. Campbell, M. The wet-pavement accident problem: Breaking through. Traffic Q. 1971, 25, 209–214.

11. Harith, S.H.; Mahmud, N.; Doulatabadi, M. Environmental Factor and Road Accident: A Review Paper. In Proceedings of the International Conference on Industrial Engineering and Operations Management, Bangkok, Thailand, 5–7 March 2019; p. 10.

12. Das, S.; Brimley, B.K.; Lindheimer, T.E.; Zupancich, M. Association of reduced visibility with crash outcomes. IATSS Res. 2018, 42, 143–151.

13. Das, S.; Dutta, A.; Sun, X. Patterns of rainy weather crashes: Applying rules mining. J. Transp. Saf. Secur. 2020, 12, 1083–1105.

14. Andrey, J.; Yagar, S. A temporal analysis of rain-related crash risk. Accid. Anal. Prev. 1993, 25, 465–472.

15. Carlevaris-Bianco, N.; Eustice, R.M. Learning visual feature descriptors for dynamic lighting conditions. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2769–2776.

16. Gade, R.; Moeslund, T.B. Thermal cameras and applications: A survey. Mach. Vis. Appl. 2014, 25, 245–262.

17. Tarel, J.P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2201–2208.

18. Tarel, J.P.; Hautiere, N.; Caraffa, L.; Cord, A.; Halmaoui, H.; Gruyer, D. Vision Enhancement in Homogeneous and Heterogeneous Fog. IEEE Intell. Transport. Syst. Mag. 2012, 4, 6–20.

19. World Health Organization. Save LIVES: A Road Safety Technical Package; World Health Organization: Geneva, Switzerland, 2017.

20. Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

21. Schoettle, B. Sensor Fusion: A Comparison of Sensing Capabilities of Human Drivers and Highly Automated Vehicles; University of Michigan: Ann Arbor, MI, USA, 2017.

22. Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406.

23. Campbell, M.; Egerstedt, M.; How, J.P.; Murray, R.M. Autonomous driving in urban environments: Approaches, lessons and challenges. Philos. Trans. R. Soc. A 2010, 368, 4649–4672.

24. Vanholme, B.; Gruyer, D.; Lusetti, B.; Glaser, S.; Mammar, S. Highly Automated Driving on Highways Based on Legal Safety. IEEE Trans. Intell. Transp. Syst. 2013, 14, 333–347.

25. Woll, J. Monopulse Doppler radar for vehicle applications. In Proceedings of the Intelligent Vehicles ’95 Symposium, Detroit, MI, USA, 25–26 September 1995; pp. 42–47.

26. Mayhan, R.J.; Bishel, R.A. A two-frequency radar for vehicle automatic lateral control. IEEE Trans. Veh. Technol. 1982, 31, 32–39.

27. Rasshofer, R.H.; Gresser, K. Automotive radar and lidar systems for next generation driver assistance functions. Adv. Radio Sci. 2005, 3, 205–209.

28. Sivaraman, S.; Trivedi, M.M. Looking at Vehicles on the Road: A Survey of Vision-Based Vehicle Detection, Tracking, and Behavior Analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

29. Sahin, F.E. Long-Range, High-Resolution Camera Optical Design for Assisted and Autonomous Driving. Photonics 2019, 6, 73.

30. Smith, M. Light Detection and Ranging (LIDAR), Volume 2. A Bibliography with Abstracts; National Technical Information Service: Springfield, VA, USA, 1978.

31. Li, B.; Zhang, T.; Xia, T. Vehicle detection from 3D lidar using fully convolutional network. arXiv 2016, arXiv:1608.07916.

32. Mahlisch, M.; Schweiger, R.; Ritter, W.; Dietmayer, K. Sensorfusion Using Spatio-Temporal Aligned Video and Lidar for Improved Vehicle Detection. In Proceedings of the 2006 IEEE Intelligent Vehicles Symposium, Meguro-Ku, Japan, 13–15 June 2006; pp. 424–429.

33. Iwasaki, Y. A method of robust moving vehicle detection for bad weather using an infrared thermography camera. In Proceedings of the 2008 International Conference on Wavelet Analysis and Pattern Recognition, Hong Kong, China, 30–31 August 2008; Volume 1, pp. 86–90.

34. Hina, M.D.; Guan, H.; Soukane, A.; Ramdane-Cherif, A. CASA: An Alternative Smartphone-Based ADAS. Int. J. Inf. Technol. Decis. Mak. 2021, 20, 1–41.

35. Bengler, K.; Dietmayer, K.; Farber, B.; Maurer, M.; Stiller, C.; Winner, H. Three Decades of Driver Assistance Systems: Review and Future Perspectives. IEEE Intell. Transp. Syst. Mag. 2014, 6, 6–22.

36. Menouar, H.; Guvenc, I.; Akkaya, K.; Uluagac, A.S.; Kadri, A.; Tuncer, A. UAV-Enabled Intelligent Transportation Systems for the Smart City: Applications and Challenges. IEEE Commun. Mag. 2017, 55, 22–28.

37. Shi, W.; Zhou, H.; Li, J.; Xu, W.; Zhang, N.; Shen, X. Drone assisted vehicular networks: Architecture, challenges and opportunities. IEEE Netw. 2018, 32, 130–137.

38. Hadiwardoyo, S.A.; Hernández-Orallo, E.; Calafate, C.T.; Cano, J.C.; Manzoni, P. Experimental characterization of UAV-to-car communications. Comput. Netw. 2018, 136, 105–118.

39. Zhan, C.; Zeng, Y.; Zhang, R. Energy-Efficient Data Collection in UAV Enabled Wireless Sensor Network. arXiv 2017, arXiv:1708.00221.

40. Božek, P.; Bezák, P.; Nikitin, Y.; Fedorko, G.; Fabian, M. Increasing the production system productivity using inertial navigation. Manuf. Technol. 2015, 15, 274–278.

41. Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep learning sensor fusion for autonomous vehicle perception and localization: A review. Sensors 2020, 20, 4220.

42. Bendadouche, R.; Roussey, C.; De Sousa, G.; Chanet, J.P.; Hou, K.M. Etat de l’art sur les ontologies de capteurs pour une intégration intelligente des données. In Proceedings of the INFORSID 2012, Montpellier, France, 29–31 May 2012; pp. 89–104.

43. Calder, M.; Morris, R.A.; Peri, F. Machine reasoning about anomalous sensor data. Ecol. Inform. 2010, 5, 9–18.

44. Compton, M.; Neuhaus, H.; Taylor, K.; Tran, K.N. Reasoning about sensors and compositions. SSN 2009, 522, 33–48.

45. Henson, L.; Barnaghi, T.; Corcho, C.; Castro, G.; Herzog, G.; Janowicz, K. Semantic Sensor Network XG Final Report. 2011. Available online: https://www.w3.org/2005/Incubator/ssn/XGR-ssn-20110628/ (accessed on 8 November 2021).

46. Rivest, R.L.; Shamir, A.; Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126.

47. Wang, X.; Feng, D.; Lai, X.; Yu, H. Collisions for Hash Functions MD4, MD5, HAVAL-128 and RIPEMD. IACR Cryptol. ePrint Arch. 2004, 2004, 199.

48. Fattahi, J. Analyse des Protocoles Cryptographiques par les Fonctions témoins. Ph.D. Thesis, Université Laval, Quebec City, QC, Canada, 2016.

49. Zimmermann, H. OSI reference model: The ISO model of architecture for open systems interconnection. IEEE Trans. Commun. 1980, 28, 425–432.

50. Nauryzbayev, G.; Abdallah, M.; Al-Dhahir, N. Outage Analysis of Cognitive Electric Vehicular Networks over Mixed RF/VLC Channels. arXiv 2020, arXiv:2004.11143.

51. Marabissi, D.; Mucchi, L.; Caputo, S.; Nizzi, F.; Pecorella, T.; Fantacci, R.; Nawaz, T.; Seminara, M.; Catani, J. Experimental Measurements of a Joint 5G-VLC Communication for Future Vehicular Networks. J. Sens. Actuator Netw. 2020, 9, 32.

52. Rahaim, M.B.; Vegni, A.M.; Little, T.D.C. A hybrid Radio Frequency and broadcast Visible Light Communication system. In Proceedings of the 2011 IEEE GLOBECOM Workshops (GC Wkshps), Houston, TX, USA, 5–9 December 2011; pp. 792–796.

53. Rakia, T.; Yang, H.C.; Gebali, F.; Alouini, M.S. Optimal Design of Dual-Hop VLC/RF Communication System with Energy Harvesting. IEEE Commun. Lett. 2016, 20, 1979–1982.

54. Pan, G.; Ye, J.; Ding, Z. Secure Hybrid VLC-RF Systems with Light Energy Harvesting. IEEE Trans. Commun. 2017, 65, 4348–4359.

55. Trochim, W.M.; Donnelly, J.P. Research Methods Knowledge Base. 2001. Available online: https://conjointly.com/kb/ (accessed on 8 November 2021).

56. Balci, O.; Smith, E.P. Validation of Expert System Performance; Technical Report; Department of Computer Science, Virginia Polytechnic Institute and State University: Blacksburg, VA, USA, 1986.

57. Jaffar, J.; Maher, M.J. Constraint logic programming: A survey. J. Log. Program. 1994, 19–20, 503–581.

58. Paulheim, H. Knowledge graph refinement: A survey of approaches and evaluation methods. Semant. Web 2016, 8, 489–508.

59. Noy, N.F.; McGuinness, D.L. Ontology Development 101: A Guide to Creating Your First Ontology; Knowledge Systems Laboratory: Stanford, CA, USA, 2001.

60. Horridge, M.; Bechhofer, S. The OWL API: A Java API for OWL ontologies. Semant. Web 2011, 2, 11–21.

61. Kornyshova, E.; Deneckère, R. Decision-making ontology for information system engineering. In International Conference on Conceptual Modeling; Springer: Berlin/Heidelberg, Germany, 2010; pp. 104–117.

62. O’Connor, M.J.; Shankar, R.D.; Musen, M.A.; Das, A.K.; Nyulas, C. The SWRLAPI: A Development Environment for Working with SWRL Rules. In Proceedings of the Fifth OWLED Workshop on OWL: Experiences and Directions, Collocated with the 7th International Semantic Web Conference (ISWC-2008), Karlsruhe, Germany, 26–27 October 2008.

63. Armand, A.; Filliat, D.; Ibanez-Guzman, J. Ontology-based context awareness for driving assistance systems. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 227–233.

64. Kenney, J.B. Dedicated short-range communications (DSRC) standards in the United States. Proc. IEEE 2011, 99, 1162–1182.

65. Tonguz, O.; Wisitpongphan, N.; Bai, F.; Mudalige, P.; Sadekar, V. Broadcasting in VANET. In Proceedings of the 2007 Mobile Networking for Vehicular Environments, Anchorage, AK, USA, 11 May 2007; pp. 7–12.

66. Rivest, R.; Dusse, S. The MD5 Message-Digest Algorithm; Internet Activities Board, Internet Privacy Task Force: Fremont, CA, USA, 1992.

67. Pamuła, D.; Zi, A. Securing video stream captured in real time. Przegląd Elektrotechniczny 2010, 86, 167–169.

68. Webb, W.T.; Hanzo, L. Modern Quadrature Amplitude Modulation: Principles and Applications for Fixed and Wireless Channels: One; IEEE Press-John Wiley: Hoboken, NJ, USA, 1994.

69. Haigh, P.A.; Ghassemlooy, Z.; Rajbhandari, S.; Papakonstantinou, I. Visible light communications using organic light emitting diodes. IEEE Commun. Mag. 2013, 51, 148–154.

70. Haigh, P.A.; Ghassemlooy, Z.; Rajbhandari, S.; Papakonstantinou, I.; Popoola, W. Visible Light Communications: 170 Mb/s Using an Artificial Neural Network Equalizer in a Low Bandwidth White Light Configuration. J. Light. Technol. 2014, 32, 1807–1813.

71. Shi, M.; Wang, C.; Li, G.; Liu, Y.; Wang, K.; Chi, N. A 5Gb/s 2 × 2 MIMO Real-time Visible Light Communication System based on silicon substrate LEDs. In Proceedings of the 2019 Global LIFI Congress (GLC), Paris, France, 12–13 June 2019; p. 5.

72. Udacity. Udacity Self-Driving Car Project. 2017. Available online: https://github.com/udacity/self-driving-car-sim (accessed on 8 November 2021).

73. Haas, J.K. A History of the Unity Game Engine. Ph.D. Thesis, Worcester Polytechnic Institute, Worcester, MA, USA, 2014.

74. Heng, L.; Choi, B.; Cui, Z.; Geppert, M.; Hu, S.; Kuan, B.; Liu, P.; Nguyen, R.; Yeo, Y.C.; Geiger, A.; et al. Project autovision: Localization and 3d scene perception for an autonomous vehicle with a multi-camera system. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4695–4702.

75. Gao, H.; Cheng, B.; Wang, J.; Li, K.; Zhao, J.; Li, D. Object classification using CNN-based fusion of vision and LIDAR in autonomous vehicle environment. IEEE Trans. Ind. Inform. 2018, 14, 4224–4231.

76. Steinbaeck, J.; Steger, C.; Holweg, G.; Druml, N. Next generation radar sensors in automotive sensor fusion systems. In Proceedings of the 2017 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 10–12 October 2017; pp. 1–6.

77. Sirin, E.; Parsia, B.; Grau, B.C.; Kalyanpur, A.; Katz, Y. Pellet: A practical OWL-DL reasoner. J. Web Semant. 2007, 5, 51–53.

78. Aleksandrov, D.; Penkov, I. Energy consumption of mini UAV helicopters with different number of rotors. In Proceedings of the 11th International Symposium “Topical Problems in the Field of Electrical and Power Engineering”, Pärnu, Estonia, 16–21 January 2012; pp. 259–262.

79. Kim, Y.H.; Cahyadi, W.A.; Chung, Y.H. Experimental demonstration of VLC-based vehicle-to-vehicle communications under fog conditions. IEEE Photonics J. 2015, 7, 1–9.

80. Chen, Q.; Xie, Y.; Guo, S.; Bai, J.; Shu, Q. Sensing system of environmental perception technologies for driverless vehicle: A review of state of the art and challenges. Sens. Actuators A Phys. 2021, 319, 112566.

81. Mohammed, A.S.; Amamou, A.; Ayevide, F.K.; Kelouwani, S.; Agbossou, K.; Zioui, N. The perception system of intelligent ground vehicles in all weather conditions: A systematic literature review. Sensors 2020, 20, 6532.

82. Gruyer, D.; Magnier, V.; Hamdi, K.; Claussmann, L.; Orfila, O.; Rakotonirainy, A. Perception, information processing and modeling: Critical stages for autonomous driving applications. Annu. Rev. Control 2017, 44, 323–341.

| Component | Description |
|---|---|
| Class | Object describing the concepts in the domain, whether they are abstract ideas or physical actors. Classes can be organized hierarchically, for example with Vehicle as a top class containing Car, Bus and Bike as sub-classes |
| Individuals | Real instances belonging to classes and representing the actual elements stored in the knowledge base |
| Properties | The specific information relating to classes. Properties can be intrinsic to an object, or extrinsic, representing the interconnections between different concepts and linking two individuals together |

| Study | Data Rate | Transmission Time (for 200 Gb) |
|---|---|---|
| Haigh et al., 2013 [69] | 3 Mb/s | 18 h |
| Haigh et al., 2014 [70] | 170 Mb/s | 19 min |
| Shi et al., 2019 [71] | 5 Gb/s | 40 s |
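The transmission times in the table follow from dividing the payload size by the data rate. The short sketch below reproduces that arithmetic for a 200 Gb payload; like the table, it assumes an ideal link and ignores protocol overhead, retransmissions and link losses. The function name `transmission_time_s` is illustrative.

```python
def transmission_time_s(size_bits: float, rate_bps: float) -> float:
    """Ideal transfer time in seconds: size / rate, ignoring overhead, retransmissions and losses."""
    if rate_bps <= 0:
        raise ValueError("rate must be positive")
    return size_bits / rate_bps


if __name__ == "__main__":
    size = 200e9  # 200 Gb, as in the table
    for label, rate in [("3 Mb/s", 3e6), ("170 Mb/s", 170e6), ("5 Gb/s", 5e9)]:
        t = transmission_time_s(size, rate)
        print(f"{label}: {t:.0f} s = {t / 60:.1f} min = {t / 3600:.2f} h")
```

This gives about 66,667 s (roughly 18.5 h), 1,176 s (roughly 19.6 min) and 40 s, consistent with the rounded values in the table.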

| Driver | Average Speed | Time to Complete the Circuit | Max Speed | Number of Incidents |
|---|---|---|---|---|
| Normal driver | 27 km/h | 67 s | 69 km/h | 1 |
| Cautious driver | 16 km/h | 92 s | 37 km/h | 0 |
| Careless driver | 33 km/h | 54 s | 78 km/h | 3 |

| Driver | Average Speed | Time to Complete the Circuit | Max Speed | Number of Incidents |
|---|---|---|---|---|
| Normal driver | 31 km/h | 50 s | 78 km/h | 0 |
| Cautious driver | 22 km/h | 65 s | 46 km/h | 0 |
| Careless driver | 44 km/h | 41 s | 120 km/h | 2 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khezaz, A.; Hina, M.D.; Guan, H.; Ramdane-Cherif, A. Knowledge-Based Approach for the Perception Enhancement of a Vehicle. J. Sens. Actuator Netw. 2021, 10, 66. https://doi.org/10.3390/jsan10040066
