Smart Stick Navigation System for Visually Impaired Based on Machine Learning Algorithms Using Sensors Data

Abstract

1. Introduction

2. Related Work

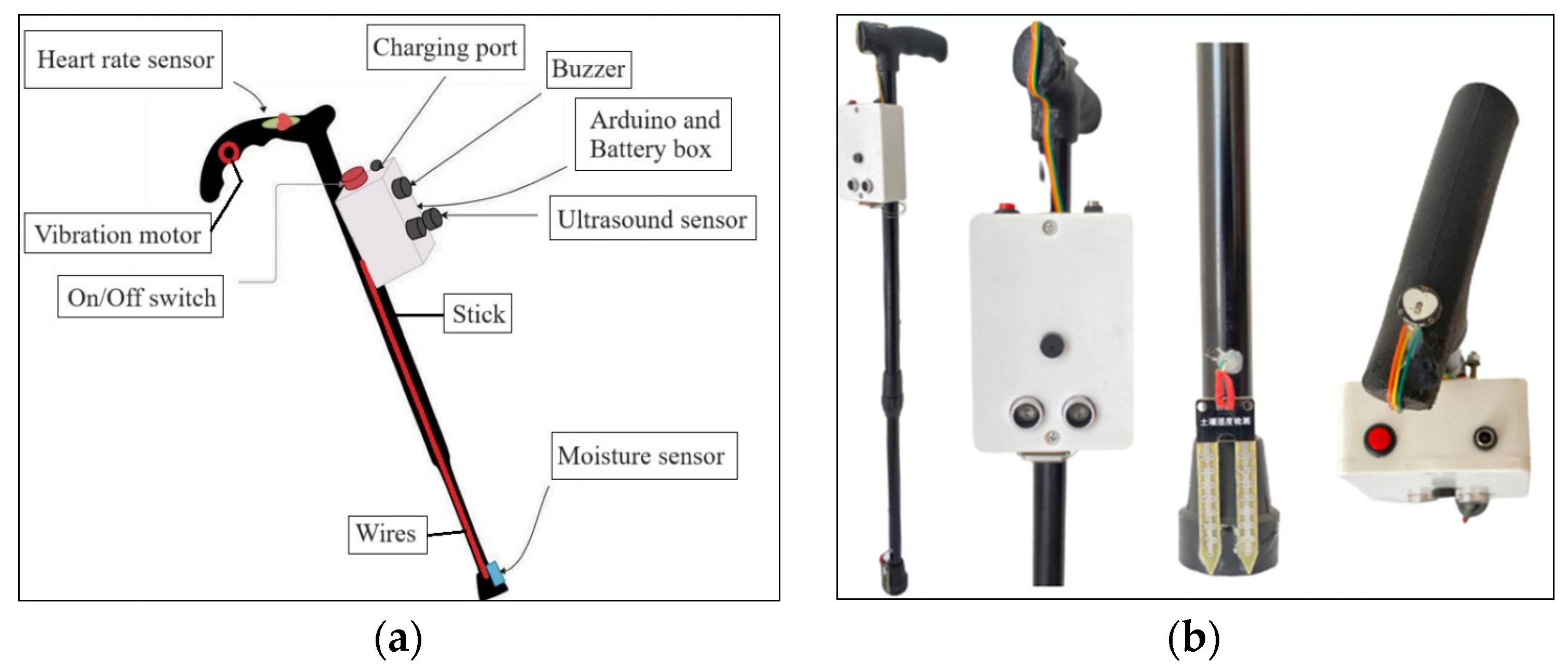

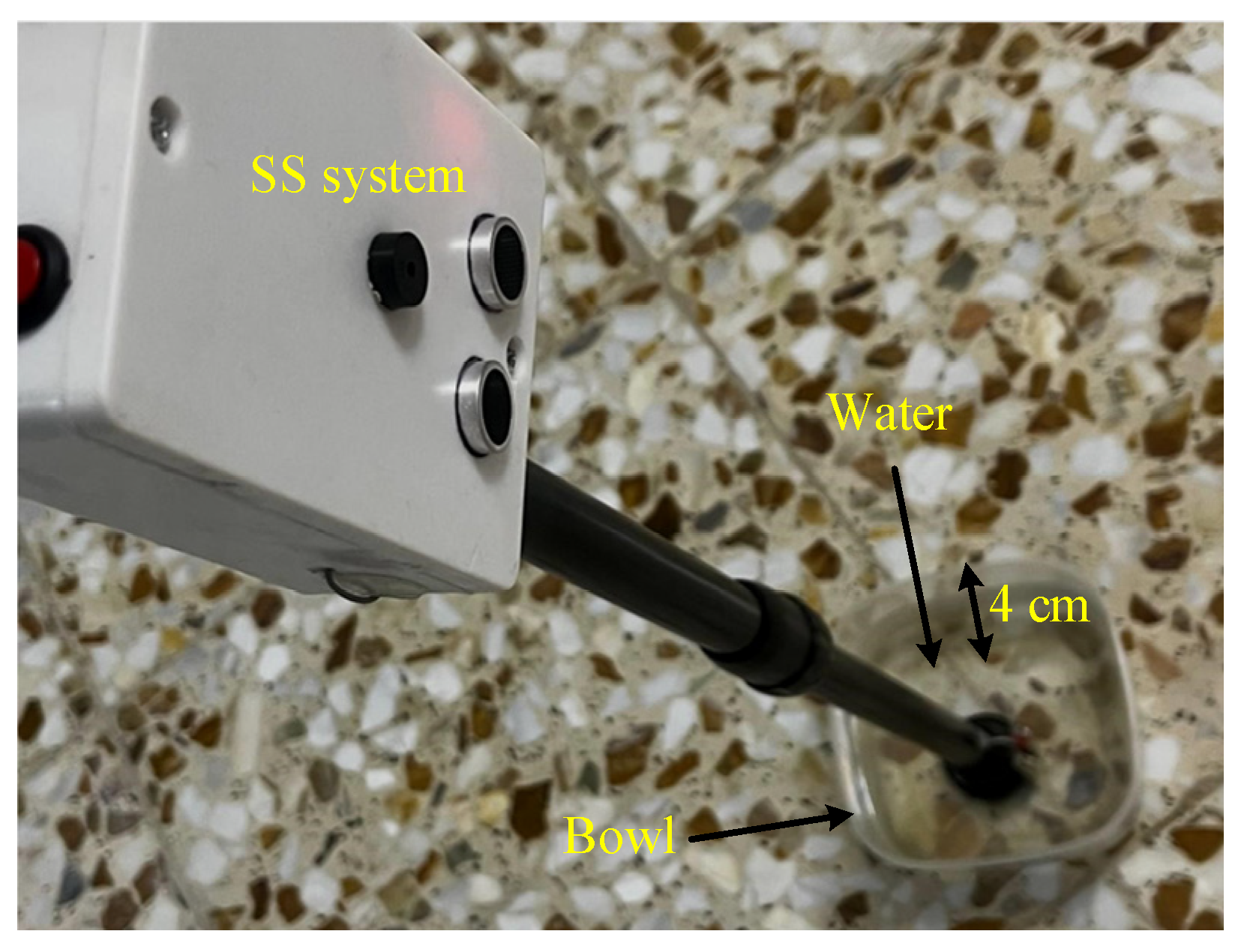

3. System Design

4. Hardware Architecture

4.1. Microcontroller-Based Arduino Nano

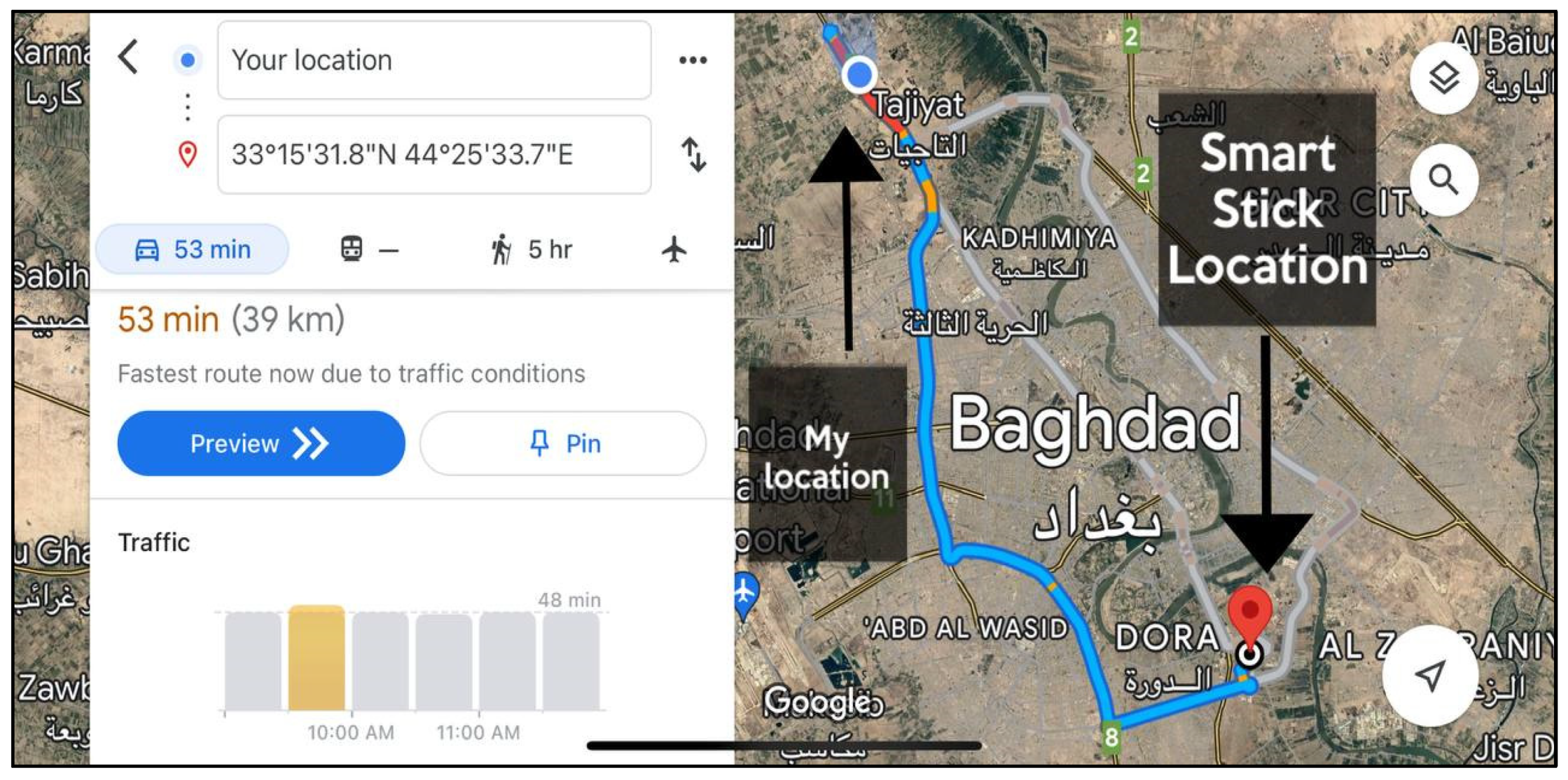

4.2. GPS Module

4.3. GSM Module

4.4. Biomedical and Environmental Sensors

4.5. Actuators (Vibration Motor and Buzzer)

4.6. Power Source

5. Software Architecture

- Initially, the microcontroller’s input and output ports and the serial port’s data rate were configured.

- Subsequently, the sensors and modules were initialized: the heart rate, soil moisture, and ultrasonic sensors, together with the GSM and GPS modules.

- The sensors acquired the relevant data, which were stored and processed within the microcontroller.

- The microcontroller prompted the GPS to acquire the VIP’s geolocations (latitude and longitude).

- If the geolocations were successfully obtained, they were stored in the microcontroller for subsequent transmission to the family member or caregiver; otherwise, the process returned to the GPS acquisition step (step 4).

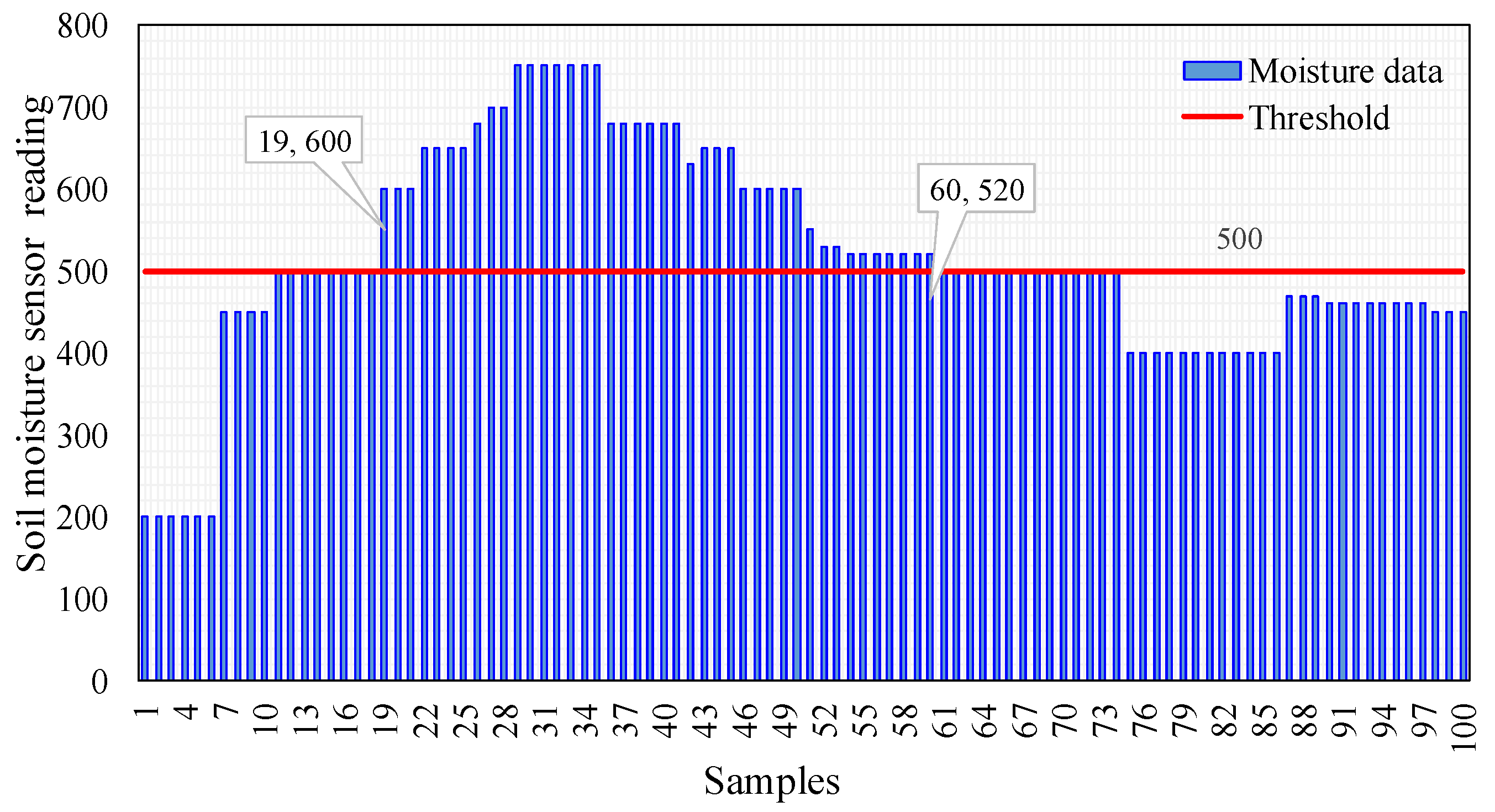

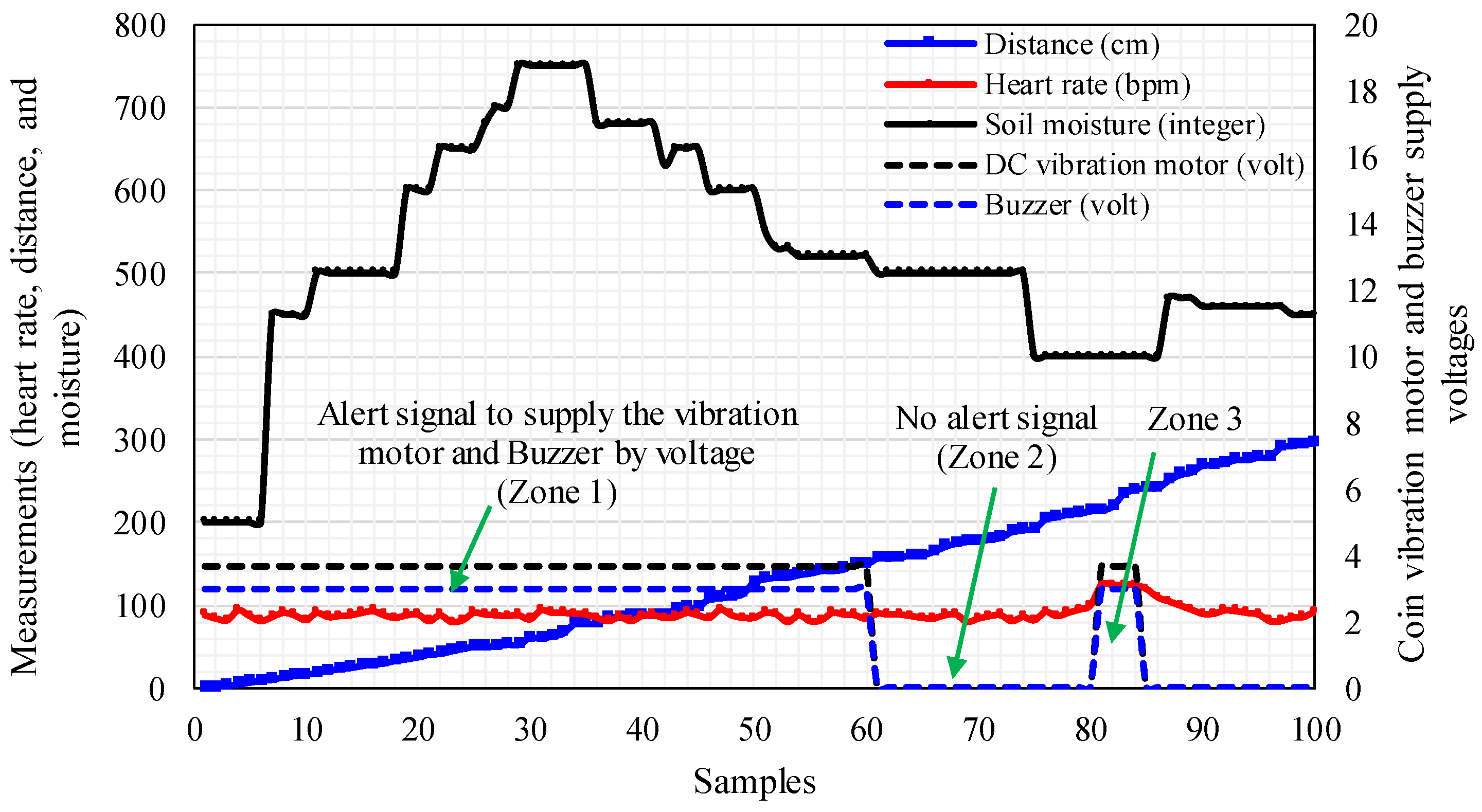

- The stored sensor data were then tested. If the heart rate exceeded 120 bpm, an obstacle was detected at a distance of less than 40 cm, or the soil moisture reading surpassed 500, the microcontroller activated a buzzer and a DC vibration motor to alert the VIP to their health and environmental conditions. Otherwise, the microcontroller put the sensors and the GSM module into sleep mode to conserve the energy of the SS system. A timestep of 3 s was configured for processing the data acquired from the sensors. This interval was selected to give the blind person a real-time warning signal, as sound or vibration, and enable them to take the necessary action.

- The microcontroller transmitted the geolocations and an SMS (related to the VIP’s heart rate) to the GSM module.

- The GSM module conveyed the geolocations and SMS to the mobile phone of the family member or caregiver via the GSM network.

- Finally, the family member assisted the VIP in emergencies.
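
The decision logic in the steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the firmware running on the Arduino Nano. The thresholds (120 bpm, 40 cm, 500) and the 3 s timestep come from the text; the names `Reading`, `alert_reasons`, `acquire_fix`, and `process_cycle`, the `max_attempts` limit, the SMS wording, and the choice to send an SMS only when an alert condition holds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Thresholds and timestep quoted in the text.
HEART_RATE_LIMIT_BPM = 120
OBSTACLE_LIMIT_CM = 40
MOISTURE_LIMIT = 500
TIMESTEP_S = 3

Fix = Tuple[float, float]  # (latitude, longitude)


@dataclass
class Reading:
    heart_rate_bpm: float
    distance_cm: float
    moisture: float  # raw sensor value; the text gives no unit


def alert_reasons(r: Reading) -> List[str]:
    """Return the threshold conditions that the reading violates."""
    reasons = []
    if r.heart_rate_bpm > HEART_RATE_LIMIT_BPM:
        reasons.append("heart rate")
    if r.distance_cm < OBSTACLE_LIMIT_CM:
        reasons.append("obstacle")
    if r.moisture > MOISTURE_LIMIT:
        reasons.append("moisture")
    return reasons


def acquire_fix(read_gps: Callable[[], Optional[Fix]],
                max_attempts: int = 10) -> Optional[Fix]:
    """Poll the GPS until a fix is returned (the 'return to step 4' loop).

    max_attempts is a hypothetical safeguard; the text describes no limit.
    """
    for _ in range(max_attempts):
        fix = read_gps()
        if fix is not None:
            return fix
    return None


def process_cycle(r: Reading, fix: Optional[Fix]) -> dict:
    """Decide what one processing step should do with a reading."""
    reasons = alert_reasons(r)
    if not reasons:
        # No condition triggered: sensors and GSM sleep to save energy.
        return {"alert": False, "sleep": True, "sms": None}
    sms = None
    if fix is not None:
        # Hypothetical message format; the text does not specify the SMS body.
        sms = (f"Alert ({', '.join(reasons)}): HR {r.heart_rate_bpm:.0f} bpm "
               f"at {fix[0]:.5f},{fix[1]:.5f}")
    return {"alert": True, "sleep": False, "sms": sms}


# Example with placeholder coordinates (not measured data).
example_fix = acquire_fix(lambda: (33.3, 44.4))
print(process_cycle(Reading(130, 35, 200), example_fix))
```

Keeping the threshold test in a pure function separates it from the hardware calls, so the same rule could be checked on a desktop before being ported to the microcontroller.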

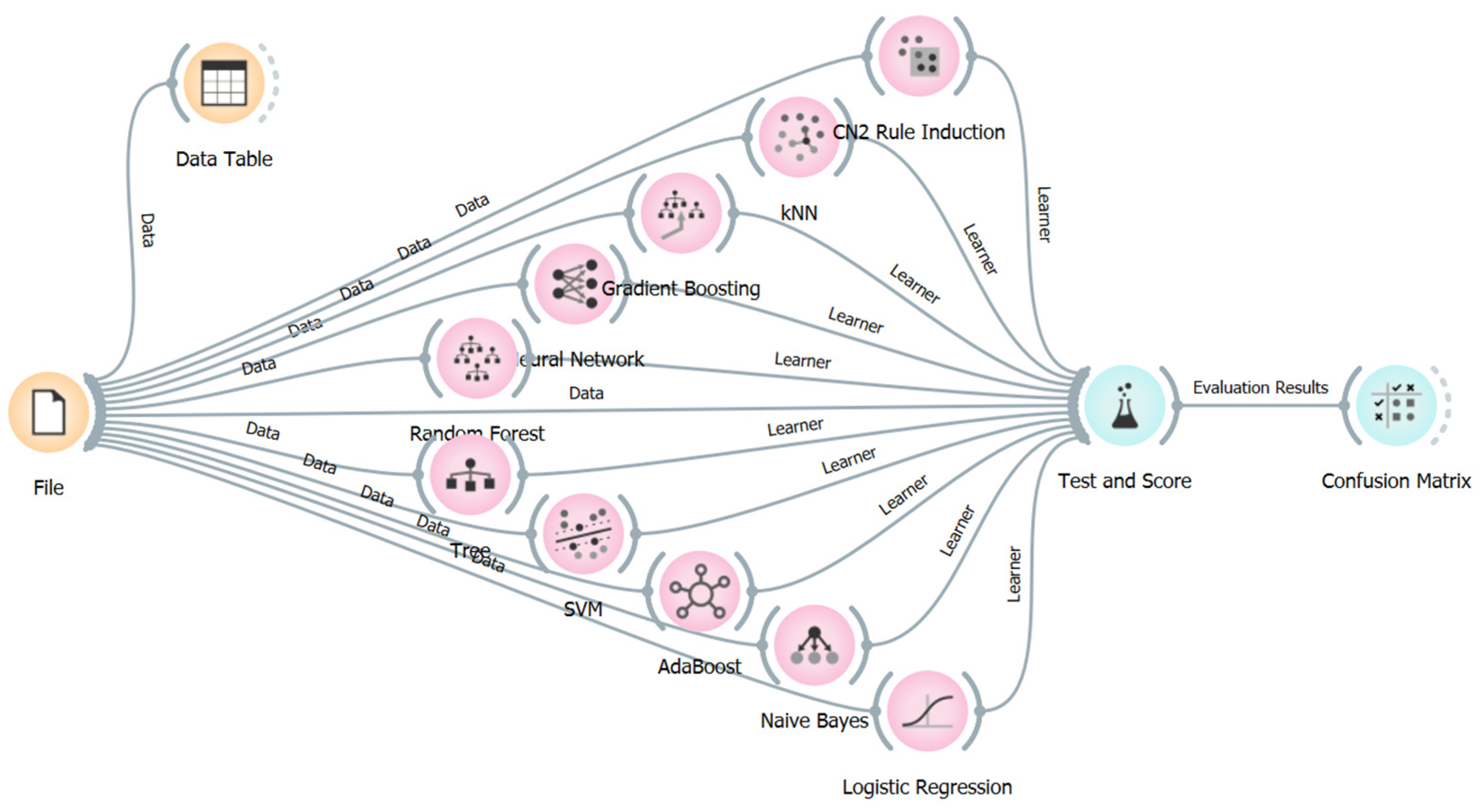

6. Machine Learning Algorithms

6.1. Data Collection for ML Algorithm

6.2. Implementation of Machine Learning Algorithms

6.3. Performance Evaluation of Machine Learning Algorithms

7. Results and Discussion

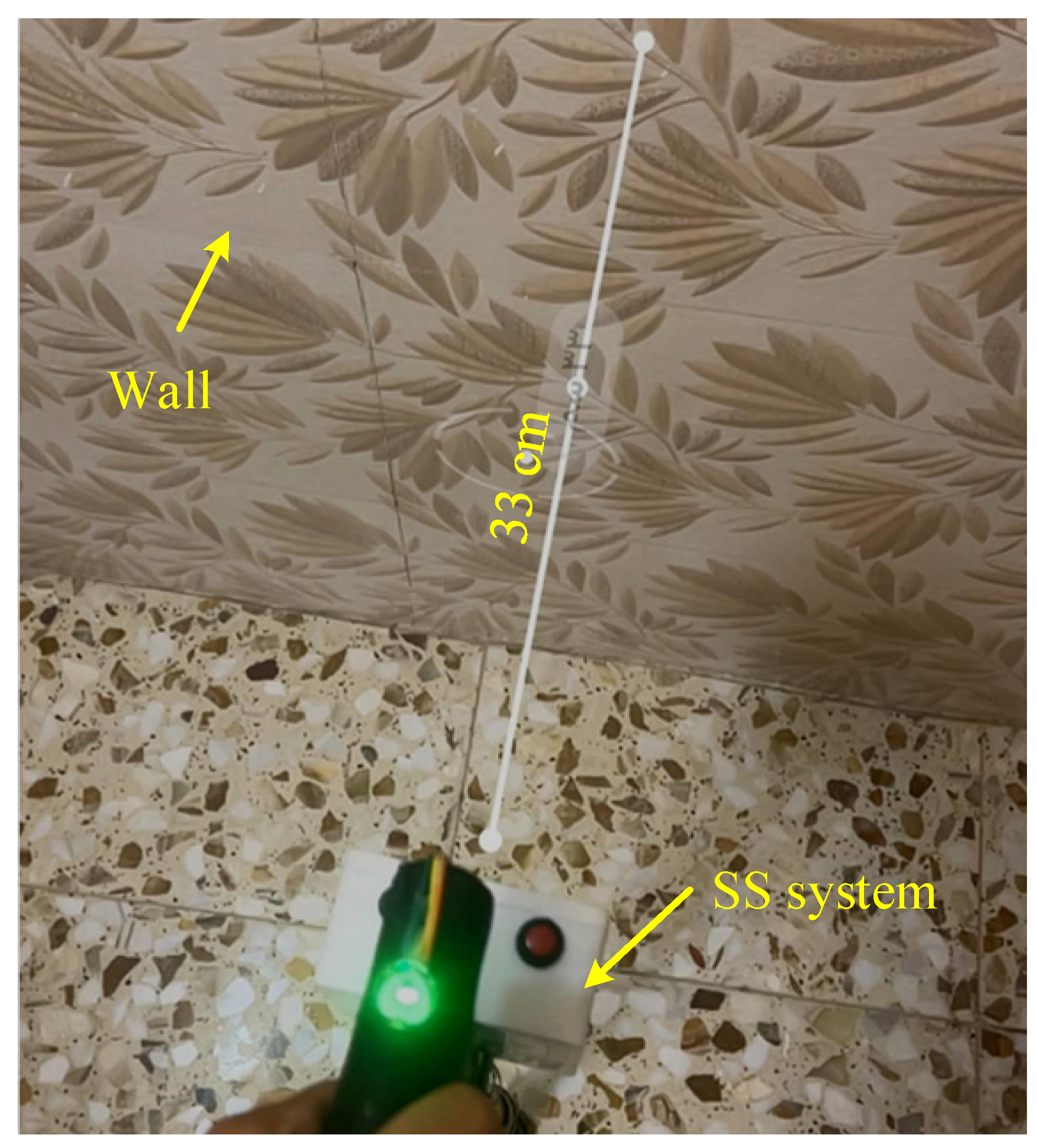

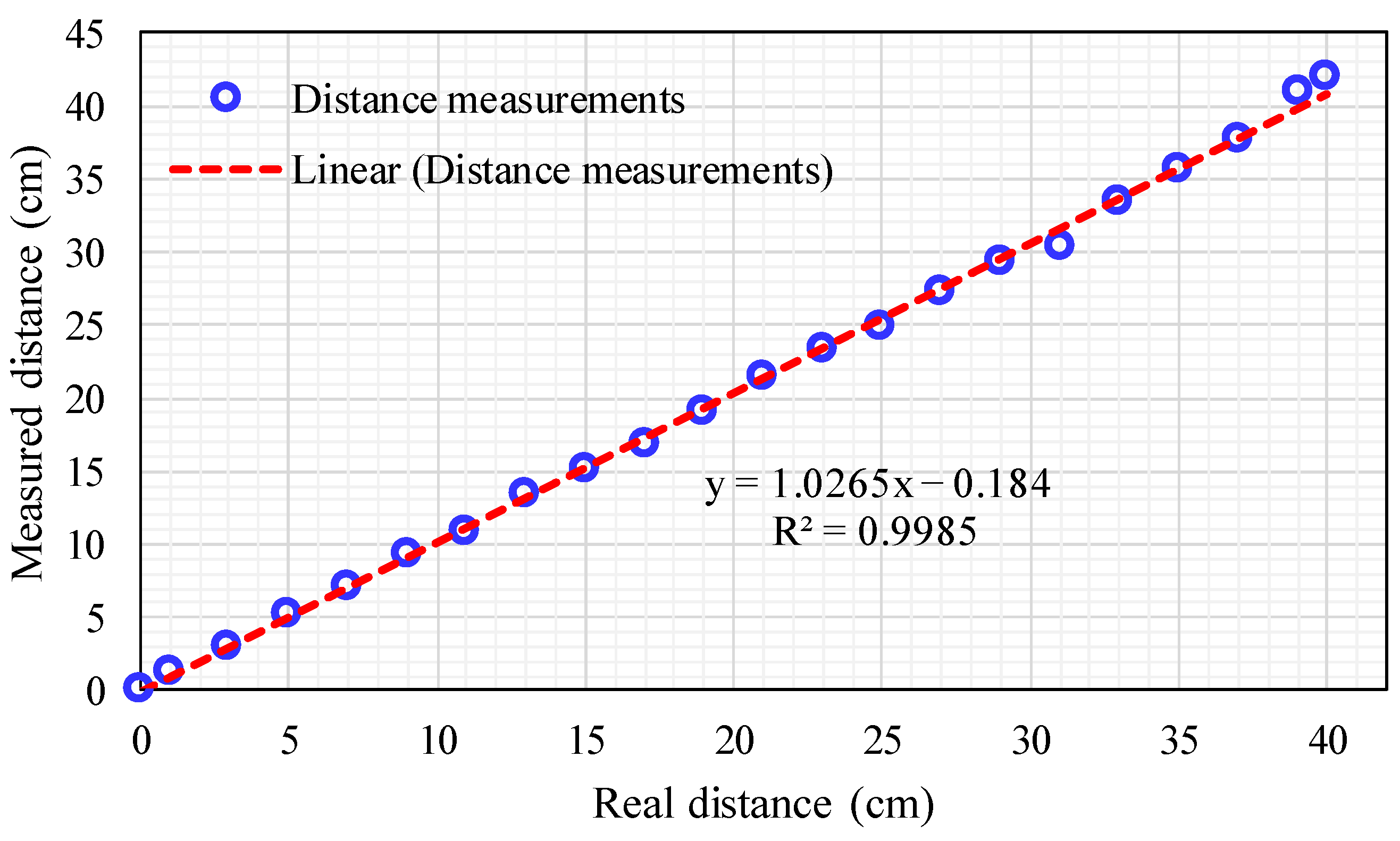

7.1. Results of Sensors Measurements

7.1.1. Results of Distance Measurements

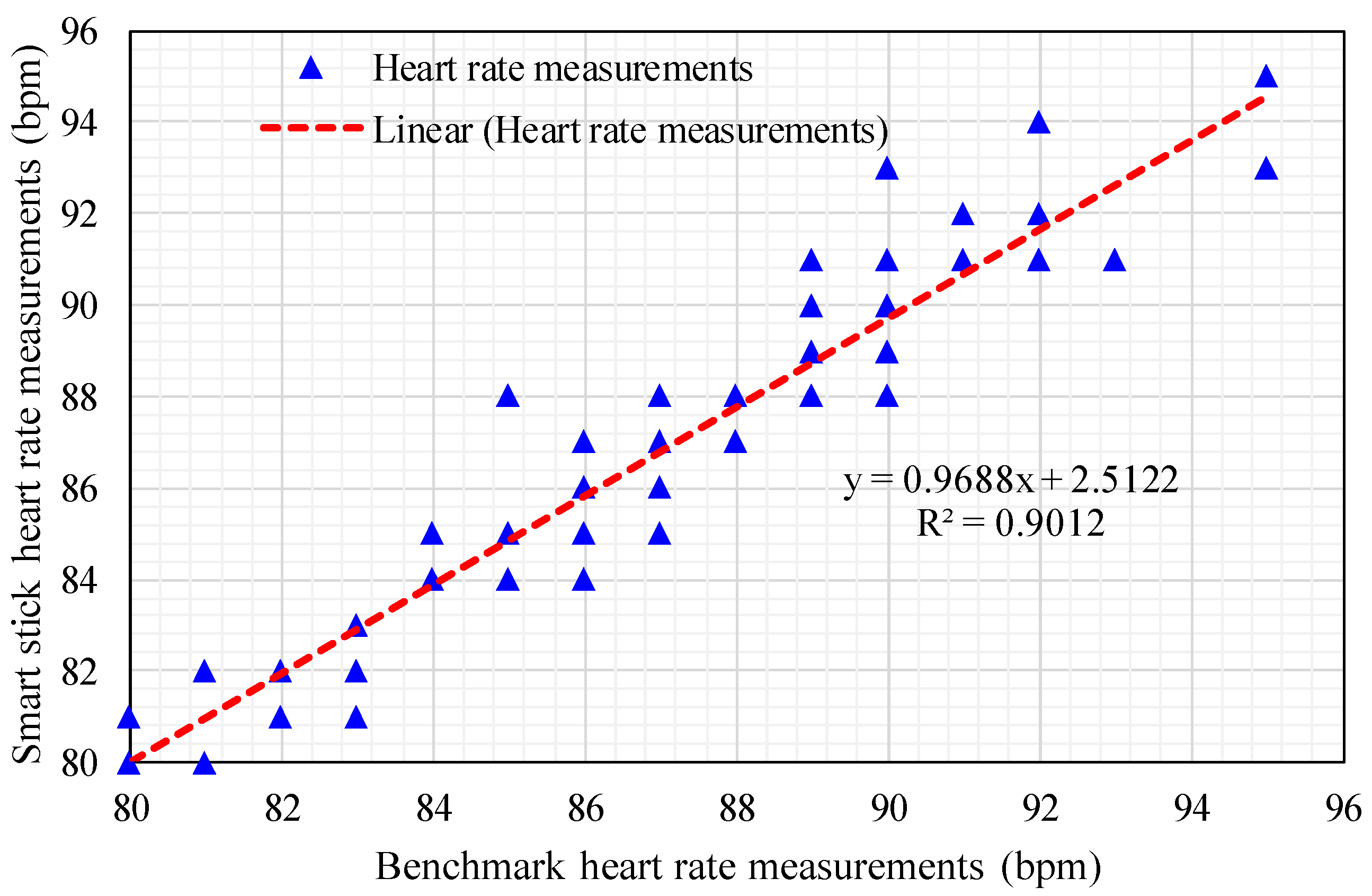

7.1.2. Results of Heart Rate Measurements

7.1.3. Results of Moisture Measurements

7.1.4. Results of Geolocations Estimation

7.2. Results of ML Algorithms

7.2.1. Results of Confusion Metrics Parameters

7.2.2. Results of Performance Metrics

- High-performance algorithms: Gradient Boosting, AdaBoost, CN2 Rule Induction, Random Forest, and Decision Tree, as highlighted in bold black color.

- Moderate performance algorithms: kNN, Neural Network, and SVM.

- Lower performance algorithms: Logistic Regression and Naïve Bayes.

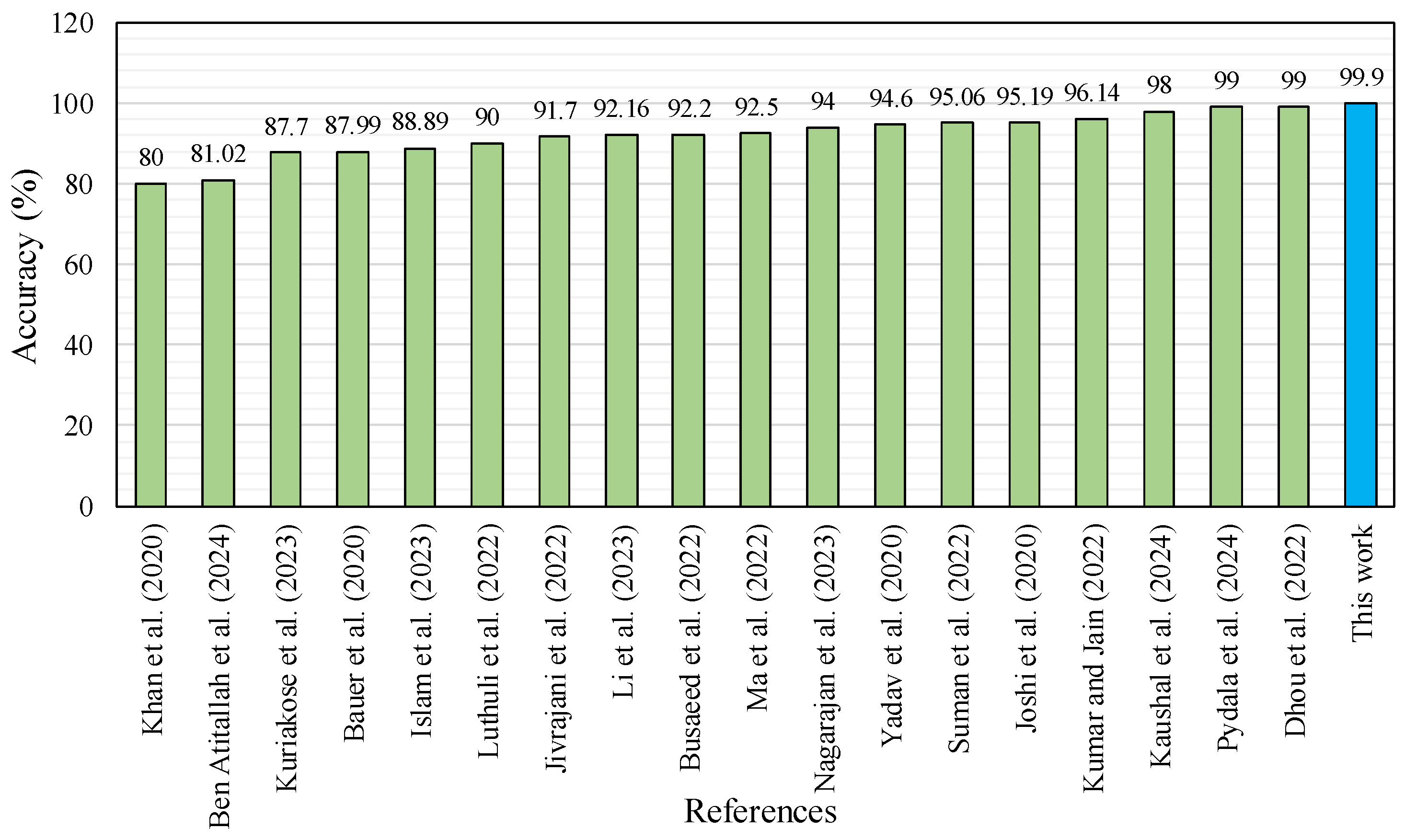

8. Comparison Results

| Ref./Year | Task | ML Model | Sensors: Ultrasonic | Sensors: Moisture | Sensors: Heart Rate | Sensors: Accelerometer | Actuator/Device: Vibration Motor | Actuator/Device: Buzzer | Actuator/Device: Camera | Actuator/Device: Earphone/Headphone | Communication Module: GPS | Communication Module: GSM | Processor: Raspberry Pi | Processor: Arduino | Detection Type: Obstacle Detection | Detection Type: Moisture Detection | Detection Type: Health Monitoring | Environments: Indoor | Environments: Outdoor | Performance Metric: Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jivrajani et al. [1]/2022 | Obstacle detection | CNN | ✓ | × | ✓ | × | × | × | ✓ | × | ✓ | ✓ | ✓ | × | ✓ | ✓ | ✓ | ✓ | ✓ | 91.7 |
| Luthuli et al. [54]/2022 | Detect obstacles and objects | CNN | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 90.00 |
| Yang et al. [59]/2024 | | A novel approach-based TENG | × | × | × | × | ✓ | ✓ | × | × | × | × | × | ✓ | ✓ | ✓ | × | × | × | N/A |
| Ben Atitallah et al. [27]/2024 | Obstacle detection | YOLO v5 neural network | × | × | × | × | × | × | × | × | × | × | × | × | ✓ | × | × | ✓ | ✓ | 81.02 |
| Ma et al. [28]/2024 | | YOLACT algorithm-based CNN | ✓ | × | × | ✓ | ✓ | × | ✓ | × | ✓ | × | ✓ | ✓ | ✓ | × | × | × | × | 92.5 |
| Khan et al. [36]/2020 | | Tesseract OCR engine | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 80.00 |
| Bauer et al. [60]/2020 | | | × | × | × | × | × | × | ✓ | × | × | × | × | × | ✓ | × | × | × | ✓ | 87.99 |
| Dhou et al. [55]/2022 | | | ✓ | × | × | ✓ | × | ✓ | ✓ | × | ✓ | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 89 99 98 93 |
| Busaeed et al. [61]/2022 | Obstacle detection | Kstar | ✓ | × | × | × | ✓ | ✓ | ✓ | ✓ | × | × | × | ✓ | ✓ | × | × | ✓ | ✓ | 92.20 |
| Suman et al. [10]/2022 | Obstacle identification | RNN | ✓ | ✓ | × | × | × | × | ✓ | ✓ | × | × | × | ✓ | ✓ | ✓ | × | ✓ | ✓ | 95.06 (indoor) 87.68 (outdoor) |
| Kumar and Jain [56]/2022 | Recognize the environments and navigate | YOLO | ✓ | × | × | × | × | × | ✓ | ✓ | ✓ | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 96.14 |
| Nagarajan and Gopinath [9]/2023 | Object recognition | DCNN and DRN | × | × | × | × | × | × | × | × | × | × | × | × | ✓ | × | × | ✓ | × | 94.00 |
| Kuriakose et al. [62]/2023 | Navigation assistant | DeepNAVI | ✓ | × | × | × | × | × | ✓ | × | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 87.70 |
| Islam et al. [13]/2023 | | SSDLite MobileNetV2 | ✓ | × | × | × | × | × | ✓ | × | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 88.89 |
| Li et al. [17]/2023 | | YOLO v5 | ✓ | ✓ | ✓ | ✓ | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | ✓ | ✓ | ✓ | ✓ | 92.16 |
| Pydala et al. [63]/2024 | | | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | × | × | ✓ | × | × | × | × | 99.00 |
| Kaushal et al. [64]/2024 | Obstacle identification | CNN | ✓ | × | × | × | × | ✓ | ✓ | × | ✓ | ✓ | × | ✓ | ✓ | × | × | ✓ | ✓ | 98.00 |
| Yadav et al. [57]/2020 | Object identification | CNN | ✓ | × | × | × | ✓ | × | ✓ | ✓ | × | × | ✓ | ✓ | × | × | × | × | 94.6 | |
| Kumar et al. [65]/2024 | Obstacle detection | CNN-based MobileNet model | ✓ | × | × | × | × | × | × | ✓ | ✓ | ✓ | × | ✓ | ✓ | × | × | ✓ | × | N/A |
| Joshi et al. [58]/2020 | | YOLO v3 | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 95.19 |
| This work | | | ✓ | ✓ | ✓ | × | ✓ | ✓ | × | × | ✓ | ✓ | × | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 99.9 |

9. Conclusions

- Additional sensors, such as thermal imaging, light detection and ranging (LiDAR), infrared, and cameras, could enable the system to detect and classify obstacles more precisely, especially in varying environmental conditions.

- Adding other health sensors to the SS system, such as oxygen saturation and blood pressure monitors, could provide more thorough health monitoring.

- Using edge and cloud computing could increase real-time data processing capabilities and enable continuous learning and updating of the ML algorithms based on data collected from multiple VIPs.

- Implementing deep learning algorithms can improve decision making and pattern recognition processes, making the SS system more adaptive to complex and diverse scenarios.

- Utilizing reinforcement-learning algorithms, for example, Deep Q-Networks, can help SS systems learn specific user preferences and behaviors, resulting in a more personalized and effective travel experience.

- Combining the SS system with smart eyeglasses could improve VIP navigation in various surroundings.

- Another potential enhancement relates to the battery usage of the SS, as the battery lifetime is presently limited. To overcome this power limitation, wireless power transfer technology, for example, could be used to charge the battery of the SS when it is not in use by the VIP.

- Expanding the feedback mechanisms to include haptic responses and voice signals could accommodate a wider range of user preferences and needs, improving the overall user experience.

- The ML algorithms were trained and tested offline in the current work. Therefore, in the next step, we plan to implement them in real time, which requires a more capable microcontroller, such as the Arduino Nano 33 BLE Sense, to combine sensor measurements with the ML algorithms.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jivrajani, K.; Patel, S.K.; Parmar, C.; Surve, J.; Ahmed, K.; Bui, F.M.; Al-Zahrani, F.A. AIoT-based smart stick for visually impaired person. IEEE Trans. Instrum. Meas. 2022, 72, 2501311.
2. Ashrafuzzaman, M.; Saha, S.; Uddin, N.; Saha, P.K.; Hossen, S.; Nur, K. Design and Development of a Low-cost Smart Stick for Visually Impaired People. In Proceedings of the International Conference on Science & Contemporary Technologies (ICSCT), Dhaka, Bangladesh, 5–7 August 2021; pp. 1–6.
3. Farooq, M.S.; Shafi, I.; Khan, H.; Díez, I.D.L.T.; Breñosa, J.; Espinosa, J.C.M.; Ashraf, I. IoT Enabled Intelligent Stick for Visually Impaired People for Obstacle Recognition. Sensors 2022, 22, 8914.
4. Ben Atitallah, A.; Said, Y.; Ben Atitallah, M.A.; Albekairi, M.; Kaaniche, K.; Alanazi, T.M.; Boubaker, S.; Atri, M. Embedded implementation of an obstacle detection system for blind and visually impaired persons’ assistance navigation. Comput. Electr. Eng. 2023, 108, 108714.
5. Gharghan, S.K.; Al-Kafaji, R.D.; Mahdi, S.Q.; Zubaidi, S.L.; Ridha, H.M. Indoor Localization for the Blind Based on the Fusion of a Metaheuristic Algorithm with a Neural Network Using Energy-Efficient WSN. Arab. J. Sci. Eng. 2023, 48, 6025–6052.
6. Ali, Z.A. Design and evaluation of two obstacle detection devices for visually impaired people. J. Eng. Res. 2023, 11, 100–105.
7. Singh, B.; Ekvitayavetchanuku, P.; Shah, B.; Sirohi, N.; Pundhir, P. IoT-Based Shoe for Enhanced Mobility and Safety of Visually Impaired Individuals. EAI Endorsed Trans. Internet Things 2024, 10, 1–19.
8. Wang, J.; Wang, S.; Zhang, Y. Artificial intelligence for visually impaired. Displays 2023, 77, 102391.
9. Nagarajan, A.; Gopinath, M.P. Hybrid Optimization-Enabled Deep Learning for Indoor Object Detection and Distance Estimation to Assist Visually Impaired Persons. Adv. Eng. Softw. 2023, 176, 103362.
10. Suman, S.; Mishra, S.; Sahoo, K.S.; Nayyar, A. Vision Navigator: A Smart and Intelligent Obstacle Recognition Model for Visually Impaired Users. Mob. Inf. Syst. 2022, 2022, 9715891.
11. Mohd Romlay, M.R.; Mohd Ibrahim, A.; Toha, S.F.; De Wilde, P.; Venkat, I.; Ahmad, M.S. Obstacle avoidance for a robotic navigation aid using Fuzzy Logic Controller-Optimal Reciprocal Collision Avoidance (FLC-ORCA). Neural Comput. Appl. 2023, 35, 22405–22429.
12. Holguín, A.I.H.; Méndez-González, L.C.; Rodríguez-Picón, L.A.; Olguin, I.J.C.P.; Carreón, A.E.Q.; Anaya, L.G.G. Assistive Device for the Visually Impaired Based on Computer Vision. In Innovation and Competitiveness in Industry 4.0 Based on Intelligent Systems; Méndez-González, L.C., Rodríguez-Picón, L.A., Pérez Olguín, I.J.C., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 71–97.
13. Islam, R.B.; Akhter, S.; Iqbal, F.; Rahman, M.S.U.; Khan, R. Deep learning based object detection and surrounding environment description for visually impaired people. Heliyon 2023, 9, e16924.
14. Balasubramani, S.; Mahesh Rao, E.; Abdul Azeem, S.; Venkatesh, N. Design IoT-Based Blind Stick for Visually Disabled Persons. In Proceedings of the International Conference on Computing, Communication, Electrical and Biomedical Systems, Coimbatore, India, 25–26 March 2021; pp. 385–396.
15. Dhilip Karthik, M.; Kareem, R.M.; Nisha, V.; Sajidha, S. Smart Walking Stick for Visually Impaired People. In Privacy Preservation of Genomic and Medical Data; Tyagi, A.K., Ed.; Wiley: Hoboken, NJ, USA, 2023; pp. 361–381.
16. Panazan, C.-E.; Dulf, E.-H. Intelligent Cane for Assisting the Visually Impaired. Technologies 2024, 12, 75.
17. Li, J.; Xie, L.; Chen, Z.; Shi, L.; Chen, R.; Ren, Y.; Wang, L.; Lu, X. An AIoT-Based Assistance System for Visually Impaired People. Electronics 2023, 12, 3760.
18. Hashim, Y.; Abdulbaqi, A.G. Smart stick for blind people with wireless emergency notification. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2024, 22, 175–181.
19. Sahoo, N.; Lin, H.-W.; Chang, Y.-H. Design and Implementation of a Walking Stick Aid for Visually Challenged People. Sensors 2019, 19, 130.
20. Nguyen, H.Q.; Duong, A.H.L.; Vu, M.D.; Dinh, T.Q.; Ngo, H.T. Smart Blind Stick for Visually Impaired People; Springer International Publishing: Cham, Switzerland, 2022; pp. 145–165.
21. Senthilnathan, A.; Palanivel, P.; Sowmiya, M. Intelligent stick for visually impaired persons. In Proceedings of the AIP Conference Proceedings, Coimbatore, India, 24–25 March 2022; p. 060016.
22. Khan, I.; Khusro, S.; Ullah, I. Identifying the walking patterns of visually impaired people by extending white cane with smartphone sensors. Multimed. Tools Appl. 2023, 82, 27005–27025.
23. Thi Pham, L.T.; Phuong, L.G.; Tam Le, Q.; Thanh Nguyen, H. Smart Blind Stick Integrated with Ultrasonic Sensors and Communication Technologies for Visually Impaired People. In Deep Learning and Other Soft Computing Techniques: Biomedical and Related Applications; Phuong, N.H., Kreinovich, V., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 1097, pp. 121–134.
24. Bazi, Y.; Alhichri, H.; Alajlan, N.; Melgani, F. Scene Description for Visually Impaired People with Multi-Label Convolutional SVM Networks. Appl. Sci. 2019, 9, 5062.
25. Gensytskyy, O.; Nandi, P.; Otis, M.J.D.; Tabi, C.E.; Ayena, J.C. Soil friction coefficient estimation using CNN included in an assistive system for walking in urban areas. J. Ambient Intell. Humaniz. Comput. 2023, 14, 14291–14307.
26. Chopda, R.; Khan, A.; Goenka, A.; Dhere, D.; Gupta, S. An Intelligent Voice Assistant Engineered to Assist the Visually Impaired. In Proceedings of the Intelligent Computing and Networking, Mumbai, India, 25–26 February 2022; Lecture Notes in Networks and Systems; pp. 143–155.
27. Ben Atitallah, A.; Said, Y.; Ben Atitallah, M.A.; Albekairi, M.; Kaaniche, K.; Boubaker, S. An effective obstacle detection system using deep learning advantages to aid blind and visually impaired navigation. Ain Shams Eng. J. 2024, 15, 102387.
28. Ma, Y.; Shi, Y.; Zhang, M.; Li, W.; Ma, C.; Guo, Y. Design and Implementation of an Intelligent Assistive Cane for Visually Impaired People Based on an Edge-Cloud Collaboration Scheme. Electronics 2022, 11, 2266.
29. Mahmood, M.F.; Mohammed, S.L.; Gharghan, S.K. Energy harvesting-based vibration sensor for medical electromyography device. Int. J. Electr. Electron. Eng. Telecommun. 2020, 9, 364–372.
30. Kulurkar, P.; kumar Dixit, C.; Bharathi, V.; Monikavishnuvarthini, A.; Dhakne, A.; Preethi, P. AI based elderly fall prediction system using wearable sensors: A smart home-care technology with IOT. Meas. Sens. 2023, 25, 100614.
31. Jumaah, H.J.; Kalantar, B.; Halin, A.A.; Mansor, S.; Ueda, N.; Jumaah, S.J. Development of UAV-Based PM2.5 Monitoring System. Drones 2021, 5, 60.
32. Ahmed, N.; Gharghan, S.K.; Mutlag, A.H. IoT-based child tracking using RFID and GPS. Int. J. Comput. Appl. 2023, 45, 367–378.
33. Fakhrulddin, S.S.; Gharghan, S.K.; Al-Naji, A.; Chahl, J. An Advanced First Aid System Based on an Unmanned Aerial Vehicles and a Wireless Body Area Sensor Network for Elderly Persons in Outdoor Environments. Sensors 2019, 19, 2955.
34. Fakhrulddin, S.S.; Gharghan, S.K. An Autonomous Wireless Health Monitoring System Based on Heartbeat and Accelerometer Sensors. J. Sens. Actuator Netw. 2019, 8, 39.
35. Al-Naji, A.; Al-Askery, A.J.; Gharghan, S.K.; Chahl, J. A System for Monitoring Breathing Activity Using an Ultrasonic Radar Detection with Low Power Consumption. J. Sens. Actuator Netw. 2019, 8, 32.
36. Khan, M.A.; Paul, P.; Rashid, M.; Hossain, M.; Ahad, M.A.R. An AI-based visual aid with integrated reading assistant for the completely blind. IEEE Trans. Hum. Mach. Syst. 2020, 50, 507–517.
37. Zakaria, M.I.; Jabbar, W.A.; Sulaiman, N. Development of a smart sensing unit for LoRaWAN-based IoT flood monitoring and warning system in catchment areas. Internet Things Cyber-Phys. Syst. 2023, 3, 249–261.
38. Sharma, A.K.; Singh, V.; Goyal, A.; Oza, A.D.; Bhole, K.S.; Kumar, M. Experimental analysis of Inconel 625 alloy to enhance the dimensional accuracy with vibration assisted micro-EDM. Int. J. Interact. Des. Manuf. 2023, 1–15.
39. Gharghan, S.K.; Nordin, R.; Ismail, M. Energy Efficiency of Ultra-Low-Power Bicycle Wireless Sensor Networks Based on a Combination of Power Reduction Techniques. J. Sens. 2016, 2016, 7314207.
40. Mahmood, M.F.; Gharghan, S.K.; Mohammed, S.L.; Al-Naji, A.; Chahl, J. Design of Powering Wireless Medical Sensor Based on Spiral-Spider Coils. Designs 2021, 5, 59.
41. Mahdi, S.Q.; Gharghan, S.K.; Hasan, M.A. FPGA-Based neural network for accurate distance estimation of elderly falls using WSN in an indoor environment. Measurement 2021, 167, 108276.
42. Prabhakar, A.J.; Prabhu, S.; Agrawal, A.; Banerjee, S.; Joshua, A.M.; Kamat, Y.D.; Nath, G.; Sengupta, S. Use of Machine Learning for Early Detection of Knee Osteoarthritis and Quantifying Effectiveness of Treatment Using Force Platform. J. Sens. Actuator Netw. 2022, 11, 48.
43. Kaseris, M.; Kostavelis, I.; Malassiotis, S. A Comprehensive Survey on Deep Learning Methods in Human Activity Recognition. Mach. Learn. Knowl. Extr. 2024, 6, 842–876.
44. Anarbekova, G.; Ruiz, L.G.B.; Akanova, A.; Sharipova, S.; Ospanova, N. Fine-Tuning Artificial Neural Networks to Predict Pest Numbers in Grain Crops: A Case Study in Kazakhstan. Mach. Learn. Knowl. Extr. 2024, 6, 1154–1169.
45. Runsewe, I.; Latifi, M.; Ahsan, M.; Haider, J. Machine Learning for Predicting Key Factors to Identify Misinformation in Football Transfer News. Computers 2024, 13, 127.
46. Onur, F.; Gönen, S.; Barışkan, M.A.; Kubat, C.; Tunay, M.; Yılmaz, E.N. Machine learning-based identification of cybersecurity threats affecting autonomous vehicle systems. Comput. Ind. Eng. 2024, 190, 110088.
47. Jingning, L. Speech recognition based on mobile sensor networks application in English education intelligent assisted learning system. Meas. Sens. 2024, 32, 101084.
48. Mutemi, A.; Bacao, F. E-Commerce Fraud Detection Based on Machine Learning Techniques: Systematic Literature Review. Big Data Min. Anal. 2024, 7, 419–444.
49. Makulavičius, M.; Petkevičius, S.; Rožėnė, J.; Dzedzickis, A.; Bučinskas, V. Industrial Robots in Mechanical Machining: Perspectives and Limitations. Robotics 2023, 12, 160.
50. Alslaity, A.; Orji, R. Machine learning techniques for emotion detection and sentiment analysis: Current state, challenges, and future directions. Behav. Inf. Technol. 2024, 43, 139–164.
51. Gharghan, S.K.; Hashim, H.A. A comprehensive review of elderly fall detection using wireless communication and artificial intelligence techniques. Measurement 2024, 226, 114186.
52. Chicco, D.; Warrens, M.J.; Jurman, G. The Matthews correlation coefficient (MCC) is more informative than Cohen’s Kappa and Brier score in binary classification assessment. IEEE Access 2021, 9, 78368–78381.
53. Sannino, G.; De Falco, I.; De Pietro, G. Non-Invasive Risk Stratification of Hypertension: A Systematic Comparison of Machine Learning Algorithms. J. Sens. Actuator Netw. 2020, 9, 34.
54. Luthuli, M.B.; Malele, V.; Owolawi, P.A. Smart Walk: A Smart Stick for the Visually Impaired. In Proceedings of the International Conference on Intelligent and Innovative Computing Applications, Balaclava, Mauritius, 8–9 December 2022; pp. 131–136.
55. Dhou, S.; Alnabulsi, A.; Al-Ali, A.-R.; Arshi, M.; Darwish, F.; Almaazmi, S.; Alameeri, R. An IoT machine learning-based mobile sensors unit for visually impaired people. Sensors 2022, 22, 5202.
56. Kumar, N.; Jain, A. A Deep Learning Based Model to Assist Blind People in Their Navigation. J. Inf. Technol. Educ. Innov. Pract. 2022, 21, 95–114.
57. Yadav, D.K.; Mookherji, S.; Gomes, J.; Patil, S. Intelligent Navigation System for the Visually Impaired - A Deep Learning Approach. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 652–659.
58. Joshi, R.C.; Yadav, S.; Dutta, M.K.; Travieso-Gonzalez, C.M. Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People. Entropy 2020, 22, 941.
59. Yang, Z.; Gao, M.; Choi, J. Smart walking cane based on triboelectric nanogenerators for assisting the visually impaired. Nano Energy 2024, 124, 109485.
60. Bauer, Z.; Dominguez, A.; Cruz, E.; Gomez-Donoso, F.; Orts-Escolano, S.; Cazorla, M. Enhancing perception for the visually impaired with deep learning techniques and low-cost wearable sensors. Pattern Recognit. Lett. 2020, 137, 27–36.
61. Busaeed, S.; Mehmood, R.; Katib, I.; Corchado, J.M. LidSonic for visually impaired: Green machine learning-based assistive smart glasses with smart app and Arduino. Electronics 2022, 11, 1076.
62. Kuriakose, B.; Shrestha, R.; Sandnes, F.E. DeepNAVI: A deep learning based smartphone navigation assistant for people with visual impairments. Expert Syst. Appl. 2023, 212, 118720.
63. Pydala, B.; Kumar, T.P.; Baseer, K.K. VisiSense: A Comprehensive IOT-based Assistive Technology System for Enhanced Navigation Support for the Visually Impaired. Scalable Comput. Pract. Exp. 2024, 25, 1134–1151.
64. Kaushal, R.K.; Kumar, T.P.; Sharath, N.; Parikh, S.; Natrayan, L.; Patil, H. Navigating Independence: The Smart Walking Stick for the Visually Impaired. In Proceedings of the 2024 5th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Lalitpur, Nepal, 18–19 January 2024; pp. 103–108.
65. Kumar, A.; Surya, G.; Sathyadurga, V. Echo Guidance: Voice-Activated Application for Blind with Smart Assistive Stick Using Machine Learning and IoT. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

| ML Models | Hyperparameters | Value/Type | Input Features | Target (Output) |
|---|---|---|---|---|
| kNN | Number of neighbors | 5 | | Alert decision (1, 0) |
| | Distance weighting | Uniform | | |
| | Metric | Euclidean | | |
| Gradient Boosting | Number of trees | 100 | | |
| | Learning rate | 0.1 | | |
| | Limit the depth of individual trees | 3 | | |
| | Fraction of training instances | 1 | | |
| | Split subset | Less than 2 | | |
| Random Forest | Number of trees | 100 | | |
| | Number of trees considered at each split | 5 | | |
| | Limit the depth of individual trees | 3 | | |
| Neural Network | Number of layers | 1 | | |
| | Number of neurons per layer | 20 | | |
| | Activation function type | Rectified Linear Unit (ReLU) | | |
| | Learning rate | 0.1 | | |
| | Solver | Adam | | |
| | Iteration | 100 | | |
| | Regularization (Alpha) | 0.0001 | | |
| SVM | Cost | 1 | | |
| | Regression loss epsilon | 0.1 | | |
| | Type of kernel | RBF | | |
| | Numerical tolerance | 0.001 | | |
| | Iteration | 100 | | |
| AdaBoost | Number of estimators | 100 | | |
| | Learning rate | 0.1 | | |
| | Classifier | SAMME.R | | |
| | Regression loss function | Linear | | |
| Naïve Bayes | Default | --- | | |
| Logistic Regression | Regularization | Ridge (L2) | | |
| | Cost strength | 1 | | |
| | Class weights | No | | |
| CN2 Rule Induction | Weighted | 0.7 | | |
| | Evaluation measure | Entropy | | |
| | Beam width | 5 | | |
| | Minimum rule coverage | 1 | | |
| | Maximum rule length | 5 | | |
| Decision Tree | Minimum number of instances in leaves | 2 | | |
| | Tree type | Induce binary tree | | |
| | Split subset | Less than 5 | | |
| | Tree depth | 10 | | |
| | Majority reaches | 95% | | |
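
To make the kNN settings in the table concrete (5 neighbors, uniform weighting, Euclidean metric), the following is a minimal standard-library sketch of such a classifier producing the binary alert decision. It is not the authors' implementation. The three input features (heart rate, distance, moisture) are an assumption inferred from the thresholds described in the software architecture, and the training samples are hypothetical toy values, not data from the study.

```python
import math
from collections import Counter
from typing import List, Sequence

# Assumed feature order: (heart_rate_bpm, distance_cm, moisture).
Sample = Sequence[float]


def knn_predict(train_x: List[Sample], train_y: List[int],
                query: Sample, k: int = 5) -> int:
    """Classify `query` by an unweighted majority vote of its k nearest
    training samples under the Euclidean metric."""
    if k > len(train_x):
        raise ValueError("k cannot exceed the number of training samples")
    # Sort the (features, label) pairs by Euclidean distance to the query.
    neighbors = sorted(zip(train_x, train_y),
                       key=lambda pair: math.dist(pair[0], query))[:k]
    # Uniform weighting: every neighbor contributes one vote.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical toy samples: label 1 = alert, label 0 = no alert.
train_x = [
    (70, 150, 200), (75, 160, 210), (80, 140, 190),
    (72, 155, 205), (78, 145, 195), (74, 150, 200),
    (130, 30, 600), (135, 25, 620), (140, 20, 610),
    (128, 35, 590), (132, 28, 605), (138, 22, 615),
]
train_y = [0] * 6 + [1] * 6

print(knn_predict(train_x, train_y, (76, 148, 198)))  # calm reading
print(knn_predict(train_x, train_y, (133, 27, 607)))  # alarming reading
```

Because the Euclidean metric mixes features with different scales, a practical version would usually normalize each feature first; that step is omitted here for brevity.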

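| Metrics | Mathematical Equation |
|---|---|
| Acc | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Prec | $\frac{TP}{TP + FP}$ |
| Rec | $\frac{TP}{TP + FN}$ |
| Spec | $\frac{TN}{TN + FP}$ |
| F1 | $\frac{2 \times Prec \times Rec}{Prec + Rec}$ |
| MCC | $\frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ |
| AUC | |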
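
As a worked illustration of how these metrics follow from the confusion matrix parameters (TN, FP, FN, TP), the sketch below applies the standard binary-classification definitions to the Gradient Boosting row of the 3000-sample confusion matrix (TN = 1063, FP = 37, FN = 10, TP = 1890). The function name `binary_metrics` is hypothetical, and the values are computed for the positive class only. Tabulated figures that were averaged over both classes may therefore differ for precision, recall, specificity, and F1; whether such averaging was used is an assumption, not something the text states.

```python
import math
from typing import Dict


def binary_metrics(tn: int, fp: int, fn: int, tp: int) -> Dict[str, float]:
    """Standard metrics for the positive class of a binary classifier."""
    total = tn + fp + fn + tp
    acc = (tp + tn) / total
    prec = tp / (tp + fp) if (tp + fp) else 0.0
    rec = tp / (tp + fn) if (tp + fn) else 0.0
    spec = tn / (tn + fp) if (tn + fp) else 0.0
    f1 = 2 * prec * rec / (prec + rec) if (prec + rec) else 0.0
    # Matthews correlation coefficient; defined as 0 when any margin is empty.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"acc": acc, "prec": prec, "rec": rec,
            "spec": spec, "f1": f1, "mcc": mcc}


# Gradient Boosting counts from the 3000-sample confusion matrix.
m = binary_metrics(tn=1063, fp=37, fn=10, tp=1890)
for name, value in m.items():
    print(f"{name}: {100 * value:.1f}%")
```

For this row, the accuracy works out to about 98.4% and the MCC to about 96.6%, in line with the values reported for Gradient Boosting in the table that lists training time.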

| ML Algorithm | TN | FP | FN | TP | Total Samples |
|---|---|---|---|---|---|
| kNN | 1050 | 50 | 92 | 1808 | 3000 |
| Gradient Boosting | 1063 | 37 | 10 | 1890 | 3000 |
| Random Forest | 1063 | 37 | 42 | 1858 | 3000 |
| Neural Network | 1062 | 38 | 339 | 1561 | 3000 |
| SVM | 1045 | 55 | 215 | 1685 | 3000 |
| AdaBoost | 1063 | 37 | 10 | 1890 | 3000 |
| Naïve Bayes | 1098 | 2 | 146 | 1754 | 3000 |
| Logistic Regression | 1022 | 78 | 114 | 1786 | 3000 |
| CN2 Rule Induction | 1081 | 19 | 0 | 1900 | 3000 |
| Decision Tree | 1055 | 45 | 75 | 1825 | 3000 |

| ML Algorithm | TN | FP | FN | TP | Total Samples |
|---|---|---|---|---|---|
| kNN | 350 | 20 | 0 | 630 | 1000 |
| Gradient Boosting | 360 | 10 | 0 | 630 | 1000 |
| Random Forest | 360 | 10 | 0 | 630 | 1000 |
| Neural Network | 360 | 10 | 110 | 520 | 1000 |
| SVM | 360 | 10 | 60 | 570 | 1000 |
| AdaBoost | 360 | 10 | 0 | 630 | 1000 |
| Naïve Bayes | 370 | 0 | 40 | 590 | 1000 |
| Logistic Regression | 340 | 30 | 30 | 600 | 1000 |
| CN2 Rule Induction | 370 | 0 | 0 | 630 | 1000 |
| Decision Tree | 360 | 10 | 0 | 630 | 1000 |

| ML Algorithm | Train Time (s) | AUC (%) | Acc (%) | F1 (%) | Prec (%) | Rec (%) | MCC (%) | Spec (%) |
|---|---|---|---|---|---|---|---|---|
| AdaBoost | 0.265 | 98.1 | 98.4 | 98.4 | 98.4 | 98.4 | 96.6 | 97.7 |
| Gradient Boosting | 1.588 | 98.1 | 98.4 | 98.4 | 98.4 | 98.4 | 96.6 | 97.7 |
| Random Forest | 0.808 | 99.6 | 97.6 | 97.6 | 97.6 | 97.6 | 94.8 | 97.3 |
| Decision Tree | 0.117 | 96.7 | 96.0 | 96.0 | 96.0 | 96.0 | 91.5 | 96.0 |
| CN2 Rule Induction | 19.240 | 99.6 | 95.2 | 95.2 | 95.6 | 95.2 | 89.9 | 91.8 |
| kNN | 0.160 | 99.3 | 95.3 | 95.3 | 95.3 | 95.3 | 89.9 | 95.3 |
| Neural Network | 1.938 | 98.1 | 86.5 | 85.7 | 88.6 | 86.5 | 72.0 | 76.9 |
| SVM | 0.282 | 98.9 | 91.0 | 91.1 | 91.7 | 91.1 | 81.7 | 92.7 |
| Naïve Bayes | 0.211 | 95.2 | 95.1 | 95.1 | 95.6 | 95.1 | 90.1 | 97.1 |
| Logistic Regression | 0.449 | 99.1 | 93.6 | 93.6 | 93.7 | 93.6 | 86.3 | 93.3 |

| ML Algorithm | Cross-Validation Time (s) | AUC (%) | Acc (%) | F1 (%) | Prec (%) | Rec (%) | MCC (%) | Spec (%) |
|---|---|---|---|---|---|---|---|---|
| AdaBoost | 0.01 | 100 | 99.9 | 99.9 | 99.9 | 99.9 | 99.9 | 100 |
| Gradient Boosting | 0.022 | 100 | 99.9 | 99.9 | 99.9 | 99.9 | 99.9 | 100 |
| Random Forest | 0.016 | 100 | 99.9 | 99.9 | 99.9 | 99.9 | 99.9 | 100 |
| Decision Tree | 0.001 | 99.9 | 99.9 | 99.9 | 99.9 | 99.9 | 99.8 | 100 |
| CN2 Rule Induction | 0.01 | 99.9 | 99.9 | 99.9 | 99.9 | 99.9 | 99.8 | 100 |
| kNN | 0.057 | 99.7 | 95.5 | 95.5 | 95.6 | 95.5 | 94.7 | 99.1 |
| Neural Network | 0.01 | 99.3 | 93.3 | 93.2 | 93.6 | 93.3 | 92.1 | 98.7 |
| SVM | 0.091 | 100 | 97.2 | 97.2 | 97.3 | 97.2 | 96.7 | 99.4 |
| Naïve Bayes | 0.007 | 94.8 | 75.9 | 73.6 | 76.4 | 75.9 | 72.1 | 95.2 |
| Logistic Regression | 0.009 | 96.4 | 80.1 | 77.7 | 79.8 | 80.1 | 77.4 | 96.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gharghan, S.K.; Kamel, H.S.; Marir, A.A.; Saleh, L.A. Smart Stick Navigation System for Visually Impaired Based on Machine Learning Algorithms Using Sensors Data. J. Sens. Actuator Netw. 2024, 13, 43. https://doi.org/10.3390/jsan13040043
