Data Readout Techniques on FPGA for the ATLAS RPC-BIS78 Detectors

Abstract

1. Introduction

- Thinner gas gap at 1 mm (the original RPCs have 2 mm);

- Lower operating high voltage, at 5400 V compared to 9600 V;

- New-generation SiGe front-end electronics with higher gain and low noise.

2. Materials and Methods

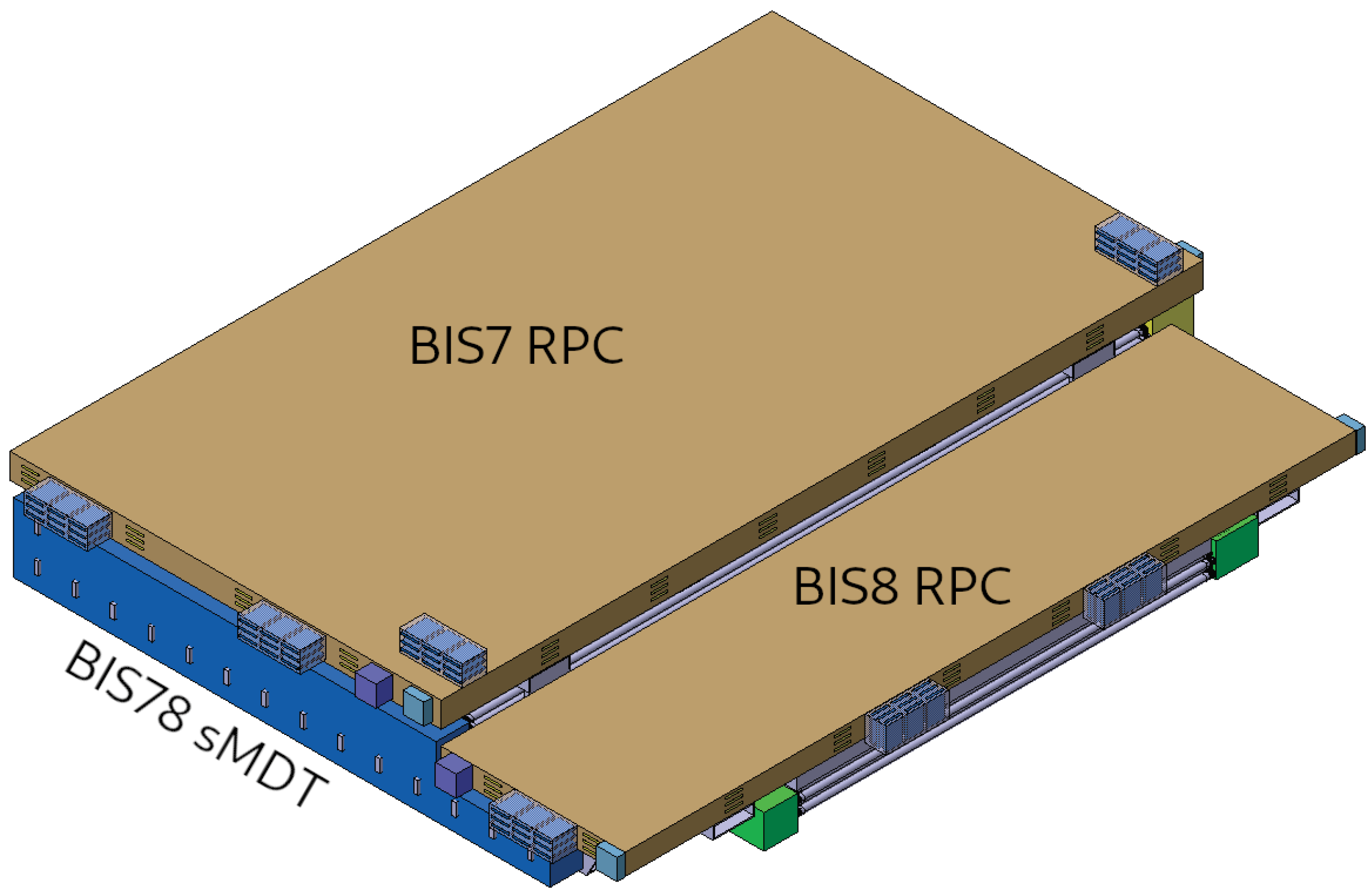

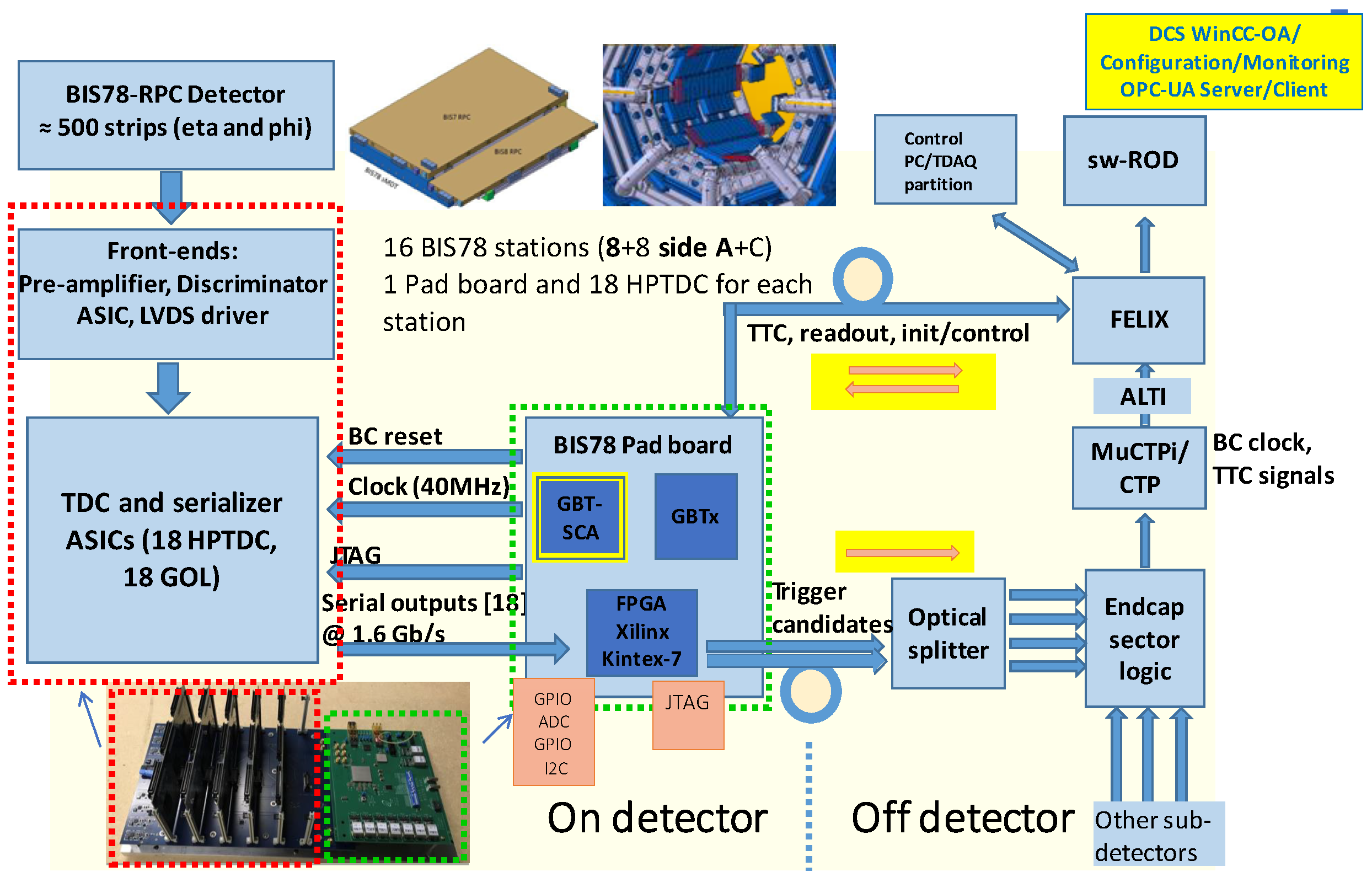

2.1. The BIS78 Data Acquisition and Control System

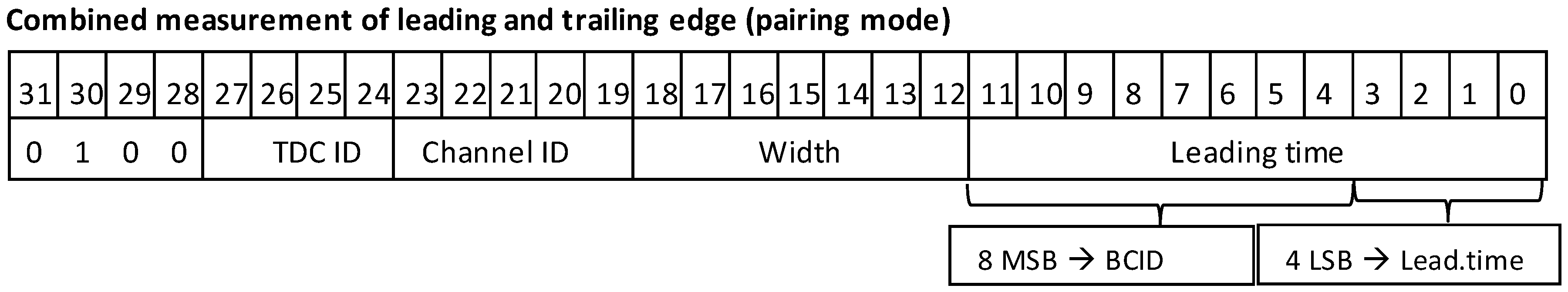

2.1.1. On-Detector Electronics

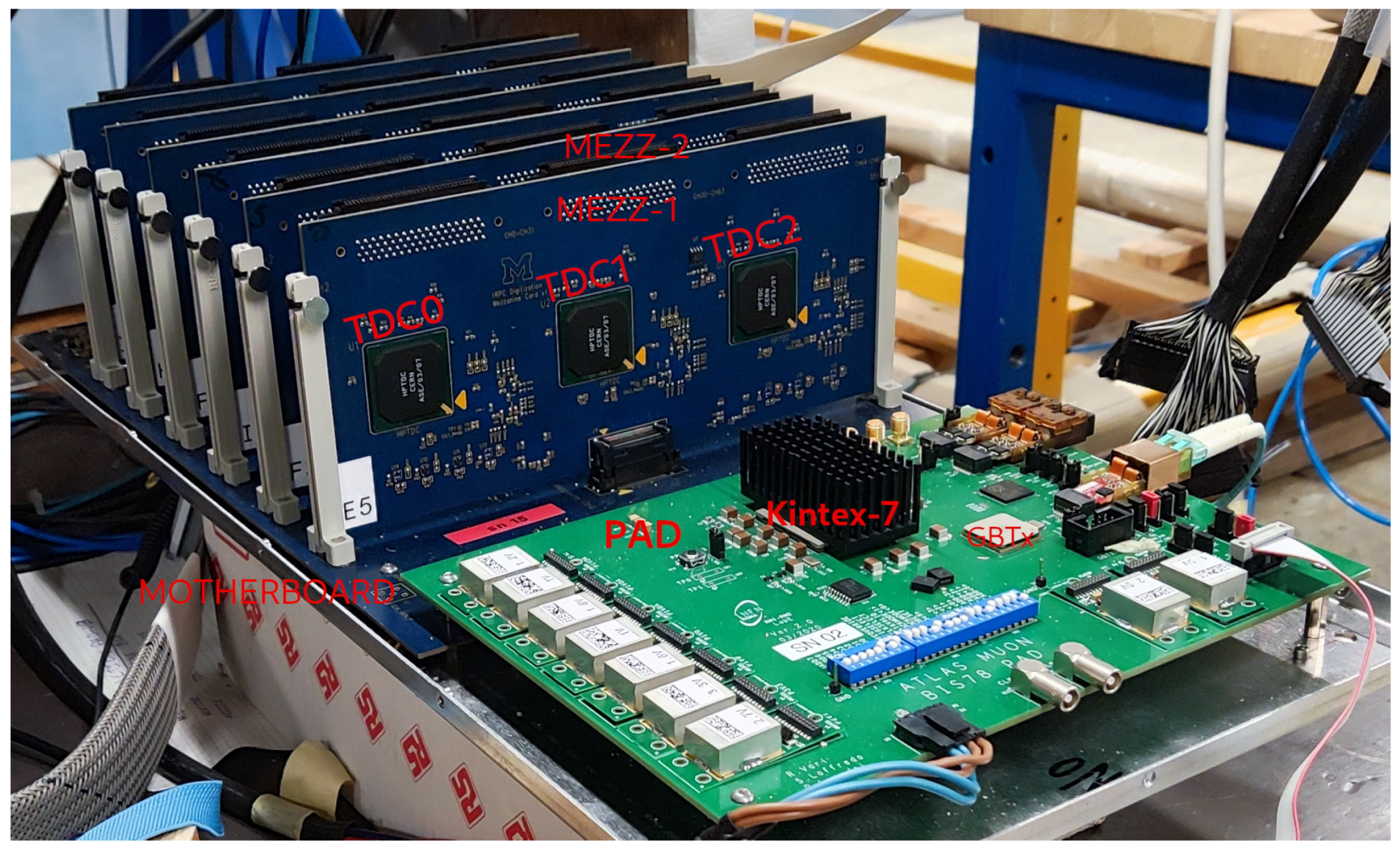

- New on-detector front-end electronics: 8-channel Front-End PCBs composed of a new Amplifier (Si), a full-custom ASIC Discriminator (SiGe) and an LVDS transmitter. A set of four of these boards provides 32 channels and connects via ribbon cables to one HPTDC (High Performance Time-to-Digital Converter) [5] installed on the Mezzanine card.

- HPTDC Mezzanine boards: each one hosts 3 CERN-HPTDC digitizers and 3 CERN-GOL (Gigabit Optical Link) [6] transmitter-serializer chips.

- Motherboard (MB): hosts 6 Mezzanine boards, held in a vertical position, and connects through an HPC-FMC connector to the PAD board (see next item); thus, there are 18 HPTDC and 18 GOL ASICs in the MB + Mezzanine system.

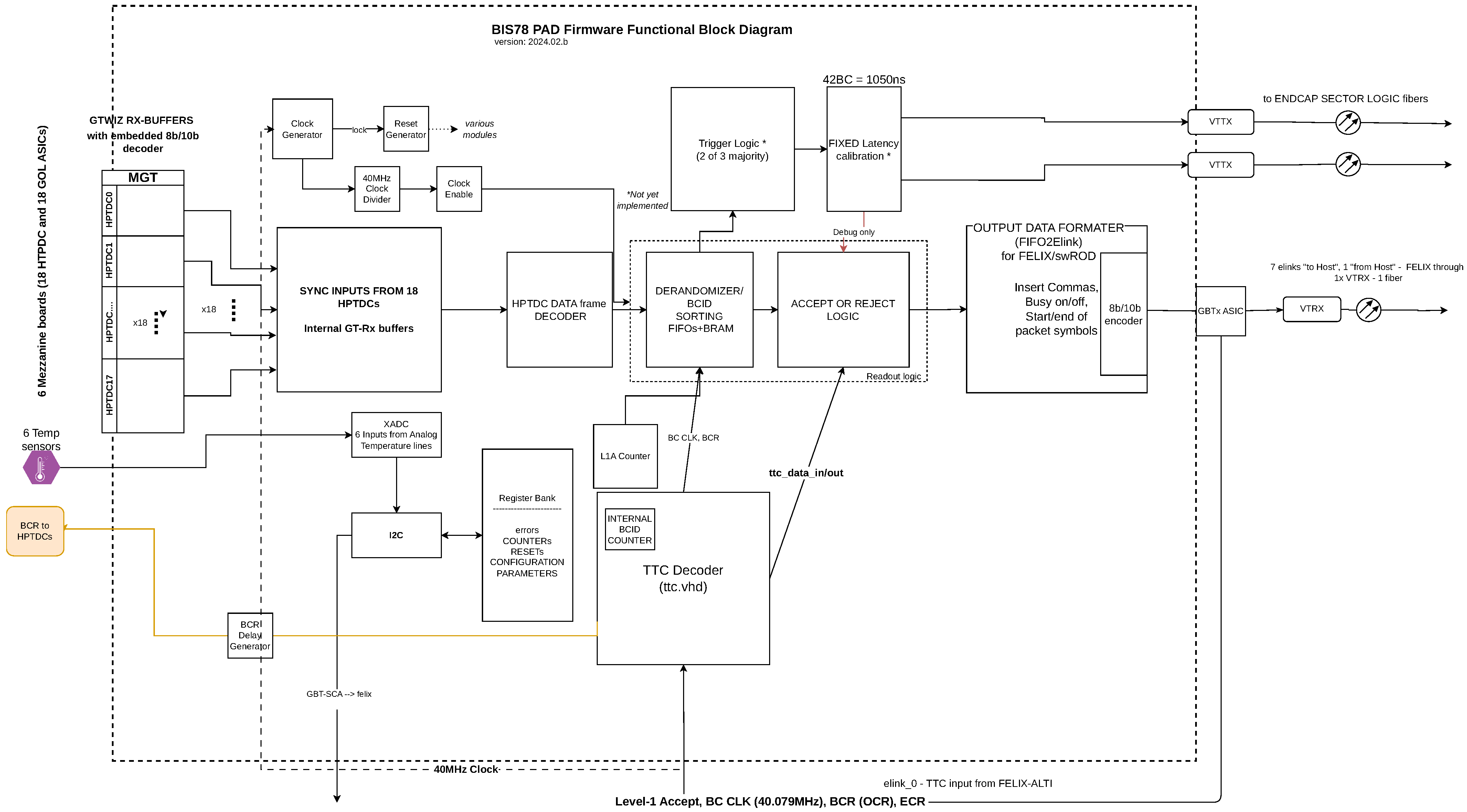

- Trigger PAD board: the Readout and Trigger data acquisition and processing unit, based on the Xilinx Kintex-7 FPGA, model XC7K355T [7]. It features a standard set of rad-hard communication chips developed by the CERN GBT project [8] for Phase I: a GBTx ASIC and a GBT-SCA slow-control ASIC. The FPGA communicates with the GBT-SCA chip over the protocol, which in turn connects via an e-link to the GBTx and then to the FELIX system [9], where the slow-control software runs, as described in Section 2.1.3. Optical communication with the FELIX system is handled by a VTRX transceiver, while two VTTX transmitters send trigger candidates to the End-cap Sector Logic board. Both VTRX and VTTX were developed by the CERN-coordinated Versatile Link Project [10,11].
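The chip and channel counts quoted in the list above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch, not part of the BIS78 software: the constant and function names are hypothetical, and the total channel count is only an upper bound that assumes every HPTDC input is cabled, which the text does not state.

```python
# Figures taken from the on-detector electronics list.
CHANNELS_PER_FE_BOARD = 8   # 8-channel Front-End PCB
CHANNELS_PER_HPTDC = 32     # channels read out by one HPTDC
HPTDC_PER_MEZZANINE = 3     # HPTDC digitizers on one Mezzanine board
GOL_PER_MEZZANINE = 3       # GOL serializers on one Mezzanine board
MEZZANINES_PER_MB = 6       # Mezzanine boards hosted by one Motherboard


def on_detector_counts():
    """Derive per-Motherboard totals from the per-board figures (hypothetical helper)."""
    hptdc_per_mb = HPTDC_PER_MEZZANINE * MEZZANINES_PER_MB
    return {
        # Front-End boards needed to fill all inputs of one HPTDC.
        "fe_boards_per_hptdc": CHANNELS_PER_HPTDC // CHANNELS_PER_FE_BOARD,
        "hptdc_per_mb": hptdc_per_mb,
        "gol_per_mb": GOL_PER_MEZZANINE * MEZZANINES_PER_MB,
        # Assumption: upper bound, with every HPTDC input connected.
        "max_channels_per_mb": hptdc_per_mb * CHANNELS_PER_HPTDC,
    }


counts = on_detector_counts()
for name, value in counts.items():
    print(f"{name}: {value}")
```

The derived 18 HPTDC and 18 GOL ASICs agree with the totals given for the MB + Mezzanine system.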

2.1.2. Off-Detector Electronics, Control System, and Software

2.1.3. TDAQ Software

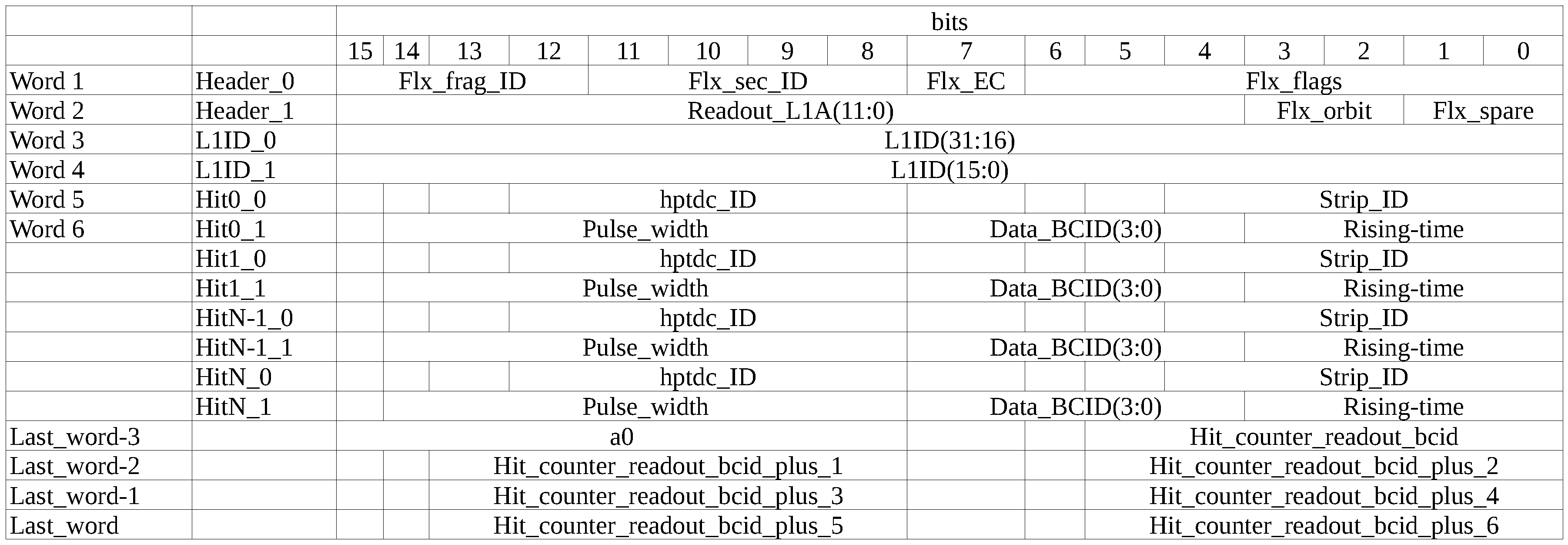

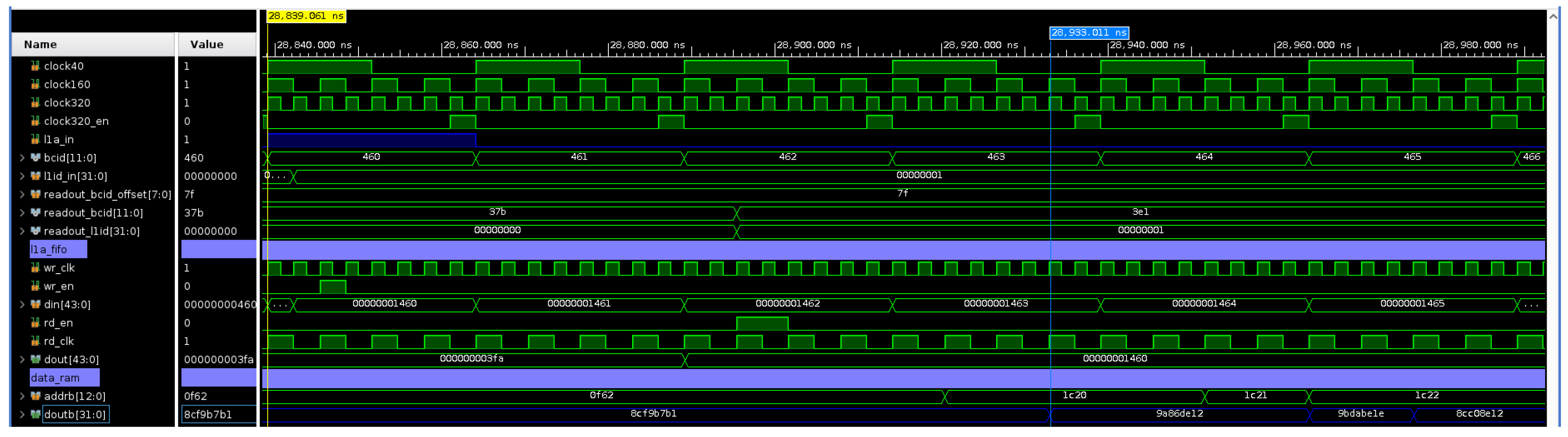

2.2. Data Readout Firmware

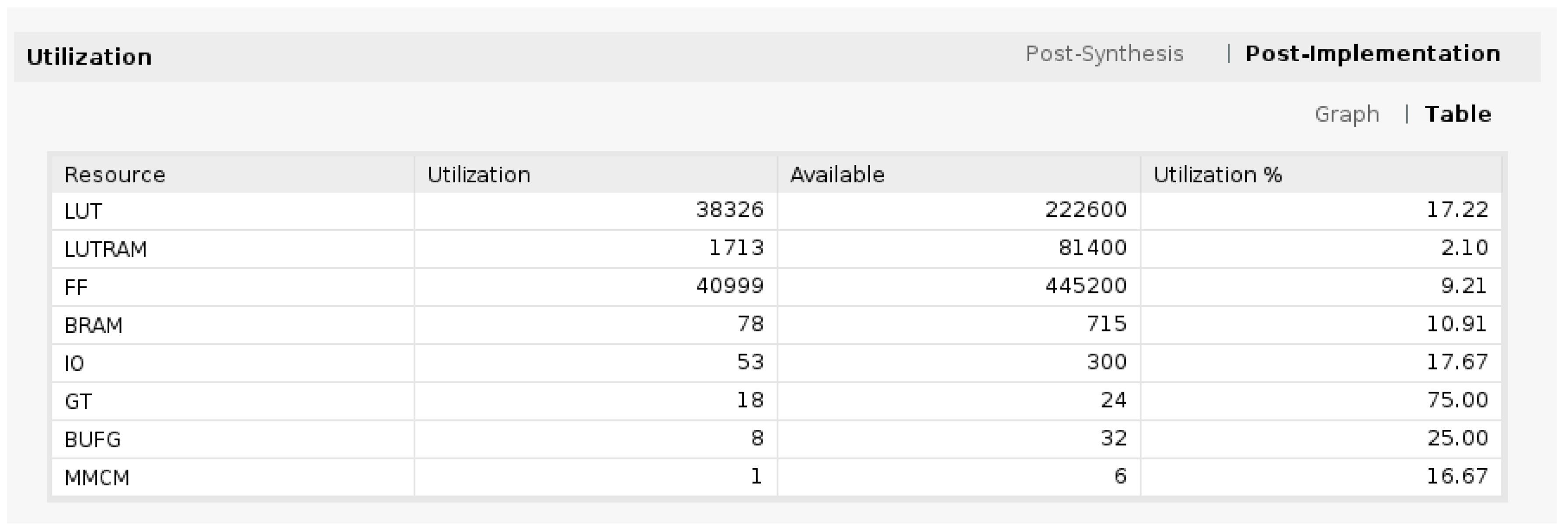

Resource Utilization

3. Results and Discussion

3.1. Firmware and Software Testing in the ATLAS TDAQ Frame

3.1.1. Testing at the Muon Lab

3.1.2. Testing at Point 1

3.2. Conclusions

3.3. Future Plans

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASIC | Application Specific Integrated Circuit |
| ALTI | ATLAS Local Trigger Interface |
| ATLAS | A Toroidal LHC ApparatuS |
| BCR | Bunch Counter Reset |
| BIS | Barrel Inner Small |
| BRAM | Block Random Access Memory |
| CTP | Central Trigger Processor |
| DCS | Detector Control System |
| ECR | Event Counter Reset |
| EVID | Extended Level-1 ID |
| FELIX | Front-End LInk eXchange |
| FPGA | Field Programmable Gate Array |
| GBT | GigaBit Transceiver |
| GBT-SCA | GBT Slow Control Adapter |
| GOL | Gigabit Optical Link |
| HL-LHC | High Luminosity–Large Hadron Collider |
| HPTDC | High Performance Time-to-Digital Converter |
| ILA | Integrated Logic Analyzer |
| L1A | Level-1 Accept |
| MDT | Monitored Drift Tube |
| OCR | Orbit Counter Reset |
| OPC UA | Open Platform Communications Unified Architecture |
| RPC | Resistive Plate Chamber |
| SW ROD | Software Read-Out Driver |
| TDAQ | Trigger and Data AcQuisition |
| TTC | Timing, Trigger, Control |
| VIO | Virtual Input-Output |
| VME | Versa Module Eurocard |

References

1. ATLAS Collaboration. Technical Design Report for the Phase-II Upgrade of the ATLAS Muon Spectrometer; Technical Report; CERN. Available online: https://cds.cern.ch/record/2285580 (accessed on 30 May 2024).

2. ATLAS Collaboration. Technical Design Report for the Phase-II Upgrade of the ATLAS TDAQ System; Technical Report; CERN: Geneva, Switzerland, 2017.

3. Fleischmann, P. BIS78 Installation: Upgrading the ATLAS Muon Spectrometer. General Photo. Available online: https://cds.cern.ch/record/2736104 (accessed on 30 May 2024).

4. ATLAS Collaboration. The ATLAS Experiment at the CERN Large Hadron Collider. J. Instrum. 2008, 3, S08003.

5. Christiansen, J. HPTDC High Performance Time to Digital Converter; Technical Report; CERN: Geneva, Switzerland, 2004; Version 2.2 for HPTDC Version 1.3; Available online: https://cds.cern.ch/record/1067476 (accessed on 30 May 2024).

6. GOL Project. Available online: https://proj-gol.web.cern.ch (accessed on 30 May 2024).

7. AMD-Xilinx. Kintex-7 Series FPGA, Official Webpage. Available online: https://www.xilinx.com/products/silicon-devices/fpga/kintex-7.html (accessed on 30 May 2024).

8. Moreira, P.; Ballabriga, R.; Baron, S.; Bonacini, S.; Cobanoglu, O.; Faccio, F.; Fedorov, T.; Francisco, R.; Gui, P.; Hartin, P.; et al. The GBT Project. In Proceedings of the Topical Workshop on Electronics for Particle Physics, Paris, France, 21–25 September 2009.

9. The ATLAS FELIX Project. 2022. Available online: https://zenodo.org/records/6347081 (accessed on 30 May 2024).

10. Versatile Link Project Public Area. Available online: https://espace.cern.ch/project-versatile-link/public/default.aspx (accessed on 30 May 2024).

11. CERN EP-ESE Department. GBT and Versatile Link Official Webpage. Available online: https://ep-ese.web.cern.ch/project/gbt-and-versatile-link (accessed on 30 May 2024).

12. ALTI Module Documentation, Official ATLAS Twiki Webpage. Available online: https://twiki.cern.ch/twiki/bin/view/Atlas/LevelOneCentralTriggerALTI (accessed on 30 May 2024).

13. Polini, A. Monitoring and control system of the ATLAS RPCs in view of run-2. J. Instrum. 2014, 9, C11011.

14. SY5527 Universal Multichannel Power Supply System. Available online: https://www.caen.it/products/sy5527/ (accessed on 30 May 2024).

15. ATLAS Collaboration. TDAQ Framework. 2021. Available online: https://zenodo.org/records/4889104 (accessed on 30 May 2024).

16. Nikiel, P.P.; Farnham, B.; Filimonov, V.; Schlenker, S. Generic OPC UA Server Framework. J. Phys. Conf. Ser. 2015, 664, 082039.

17. Poy, A.B.; Boterenbrood, H.; Burckhart, H.J.; Cook, J.; Filimonov, V.; Franz, S.; Gutzwiller, O.; Hallgren, B.; Khomutnikov, V.; Schlenker, S.; et al. The detector control system of the ATLAS experiment. J. Instrum. 2008, 3, P05006.

18. ATLAS Collaboration. Athena. 2019. Available online: https://doi.org/10.5281/zenodo.2641997 (accessed on 30 May 2024).

19. FELIX User Manual, Official ATLAS Gitlab. Available online: https://gitlab.cern.ch/atlas-tdaq-felix-dev/felix-user-manual/-/tree/4.2.x?ref_type=heads (accessed on 30 May 2024).

20. Brun, R.; Rademakers, F.; Canal, P.; Naumann, A.; Couet, O.; Moneta, L.; Vassilev, V.; Linev, S.; Piparo, D.; Ganis, G.; et al. root-project/root: v6.18/02. 2020. Available online: https://zenodo.org/records/3895860 (accessed on 30 May 2024).

21. CERN EOS Storage System. Available online: https://eos-web.web.cern.ch/eos-web (accessed on 30 May 2024).

| bit-7 | bit-6 | bit-5 | bit-4 | bit-3 | bit-2 | bit-1 | bit-0 |
|---|---|---|---|---|---|---|---|
| L1A | Soft-reset | Test-pulse | ECR | BCR | L0A | SCA-reset | EC0R |
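The bit assignment in the table above can be illustrated with a small encoder and decoder. This is a minimal sketch that assumes the table describes a single 8-bit word carrying one independent flag per bit. The function names `decode_ttc_word` and `encode_ttc_word` are hypothetical and do not come from the BIS78 firmware or software.

```python
# Signal names indexed by bit position (bit-0 first), copied from the table.
TTC_BIT_NAMES = (
    "EC0R",        # bit-0
    "SCA-reset",   # bit-1
    "L0A",         # bit-2
    "BCR",         # bit-3
    "ECR",         # bit-4
    "Test-pulse",  # bit-5
    "Soft-reset",  # bit-6
    "L1A",         # bit-7
)


def decode_ttc_word(word):
    """Return the names of the flags set in an 8-bit word, lowest bit first."""
    if not 0 <= word <= 0xFF:
        raise ValueError("word must fit in 8 bits")
    return [name for bit, name in enumerate(TTC_BIT_NAMES) if (word >> bit) & 1]


def encode_ttc_word(names):
    """Build the 8-bit word with the given flags set (hypothetical helper)."""
    word = 0
    for name in names:
        # tuple.index raises ValueError for a name that is not in the table.
        word |= 1 << TTC_BIT_NAMES.index(name)
    return word


# Example: an L1A arriving together with a BCR sets bit-7 and bit-3.
example = encode_ttc_word(["L1A", "BCR"])
print(f"0x{example:02X}", decode_ttc_word(example))
```

Keeping the names in a tuple ordered by bit position makes the mapping easy to compare against the table row by row.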


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vgenopoulos, A.; Kordas, K.; Lasagni, F.; Perrella, S.; Polini, A.; Vari, R. Data Readout Techniques on FPGA for the ATLAS RPC-BIS78 Detectors. Technologies 2024, 12, 83. https://doi.org/10.3390/technologies12060083
