Characterization of a Large, Low-Cost 3D Scanner

Abstract

1. Introduction

2. Background

2.1. Three-Dimensional Scanning and Its Application Areas

2.2. Three-Dimensional Scanner Hardware

2.3. Assessment of 3D Scanning

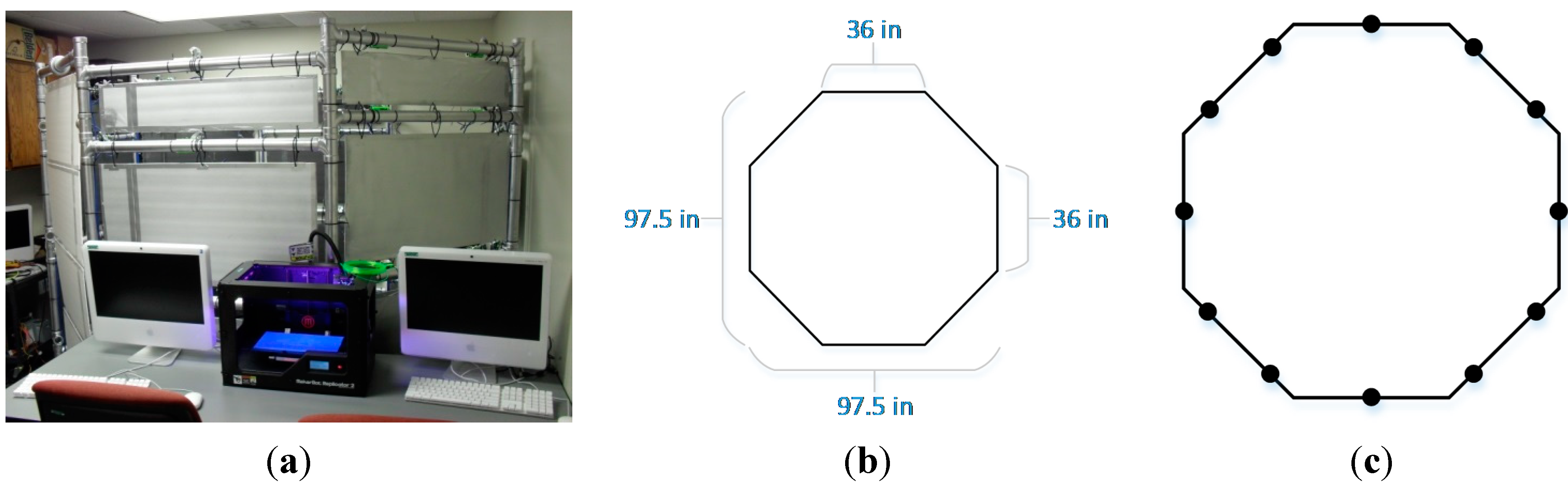

3. Overview of the 3D Scanner

4. Experimental Design, Methods and Data Collected

4.1. Characterization of Object Scanning

| Measurement | CAD Model 1 | CAD Model 2 | CAD Model 3 | Scaled Model 1 (in) | Scaled Model 2 (in) | Scaled Model 3 (in) | Actual Measurement (in) | Absolute Error Model 1 (in) | Absolute Error Model 2 (in) | Absolute Error Model 3 (in) |
|---|---|---|---|---|---|---|---|---|---|---|
| Short Side 1 | 0.88 | 1.05 | 0.93 | 11.69 | 11.91 | 11.39 | 11.75 | 0.06 | 0.16 | 0.36 |
| Short Side 2 | 0.87 | 1.00 | 0.95 | 11.57 | 11.33 | 11.61 | 11.75 | 0.18 | 0.42 | 0.14 |
| Long Side 1 | 1.33 | 1.54 | 1.44 | 17.68 | 17.48 | 17.57 | 17.75 | 0.07 | 0.27 | 0.18 |
| Long Side 2 | 1.34 | 1.59 | 1.46 | 17.83 | 18.07 | 17.83 | 17.75 | 0.08 | 0.32 | 0.08 |
| Diagonal 1 | 1.59 | 1.90 | 1.74 | 21.05 | 21.54 | 21.18 | 21.00 | 0.05 | 0.54 | 0.18 |
| Diagonal 2 | 1.61 | 1.85 | 1.72 | 21.38 | 20.92 | 21.04 | 21.00 | 0.38 | 0.08 | 0.04 |
| Average Error | | | | | | | | 0.14 | 0.30 | 0.16 |
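The Absolute Error and Average Error entries in the object-scanning table follow from simple arithmetic: each error is the absolute difference between a model's scaled value and the actual measurement, and each average is the mean of that model's six errors. The short Python sketch below reproduces this arithmetic from the tabulated values. The names `ACTUAL`, `SCALED`, `absolute_errors` and `average_error_per_model` are illustrative assumptions, not part of the authors' tooling.

```python
# Values transcribed from the object-scanning table (inches).
# ACTUAL: physically measured reference value for each dimension.
# SCALED: scan-derived value for Models 1, 2 and 3 after scaling.
ACTUAL = {
    "Short Side 1": 11.75, "Short Side 2": 11.75,
    "Long Side 1": 17.75, "Long Side 2": 17.75,
    "Diagonal 1": 21.00, "Diagonal 2": 21.00,
}
SCALED = {
    "Short Side 1": (11.69, 11.91, 11.39),
    "Short Side 2": (11.57, 11.33, 11.61),
    "Long Side 1": (17.68, 17.48, 17.57),
    "Long Side 2": (17.83, 18.07, 17.83),
    "Diagonal 1": (21.05, 21.54, 21.18),
    "Diagonal 2": (21.38, 20.92, 21.04),
}


def absolute_errors(scaled, actual):
    """Return {dimension: (err_model1, err_model2, err_model3)} in inches."""
    return {
        dim: tuple(abs(s - actual[dim]) for s in values)
        for dim, values in scaled.items()
    }


def average_error_per_model(errors):
    """Mean absolute error of each model, averaged over all dimensions."""
    per_model = list(zip(*errors.values()))  # transpose rows into model columns
    return [sum(col) / len(col) for col in per_model]


errors = absolute_errors(SCALED, ACTUAL)
averages = average_error_per_model(errors)
for i, avg in enumerate(averages, start=1):
    print(f"Model {i}: mean absolute error = {avg:.2f} in")
```

Run as-is, it prints mean absolute errors of 0.14, 0.30 and 0.16 in for Models 1 to 3, matching the Average Error row.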

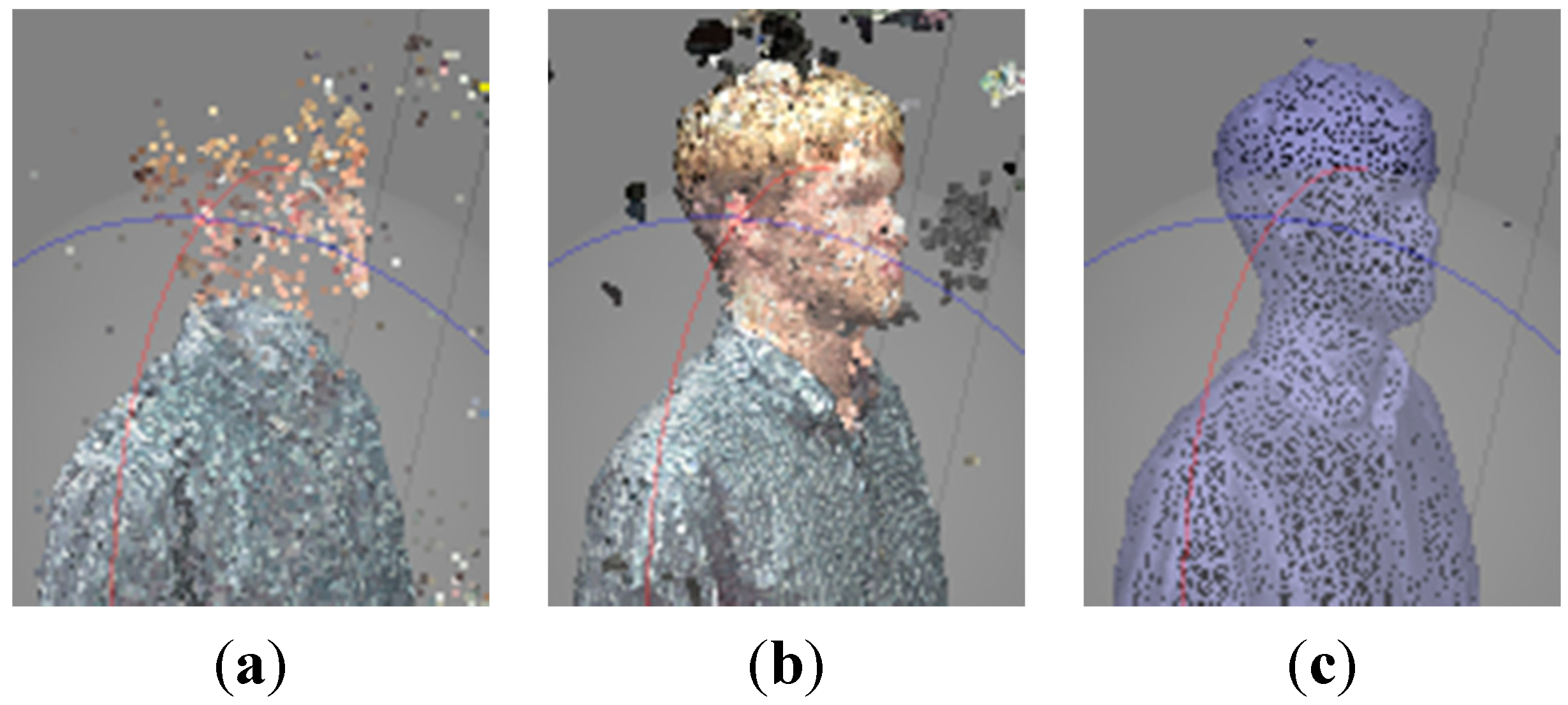

4.2. Characterization of Human Scanning

| Segment | Measurement (in) | CAD | Scaled (in) | Discrepancy (in) |
|---|---|---|---|---|
| Segment 1 | 3.00 | 0.211 | 2.563 | 0.44 |
| Segment 2 | 2.63 | 0.163 | 1.977 | 0.65 |
| Segment 3 | 6.88 | 0.557 | 6.750 | 0.12 |
| Segment 4 | 6.00 | 0.509 | 6.167 | 0.17 |
| Segment 5 | 10.00 | 0.864 | 10.475 | 0.48 |
| Segment 6 | 9.60 | 0.810 | 9.822 | 0.22 |
| Segment 7 | 8.00 | 0.615 | 7.451 | 0.55 |
| Segment 8 | 6.13 | 0.534 | 6.473 | 0.35 |
| Segment 9 | 10.00 | 0.814 | 9.867 | 0.13 |
| Segment 10 | 10.00 | 0.867 | 10.512 | 0.51 |
| Average | 7.22 | 0.595 | 12.148 | 0.37 |
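In the human-scanning table, each Discrepancy value is the absolute difference between the physical measurement and the scaled scan value, and the Scaled column appears to be the CAD value multiplied by a single conversion factor. The Python sketch below recomputes both quantities from the tabulated numbers. The helper names `discrepancy` and `implied_scale` are hypothetical, and the constant-factor reading is an inference from the data rather than a procedure stated in this excerpt.

```python
# Rows transcribed from the human-scanning table:
# (physical measurement in inches, CAD-model length, scaled scan length in inches)
SEGMENTS = [
    (3.00, 0.211, 2.563),
    (2.63, 0.163, 1.977),
    (6.88, 0.557, 6.750),
    (6.00, 0.509, 6.167),
    (10.00, 0.864, 10.475),
    (9.60, 0.810, 9.822),
    (8.00, 0.615, 7.451),
    (6.13, 0.534, 6.473),
    (10.00, 0.814, 9.867),
    (10.00, 0.867, 10.512),
]


def discrepancy(measured_in, scaled_in):
    """Absolute difference between physical and scan-derived length (in)."""
    return abs(measured_in - scaled_in)


def implied_scale(cad_units, scaled_in):
    """Hypothetical helper: inches per CAD unit implied by one row (scaled / CAD)."""
    return scaled_in / cad_units


discrepancies = [discrepancy(m, s) for m, _, s in SEGMENTS]
scales = [implied_scale(c, s) for _, c, s in SEGMENTS]

for n, (d, k) in enumerate(zip(discrepancies, scales), start=1):
    print(f"Segment {n:2d}: discrepancy {d:.3f} in, implied scale {k:.3f} in/unit")
```

Because the Measurement column is listed to 0.01 in, recomputed discrepancies can differ from the table by about 0.01 in (Segment 8 gives 0.343 in against a listed 0.35). The implied factors all fall between roughly 12.11 and 12.15 inches per CAD unit.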

4.3. Characterization of the Impact of Lighting

| Test | Overhead 1 | Overhead 2 | Front | Middle | Back |
|---|---|---|---|---|---|
| Test 1 | X | X | |||
| Test 2 | X | X | X | ||
| Test 3 | X | X | X | X | |
| Test 4 | X | X | X | X | X |
| Test 5 | X | X | |||
| Test 6 | X | X | X | X |

| Test | Shoulder Width | Volume (× 10⁻⁷) | Normalization Factor | Normalized Volume (× 10⁻⁷) |
|---|---|---|---|---|
| Test 1 | 1.427 | 2.958 | 0.900 | 2.158 |
| Test 2 | 1.428 | 3.342 | 0.899 | 2.430 |
| Test 3 | 0.957 | 0.943 | 1.342 | 2.280 |
| Test 4 | 1.147 | 1.687 | 1.120 | 2.371 |
| Test 5 | 1.482 | 3.444 | 0.867 | 2.241 |
| Test 6 | 1.266 | 2.157 | 1.015 | 2.253 |
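This excerpt does not state how the normalization was computed, but the tabulated values are reproduced, to within rounding, by two assumptions: the normalization factor is the mean shoulder width of the six tests (about 1.2845) divided by each test's shoulder width, and the normalized volume is the volume multiplied by the cube of that factor, since volume scales with the third power of a linear dimension. The Python sketch below encodes those assumptions; treat it as a consistency check, not as the authors' documented method. The names `TESTS` and `normalize` are hypothetical.

```python
# Rows transcribed from the lighting normalization table:
# test number -> (shoulder width, volume in units of 1e-7 as listed in the source)
TESTS = {
    1: (1.427, 2.958),
    2: (1.428, 3.342),
    3: (0.957, 0.943),
    4: (1.147, 1.687),
    5: (1.482, 3.444),
    6: (1.266, 2.157),
}


def normalize(tests):
    """Rescale each scan to the mean shoulder width (assumed reference).

    Assumption inferred from the table: the linear factor is
    mean_width / width, and because volume grows with the cube of
    linear size, the volume is multiplied by factor ** 3.
    """
    mean_width = sum(w for w, _ in tests.values()) / len(tests)
    result = {}
    for test, (width, volume) in tests.items():
        factor = mean_width / width
        result[test] = (factor, volume * factor ** 3)
    return result


for test, (factor, norm_vol) in normalize(TESTS).items():
    print(f"Test {test}: factor {factor:.3f}, normalized volume {norm_vol:.3f} (x 1e-7)")
```

The recomputed factors match the Normalization Factor column to within 0.001, and the normalized volumes match to within about 0.003 × 10⁻⁷.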

5. Discussion of Data Collected

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Straub, J.; Kading, B.; Mohammad, A.; Kerlin, S. Characterization of a Large, Low-Cost 3D Scanner. Technologies 2015, 3, 19-36. https://doi.org/10.3390/technologies3010019
