1. Introduction

The development of Web 2.0 technologies has made the WWW a rich source of information to be exploited in business, at all levels of education, and in various communication situations, as in the cases of e-Health, e-Commerce, and e-Government. In parallel, the WWW has become a place where people constantly interact with each other by posting information (e.g., on blogs or discussion forums), by modifying and enhancing other people’s contributions (e.g., in Wikipedia), and by sharing information (e.g., on Facebook and other social networking services). Furthermore, the expansion of the Internet of Things (IoT) and of smart home and smart work environments creates new interaction dimensions, including multimodal control of devices that relies heavily on human speech production and understanding, along with other modalities.

As effective as these technologies might be for average hearing users, they seem to create considerable difficulties for signers who were born deaf or became deaf early, and for hard-of-hearing (HoH) individuals, since they all require a good command and extensive use of written language, if not full exploitation of the oral communication channel. Regarding populations with hearing deficits, it has often been reported in the literature that deaf individuals face significant difficulty when they need to access a written text (see, indicatively, [1,2,3]). At the same time, statistics show that deaf adults most commonly present reading skills corresponding to the early to mid-primary school level.

On the other hand, although the cloud receives vast amounts of video uploads daily, sign language (SL) videos present two major problems: first, they are not anonymous, and second, they cannot be easily edited and reused in the way written texts can. Nonetheless, accessibility of electronic content for deaf and HoH WWW users remains crucially dependent on the possibility of acquiring, in their native SL, the information contained in the vast amounts of text constantly being uploaded. Equally crucial is the ability to easily create new electronic content that enables dynamic message exchange, covering various communication needs.

To address this problem, a number of research efforts [4,5] have been reported towards making Web 2.0 accessible to SL users by allowing interactions in SL through the incorporation of real-time SL technologies. These technologies rely on the availability of monolingual SL resources and of bilingual/multilingual resources (pairing SL with vocal languages), with emphasis on dynamic production of SL, where the signed utterance is presented by a virtual signer (i.e., an avatar).

Within the Dicta-Sign sign-Wiki demonstrator environment (Figure 1), the end-user had the option (among other functionalities) of both creating and viewing signed content by exploiting pre-existing lexical resources [6]. The Dicta-Sign demonstrator served as a proof of concept that stored resources and previously created signed utterances can be slightly modified to convey a new message, presented to the user by means of a signing avatar [7]. Although signing avatar performance remains a challenge within the domain of SL technologies [8,9], synthetic signing systems undeniably give deaf users the freedom to create and view new signed content.

In the rest of the paper, we demonstrate how synchronous/asynchronous Web communication and content may become more accessible to deaf and HoH users by combining a set of Language Technology (LT) tools with signer-oriented interface design in a number of applications supporting education and communication via SL. Such tools may incorporate bilingual and monolingual dictionary look-up and fingerspelling facilities, as well as dynamic SL synthesis environments. The proposed architecture exploits bilingual vocal language/SL lexicon resources, monolingual SL resources, and standard language technology tools, such as a tagger and a lemmatizer, to handle the written form of the vocal language. We thus propose an SL tool workbench whose components integrate LT tools and technologies, provided via a signer-friendly graphical user interface (GUI) as presented next, and implemented in two different environments: (a) the official educational content platform of the Greek Ministry of Education for the primary and secondary education levels, and (b) the ILSP Sign Language Technologies Team website (slt.ilsp.gr, Figure 2). Both environments provide free-of-charge accessibility and educational services to the Greek Sign Language (GSL) signers’ community. In doing so, they address equally the needs of native GSL signers and of learners of GSL as a second language (L2). The educational platform’s GUI and content evaluation procedures are also presented, followed by a concluding discussion on the workbench application and the perspective of supporting the complete communication cycle via SL.

2. (S)LT Tools and Resources Supporting Web Accessibility

In recent years, awareness-raising efforts have placed certain issues at the top of national, European and international policy agendas. As reflected in frameworks such as the European Disability Strategy 2010–2020, there is an international movement towards providing disabled persons with what they need. Among other things, this means granting full accessibility to knowledge and information.

However, internet-based communication by means of SL is still subject to a number of critical technological limitations, given that signers’ videos remain the major source of SL linguistic message exchange. An extensive discussion of the limitations posed by the use of SL videos is reported in [10]. Reference has also been made to issues relating to the creation of the SL resources needed to drive SL synthesis engines. Issues relating to signing avatar technology and its acceptance by end-users have also been addressed, with emphasis on a number of interfaces designed to serve deaf users and on suggestions regarding tools that would enable better access to Web content by native signers.

The limitations in composing, editing and reusing SL utterances, as well as their consequences for Deaf education and communication, have been systematically discussed in the SL literature since the second half of the 20th century. Researchers including Stokoe [11] and, more recently, the HamNoSys team [12,13] and Neidle [14] have proposed different systems for sign transcription, in an attempt to provide a writing system for SLs in line with the systems available for vocal languages [15]. However, the three-dimensional properties of SLs have prevented such systems from being widely adopted by deaf individuals in everyday practice.

An intuitive way to overcome the existing Web 2.0 barriers, and those of the upcoming Future Internet, is to exploit (Sign) Language Technologies to support interfaces that enable signers to easily gain knowledge from electronic text and to communicate in SL in a more intuitive manner. With this target in mind, many studies have been devoted to improving the naturalness of signing avatar performance [9,16], primarily aiming at higher acceptance, by signers’ communities, of assistive technologies that deliver SL content.

To this end, research has focused on incorporating principal SL articulation features in avatar signing [17,18,19], exploiting input from theoretical SL linguistic analysis and, nowadays, SL corpus-based data (Figure 3).

The specifications and special characteristics of the set of tools and resources that enable content accessibility and user interaction via SL in the platforms presented here as proof-of-concept use cases are extensively reported in [20].

The tools mentioned in [20] for handling the lexical items encountered in written Greek text are part of the ILSP language tool suite [21], which is constantly maintained and improved. Language technology tools are necessary for implementing the showcased accessibility tools, since vocal languages such as Modern Greek (MG), used here to demonstrate the proposed implementation, exhibit a highly inflected morphological system in which the reference form of a lemma may change partially or completely depending on the syntactic context. In general, this poses a significant extra load on text comprehension by deaf users, who need to become bilingual in the written form of a (highly inflected) vocal language. Morphological complexity makes checking the different morphological forms against the reference form of a lemma in a lexicon database a difficult task, which demands numerous filters and raises the risk of retrieval errors unless handled systematically by means of LT tools.
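To illustrate why inflected forms must be reduced to a reference form before lexicon look-up, the following minimal Python sketch maps several inflected forms of «δικηγόρος» (“lawyer”) to their lemma and then to a sign video. The hand-written `FORM_TO_LEMMA` table is a hypothetical stand-in for the ILSP tagger and lemmatizer, whose interfaces are not described here; only the video file name is taken from the repository search example in Section 2.1.

```python
import unicodedata
from typing import Optional

# Hypothetical stand-in for a lemmatizer: inflected MG forms -> reference form.
# A real system derives this from morphological analysis, not from a fixed table.
FORM_TO_LEMMA = {
    "δικηγόρος": "δικηγόρος",   # nominative singular
    "δικηγόρου": "δικηγόρος",   # genitive singular
    "δικηγόρο": "δικηγόρος",    # accusative singular
    "δικηγόροι": "δικηγόρος",   # nominative plural
    "δικηγόρων": "δικηγόρος",   # genitive plural
    "δικηγόρους": "δικηγόρος",  # accusative plural
}

# Hypothetical bilingual link: MG lemma -> GSL sign video file.
LEMMA_TO_SIGN = {"δικηγόρος": "1061law.avi"}


def normalize(word: str) -> str:
    """NFC-normalize (decomposed and precomposed accents compare equal) and lowercase."""
    return unicodedata.normalize("NFC", word.strip()).lower()


def lookup_sign(word_form: str) -> Optional[str]:
    """Return the sign video for any known inflected form, or None if unknown."""
    lemma = FORM_TO_LEMMA.get(normalize(word_form))
    if lemma is None:
        return None
    return LEMMA_TO_SIGN.get(lemma)
```

With this indirection, `lookup_sign("δικηγόρων")` reaches the same entry as the headword, whereas comparing the raw text form against headwords would fail.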

In the user interface of the platform supporting access to educational material, the integrated services are activated through help buttons of appropriate shape and size. Furthermore, color-code conventions and pop-up windows for informative or interaction purposes have been employed to ensure that the services are provided in a friendly manner to deaf and HoH users, while video tooltips in GSL are available in the form of help menus at all stages of use within the deaf accessibility mode of the platform. The integrated deaf accessibility services are initialized by the user and are provided as Add-Ons while browsing through the “Photodentro” and “Digital Educational Content” platforms [22].

On the other hand, the approach adopted for the ILSP SL Technologies Team website places the three major SL accessibility support tools (the fingerspelling keyboard, a bilingual dictionary look-up facility, and the synthetic signing environment) on the site’s homepage (Figure 4). They are, therefore, web services that are directly accessible by clicking on the respective icons. The bilingual dictionary look-up and the synthetic signing environment are both fed by appropriate language resources, incorporating: (i) a bilingual electronic dictionary, the various connections of which are described in more detail in Section 3.1, and (ii) a monolingual GSL lexicon dataset, incorporating transcriptions of lemmas in HamNoSys notation, especially developed to feed dynamic synthesis of signed utterances in GSL.

The integrated tools run in a web browser with the help of Java applets, currently supporting Mozilla Firefox, Chrome, and Internet Explorer. The supported operating systems are Microsoft Windows (XP or later) as well as Mac OS X with the Safari browser. The environment that enables the creation and maintenance of the various forms of resources required to drive the deaf accessibility tools and technologies of Section 3 is presented in detail in Section 2.1.

2.1. The SiS-Builder SL Lexicographic Environment

SiS-Builder [23] is an online environment targeting the needs of SL resource development and maintenance. It provides a complete lexicographic environment, enabling the creation and interrelation of SL lexicon databases that accommodate monolingual, bilingual and multilingual complex content. This enables the implementation of various dictionaries, as well as the creation of SL lexical resources adequate for research work on sign synthesis and animation. The most prominent need that led to its design and implementation was the requirement to generate SiGML (Signing Gesture Markup Language) transcriptions of HamNoSys strings in order to feed the sign synthesis avatar of the University of East Anglia (UEA) (http://vh.cmp.uea.ac.uk) [7].

In the course of its implementation, SiS-Builder was enriched with a number of functionalities that provide a complete environment for creating, editing, maintaining, and testing SL lexical resources, appropriately annotated for sign synthesis and animation. The tool is based on open-source internet technologies, mostly PHP and JavaScript, to allow for easy access and platform compatibility, and is accessible at the following URL: http://sign.ilsp.gr/sisbuilder.

The tool provides a GUI through which users can automatically create SiGML scripts to be used by the UEA avatar animation engine, either by entering HamNoSys strings [12,13] of signs already stored in a properly coded lexical database, or by creating HamNoSys-annotated lemmas online, using the relevant SiS-Builder function (Figure 5). Once the HamNoSys-coded data are provided, the characters are automatically converted and the corresponding SiGML script is created. The resulting script can be stored on demand on the SiS-Builder server, ready for future use.
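The conversion step can be pictured with the following Python sketch, which wraps a sequence of HamNoSys symbols in a SiGML document. It assumes that each HamNoSys character has already been mapped to its symbolic name (that mapping depends on the HamNoSys font encoding and is omitted), and that the element names `hns_sign` and `hamnosys_manual`, along with the `ham`-prefixed symbol tags, follow the SiGML conventions used with the UEA JASigning avatar. The symbols in the example are illustrative and do not transcribe a real GSL sign.

```python
import xml.etree.ElementTree as ET
from typing import Sequence


def build_sigml(gloss: str, manual_symbols: Sequence[str]) -> str:
    """Wrap HamNoSys symbol names in a minimal SiGML document (assumed element names)."""
    root = ET.Element("sigml")
    sign = ET.SubElement(root, "hns_sign", gloss=gloss)
    manual = ET.SubElement(sign, "hamnosys_manual")
    for name in manual_symbols:
        # Each HamNoSys symbol becomes an empty element, e.g. <hamflathand />
        ET.SubElement(manual, name)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Illustrative sequence: handshape, finger direction, palm orientation, location
    print(build_sigml("EXAMPLE", ["hamflathand", "hamextfingeru", "hampalmd", "hamshoulders"]))
```

Building the document with an XML library rather than string concatenation guarantees well-formed output and correct escaping of the gloss attribute.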

Already stored SiGML scripts can also be edited, and the modified resource is immediately presented by the avatar. In order to store the new lexical item, however, it is obligatory to provide the relevant HamNoSys description, which in turn is converted and stored as a SiGML script.

Within the SiS-Builder environment, users may currently animate a single sign or a sign phrase consisting of up to four lexical items. After entering the HamNoSys-annotated string in the proper field, users may add the non-manual features of the sign they are working on through a set of combined actions in the “Non-manual characters” section, choosing the features they need from an (almost) exhaustive list. To achieve a performance as close to natural as possible, users may choose a variety of features, such as combined movements of the head (e.g., “tilt left and swing right”). Users may apply the same procedure to more than one sign until the final step, which is the creation of the relevant SiGML script. An indicative part of the implemented non-manual features is shown in Figure 6.

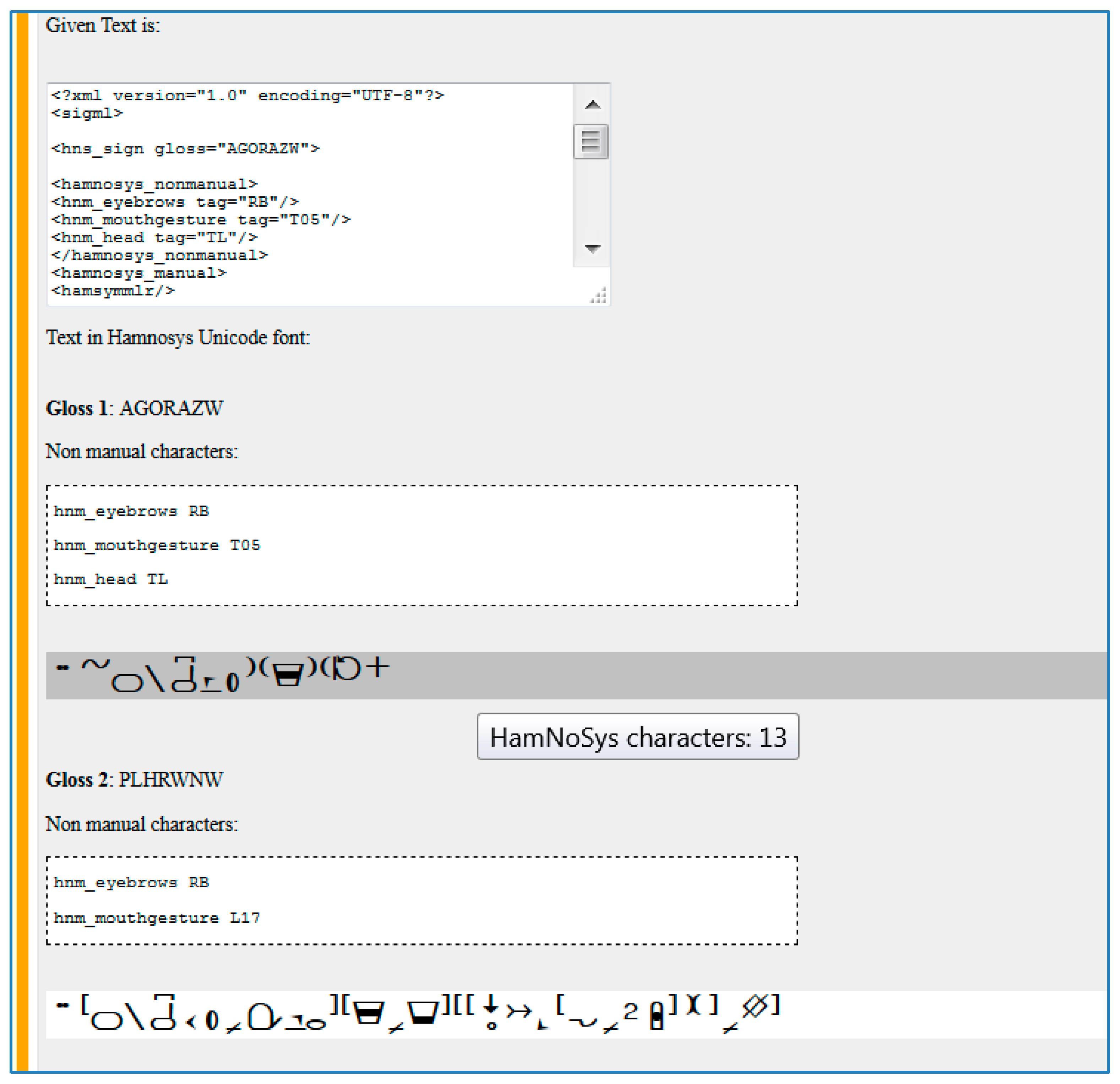
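The constraints described above (at most four lexical items per phrase, and several non-manual features, such as combined head movements, attached to each sign) can be captured in a small data model. The Python sketch below is hypothetical: `HEAD_MOVEMENTS` is a small illustrative subset, not the actual SiS-Builder feature list, and HamNoSys strings are treated as opaque text.

```python
from dataclasses import dataclass, field
from typing import List

MAX_PHRASE_ITEMS = 4  # phrase length limit reported for SiS-Builder

# Illustrative subset of head movements; not the real SiS-Builder inventory.
HEAD_MOVEMENTS = {"tilt left", "tilt right", "swing left", "swing right", "nod", "shake"}


@dataclass
class SignItem:
    gloss: str
    hamnosys: str  # manual transcription, treated here as an opaque string
    head_movements: List[str] = field(default_factory=list)

    def add_head_movement(self, movement: str) -> None:
        """Attach one head movement; several may be combined on the same sign."""
        if movement not in HEAD_MOVEMENTS:
            raise ValueError(f"unknown head movement: {movement!r}")
        self.head_movements.append(movement)


@dataclass
class SignPhrase:
    items: List[SignItem] = field(default_factory=list)

    def add(self, item: SignItem) -> None:
        """Append a sign, enforcing the four-item limit."""
        if len(self.items) >= MAX_PHRASE_ITEMS:
            raise ValueError(f"a phrase may contain at most {MAX_PHRASE_ITEMS} items")
        self.items.append(item)
```

In this model, calling `add_head_movement("tilt left")` and then `add_head_movement("swing right")` on the same item represents the combined movement “tilt left and swing right”.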

SiS-Builder also provides the option to convert a SiGML script back to the corresponding HamNoSys notation. The results of this transformation are depicted in Figure 7. As already mentioned, HamNoSys strings are visualized either by converting data in SiGML format or by selecting a validated lexical item from a list.
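The reverse direction amounts to walking the SiGML tree and reading back the symbol names. The following Python sketch assumes the same element layout (`hns_sign`, `hamnosys_manual`) as the JASigning SiGML convention and stops at symbol names; rendering them as HamNoSys font characters would require the font mapping, which is not covered here. The sample document is illustrative.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def sigml_to_symbols(sigml_text: str) -> List[Tuple[str, List[str]]]:
    """Return (gloss, [HamNoSys symbol names]) for every hns_sign element, in order."""
    root = ET.fromstring(sigml_text)
    signs = []
    for sign in root.iter("hns_sign"):
        manual = sign.find("hamnosys_manual")
        names = [child.tag for child in manual] if manual is not None else []
        signs.append((sign.get("gloss", ""), names))
    return signs


SAMPLE = """<sigml>
  <hns_sign gloss="FIRST"><hamnosys_manual><hamflathand/><hamshoulders/></hamnosys_manual></hns_sign>
  <hns_sign gloss="SECOND"><hamnosys_manual><hamfist/></hamnosys_manual></hns_sign>
</sigml>"""
```

Returning a list of pairs rather than a dictionary keeps the sign order and tolerates a phrase in which the same gloss occurs twice.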

SiS-Builder enables multiple users to create and test their own data sets. To this end, the tool is Internet-based and free to be accessed by anyone, with no special installation requirements on the client side. Registered users have access to the repository of signing resources (signs, concepts, and phrases) incorporated in the environment. Users can browse the available list of signs or search for the lexical resource they are interested in by using the “Quick-Search” function in the left-hand menu of the screen. Figure 8 depicts part of the GSL repository of lexical resources. Registered users are also provided with their own repository space, which allows them to store, experiment with and modify the lexical resources they have previously created until they achieve descriptions that yield satisfactory animation performance.

Finally, to help users create lexical resources for avatar animation and to make it possible to compare natural signing with the avatar’s SL performance, a video repository containing authentic signing data is offered to registered users.

To demonstrate the comparison utility of this functionality, Figure 8 depicts the video presentation of a specific lexical item (the GSL sign for “wild” in this example), while Figure 9 shows the same lexical item as visualized by the UEA avatar. The video repository can be searched by the English form of a lexical item, its Greek form, or the name of the corresponding video file, and partial search queries are also supported. For example, users can search for the item “lawyer” by typing “lawyer”, “law”, or the name of the corresponding video file if they know it (i.e., “1061law.avi”), or by typing “δικ” or “δικηγόρος” if they make the same query in Greek. Search results are then presented to the user in a list similar to the one shown in Figure 8. Lexical resources created in the SiS-Builder environment may feed the UEA signing avatar, accessible at: http://vhg.cmp.uea.ac.uk/tech/jas/095z/SPA-framed-gui-win.html.
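The partial-match behavior described above can be reproduced with simple case-insensitive substring matching over the three searchable fields. The Python sketch below is a hypothetical illustration rather than the SiS-Builder implementation; apart from “1061law.avi”, which appears in the text, the repository entries and file names are invented for the example.

```python
import unicodedata
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class VideoItem:
    english: str
    greek: str
    filename: str


# Toy repository; "wild_example.avi" is a hypothetical file name.
REPOSITORY = (
    VideoItem("lawyer", "δικηγόρος", "1061law.avi"),
    VideoItem("wild", "άγριος", "wild_example.avi"),
)


def fold(text: str) -> str:
    """Normalize Unicode and case-fold, so 'LAW' matches 'law' and final ς matches σ."""
    return unicodedata.normalize("NFC", text).casefold()


def search(query: str, repository: Sequence[VideoItem] = REPOSITORY) -> List[VideoItem]:
    """Return items whose English form, Greek form or file name contains the query."""
    q = fold(query.strip())
    if not q:
        return []
    return [
        item for item in repository
        if any(q in fold(value) for value in (item.english, item.greek, item.filename))
    ]
```

Substring matching covers all the query styles in the example (a whole word, a prefix such as “law” or “δικ”, or a file name); a large repository would instead use a prefix or full-text index.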

3. SL Tools and Technologies Workbench

In the implementations presented here, the functionalities facilitating sign search are connected to a look-up environment that can be used either in direct link with the viewed text or as an independent dictionary look-up tool activated on demand. As a text accessibility aid, it presents the sign corresponding to a given unknown word when the user simply double-clicks that word, irrespective of the morphological form in which it appears in the text.

The bilingual dictionary, the virtual fingerspelling keyboard and the dynamic sign phrase synthesis tool, described in more detail next, are incorporated in the use-case workbench in an interoperable manner to support accessibility to text content and communication between platform users. A detailed presentation of the workbench components is provided in [24].

3.1. Bilingual Dictionary Look-Up Environment

While browsing through any digital text, educational or informative, uploaded on a website supported for SL accessibility, deaf or HoH GSL signers may seek an explanation in GSL for any word appearing in the text. The selected “unknown” word is checked against the system’s lexicon of signs, using any of the query methods described in [20]. The word is looked up in the database of correspondences between written MG lemmas and their GSL signs and, if identified, users are presented with the video representation of the lemma in GSL, examples of use, synonyms linked to senses (Figure 10), or expressions linked to lemmas.

The task, however, is by no means trivial, since in many cases the morphological form of a word in a text differs considerably from the form associated with a headword in a common dictionary, especially in languages with very rich morphology, such as MG. Thus, the successful execution of a query requires an initial step of morphological decomposition of the selected word before it can be accurately associated with the corresponding entry in the bilingual dictionary. The search procedure, therefore, entails morphological queries for any given word form, while also taking into account grammatical/semantic differentiation among lemmas in order to filter the search results. This happens, for instance, when the stress position distinguishes two words, as in the pair «θόλος» [th’olos] (“dome”) and «θολός» [thol’os] (“blurry”), or when two morphologically different forms related to the same lemma differ in meaning (e.g., «αφαιρώ» [afer’o] “to remove” and «αφηρημένος» [afirim’enos] “absent-minded; abstract”).
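The stress example shows why accent marks cannot simply be discarded during look-up. In the Python sketch below, an exact match on the normalized, accented form is tried first, and the accent-insensitive match is used only as a fallback that may return several candidates for further filtering. The two-entry lexicon is illustrative, and the grammatical/semantic filtering mentioned above is not modeled.

```python
import unicodedata
from typing import List

# Illustrative entries: the two words differ only in stress position.
LEXICON = {"θόλος": "dome", "θολός": "blurry"}


def strip_accents(word: str) -> str:
    """Remove combining marks (tonos, dialytika) after canonical decomposition."""
    decomposed = unicodedata.normalize("NFD", word)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def candidates(word: str) -> List[str]:
    """Exact accented match first; otherwise every lemma that matches without accents."""
    key = unicodedata.normalize("NFC", word).lower()
    if key in LEXICON:
        return [key]
    bare = strip_accents(key)
    return sorted(lemma for lemma in LEXICON if strip_accents(lemma) == bare)
```

A correctly accented query therefore resolves to a single lemma, while an unaccented query (for example, text typed without accents) yields both candidates instead of silently picking the wrong one.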

NOEMA+, the dictionary that supports the accessibility of textual material, currently comprises approximately 12,000 bilingual (MG-GSL) entries. The dictionary underwent several stages of evaluation (ibid.), both internally and externally, by professionals, GSL experts (some of whom were native signers), and actual end-users. Most NOEMA+ entries were based on an analysis of GSL corpora annotated with glosses for the signs contained in the signed utterances. Identifying separate lemmas in a lexical resource such as a video SL corpus is far from straightforward. Moreover, since one of the languages in this dictionary is an oral/written one while the other is a three-dimensional language, the problem of equivalence proved even more challenging than for language pairs of similar modality (oral or signed).

As far as dictionary structure is concerned, each GSL entry is accompanied by one or more MG equivalents for each sense it represents, by synonyms (if any) in either language, and by simple examples of use. When applicable, multi-word MG entries are linked to their respective single-word ones (excluding function words) via cross-references. Apart from facilitating easy reference, this feature also has pedagogical value, considering that most of the words forming these phrases are inflected forms of other entries; it therefore becomes easier for users to link each inflected form to the base form of the entry. A deliberate decision was made to exclude any metalinguistic information at this point, in order to make NOEMA+ more user-friendly for its primary target audience, i.e., native signers. As the dictionary was based on video GSL corpora, the vast majority of the examples of use are authentic rather than constructed, and only a small number were created ad hoc.

When the dictionary is used as a standalone platform tool, users may also employ the alphabetically ordered search option. This search option is, in general, considered appropriate for video databases of signs and has already been applied in some educational and/or e-government internet-based applications [10].

This approach orders the written forms of the included concepts alphabetically, allowing the user to choose the appropriate lemma from the ordered list. The method avoids the delay in system response caused by searching within the entire content of an extended video database [25]. A detailed report on the compilation of NOEMA+ and the selection of example sentences, along with the methodology followed for the creation of the lexicon database and the implemented GUI, is provided in [26,27].
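An alphabetical look-up list of this kind needs a sort key that ignores accents and case, so that, for example, «άγριος» is filed under α. The Python sketch below relies on the fact that the basic Greek lowercase letters are encoded in alphabetical order in Unicode, and on case folding to treat final ς as σ; it uses binary search to jump to the entries starting with a given prefix without scanning the whole list. The lemma list is illustrative.

```python
import bisect
import unicodedata
from typing import Iterable, List


def sort_key(word: str) -> str:
    """Case-fold (ς becomes σ) and strip accents so ordering follows the base letters."""
    decomposed = unicodedata.normalize("NFD", word.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


class AlphabeticalIndex:
    def __init__(self, lemmas: Iterable[str]):
        self.entries: List[str] = sorted(lemmas, key=sort_key)
        # Keys are sorted and parallel to entries, which is what bisect requires.
        self.keys: List[str] = [sort_key(w) for w in self.entries]

    def starting_with(self, prefix: str) -> List[str]:
        """Return entries whose folded form starts with the folded prefix."""
        p = sort_key(prefix)
        start = bisect.bisect_left(self.keys, p)
        result = []
        for entry, key in zip(self.entries[start:], self.keys[start:]):
            if not key.startswith(p):
                break
            result.append(entry)
        return result
```

Only the entries under the selected prefix are then shown, and only their videos need to be fetched.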

3.2. Virtual Fingerspelling Keyboard

The fingerspelling keyboard facility comprises a set of virtual keys that correspond to the GSL fingerspelling alphabet. Each key depicts the handshape that represents each letter of the alphabet, while the digits 0-9 are also included. Thus, users can select a sequence of handshapes corresponding to the desired alphanumeric string, while on the screen they can visually inspect the selected sequence being fingerspelled in GSL.
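Conceptually, the keyboard maps each supported character to one handshape image. The Python sketch below assumes one static handshape per Greek letter and digit (an assumption that may not hold for every letter of the GSL manual alphabet) and uses hypothetical image file names. It folds case, final sigma, and accents onto the 24 base letters so that a word taken from a text can be spelled out.

```python
import unicodedata
from typing import List

GREEK_LETTERS = "αβγδεζηθικλμνξοπρστυφχψω"  # the 24 letters of the Greek alphabet
DIGITS = "0123456789"

# Hypothetical asset names; the real keyboard's image files are not documented here.
HANDSHAPES = {ch: f"handshape_{ch}.png" for ch in GREEK_LETTERS + DIGITS}


def to_handshapes(text: str) -> List[str]:
    """Map a Greek word or number to the sequence of handshape images to display."""
    # casefold() lowercases capitals and turns final ς into σ;
    # NFD followed by dropping combining marks removes tonos and dialytika.
    decomposed = unicodedata.normalize("NFD", text.casefold())
    sequence = []
    for ch in decomposed:
        if unicodedata.combining(ch) or ch.isspace():
            continue
        if ch not in HANDSHAPES:
            raise ValueError(f"no fingerspelling handshape for {ch!r}")
        sequence.append(HANDSHAPES[ch])
    return sequence
```

Raising an error for unsupported characters (e.g., Latin letters) lets the interface warn the user instead of silently dropping part of a name.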

The tool can either run as an external service (Figure 11) or be interconnected with the lexicon and the dynamic sign synthesis tool as a string input mode for search data (i.e., lemmas). Such tools allow the fingerspelling of proper names [28] and can generally support deaf users when entering data such as names and numbers in web forms, as well as in various other communication situations [28], such as taking school exams or filling out records. Furthermore, when incorporated in the synthetic signing environment, this tool enables the visualization of proper names in the context of a signed utterance, as well as the fingerspelling of “unknown” words, thus preventing performance failure when no matching item can be found in the system’s sign database.

At this point, it should be mentioned that fingerspelling is not part of a natural SL system. It is rather a convention among signers sharing a specific natural space with a given oral language, which allows them to visualize the alphabetic characters of the language of their environment. In this respect, fingerspelled alphabets become a supportive mechanism of significant value for intercultural deaf-oral communication [29]. As regards the fingerspelling keyboard facility under discussion, the user interface provides help in GSL in the form of a video tooltip to facilitate user interaction with the service.

3.3. Dynamic Sign Synthesis Environment

When the dynamic sign synthesis tool is selected, the sign phrase composed by the user is performed by a virtual signer through a Java applet that runs in the web browser. The user selects the components of the phrase to be synthesized among the available lexical items that are appropriately coded for synthesis (namely, those containing information that is not visible to users yet important for synthetic representation). The user interface is designed to allow different word orderings of the phrase to be synthesized, while the signed phrase may, at this point, consist of up to four components. New synthetic sign phrases are composed by selecting the desired phrase components from a list of available, appropriately annotated lexical items [13,14]. The HamNoSys notation system [12,13] has been used for the phonological annotation of sign lemmas, along with features for the non-manual activity present in sign formation, while the University of East Anglia (UEA) avatar engine [29] has been used to perform signing. End-users interact with the system via a simple search-and-match interface to compose their desired phrases. Phrase components are marked by frames of different colors, indicating which items in the phrase are signed and which are fingerspelled. Users select the desired element by clicking on it, and the respective GSL gloss is then included in the sign phrase to be performed by the avatar (Figure 12). If a word is not present in the synthesis lexicon, users are given the option to fingerspell it. This option proves especially helpful for incorporating proper names in the signed phrase. Furthermore, search results may offer several options to choose from, as in the case of possible GSL synonyms.
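The signed-versus-fingerspelled distinction can be sketched as follows. In this hypothetical Python example, each written word is looked up in a toy synthesis lexicon; words that are found become signed components carrying their GSL gloss, and the rest are marked for fingerspelling. Unlike the platform, where fingerspelling is offered to the user as an option, the sketch applies it automatically, and the lexicon entries are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

MAX_COMPONENTS = 4  # current phrase length limit

# Illustrative synthesis lexicon: written MG form -> GSL gloss.
SYNTHESIS_LEXICON: Dict[str, str] = {"δικηγόρος": "LAWYER", "σχολείο": "SCHOOL"}


@dataclass(frozen=True)
class Component:
    label: str  # GSL gloss when signed, the written word when fingerspelled
    mode: str   # "signed" or "fingerspelled" (shown with different frame colors)


def compose_phrase(words: Sequence[str]) -> List[Component]:
    """Build phrase components, falling back to fingerspelling for unknown words."""
    if len(words) > MAX_COMPONENTS:
        raise ValueError(f"a phrase may contain at most {MAX_COMPONENTS} components")
    phrase = []
    for word in words:
        gloss = SYNTHESIS_LEXICON.get(word)
        if gloss is not None:
            phrase.append(Component(gloss, "signed"))
        else:
            phrase.append(Component(word, "fingerspelled"))
    return phrase
```

Keeping the mode on each component is what allows the interface to color-code signed and fingerspelled items, as described above.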

A drag-and-drop facility, first demonstrated in the Dicta-Sign sign-Wiki [5], allows multiple orderings of phrase components so as to create grammatical structures in GSL. Verifying user choices is important at every stage of this process, so that users can be certain about the content they are creating. When the structuring of the newly built signed phrase is completed, the phrase is performed by a signing avatar for final verification. Users may save, modify, or delete each phrase they have built. They may keep the saved phrases or parts of them for further use depending on their communication needs (e.g., a non-native signer could use this utility for language learning purposes).
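At the data level, a drag-and-drop move is a removal followed by an insertion at the drop position. The short Python sketch below (a hypothetical helper, not the Dicta-Sign code) returns a reordered copy, so that the previous ordering remains available if the user is not satisfied after verification; it makes no claim about which orderings are grammatical in GSL.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def move_component(phrase: Sequence[T], src: int, dst: int) -> List[T]:
    """Return a copy of the phrase with the item at position src dropped at position dst."""
    if not (0 <= src < len(phrase) and 0 <= dst < len(phrase)):
        raise IndexError("source or destination position out of range")
    reordered = list(phrase)
    item = reordered.pop(src)
    reordered.insert(dst, item)
    return reordered
```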

Since the mainstream school environment is not usually familiar with SL, the sign synthesis tool may also be used by individuals with no knowledge of SL. Thus, in the current implementations, a template-based GSL grammar guide is incorporated in the sign synthesis environment to help non-signers compose grammatically correct GSL utterances.

On Signing Avatar Technology and User Acceptance

Sign synthesis uses sign formation features to provide 3D synthetic representations of natural signing. It must be mentioned that signing avatar skeletons are implemented following a completely different set of requirements from avatars developed for games, since the degrees of freedom in the hands, face, head, and upper body need to be especially high to allow convincing representations of multimodal human language utterances. Deaf user communities have received avatars with skepticism, since early synthetic signing was far from achieving the degree of naturalness captured in video. During the last decade, signing avatar technology has developed to the point of allowing the representation of more complex motion, such as simultaneous performance of the hands, the upper body and the head, including a number of facial expressions [8,30]. These technological enhancements have led to higher acceptance of synthetic signing engines among end-users, which paved the way towards considering the development of complete editing environments for SL.

The avatar currently used to perform GSL signs in the reported environments is the signing avatar developed by UEA. Some of the recent enhancements of this avatar were verified through extensive testing and evaluation by end-user groups, as part of the user evaluation processes that took place within the Dicta-Sign project [31].

Synthesis, in this context, is based on SiGML, an XML language based heavily on HamNoSys: HamNoSys transcriptions can be mapped directly to SiGML.

The SiGML animation system is primarily implemented through the JASigning software, which is supported on both Windows and Macintosh platforms. This is achieved by the use of Java with OpenGL for rendering and compiled C++ native code for the Animgen component that converts SiGML to conventional low-level data for 3D character animation. JASigning includes both Java applications and web applets for enabling virtual signing on web pages. Both are deployed from an Internet server using JNLP technology that installs components automatically, but securely, on client systems.

The incorporation of the synthetic signing environment in two widely accessible platforms showcases the potential of an SL editor in line with the intuitive SL representation mechanisms of human signers.

4. Educational Platform Evaluation

In the previous sections, a set of tools was presented, which enable content accessibility and dynamic production via synthetic signing. The same accessibility facilitators have been integrated in the official educational content platform of the Greek Ministry of Education for the primary and secondary education levels, aiming to promote textbook accessibility, group work and student-student and student-teacher interaction via messages exchanged by means of SL. As SL accessibility tools were being incorporated in the educational content platform, they underwent different types of evaluation in three stages:

The first stage included a thorough internal technical evaluation process relating to the success of the integration of tools and their effective use in the platform itself. This was carried out by experts in each of the areas involved, who had to evaluate not only each tool individually, but also the platform as an integrated whole. Technical evaluation ran in parallel to integration work and, in its final stage, focused on issues of robustness and performance stability of the virtual machine (VM) accommodating the platform in the cloud, while receiving large numbers of parallel requests.

The second evaluation stage involved a small team of five GSL experts, including three native GSL signers, who tried out the platform as end-users, acting as informants to provide valuable feedback on the usability and content of the platform. All team members kept a logbook of the part of the content they checked with notes on vocabulary content, GSL example phrases accompanying sign reference forms in the dictionary look-up environment, and avatar performance of the signs. The team of experts met regularly to discuss their findings and propose corrections and improvements, which were then incorporated in the platform’s database. The same team was consulted regarding the design of the platform GUI.

Finally, the third stage of the evaluation took place in February 2015 and involved two real end-user groups. It was carried out during a series of visits to the Deaf School of Argiroupoli, one of the largest deaf schools in the Athens area, which includes both primary and secondary educational settings and provides education to deaf and hard-of-hearing children of both deaf and hearing parents according to the official curriculum of the Greek Ministry of Education. The school’s high school unit is supported by 15 teaching staff members, including both deaf and hearing teachers, as well as by one psychologist, one social worker and one speech therapist.

End-user evaluation involved 14 high school staff members and 36 native Greek students from all six grades of the Greek national secondary education system (13–18 years old). All participants from both user groups had adequate computer skills. The tool suite presentation (50 min) and the follow-up discussion (45 min) were also attended by a representative team of three staff members of the affiliated primary school, including the primary school director. Permission to access the school premises was obtained through the procedures valid at the time of the evaluation.

The end-users’ subjective evaluation focused on three aspects: (i) the SL content provided, (ii) ease of use via the adopted GUI, and (iii) the effectiveness of the functionalities provided. No written Greek was used for instructions or clarifications regarding the evaluated issues.

The evaluation was carried out in three steps. Step one involved presenting the accessibility functionalities, regarding both the GUI and the content, to the targeted user groups (teachers and students) and familiarizing them with these functionalities. Step two provided a period of one week of hands-on use of the complete tool suite environment. Step three consisted of a discussion meeting with the teachers group and a hands-on meeting with the students group, who also expressed their opinion of their experience with the platform on a five-point Likert scale.

Interpretation into GSL was offered during all sessions and for every interaction between the evaluators and the developers’ team. To avoid misunderstandings, no written language was used; instead, the adopted Likert scale employed emoticons, allowing individual users to express how they perceived their experience with the complete tool suite (Figure 13).
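To make the link between emoticon answers and the per-aspect result charts concrete, the following is a minimal sketch, assuming each of the five emoticons maps to a Likert point from 1 to 5. The emoticon names, the aspect keys and the `tally` helper are hypothetical illustrations, not part of the platform or of the evaluation materials.

```python
from collections import Counter

# Hypothetical mapping of the five emoticon faces to Likert points (1 = worst, 5 = best).
EMOTICON_SCALE = {"very_sad": 1, "sad": 2, "neutral": 3, "happy": 4, "very_happy": 5}

# The three evaluated aspects; the key names are illustrative labels only.
ASPECTS = ("content", "gui", "functionalities")


def tally(responses):
    """Return, per aspect, the percentage of answers at each Likert point.

    `responses` is a list of dicts, one per participant, mapping an aspect
    to the emoticon that participant selected.
    """
    summary = {}
    for aspect in ASPECTS:
        scores = [EMOTICON_SCALE[r[aspect]] for r in responses if aspect in r]
        counts = Counter(scores)
        total = len(scores)
        summary[aspect] = {
            point: (100.0 * counts[point] / total if total else 0.0)
            for point in range(1, 6)
        }
    return summary


if __name__ == "__main__":
    # Two invented answer sheets, used only to show the output shape.
    sample = [
        {"content": "happy", "gui": "very_happy", "functionalities": "neutral"},
        {"content": "very_happy", "gui": "happy", "functionalities": "happy"},
    ]
    print(tally(sample)["content"])  # {1: 0.0, 2: 0.0, 3: 0.0, 4: 50.0, 5: 50.0}
```

Keeping one distribution per aspect (rather than a single mean) mirrors the idea of presenting how answers spread across the scale for each evaluated aspect.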

The three aspects that underwent subjective evaluation were also represented by icons in the distributed subjective evaluation sheet, as depicted in Figure 14.

The subjective evaluation form that each student filled out after completing the evaluation process is shown in Figure 15. None of the sessions were video recorded, in order to preserve the anonymity of the subjects. All sessions took place in the school computer room during a time slot after the daily curriculum, usually dedicated to free/outdoor activities. All students participated in the evaluation sessions voluntarily. Interaction with the accessibility tools was organized in a game-like manner around the following three tasks:

Each student was given a list of five randomly selected proper names from the curriculum’s educational material, in order to test proper name acquisition by means of the virtual fingerspelling keyboard. Students were then encouraged to use the fingerspelling keyboard on their own to compose playful names for each other.
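As an illustration of the preprocessing a fingerspelling keyboard might need when a written Greek name is fingerspelled letter by letter, here is a minimal sketch. It assumes that accents and diaeresis are not fingerspelled separately and that each base capital letter would trigger one fingerspelling animation; the function name `to_fingerspelling_sequence` and the letter set are hypothetical, not the platform’s actual implementation.

```python
import unicodedata

# The 24 capital letters of the modern Greek alphabet.
GREEK_CAPITALS = set("ΑΒΓΔΕΖΗΘΙΚΛΜΝΞΟΠΡΣΤΥΦΧΨΩ")


def to_fingerspelling_sequence(name):
    """Return the sequence of base Greek capitals to be fingerspelled for `name`.

    Assumption: diacritics (tonos, dialytika) are dropped and every remaining
    Greek letter maps to one fingerspelled handshape. Characters that are not
    Greek letters (spaces, hyphens, Latin letters) are skipped.
    """
    # NFD splits accented letters, e.g. 'ί' -> 'ι' + combining acute accent.
    decomposed = unicodedata.normalize("NFD", name)
    base = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Upper-casing also maps final sigma 'ς' to 'Σ'.
    return [ch for ch in base.upper() if ch in GREEK_CAPITALS]


if __name__ == "__main__":
    print(to_fingerspelling_sequence("Νίκος"))  # ['Ν', 'Ι', 'Κ', 'Ο', 'Σ']
```

Normalizing to base capitals means the keyboard would only need one animation per letter, whatever accent or case the name appears with in the textbook.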

A list of ten inflected forms of Greek words taken from the textbooks, whose morphology differs considerably from the dictionary entry form, was provided for lookup in the bilingual dictionary tool. Students were then encouraged to explore all search facilities of the dictionary with lemmas of their choice.
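Looking up an inflected form requires first mapping it to its dictionary entry form (lemma). The sketch below assumes a simple full-form lexicon in which each inflected form points to its lemma, and a second table linking lemmas to GSL sign entries; both tables, their few entries and the sign identifiers are hypothetical and only illustrate the lookup path, not the platform’s actual lexical resources or morphological processing.

```python
# Hypothetical full-form lexicon: each inflected Greek form points to its lemma.
# A real system would derive these pairs from a morphological lexicon or analyser.
FORM_TO_LEMMA = {
    "παιδιά": "παιδί",     # nominative/accusative plural of "child"
    "παιδιών": "παιδί",    # genitive plural of "child"
    "έγραψα": "γράφω",     # "I wrote" (aorist) of "write"
    "γράψαμε": "γράφω",    # "we wrote" (aorist) of "write"
    "σχολείου": "σχολείο", # genitive singular of "school"
}

# Hypothetical links from lemmas to GSL sign entry identifiers.
LEMMA_TO_SIGN = {"παιδί": "GSL_0001", "γράφω": "GSL_0002", "σχολείο": "GSL_0003"}


def lookup(word):
    """Return (lemma, sign_id) for a written word; sign_id is None if not found."""
    form = word.strip().lower()
    # Fall back to treating the word itself as a lemma if it is not a known inflected form.
    lemma = FORM_TO_LEMMA.get(form, form)
    return lemma, LEMMA_TO_SIGN.get(lemma)


if __name__ == "__main__":
    print(lookup("Έγραψα"))  # ('γράφω', 'GSL_0002')
```

A full-form table keeps lookup to two dictionary accesses, at the cost of listing every inflected form explicitly; a rule-based analyser would trade that storage for more processing.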

For the sign synthesis tool, in a first stage, users were given a set of five short sentences commonly used in a school environment, in written Greek. Students were asked to create their own version of these sentences in GSL using the sign synthesis tool, and then to compose appropriate GSL responses via synthetic signing. They could consult the dictionary at any time during this procedure. In a second stage, students were free to create their own synthetic phrases using the sign synthesis tool.

After becoming familiar with the platform and using it long enough to navigate it with ease, both user groups were asked to comment on their experience with the platform. The students’ feedback was collected through the subjective evaluation questionnaires, with questions on the GUI structure, the content provided and the users’ opinions on synthetic signing (Figure 15). Comments from the discussion with the teachers were recorded in a logbook. All collected comments were then studied, grouped (e.g., comments about content or usability, comments with teaching and/or learning implications) and analyzed by the development team in order to identify possible improvements, additions and extensions.

On the whole, the platform was scored positively by both user groups. Young students found the ability to synthesize signed phrases especially amusing, and they were pleased with the avatar’s performance. This result indicates a radical change in signers’ attitude, since previous experience [9] had shown that users were rather concerned about SL performance by avatars. Both user groups gave high scores to the option of composing one’s own utterances, recognizing the importance of this technology when incorporated in the educational process and in spontaneous human communication; this may be one of the reasons for their positive attitude towards avatar performance.

In particular, searching for unknown written content with direct links to the GSL lexicon presentation was one of the features that received especially high scores for its usability as an educational support mechanism. From the teachers’ point of view, the most striking result was that they considered the platform a very helpful mechanism for bilingual deaf education. In this respect, they pointed out that they would also like to see information on the grammatical gender of Greek lemmas alongside the Greek equivalent of the GSL sign presented in the vocabulary display window. Finally, the fingerspelling facility was accepted by all users as something natural in the school environment, although several users noted that there are too many proper names to memorize. The analysis of the results for the platform content, GUI and functionalities, as evaluated by both school staff and students, is visualized in Figures 16, 17 and 18, where Figures 16a, 17a and 18a present the evaluation data from school staff, while Figures 16b, 17b and 18b present the student evaluation results.

5. Discussion

Accessibility of electronic content for deaf and HoH WWW users depends directly on the possibility of acquiring information presented comprehensibly in SL, and on the ability to create new electronic content and to comment on, modify and reuse existing “text”. SL authoring tools, in general, are emerging technologies that are still the subject of basic research and are thus not directly available to end-users.

However, a few research efforts aim to enable end-users to view retrieved content in their SL by means of an avatar, and to compose messages in SL through simple interfaces that exploit adequately annotated lexical resources from an associated repository. Nevertheless, such applications require considerable LT infrastructure, which may overload a system that needs to provide real-time responses. Furthermore, this kind of LT may not even be available for a specific language.

In parallel, when designing GUIs for deaf and HoH users, the interfaces popular with this specific end-user group need to be taken into consideration. Various evaluation results have shown a strong preference for color coding, for the avoidance of pop-ups and other “noise”-producing elements, for search mechanisms based on the handshape (primary and/or secondary) of lemma formations, and for fuzzy search options on the written forms of the oral language [10].
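Fuzzy search on written forms helps users whose spelling of Greek is uncertain. The following minimal sketch assumes two design choices that are not taken from the platform: queries and lemmas are compared case- and accent-insensitively, and approximate matching uses the similarity ratio of Python’s standard `difflib.get_close_matches`. The function names, the cutoff value and the sample lemmas are illustrative assumptions.

```python
import difflib
import unicodedata


def strip_accents(text):
    """Lower-case `text` and remove combining diacritics (e.g. Greek tonos)."""
    decomposed = unicodedata.normalize("NFD", text.lower())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def fuzzy_search(query, lemmas, n=3, cutoff=0.75):
    """Return up to `n` lemmas whose accent-free form is close to the query.

    Results are ordered from most to least similar, as returned by
    difflib.get_close_matches; `cutoff` is an illustrative threshold.
    """
    # Several lemmas could share an accent-free key, so map each key to a list.
    index = {}
    for lemma in lemmas:
        index.setdefault(strip_accents(lemma), []).append(lemma)
    keys = difflib.get_close_matches(strip_accents(query), list(index), n=n, cutoff=cutoff)
    return [lemma for key in keys for lemma in index[key]]


if __name__ == "__main__":
    lemmas = ["σχολείο", "σχολή", "παιδί"]
    # A common misspelling ("ι" instead of "ει") still finds the intended lemma.
    print(fuzzy_search("σχολιο", lemmas))  # ['σχολείο']
```

Stripping accents before matching means an omitted or misplaced tonos costs nothing, so the similarity threshold only has to absorb genuine letter-level errors.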

As regards the workbench tools reported here, several additions and improvements can be foreseen on the basis of the pilot use of the educational platform and the analysis of the user evaluation results. For instance, one of the options considered for future extensions of the platform is search by handshape, so that users are not restricted to words when searching. This will be implemented by appropriately grouping handshapes within the alphabetical index of the lexical content, so as to limit the number of items returned by a search.
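One possible way to organize the envisaged handshape search is sketched below, assuming each sign entry records a primary handshape and, for two-handed signs, a secondary one. Entries are grouped by primary handshape, each group is kept in alphabetical order of its Greek lemma, and an optional secondary handshape narrows the results further. The class names, the handshape labels (`HS_A`, `HS_B`) and the example entries are hypothetical placeholders, not actual GSL handshape classifications.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SignEntry:
    lemma: str                                  # Greek equivalent shown in the alphabetical index
    primary_handshape: str                      # hypothetical handshape label
    secondary_handshape: Optional[str] = None   # None for one-handed signs


class HandshapeIndex:
    """Groups sign entries by primary handshape, alphabetically within each group."""

    def __init__(self, entries):
        self._groups = defaultdict(list)
        for entry in entries:
            self._groups[entry.primary_handshape].append(entry)
        for group in self._groups.values():
            group.sort(key=lambda e: e.lemma)

    def search(self, primary, secondary=None):
        """Return entries with the given primary (and, optionally, secondary) handshape."""
        group = self._groups.get(primary, [])
        if secondary is None:
            return list(group)
        return [e for e in group if e.secondary_handshape == secondary]


if __name__ == "__main__":
    index = HandshapeIndex([
        SignEntry("σχολείο", "HS_A", "HS_A"),
        SignEntry("παιδί", "HS_A"),
        SignEntry("γράφω", "HS_B", "HS_A"),
    ])
    print([e.lemma for e in index.search("HS_A")])          # ['παιδί', 'σχολείο']
    print([e.lemma for e in index.search("HS_A", "HS_A")])  # ['σχολείο']
```

Filtering first by primary handshape and only then by secondary handshape is one way to keep result lists short, in line with the stated goal of limiting the number of items returned.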

The composition of synthetic signing phrases may facilitate communication over the Internet and can also be crucial for class and group work, since it allows direct participation and dynamic exchange of linguistic messages, much as hearing individuals do when writing. The emerging technology of sign synthesis opens new perspectives for the participation of deaf and HoH individuals in web-based everyday activities, including access to and retrieval of information and anonymous communication.

As far as future steps are concerned, this team anticipates that the results of this research and the findings of the evaluation procedure, the first carried out for this service in the context of formal education, will be used to further improve both this and other similar online services. At the same time, one of the fundamental aims of this research line is for the service to remain current and useful for end-users, which requires periodic updates.

Finally, it is expected that, if advanced SL technology tools and SL resources are combined with standard LT tools and resources for vocal/written language forms, they may provide workbench environments that radically change the deaf accessibility landscape in the coming period, which is the broader goal of this type of research. For example, one may envisage incorporating sign synthesis environments in machine translation (MT) applications targeting SLs, thus opening new perspectives regarding their potential and usability [32].