Waking Up In the Morning (WUIM): A Smart Learning Environment for Students with Learning Difficulties

Abstract

1. Introduction

- Learners: Students with special educational needs (SEN) and/or disability (D) (hereafter SEND) and their typically developing (TD) peers.

- Context: Inclusive settings, where students from various backgrounds and with different physical, cognitive, and psychosocial skills are welcomed by their neighborhood schools. Inclusive education means that all children are educated in the same classrooms, in the same schools, with real learning opportunities for groups who have traditionally been excluded: not only children with disabilities, but also children from different cultural, religious, and ethnic backgrounds. The concept of the inclusive educational context is the result of successive discussions in international forums. These meetings culminated in the “Statement and Framework for Action on Special Needs Education”, approved by the World Conference on Special Needs Education organized by the Government of Spain in cooperation with UNESCO in Salamanca from 7 to 10 June 1994 [15].

- Content: Derived from the functional domain of Activities of Daily Living (ADLs), and more specifically from training in morning routines, delivered through traditional educational materials and cutting-edge technologies such as AR, VR, and 360° videos.

2. Theoretical and Conceptual Framework

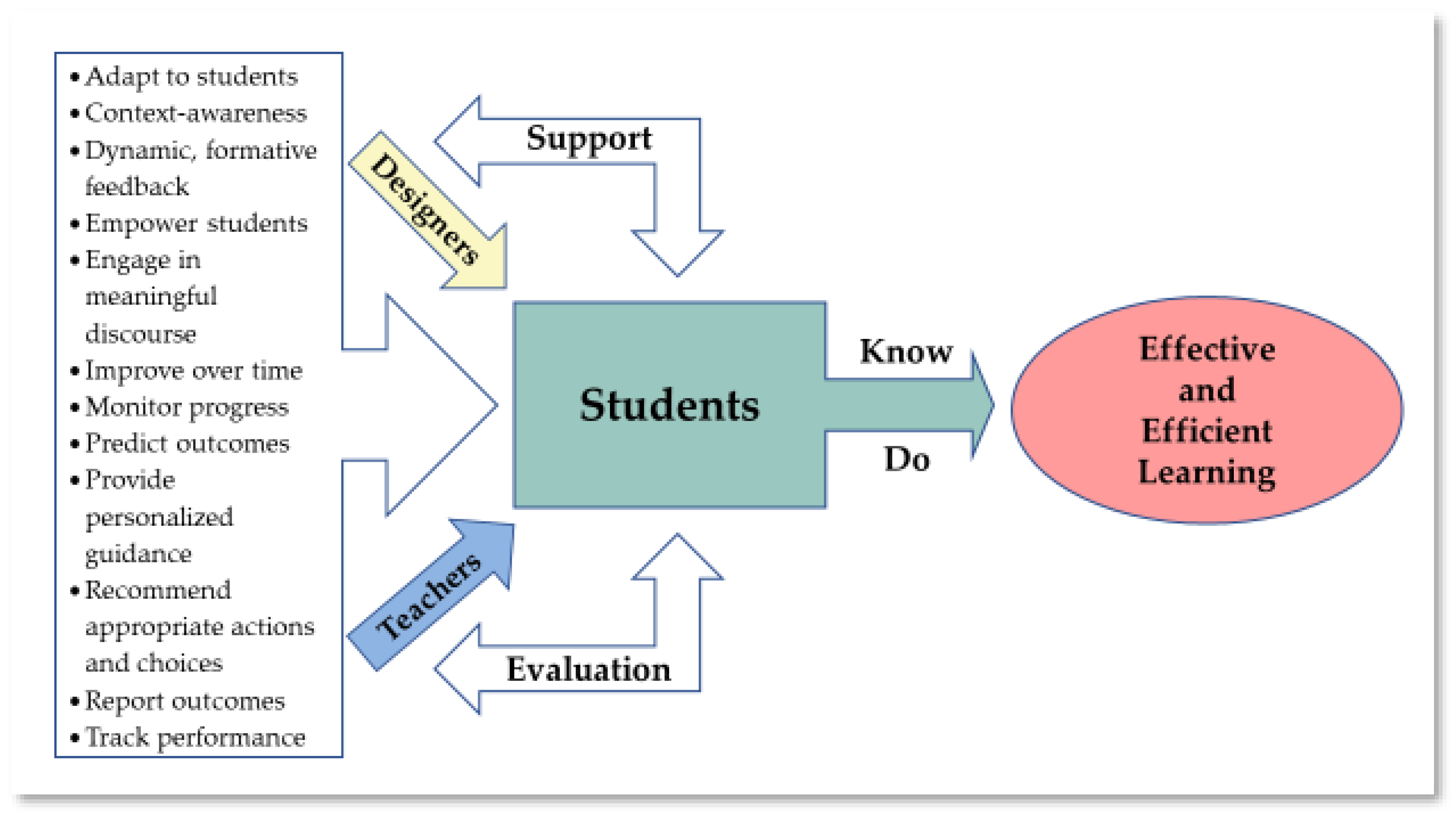

2.1. Smart Learning Environments, Interactivity, and Gamification

2.2. From Differentiated Instruction, Cognitive Theory of Multimedia Learning and Universal Design for Learning to Transmedia Learning

3. WUIM: A Transmedia Project for Inclusive Educational Settings

- “WUIM-Puzzles” encompasses traditional board games with flashcards and wooden block puzzles which constitute the toys of the whole project.

- “WUIM-AR” is an AR game-like application that is combined with WUIM-Puzzles.

- “WUIM-VR” is a VR simulation using gamification techniques.

3.1. User Experience Design

- Usefulness: Quality, importance, and value of a system or product to serve users’ purposes. Although the transdisciplinary design team decided from the very outset that WUIM would consist of affordable, low-cost applications, this decision does not mean that the quality of materials and coding was overlooked.

- Usability and utility are the two components of usefulness: A usable product provides effectiveness, efficiency, engagement, error tolerance, and ease of learning. However, even though a product is easy and pleasant to use, it might not be useful to someone if it does not meet his/her needs. WUIM was designed to be easy to use for both students and teachers. The primary goal was for WUIM to be an effective, efficient, engaging learning material that allows users to easily learn content, both in terms of activities and technology.

- Findability: Ease of finding a product or system. WUIM is available online and for free.

- Creditability: A product leads users to trust it, not only because it does the job it promised to do, but also because it lasts for a reasonable amount of time and provides accurate and appropriate information. In addition to the activities it provides, i.e., morning routine training, WUIM offers teachers and parents the know-how to develop their own material.

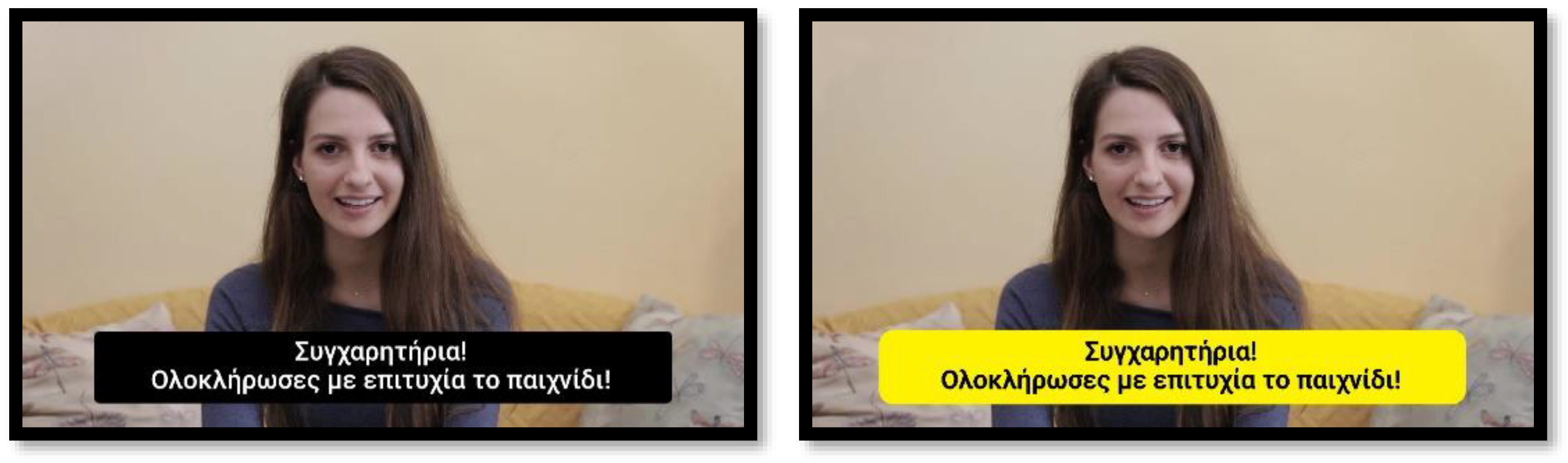

- Desirability: Branding, image, identity, aesthetics, and emotional design, which are also elements of user interface design. During the WUIM design, special emphasis was given to each of these features. Thus, a short, striking, and easy-to-remember name was chosen. Attention was also paid to user interface design according to the principles of the Cognitive Theory of Multimedia Learning and the guidelines of Web Accessibility for Designers (https://webaim.org/ (accessed on 2 June 2021)) and Microsoft (https://docs.microsoft.com/en-us/windows/win32/uxguide/vis-fonts (accessed on 2 June 2021)) regarding the choice of font type, color, and size, background colors, and contrast.

- Accessibility: The experience provided to users, even if they have a disability, such as hearing, vision, movement, or learning difficulties. WUIM focuses on motor, learning, and hearing difficulties, and on students with visual impairments who are not totally blind.

- Value: The ultimate goal of a product is the added value it provides to users. Besides its vital educational content, the design and development of WUIM address each of the factors that shape the user experience.

3.2. A Process from Educational Design to Development

3.3. Game Development

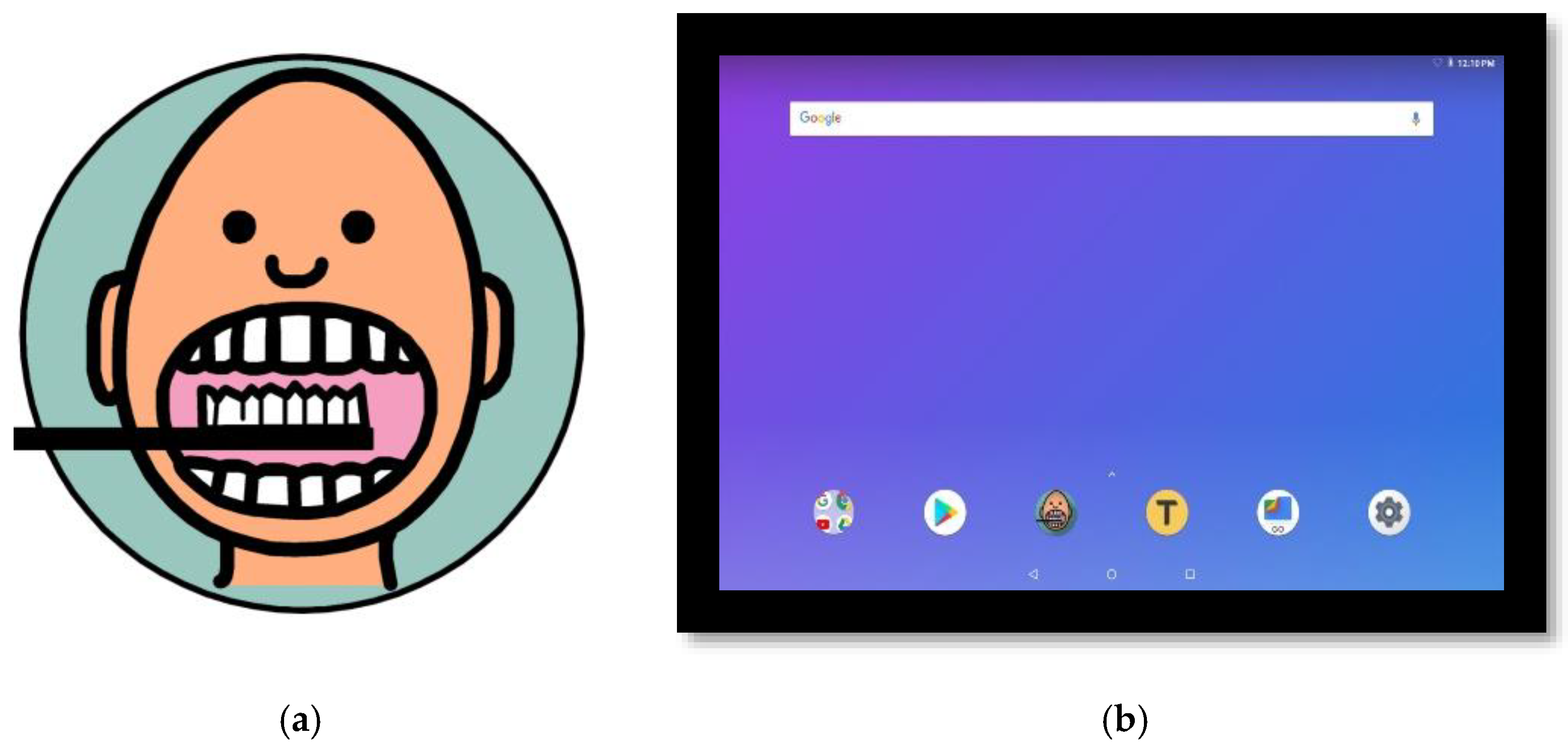

3.3.1. Concept Definition and Visual Symbol Selection

- Creditability and desirability: Picture Communication Symbols (PCS) are widely known in the field of special education, are recognized by most children with SEND, are very comprehensive, and meet the requirements of interface design. The narration of each symbol is easy to understand. Besides, PCS can be used as a storyboard, i.e., a graphic layout that sequences illustrations and images.

- Usability: PCS are effective and efficient and no extra training time is required.

- Utility: PCS are easily printed and portable.

- Findability: PCS are easy to find.

- Accessibility: PCS are characterized by simplicity in depicting people and objects. Studies on the effect of the amount of detail on pictorial recognition memory have concluded that the simpler a picture is, the lower the cognitive load, which helps avoid problems with concentration and distraction [82]. Besides, according to the instructions of AR development engines, simple pictures respond more rapidly as AR triggers.

3.3.2. Puzzle Design-Production

3.3.3. Film Production

3.3.4. AR and VR Game Development

- For the film production, three basic steps were followed: pre-production, production, and post-production. The pre-production phase included the scripting, the storyboard, the shot list, and the breakdown sheet. The production phase included the shooting. Post-production included video and audio editing, subtitles, voice-over, and sound design. Finally, the footage was processed so that, depending on the type of game technology (VR or AR), the videos could be integrated into the code.

- For the VR game, we proceeded as follows: We set the winning conditions and other game parameters. Then, we defined the environment and user interface (UI) design according to users’ characteristics. Next, we developed the code on the game console and associated the videos with the scenes. After the game flow was checked against the game logic adopted by the design team, the VR game was tested on the devices (smartphones/tablets, desktop, HMDs).

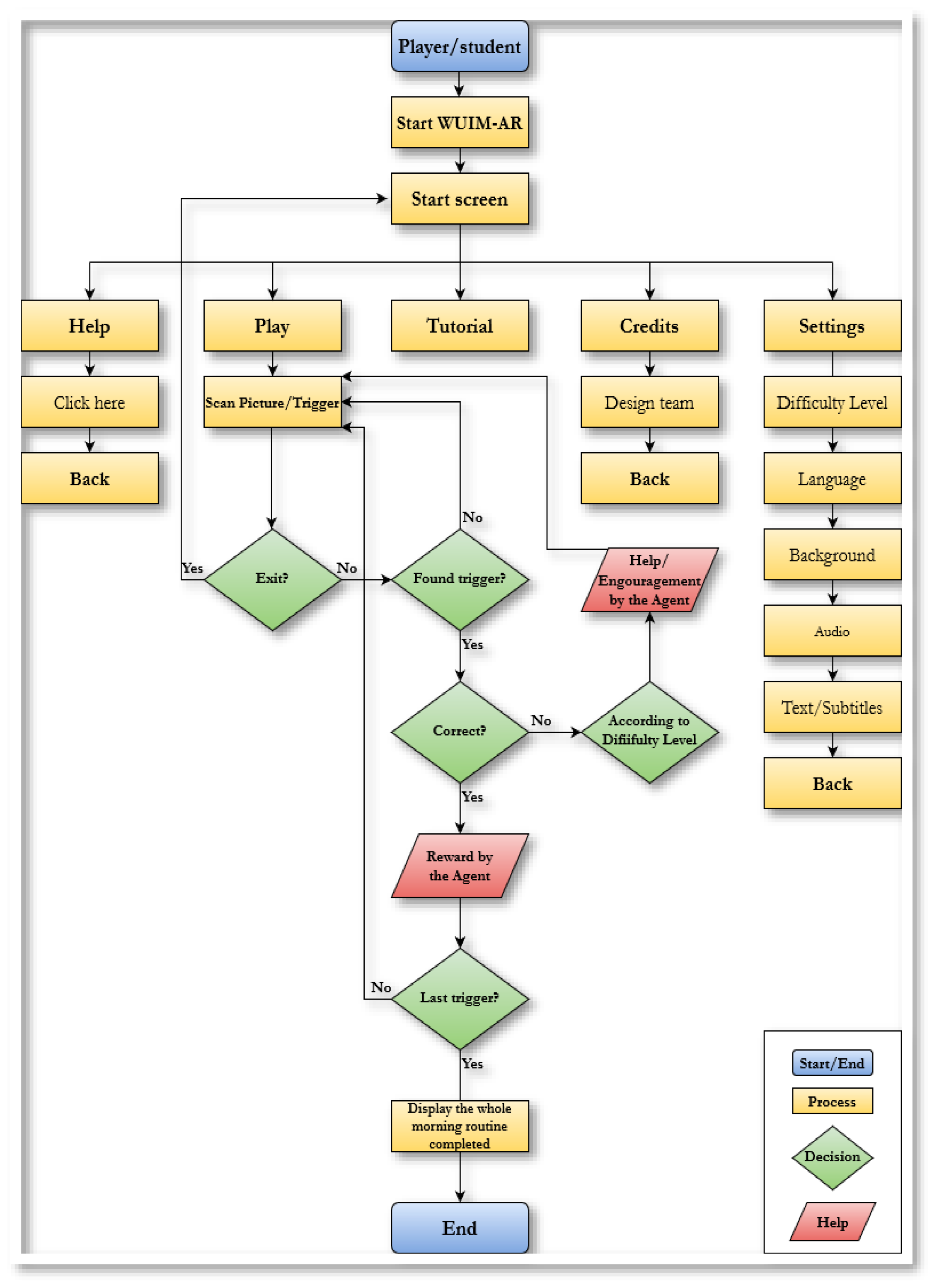

- For the AR game, we proceeded as follows: We set the winning conditions and other game parameters. The AR game is more directed than the VR version: its logic is serial, and its winning conditions differ from those of the VR game, which gives the player more freedom. After defining the images that serve as AR targets and editing them so that the cameras of tablets and smartphones can recognize them according to Vuforia specifications, we integrated them into the game development engine (Unity). After configuring the code and data and retesting on devices, the application was ready for distribution.

- WUIM-AR Development

- WUIM-VR Development
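
The contrast drawn above between the serial AR logic and the freer VR logic can be illustrated with a small sketch. This is not the project's Unity code. It assumes, for illustration only, that the AR game is won by completing the activities in a fixed order and that the VR game is won once every activity has been completed in any order. The class names (`SerialGame`, `FreeGame`) and the activity names in `ROUTINE` are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical morning-routine steps; the source does not list the actual activities.
ROUTINE = ["wake_up", "wash_face", "brush_teeth", "get_dressed"]


@dataclass
class SerialGame:
    """AR-style logic (assumed): steps are accepted only in the fixed order."""
    steps: list
    position: int = 0

    def attempt(self, step: str) -> bool:
        # Accept the step only if it is the next one expected.
        if self.position < len(self.steps) and step == self.steps[self.position]:
            self.position += 1
            return True
        return False

    def won(self) -> bool:
        return self.position == len(self.steps)


@dataclass
class FreeGame:
    """VR-style logic (assumed): steps may be completed in any order."""
    steps: list
    done: set = field(default_factory=set)

    def attempt(self, step: str) -> bool:
        # Accept any known step that has not been completed yet.
        if step in self.steps and step not in self.done:
            self.done.add(step)
            return True
        return False

    def won(self) -> bool:
        return self.done == set(self.steps)
```

Under these assumptions, calling `attempt("brush_teeth")` first is rejected by `SerialGame` but accepted by `FreeGame`, which is one way to express the difference in winning conditions.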

3.4. Game Logic—Gameplay

3.4.1. WUIM-Puzzle and WUIM-AR Gameplay

3.4.2. WUIM-VR Gameplay

4. Formative User and Expert Evaluation

- User-based methods, which include focus groups, interviews, questionnaires, experiments, observation, and bio-physiological measurements,

- Expert-based methods such as heuristic evaluations,

- Automated methods like telemetry analysis, and

- Specialized methods for the evaluation of social game play or action games.

- Research Question 1 (RQ1): Does WUIM meet the seven criteria that shape the user experience? Is it useful, usable, findable, creditable, desirable, accessible, and valuable?

- Research Question 2 (RQ2): Is WUIM a smart learning environment? In more detail, does it exhibit features such as effectiveness, efficiency, scalability, autonomy, engagement, flexibility, adaptiveness, personalization, conversationality, reflectiveness, innovation, and self-organization?

4.1. Participants

4.2. Data Collection

- Content: subjective adequacy of feedback, subjective adequacy of educational material, subjective clarity of learning objectives, subjective quality of the narration.

- Technical characteristics: subjective usability/playability (functionality), subjective audiovisual experience/aesthetics, subjective realism.

- User state of mind: presence/immersion, pleasure.

- Characteristics that allow learning: subjective relevance to personal interests, and motivation.

4.3. Procedure

- First, the researchers gave the students the more difficult puzzles (square pieces). The students were not given additional information regarding the content and the narration, in order to examine whether they would be able to place the activities in order without help. If they had difficulty, they were given the easiest version, the one with multiple shapes.

- They were then given the tablets. The researchers told the children to press the symbol “a face brushing its teeth” on the screen. This symbol is the WUIM-AR app start button (Figure 32). Afterwards, the researchers told the children to follow the pedagogical agent’s instructions. After finishing the puzzle and AR games, participants either completed the questionnaire or were interviewed.

- Then, they moved on to the VR application. Because special headset devices were used, the process focused on how to fit the smartphones into the headsets, wear them, and start the game. The participants were then allowed to navigate freely. When they finished the game, they evaluated it.

5. Results

- RQ1: Does WUIM meet the seven criteria that shape the user experience? Is it useful, usable, findable, creditable, desirable, accessible, and valuable?

- User answers were positive for all seven factors that shape the UX.

- Indicatively, we summarize user comments during the observation by the researchers (think-aloud protocol):

- “When I was playing the games, I did not catch a real toothbrush but I caught the wooden pieces and imagined that I was catching a real one” (Irini, a girl with moderate intellectual disability and mental age of 7 years)

- “I liked the tablet. I held a tablet for the first time in my life and it was easy” (Vasso, a girl with moderate intellectual disability and mental age of 9 years)

- “I did not feel stressed with the VR Head-Mounted glasses. On the contrary, I found them fascinating” (Panayiotis, a boy with spastic cerebral palsy, difficulty with fine motor skills, and without intellectual disability). In addition, the researchers observed that as long as Panayiotis wore the HMD, his involuntary muscle spasms decreased significantly.

- “I really like the technology but I would like an aid to hold the tablet more steadily” (Alexis, a boy with spastic cerebral palsy, difficulty with fine motor skills, and without intellectual disability).

- RQ2: Is WUIM a smart learning environment? In more detail, does it exhibit features such as effectiveness, efficiency, scalability, autonomy, engagement, flexibility, adaptiveness, personalization, conversationality, reflectiveness, innovation, and self-organization?

- When therapists were asked whether they considered WUIM effective, efficient, scalable, autonomous, engaging, flexible, adaptive, personalized, reflective, innovative, and self-directed, and whether they would apply similar training materials, they were initially hesitant. The therapists had no gaming experience, had not been introduced to either virtual or augmented reality, and had not been involved in designing and developing content with innovative technologies. When they were informed that they did not need special programming knowledge for content development, they showed particular interest in creating their own puzzles.

6. Limitations and Future Work

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Factor | Statements |
|---|---|
| Presence | I was deeply concentrated in the game; If someone was talking to me, I couldn’t hear him; I forgot about time passing while playing the game |
| Enjoyment | I think the game was fun; I felt bored while playing the game *; It felt good to successfully complete the tasks in this game |
| Subjective learning effectiveness | I felt that this game can ease the way I learn; This game made learning more interesting; I will definitely try to apply the knowledge I learned with this game |
| Subjective narratives’ adequacy | I was captivated by the game’s story from the beginning; I enjoyed the story provided by the game; I could clearly understand the game’s story |
| Subjective realism | There were times when the virtual objects seemed to be as real as the real ones; When I played the game, the virtual world was more real than the real world |
| Subjective feedback’s adequacy | I received immediate feedback on my actions; I received information on my success (or failure) on the intermediate goals immediately |
| Subjective audiovisual adequacy | I enjoyed the sound effects in the game; I think the game’s audio fits the mood or style of the game; I enjoyed the game’s graphics; I think the game’s graphics fit the mood or style of the game |
| Subjective relevance to personal interests | The content of this game was relevant to my interests; I could relate the content of this game to things I have seen, done, or thought about in my own life; It is clear to me how the content of the game is related to things I already know |
| Subjective goals’ clarity | The game’s goals were presented at the beginning of the game; The game’s goals were presented clearly |
| Subjective ease of use | I think it was easy to learn how to use the game; I imagine that most people will learn to use this game very quickly; I felt that I needed help from someone else in order to use the game because it was not easy for me to understand how to control the game * |
| Subjective adequacy of the learning material | In some cases, there was so much information that it was hard to remember the important points *; The exercises in this game were too difficult * |
| Motivation | This game did not hold my attention *; When using the game, I did not have the impulse to learn more about the learning subject *; The game did not motivate me to learn * |
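
The asterisks in the table appear to flag negatively worded statements. The text here does not state the response scale or the scoring rule, so the following is only a sketch under two explicit assumptions: a 5-point Likert scale, and reverse-scoring of the starred items before averaging per factor. The names `SCALE_MAX`, `item_score`, and `factor_score` are hypothetical.

```python
from statistics import mean

# Assumption: responses are on a 1..5 Likert scale (not stated in the source).
SCALE_MAX = 5


def item_score(response: int, reverse: bool) -> int:
    """Return the score of one item, reverse-scoring it if it is starred (assumed rule)."""
    if not 1 <= response <= SCALE_MAX:
        raise ValueError(f"response must be between 1 and {SCALE_MAX}")
    return SCALE_MAX + 1 - response if reverse else response


def factor_score(responses: list) -> float:
    """Average the item scores of one factor.

    `responses` is a list of (response, is_starred) pairs.
    """
    return mean(item_score(r, starred) for r, starred in responses)


# Example for the three Enjoyment items: fun = 5, bored (starred) = 1, felt good = 4.
enjoyment = factor_score([(5, False), (1, True), (4, False)])
```

With this rule, a low rating on a starred item such as "I felt bored while playing the game" contributes a high score to its factor, so that higher factor scores consistently indicate a more positive experience.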

References

- Legg, S.; Hutter, M. A Collection of Definitions of Intelligence. In Proceedings of the 2007 conference on Advances in Artificial General Intelligence: Concepts, Architectures and Algorithms: Proceedings of the AGI Workshop 2006; IOS Press: Amsterdam, The Netherlands, 2007; Available online: https://dl.acm.org/doi/10.5555/1565455.1565458 (accessed on 20 May 2021).

- Spector, J.M. Conceptualizing the emerging field of smart learning environments. Smart Learn. Environ. 2014, 1, 2. [Google Scholar] [CrossRef] [Green Version]

- Kaimara, P.; Deliyannis, I. Why Should I Play This Game? The Role of Motivation in Smart Pedagogy. In Didactics of Smart Pedagogy; Daniela, L., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 113–137. [Google Scholar]

- Zhu, Z.-T.; Yu, M.-H.; Riezebos, P. A research framework of smart education. Smart Learn. Environ. 2016, 3, 4. [Google Scholar] [CrossRef] [Green Version]

- Kaimara, P.; Poulimenou, S.-M.; Oikonomou, A.; Deliyannis, I.; Plerou, A. Smartphones at schools? Yes, why not? Eur. J. Eng. Res. Sci. 2019, 1–6. [Google Scholar] [CrossRef]

- Kaimara, P.; Oikonomou, A.C.; Deliyannis, I.; Papadopoulou, A.; Miliotis, G.; Fokides, E.; Floros, A. Waking up in the morning (WUIM): A transmedia project for daily living skills training. Technol. Disabil. 2021, 33, 137–161. [Google Scholar] [CrossRef]

- Deliyannis, I.; Kaimara, P. Developing Smart Learning Environments Using Gamification Techniques and Video Game Technologies. In Didactics of Smart Pedagogy; Daniela, L., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 285–307. ISBN 9783030015510. [Google Scholar]

- Poulimenou, S.-M.; Kaimara, P.; Papadopoulou, A.; Miliotis, G.; Deliyannis, I. Tourism policies for communicating World Heritage Values: The case of the Old Town of Corfu in Greece. In Proceedings of the 16th NETTIES CONFERENCE: Access to Knowledge in the 21st Century the Interplay of Society, Education, ICT and Philosophy; IAFeS—International Association for eScience, Ed.; IAFeS: Wien, Austria, 2018; Volume 6, pp. 187–192. [Google Scholar]

- Poulimenou, S.-M.; Kaimara, P.; Deliyannis, I. Promoting Historical and Cultural Heritage through Interactive Storytelling Paths and Augmented Reality [Aνάδειξη της Ιστορικής Και Πολιτιστικής Κληρονομιάς μέσω Διαδραστικών Διαδρομών Aφήγησης και Επαυξημένη Πραγματικότητα]. In Proceedings of the 2nd Pan-Hellenic Conference on Digital Cultural Heritage-EuroMed, Volos, Greece, 1–3 December 2017; pp. 627–636. (In Greek). [Google Scholar]

- Kaimara, P.; Renessi, E.; Papadoloulos, S.; Deliyannis, I.; Dimitra, A. Fairy tale meets ICT: Vitalizing professions of the past [Το παραμύθι συναντά τις ΤΠΕ: ζωντανεύοντας τα επαγγέλματα του παρελθόντος]. In Proceedings of the 3rd International Conference in Creative Writing, Corfu, Greece, 6–8 October 2017; Kotopoulos, T.H., Nanou, V., Eds.; Postgraduate Programme ‘Creative Writing’—University of Western Macedonia: Corfu, Greece, 2018; pp. 292–318. ISBN 978-618-81047-9-2. (In Greek) [Google Scholar]

- Deliyannis, I.; Poulimenou, S.-M.; Kaimara, P.; Filippidou, D.; Laboura, S. BRENDA Digital Tours: Designing a Gamified Augmented Reality. Application to Encourage Gastronomy Tourism and local food exploration. In Proceedings of the 2nd International Conference of Cultural Sustainable Tourism, Maia, Portugal, 13–15 October 2020. [Google Scholar]

- Poulimenou, S.-M.; Kaimara, P.; Deliyannis, I. World Heritage Monuments Management Planning of in the light of UN Sustainable Development Goals: The case of the Old Town of Corfu. In Proceedings of the 4th International Conference on “Cities’ Identity through Architecture and Arts”, Pisa, Italy, 14–16 December 2020. [Google Scholar]

- Sims, R. An interactive conundrum: Constructs of interactivity and learning theory. Australas. J. Educ. Technol. 2000, 16, 45–57. [Google Scholar] [CrossRef] [Green Version]

- Kaimara, P.; Poulimenou, S.-M.; Deliyannis, I. Digital learning materials: Could transmedia content make the difference in the digital world? In Epistemological Approaches to Digital Learning in Educational Contexts; Daniela, L., Ed.; Routledge: London, UK, 2020; pp. 69–87. [Google Scholar]

- UNESCO. World Conference on Special Needs Education: Access and Quality. Final Report; United Nations Educational, Scientific and Cultural Organization & International Bureau of Education: Paris, France, 1994. [Google Scholar]

- Tomlinson, C.A.; Brighton, C.; Hertberg, H.; Callahan, C.M.; Moon, T.R.; Brimijoin, K.; Conover, L.A.; Reynolds, T. Differentiating Instruction in Response to Student Readiness, Interest, and Learning Profile in Academically Diverse Classrooms: A Review of Literature. J. Educ. Gift. 2003, 27, 119–145. [Google Scholar] [CrossRef] [Green Version]

- Mayer, R. Introduction to Multimedia Learning. In The Cambridge Handbook of Multimedia Learning; Mayer, R., Ed.; Cambridge University Press: Cambridge, UK, 2014; pp. 1–24. [Google Scholar]

- Moreno, R.; Mayer, R. Interactive Multimodal Learning Environments. Educ. Psychol. Rev. 2007, 19, 309–326. [Google Scholar] [CrossRef]

- Meyer, A.; Rose, D.H.; Gordon, D. CAST: Universal Design for Learning: Theory & Practice. Available online: http://udltheorypractice.cast.org/home?5 (accessed on 29 May 2021).

- CAST Universal Design for Learning Guidelines Version 2.2. Available online: http://udlguidelines.cast.org (accessed on 29 May 2021).

- Rodrigues, P.; Bidarra, J. Transmedia Storytelling as an Educational Strategy: A Prototype for Learning English as a Second Language. Int. J. Creat. Interfaces Comput. Graph. 2016, 7, 56–67. [Google Scholar] [CrossRef]

- Kaimara, P.; Deliyannis, I.; Oikonomou, A.; Miliotis, G. Transmedia storytelling meets Special Educational Needs students: A case of Daily Living Skills Training. In Proceedings of the 4th International Conference on Creative Writing Conference, 12–15 September 2019, Florina, Greece; Kotopoulos, T.H., Vakali, A.P., Eds.; Postgraduate Programme ‘Creative Writing’—University of Western Macedonia: Florina, Greece, 2021; pp. 542–561. ISBN 978-618-5613-00-6. [Google Scholar]

- Spector, J.M. The potential of smart technologies for learning and instruction. Int. J. Smart Technol. Learn. 2016, 1, 21. [Google Scholar] [CrossRef]

- Daniela, L.; Lytras, M.D. Editorial: Themed issue on enhanced educational experience in virtual and augmented reality. Virtual Real. 2019, 23, 325–327. [Google Scholar] [CrossRef]

- Ertmer, P.A.; Newby, T.J. Behaviorism, Cognitivism, Constructivism: Comparing Critical Features From an Instructional Design Perspective. Perform. Improv. Q. 2013, 26, 43–71. [Google Scholar] [CrossRef]

- Dewey, J. Democracy and Education: An Introduction to the Philosophy of Education; Macmillan: New York, NY, USA, 1922; ISBN 9780199272532. [Google Scholar]

- Chi, M.T.H.; Wylie, R. The ICAP Framework: Linking Cognitive Engagement to Active Learning Outcomes. Educ. Psychol. 2014, 49, 219–243. [Google Scholar] [CrossRef]

- Deliyannis, I. The Future of Television-Convergence of Content and Technology; Deliyannis, I., Ed.; IntechOpen: London, UK, 2019. [Google Scholar]

- Fisch, S.M. Children’s Learning from Educational Television; Routledge: New York, NU, USA, 2014; ISBN 9781410610553. [Google Scholar]

- Li, K.C.; Wong, B.T.-M. Review of smart learning: Patterns and trends in research and practice. Australas. J. Educ. Technol. 2021, 37, 189–204. [Google Scholar] [CrossRef]

- Salen, K.; Zimmerman, E. Rules of Play-Game Design Fundamentals; Massachusetts Institute of Technology: Cambridge, MA, USA, 2004; ISBN 0-262-24045-9. [Google Scholar]

- Klopfer, E.; Osterweil, S.; Salen, K. Moving Learning Games Forward, Obstacles Opportunities & Openness; The Education Arcade: Cambridge, MA, USA, 2009. [Google Scholar]

- Balducci, F.; Grana, C. Affective Classification of Gaming Activities Coming from RPG Gaming Sessions. In E-Learning and Games. Edutainment 2017; Tian, F., Gatzidis, C., El Rhalibi, A., Tang, W., Charles, F., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10345. [Google Scholar] [CrossRef] [Green Version]

- Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From game design elements to gamefulness. In Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments; ACM Press: New York, NY, USA, 2011; pp. 9–15. [Google Scholar]

- Prensky, M. Digital Game-Based Learning; Paragon House: St. Paul, MN, USA, 2007. [Google Scholar]

- Morschheuser, B.; Hassan, L.; Werder, K.; Hamari, J. How to design gamification? A method for engineering gamified software. Inf. Softw. Technol. 2018, 95, 219–237. [Google Scholar] [CrossRef] [Green Version]

- Plass, J.L.; Homer, B.D.; Kinzer, C.K. Foundations of Game-Based Learning. Educ. Psychol. 2015, 50, 258–283. [Google Scholar] [CrossRef]

- Nicholson, S. A User-Centered Theoretical Framework for Meaningful Gamification. In Proceedings of the GlLS 8.0 Games + Learning + Society Conference, Madison, WI, USA, 13–15 June 2012; Martin, C., Ochsner, A., Squire, K., Eds.; ETC Press: Madison, WI, USA, 2012; pp. 223–230. [Google Scholar]

- Daniela, L. Epistemological Approaches to Digital Learning in Educational Contexts; Daniela, L., Ed.; Routledge: London, UK, 2020; ISBN 9780429319501. [Google Scholar]

- Landrum, T.J.; McDuffie, K.A. Learning styles in the age of differentiated instruction. Exceptionality 2010, 18, 6–17. [Google Scholar] [CrossRef]

- Tomlinson, C.A. Differentiation of Instruction in the Elementary Grades; Report No. ED 443572; ERIC Clearinghouse on Elementary and Early Childhood Education: Champaign, IL, USA, 2000.

- Oviatt, S. Advances in robust multimodal interface design. IEEE Comput. Graph. Appl. 2003, 23, 62–68. [Google Scholar] [CrossRef]

- Jonassen, D.H. Technology as cognitive tools: Learners as designers. ITForum Pap. 1994, 1, 67–80. [Google Scholar]

- Montessori, M. The Montessori Method: Scientific Pedagogy as Applied Child Education in ‘The Children’s Houses’, with Additions and Revisions by the Author; Frederick A Stokes Company: New York, NY, USA, 1912. [Google Scholar]

- Tindall-Ford, S.; Chandler, P.; Sweller, J. When two sensory modes are better than one. J. Exp. Psychol. Appl. 1997, 3, 257–287. [Google Scholar] [CrossRef]

- Clark, J.M.; Paivio, A. Dual coding theory and education. Educ. Psychol. Rev. 1991, 3, 149–210. [Google Scholar] [CrossRef] [Green Version]

- Kanellopoulou, C.; Kermanidis, K.L.; Giannakoulopoulos, A. The Dual-Coding and Multimedia Learning Theories: Film Subtitles as a Vocabulary Teaching Tool. Educ. Sci. 2019, 9, 210. [Google Scholar] [CrossRef] [Green Version]

- Sadoski, M. A Dual Coding View of Vocabulary Learning. Read. Writ. Q. 2005, 21, 221–238. [Google Scholar] [CrossRef]

- Woodin, S. SUITCEYES (Smart, User-friendly, Interactive, Tactual, Cognition-Enhancer That Yields Extended Sensosphere) Scoping Report on Law and Policy on Deafblindness, Disability and New Technologies; United Kingdom: SUITCEYES-European Union’s Horizon 2020 Programme. 2020. Available online: https://www.hb.se/en/research/research-portal/projects/suitceyes-/ (accessed on 2 June 2021).

- Mayer, R.E. Applying the science of learning to medical education. Med. Educ. 2010, 44, 543–549. [Google Scholar] [CrossRef]

- Scolari, C.A. Transmedia Storytelling: Implicit Consumers, Narrative Worlds, and Branding in Contemporary Media Production. Int. J. Commun. 2009, 3, 586–606. [Google Scholar]

- Herr-Stephenson, B.; Alper, M.; Reilly, E.; Jenkins, H. T Is for Transmedia: Learning through Transmedia Play; USC Annenberg Innovation Lab and the Joan Ganz Cooney Center at Sesame Workshop: Los Angeles, CA, USA; New York, NY, USA, 2013. [Google Scholar]

- Jenkins, H. Wandering through the Labyrinth: An Interview with USC’s Marsha Kinder. Int. J. Transmedia Lit. 2015, 1, 253–275. [Google Scholar]

- López-Varela Azcárate, A. Transmedial Ekphrasis. From Analogic to Digital Formats. IJTL Int. J. Transmedia Lit. 2015, 45–66. [Google Scholar] [CrossRef]

- Lygkiaris, M.; Deliyannis, I. Aνάπτυξη Παιχνιδιών: Σχεδιασμός Διαδραστικής Aφήγησης Θεωρίες, Τάσεις και Παραδείγματα [Game Development: Designing Interactive Narrative Theories, Trends and Examples]; Fagottobooks: Athens, Greece, 2017; ISBN 9789606685750. [Google Scholar]

- Robin, B.R.; McNeil, S.G. What educators should know about teaching digital storytelling. Digit. Educ. Rev. 2012, 22, 37–51. [Google Scholar] [CrossRef]

- Fleming, L. Expanding Learning Opportunities with Transmedia Practices: Inanimate Alice as an Exemplar. J. Media Lit. Educ. 2013, 5, 370–377. [Google Scholar]

- Pence, H.E. Teaching with Transmedia. J. Educ. Technol. Syst. 2011, 40, 131–140. [Google Scholar] [CrossRef]

- Alper, M.; Herr-Stephenson, R. Transmedia Play: Literacy across Media. J. Media Lit. Educ. 2013, 5, 366–369. [Google Scholar]

- Jenkins, H. Transmedia Storytelling and Entertainment: An annotated syllabus. Continuum 2010, 24, 943–958. [Google Scholar] [CrossRef]

- Scolari, C.A.; Lugo Rodríguez, N.; Masanet, M. Transmedia Education. From the contents generated by the users to the contents generated by the students. Rev. Lat. Comun. Soc. 2019, 74, 116–132. [Google Scholar] [CrossRef] [Green Version]

- Nordmark, S.; Milrad, M. Tell Your Story About History: A Mobile Seamless Learning Approach to Support Mobile Digital Storytelling (mDS). In Seamless Learning in the Age of Mobile Connectivity; Springer: Singapore, 2015; pp. 353–376. [Google Scholar]

- Teske, P.R.J.; Horstman, T. Transmedia in the classroom: Breaking the fourth wall. In Proceedings of the 16th International Academic MindTrek Conference (MindTrek ’12); Association for Computing Machinery, Ed.; ACM Press: New York, NY, USA, 2012; pp. 5–9. [Google Scholar] [CrossRef]

- Aiello, P.; Carenzio, A.; Carmela, D.; Gennaro, D.; Tore, S.D.; Sibilio, M. Transmedia Digital Storytelling to Match Students’ Cognitive Styles in Special Education. Research Educ. Media 2013, 5, 123–134. [Google Scholar]

- Kaimara, P.; Miliotis, G.; Deliyannis, I.; Fokides, E.; Oikonomou, A.; Papadopoulou, A.; Floros, A. Waking-up in the morning: A gamified simulation in the context of learning activities of daily living. Technol. Disabil. 2019, 31, 195–198. [Google Scholar] [CrossRef] [Green Version]

- Kaimara, P.; Deliyannis, I.; Oikonomou, A.; Fokides, E.; Miliotis, G. An innovative transmedia-based game development method for inclusive education. Digit. Cult. Educ. 2021, in press. [Google Scholar]

- Soegaard, M. The Basic of User Experience Design; Interaction Design Foundation; Available online: https://www.interaction-design.org/ebook (accessed on 30 May 2021).

- United Nations General Comment No. 6 (2018) on Equality and Non-Discrimination; UN Committee on the Rights of Persons with Disabilities: Geneva, Switzerland, 2018.

- Kaimara, P.; Fokides, E.; Oikonomou, A.; Deliyannis, I. Undergraduate students’ attitudes towards collaborative digital learning games. In Proceedings of the 2nd International Conference Digital Culture and AudioVisual Challenges, Interdisciplinary Creativity in Arts and Technology, Corfu, Greece, 10–11 May 2019; pp. 63–64. [Google Scholar]

- Fokides, E.; Kaimara, P. Future teachers’ views on digital educational games [Oι απόψεις των μελλοντικών εκπαιδευτικών για τα ψηφιακά εκπαιδευτικά παιχνίδια]. Themes Sci. Technol. Educ. 2020, 13, 83–95. (In Greek) [Google Scholar]

- Kaimara, P.; Fokides, E. Future teachers’ views on digital educational games [Oι απόψεις των μελλοντικών εκπαιδευτικών για τα ψηφιακά εκπαιδευτικά παιχνίδια]. In Proceedings of the 2nd Pan-Hellenic Conference: Open Educational Resources and E-Learning, Korinthos, Greece, 13–14 December 2019; Jimoyiannis, A., Tsiotakis, P., Eds.; Department of Social and Education Policy of the University of Peloponnese & Hellenic Association of ICT in Education (HAICTE): Korinthos, Greece, 2019; p. 41. (In Greek) [Google Scholar]

- Kaimara, P.; Fokides, E.; Oikonomou, A.; Deliyannis, I. Potential Barriers to the Implementation of Digital Game-Based Learning in the Classroom: Pre-service Teachers’ Views. Technol. Knowl. Learn. 2021. [Google Scholar] [CrossRef]

- Pratten, R. Getting Started withTransmedia Storytelling: A Practical Guide for Beginners; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2015; ISBN 1515339165. [Google Scholar]

- Bloom, B.S. Taxonomy of educational objectives: The classification of educational goals. In Handbook I: Cognitive Domain; Engelhart, M.D., Furst, E.J., Hill, W.H., Krathwohl, D.R., Eds.; McKay: New York, NY, USA, 1956. [Google Scholar]

- Lowyck, J. Bridging Learning Theories and Technology-Enhanced Environments: A Critical Appraisal of Its History. In Handbook of Research on Educational Communications and Technology; Spector, J.M., Merrill, M.D., Elen, J., Bishop, M.J., Eds.; Springer: New York, NY, USA, 2014; pp. 3–20. ISBN 978-1-4614-3184-8. [Google Scholar]

- Pratt, C.; Steward, L. Applied Behavior Analysis: The Role of Task Analysis and Chaining. Available online: https://www.iidc.indiana.edu/pages/Applied-Behavior-Analysis (accessed on 25 May 2021).

- Bruner, J.S.; Watson, R. Child’s Talk: Learning to Use Language; Oxford University Press: New York, NY, USA, 1983; ISBN 198576137. [Google Scholar]

- Lillard, A.S. Playful learning and Montessori education. Am. J. Play 2013, 5, 157–186.

- Vygotsky, L. Mind in Society: The Development of Higher Psychological Processes, 2nd ed.; Cole, M., John-Steiner, V., Scribner, S., Souberman, E., Eds.; Harvard University Press: Cambridge, MA, USA, 1978; ISBN 0-674-57628-4.

- Bach, H. Composing a Visual Narrative Inquiry. In Handbook of Narrative Inquiry: Mapping a Methodology; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 2007; pp. 280–307. ISBN 1456564684.

- Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1994, 101, 343–352.

- Pezdek, K.; Chen, H.C. Developmental differences in the role of detail in picture recognition memory. J. Exp. Child Psychol. 1982, 33, 207–215.

- Ke, F. Designing and integrating purposeful learning in game play: A systematic review. Educ. Technol. Res. Dev. 2016, 64, 219–244.

- Panagopoulos, I. Reshaping Contemporary Greek Cinema through a Re-Evaluation of the Historical and Political Perspective of Theo Angelopoulos’s Work. Ph.D. Thesis, University of Central Lancashire, Preston, UK, 2019.

- Murphy, C. Why Games Work and the Science of Learning. In Proceedings of the MODSIM World 2011 Conference and Expo ‘Overcoming Critical Global Challenges with Modeling & Simulation’; NASA Conference Publication: Virginia Beach, VA, USA, 2012; pp. 383–392.

- Fotaris, P.; Mastoras, T. Escape Rooms for Learning: A Systematic Review. In Proceedings of the 13th European Conference on Game Based Learning, Odense, Denmark, 3–4 October 2019; Elbæk, L., Ed.; Academic Conferences Ltd.: Reading, UK, 2019; pp. 235–243.

- Dalgarno, B.; Lee, M.J.W. What are the learning affordances of 3-D virtual environments? Br. J. Educ. Technol. 2010, 41, 10–32.

- Faizan, N.D.; Löffler, A.; Heininger, R.; Utesch, M.; Krcmar, H. Classification of Evaluation Methods for the Effective Assessment of Simulation Games: Results from a Literature Review. Int. J. Eng. Pedagog. 2019, 9, 19.

- Fokides, E.; Atsikpasi, P.; Kaimara, P.; Deliyannis, I. Let players evaluate serious games. Design and validation of the Serious Games Evaluation Scale. Int. Comput. Games Assoc. ICGA 2019, 41, 116–137.

- Bernhaupt, R. User Experience Evaluation Methods in the Games Development Life Cycle. In Game User Experience Evaluation. Human–Computer Interaction Series; Bernhaupt, R., Ed.; Springer: Cham, Switzerland, 2015; pp. 1–8.

- Mirza-Babaei, P.; Nacke, L.; Gregory, J.; Collins, N.; Fitzpatrick, G. How does it play better? Exploring User Testing and Biometric Storyboards in Games User Research. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; ACM: New York, NY, USA, 2013; pp. 1499–1508.

- Nacke, L.; Drachen, A.; Göbel, S. Methods for Evaluating Gameplay Experience in a Serious Gaming Context. Int. J. Comput. Sci. Sport 2010, 9, 40–51.

- Almeida, P.; Abreu, J.; Silva, T.; Varsori, E.; Oliveira, E.; Velhinho, A.; Fernandes, S.; Guedes, R.; Oliveira, D. Applications and Usability of Interactive Television. In Communications in Computer and Information Science, Proceedings of the 6th Iberoamerican Conference, JAUTI 2017, Aveiro, Portugal, 12–13 October 2017; Abásolo, M.J., Abreu, J., Almeida, P., Silva, T., Eds.; Springer: Cham, Switzerland, 2018; Volume 813, pp. 44–57.

- Bernhaupt, R.; Mueller, F. ‘Floyd’ Game User Experience Evaluation. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems—CHI EA ’16; ACM Press: New York, NY, USA, 2016; pp. 940–943.

- Kaimara, P.; Fokides, E.; Oikonomou, A.; Atsikpasi, P.; Deliyannis, I. Evaluating 2D and 3D serious games: The significance of student-player characteristics. Dialogoi Theory Prax. Educ. 2019, 5, 36–56.

- World Health Organization. International Statistical Classification of Diseases and Related Health Problems, 10th Revision (ICD-10), 5th ed.; World Health Organization: Geneva, Switzerland, 2016.

- Brooke, J. SUS: A ‘quick and dirty’ usability scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, B.A., McClelland, I.L., Eds.; Taylor & Francis: London, UK, 1996; pp. 189–194.

- Fokides, E.; Atsikpasi, P.; Kaimara, P.; Deliyannis, I. Factors Influencing the Subjective Learning Effectiveness of Serious Games. J. Inf. Technol. Educ. Res. 2019, 18, 437–466.

- Kaimara, P.; Fokides, E.; Plerou, A.; Atsikpasi, P.; Deliyannis, I. Serious Games Effect Analysis On Player’s Characteristics. Int. J. Smart Educ. Urban Soc. 2020, 11, 75–91.

- Fokides, E.; Kaimara, P.; Deliyannis, I.; Atsikpasi, P. Development of a scale for measuring the learning experience in serious games. In Proceedings of the 1st International Conference Digital Culture and AudioVisual Challenges, Interdisciplinary Creativity in Arts and Technology, Corfu, Greece, 1–2 June 2018; Panagopoulos, M., Papadopoulou, A., Giannakoulopoulos, A., Eds.; CEUR-WS: Corfu, Greece, 2018; Volume 2811, pp. 181–186.

- Wen, Y. Augmented reality enhanced cognitive engagement: Designing classroom-based collaborative learning activities for young language learners. Educ. Technol. Res. Dev. 2021, 69, 843–860.

- Alzahrani, N.M. Augmented Reality: A Systematic Review of Its Benefits and Challenges in E-learning Contexts. Appl. Sci. 2020, 10, 5660.

- Badilla-Quintana, M.G.; Sepulveda-Valenzuela, E.; Salazar Arias, M. Augmented Reality as a Sustainable Technology to Improve Academic Achievement in Students with and without Special Educational Needs. Sustainability 2020, 12, 8116.

| Differentiated Instruction (DI) | Example |
|---|---|
| Content | Simultaneous presentation with audio and visual media. |
| Process | More time available for a student to complete a task, or encouragement of a more advanced student to look into an issue in greater depth. |
| Product | Providing more expression options (creating a puppet show, writing a letter, painting, etc.). |
| Learning environment | Provision of a place where students can work alone and quietly without disruptions or, instead, work collectively (collaborative learning). |

| No. | Principle | Description |
|---|---|---|
| 1 | Multimedia | People learn better from words and pictures than from words alone. |
| 2 | Modality | People learn better from graphics and narration than from graphics and on-screen (printed) text; that is, a multimedia message works better when the words are spoken rather than written. |
| 3 | Redundancy | People learn better from animation and narration alone than from animation, narration, and on-screen text (although narration alone may be difficult for some learners to follow, e.g., foreign-language learners or learners with certain auditory learning disabilities). |
| 4 | Segmenting | People learn better when a multimedia lesson is presented in user-paced segments rather than as a continuous unit. |
| 5 | Pre-training | People learn better from a multimedia lesson when they know the names and characteristics of the main concepts (they already know some of the basics). |
| 6 | Coherence | People learn better when extraneous material is excluded rather than included. |
| 7 | Signaling | People learn better when they are shown exactly what to pay attention to on the screen. |
| 8 | Spatial contiguity | People learn better when corresponding words and pictures are presented near rather than far from each other on the page or screen. |
| 9 | Temporal contiguity | People learn better when corresponding words and pictures are presented simultaneously rather than successively. |
| 10 | Personalization | People learn better when the words of a multimedia presentation are in conversational style rather than formal style. |
| 11 | Voice | People learn better when the words are spoken in a standard-accented (friendly) human voice rather than a machine voice or foreign-accented human voice. |
| 12 | Image | People do not necessarily learn better when the speaker’s image is on the screen. |

| No. | Principle | Description |
|---|---|---|
| 1 | Guided-discovery | People learn better when guidance is incorporated into discovery-based multimedia environments. |
| 2 | Worked-out example | People learn better when they receive worked-out examples in initial skill learning. |
| 3 | Collaboration | People can learn better with collaborative online learning activities. |
| 4 | Self-explanation | People learn better when they are encouraged to generate self-explanations during learning. |
| 5 | Animation and interactivity | People do not necessarily learn better from animation than from static diagrams. |
| 6 | Navigation | People learn better in hypertext environments when appropriate navigation aids are provided. |
| 7 | Site map | People can learn better in an online environment when the interface includes a map showing where the learner is in the lesson. |
| 8 | Prior knowledge | Instructional design principles that enhance multimedia learning for novices may hinder multimedia learning for more expert learners. |
| 9 | Cognitive aging | Instructional design principles that effectively expand working memory capacity are especially helpful for older learners. |

| | WUIM-AR Factor Groups | Students with SEND | Therapists |
|---|---|---|---|
| A | Content | 4.8 | 4.6 |
| B | Technical characteristics | 4.6 | 4.2 |
| C | State of mind | 4.3 | 4.3 |
| D | Characteristics that allow learning | 4.7 | 4.6 |

| | WUIM-VR Factor Groups | Students with SEND | Therapists |
|---|---|---|---|
| A | Content | 4.9 | 4.7 |
| B | Technical characteristics | 4.5 | 4.1 |
| C | State of mind | 4.8 | 4.7 |
| D | Characteristics that allow learning | 4.8 | 4.7 |

| | WUIM-AR | WUIM-VR |
|---|---|---|
| Students with SEND | 4.6 | 4.75 |
| Therapists | 4.4 | 4.55 |
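The overall scores in the last table appear consistent with a simple unweighted mean of the four factor-group scores (A to D) reported for each application and participant group. The sketch below checks that arithmetic. The unweighted average is an assumption, since the tables themselves do not state how the overall scores were aggregated, and the names `scores` and `overall_means` are illustrative only.

```python
from statistics import mean

# Factor-group scores (A, B, C, D) copied from the WUIM-AR and WUIM-VR
# factor-group tables above.
scores = {
    ("WUIM-AR", "Students with SEND"): [4.8, 4.6, 4.3, 4.7],
    ("WUIM-AR", "Therapists"): [4.6, 4.2, 4.3, 4.6],
    ("WUIM-VR", "Students with SEND"): [4.9, 4.5, 4.8, 4.8],
    ("WUIM-VR", "Therapists"): [4.7, 4.1, 4.7, 4.7],
}


def overall_means(factor_scores):
    """Return the unweighted mean of the factor-group scores per key.

    Assumption: every factor group carries equal weight.
    """
    return {key: mean(values) for key, values in factor_scores.items()}


if __name__ == "__main__":
    for (app, group), value in overall_means(scores).items():
        print(f"{app:8s} {group:20s} {value:.3f}")
```

Under this assumption the means are 4.6 and 4.425 for WUIM-AR (students and therapists, respectively) and 4.75 and 4.55 for WUIM-VR, which matches the overall table once 4.425 is rounded to 4.4.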

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kaimara, P.; Deliyannis, I.; Oikonomou, A.; Fokides, E. Waking Up In the Morning (WUIM): A Smart Learning Environment for Students with Learning Difficulties. Technologies 2021, 9, 50. https://doi.org/10.3390/technologies9030050
