1. Introduction

In a recent interview, Esther Wojcicki, who is currently vice-chair of the Creative Commons board of directors, shared her views about the digital revolution in the classroom. In her talk, she explains that future education should aim at exploiting digital technologies for project-based learning, further pointing out that 80% of student learning takes place outside the classroom as a consequence of the ‘actions and interactions’ that characterize these activities [1]. This is far from unexpected: learning to become an engineer in the 21st century is a process deeply grounded in ‘experiences’.

Constructivist theories describe how knowledge is deeply tied to action. Students need to be activated in their learning process, and the learning situation should give them the opportunity to take responsibility, make decisions and deal with reality. From a more practical viewpoint, engineering work today is no longer a matter of optimizing solutions for functionality or performance. Rather, engineering endeavors are characterized by increasingly complex and multifaceted values and should consider social, political, technological, cultural and environmental issues [2]. Furthermore, rapid changes in technology, climate change, mobility, inequality, and multicultural workplace environments are slowly transforming the understanding of what engineering is. To create sufficiently good solutions to complex technical problems, engineering students need to work with authentic challenges, to be exposed to proven practices and to interact with practitioners in different roles.

It is important for engineering educators to be aware of these global macro-trends in order to define how students should act and think like engineers in the world of tomorrow [3].

Recent educational initiatives, such as CDIO [4], describe these experiences as learning events that involve practical, hands-on activities capable of generating real-world, verifiable results. In these events, students “enter the learning situation with more or less articulate ideas about the topic at hand, some of which may be misconceptions” (see [5]). From this starting point, new concepts (i.e., learning) are derived from and continuously modified by experience. Borrowing an example from [6], approaching engineering is like exploring a foreign town: by consulting road maps, signs and other information, you begin creating a mental model of the city, which is refined and improved as new information becomes available.

An important aspect of both constructivism and CDIO is that learning is broader than what occurs in classrooms, and that class-bound situations often leave students without a full understanding of a subject (e.g., [7,8,9]). At the same time, CDIO strives to incorporate the principle of active learning [10] to a level that encompasses its application to real, industry-related engineering challenges [11]. Fostering ‘experiential learning’, by exposing students to situations that mimic those encountered by engineers in their daily profession [12,13], becomes a critical task for engineering educators when creating constructively aligned learning activities. Yet, it is generally difficult to assess what specific learning is fostered by outside-the-class activities, and the question remains of how to measure their effect on students’ learning with precision.

The main objective of this paper is to propose an approach (and a process) to measure the students’ perception of learning in Conceive-Design-Implement-Operate activities conducted outside the classroom. The approach is based on gathering and analyzing lessons learned from the students’ reflection reports at the end of a team-based innovation project performed in collaboration with selected company partners. The analysis of these data makes it possible for course coordinators and program managers to understand which aspects of learning are emphasized in outside-the-class activities, so as to tune these experiences in a way that leverages the intended learning outcomes of a course. Where these activities are conducted in cross-functional teams, mixing students from different disciplines and programs, the approach is also used to benchmark the learning perceptions of the different target groups, spotlighting the most significant gaps and areas of improvement.

The paper initially describes the main features of the approach, illustrating the process by which lessons learned are collected and categorized to measure ‘learning perception’ among the student population. It further exemplifies the approach with regard to the MT2554 Value Innovation course at Blekinge Institute of Technology, presenting the findings obtained at the second and third levels of the CDIO Syllabus 2.0, with an outlook on the main differences in perception among Industrial Engineering and Management (IE), Mechanical Engineering (ME) and international students in the course. The discussion section further elaborates on the benefits and limitations of the approach and reflects on its implementation in the MT2554 course. In the concluding section, the authors point to future directions for the development of the proposed approach.

3. Measuring Learning Perception: A ‘Lessons Learned’ Based Approach

The concept of ‘lessons learned’ is central to the development and application of the proposed approach to measure students’ learning perception.

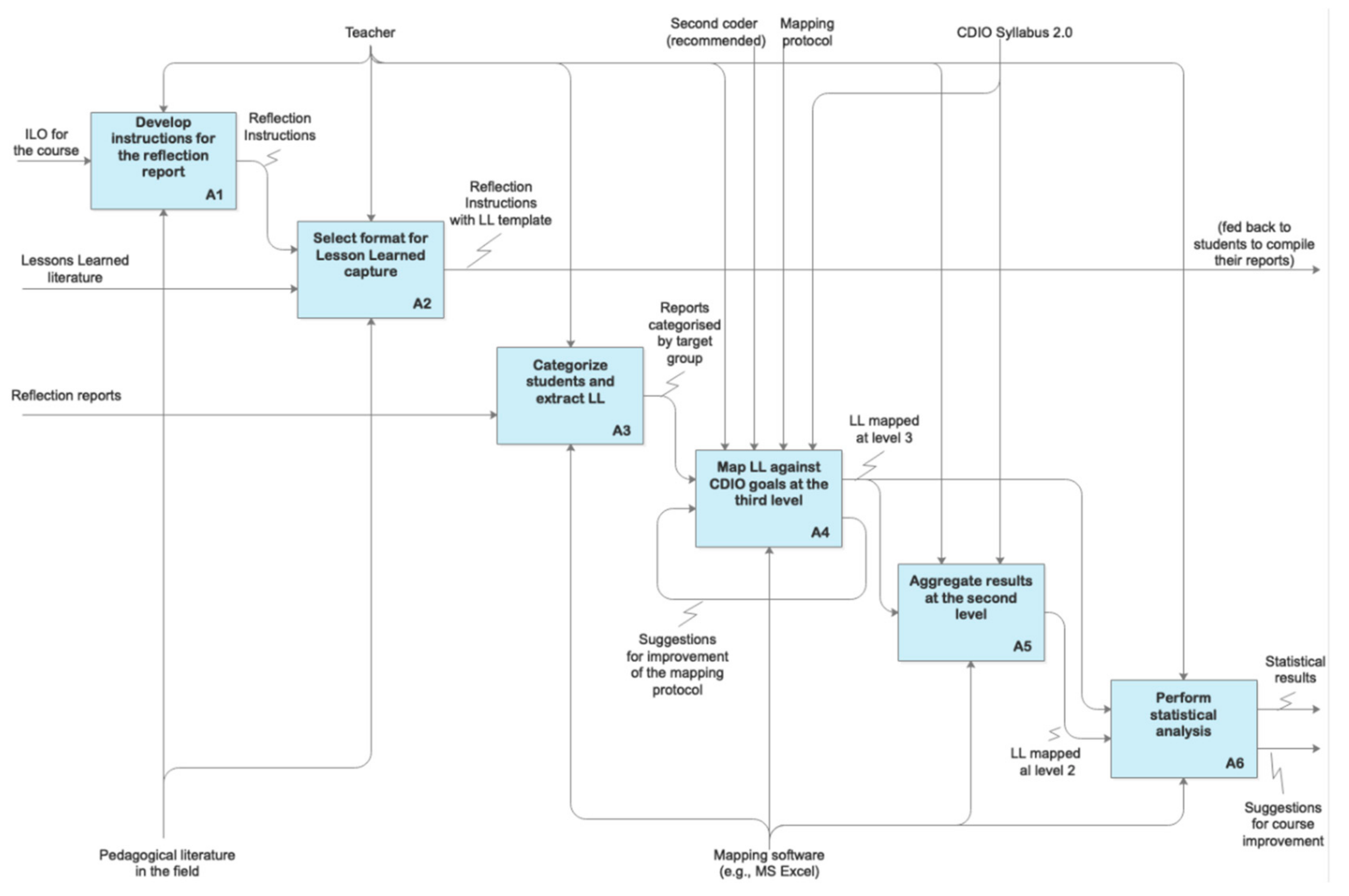

Figure 1 provides a description of the underlying process for capturing, categorizing and analyzing the lessons learned from the students’ reflection reports.

The process kicks off with the development of detailed instructions for the reflection report on the basis of the Intended Learning Outcomes (ILO) of the course. The report is the students’ last individual assignment in the course and aims at capturing learning at the highest levels of the SOLO taxonomy [20]. The instruction document features several tasks, which typically ask students to discuss the most/least valuable tools used in their projects or to elaborate on the best/worst decisions taken during the work. In a similar fashion, one task asks the students to elaborate on the Lessons Learned (LL) during the project work. Here, students are presented with the following question:

“Can you list three key lessons learned during the project work that you would share with future students in the course?”

In the instruction document for the assignment, students are introduced to the concept of lessons learned in more detail. Building on the definition provided by NASA (see [21]), lessons learned are defined as knowledge or understanding gained by experience (which may be positive, as in a successful test or mission, or negative, as in a mishap or failure) that should be actively taken into account in future projects. In this definition, LL are conceived as knowledge artifacts that convey experiential knowledge derived from the success or failure of a task, decision, or process, and that, when reused, can positively impact an organization’s performance [22]. Each lesson must be significant, having a real or assumed impact on operations. At the same time, it should be valid and factually/technically correct. Furthermore, it should be applicable, for instance to reduce or eliminate the potential for failures in a specific process, task or decision.

LL are of great importance in engineering design and product development. These are recognized as highly knowledge-intensive activities, and LL are a useful means to turn tacit knowledge into explicit knowledge (see [23]), to share experiential knowledge across time and space [24], and to reuse it from one project to another [25]. Several templates have been proposed to capture LL and contextualize them in real work activities to support decision-making (e.g., [26]). These templates commonly feature a section dedicated to background information on the project, as well as an abstract, a description of the conditions for reuse, other relevant details and useful references. The template used to capture LL from the students in the course project is adapted from the one proposed by Chirumalla et al. [27] in the aerospace sector and features seven main steps (Table 1).

Chirumalla et al. [27] explain that, as the first task, it is important to provide a quick summary of the LL, describing why it is important. For this reason, at Step 0 students are asked to briefly recapitulate the main points of their lesson in a short statement. Step 1 guides students in deepening the description of their lesson, focusing on the background and environmental conditions of the task at hand. Step 2 is concerned with providing information about how a task was executed, how specific tools were used and which circumstances affected the execution of the task. During Step 3, students are asked to clearly describe the learning from successes or failures that occurred during the activity. At Step 4, they are requested to provide a detailed description of the lesson that was learned, with a focus on how it will help future students avoid the problem described above (or repeat favorable outcomes). Chirumalla et al. [27] further discuss the importance of describing how effective the lesson learned was, for instance by measuring the performance of an improvement. For this reason, the students are asked to provide some quantifiable measurements of change (e.g., time, cost, quality) in relation to the lesson learned in the task (Step 5). Finally, they are requested to identify the potential beneficiaries (or target audience) of the LL.

The instructions containing the LL template are shared with the students during the first round of formative feedback. This is typically conducted in the form of an ‘elevator pitch’, where students are asked to give a short presentation illustrating the findings of their Conceive and Design steps. The reflection reports are submitted at the end of the course together with the final project report. At this step, the course coordinator categorizes students into relevant groups (e.g., dividing them by program) and extracts the LL from their reflection documents.
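To make the structure of the template concrete, the sketch below models one lesson learned as a Python record with one field per step. It is a hypothetical illustration, not the course's actual template. The class name, the field names, and the numbering of the final beneficiaries step as Step 6 are all assumptions. The default 250-word cap mirrors the limit the course applied to each lesson description.

```python
from dataclasses import dataclass, fields


@dataclass
class LessonLearned:
    """One lesson learned, with one field per template step (names are hypothetical)."""
    summary: str         # Step 0: brief statement of the lesson and why it matters
    background: str      # Step 1: background and environmental conditions of the task
    execution: str       # Step 2: how the task was executed, tools used, circumstances
    learning: str        # Step 3: learning from the successes or failures that occurred
    recommendation: str  # Step 4: how future students avoid the problem (or repeat success)
    measurement: str     # Step 5: quantifiable change, e.g. time, cost, quality
    beneficiaries: str   # final step (assumed Step 6): potential beneficiaries / audience

    def word_count(self) -> int:
        # Count whitespace-separated words across all seven steps.
        return sum(len(getattr(self, f.name).split()) for f in fields(self))

    def within_limit(self, max_words: int = 250) -> bool:
        # Check the description against a word cap (250 words by default).
        return self.word_count() <= max_words
```

Keeping each step as a separate field makes it easy to spot lessons where a step was left empty before the mapping starts.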

These lessons are typically expressed by students with different levels of quality and granularity. To compare answers and identify trends, and thus reveal which aspects of learning the students perceived to be the most important, these lessons need to be mapped against the goals of the CDIO Syllabus 2.0. The CDIO Syllabus 2.0 features three levels of detail, ranging from high-level goals (e.g., ‘Interpersonal skills’ or ‘Learning to live together’) to more teachable and assessable skills (e.g., the 3.1.1 “Forming Effective Teams” goal featured in Figure 2).

Each LL is initially mapped to a maximum of three items at the third level of the Syllabus, taking care to preserve the original meaning of the lesson and to cover all the major relevant learning expressed in the description. This activity is best performed by more than one coder, in order to reduce personal biases in the mapping. In this case, it is important to ensure good alignment among all individuals involved in the mapping process. This is typically achieved through a meeting before the mapping task, where the coders agree on a mapping protocol, which is updated as understanding grows about how an effective mapping should be performed. These data points are then aggregated at the second level, which consists of 19 items that are roughly at the level of detail of national standards and accreditation criteria. All these mapping steps are supported by ad-hoc software tools, such as MS Excel or mind-mapping tools. At the end of the process, the course coordinator can analyze the statistical results in search of significant trends or gaps in learning perceptions, e.g., by comparing the overall results for consecutive years or by benchmarking students from different programs. Statistical tests are then applied to the dataset to assess whether a statistically significant difference exists between the target groups being analyzed. This information is further discussed, for instance with the program manager and other teachers, to identify areas of improvement and tune outside-the-class activities to better achieve the ILO.
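The roll-up from the third to the second level of the Syllabus can be sketched as follows. This is a minimal Python illustration under assumed conventions, not the spreadsheet workflow used in the course. Each coded lesson is represented as a hypothetical `(student_id, [level-3 item codes])` pair, and item codes follow the Syllabus numbering (e.g., `3.1.1`). A student is counted once per level-2 goal, however many of their lessons touch that goal.

```python
from collections import defaultdict

MAX_ITEMS_PER_LESSON = 3  # each LL is mapped to at most three level-3 items


def level2_of(item: str) -> str:
    """Return the level-2 goal of a level-3 CDIO item, e.g. '3.1.1' -> '3.1'."""
    parts = item.split(".")
    if len(parts) != 3:
        raise ValueError(f"expected a level-3 item like '3.1.1', got {item!r}")
    return ".".join(parts[:2])


def aggregate_to_level2(coded_lessons):
    """coded_lessons: iterable of (student_id, [level-3 items]) pairs, one per lesson.

    Returns {student_id: set of level-2 goals}. Using a set means a student who
    touches the same goal in several lessons is counted once for that goal.
    """
    per_student = defaultdict(set)
    for student, items in coded_lessons:
        if len(items) > MAX_ITEMS_PER_LESSON:
            raise ValueError(f"lesson from {student} mapped to more than "
                             f"{MAX_ITEMS_PER_LESSON} items")
        for item in items:
            per_student[student].add(level2_of(item))
    return dict(per_student)
```

For example, two lessons from one student coded `["3.1.1", "3.2.1"]` and `["3.1.2"]` roll up to the level-2 goals `{"3.1", "3.2"}` for that student.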

4. Application Example: The MT2554 Value Innovation Course

The expression ‘value innovation’ originates from the innovation management literature and refers to the creation of new and uncontested market space through the development of solutions that generate a leap of value for customers and users (while reducing cost and negative impact on our planet and society). The main objective of the MT2554 course is to raise students’ understanding of how to develop innovative products and services with a focus on value creation, through the use of the Design Thinking (DT) methodology framework (Leavy, 2010). Students learn how to analyze customers’ and stakeholders’ needs, how to generate innovative concepts, how to create value-adding prototypes and how to verify the ‘goodness’ of their idea through the development of relevant simulation models. Importantly, the course represents a paradigm shift from traditional linear problem-solving approaches and fits well with design situations dominated by ambiguity and lack of knowledge (wicked problems).

The course is mandatory for students in the Industrial Engineering and Management (IE), Mechanical Engineering (ME) and Sustainable Product-Service Systems Innovation (up to 2017) master programs. Furthermore, the course is a popular choice among international exchange students with sufficient skills and prerequisites in the areas of product development and mathematics. The course accounts for 7.5 credits in the European Credit Transfer and Accumulation System (ECTS). The ECTS is based on learning achievements and students’ workload, with 60 ECTS credits corresponding to a full academic year with a workload ranging from 1500 to 1800 h. The credit system captures the expected amount of workload for the students, which in turn guides the definition of the learning objectives and the duration of the course.
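As a worked example of what this credit value implies, assuming that workload scales linearly with credits:

```latex
\text{workload}(7.5\ \text{ECTS}) = \frac{7.5}{60} \times [1500,\ 1800]\ \text{h} = [187.5,\ 225]\ \text{h}
```

The course therefore corresponds to roughly 188 to 225 hours of student work.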

4.1. Outside-the-Classroom Activities in the Course

The course features lectures on design and innovation, which include a mix of short theory reviews and active work in different group constellations. These are complemented by workshops and class exercises that give participants first-hand experience of the most relevant tools in the DT toolbox. Following CDIO recommendations, the course is designed around an overarching project in collaboration with selected company partners, which kicks off just after the course introduction and stretches along the entire study period (8 weeks). This is intended to give course participants the opportunity to apply the acquired theoretical base in a ‘real-life’ development project. Each project is conducted by a small cross-functional design team (4 to 6 participants), mixing students from the different programs.

As shown in Figure 3, students are initially provided with a design brief. The briefs are presented during a ‘showdown’ event in the classroom and feature a preliminary description of the design challenge to be addressed in each project. They contain contextual information about the partner company, a detailed description of the challenge, the details of the industrial contact point, a list of the targeted learning outcomes for the project and a list of relevant ‘tools’ to be used in the different phases of the DT process. While this description is used by the students to prioritize the projects they wish to work on, teams are formed taking care to ensure cross-disciplinarity and a balanced workload. Once the projects are allocated, activities are kicked off at the company facilities. This event typically includes a factory tour and a demonstration of the main ‘reference object’ (product or service) under investigation. Internal company documentation is also provided to the students during the kick-off. In the following weeks, the teams have regular interactions with their partner companies. Outside-the-classroom activities initially focus on the Conceive-Design stages of the project, including interviews, observations, and workshops with relevant customers and stakeholders. In all projects, students are asked to describe target groups and customer types for existing markets, to analyze the experience with current solutions, and to refine the design challenge description by applying ‘needfinding’ methods and tools in a relevant environment. By further analyzing societal and technological trends, the students are asked to design and select innovative product and/or service concepts using systematic innovation approaches.
Experience and lessons learned from the project work are initially shared during presentation events (elevator pitches) in the classroom, while peer evaluation and group coaching (feed-forward) are used to stimulate critical reflection regarding the process and the results.

In a later stage, physical prototypes, simulation models and storyboards are used as the main ‘objects’ to discuss solution concepts with industrial practitioners and to verify their effectiveness in the operational environment. The results from the project are presented in an open forum (when possible) or internally at the company facilities (when confidentiality is an issue). The company feedback on these final results is formalized in the project report and constitutes a main item for reflection in the individual reflection report, which forms the basis for grading.

4.2. Data Collection, Mapping, and Analysis

The research presented in this paper is based on data collected between 2016 and 2019 during four editions of the MT2554 Value Innovation course, from a total of 141 students (53 from Industrial Engineering and Management, 69 from Mechanical Engineering, and 19 international students from the remaining programs). The instructions for the assignment feature the 7-step LL template proposed by Chirumalla et al. [27]. Notably, each lesson learned description was limited to a maximum of 250 words, as a way to ensure focus by emphasizing synthesis.

Each lesson learned was initially mapped against up to three items at the third level of the CDIO Syllabus 2.0. In turn, this highlighted the items at the second level that were considered to have been leveraged by the project work. This mapping process involved two iterations, because the initial results were revised as experience with the mapping process grew. At the end of this activity, approximately 1000 data points were considered in the analysis.

Figure 4 presents an example of an LL from one of the reports, also showing how it is mapped against three different goals at the third level of the CDIO Syllabus 2.0.

The data obtained were analyzed by calculating, for each learning outcome of the CDIO Syllabus 2.0, the percentage of students who reported a lesson learned related to it. Three main studies were conducted to gather relevant input for the further development of the course structure and content. The first study simply aimed at measuring the students’ overall perception of learning with regard to outside-the-class activities. The goal, in this case, was to observe whether the lessons learned from these activities were aligned with the intended learning outcomes of the course and with the CDIO goals. The second study aimed at assessing the difference in perceptions among different student populations. More specifically, the goal was to verify whether a statistically significant difference in learning perception exists among students enrolled in different programs. The third study aimed at measuring the effect on learning perception of implementing a new module in the course. The approach was then used to measure any difference in perception among students arising from the opportunity to exploit Discrete Event Simulation (DES) in the company-based projects.
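The percentage calculation and ranking can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' tool. The input is a hypothetical mapping from each student to the set of goals found in that student's lessons. Students with no mapped goal must be included with an empty set, so that the denominator is the whole cohort.

```python
from collections import Counter


def goal_percentages(goals_by_student):
    """Share of students (%) whose lessons learned map to each CDIO goal.

    goals_by_student: {student_id: set of goals}. Students with no mapped goal
    should be present with an empty set so the denominator is the whole cohort.
    Results are ranked from most to least mentioned goal; ties are broken by the
    goal code compared as a string.
    """
    n_students = len(goals_by_student)
    if n_students == 0:
        return []
    # Each student contributes at most once per goal because goals are sets.
    counts = Counter(goal for goals in goals_by_student.values() for goal in goals)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(goal, 100.0 * count / n_students) for goal, count in ranked]
```

Run on four hypothetical students, three of whom mention goal `2.4`, the function ranks `2.4` first at 75%.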

4.3. Study 1: Measuring the Overall Students’ Perceptions of Learning

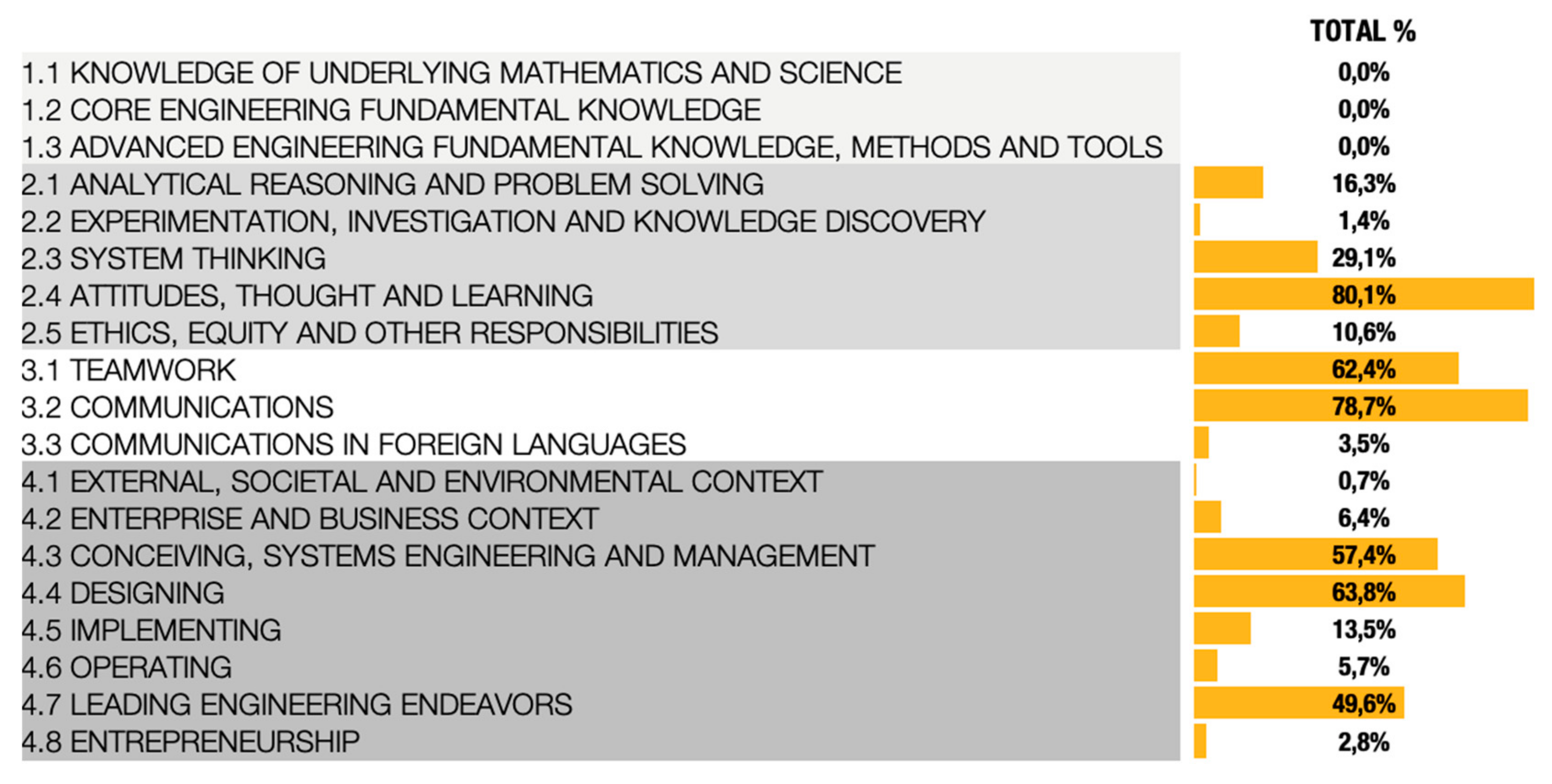

Figure 5 shows the results of the analysis conducted at the second level of the CDIO Syllabus 2.0 for all 141 students participating in the MT2554 Value Innovation course from 2016 to 2019. The CDIO goals are ranked by the percentage of students who mentioned them in their reflection report at the end of the course.

The analysis shows that about 80% of the students report having experienced one or more lessons learned concerning the Attitude, Thought and Learning goal (2.4) while working on their real-life projects. This goal has been found to play an important role in the development of general character traits of initiative and perseverance. This is aligned with the CDIO purpose of emphasizing the role of ‘attitude development’ in the formation of the engineers of tomorrow [4]. At the same time, most of the students acknowledge that the opportunity to apply their theoretical notions in real-life situations has helped them develop more generic modes of thought, such as creative and critical thinking. Similarly, students point to the skills of self-awareness and metacognition, curiosity and lifelong learning and educating, and time management as their main perceived learning.

The analysis also shows that more than 78% of the students believe they have acquired lessons learned related to the Communications goal (3.2) during the course. As highlighted by Crawley et al. [4], this goal comprises the skills necessary for formal communication and for devising a communications strategy and structure. These skills are acknowledged to be of fundamental importance for engineers working in a modern team-based environment. In this respect, project activities conducted outside the classroom are found to strongly emphasize the development of skills related to the four common media (written, oral, graphic and electronic), as well as more informal communication and relational skills, such as inquiry and effective listening, negotiation, advocacy, and networking.

Group-based projects naturally challenge students to develop skills related to the Teamwork goal (3.1). Hence, it is not surprising that this goal is highlighted in more than 60% of the reports. Another major aspect of interest is the widespread feeling of having developed skills related to the Leading Engineering Endeavors goal (4.7). Almost half of the students refer to this dimension in their reflections, elaborating mostly on topics related to creating a ‘purposeful vision’ for their project.

At the other end of the spectrum, only a small portion of students emphasize aspects related to the Operating goal (4.6) as major LL. This is partly because some design challenges are more ‘wicked’ in nature, forcing students to spend a considerable amount of time framing the problem and gathering needs. Furthermore, none of the students were found to explicitly refer to the core and/or advanced engineering fundamental knowledge goals (1.1, 1.2 and 1.3) in their reflection papers. Lessons learned in this domain have been largely overshadowed by aspects related to the development of personal, professional and interpersonal skills.

Figure 6 shows the most frequent CDIO goals at level three highlighted by the students in their reflection reports at the end of the course. This analysis spotlights in more detail the specific goals considered to have been leveraged by outside-the-classroom activities.

More than half of the students (56.03%) report lessons learned related to the Understanding Needs and Setting Goals item. This item is described in the CDIO framework as the ability to uncover needs and opportunities related to customers, technology and the environment. In their reports, students often discuss the challenges they encountered while trying to grasp the context of the system goals, as well as while benchmarking market information and regulatory influences. Another aspect emphasized by the students in their reports is Team Operation. A major learning aspect relates to the ability to plan and facilitate effective meetings, set goals and agendas, establish ground rules for the team and schedule the execution of the project. Time and Resource Management is another lesson learned frequently discussed in the reports, with a frequency similar to that of the Disciplinary Design goal. Several students acknowledge that learning activities outside the classroom were most beneficial in helping them realize the importance and urgency of tasks, as well as the need for their efficient execution. Students also perceived that they had developed skills related to the Communication Strategy goal, describing several lessons learned about their increased ability to tailor communication objectives to the needs and character of the audience, to apply the correct ‘style’ for the communication situation at hand, and to employ the appropriate combination of media.

The interaction with external stakeholders is perceived to have triggered a process in which students recognize ideas that may be better than their own and are stimulated to negotiate acceptable solutions, reaching agreement without compromising fundamental principles. A large share of students (about one-third of the sample) acknowledge the development of Inquiry, Listening and Dialog skills, which refer to the ability to listen carefully to others with the intention to understand, by asking thoughtful questions, creating constructive dialogue and processing diverse points of view. The group project with external partners has also proven successful in developing students’ Initiative and willingness to make decisions in the face of uncertainty. More than 30% of the students describe lessons learned related to the need to lead the innovation process and to take decisions based on the information at hand.

4.4. Study 2: Comparing Learning Perception among Students from Different Programs

The analysis was further detailed by clustering the students according to their study program, in order to determine whether a statistically significant difference exists between different student backgrounds. The analysis was limited by the exclusion of two groups: the students enrolled in the Sustainable Product-Service Systems Innovation master program, given their limited number, and the exchange students, given the high heterogeneity of backgrounds in their group. Testing for differences between the two remaining independent groups (i.e., the students in Mechanical Engineering and the students in Industrial Engineering and Management) consisted in verifying whether the lessons learned identified were independent of group membership. The results of this analysis at the second level of the CDIO Syllabus are shown in Figure 7. The figure displays the share of students from each program (as a percentage of that program’s total) referring to a specific CDIO goal, as well as the positive or negative delta between the IE and ME groups.
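The per-program shares and the IE/ME delta can be sketched as follows. This is a hypothetical Python illustration, not the authors' tool. It assumes that each student's lessons have already been reduced to a set of level-2 goals and that each student carries a program label. Students whose label is neither of the two compared groups are simply ignored, mirroring the exclusions described above.

```python
def program_deltas(goals_by_student, program_of, group_a="IE", group_b="ME"):
    """Share of students (%) per goal within two programs, and the delta a - b.

    goals_by_student: {student_id: set of goals}; program_of: {student_id: program}.
    Returns {goal: (share_a, share_b, share_a - share_b)}.
    """
    members = {g: [s for s, p in program_of.items() if p == g] for g in (group_a, group_b)}
    for g, ids in members.items():
        if not ids:
            raise ValueError(f"no students in group {g!r}")
    # Only goals mentioned by at least one student of the two groups are reported.
    goals = sorted({goal for ids in members.values() for s in ids
                    for goal in goals_by_student.get(s, ())})
    table = {}
    for goal in goals:
        share = {
            g: 100.0 * sum(goal in goals_by_student.get(s, ()) for s in ids) / len(ids)
            for g, ids in members.items()
        }
        table[goal] = (share[group_a], share[group_b], share[group_a] - share[group_b])
    return table
```

A positive delta marks a goal emphasized more by the first group (IE by default), and a negative delta marks one emphasized more by the second.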

The largest difference in students’ perception is observed for the Analytical Reasoning and Problem Solving goal, a lesson learned more often described by students in Mechanical Engineering. Systems Thinking, on the other hand, is the CDIO goal that IE students emphasize most in their reports compared to their counterparts. Smaller differences are observed for the CDIO goals related to Communications and Teamwork abilities. To assess the statistical significance of these differences, the Kruskal-Wallis H test was applied to the responses from both groups. This test was considered the most suitable in this situation because its three underlying assumptions are satisfied, namely:

the dependent variable is measured at the ordinal or continuous level;

the independent variable consists of two categorical independent groups;

there is an independence of observations.

The Kruskal-Wallis H test was preferred to similar approaches, such as the Mann-Whitney U test, because for the latter it was not possible to run a Levene F test for homogeneity of variance on the dataset, given that the absolute deviation was constant for each data point. The result of the test is expressed as a so-called p-value, i.e., the probability of obtaining results at least as extreme as those observed, assuming that the null hypothesis is correct. A commonly used significance threshold is 0.05: only items with a p-value below this threshold are taken as strong evidence against the null hypothesis. Overall, the Kruskal-Wallis H test shows that the Analytical Reasoning and Problem Solving goal is the only one showing a meaningful difference (p-value = 0.009) between students of the different study programs (Figure 8).
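The test itself can be illustrated with a minimal two-group Kruskal-Wallis H computation in plain Python. It assumes that each student's response for a given goal is coded as 1 (the goal appears in at least one of the student's lessons learned) or 0 (it does not). That coding and the function names are illustrative assumptions, not the authors' tooling. In practice, a library routine such as `scipy.stats.kruskal` computes the same tie-corrected statistic. With two groups, H is compared against a chi-square distribution with one degree of freedom, whose tail probability can be written with `math.erfc`.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to N; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(a, b):
    """Tie-corrected Kruskal-Wallis H test for two independent samples.

    Returns (H, p). With two groups, H is referred to a chi-square distribution
    with 1 degree of freedom, whose survival function is erfc(sqrt(H / 2)).
    """
    if len(a) == 0 or len(b) == 0:
        raise ValueError("both groups need at least one observation")
    data = list(a) + list(b)
    n = len(data)
    ranks = average_ranks(data)
    r_a, r_b = sum(ranks[:len(a)]), sum(ranks[len(a):])
    h = 12.0 / (n * (n + 1)) * (r_a ** 2 / len(a) + r_b ** 2 / len(b)) - 3 * (n + 1)
    # Tie correction: divide H by 1 - sum(t^3 - t) / (N^3 - N) over groups of ties.
    ties = sum(t ** 3 - t for t in Counter(data).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding errors
    return h, math.erfc(math.sqrt(h / 2))


ie = [1, 1, 1, 0]  # hypothetical coding: 1 = goal mentioned in the student's LL
me = [0, 0, 0, 1]
h, p = kruskal_wallis_two_groups(ie, me)
print(round(h, 2), p < 0.05)  # 1.75 False
```

With only four hypothetical students per group, the difference in this toy example does not reach the 0.05 threshold.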

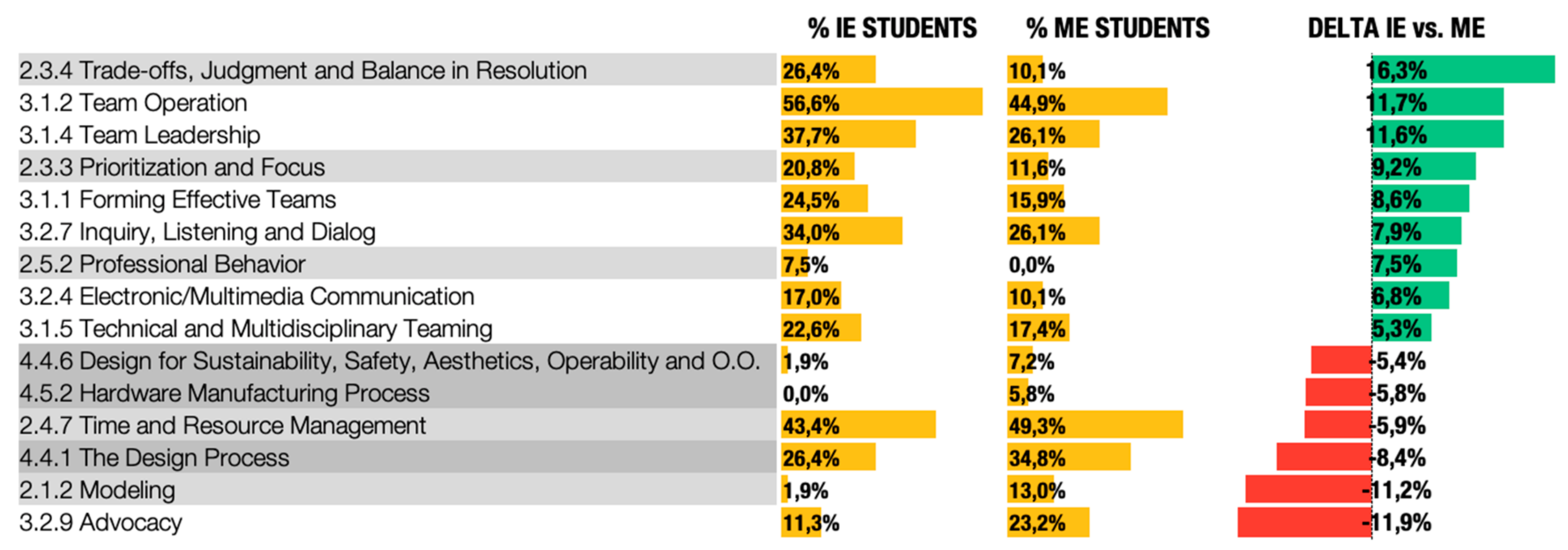

Here too, the analysis was deepened to understand the differences in perception between IE and ME students.

Figure 9 shows an extract of the analysis conducted at the third level, highlighting the goals that differ the most between the two groups.

At first glance, students in the ME program emphasize lessons learned related to the different components of the design process, as well as aspects of ‘advocacy’, more than their counterparts. These students acknowledge that activities conducted outside the classroom increased their ability to clearly explain their own point of view, to explain how one reaches an interpretation or conclusion, and to adjust their ‘selling pitch’ to audience characteristics. IE students, instead, seem to emphasize aspects related to prioritization and trade-off resolution more frequently than mechanical engineering students, who seem relatively less sensitive to tensions between various factors when approaching system design. Team Operation, Team Leadership, and Prioritization and Focus are goals emphasized comparably more by IE students than by ME students.

The Kruskal-Wallis test was further applied to verify the statistical significance of the results presented in Figure 9. Notably, only the results related to the Modelling goal (p-value = 0.023) proved to be supported by statistical evidence in relation to the enrollment of students in different programs. In practice, for all the other level-3 CDIO goals, the measured p-values cannot rule out the possibility of observing the above differences if no real difference exists.

4.5. Study 3: Assessing the Effect on Learning of Introducing a New Course Module

Another aspect of interest is the opportunity to measure the impact of a new course module on the students’ learning perceptions. In 2018, the course syllabus for Value Innovation was modified to introduce Discrete Event Simulation (DES) as a support tool for the last stage of the Design Thinking methodology (Implementation). This new module consists of an 8-h tutorial with individual exercises. In their projects, students were asked to apply DES to demonstrate the ability of their solutions to meet the customer needs, as well as the cost targets, by developing a model of the To-Be operations. This task was meant to deepen the students’ interaction with the partner company, as they had to identify relevant activities to be simulated in the process and gather data for populating and running the DES.

The approach makes it possible to observe how the introduction of the module has impacted the students’ perception of learning with regard to outside-the-class activities (Figure 10). At first glance, the focus of the lessons learned at the second level of the CDIO Syllabus 2.0 has noticeably shifted from communication-related aspects to problem-solving, analytical reasoning and design.

The Kruskal-Wallis test was applied to verify statistical differences in learning perception between two groups of students: those participating in 2018 and 2019 (i.e., working with DES) and those taking part in 2016 and 2017 (i.e., not using DES). Only two items at the second level are statistically significant: the Analytical Reasoning and Problem Solving goal (p-value = 0.003) and the Designing goal (p-value < 0.001). With regard to the first item, it is not surprising that students working with DES more frequently describe how to generate quantitative models and simulations, and how to formulate assumptions that simplify complex systems and their environment. For the Designing goal, the analysis at the third level shows differences between the two groups with regard to the Design Process (p-value < 0.001), the Design for Sustainability, Safety, Aesthetics, Operability and Other Objectives (p-value = 0.003), and the Designing a Sustainable Implementation Process (p-value = 0.006) goals.

The introduction of the new module appears to strengthen the students’ perception of learning with regard to the need to consider a range of lifecycle aspects when optimizing a proposed design. At the same time, students seem to have learned more about the need to accommodate changing requirements and to iterate the design until convergence. The new module has also raised students’ awareness of the need to include social sustainability aspects in the design, as reflected in lessons learned centered on human users/operators and their allocation and utilization in the tasks.

5. Discussion and Conclusions

The task of engineering educators is to teach students who are “ready to engineer, that is, broadly prepared with both pre-professional engineering skills and deep knowledge of the technical fundamentals” [28] (p. 11). Fostering ‘experiential learning’ in real-life situations becomes a critical task in this process, and it is crucial to be able to assess and measure the effect these activities have on the students’ perception of learning.

This paper proposes an approach to support the generally difficult task of assessing how outside-the-class activities contribute to specific learning outcomes. The main goal of this approach is to go beyond traditional course evaluation questionnaires and instead dig into the students’ reflections to obtain a more complete and multi-faceted picture of how learning takes place outside the classroom. Ultimately, the results of this analysis are intended to support course coordinators and program managers in constructively aligning learning activities in courses and curricula. By facilitating benchmarking across years and programs, they support the selection of relevant cases and provide information to fine-tune teaching material to achieve the ILOs for engineering education.

From a methodological perspective, the approach presented in this paper has some limitations that should be considered when interpreting the results. First, the number of lessons learned that students are allowed to formalize in the reflection report is limited. As a result, on the one hand, some students might feel forced to emphasize lessons learned that they have barely perceived; on the other hand, other students might be forced to select the most relevant ones from a large pool of almost equivalent items. Additionally, the mapping between lessons learned and the CDIO Syllabus is not always straightforward and may introduce bias. In the described implementation of the approach, each LL has been mapped to a maximum of 3 CDIO items. However, some more verbose descriptions left room for interpretation and could plausibly belong to several additional goals, forcing the authors to choose the goals that best fit the description.
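The mapping step discussed above can be sketched as follows, under explicit assumptions that the paper does not confirm: each lesson learned (LL) is represented as a list of CDIO Syllabus codes in the Syllabus’s dotted numbering, capped at three items as in the described implementation; counts at a coarser level are obtained by truncating each code to its ancestor at that level; and an LL is counted at most once per goal, even if several of its items share that ancestor. The function names and the example codes are hypothetical.

```python
from collections import Counter

MAX_ITEMS_PER_LL = 3  # cap on CDIO items per lesson learned, as in the described implementation


def ancestor(code: str, level: int) -> str:
    """Truncate a dotted CDIO code (e.g. '2.1.2') to the given level (e.g. 2 -> '2.1')."""
    parts = code.split(".")
    if level < 1 or level > len(parts):
        raise ValueError(f"cannot truncate {code!r} to level {level}")
    return ".".join(parts[:level])


def goal_counts(mapped_lls: list[list[str]], level: int) -> Counter:
    """Count how many lessons learned touch each CDIO goal at `level`.

    Assumption: an LL counts once per goal, even if several of its items
    share the same ancestor at that level.
    """
    counts: Counter = Counter()
    for items in mapped_lls:
        if not 1 <= len(items) <= MAX_ITEMS_PER_LL:
            raise ValueError(f"each LL needs 1 to {MAX_ITEMS_PER_LL} items, got {items}")
        counts.update({ancestor(code, level) for code in items})
    return counts


if __name__ == "__main__":
    # Hypothetical mapping of three lessons learned.
    lls = [["2.1.2", "2.1.4"], ["3.1.2"], ["2.1.3", "4.4.1", "3.2.1"]]
    print(goal_counts(lls, level=2))  # 2.1 counted twice; 3.1, 3.2 and 4.4 once each
```

Per-student counts obtained in this way are one possible input for a between-group comparison such as the Kruskal-Wallis test; whether an LL touching two items under the same goal should count once or twice is exactly the kind of mapping choice that can introduce bias.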

The results of these investigations are intended to provide a basis for the future development of innovation projects with engineering students, supporting the definition of learning outcomes that are relevant to the CDIO Syllabus 2.0 and of constructively aligned learning experiences. Importantly, the method described, based on the use of lessons learned and their mapping to the Syllabus, is intended to be generic enough to be reused across courses and programs to measure the effect of alternative strategies for active learning in different contexts. As a final reflection, the individual assignment has proven to be a valuable needfinding tool for discovering preferences among students and for constructively aligning learning activities accordingly.