Student Participation in Online Content-Related Discussion and Its Relation to Students’ Background Knowledge

Abstract

1. Introduction

1.1. Analysing Online Discussion

1.2. Research Questions

- What are the network role counts for students in online discussions of a blended university course?

- What are the structural similarities between each student’s background knowledge and the aggregated body of knowledge when collected and analyzed with the new method introduced in this paper?

- Is there a significant relationship between the structural measures of background knowledge and online discussions in a blended university course?

2. Materials and Methods

Isaac Newton [Laws of Mechanics, Falling Apple Story, Robert Hooke, Law of Gravity]

Isaac Newton was an English scholar and philosopher. He wrote Philosophiae Naturalis Principia Mathematica, in which he published three laws of mechanics, that is, Newton’s laws of motion. The first law was the law of inertia, the second law was the basic law of dynamics, and the third law was the law of force and counter-force. The book also presents the general law of gravity. It is believed that Isaac Newton was inspired to investigate gravity when he saw an apple falling to the ground in a garden. He also corresponded with Robert Hooke, from whom he heard the hypothesis that the sun’s attraction is inversely proportional to the square of the distance. This led Newton to investigate the matter and eventually to publish his theory.
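The example entry above pairs a term with a bracketed list of related terms. As a rough illustration only, the sketch below reads such a header line as directed links from the term to each listed item and compares one student's links with a pooled set using the Jaccard index. Treating the brackets as links, the choice of the Jaccard index, the function names `parse_entry` and `jaccard`, and the second entry are all assumptions made for this sketch, not the method of the paper.

```python
import re


def parse_entry(header):
    """Turn 'Term [A, B, C]' into directed edges (Term, A), (Term, B), ..."""
    match = re.fullmatch(r"\s*(.+?)\s*\[(.*)\]\s*", header)
    if match is None:
        raise ValueError(f"not in 'Term [links]' form: {header!r}")
    term = match.group(1)
    links = [name.strip() for name in match.group(2).split(",") if name.strip()]
    return {(term, link) for link in links}


def jaccard(a, b):
    """Jaccard index of two sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Header line taken from the example entry in the text.
newton = parse_entry(
    "Isaac Newton [Laws of Mechanics, Falling Apple Story, Robert Hooke, Law of Gravity]"
)

# Hypothetical second entry, invented purely to have something to pool with.
other = parse_entry("Isaac Newton [Law of Gravity, Robert Hooke, Principia]")

# Assumed aggregation: the union of all students' edges.
aggregated = newton | other
similarity = jaccard(newton, aggregated)
print(round(similarity, 2))
```

With these two entries the pooled set has five distinct links, four of which come from the first entry, so the printed similarity is 0.8.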

2.1. Role Analysis of Online Discussion

2.2. Structural Analysis of Background Knowledge

2.3. Correlation Analysis

3. Results

3.1. Roles

3.2. Similarities

3.3. Correlation of Similarities and Roles

4. Discussion

4.1. Role Analysis

4.2. Similarity Analysis

4.3. Correlation Analysis

4.4. Limitations and Implications

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. The Guiding Questions Used During the Online Discussions

| 1st Period |

| Time span: 1572–1704 Theme: Scientific revolution |

| Article: L. Rosenfeld (1965), Newton and the law of gravitation [50] |

Guiding questions:

|

| 2nd Period |

| Time span: 1704–1789 Theme: Enlightenment and the science of enlightenment |

| Article: E. McMullin (2002), The Origins of the Field Concept in Physics [51] |

Guiding questions:

|

| 3rd Period |

| Time span: 1789–1848 Theme: Industrialisation and liberal educational ideal |

| Article: D. Sherry (2011), Thermoscopes, thermometers, and the foundations of measurement [52] |

Guiding questions:

|

| 4th Period |

| Time span: 1848–1900 Theme: Technologizing society and its science |

| Article: O. Darrigol (1999), Baconian Bees in the Electromagnetic Fields: Experimenter-Theorists In Nineteenth-Century Electrodynamics [53] |

Guiding questions:

|

| 5th Period |

| Time span: 1900–1914 Theme: Modern science and technologicalization I |

| Article: H. Kragh (2011), Resisting the Bohr Atom: The Early British Opposition [54] |

Guiding questions:

|

| 6th Period |

| Time span: 1914–1928 Theme: Modern science and technologicalization II |

| Article: K. Camilleri (2006), Heisenberg and the wave-particle duality [55] |

Guiding questions:

|

References

- Dillenbourg, P.; Eurelings, A.; Hakkarainen, K. Introduction. In European Perspectives on Computer-Supported Collaborative Learning; Dillenbourg, P., Eurelings, A., Hakkarainen, K., Eds.; University of Maastricht: Maastricht, The Netherlands, 2001.

- Stahl, G.; Koschmann, T.; Daniel, S. Computer-Supported Collaborative Learning. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; Cambridge University Press: Oxford, UK, 2014; Chapter 24; pp. 479–500.

- Scardamalia, M.; Bereiter, C. Computer Support for Knowledge-Building Communities. J. Learn. Sci. 1994, 3, 265–283.

- Scardamalia, M.; Bereiter, C. Knowledge Building and Knowledge Creation. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; Cambridge University Press: Oxford, UK, 2014; Chapter 20; pp. 397–417.

- Stahl, G.K. Group Cognition: Computer Support for Building Collaborative Knowledge; Acting with Technology; MIT Press: Cambridge, MA, USA, 2006; p. 510.

- Pena-Shaff, J.B.; Nicholls, C. Analyzing student interactions and meaning construction in computer bulletin board discussions. Comput. Educ. 2004, 42, 243–265.

- Martínez, A.; Dimitriadis, Y.; Gómez-Sánchez, E.; Rubia-Avi, B.; Jorrín-Abellán, I.; Marcos, J.A. Studying participation networks in collaboration using mixed methods. Int. J. Comput. Support. Collab. Learn. 2006, 1, 383–408.

- Schellens, T.; Valcke, M. Collaborative learning in asynchronous discussion groups: What about the impact on cognitive processing? Comput. Hum. Behav. 2005, 21, 957–975.

- Sinha, S.; Rogat, T.K.; Adams-Wiggins, K.R.; Hmelo-Silver, C.E. Collaborative group engagement in a computer-supported inquiry learning environment. Int. J. Comput. Support. Collab. Learn. 2015, 10, 273–307.

- Järvelä, S.; Malmberg, J.; Koivuniemi, M. Recognizing socially shared regulation by using the temporal sequences of online chat and logs in CSCL. Learn. Instruct. 2016, 42, 1–11.

- Järvelä, S.; Järvenoja, H.; Malmberg, J. Capturing the dynamic and cyclical nature of regulation: Methodological Progress in understanding socially shared regulation in learning. Int. J. Comput. Support. Collab. Learn. 2019, 14, 425–441.

- Noroozi, O.; Teasley, S.D.; Biemans, H.J.; Weinberger, A.; Mulder, M. Facilitating learning in multidisciplinary groups with transactive CSCL scripts. Int. J. Comput. Support. Collab. Learn. 2013, 8, 189–223.

- Verdú, N.; Sanuy, J. The role of scaffolding in CSCL in general and in specific environments. J. Comput. Assist. Learn. 2014, 30, 337–348.

- Lucas, M.; Gunawardena, C.; Moreira, A. Assessing social construction of knowledge online: A critique of the interaction analysis model. Comput. Hum. Behav. 2014, 30, 574–582.

- Jeong, A.C. The Sequential Analysis of Group Interaction and Critical Thinking in Online. Am. J. Distance Educ. 2003, 17, 25–43.

- Heo, H.; Lim, K.Y.; Kim, Y. Exploratory study on the patterns of online interaction and knowledge co-construction in project-based learning. Comput. Educ. 2010, 55, 1383–1392.

- Wecker, C.; Fischer, F. Where is the evidence? A meta-analysis on the role of argumentation for the acquisition of domain-specific knowledge in computer-supported collaborative learning. Comput. Educ. 2014, 75, 218–228.

- Jeong, H.; Hmelo-Silver, C.E.; Jo, K. Ten years of Computer-Supported Collaborative Learning: A meta-analysis of CSCL in STEM education during 2005–2014. Educ. Res. Rev. 2019, 28, 100284.

- Schellens, T.; Valcke, M. Fostering knowledge construction in university students through asynchronous discussion groups. Comput. Educ. 2006, 46, 349–370.

- Koponen, I.T.; Nousiainen, M. An agent-based model of discourse pattern formation in small groups of competing and cooperating members. J. Artif. Soc. Soc. Simul. 2018, 21.

- Wang, Q.; Woo, H.L.; Zhao, J. Investigating critical thinking and knowledge construction in an interactive learning environment. Interact. Learn. Environ. 2009, 17, 95–104.

- Boelens, R.; De Wever, B.; Voet, M. Four key challenges to the design of blended learning: A systematic literature review. Educ. Res. Rev. 2017, 22, 1–18.

- Eryilmaz, E.; van der Pol, J.; Ryan, T.; Clark, P.M.; Mary, J. Enhancing student knowledge acquisition from online learning conversations. Int. J. Comput. Support. Collab. Learn. 2013, 8, 113–144.

- Engelmann, T.; Hesse, F.W. How digital concept maps about the collaborators’ knowledge and information influence computer-supported collaborative problem solving. Int. J. Comput. Support. Collab. Learn. 2010, 5, 299–319.

- Arvaja, M. Contextual perspective in analysing collaborative knowledge construction of two small groups in web-based discussion. Int. J. Comput. Support. Collab. Learn. 2007, 2, 133–158.

- Marzano, R.J. Building Background Knowledge for Academic Achievement: Research on What Works in Schools; Association for Supervision and Curriculum Development: Alexandria, VA, USA, 2004; p. 217.

- Zambrano R., J.; Kirschner, F.; Sweller, J.; Kirschner, P.A. Effects of prior knowledge on collaborative and individual learning. Learn. Instruct. 2019, 63, 101214.

- De Wever, B.; Schellens, T.; Valcke, M.; Van Keer, H. Content analysis schemes to analyze transcripts of online asynchronous discussion groups: A review. Comput. Educ. 2006, 46, 6–28.

- Puntambekar, S.; Erkens, G.; Hmelo-Silver, C. Introduction. In Analyzing Interactions in CSCL: Methods, Approaches and Issues; Puntambekar, S., Erkens, G., Hmelo-Silver, C., Eds.; Springer: New York, NY, USA, 2011.

- Wasserman, S. Social Network Analysis: Methods and Applications; Structural Analysis in the Social Sciences; Cambridge University Press: Cambridge, UK, 1994.

- De Laat, M.; Lally, V.; Lipponen, L.; Simons, R.J. Investigating patterns of interaction in networked learning and computer-supported collaborative learning: A role for Social Network Analysis. Int. J. Comput. Support. Collab. Learn. 2007, 2, 87–103.

- Smith Risser, H.; Bottoms, S.A. “Newbies” and “Celebrities”: Detecting social roles in an online network of teachers via participation patterns. Int. J. Comput. Support. Collab. Learn. 2014, 9, 433–450.

- Dado, M.; Bodemer, D. A review of methodological applications of social network analysis in computer-supported collaborative learning. Educ. Res. Rev. 2017, 22, 159–180.

- Barabási, A. The network takeover. Nat. Phys. 2011, 8, 14–16.

- Knoke, D.; Yang, S. Social Network Analysis; SAGE Publications, Inc.: Los Angeles, CA, USA; London, UK, 2008.

- Cesareni, D.; Cacciamani, S.; Fujita, N. Role taking and knowledge building in a blended university course. Int. J. Comput. Support. Collab. Learn. 2016, 11, 9–39.

- Itzkovitz, S.; Milo, R.; Kashtan, N.; Ziv, G.; Alon, U. Subgraphs in random networks. Phys. Rev. E 2003, 68, 026127.

- Milo, R.; Shen-Orr, S.; Itzkovitz, S.; Kashtan, N.; Chklovskii, D.; Alon, U. Network motifs: Simple building blocks of complex networks. Science 2002, 298, 824–827.

- McDonnell, M.D.; Yaveroğlu, Ö.N.; Schmerl, B.A.; Iannella, N.; Ward, L.M. Motif-Role-Fingerprints: The Building-Blocks of Motifs, Clustering-Coefficients and Transitivities in Directed Networks. PLoS ONE 2014, 9, e114503.

- Estrada, E. The Structure of Complex Networks: Theory and Applications; Oxford University Press: Oxford, UK, 2012.

- Katz, L. A new status index derived from sociometric analysis. Psychometrika 1953, 18, 39–43.

- Jaccard, P. The Distribution of the Flora in the Alpine Zone. 1. N. Phytol. 1912, 11, 37–50.

- Koponen, I.T.; Nousiainen, M. Formation of reciprocal appreciation patterns in small groups: An agent-based model. Complex Adapt. Syst. Model. 2016, 4, 24.

- Hiltz, S.R.; Goldman, R. What Are Asynchronous Learning Networks. In Learning Together Online, Research in Asynchronous Learning Networks; Hiltz, S.R., Goldman, R., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2005.

- Wise, A.F.; Hausknecht, S.N.; Zhao, Y. Attending to others’ posts in asynchronous discussions: Learners’ online “listening” and its relationship to speaking. Int. J. Comput. Support. Collab. Learn. 2014, 9, 185–209.

- Lommi, H.; Koponen, I.T. Network cartography of university students’ knowledge landscapes about the history of science: Landmarks and thematic communities. Appl. Netw. Sci. 2019, 4.

- De Domenico, M.; Biamonte, J. Spectral Entropies as Information-Theoretic Tools for Complex Network Comparison. Phys. Rev. X 2016, 6, 041062.

- Holme, P.; Saramäki, J. Temporal networks. Phys. Rep. 2012, 519, 97–125.

- Kovanen, L.; Karsai, M.; Kaski, K.; Kertész, J.; Saramäki, J. Temporal motifs. In Temporal Networks; Springer: Berlin/Heidelberg, Germany, 2013; pp. 119–133.

- Rosenfeld, L. Newton and the Law of Gravitation. Arch. Hist. Exact Sci. 1965, 2, 365–386.

- McMullin, E. The Origins of the Field Concept in Physics. Phys. Perspect. 2002, 4, 13–39.

- Sherry, D. Thermoscopes, thermometers, and the foundations of measurement. Stud. Hist. Philos. Sci. Part A 2011, 42, 509–524.

- Darrigol, O. Baconian bees in the electromagnetic fields: Experimenter-theorists in nineteenth-century electrodynamics. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 1999, 30, 307–345.

- Kragh, H. Resisting the Bohr Atom: The Early British Opposition. Phys. Perspect. 2011, 13, 4–35.

- Camilleri, K. Heisenberg and the wave-particle duality. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 2006, 37, 298–315.

| Period | Time Span | Theme |
|---|---|---|
| 1st | 1572–1704 | Scientific revolution |
| 2nd | 1704–1789 | Enlightenment and the science of enlightenment |
| 3rd | 1789–1848 | Industrialization and liberal educational ideal |
| 4th | 1848–1900 | Technologizing society and its science |
| 5th | 1900–1914 | Modern science and technologicalization I |
| 6th | 1914–1928 | Modern science and technologicalization II |

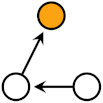

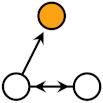

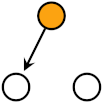

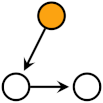

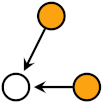

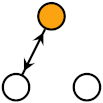

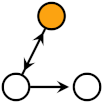

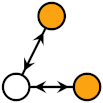

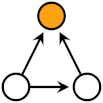

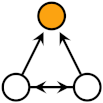

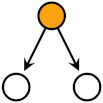

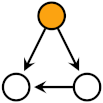

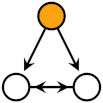

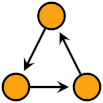

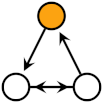

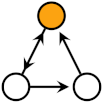

| Role | Motif-Roles | | | |
|---|---|---|---|---|
| 1-sink | r = 1, m = 1 | r = 2, m = 2 | r = 3, m = 3 | |
| 1-source | r = 4, m = 2 | r = 5, m = 4 | r = 6, m = 7 | |
| 1-recip | r = 7, m = 3 | r = 8, m = 7 | r = 9, m = 8 | |
| 2-sink | r = 10, m = 4 | r = 11, m = 5 | r = 12, m = 6 | |
| 2-source | r = 13, m = 1 | r = 14, m = 5 | r = 15, m = 11 | |
| relay | r = 16, m = 2 | r = 17, m = 5 | r = 18, m = 9 | r = 19, m = 10 |
| relay & sink | r = 20, m = 7 | r = 21, m = 10 | r = 22, m = 11 | r = 23, m = 12 |
| relay & source | r = 24, m = 3 | r = 25, m = 6 | r = 26, m = 10 | r = 27, m = 12 |
| all | r = 28, m = 8 | r = 29, m = 12 | r = 30, m = 13 | |
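The nine role names in the table can be read as descriptions of how one node is linked to the other two members of a three-node subgraph. The sketch below encodes that reading: for each of the two other members the focal node either receives a link, sends a link, does both, or has no link, and the pair of link types determines the role. This mapping is an interpretation of the role names, not a definition quoted from the paper, and the function name `triad_role`, the edge direction convention and the example edges are hypothetical.

```python
# Role by the (sorted) pair of link types the focal node has with the two others.
# "in" = receives only, "out" = sends only, "recip" = both ways, "none" = no link.
ROLE_BY_LINKS = {
    ("in", "none"): "1-sink",
    ("none", "out"): "1-source",
    ("none", "recip"): "1-recip",
    ("in", "in"): "2-sink",
    ("out", "out"): "2-source",
    ("in", "out"): "relay",
    ("in", "recip"): "relay & sink",
    ("out", "recip"): "relay & source",
    ("recip", "recip"): "all",
}


def link_type(node, other, edges):
    """Classify the link between node and other as seen from node."""
    sends = (node, other) in edges
    receives = (other, node) in edges
    if sends and receives:
        return "recip"
    if sends:
        return "out"
    if receives:
        return "in"
    return "none"


def triad_role(node, others, edges):
    """Return the role name of node within the triad, or None if it has no links."""
    kinds = tuple(sorted(link_type(node, other, edges) for other in others))
    return ROLE_BY_LINKS.get(kinds)


# Hypothetical triad: A and B link to each other, and A also links to C.
edges = {("A", "B"), ("B", "A"), ("A", "C")}
roles = {
    "A": triad_role("A", ("B", "C"), edges),
    "B": triad_role("B", ("A", "C"), edges),
    "C": triad_role("C", ("A", "B"), edges),
}
print(roles)
```

In this example the three nodes come out as relay & source, 1-recip and 1-sink, which matches the table row pattern where a single motif (m = 3) contributes one motif-role to each of those three roles. Counting roles for a student would then amount to applying such a classification to every triad the student takes part in.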

| Student | 1st Period | 2nd Period | 3rd Period | 4th Period | 5th Period | 6th Period |
|---|---|---|---|---|---|---|
| A | 1-source | 1-recip | 1-sink | 1-sink | 1-source | 1-sink |
| B | 2-sink | 1-source | all | 2-source | all | all |
| C | 2-sink | all | all | all | 2-sink | 2-source |
| D | 2-source | 1-recip | 1-sink | 1-sink | 1-recip | 1-recip |
| E | 1-source | 1-recip | 1-recip | 2-source | | |
| F | 2-sink | 1-sink | 1-source | 1-recip | 1-recip | |
| G | 1-sink | 1-recip | 1-recip | all | | |
| H | 1-recip | all | 1-source | all | 2-source | 2-source |
| I | all | 1-source | | | | |
| J | 1-recip | 1-recip | | | | |
| K | 1-sink | 1-recip | 1-sink | | | |

| Role | Kendall τ p1 | Kendall τ p2 | Kendall τ p3 | Kendall τ p4 | Kendall τ p5 | Kendall τ p6 | Spearman r p1 | Spearman r p2 | Spearman r p3 | Spearman r p4 | Spearman r p5 | Spearman r p6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | −0.31 | −0.57 * | 0.09 | −0.32 | −0.36 | 0.12 | −0.43 | −0.75 * | 0.21 | −0.56 | −0.62 | 0.22 |
| 2 | −0.59 * | −0.46 | 0.63 * | 0.32 | −0.21 | −0.53 | −0.74 * | −0.58 | 0.76 * | 0.36 | −0.21 | −0.67 |
| 3 | −0.44 | −0.57 * | 0.26 | −0.32 | −0.54 | −0.84 | −0.67 | −0.75 * | 0.45 | −0.56 | −0.68 | −0.89 * |
| 4 | −0.31 | −0.55 * | 0.20 | −0.22 | 0.15 | 0.32 | −0.48 | −0.70 * | 0.43 | −0.37 | 0.15 | 0.35 |
| 5 | −0.57 * | −0.49 | 0.51 | 0.32 | 0.30 | −0.32 | −0.76 * | −0.62 | 0.67 * | 0.36 | 0.52 | −0.36 |
| 6 | −0.44 | −0.51 | 0.23 | −0.11 | 0.50 | 0.11 | −0.68 * | −0.65 | 0.44 | −0.15 | 0.59 | −0.05 |
| 7 | −0.33 | −0.55 * | 0.26 | −0.22 | 0.30 | −0.12 | −0.62 | −0.70 * | 0.45 | −0.37 | 0.33 | −0.22 |
| 8 | −0.54 * | −0.51 | 0.29 | −0.11 | 0.45 | 0.11 | −0.73 * | −0.65 | 0.47 | −0.15 | 0.58 | −0.05 |
| 9 | −0.42 | −0.55 * | 0.26 | −0.22 | 0.26 | −0.12 | −0.66 | −0.70 * | 0.45 | −0.37 | 0.34 | −0.22 |
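The table reports two rank correlation coefficients per role and period. For readers unfamiliar with them, the following is a minimal, dependency-free sketch of how Kendall's τ (the tau-b variant, which corrects for ties) and Spearman's rank correlation can be computed for two paired lists. The function names and the five-student data are invented for illustration; they are not values from the study, and the sketch does not reproduce whatever software or significance testing the authors used.

```python
from itertools import combinations
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def spearman_r(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall_tau_b(x, y):
    """Kendall tau-b from counts of concordant, discordant and tied pairs."""
    concordant = discordant = only_x_tied = only_y_tied = 0
    for (x1, y1), (x2, y2) in combinations(list(zip(x, y)), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both variables: excluded from every count
        if dx == 0:
            only_x_tied += 1
        elif dy == 0:
            only_y_tied += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    untied = concordant + discordant
    denom = sqrt((untied + only_x_tied) * (untied + only_y_tied))
    return (concordant - discordant) / denom if denom else float("nan")


# Hypothetical data for five students: a role count and a similarity score each.
role_counts = [3, 1, 4, 2, 5]
similarities = [0.30, 0.10, 0.35, 0.25, 0.20]

tau = kendall_tau_b(role_counts, similarities)
rho = spearman_r(role_counts, similarities)
print(round(tau, 2), round(rho, 2))
```

For this made-up data both coefficients happen to equal 0.4 (seven concordant and three discordant pairs out of ten). With as few students as in the tables here, single pairs shift the coefficients considerably, which is worth keeping in mind when reading the larger values.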

| Role | Kendall τ p1 | Kendall τ p2 | Kendall τ p3 | Kendall τ p4 | Kendall τ p5 | Kendall τ p6 | Spearman r p1 | Spearman r p2 | Spearman r p3 | Spearman r p4 | Spearman r p5 | Spearman r p6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | −0.11 | −0.44 | −0.19 | −0.18 | −0.45 | −0.71 | −0.18 | −0.64 | −0.13 | −0.32 | −0.63 | −0.77 |
| 2 | −0.47 | −0.30 | 0.52 | 0.91 | −0.22 | −0.18 | −0.63 | −0.40 | 0.65 | 0.95 | −0.26 | −0.32 |
| 3 | −0.29 | −0.44 | 0.04 | −0.18 | −0.77 | −0.71 | −0.52 | −0.64 | 0.21 | −0.32 | −0.87 | −0.77 |
| 4 | −0.11 | −0.42 | −0.04 | 0.00 | 0.36 | 0.71 | −0.25 | −0.57 | 0.18 | 0.00 | 0.45 | 0.77 |
| 5 | −0.44 | −0.34 | 0.37 | 0.91 | 0.60 | 0.18 | −0.66 | −0.45 | 0.53 | 0.95 | 0.78 | 0.32 |
| 6 | −0.29 | −0.37 | 0.00 | 0.18 | 0.89 * | 0.91 | −0.55 | −0.49 | 0.19 | 0.32 | 0.95 * | 0.95 |
| 7 | −0.14 | −0.42 | 0.04 | 0.00 | 0.60 | 0.71 | −0.45 | −0.57 | 0.21 | 0.00 | 0.67 | 0.77 |
| 8 | −0.40 | −0.37 | 0.07 | 0.18 | 0.84 | 0.91 | −0.61 | −0.49 | 0.24 | 0.32 | 0.89 * | 0.95 |
| 9 | −0.25 | −0.42 | 0.04 | 0.00 | 0.63 | 0.71 | −0.51 | −0.57 | 0.21 | 0.00 | 0.71 | 0.77 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Turkkila, M.; Lommi, H. Student Participation in Online Content-Related Discussion and Its Relation to Students’ Background Knowledge. Educ. Sci. 2020, 10, 106. https://doi.org/10.3390/educsci10040106
