Comprehension-Oriented Learning of Cell Biology: Do Different Training Conditions Affect Students’ Learning Success Differentially?

Abstract

1. Introduction

1.1. Concept Mapping from a Cognitive and Metacognitive Perspective

1.2. Concept Mapping, Prior Knowledge, and Cognitive Load

1.3. Training in Concept Mapping

- (a)

- (b)

- (c)

- (d)

1.4. Research Question and Hypotheses

- (1) More organization and elaboration processes during learning, that is, stronger integration of new information into prior knowledge structures and thus better knowledge consolidation.

- (2) Less perceived cognitive load during learning when using CM.

- (3) Better handling and editing of prescribed concept maps and better construction of one’s own concept maps.

2. Materials and Methods

2.1. Sample

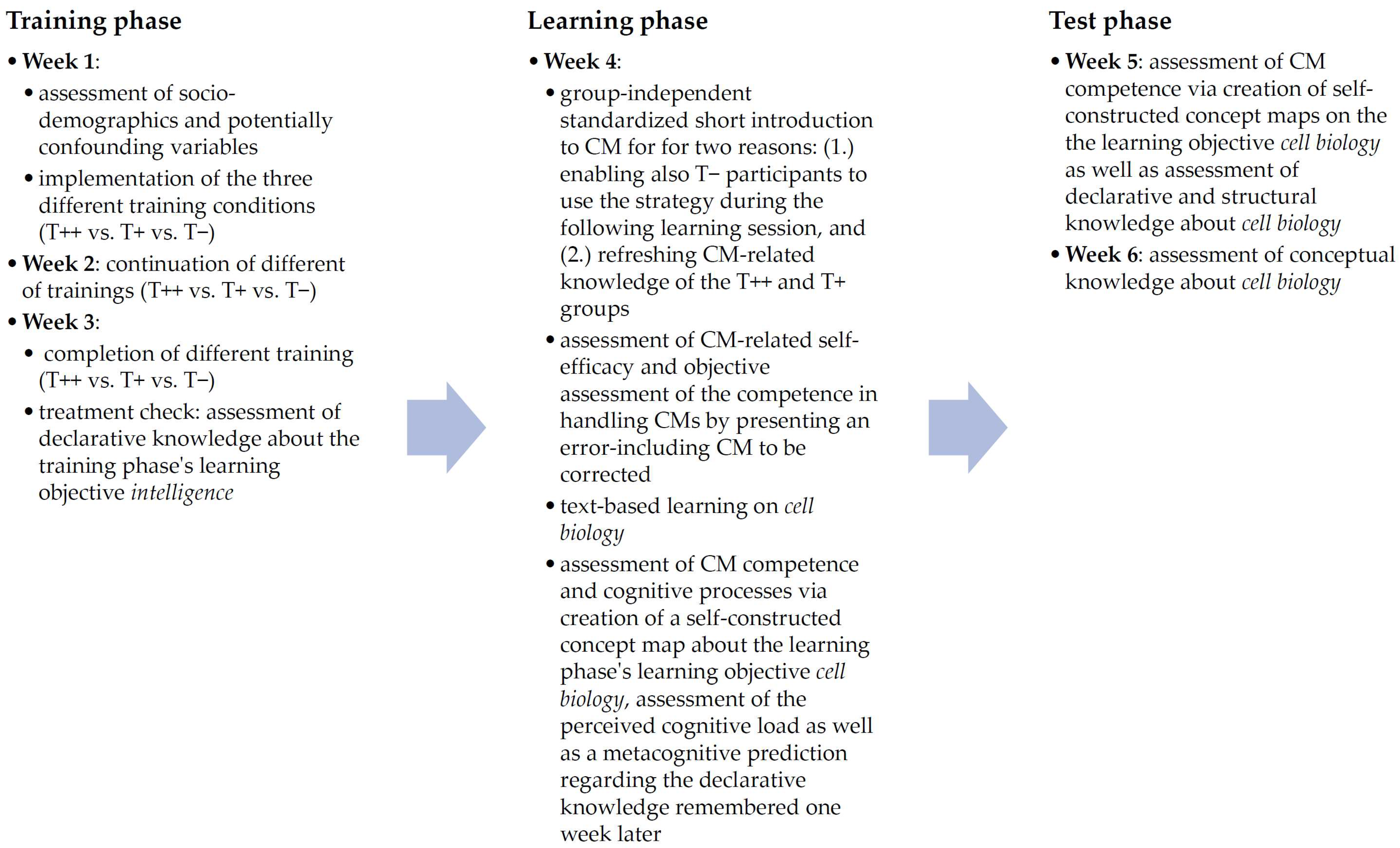

2.2. Experimental Design

- (1) The first group (T++) received strictly guided training with additional scaffolding and feedback during CM practice. First, the instructor gave an overview of the day’s learning objectives and main topics as an advance organizer. An introduction to the CM strategy followed, including declarative knowledge about its practical use. For the individual work phase, participants were handed a list of metacognitive prompts [41] (e.g., “Did I label all arrows clearly, concisely and correctly?” or “Where can I draw new connections?”; see Supplementary Materials Sections S2.3 and S2.4) to induce the relevant learning strategies of elaboration and organization [18]; these prompts were gradually reduced over the course of the training phase (fading) [130]. During the subsequent work phase, participants constructed concept maps based on text passages dealing with the abstract topic of intelligence. Here, they received different scaffolds (see Supplementary Materials Sections S2.1 and S2.2): in week 1, a skeleton map allowed participants to focus entirely on the main concepts and linking terms [80]; in week 2, a given set of the 12 main concepts taken from the learning material allowed participants to define the links between these concepts on their own; and in week 3, T++ participants constructed their concept maps entirely by themselves. After each work phase, one participant’s completed map was transferred to the blackboard and discussed. An expert map was presented and discussed as well, so that participants could compare it with their own. Additionally, all participants received individual verbal and written feedback on their concept maps. Considering previous findings [131,132,133], we opted for a knowledge-of-correct-results (KCR) feedback approach but limited the feedback to marking CM errors and pointing out any resulting misconceptions. In addition, a list of the most common CM errors was available (see Supplementary Materials Sections S2.5 and S2.6).

- (2) The second group (T+) constructed concept maps on their own in each session, without any additional support. Because analyzing concept maps and providing feedback is very time-consuming, this approach is more economical and has also been found to be effective [134]. The training sessions followed almost the same sequence as in group T++: each session started with an advance organizer, followed by an introduction to CM, but no metacognitive prompts were given. During the subsequent individual work phase, participants worked on the same learning material but constructed their concept maps without any scaffolding. Participants could ask questions during the introduction but could not compare their results with another participant’s map or with an expert map. Given these characteristics, this group represents the kind of practical training most likely to be found in classrooms [135,136].

- (3) The third group (T−) received no CM training but instead used common non-CM learning strategies from other studies [18,114,137] to work on the same learning material as the T++ and T+ groups: group discussions in week 1, writing a summary in week 2, and carousel workshops in week 3. Again, the sessions followed almost the same procedure as in group T++: each started with an advance organizer, followed by an introduction to the respective learning strategy including metacognitive prompts (see Supplementary Materials Section S3). During the subsequent individual work phase, participants worked on the learning material; afterwards, they had the opportunity to discuss their results and compare them with an expert solution.
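To make the expert-map comparison used in the T++ condition more concrete, a concept map can be treated as a set of propositions, each consisting of two concepts joined by a labeled link. The sketch below is an illustrative assumption, not the authors’ feedback or scoring procedure (in the study itself, feedback was given individually in verbal and written form). The names `Proposition` and `compare_to_expert` are hypothetical. The sketch assumes at most one link per ordered concept pair and compares labels as exact strings after case folding; it sorts a learner’s links into those that match the expert map, those that connect the same concepts under a different label, expert links that are missing, and extra links.

```python
from typing import Dict, Iterable, List, NamedTuple, Tuple

Pair = Tuple[str, str]


class Proposition(NamedTuple):
    """One concept-map proposition: source concept --linking phrase--> target concept."""
    source: str
    link: str
    target: str


def _index(props: Iterable[Proposition]) -> Dict[Pair, str]:
    """Map each ordered (source, target) pair to its case-folded linking phrase.

    Assumption: at most one link per ordered concept pair; a later duplicate overwrites.
    """
    return {
        (p.source.strip().casefold(), p.target.strip().casefold()): p.link.strip().casefold()
        for p in props
    }


def compare_to_expert(learner: Iterable[Proposition],
                      expert: Iterable[Proposition]) -> Dict[str, List[Pair]]:
    """Sort a learner's links into correct / relabel / missing / extra versus an expert map."""
    mine, ref = _index(learner), _index(expert)
    shared = mine.keys() & ref.keys()  # concept pairs linked in both maps
    return {
        "correct": sorted(p for p in shared if mine[p] == ref[p]),
        "relabel": sorted(p for p in shared if mine[p] != ref[p]),
        "missing": sorted(ref.keys() - mine.keys()),
        "extra": sorted(mine.keys() - ref.keys()),
    }


# Hypothetical cell-biology example (not taken from the study materials).
expert_map = [
    Proposition("cell", "is bounded by", "cell membrane"),
    Proposition("nucleus", "contains", "DNA"),
    Proposition("ribosome", "synthesizes", "protein"),
]
learner_map = [
    Proposition("Cell", "has", "cell membrane"),
    Proposition("nucleus", "contains", "DNA"),
    Proposition("ribosome", "synthesizes", "DNA"),  # content error: a misconception
]
feedback = compare_to_expert(learner_map, expert_map)
```

A `relabel` entry is not necessarily an error, since a learner’s linking phrase may be a valid synonym of the expert’s; string comparison of this kind can only pre-sort links for a human rater, who still has to judge them and point out misconceptions.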

2.3. Concept Map Scoring

2.4. Further Measures and Operationalizations

2.4.1. Measures Prior to Training Phase (Week 1)

2.4.2. Measures after Training Phase/Treatment Check (Week 3)

2.4.3. Measures Prior to Learning Phase (Week 4)

2.4.4. Measures during Learning Phase (Week 4)

2.4.5. Measures after Learning Phase/Learning Outcome (Weeks 5 and 6)

2.5. Materials and Procedure

2.6. Statistical Analyses

3. Results

3.1. Pre-Analyses of Baseline Differences (Measures Prior to Training Phase, Week 1)

3.2. Treatment Check (Measure after Training Phase, Week 3)

3.3. Concept Mapping-Related Self-Efficacy and Error Detection Task (Measures Prior to Learning Phase, Week 4)

3.4. Recall, Organization, and Elaboration Processes (Measures during Learning Phase, Week 4)

3.5. Cognitive Load (Measures during Learning Phase, Week 4)

3.6. Metacognitive Prediction (Measures during Learning Phase, Week 4)

3.7. Concept Map Quality, Structural, Declarative, and Conceptual Knowledge (Measures after Learning Phase/Learning Outcome in Test Phase, Weeks 5 and 6)

4. Discussion

4.1. Pre-Analyses of Baseline Differences (Measures Prior to Training Phase, Week 1)

4.2. Treatment Check (Measure after Training Phase, Week 3)

4.3. Concept Mapping-Related Self-Efficacy and Error Detection Task (Measures Prior to Learning Phase, Week 4)

4.4. Recall, Organization, and Elaboration Processes (Measures during Learning Phase, Week 4)

4.5. Cognitive Load (Measures during Learning Phase, Week 4)

4.6. Facilitated Acquirement of Knowledge and Skills (Measures during as Well as after Learning Phase, Weeks 4, 5, and 6)

4.6.1. Concept Map Quality

4.6.2. Structural Knowledge

4.6.3. Declarative Knowledge

4.6.4. Conceptual Knowledge

4.6.5. Metacognitive Prediction

4.7. Summarizing Discussion and Implications

4.8. Limitations

- (1) We assume that our student sample was highly homogeneous, with a high degree of learning experience and academic performance. Our participants may therefore have found it relatively easy to assimilate the CM learning strategy into their existing repertoire of learning strategies. This homogeneity, together with learning material comparable to the material they were already familiar with, could have attenuated some differences between the three training conditions on the dependent variables. Samples of less experienced learners might therefore reveal more distinct group differences. Moreover, our total sample of N = 73 was simply too small to support conclusions about external validity, so our results should be interpreted with caution.

- (2) Furthermore, with N = 73 participants, our study was not large enough to yield sufficiently large group sizes. Accordingly, the groups, to which participants had assigned themselves, could not be matched (parallelized) to our complete satisfaction, even though no initial differences were found. This is directly reflected in the statistical power of our analyses, which did not exceed about 0.30 for correlational tests and about 0.45 for ANOVAs. To detect the expected small to medium-sized effects with a power of 0.80 to 0.90, our sample would have needed approximately N = 150 participants. Desirable as such optimized test conditions would have been, it is difficult to see how the required intensive supervision of so many participants could have been ensured over a six-week study period, given our limited personnel and financial resources.

- (3) The instrument used to assess participants’ ability to detect errors in a prescribed concept map (see Section 2.4.3 and Section 3.3) was apparently too easy for all three groups. A more complex test, presenting formal as well as content-related errors at different levels of difficulty, might have yielded clearer results.
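Limitation (2) reports power values and a target sample size without showing the calculation. As an illustration only, the sketch below computes power for a two-sided test of a Pearson correlation (Fisher’s z approximation) and for a one-way ANOVA with k = 3 groups, using Cohen’s f with noncentrality λ = f²N. The effect sizes plugged in (f = 0.25, r = 0.3) are assumptions, not values reported by the authors, and all function names are hypothetical. Only the Python standard library is used, so the noncentral F distribution is evaluated as a Poisson mixture of regularized incomplete beta functions.

```python
import math
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution


def correlation_power(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate two-sided power for H0: rho = 0 using Fisher's z transform."""
    z_crit = _Z.inv_cdf(1 - alpha / 2)
    shift = math.atanh(r) * math.sqrt(n - 3)
    return _Z.cdf(shift - z_crit) + _Z.cdf(-shift - z_crit)


def correlation_n_required(r: float, power: float = 0.80, alpha: float = 0.05) -> int:
    """Approximate n needed to reach the target power (Fisher-z formula)."""
    z_sum = _Z.inv_cdf(1 - alpha / 2) + _Z.inv_cdf(power)
    return math.ceil((z_sum / math.atanh(r)) ** 2 + 3)


def _beta_cf(a: float, b: float, x: float) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny, eps = 1e-300, 1e-14
    c, d = 1.0, 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, 1000):
        # even and odd coefficients of the continued fraction for step m
        for aa in (m * (b - m) * x / ((a + 2 * m - 1) * (a + 2 * m)),
                   -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def ncf_cdf(x: float, df1: float, df2: float, nc: float) -> float:
    """CDF of the (possibly noncentral) F distribution as a Poisson mixture of betas."""
    if x <= 0.0:
        return 0.0
    y = df1 * x / (df1 * x + df2)
    if nc == 0.0:
        return reg_inc_beta(df1 / 2, df2 / 2, y)
    mu = nc / 2
    total = 0.0
    for j in range(10_000):
        weight = math.exp(-mu + j * math.log(mu) - math.lgamma(j + 1))
        total += weight * reg_inc_beta(df1 / 2 + j, df2 / 2, y)
        if j > mu and weight < 1e-16:
            break
    return total


def f_critical(df1: float, df2: float, alpha: float = 0.05) -> float:
    """Upper-alpha quantile of the central F distribution, found by bisection."""
    lo, hi = 0.0, 1.0
    while ncf_cdf(hi, df1, df2, 0.0) < 1 - alpha:
        hi *= 2
    for _ in range(100):
        mid = (lo + hi) / 2
        if ncf_cdf(mid, df1, df2, 0.0) < 1 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def anova_power(f: float, n_total: int, k: int = 3, alpha: float = 0.05) -> float:
    """Power of a one-way ANOVA with k groups, Cohen's f, noncentrality f**2 * N."""
    df1, df2 = k - 1, n_total - k
    crit = f_critical(df1, df2, alpha)
    return 1.0 - ncf_cdf(crit, df1, df2, f * f * n_total)
```

Under these assumptions, `anova_power(0.25, 73)` comes out at roughly 0.4 to 0.45 and `anova_power(0.25, 159)` close to 0.80, which is of the same order as the figures stated in limitation (2). For actual study planning, an established tool such as G*Power or statsmodels’ `FTestAnovaPower` is the safer choice.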

4.9. Prospects for Future Research

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Dahnke, H.; Fuhrmann, A.; Steinhagen, K. Entwicklung und Einsatz von Computersimulation und Concept Mapping als Erhebungsinstrumente bei Vorstellungen zur Wärmephysik eines Hauses [Development and use of computer simulation and concept mapping as survey instruments for ideas on the thermal physics of a house]. Z. Didakt. Nat. 1998, 4, 67–80.

- Hegarty-Hazel, E.; Prosser, M. Relationship between students’ conceptual knowledge and study strategies—Part 1: Student learning in physics. Int. J. Sci. Educ. 1991, 13, 303–312.

- Perkins, D.N.; Grotzer, T.A. Dimensions of causal understanding: The role of complex causal models in students’ understanding of science. Stud. Sci. Educ. 2005, 41, 117–165.

- Peuckert, J.; Fischler, H. Concept Maps als Diagnose- und Auswertungsinstrument in einer Studie zur Stabilität und Ausprägung von Schülervorstellungen [Concept Maps as a diagnostic and evaluation tool in a study on the stability and expression of student perceptions]. In Concept Mapping in Fachdidaktischen Forschungsprojekten der Physik und Chemie [Concept Mapping in Didactic Research Projects in Physics and Chemistry]; Fischler, H., Peuckert, J., Eds.; Studien zum Physiklernen; Logos: Berlin, Germany, 2000; Volume 1, pp. 91–116.

- Grüß-Niehaus, T. Zum Verständnis des Löslichkeitskonzeptes im Chemieunterricht—Der Effekt von Methoden Progressiver und Kollaborativer Reflexion [To Understand the Concept of Solubility in Chemistry Lessons—The Effect of Methods of Progressive and Collaborative Reflection]; Logos: Berlin, Germany, 2010.

- Markow, P.G.; Lonning, R.A. Usefulness of concept maps in college chemistry laboratories: Students’ perceptions and effects on achievement. J. Res. Sci. Teach. 1998, 35, 1015–1029.

- Stracke, I. Einsatz Computerbasierter Concept Maps zur Wissensdiagnose in der Chemie: Empirische Untersuchungen am Beispiel des Chemischen Gleichgewichts [Use of Computer-Based Concept Maps for Knowledge Diagnosis in Chemistry: Empirical Studies Using the Example of Chemical Equilibrium]; Waxmann: Münster, Germany, 2004.

- Assaraf, O.B.-Z.; Dodick, J.; Tripto, J. High school students’ understanding of the human body system. Res. Sci. Educ. 2013, 43, 33–56.

- Bramwell-Lalor, S.; Rainford, M. The effects of using concept mapping for improving advanced level biology students’ lower- and higher-order cognitive skills. Int. J. Sci. Educ. 2014, 36, 839–864.

- Feigenspan, K.; Rayder, S. Systeme und systemisches Denken in der Biologie und im Biologieunterricht [Systems and systemic thinking in biology and biology teaching]. In Systemisches Denken im Fachunterricht [Systemic Thinking in School Subjects]; Arndt, H., Ed.; FAU Lehren und Lernen; FAU University Press: Erlangen, Germany, 2017; pp. 139–176.

- Hegarty-Hazel, E.; Prosser, M. Relationship between students’ conceptual knowledge and study strategies—Part 2: Student learning in biology. Int. J. Sci. Educ. 1991, 13, 421–429.

- Heinze-Fry, J.A.; Novak, J.D. Concept mapping brings long-term movement toward meaningful learning. Sci. Educ. 1990, 74, 461–472.

- Kinchin, I.M.; De-Leij, F.A.A.M.; Hay, D.B. The evolution of a collaborative concept mapping activity for undergraduate microbiology students. J. Furth. High. Educ. 2005, 29, 1–14.

- Schmid, R.F.; Telaro, G. Concept mapping as an instructional strategy for high school biology. J. Educ. Res. 1990, 84, 78–85.

- Tripto, J.; Assaraf, O.B.; Snapir, Z.; Amit, M. How is the body’s systemic nature manifested amongst high school biology students? Instr. Sci. 2017, 45, 73–98.

- Sadava, D.E.; Hillis, D.M.; Heller, H.C. Purves Biologie [Purves Biology], 10th ed.; Springer: Berlin/Heidelberg, Germany, 2019.

- Urry, L.A.; Cain, M.L.; Wasserman, S.A.; Minorsky, P.V.; Reece, J.B. Campbell Biologie [Campbell Biology], 11th ed.; Pearson: Hallbergmoos, Germany, 2019.

- Großschedl, J.; Harms, U. Effekte metakognitiver Prompts auf den Wissenserwerb beim Concept Mapping und Notizen Erstellen [Effects of metacognitive prompts on knowledge acquisition in concept mapping and note taking]. Z. Didakt. Nat. 2013, 19, 375–395.

- Dreyfus, A.; Jungwirth, E. The pupil and the living cell: A taxonomy of dysfunctional ideas about an abstract idea. J. Biol. Educ. 1989, 23, 49–55.

- Flores, F.; Tovar, M.E.; Gallegos, L. Representation of the cell and its processes in high school students: An integrated view. Int. J. Sci. Educ. 2003, 25, 269–286.

- Anderman, E.M.; Sinatra, G.M.; Gray, D.L. The challenges of teaching and learning about science in the twenty-first century: Exploring the abilities and constraints of adolescent learners. Stud. Sci. Educ. 2012, 48, 89–117.

- Brandstädter, K.; Harms, I.; Großschedl, J. Assessing system thinking through different concept-mapping practices. Int. J. Sci. Educ. 2012, 34, 2147–2170.

- Dekker, S. Drift into Failure: From Hunting Broken Components to Understanding Complex Systems; CRC Press: London, UK, 2011.

- Grotzer, T.A.; Solis, S.L.; Tutwiler, M.S.; Cuzzolino, M.P. A study of students’ reasoning about probabilistic causality: Implications for understanding complex systems and for instructional design. Instr. Sci. 2017, 45, 25–52.

- Hashem, K.; Mioduser, D. Learning by Modeling (LbM): Understanding complex systems by articulating structures, behaviors, and functions. Int. J. Adv. Comput. Sci. Appl. 2013, 4, 80–86.

- Hmelo-Silver, C.E.; Azevedo, R. Understanding complex systems: Some core challenges. J. Learn. Sci. 2006, 15, 53–61.

- Jacobson, M.J.; Wilensky, U. Complex systems in education: Scientific and educational importance and implications for the learning sciences. J. Learn. Sci. 2006, 15, 11–34.

- Plate, R. Assessing individuals’ understanding of nonlinear causal structures in complex systems. Syst. Dyn. Rev. 2010, 26, 19–33.

- Proctor, R.W.; van Zandt, T. Human Factors in Simple and Complex Systems, 3rd ed.; CRC Press Taylor & Francis Group: Boca Raton, FL, USA, 2018.

- Raia, F. Students’ understanding of complex dynamic systems. J. Geosci. Educ. 2005, 53, 297–308.

- Yoon, S.A.; Anderson, E.; Koehler-Yom, J.; Evans, C.; Park, M.; Sheldon, J.; Schoenfeld, I.; Wendel, D.; Scheintaub, H.; Klopfer, E. Teaching about complex systems is no simple matter: Building effective professional development for computer-supported complex systems instruction. Instr. Sci. 2017, 45, 99–121.

- Friedrich, H.F.; Mandl, H. Lernstrategien: Zur Strukturierung des Forschungsfeldes [Learning strategies: On the structuring of the research field]. In Handbuch Lernstrategien [Handbook of Learning Strategies]; Mandl, H., Friedrich, H.F., Eds.; Hogrefe: Göttingen, Germany, 2006; pp. 1–23.

- Arndt, H. (Ed.) Systemisches Denken im Fachunterricht [Systemic Thinking in School Subjects]; FAU University Press: Erlangen, Germany, 2017; p. 369.

- Cañas, A.; Coffey, J.; Carnot, M.J.; Feltovich, P.; Hoffman, R.R.; Feltovich, J.; Novak, J.D. A Summary of Literature Pertaining to the Use of Concept Mapping Techniques and Technologies for Education and Performance Support. Available online: https://www.ihmc.us/users/acanas/Publications/ConceptMapLitReview/IHMC%20Literature%20Review%20on%20Concept%20Mapping.pdf (accessed on 11 May 2021).

- Großschedl, J.; Harms, U. Metakognition—Dirigentin des Gedankenkonzerts [Metacognition—Conductor of the concert of thoughts]. In Biologie Methodik [Biology Methodology], 2nd ed.; Spörhase-Eichmann, U., Ruppert, W., Eds.; Cornelsen Scriptor: Berlin, Germany, 2014; pp. 48–52.

- Horton, P.B.; McConney, A.A.; Gallo, M.; Woods, A.L.; Senn, G.J.; Hamelin, D. An investigation of the effectiveness of concept mapping as an instructional tool. Sci. Educ. 1993, 77, 95–111.

- Novak, J.D. The pursuit of a dream: Education can be improved. In Teaching Science for Understanding; Educational Psychology Series; Mintzes, J.J., Wandersee, J.H., Novak, J.D., Eds.; Elsevier Academic Press: San Diego, CA, USA, 2005; pp. 3–28.

- Schroeder, N.L.; Nesbit, J.C.; Anguiano, C.J.; Adesope, O.O. Studying and constructing concept maps: A meta-analysis. Educ. Psychol. Rev. 2018, 30, 431–455.

- Cañas, A.; Carff, R.; Hill, G.; Carvalho, M.; Arguedas, M.; Eskridge, T.C.; Lott, J.; Carvajal, R. Concept maps: Integrating knowledge and information visualization. In Knowledge and Information Visualization: Searching for Synergies; Tergan, S.-O., Keller, T., Eds.; Springer: Berlin, Germany, 2005; pp. 205–219.

- Feller, W.; Spörhase, U. Innere Differenzierung durch Experten-Concept-Maps [Internal differentiation through expert concept maps]. In Biologie Methodik [Biology Methodology], 4th ed.; Spörhase-Eichmann, U., Ruppert, W., Eds.; Cornelsen: Berlin, Germany, 2018; pp. 77–81.

- Großschedl, J.; Harms, U. Metakognition—Denken aus der Vogelperspektive [Metacognition—Thinking from a bird’s eye view]. In Biologie Methodik [Biology Methodology], 4th ed.; Spörhase-Eichmann, U., Ruppert, W., Eds.; Cornelsen: Berlin, Germany, 2018; pp. 48–52.

- Haugwitz, M.; Nesbit, J.C.; Sandmann, A. Cognitive ability and the instructional efficacy of collaborative concept mapping. Learn. Individ. Diff. 2010, 20, 536–543.

- Mehren, R.; Rempfler, A.; Ulrich-Riedhammer, E.M. Die Anbahnung von Systemkompetenz im Geographieunterricht [The initiation of system competence in geography lessons]. In Systemisches Denken im Fachunterricht [Systemic Thinking in School Subjects]; Arndt, H., Ed.; FAU Lehren und Lernen; FAU University Press: Erlangen, Germany, 2017.

- Michalak, M.; Müller, B. Durch Sprache zum systemischen Denken [Through language to systemic thinking]. In Systemisches Denken im Fachunterricht [Systemic Thinking in School Subjects]; Arndt, H., Ed.; FAU Lehren und Lernen; FAU University Press: Erlangen, Germany, 2017; pp. 111–138.

- Novak, J.D.; Cañas, A. The origins of the concept mapping tool and the continuing evolution of the tool. Inf. Vis. 2006, 5, 175–184.

- Novak, J.D.; Cañas, A.J. Theoretical origins of concept maps, how to construct them, and uses in education. Reflecting Educ. 2007, 3, 29–42.

- Novak, J.D.; Cañas, A. The Theory Underlying Concept Maps and How to Construct Them; Florida Institute for Human and Machine Cognition: Pensacola, FL, USA, 2008; Available online: http://cmap.ihmc.us/docs/pdf/TheoryUnderlyingConceptMaps.pdf (accessed on 11 April 2021).

- Novak, J.D.; Gowin, D.B. Learning How to Learn; Cambridge University Press: New York, NY, USA, 1984.

- van Zele, E.; Lenaerts, J.; Wieme, W. Improving the usefulness of concept maps as a research tool for science education. Int. J. Sci. Educ. 2004, 26, 1043–1064.

- Cañas, A.J.; Novak, J.D. Concept mapping using CmapTools to enhance meaningful learning. In Knowledge Cartography, 2nd ed.; Advanced Information and Knowledge Processing; Okada, A., Ed.; Springer: London, UK, 2014; pp. 23–45.

- Cronin, P.J.; Dekkers, J.; Dunn, J.G. A procedure for using and evaluating concept maps. Res. Sci. Educ. 1982, 12, 17–24.

- Novak, J.D. Concept mapping: A useful tool for science education. J. Res. Sci. Teach. 1990, 27, 937–949.

- Novak, J.D. Learning, Creating, and Using Knowledge: Concept Maps as Facilitative Tools in Schools and Corporations, 2nd ed.; Routledge: New York, NY, USA, 2010.

- Marzetta, K.; Mason, H.; Wee, B. ‘Sometimes They Are Fun and Sometimes They Are Not’: Concept Mapping with English Language Acquisition (ELA) and Gifted/Talented (GT) Elementary Students Learning Science and Sustainability. Educ. Sci. 2018, 8, 13.

- Ullah, A.M.M.S. Fundamental Issues of Concept Mapping Relevant to Discipline-Based Education: A Perspective of Manufacturing Engineering. Educ. Sci. 2019, 9, 228.

- Beier, M.; Brose, B.; Gemballa, S.; Heinze, J.; Knerich, H.; Kronberg, I.; Küttner, R.; Markl, J.S.; Michiels, N.; Nolte, M.; et al. Markl Biologie: Oberstufe [Markl Biology: Upper School]; Klett: Stuttgart, Germany, 2018.

- Rost, F. Lern- und Arbeitstechniken für das Studium [Learning and Working Techniques for University Studies]; Springer Fachmedien: Wiesbaden, Germany, 2018.

- Kinchin, I.M. If concept mapping is so helpful to learning biology, why aren’t we all doing it? Int. J. Sci. Educ. 2001, 23, 1257–1269.

- Machado, C.T.; Carvalho, A.A. Concept mapping: Benefits and challenges in higher education. J. Contin. High. Educ. 2020, 68, 38–53.

- Hilbert, T.; Renkl, A. Concept mapping as a follow-up strategy to learning from texts: What characterizes good and poor mappers? Instr. Sci. 2008, 36, 53–73.

- Tergan, S.-O. Digital concept maps for managing knowledge and information. In Knowledge and Information Visualization; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Pandu Rangan, C., Steffen, B., et al., Eds.; Lecture Notes in Computer Science; Springer: Heidelberg, Germany, 2005; Volume 3426, pp. 185–204.

- Roessger, K.M.; Daley, B.J.; Hafez, D.A. Effects of teaching concept mapping using practice, feedback, and relational framing. Learn. Instr. 2018, 54, 11–21.

- Ausubel, D.P. The Psychology of Meaningful Verbal Learning; Grune & Stratton: New York, NY, USA, 1963.

- Ausubel, D.P. The Acquisition and Retention of Knowledge: A Cognitive View; Springer: Dordrecht, The Netherlands, 2000.

- Novak, J.D. A Theory of Education; Cornell University Press: Ithaca, NY, USA, 1977.

- Hilbert, T.; Nückles, M.; Renkl, A.; Minarik, C.; Reich, A.; Ruhe, K. Concept Mapping zum Lernen aus Texten [Concept mapping for learning from texts]. Z. Pädagogische Psychol. 2008, 22, 119–125.

- Nesbit, J.C.; Adesope, O.O. Learning with concept and knowledge maps: A meta-analysis. Rev. Educ. Res. 2006, 76, 413–448.

- Renkl, A.; Nückles, M. Lernstrategien der externen Visualisierung [Learning strategies of external visualization]. In Handbuch Lernstrategien [Handbook of Learning Strategies]; Mandl, H., Friedrich, H.F., Eds.; Hogrefe: Göttingen, Germany, 2006; pp. 135–147.

- Sumfleth, E.; Neuroth, J.; Leutner, D. Concept Mapping—Eine Lernstrategie muss man lernen [Concept Mapping—Learning Strategy is Something You Must Learn]. CHEMKON 2010, 17, 66–70.

- Weinstein, C.E.; Mayer, R.E. The teaching of learning strategies. In Handbook of Research on Teaching, 3rd ed.; Wittrock, M.C., Ed.; Macmillan: New York, NY, USA, 1986; pp. 315–327.

- Cox, R. Representation construction, externalised cognition and individual differences. Learn. Instr. 1999, 9, 343–363.

- VanLehn, K.; Jones, R.M.; Chi, M.T.H. A model of the self-explanation effect. J. Learn. Sci. 1992, 2, 1–59.

- Hilbert, T.; Nückles, M.; Matzel, S. Concept mapping for learning from text: Evidence for a worked-out-map-effect. In International Perspectives in the Learning Sciences: Cre8ing a Learning World, Proceedings of the Eighth International Conference on International Conference for the Learning Sciences—ICLS 2008, Utrecht, The Netherlands, 24–28 June 2008; Kanselaar, G., Jonker, V., Kirschner, P.A., Prins, F.J., Eds.; International Society of the Learning Sciences: Utrecht, The Netherlands, 2008; pp. 358–365.

- Baddeley, A. Working memory: Theories, models, and controversies. Annu. Rev. Psychol. 2012, 63, 1–29.

- Hasselhorn, M.; Gold, A. Pädagogische Psychologie: Erfolgreiches Lernen und Lehren [Educational Psychology: Successful Learning and Teaching], 4th ed.; Kohlhammer: Stuttgart, Germany, 2017.

- Cadorin, L.; Bagnasco, A.; Rocco, G.; Sasso, L. An integrative review of the characteristics of meaningful learning in healthcare professionals to enlighten educational practices in health care. Nurs. Open 2014, 1, 3–14.

- Hattie, J. Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement; Routledge: Abingdon, UK, 2008; p. 378.

- Haugwitz, M. Kontextorientiertes Lernen und Concept Mapping im Fach Biologie: Eine Experimentelle Untersuchung zum Einfluss auf Interesse und Leistung unter Berücksichtigung von Moderationseffekten Individueller Voraussetzungen beim Kooperativen Lernen [Context-oriented Learning and Concept Mapping in Biology. An Experimental Investigation of the Influence on Interest and Performance, Taking into Account Moderation Effects of Individual Prerequisites in Cooperative Learning]. Ph.D. Thesis, University of Duisburg-Essen, Duisburg, Germany, 2009.

- Gunstone, R.F.; Mitchell, I.J. Metacognition and conceptual change. In Teaching Science for Understanding; Educational Psychology Series; Mintzes, J.J., Wandersee, J.H., Novak, J.D., Eds.; Elsevier Academic Press: San Diego, CA, USA, 2005; pp. 133–163.

- Ruiz-Primo, M.A. Examining concept maps as an assessment tool. In Concept Maps: Theory, Methodology, Technology; Cañas, A., Novak, J.D., González, F.M., Eds.; Universidad Pública de Navarra: Pamplona, Spain, 2004; pp. 555–563.

- McClelland, J.L.; Rumelhart, D.E.; Hinton, G.E. The appeal of parallel distributed processing. In Explorations in the Microstructure of Cognition; Rumelhart, D.E., McClelland, J.L., the PDP Research Group, Eds.; Parallel distributed processing; Bradford: Cambridge, MA, USA, 1986; pp. 3–44.

- Thurn, C.M.; Hänger, B.; Kokkonen, T. Concept mapping in magnetism and electrostatics: Core concepts and development over time. Educ. Sci. 2020, 10, 129.

- Amadieu, F.; Van Gog, T.; Paas, F.; Tricot, A.; Mariné, C. Effects of prior knowledge and concept-map structure on disorientation, cognitive load, and learning. Learn. Instr. 2009, 19, 376–386.

- Hailikari, T.; Katajavuori, N.; Lindblom-Ylanne, S. The relevance of prior knowledge in learning and instructional design. Am. J. Pharm. Educ. 2008, 72, 113.

- Krause, U.-M.; Stark, R. Vorwissen aktivieren [Activate prior knowledge]. In Handbuch Lernstrategien [Handbook of Learning Strategies]; Mandl, H., Friedrich, H.F., Eds.; Hogrefe: Göttingen, Germany, 2006; pp. 38–49.

- Rouet, J.-F. Managing cognitive load during document-based learning. Learn. Instr. 2009, 19, 445–450.

- Sedumedi, T.D.T. Prior knowledge in chemistry instruction: Some insights from students’ learning of ACIDS/BASES. Psycho-Educ. Res. Rev. 2013, 2, 34–53.

- Strohner, H. Kognitive Systeme: Eine Einführung in die Kognitionswissenschaft [Cognitive Systems: An Introduction to Cognitive Science]; VS Verlag für Sozialwissenschaften: Wiesbaden, Germany, 1995.

- Friedrich, H.F. Vermittlung von reduktiven Textverarbeitungsstrategien durch Selbstinstruktion [Teaching reductive text processing strategies through self-instruction]. In Lern- und Denkstrategien: Analyse und Intervention [Learning and Thinking Strategies: Analysis and Intervention]; Mandl, H., Friedrich, H.F., Eds.; Hogrefe: Göttingen, Germany, 1992; pp. 193–212.

- Kalyuga, S. Expertise reversal effect and its implications for learner-tailored instruction. Educ. Psychol. Rev. 2007, 19, 509–539.

- Kalyuga, S.; Ayres, P.; Chandler, P.; Sweller, J. The expertise reversal effect. Educ. Psychol. 2003, 38, 23–31.

- Cronbach, L.J. The two disciplines of scientific psychology. Am. Psychol. 1957, 12, 671–684.

- Cronbach, L.J. Beyond the two disciplines of scientific psychology. Am. Psychol. 1975, 30, 116–127.

- Sweller, J. Implications of cognitive load theory for multimedia learning. In The Cambridge Handbook of Multimedia Learning; Mayer, R.E., Ed.; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2005; pp. 19–30.

- Debue, N.; van de Leemput, C. What does germane load mean? An empirical contribution to the cognitive load theory. Front. Psych. 2014, 5, 1099.

- Klepsch, M.; Schmitz, F.; Seufert, T. Development and validation of two instruments measuring intrinsic, extraneous, and germane cognitive load. Front. Psych. 2017, 8, 1–18.

- Sweller, J.; van Merrienboer, J.; Paas, F. Cognitive architecture and instructional design. Educ. Psychol. Rev. 1998, 10, 251–296.

- Bannert, M. Effekte metakognitiver Lernhilfen auf den Wissenserwerb in vernetzten Lernumgebungen [Effects of metacognitive learning aids on knowledge acquisition in networked learning environments]. Z. Pädagogische Psychol. 2003, 17, 13–25.

- Jüngst, K.L.; Strittmatter, P. Wissensstrukturdarstellung: Theoretische Ansätze und praktische Relevanz [Knowledge structure representation: Theoretical approaches and practical relevance]. Unterrichtswissenschaft 1995, 23, 194–207.

- Mintzes, J.J.; Cañas, A.; Coffey, J.; Gorman, J.; Gurley, L.; Hoffman, R.; McGuire, S.Y.; Miller, N.; Moon, B.; Trifone, J.; et al. Comment on “Retrieval practice produces more learning than elaborative studying with concept mapping”. Science 2011, 334, 453.

- Schwendimann, B. Concept Mapping. In Encyclopedia of Science Education; Gunstone, R., Ed.; Springer: Dordrecht, The Netherlands, 2015; pp. 198–202.

- Jonassen, D.H.; Beissner, K.; Yacci, M. Structural Knowledge: Techniques for Representing, Conveying, and Acquiring Structural Knowledge; Lawrence Erlbaum: Hillsdale, NJ, USA, 1993.

- Anderson, J.R. Language, Memory, and Thought; Lawrence Erlbaum: Hillsdale, NJ, USA, 1976.

- McCormick, R. Conceptual and procedural knowledge. Int. J. Technol. Des. Educ. 1997, 7, 141–159.

- Renkl, A. Wissenserwerb [Knowledge Acquisition]. In Pädagogische Psychologie [Educational Psychology], 3rd ed.; Wild, E., Möller, J., Eds.; Springer: Berlin, Germany, 2020; pp. 3–24.

- Moors, A.; de Houwer, J. Automaticity: A theoretical and conceptual analysis. Psychol. Bull. 2006, 132, 297–326.

- Mietzel, G. Pädagogische Psychologie des Lernens und Lehrens [Educational Psychology of Learning and Teaching], 9th ed.; Hogrefe: Göttingen, Germany, 2017.

- Haugwitz, M.; Sandmann, A. Kooperatives Concept Mapping in Biologie: Effekte auf den Wissenserwerb und die Behaltensleistung [Cooperative concept mapping in biology: Effects on knowledge acquisition and retention]. Z. Didakt. Nat. 2009, 15, 89–107.

- Hilbert, T.S.; Renkl, A. Individual differences in concept mapping when learning from texts. In Proceedings of the 27th Annual Meeting of the Cognitive Science Society, Stresa, Italy, 21–23 July 2005; Bara, B.G., Barsalou, L., Bucciarelli, M., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2005; pp. 947–952.

- Jegede, O.J.; Alaiyemola, F.F.; Okebukola, P.A.O. The effect of concept mapping on students’ anxiety and achievement in biology. J. Res. Sci. Teach. 1990, 27, 951–960.

- McCagg, E.C.; Dansereau, D.F. A convergent paradigm for examining knowledge mapping as a learning strategy. J. Educ. Res. 1991, 84, 317–324.

- Romero, C.; Cazorla, M.; Buzón, O. Meaningful learning using concept maps as a learning strategy. J. Technol. Sci. Educ. 2017, 7, 313.

- Morse, D.; Jutras, F. Implementing concept-based learning in a large undergraduate classroom. CBE Life Sci. Educ. 2008, 7, 243–253.

- Ajaja, O.P. Which way do we go in biology teaching? Lecturing, concept mapping, cooperative learning or learning cycle? Electron. J. Sci. Educ. 2013, 17, 1–37.

- Chiu, C.-H. Evaluating system-based strategies for managing conflict in collaborative concept mapping. J. Comput. Assist. Learn. 2004, 20, 124–132.

- Chularut, P.; DeBacker, T.K. The influence of concept mapping on achievement, self-regulation, and self-efficacy in students of English as a second language. Contemp. Educ. Psychol. 2004, 29, 248–263.

- Hay, D.; Kinchin, I.; Lygo-Baker, S. Making learning visible: The role of concept mapping in higher education. Stud. High. Educ. 2008, 33, 295–311.

- Hilbert, T.; Renkl, A. Learning how to use a computer-based concept-mapping tool: Self-explaining examples helps. Comput. Hum. Behav. 2009, 25, 267–274.

- Quinn, H.J.; Mintzes, J.J.; Laws, R.A. Successive concept mapping. J. Coll. Sci. Teach. 2003, 33, 12–16.

- Pearsall, N.R.; Skipper, J.E.J.; Mintzes, J.J. Knowledge restructuring in the life sciences: A longitudinal study of conceptual change in biology. Sci. Educ. 1997, 81, 193–215.

- van de Pol, J.; Volman, M.; Beishuizen, J. Scaffolding in teacher–student interaction: A decade of research. Educ. Psychol. Rev. 2010, 22, 271–296.

- Chang, K.E.; Sung, Y.-T.; Chen, I.-D. The effect of concept mapping to enhance text comprehension and summarization. J. Exp. Educ. 2002, 71, 5–23.

- Chang, K.E.; Sung, Y.T.; Chen, S.F. Learning through computer-based concept mapping with scaffolding aid. J. Comput. Assist. Learn. 2001, 17, 21–33.

- Gurlitt, J.; Renkl, A. Prior knowledge activation: How different concept mapping tasks lead to substantial differences in cognitive processes, learning outcomes, and perceived self-efficacy. Instr. Sci. 2010, 38, 417–433.

- Kapuza, A. How concept maps with and without a list of concepts differ: The case of statistics. Educ. Sci. 2020, 10, 91.

- Ruiz-Primo, M.A.; Schultz, S.E.; Li, M.; Shavelson, R.J. Comparison of the reliability and validity of scores from two concept-mapping techniques. J. Res. Sci. Teach. 2001, 38, 260–278.

- Ruiz-Primo, M.A.; Shavelson, R.J.; Li, M.; Schultz, S.E. On the validity of cognitive interpretations of scores from alternative concept-mapping techniques. Educ. Assess. 2001, 7, 99–141.

- Ajaja, O.P. Concept mapping as a study skill. Int. J. Educ. Sci. 2011, 3, 49–57.

- den Elzen-Rump, V.; Leutner, D. Naturwissenschaftliche Sachtexte verstehen—Ein computerbasiertes Trainingsprogramm für Schüler der 10. Jahrgangsstufe zum selbstregulierten Lernen mit einer Mapping-Strategie [Understanding scientific texts—A computer-based training program for 10th grade students for self-regulated learning with a mapping strategy]. In Selbstregulation Erfolgreich Fördern [Promoting Self-Regulation Successfully]; Landmann, M., Schmitz, B., Eds.; Pädagogische Psychologie; Kohlhammer: Stuttgart, Germany, 2007; pp. 251–268.

- Nückles, M.; Hübner, S.; Dümer, S.; Renkl, A. Expertise reversal effects in writing-to-learn. Instr. Sci. 2010, 38, 237–258.

- Bangert-Drowns, R.L.; Kulik, J.A.; Kulik, C.-L.C. Effects of Frequent Classroom Testing. J. Educ. Res. 1991, 85, 89–99.

- Heubusch, J.D.; Lloyd, J.W. Corrective feedback in oral reading. J. Behav. Educ. 1998, 8, 63–79.

- Shute, V.J. Focus on formative feedback. Rev. Educ. Res. 2008, 78, 153–189.

- Hauser, S.; Nückles, M.; Renkl, A. Supporting concept mapping for learning from text. In The International Conference of the Learning Sciences: Indiana University 2006; Proceedings of the ICLS 2006, Bloomington, IN, USA, 27 June–1 July 2006; Barab, S.A., Hay, K.E., Hickey, D.T., Eds.; International Society of the Learning Sciences: Bloomington, IN, USA, 2006; Volume 1, pp. 243–249.

- Serbessa, D.D. Tension between traditional and modern teaching-learning approaches in Ethiopian primary schools. J. Int. Coop. Educ. 2006, 9, 123–140.

- Tsai, C.-C. Nested epistemologies: Science teachers’ beliefs of teaching, learning and science. Int. J. Sci. Educ. 2002, 24, 771–783.

- Reader, W.; Hammond, N. Computer-based tools to support learning from hypertext: Concept mapping tools and beyond. Comput. Educ. 1994, 22, 99–106.

- Besterfield-Sacre, M.; Gerchak, J.; Lyons, M.; Shuman, L.J.; Wolfe, H. Scoring concept maps: An integrated rubric for assessing engineering education. J. Eng. Educ. 2004, 93, 105–115.

- Kinchin, I.M.; Möllits, A.; Reiska, P. Uncovering types of knowledge in concept maps. Educ. Sci. 2019, 9, 131.

- McClure, J.R.; Sonak, B.; Suen, H.K. Concept map assessment of classroom learning: Reliability, validity, and logistical practicality. J. Res. Sci. Teach. 1999, 36, 475–492.

- Kleickmann, T.; Großschedl, J.; Harms, U.; Heinze, A.; Herzog, S.; Hohenstein, F.; Köller, O.; Kröger, J.; Lindmeier, A.; Loch, C. Professionswissen von Lehramtsstudierenden der mathematisch-naturwissenschaftlichen Fächer—Testentwicklung im Rahmen des Projekts KiL [Professional knowledge of student teachers of mathematical and scientific subjects—Test development within the KiL project]. Unterrichtswissenschaft 2014, 42, 280–288.

- Champagne Queloz, A.; Klymkowsky, M.W.; Stern, E.; Hafen, E.; Köhler, K. Diagnostic of students’ misconceptions using the Biological Concepts Instrument (BCI): A method for conducting an educational needs assessment. PLoS ONE 2017, 12, e0176906.

- Shi, J.; Wood, W.B.; Martin, J.M.; Guild, N.A.; Vicens, Q.; Knight, J.K. A diagnostic assessment for introductory molecular and cell biology. CBE Life Sci. Educ. 2010, 9, 453–461.

- Schneider, W.; Schlagmüller, M.; Ennemoser, M. Lesegeschwindigkeits- und Verständnistest für die Klassen 6–12 [Reading Speed and Comprehension Test for Grades 6–12]; Hogrefe: Göttingen, Germany, 2007.

- Wirtz, M.A.; Caspar, F. Beurteilerübereinstimmung und Beurteilerreliabilität [Interrater Agreement and Interrater Reliability]; Hogrefe: Göttingen, Germany, 2002.

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

- Karpicke, J.D.; Blunt, J.R. Retrieval practice produces more learning than elaborative studying with concept mapping. Science 2011, 331, 772–775.

- Blunt, J.R.; Karpicke, J.D. Learning with retrieval-based concept mapping. J. Educ. Psychol. 2014, 106, 849–858.

- Townsend, C.L.; Heit, E. Judgments of learning and improvement. Mem. Cogn. 2011, 39, 204–216.

- Anderson, J.R. Cognitive Psychology and Its Implications, 8th ed.; Worth: New York, NY, USA, 2015.

- Grotzer, T.A. Expanding our vision for educational technology: Procedural, conceptual, and structural knowledge. Educ. Technol. 2002, 42, 52–59.

- Kleickmann, T. Zusammenhänge Fachspezifischer Vorstellungen von Grundschullehrkräften zum Lehren und Lernen mit Fortschritten von Schülerinnen und Schülern im Konzeptuellen Naturwissenschaftlichen Verständnis [Correlations of Subject-Specific Ideas of Primary School Teachers on Teaching and Learning with Progress Made by Pupils in Conceptual Scientific Understanding]. Ph.D. Thesis, University of Münster, Münster, Germany, 2008.

- Watson, M.K.; Pelkey, J.; Noyes, C.R.; Rodgers, M.O. Assessing conceptual knowledge using three concept map scoring methods. J. Eng. Educ. 2016, 105, 118–146.

- Weißeno, G.; Detjen, J.; Juchler, I.; Massing, P.; Richter, D. Konzepte der Politik: Ein Kompetenzmodell [Political Concepts: A Competence Model]; Bundeszentrale für Politische Bildung: Bonn, Germany, 2010.

- Großschedl, J.; Harms, U. Assessing conceptual knowledge using similarity judgments. Stud. Educ. Eval. 2013, 39, 71–81.

- Nerdel, C. Grundlagen der Naturwissenschaftsdidaktik: Kompetenzorientiert und Aufgabenbasiert für Schule und Hochschule [Basics of Science Education: Competence-Oriented and Task-Based for Schools and Universities]; Springer: Berlin, Germany, 2017.

- Babb, A.P.P.; Takeuchi, M.A.; Alonso Yáñez, G.; Francis, K.; Gereluk, D.; Friesen, S. Pioneering STEM education for pre-service teachers. Int. J. Eng. Pedagog. 2016, 6, 4–11.

- Kultusministerkonferenz. Einheitliche Prüfungsanforderungen in der Abiturprüfung Biologie (Beschluss der Kultusministerkonferenz vom 01.12.1989 i.d.F. vom 05.02.2004) [Uniform Examination Requirements in the Biology Abitur Examination (Resolution of the Conference of Ministers of Education and Cultural Affairs of 01.12.1989 as Amended on 05.02.2004)]. Germany, 2004. Available online: https://www.kmk.org/fileadmin/veroeffentlichungen_beschluesse/1989/1989_12_01-EPA-Biologie.pdf (accessed on 10 May 2021).

- McDonald, C.V. STEM education: A review of the contribution of the disciplines of science, technology, engineering and mathematics. Sci. Educ. Int. 2016, 27, 530–569.

- Miller, S.P.; Hudson, P.J. Using evidence-based practices to build mathematics competence related to conceptual, procedural, and declarative knowledge. Learn. Disabil. Res. Pract. 2007, 22, 47–57.

- Turns, S.R.; van Meter, P.N. Applying knowledge from educational psychology and cognitive science to a first course in thermodynamics. In Proceedings of the 2012 ASEE Annual Conference & Exposition, San Antonio, TX, USA, 10–13 June 2012.

- U.S. Department of Education. Transforming American Education: Learning Powered by Technology; U.S. Department of Education: Washington, DC, USA, 2010.

- Kinchin, I.M. Visualising knowledge structures in biology: Discipline, curriculum and student understanding. J. Biol. Educ. 2011, 45, 183–189.

- Salmon, D.; Kelly, M. Using Concept Mapping to Foster Adaptive Expertise: Enhancing Teacher Metacognitive Learning to Improve Student Academic Performance; Peter Lang: New York, NY, USA, 2014.

- Kinchin, I.M. Using concept maps to reveal understanding: A two-tier analysis. Sch. Sci. Rev. 2000, 81, 41–46.

- Bitchener, J. Evidence in support of written corrective feedback. J. Second Lang. Writ. 2008, 17, 102–118.

- Lyster, R.; Saito, K.; Sato, M. Oral corrective feedback in second language classrooms. Lang. Teach. 2013, 46, 1–40.

- Deci, E.L.; Ryan, R.M. Intrinsic Motivation and Self-Determination in Human Behavior; Springer US: Boston, MA, USA, 1985.

- Ryan, R.M.; Deci, E.L. Intrinsic and extrinsic motivation from a self-determination theory perspective: Definitions, theory, practices, and future directions. Contemp. Educ. Psychol. 2020, 61, 101860.

- Karpicke, J.D. Retrieval-Based Learning. Curr. Dir. Psychol. Sci. 2012, 21, 157–163.

- Karpicke, J.D. Concept Mapping. In The SAGE Encyclopedia of Educational Research, Measurement, and Evaluation; Frey, B.B., Ed.; Sage: Thousand Oaks, CA, USA, 2018; pp. 498–501.

- Deutsche Gesellschaft für Psychologie [German Psychological Society]. Berufsethische Richtlinien des Berufsverbandes Deutscher Psychologinnen und Psychologen e. V. und der Deutschen Gesellschaft für Psychologie [Professional Ethical Guidelines of the Professional Association of German Psychologists e. V. and the German Psychological Society]. Available online: https://www.bdp-verband.de/binaries/content/assets/beruf/ber-foederation-2016.pdf (accessed on 27 May 2021).

- World Medical Association. WMA’s Declaration of Helsinki Serves as Guide to Physicians. J. Am. Med. Assoc. 1964, 189, 33–34.

- World Medical Association. Declaration of Helsinki. Ethical Principles for Medical Research Involving Human Subjects. J. Am. Med. Assoc. 2013, 310, 2191–2194.

- General Data Protection Regulation. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation). Off. J. Eur. Union 2016, 59, 294.

| Variable | Level | T++ (observed n) | T+ (observed n) | T− (observed n) | Comparison (Groups) |
|---|---|---|---|---|---|
| Gender | female | 18 | 18 | 21 | χ²(2) = 3.28, p = 0.19 |
| | male | 9 | 3 | 4 | |
| Educational level in biology | essential | 5 | 5 | 7 | χ²(4) = 11.96, p < 0.05 |
| | basic | 11 | 7 | 17 | |
| | advanced | 11 | 9 | 1 | |
| University study program | B.A. | 10 | 9 | 13 | χ²(2) = 1.19, p = 0.55 |
| | B.Sc. | 17 | 12 | 12 | |
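
The χ²(df) comparisons for the categorical sample characteristics in the table above read as Pearson χ² tests of independence on the observed counts; the test is not named here, so that is an assumption. The minimal Python sketch below recomputes the statistics from the printed counts only. The helper `chi_square_independence` and the variable names are hypothetical, and its closed-form p-value is valid only for even degrees of freedom, which covers the 2 × 3 and 3 × 3 tables here.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square test of independence for an r x c table of counts.

    Returns (chi2, df, p). The p-value uses the closed-form chi-square
    survival function, which exists only for even degrees of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total * column total / N
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    if df % 2:
        raise ValueError("closed-form p-value implemented only for even df")
    # P(X > x) = exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)**k / k!
    half = chi2 / 2
    p = math.exp(-half) * sum(half ** k / math.factorial(k) for k in range(df // 2))
    return chi2, df, p


# Observed counts from the sample table; columns are T++, T+, T- (hypothetical names)
gender = [[18, 18, 21], [9, 3, 4]]                # female, male
education = [[5, 5, 7], [11, 7, 17], [11, 9, 1]]  # essential, basic, advanced
program = [[10, 9, 13], [17, 12, 12]]             # B.A., B.Sc.

for name, table in [("Gender", gender), ("Educational level", education), ("Study program", program)]:
    chi2, df, p = chi_square_independence(table)
    print(f"{name}: chi2({df}) = {chi2:.2f}, p = {p:.3f}")
```

On these counts the sketch agrees with the reported values to within rounding; for educational level it yields about 11.95 against the printed 11.96, which leaves the p < 0.05 conclusion unchanged.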

| Variable | T++ M | T++ SD | T+ M | T+ SD | T− M | T− SD | Comparison (Groups) |
|---|---|---|---|---|---|---|---|
| Age | 22.93 | 5.94 | 22.05 | 3.37 | 22.64 | 5.92 | F(2, 70) = 0.16, p = 0.85 |
| Final school exam grade | 2.02 | 0.63 | 2.07 | 0.69 | 1.81 | 0.55 | F(2, 70) = 1.10, p = 0.34 |
| Prior knowledge in cell biology | 7.74 | 3.86 | 7.52 | 3.22 | 6.64 | 2.93 | F(2, 70) = 0.75, p = 0.48 |
| Familiarity with concept maps | 1.55 | 0.66 | 1.73 | 0.75 | 1.33 | 0.40 | F(2, 70) = 1.64, p = 0.20 |
| Reading speed | 842.33 | 247.32 | 845.14 | 271.97 | 835.52 | 225.37 | F(2, 70) = 0.01, p = 0.99 |
| Reading comprehension | 16.67 | 6.72 | 18.33 | 5.84 | 16.68 | 6.52 | F(2, 70) = 0.50, p = 0.61 |
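
The F(2, 70) values for the continuous baseline variables are consistent with a one-way between-subjects ANOVA over the three groups (n = 27, 21, and 25, giving 70 error degrees of freedom). As a sketch under that assumption, the hypothetical helper `anova_from_summary` below recomputes F and p from the reported means and standard deviations alone. It uses the exact tail probability of the F distribution with 2 numerator degrees of freedom, so it only handles three-group designs.

```python
def anova_from_summary(groups):
    """One-way between-subjects ANOVA from (n, mean, sd) per group.

    Returns (F, df_between, df_within, p). The p-value uses the exact tail
    P(F > f) = (1 + 2 * f / df_within) ** (-df_within / 2), valid only when
    df_between == 2, i.e. exactly three groups.
    """
    k = len(groups)
    if k != 3:
        raise ValueError("closed-form p-value implemented only for three groups")
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Between-groups sum of squares from the group means
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-groups sum of squares recovered from the sample SDs
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    p = (1 + 2 * f / df_within) ** (-df_within / 2)
    return f, df_between, df_within, p


SIZES = (27, 21, 25)  # T++, T+, T- group sizes from the sample table
rows = {
    "Age": [(22.93, 5.94), (22.05, 3.37), (22.64, 5.92)],
    "Prior knowledge in cell biology": [(7.74, 3.86), (7.52, 3.22), (6.64, 2.93)],
    "Reading comprehension": [(16.67, 6.72), (18.33, 5.84), (16.68, 6.52)],
}
for name, stats in rows.items():
    groups = [(n, m, sd) for n, (m, sd) in zip(SIZES, stats)]
    f, df1, df2, p = anova_from_summary(groups)
    print(f"{name}: F({df1}, {df2}) = {f:.2f}, p = {p:.2f}")
```

Because the inputs are rounded to two decimals, recomputed F values can drift slightly from published ones; for the three rows shown they agree to two decimals.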

| Variable | T++ M | T++ SD | T+ M | T+ SD | T− M | T− SD | Comparison (Groups) |
|---|---|---|---|---|---|---|---|
| Error detection | 7.74 | 1.66 | 7.05 | 1.53 | 7.12 | 1.39 | F(2, 70) = 1.56, p = 0.22 |
| Proper error correction | 7.67 | 1.75 | 7.10 | 1.70 | 6.80 | 1.56 | χ²(2) = 6.10, p < 0.05 |
| Improper error correction | 0.48 | 0.64 | 0.86 | 0.96 | 1.08 | 1.00 | χ²(2) = 5.41, p = 0.08 |
| CM-related self-efficacy | 5.11 | 1.03 | 5.38 | 1.03 | 5.47 | 0.98 | χ²(2) = 2.75, p = 0.25 |

| Variable | T++ M | T++ SD | T+ M | T+ SD | T− M | T− SD | Comparison (Groups) |
|---|---|---|---|---|---|---|---|
| R-propositions | 36.74 | 14.77 | 46.95 | 17.43 | 38.08 | 14.36 | F(2, 70) = 2.92, p = 0.07 |
| O-propositions | 0.41 | 0.89 | 0.81 | 1.63 | 0.44 | 0.65 | χ²(2) = 0.48, p = 0.79 |
| E-propositions | 0.41 | 0.97 | 0.24 | 1.09 | 0.12 | 0.44 | χ²(2) = 2.50, p = 0.29 |

| Variable | T++ M | T++ SD | T+ M | T+ SD | T− M | T− SD | Comparison (Groups) |
|---|---|---|---|---|---|---|---|
| Extraneous cognitive load | 4.10 | 1.36 | 3.62 | 1.14 | 4.49 | 1.25 | χ²(2) = 6.51, p < 0.05 |
| Intrinsic cognitive load | 5.87 | 0.88 | 5.41 | 1.00 | 5.86 | 1.22 | χ²(2) = 3.48, p = 0.18 |
| Germane cognitive load | 5.67 | 1.16 | 5.43 | 1.09 | 5.60 | 1.42 | χ²(2) = 0.91, p = 0.64 |
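
Several group comparisons in the tables above are reported as χ²(2) rather than F. That pattern is typical of a rank-based Kruskal–Wallis test across three groups, but the test is not named here, so treat it as an assumption. With 2 degrees of freedom the χ² upper-tail probability is exactly exp(−χ²/2), which the hypothetical helper `chi2_df2_p` below uses to turn a printed statistic into its p-value.

```python
import math


def chi2_df2_p(statistic):
    """Upper-tail p-value of a chi-square statistic with 2 degrees of freedom.

    For df = 2 the chi-square survival function is exactly exp(-x / 2),
    so no special-function library is needed.
    """
    if statistic < 0:
        raise ValueError("chi-square statistics are non-negative")
    return math.exp(-statistic / 2)


# chi2(2) values as printed in the tables (assumed to be Kruskal-Wallis H statistics)
reported = {
    "Proper error correction": 6.10,
    "Extraneous cognitive load": 6.51,
    "Intrinsic cognitive load": 3.48,
}
for name, stat in reported.items():
    print(f"{name}: chi2(2) = {stat:.2f}, p = {chi2_df2_p(stat):.3f}")
```

The resulting p-values (about 0.047, 0.039, and 0.176) are consistent with the p < 0.05, p < 0.05, and p = 0.18 printed for these rows.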

| Variable | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1 Metacognitive prediction | - | | | | |
| 2 aQCM Index | 0.56 *** | - | | | |
| 3 Structural knowledge | 0.62 *** | 0.66 *** | - | | |
| 4 Declarative knowledge | 0.71 *** | 0.61 *** | 0.65 *** | - | |
| 5 Conceptual knowledge | −0.01 | 0.27 * | −0.08 | 0.15 | - |
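
The stars in the correlation matrix mark significance levels. As a rough check, the sketch below approximates the two-sided p-value of a coefficient with the Fisher z-transform, z = atanh(r)·√(n − 3). Two assumptions are labelled in the code: the sample size (taken as the full sample, n = 73, which the table does not state) and the conventional thresholds * p < 0.05, ** p < 0.01, *** p < 0.001. The exact test of a correlation uses the t distribution with n − 2 degrees of freedom; the normal approximation is used here only because Python's standard library has no t-distribution CDF.

```python
import math
from statistics import NormalDist


def correlation_p_fisher(r, n):
    """Approximate two-sided p-value for H0: rho = 0 via the Fisher z-transform.

    z = atanh(r) * sqrt(n - 3) is approximately standard normal under H0.
    This is a large-sample approximation, not the exact t-test on r.
    """
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def stars(p):
    """Assumed conventional markers: * p < .05, ** p < .01, *** p < .001."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


N_ASSUMED = 73  # assumption: correlations computed on the full sample
pairs = [
    ("Metacognitive prediction x aQCM Index", 0.56),
    ("aQCM Index x Conceptual knowledge", 0.27),
    ("Declarative x Conceptual knowledge", 0.15),
]
for label, r in pairs:
    p = correlation_p_fisher(r, N_ASSUMED)
    print(f"{label}: r = {r:.2f}{stars(p)}, p = {p:.4f}")
```

Under these assumptions the approximation reproduces the table's markers for these coefficients (***, *, and none).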

| Variable | F-Test | p | η² | Group | N | M | SD | Post hoc comparison | Post hoc p |
|---|---|---|---|---|---|---|---|---|---|
| aQCM Index | F(2, 70) = 5.67 | <0.01 | 0.14 | T++ | 27 | 61.52 | 18.82 | T+ | 1.00 |
| | | | | | | | | T− | 0.01 |
| | | | | T+ | 21 | 57.19 | 19.31 | T++ | 1.00 |
| | | | | | | | | T− | 0.08 |
| | | | | T− | 25 | 45.56 | 14.09 | T++ | 0.01 |
| | | | | | | | | T+ | 0.08 |
| Structural knowledge | F(2, 70) = 4.38 | <0.05 | 0.11 | T++ | 27 | 0.46 | 0.17 | T+ | 1.00 |
| | | | | | | | | T− | 0.03 |
| | | | | T+ | 21 | 0.45 | 0.18 | T++ | 1.00 |
| | | | | | | | | T− | 0.06 |
| | | | | T− | 25 | 0.33 | 0.15 | T++ | 0.03 |
| | | | | | | | | T+ | 0.06 |
| Declarative knowledge | F(2, 70) = 2.52 | 0.09 | 0.07 | T++ | 27 | 15.19 | 6.12 | T+ | 0.56 |
| | | | | | | | | T− | 0.98 |
| | | | | T+ | 21 | 17.14 | 4.23 | T++ | 0.56 |
| | | | | | | | | T− | 0.09 |
| | | | | T− | 25 | 13.80 | 4.32 | T++ | 0.98 |
| | | | | | | | | T+ | 0.09 |
| Conceptual knowledge | F(2, 70) = 5.67 | <0.01 | 0.14 | T++ | 27 | 12.96 | 5.79 | T+ | 1.00 |
| | | | | | | | | T− | 0.01 |
| | | | | T+ | 21 | 12.62 | 4.97 | T++ | 1.00 |
| | | | | | | | | T− | 0.02 |
| | | | | T− | 25 | 8.80 | 3.39 | T++ | 0.01 |
| | | | | | | | | T+ | 0.02 |
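
Assuming the η² column reports (partial) eta squared for each univariate F-test, it can be recovered from the F statistic and its degrees of freedom alone. Since F = (SS_between/df_between)/(SS_within/df_within), the ratio SS_between/SS_within equals F·df_between/df_within, which gives η² = F·df_between/(F·df_between + df_within). The hypothetical helper below applies this identity to the four univariate tests.

```python
def eta_squared_from_f(f, df_between, df_within):
    """Recover eta squared = SS_between / (SS_between + SS_within) from an F-test.

    Uses SS_between / SS_within = F * df_between / df_within, hence
    eta^2 = F * df_between / (F * df_between + df_within).
    """
    numerator = f * df_between
    return numerator / (numerator + df_within)


# Univariate F(2, 70) statistics reported for the three training groups
tests = [
    ("aQCM Index", 5.67),
    ("Structural knowledge", 4.38),
    ("Declarative knowledge", 2.52),
    ("Conceptual knowledge", 5.67),
]
for name, f in tests:
    print(f"{name}: eta^2 = {eta_squared_from_f(f, 2, 70):.2f}")
```

With df = (2, 70) this gives 0.14, 0.11, 0.07, and 0.14, matching the η² column.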

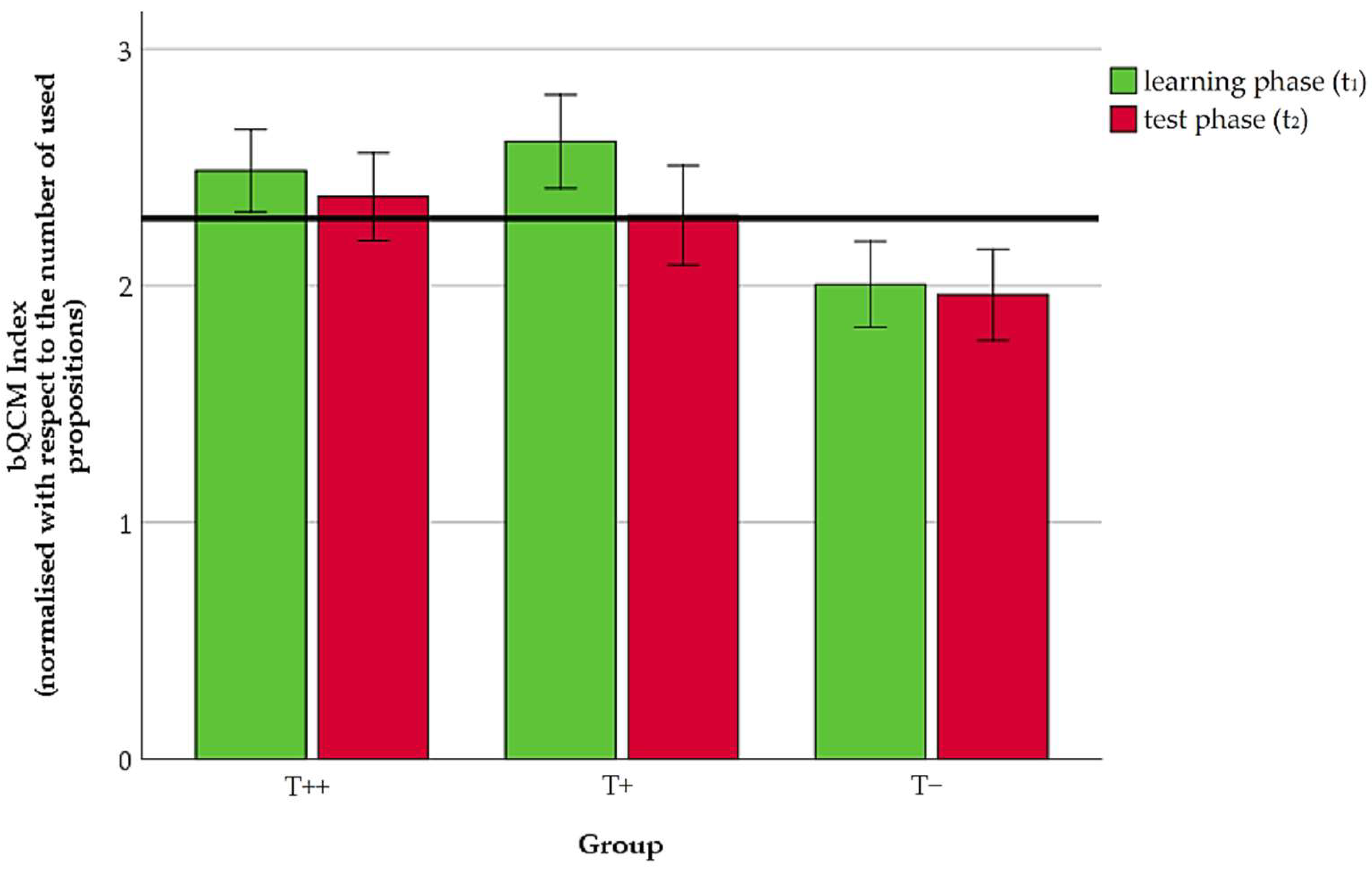

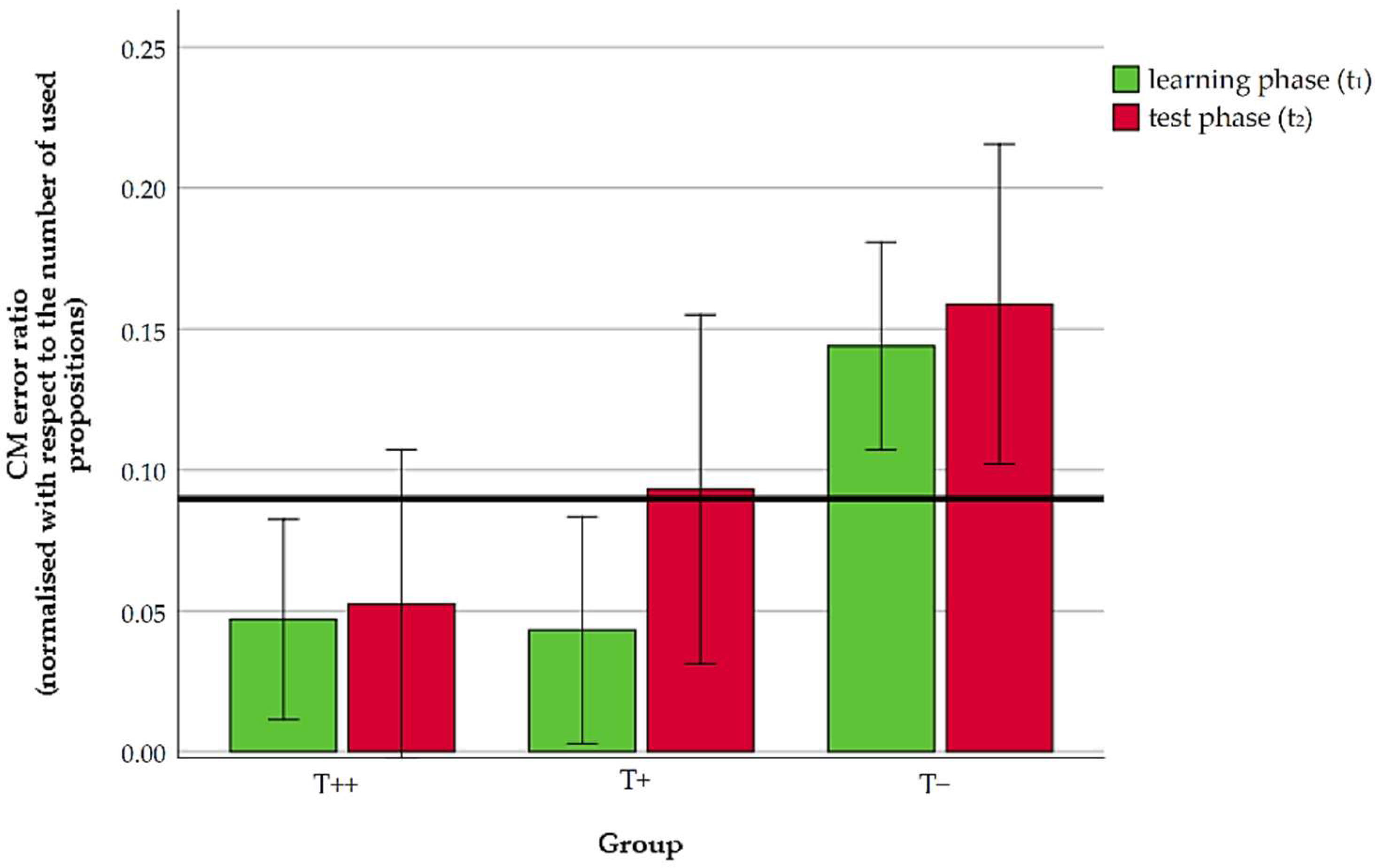

| Variable | Group | N | POM | M | SD | F-Test (Group × Time) | p |
|---|---|---|---|---|---|---|---|
| bQCM Index | T++ | 27 | t1 | 2.49 | 0.47 | F(2, 70) = 2.27 | 0.11 |
| | | | t2 | 2.38 | 0.43 | | |
| | T+ | 21 | t1 | 2.61 | 0.45 | | |
| | | | t2 | 2.30 | 0.49 | | |
| | T− | 25 | t1 | 2.01 | 0.47 | | |
| | | | t2 | 1.96 | 0.49 | | |
| Concept map error ratio | T++ | 27 | t1 | 0.05 | 0.09 | F(2, 70) = 0.56 | 0.57 |
| | | | t2 | 0.05 | 0.08 | | |
| | T+ | 21 | t1 | 0.04 | 0.06 | | |
| | | | t2 | 0.09 | 0.15 | | |
| | T− | 25 | t1 | 0.14 | 0.12 | | |
| | | | t2 | 0.16 | 0.15 | | |

| Variable | Group | Comparison | p |
|---|---|---|---|
| bQCM Index | T++ | T+ | 1.00 |
| | | T− | <0.01 |
| | T+ | T++ | 1.00 |
| | | T− | <0.01 |
| | T− | T++ | <0.01 |
| | | T+ | <0.01 |
| Concept map error ratio | T++ | T+ | 1.00 |
| | | T− | <0.01 |
| | T+ | T++ | 1.00 |
| | | T− | <0.05 |
| | T− | T++ | <0.01 |
| | | T+ | <0.05 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Becker, L.B.; Welter, V.D.E.; Aschermann, E.; Großschedl, J. Comprehension-Oriented Learning of Cell Biology: Do Different Training Conditions Affect Students’ Learning Success Differentially? Educ. Sci. 2021, 11, 438. https://doi.org/10.3390/educsci11080438
