Abstract

Testing as an assessment technique has been widely used at all levels of education, from primary school to higher education. The main purpose of the paper is to evaluate the effect of context-based testing on the teaching and learning of analytical chemistry at a Russian university. The paper formulates the objectives of context-based testing, discusses its features and compares it with conventional testing; proposes a model for constructing and administering context-based tests; and provides examples of context-based tests. The experiment was conducted at the Ural State University of Economics (Russia) with bachelor’s degree students in nonscience majors. Students were assigned to one of two experimental conditions: in the control group, traditional tests were carried out, while the experimental group students experienced context-based testing. The students’ test scores obtained at all stages of the experiment were analyzed using statistical tests (Shapiro–Wilk, Student’s t, Fisher). The findings of our experiment enable us to answer the guiding research questions. Context-based testing may be considered an essential component of context-based teaching and learning. In comparison with conventional testing, context-based testing could support the development of knowledge of fundamental analytical chemistry concepts and contribute to more solid knowledge.

1. Introduction

There are some key issues with how chemistry has traditionally been taught: (1) course content is overloaded due to the rapidly growing body of scientific knowledge; (2) the purpose of learning science is unclear, as curricula are taught as an aggregation of isolated facts that does not facilitate the formation of meaningful connections between them; (3) lack of transfer of problem-solving skills; (4) lack of relevance to students’ lives; and (5) inadequate emphasis on the skills necessary to advance in further studies of chemistry [1]. The idea of setting chemistry within particular contexts and structuring courses as modules to enhance student engagement and learning developed in the 1980s and has since become increasingly popular.

Context-based chemistry “provide[s] meaning to the learning of chemistry; [students] should experience their learning as relevant to some aspect of their lives and be able to construct coherent ‘mental maps’ of the subject” [1] (p. 960). The key theoretical ideas underpinning context-based learning are constructivism, situated learning and activity theory [1]. Context-based learning aims to help students gain a better understanding of the world of science, appreciate the importance of chemistry in the modern world, and see how it relates to daily life events. The effect of implementing the context-based approach in the learning–teaching process has been extensively researched. These studies address the organization of practice-oriented learning activities and the content of context-based learning [2,3]; its epistemological aspects [4,5]; students’ and teachers’ attitudes to context-based learning and its impact on students’ motivation [2,6,7,8]; and, in general, the effectiveness of the context-based approach in comparison with traditional instruction in science teaching [9,10]. They also indicate a positive effect of context-based learning on students’ long-term memory [11]. Examples of fully implemented context-based chemistry teaching are the Salters Chemistry courses in the UK [12]; the ChemCom and Chemistry in Context courses in the US [3,13]; an Israeli industrial-based chemistry curriculum [14]; Chemie im Kontext in Germany [5,15]; Chemistry in Practice in the Netherlands [16]; the Visualizing the Chemistry of Climate Change (VC3) initiative in some North American institutions [17]; and the thermochemistry and thermodynamics courses in Turkish high schools [18]. The context-based approach in teaching and learning can be implemented via the REACT strategy, which uses five essential forms of learning: relating, experiencing, applying, cooperating and transferring [11,19,20,21].

In a special issue on context-based learning published in the International Journal of Science Education in 2018, Sevian et al. [22] refer to numerous works that have been devoted to the impact of context-based learning on science education worldwide. They highlighted the focus of further research on the larger and micro-contexts, the latter implying the classroom and beyond and examining “what happens in classroom, what conditions in real classroom make learning advantageous, who benefits and in what ways” [22] (p. 4). We, however, believe that there is another challenge of learning in context that is worth addressing: assessment and, more specifically, testing. Context-based testing can be seen as a tool that teachers can use to improve students’ achievement of learning outcomes in chemistry classrooms.

The success of context-based teaching and learning is heavily dependent on the quality of the curriculum materials and their implementation in the classroom. The construction of teaching materials, including assessment tools as a fundamental component of context-based teaching and learning, is essential. Though there are examples of well-established assessments such as those produced by the American Chemical Society Examinations Institute, they are practically unknown to Russian chemistry teachers. Holme et al. [23] believe that chemistry is a particularly good topic for improved assessment because it is (a) a component of the curriculum in a large number of science-based majors and (b) a field with a strong mix of both qualitative and quantitative concepts [1] (p. 962).

Testing as an assessment technique has been widely used at all levels of education, from primary school to higher education. A new round of evaluation method development came with information technology. Computerized tests have become a common assessment technique in tertiary education all over the world and are employed in the teaching and learning of a variety of subjects. The adoption of the competence approach as an imperative of the educational process in many countries, including Russia, has had a significant impact on the objectives of testing, test administration and result processing. Learners’ achievements are measured as a set of skills that allow the learners to perform meaningful actions and develop professional rather than mere scientific knowledge. However, as traditional tests largely focus on algorithmic and factual recall dominated by Low Order Cognitive Skills (LOCS), they fail to provide information about the learners’ decision making, problem solving and critical system thinking, which are dominated by High Order Cognitive Skills (HOCS). The latter can be attained by integrating HOCS-fostering teaching and assessment strategies, e.g., context-based open-ended tasks of different levels of complexity [24].

It is especially necessary to dwell on teaching science to university students who do not major in sciences. In Russia, the transition to a shorter four-year bachelor’s degree period of study (compared to the former five-year one-level ‘specialism’ model) led to a radical curriculum reform resulting in a significant reduction in the number (and in many cases a complete exclusion) of fundamental science disciplines. Accordingly, in the course of constant modifications in state educational standards based on the learning outcomes approach, science subject knowledge, understanding and skills are less and less frequently mentioned in the benchmark statements for bachelor’s degrees in nonscience majors. Without discussing the reasons for and possible unfortunate consequences of a lower level of fundamental education in Russia, which used to be highly valued all over the world as a distinctive feature of the higher school, it is worth highlighting that there is a call for bridging the gap between the intensive theoretical knowledge of science courses and their practical application.

The way to resolve this problem is to utilize the context (practice)-based approach to designing science curricula, including the appropriate assessment techniques. Context-based learning has been adopted by many countries, including Russia. The theoretical and empirical background for context-based learning [25] correlates with modern trends in knowledge economy development and promotes creating needs for innovation and creativity [26,27]. However, as has rightly been reported by Mahaffy et al. [17], the existing literature has focused mostly on implementing context-based learning at the secondary school level, while research examining specific assessment tools, namely context-based testing at the post-secondary level, is still limited [28,29,30]. The experience in employing context-based learning in teaching chemistry at the university level [31,32,33] allows the authors to suggest that the design and content of context-based tests differ from those of conventional tests used to assess students’ achievement in the science course. It seems that such tools should be an integral part of context-based learning, which could significantly enhance the effectiveness of contextual learning with regard to both practical and fundamental aspects, and, as a result, encourage the development of students’ ‘scientific’ competence.

Thus, the purpose of the study is to investigate the effect of context-based testing as a component of context-based instruction on university students’ learning outcomes in the course of analytical chemistry.

2. Materials and Methods

2.1. The Concept of Context-Based Testing in Sciences. Development of Instruments for Context-Based Testing

While traditional standardized tests typically address traditional course content and skills [23] and often emphasize procedural skills (i.e., the ability to carry out a sequence of actions to solve a problem and the reproduction of facts and definitions), in the context-based environment students are assigned learning tasks that resemble characteristic activities in an authentic scientific practice. When carrying out these tasks, the learner becomes acquainted with the artefacts belonging to that authentic scientific practice [34] (p. 1111). Furthermore, as understood by the authors of this paper, ‘context-based testing’ in sciences aims to measure the level of knowledge and understanding of the subject matter and the ability to apply this knowledge in the professional environment, i.e., to measure content knowledge and HOCS (problem solving, ability to frame a scientific question, ability to transfer knowledge to novel situations, etc.). This procedure involves the use of context-based tests linked to the scientific discipline.

Context-based testing as a component of context-based learning can reinforce students’ knowledge and understanding of fundamental concepts and topics; develop students’ awareness of the relevance of the topics; integrate theory with application; and develop creative abilities for innovative potential embodied in professional activities.

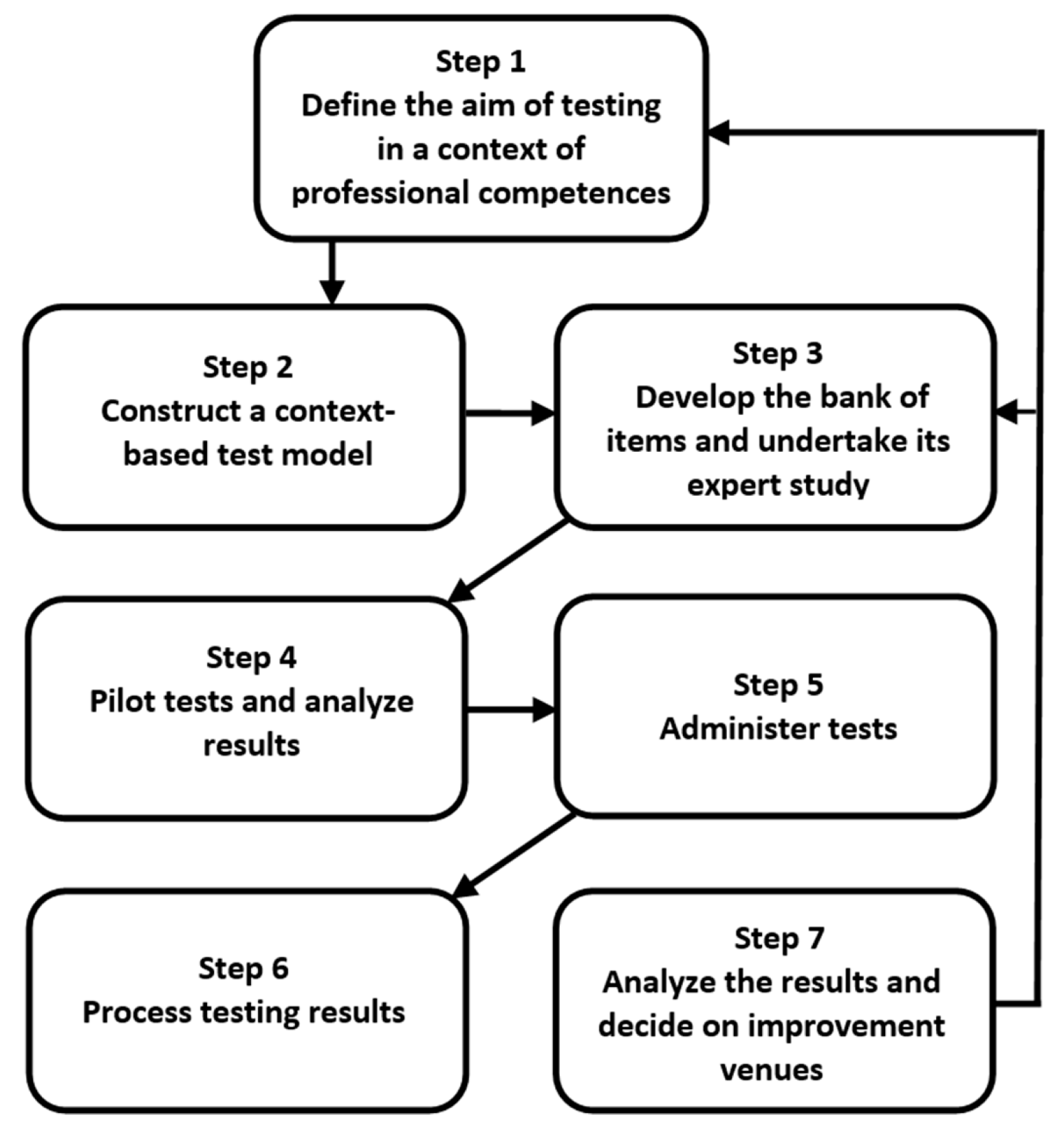

The process of constructing and administering context-based tests should adhere to such principles as integrating fundamental and applied aspects of tested issues, highlighting the role of scientific knowledge in solving real-world problems and urgent professional tasks, the modular structure of tests, and flexibility and variability based on various nonlinear connections between modules. The authors have developed a strategy that determines the path for constructing and administering context-based tests (Figure 1). It involves a number of successive steps and a feedback mechanism.

Figure 1. The process for constructing and administering context-based tests.

At the initial stage, the first and the most important step for testers is to define the goals and objectives of testing in a science discipline, focusing on targeted professional skills (competence) being developed in a particular module of a study. Once the aim of testing is described, the second step is to construct a test model. In a relevant module, testers choose the topic/topics and identify context-based areas that need to be tested. At this stage the choice of the content complexity is essential because it will affect the students’ responses to the tasks and eventually the outcomes of the context-based learning [35,36]. At the third stage, testers’ task is to design and sequence test items for each selected module. There is a variety of them, but, as the testing experience shows, testers might tend to overuse questions addressing a choice of patterns of behaviour in a given situation. This type of question is more relevant for professional tests, while context-based tests aim to measure students’ ability to integrate theory with application. Context-based tests should link knowledge of fundamental concepts with real life tasks.

The fourth phase is pilot testing and monitoring. This helps testers obtain valid information regarding the usefulness, readability and accessibility of the content. At this stage, by analysing the results of the pilot study, testers can take the necessary steps to modify the quality as well as the context of the content. This will allow them to cater to the requirements of the users (students) and improve the content according to their needs. Once the aforementioned stages are completed, testers have the content tested by the users or the target audience (Steps 5 and 6). This gives testers more credible and trustworthy feedback, which further allows them to make accurate changes (Step 7) in order to improve the testing procedure (modifying item parameters, test models, the bank of items) and teaching and learning in general.

The proposed strategy guides the construction of context-based tests in analytical chemistry and the pilot testing during laboratory sessions. The content of context-based tests is aligned with the learning outcomes of the bachelor’s degree programs in Biotechnology; Commodities Management and Expertise; Food Processing Technology and Public Catering offered in the Ural State University of Economics (USUE) (Ekaterinburg, Russia).

In accordance with the purpose of the study and taking into consideration the proposed concept of context-based testing, the following leading questions have guided our analysis:

(a) Do tests with context-based tasks contribute to a higher degree of knowledge acquisition at the end of the instructional unit compared to more traditional testing approaches?

(b) Does context-based testing contribute to a more solid knowledge acquisition, i.e., to a higher level of retained knowledge compared to more traditional testing approaches?

2.2. Research Participants

The study was conducted with second-year students (aged 18–19 years) attending bachelor’s degree programs with majors in Food Processing Technology and Public Catering at the USUE. The sample consisted of 50 students. Within an experimental research design [37], participants were randomly assigned to one of the two experimental conditions using the ciox.ru online tool. The experimental and control groups were equal in number, with 25 students each. In the control group, conventional tests were carried out, while the experimental group students experienced context-based testing. The language of instruction was Russian.
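The random allocation described above can be illustrated with a minimal Python sketch. It is not the algorithm of the online tool used in the study, which is not described here; the function name, the student codes and the seed are hypothetical and serve only to show one common way of splitting 50 participants into two equal groups.

```python
import random


def assign_groups(participants, seed=None):
    """Shuffle participants and split them into two equal halves.

    Illustrative only: the study used an online tool whose procedure
    is not documented here.
    """
    rng = random.Random(seed)      # a seeded generator makes the split reproducible
    shuffled = list(participants)  # copy, so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical de-identified student codes S01..S50
students = [f"S{i:02d}" for i in range(1, 51)]
experimental, control = assign_groups(students, seed=2024)
print(len(experimental), len(control))
```

Fixing the seed is a design choice that lets independent staff reproduce and audit the allocation later.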

2.3. Ethical Aspects of Research

The following ethical procedures were implemented in the researchers’ process of data collection.

The experiment was performed following the rules of the Declaration of Helsinki of 1975 and in compliance with the formal requirements in the journal’s ‘Research and Publication Ethics’. Because Russia does not have professional organizations that guide ethical standards in higher education, USUE has developed its own ethical protocols. The experiment design (including group allocation) was approved by the Academic Board of the Institute of Trade, Food Processing Technologies and Service. Informed consent was acquired for any experimentation with human subjects. The Academic Board also approved the findings for analysis and inclusion in a published study. The research was shared with participants in each group in experimental studies.

The interests of participants were given due consideration. All the students participating in the study were provided with enough information to be able to judge that the study is worthwhile and that they wish to support it. The participants were sufficiently informed about their potential involvement in the experiment—what they will be asked to do, when, and under what circumstances. The participants were also informed that they have a right to make a free choice over whether to contribute to a study or not, and that there will be no sanctions, consequences or differential treatment for nonparticipation. The students participating in the experiment were aware that they could decline or withdraw participation in the study at any point without prejudice.

All students volunteered to participate in the experiment and assessment processes. They were curious to monitor their progress and receive feedback on their achievements. Participants’ identities were protected by de-identification, which was carried out before data analysis so that participants could not be identified and their privacy and confidentiality were not compromised.

The double agency, in which the researcher also serves as the teacher of the participants, was minimized by involving independent staff who were in charge of assigning students to the control or experimental group and of coding students’ works at the focus phase.

2.4. Research Design

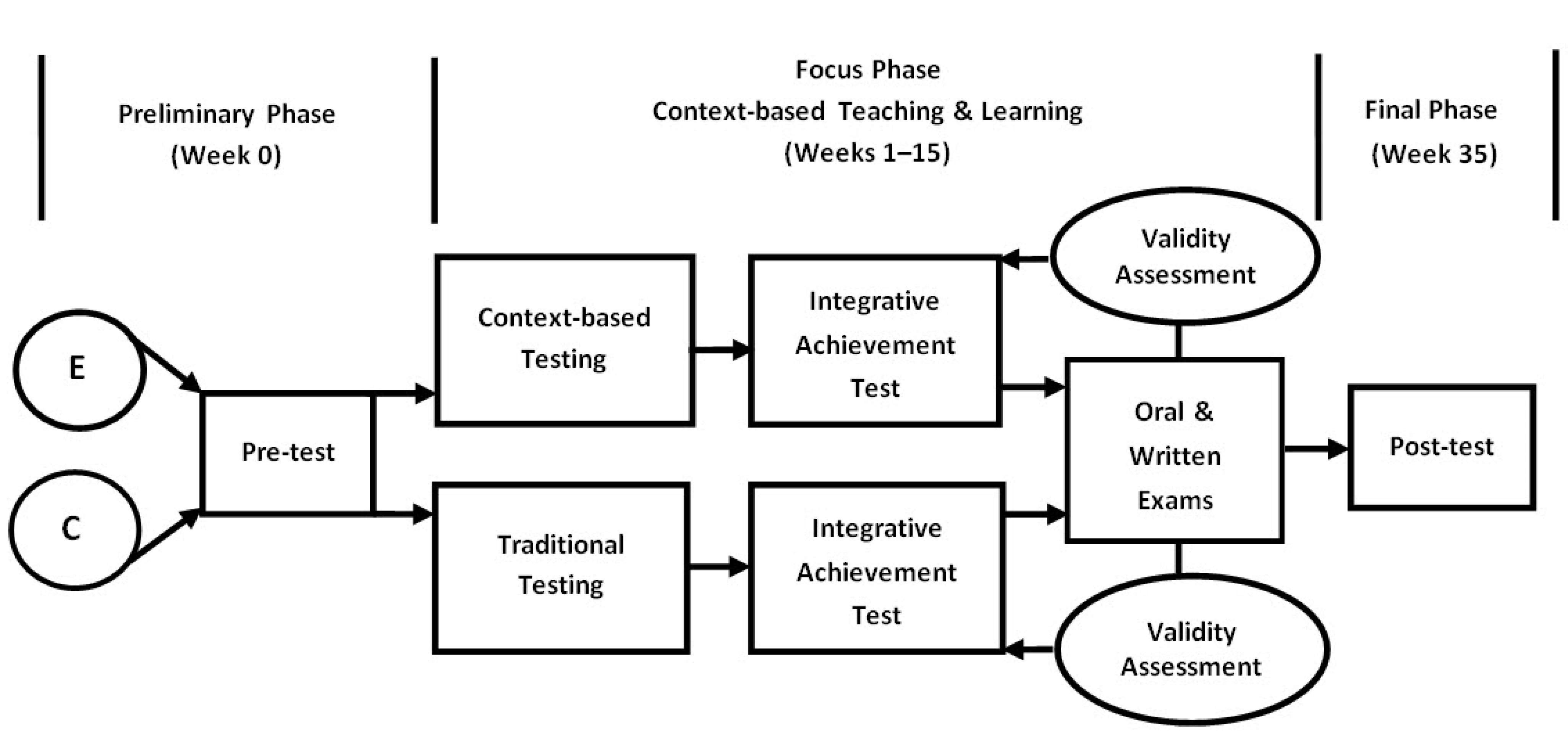

This study was conducted using the pre/post-test design involving a control group and an experimental group. The entire time span of the experiment was 35 weeks including a 15-week program followed by a delayed post-test after 20 weeks. The experiment was divided into three phases: preliminary, focus, and final. The duration of the Analytical Chemistry (context-based) course was one term (15 weeks). Test data were gathered at three points: start-of-course (or the preliminary phase), end-of-course (or the focus phase) (end of Week 15), and after-course (or the final phase) (end of Week 35). The time gap between the focus and final phases was 20 weeks. Figure 2 illustrates the timeline and sequence of the experiment.

Figure 2. The design of the experiment (E: experimental group, C: control group).

The Preliminary Phase. At the preliminary phase (the start-of-course point), students took a pre-test that aimed to measure possible differences in the prior knowledge of chemistry between control and experimental group students. The prior knowledge test was similar to the Unified State Examination (its results serve as admission requirements for university entrants) and contained 20 structured multiple-choice questions with different difficulty indexes, which affected the score of each answer. Three teachers independently assigned a difficulty index to each question. The obtained rankings were brought to team meetings and discussed until agreement was reached. To differentiate the level of difficulty, the number of logical steps, or algorithms, was considered [38]: if students needed to take one or two steps to complete the task, the question was scored one point (a low level of difficulty); if three algorithmic procedures were involved, the task was scored two points (a moderate level of difficulty); if more than three steps were required, the score was three points (the highest level of difficulty). Thus, the six most difficult questions were scored three points; the six least difficult questions had a one-point score; the remaining eight questions were scored two points. The total score that students could receive ranged from 0 to 40.
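The scoring rule above can be written out as a short Python sketch that also checks the stated maximum of 40 points. The function and variable names are hypothetical; only the step thresholds and the 6/8/6 distribution of questions come from the text.

```python
def points_for_steps(steps: int) -> int:
    """Map the number of logical steps in a task to its point value."""
    if steps < 1:
        raise ValueError("a task needs at least one logical step")
    if steps <= 2:
        return 1  # low level of difficulty
    if steps == 3:
        return 2  # moderate level of difficulty
    return 3      # more than three steps: highest level of difficulty


# Distribution of the 20 pre-test questions reported in the text:
# point value -> number of questions
question_counts = {3: 6, 2: 8, 1: 6}

max_score = sum(points * count for points, count in question_counts.items())
print(max_score)  # 6*3 + 8*2 + 6*1 = 40
```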

The Focus Phase. The focus phase was the richest and most intense in terms of types of evaluation methods and test administration. At this phase, students in both the control and experimental groups experienced the context-based teaching and learning process that is described in detail by Stozhko et al. [32,39] and Bortnik et al. [33]. The Analytical Chemistry course comprises three modules:

Module 1 Solutions, Dissociation, Hydrolysis (Weeks 1–5);

Module 2 Complex Compounds (Weeks 6–10);

Module 3 Redox Processes (Weeks 11–15).

Each module includes 5 lectures (10 contact hours) and 3 laboratory sessions (12 contact hours). Lectures were intended to provide fundamental and applied knowledge. Laboratory work was the key component of context-based learning. The context was set up with a virtual chemistry laboratory, which offered the opportunity to perform scientific experiments with online labs in pedagogically structured learning spaces [32,33,39]. The control and experimental group students carried out chemical experiments identical in terms of preparation and completion procedures. The examples of topics are given below:

Determination of the content of organic acids in beverages by acid-base titration.

Determination of antioxidants in juice, coffee, tea, and wine by the potentiometric method.

Determination of the total content of iron ions in waters and beverages by adsorption voltammetry.

Inversion-voltammetric determination of toxic metal ions (Cu, Pb, Cd, Zn) in food products.

Determination of tap water hardness by the complexometric method.

During this 15-week (one term) stage, three tests related to the three studied modules, (1) Solutions, Dissociation, Hydrolysis; (2) Complex Compounds; and (3) Redox Processes, were administered. Context-based testing was administered to the experimental group students, and traditional testing was administered to the control group students (Figure 2, Focus Phase). Each test included six tasks. The level of difficulty of each task was differentiated in the same way as at the preliminary phase. All students were tested in class: the control group was computer-tested, while the experimental group wrote answers to open-ended questions in the instructor’s presence. For both groups, students’ responses were assessed with the help of a key system developed by the testers, which excluded biased assumptions. Assessment also took into account the level of difficulty of the task.

A week prior to the examination, all students took an integrative achievement test (Figure 2, Focus Phase). The self-developed integrative achievement test in analytical chemistry included 20 questions related to specific contents already covered in all three modules. The maximum total score was 40. The control group students took an integrative achievement test that contained conventional test items. The experimental group was offered an integrative achievement test that was made up of context-based test items only.

Moreover, at the very end of the focus phase, all students had to take an end-of-term examination which was composed of two components: oral and written. An oral component aimed at testing students’ fundamental knowledge of chemistry and included traditional style knowledge questions that require simple recall information or a simple application of known theory or knowledge to familiar situations. The total number of points in the oral component was 20.

A written component was made up of context-based tasks demanding the application of known theory or knowledge to practical situations (context). The tasks had different levels of difficulty, which affected the score of each answer: 2 points were given to the least difficult task; one task was scored 3 points; three tasks were scored 4 points; and 6 and 7 points were given to the two most difficult tasks. Thus, the maximum total score for the written component was 30 points, and the overall maximum score for both oral and written components was 50 (20 + 30) points.

To ensure greater objectivity of the course exam, it was conducted in a large group, combining both control and experimental students. Then students’ written works were shuffled and letter-encoded by support staff. The algorithm of coding and decoding was unknown to the testers. After the teachers (‘experts’) rated the works, the support staff decoded them and transferred the experts’ grades to tables with student names. The oral component of the exam was carried out by another group of independent experts who were unaware of which group—control or experimental—a student belonged to.

The Final Phase. At the final phase, a delayed post-test was administered 20 weeks after the end of the Analytical Chemistry course (Week 35). The delayed post-test was meant to measure the effects of context-based testing on the achievement of learning outcomes by students in both groups. The delayed post-test included 20 questions, a combination of traditional and context-based items. The maximum total score was 40 points.

2.5. Tests

Assessment methods were designed in accordance with the adopted strategy (Figure 1). Table 1 presents extracts from the integrative achievement test tasks offered to control (traditional test) and experimental group students (context-based test). The tasks correspond to three modules of subject study (mentioned earlier): Solutions, Dissociation, and Hydrolysis; Complex Compounds; and Redox Processes. Conventional tests included multiple-choice questions, while context-based tasks were designed in such a way that students could use their scientific knowledge to find solutions to the presented problem.

Table 1. Sample items of traditional and context-based integrative achievement tests.

The design of integrative achievement tests was also based on contents covered in the three modules (such as the examples in Table 1). Table 2 illustrates a written component of the end-of-course exam (Figure 2, Focus Phase).

Table 2. A written exam test (maximum score = 30).

Because the language of all instructional materials students worked with was Russian, the extracts presented in Table 1 and the test in Table 2 have been translated into English by Irina Pervukhina, one of the authors of this paper. She is highly fluent in both Russian and English and has close knowledge of the research context and the specific study. The translation was checked by the other two authors by using back translation.

It is apparent from Table 1 and Table 2 that each contextual task of the tests and written exam includes a specific chemical content and a question based on this content. The context-based question focuses on the students’ relevant area of study and different fields of application (health, ecology, food production, etc.). In contrast, traditional questions are limited to the field of chemistry only. The context-based tasks are ‘open-ended’: each task poses a problem with a varying degree of complexity, and students should independently choose an algorithm for finding a solution by applying knowledge and understanding gained in the university curriculum.

Example 1 (Table 1) illustrates the Solutions, Dissociation, and Hydrolysis Module. The pH value is considered a measure of the acidity of an aqueous solution. The acidity of the reaction medium is of particular importance for biochemical processes taking place in the body and in food. For example, the pH value of milk determines the colloidal state of proteins and the stability of the polydisperse system of milk; the conditions for the development of useful and harmful microflora and its impact on fermentation and maturation; the state of equilibrium between ionic and colloidal calcium phosphate and the resulting thermal stability of protein substances; the activity of native and bacterial enzymes; and the rate of formation of typical taste and smell components of individual dairy products. To answer a traditional test question, students should be able to recall what acidity is and the pH values of various common acids, including lactic acid. Remembering and understanding belong to the lower-order cognitive skills (LOCS) in Bloom’s taxonomy [24]. In a context-based task, the emphasis is shifted to the biochemical process related to dairy food production. To be able to answer the question correctly (“the milk pH value lowers during storage due to the formation of lactic acid as a result of lactic acid fermentation”), students should demonstrate cognitive skills of higher levels, i.e., application and, to some extent, analysis and evaluation (higher-order cognitive skills, HOCS).

Example 2 (Table 1) illustrates the Complex Compounds Module. Complex compounds are widespread in both inanimate and living nature. They are used as catalysts and inhibitors of various processes in food products. In particular, iron-based compounds affect the processes occurring in red wine. These compounds cause reactions that noticeably degrade the quality of red wines, leading to the appearance of ferric tannate haze, turbidity, darkening of color and dark precipitate. The traditional test task focuses on the composition of the complex and the determination of the charges of the complexing agent and complex ion. In the context task, the focus is shifted to the process that takes place in the drink and the way to control this process.

Example 3 (Table 1) illustrates the Redox Processes Module. The traditional test task is to compare the reducing properties of various acids, while the context-based task is to compare the antioxidant activity of fruits and berries containing the same acids. This test question directly echoes Question 6 of the written exam (Table 2), where students are asked to compare the AOP of different spice extracts, analyze and synthesize, and design an optimal recipe for a sauce with the best AOP. When completing the task, students should take into account a number of factors: the effect of the protein medium (in this case, meat broth) on the AOP, the synergistic effect (strengthening of the AOP) when interacting with two components, and the antagonistic effect (weakening of the AOP) when interacting with the other three components. As a result, the chosen combination, namely [cardamom + nutmeg + black pepper + sweet pepper + oregano] with a total AOP of 78.356 mg/g, does not seem obvious at first glance (taking into account only the initial values of the AOP), because it includes two components with the lowest ‘own’ initial AOP values.

Question 7 of the written exam (Table 2) is ranked as the most difficult task (7 points). As a prerequisite for completing the task, students should have learnt what acid-base titration is; the law of multiple proportions; titration of salt mixtures; and the concepts of free and total alkalinity, the equivalence point, the titration curve, acid-base indicators, etc. The student’s task is to write down the equations of the chemical reactions that occur during the chemical analysis, calculate the free and total alkalinity of the natural water sample, and finally decide whether the water is suitable for preparing a beverage. If the decision is negative (which is the case, as the free alkalinity of the sample (6.5 mmol/L) exceeds the given value (5.0 mmol/L)), the student should suggest a possible way of reducing alkalinity. As a rule, the alkaline excess can be neutralized by water acidification, and the right choice of acid in terms of naturalness and usefulness is essential.
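The calculation step of this task can be sketched in Python under stated assumptions. The sketch assumes titration of the water sample with a monoprotic strong acid of known molarity, with free alkalinity taken from the volume consumed to the first (e.g., phenolphthalein) endpoint and total alkalinity from the volume consumed to the final (e.g., methyl orange) endpoint. The titrant concentration and all volumes are hypothetical and are chosen only so that the free alkalinity matches the 6.5 mmol/L quoted above; the actual exam data appear in Table 2. The 5.0 mmol/L limit is the value given in the text.

```python
def alkalinity_mmol_per_l(acid_molarity, acid_volume_ml, sample_volume_ml):
    """Alkalinity in mmol/L from an acid-base titration.

    acid_molarity * acid_volume_ml gives mmol of acid consumed; dividing by
    the sample volume in mL and multiplying by 1000 converts to mmol per litre.
    """
    return acid_molarity * acid_volume_ml * 1000 / sample_volume_ml


FREE_ALKALINITY_LIMIT = 5.0  # mmol/L, the given value quoted in the text

# Hypothetical titration data (not taken from the exam task)
ACID_MOLARITY = 0.1     # mol/L, assumed HCl titrant
SAMPLE_VOLUME = 100.0   # mL of natural water
V_FIRST_ENDPOINT = 6.5  # mL of acid to the first endpoint
V_FINAL_ENDPOINT = 9.0  # mL of acid in total to the final endpoint

free = alkalinity_mmol_per_l(ACID_MOLARITY, V_FIRST_ENDPOINT, SAMPLE_VOLUME)
total = alkalinity_mmol_per_l(ACID_MOLARITY, V_FINAL_ENDPOINT, SAMPLE_VOLUME)
suitable = free <= FREE_ALKALINITY_LIMIT

print(f"free = {free:.1f} mmol/L, total = {total:.1f} mmol/L, suitable: {suitable}")
```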

2.6. Statistical Analysis and Data Treatment

The statistical analysis included hypothesis testing used to assess the plausibility of the proposed hypotheses.

The null hypothesis H0* states that at each phase of the experiment, the scores received by the control and experimental groups of students follow a normal distribution.

The alternative hypothesis H1* states that the score distribution is non-normal.

The null hypothesis H0 states that there is no statistically significant difference between the results of testing activities obtained by students of the control and experimental groups at each phase of the experiment.

The alternative hypothesis H1 states that there is a statistically significant difference between the results of testing activities obtained by students of the control and experimental groups at each phase of the experiment.

All the data obtained were processed with RStudio software packages. The statistical analysis included the Shapiro–Wilk test (W-test) for normality, the Fisher test (F-test) for comparing two variances, and the Student’s t test for comparing group means. The level of significance was set at 0.05.
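The two comparison statistics named above can be sketched in a few lines. The study itself used RStudio; the Python version below is only an illustration that relies on the standard library, and the score lists are hypothetical, not the study’s data. The Shapiro–Wilk test is omitted because the standard library does not provide it. The t statistic uses the pooled-variance form, which assumes equal variances in the two groups (the assumption the F-test is meant to check).

```python
from math import sqrt
from statistics import mean, variance


def f_ratio(a, b):
    """F statistic: larger sample variance divided by the smaller one."""
    va, vb = variance(a), variance(b)
    return max(va, vb) / min(va, vb)


def pooled_t(a, b):
    """Student's t statistic for two independent samples with pooled variance.

    Returns the statistic and the degrees of freedom (n_a + n_b - 2).
    """
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / standard_error, df


# Hypothetical scores for illustration only
control = [52, 61, 58, 70, 65, 59, 63, 68]
experimental = [60, 72, 66, 75, 71, 64, 69, 78]

t_stat, df = pooled_t(experimental, control)
print(f"F = {f_ratio(control, experimental):.3f}")
print(f"t = {t_stat:.3f} with {df} degrees of freedom")
```

The absolute value of the t statistic is then compared with the critical value for the corresponding degrees of freedom at the 0.05 level; the critical value depends on the sample sizes, so it differs between this toy sample and the study.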

3. Results

The findings of the experiment are displayed in Table 3, which summarizes the results of the assessment at different stages of the experiment and their statistical processing. The critical values for the Shapiro–Wilk test and the Student’s t test were Wscr = 0.9186 and tcr = 2.011 (for degrees of freedom ν = 48).

Table 3.

Results of assessments taken in the control and experimental groups obtained at different phases of the experiment.

At all three stages of the experiment, the Shapiro–Wilk test value is above the critical value and the ps value is above 0.05 (Table 3). These data give no grounds to reject the null hypothesis H0*, so we accept that the scores received by the control and experimental groups follow a normal distribution.

The analysis of the pre-test data (Table 3) shows that the value of the t test (t = 0.3097) is below the critical value, the pt value (pt = 0.7581) is above 0.05, and the confidence interval for the difference between group means includes zero. This enables us to accept the null hypothesis H0, which states that there is no statistically significant difference between the pre-test results obtained by the control and experimental students, and to reject the alternative hypothesis H1. These findings suggest that students of the two groups had comparable levels of prior knowledge.

At the same time, it is apparent from Table 3 that for all assessment methods the mean score in the experimental group is higher than in the control group. The experimental value of the t test is above the critical value and the pt value is below 0.05. This allows us to reject the null hypothesis H0 and to accept the alternative hypothesis H1, which states that there is a statistically significant difference between the results of assessment in the control and experimental groups for each consecutive assessment method at the focus and final phases of the experiment, namely the integrative achievement test, the oral and written exams, and the delayed post-test. However, it is worth noting that although the difference between the mean scores obtained in the integrative achievement tests is statistically significant, it does not allow us to conclude that the experimental group students performed better, because the content of the tasks for the experimental group differed from that of the control group. The degree of performance can be measured by analyzing the exam (oral and written) and delayed post-test scores.

To assess the validity of the integrative achievement tests conducted in the two groups (conventional tests in the control group; tests with the inclusion of context-based tasks in the experimental group), the array of scores for these tests was compared with the arrays of scores for the written part of the exam, the oral part of the exam, and the total exam grade. The correlation between the arrays was computed using RStudio software. The resulting correlation coefficients were 89.8%, 89.6%, and 90.1%, respectively. The results indicate a sufficiently high level of validity of the context-based and traditional tests used.
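A correlation between two score arrays of this kind can be sketched as follows. The paper does not state which correlation coefficient was computed; the sketch assumes the Pearson coefficient, and the score lists are hypothetical. A coefficient of about 0.90 corresponds to the roughly 90% reported above.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("both lists need the same length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


# Hypothetical scores of the same students on two assessments
achievement_test = [55, 62, 70, 48, 81, 66, 74, 59]
written_exam = [58, 60, 73, 50, 85, 63, 78, 61]

print(f"r = {pearson_r(achievement_test, written_exam):.3f}")
```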

4. Discussion

Effect sizes were calculated using Cohen’s d [40]. For the pre-test, the effect size for the difference between group means is almost zero (d < 0.1), while for the other tests (integrative achievement test, oral exam, written exam, oral and written exams combined, post-test) the effect is medium, with d ranging from 0.54 to 0.65.
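For reference, Cohen’s d for two independent groups is the difference between the group means divided by the pooled standard deviation. The sketch below assumes this pooled form and Cohen’s conventional thresholds (0.2 small, 0.5 medium, 0.8 large); the function names and the score lists are illustrative and hypothetical, not taken from the study.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(a, b):
    """Cohen's d: mean difference divided by the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_sd = sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))
    return (mean(a) - mean(b)) / pooled_sd


def effect_label(d):
    """Verbal label based on Cohen's conventional thresholds."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


# Hypothetical scores for illustration only
control = [52, 61, 58, 70, 65, 59, 63, 68]
experimental = [57, 66, 60, 73, 70, 62, 65, 74]

d = cohens_d(experimental, control)
print(f"d = {d:.2f} ({effect_label(d)})")
```

Under these thresholds, the values reported in the study (0.54 to 0.65) fall in the medium range, and the pre-test value (below 0.1) is negligible.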

The findings of the experiment are in line with the relevant studies [25,41]. Some correlation can be found with the findings presented by Sevian et al. [42], who compared student learning outcomes in two university chemistry courses. It can be concluded that context-based tasks offered by the teacher during lectures and laboratory sessions have positively affected students’ learning and conceptual understanding of the subject of study. Though the current research does not analyze student responses by applying the HOCS/LOCS taxonomy, we can assume that context-based learning activities may have a positive effect on enhancing student research skills [33]. For Russian students, the study of chemistry is a requirement for bachelor’s degree programs in Biotechnology; Commodities Management and Expertise; and Food Processing Technology and Public Catering. However, because their major is not chemistry, LOCS are considered acceptable and satisfactory and can be used as a launching pad for acquiring HOCS in further studies of profession-targeted subjects. At the university level, teaching of chemistry includes algorithmic and/or LOCS-oriented exams. LOCS questions in chemistry teaching and assessment (examination) are knowledge questions that require just a recall of information or a simple application of theory or knowledge to familiar situations and contexts. They may be solvable by means of algorithmic processes through specific directives or practices [24,43] (p. 187). Our approach gives students more opportunities to apply theory and knowledge in a professional context.

It is appropriate to emphasize that in our study both groups, experimental and control, participated in the same process of contextual learning, attended lectures in a single stream, and performed the same tasks during laboratory workshops with contextual content. The only differences were in the assessment (testing) tools; that is why the analysis of the experimental results allows us to give an affirmative answer to the two research questions stated in Section 2.1 of the paper. Context-based testing as an assessment tool of context-based learning can contribute to a higher degree of knowledge acquisition and to a higher level of retained knowledge as compared to more traditional testing approaches.

5. Limitations of the Study

Some limitations are worth noting. The sample size (n = 50) was limited by the number of students that could be enrolled in this course; therefore, the findings should be interpreted with caution to avoid overgeneralization. As mentioned in the Discussion, the current research does not analyze student responses by applying the HOCS/LOCS taxonomy. Although our research questions were supported statistically, the sample was not reassessed once the experiment was over. Therefore, it is not possible to evaluate how long the skills are retained after the end of the course.

Another aspect that should be mentioned is the potential issue of multiple t-tests. In our experiment, we were interested in understanding the true difference between a single pair of groups, control and experimental. Repeated testing, or multiple t-tests, with more than two groups might increase the probability of a false positive finding [44].
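The size of the multiple-testing problem can be made concrete with a short calculation. The sketch below assumes that the tests are independent, which is a simplification (scores of the same students on successive assessments are likely correlated), and uses six comparisons only as an illustrative count. The Bonferroni adjustment shown is one common correction, not a procedure applied in the study.

```python
def familywise_error_rate(alpha, n_tests):
    """Probability of at least one false positive among n independent tests."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level that keeps the overall rate at or below alpha."""
    return alpha / n_tests


ALPHA = 0.05  # significance level used in the study

for n_tests in (1, 3, 6):
    rate = familywise_error_rate(ALPHA, n_tests)
    adjusted = bonferroni_alpha(ALPHA, n_tests)
    print(f"{n_tests} test(s): family-wise rate = {rate:.3f}, "
          f"Bonferroni per-test alpha = {adjusted:.4f}")
```

Under the independence assumption, six tests at the 0.05 level give a family-wise false positive probability of about 0.26.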

Certain limitations are linked to the length of the course; it totals 66 contact teaching hours. As a result, the experiment was limited in terms of time, contents, and—what was especially important for this experiment—the range of context-based tasks.

6. Conclusions

As a result of the research, the authors have developed the concept of context-based testing as an assessment tool in context-based teaching and learning, have designed context-based tests in analytical chemistry, and have made an attempt to evaluate the impact of their application on students’ achievement. Both the experimental and control student groups were exposed to context-based teaching and learning (lectures and laboratory sessions). The key difference was in the type of assessment tools used.

The experiment has shown that students in the experimental group, who were assessed with context-based tests, achieved significantly higher scores than students in the control group, who were assessed with traditional tests.

The findings of the experiment allow us to answer the guided research questions. In comparison with conventional testing, context-based testing could contribute to (1) a higher degree of knowledge acquisition at the end of the instructional unit and (2) more solid knowledge acquisition, i.e., a higher level of retained knowledge. These findings are in line with the literature cited in Ulusoy and Onen [6] (p. 543) that indicates higher achievement levels as a consequence of context-based learning approach.

The findings from this research have implications for designing more efficient curricula and, in particular, assessment methods, not only for a university-level analytical chemistry course but also for other science courses. The analysis of the data through the lens of context-based teaching and learning can provide a framework to help instructors develop a course with more meaningful materials. The implication of the work for faculty is to expand their awareness of the potential of applying context-based testing in teaching analytical chemistry or other science courses to university students with nonscience majors. Another benefit might be a dialogue with the teaching community that promotes advanced studies on effective science classroom practices.

Author Contributions

Conceptualization, methodology and investigation, B.B. and N.S.; testing, writing—original draft preparation, B.B., N.S. and I.P.; writing—review and editing, I.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the ethical protocol of the Institute of Trade, Food Technologies and Services and the ethical principles for conducting research at USUE.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. The research was conducted according to the ethical principles for conducting research at the Ural State University of Economics.

Data Availability Statement

More about the research and project on providit.uniri.hr (accessed on 20 May 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gilbert, J.K. On the nature of ‘context’ in chemical education. Int. J. Sci. Educ. 2006, 28, 957–976.

- de Jong, O. Making Chemistry Meaningful. Conditions for Successful Context-based Teaching. Educ. Quím. 2006, 17, 215–221.

- Wannagatesiri, T.; Fakcharoenphol, W.; Laohammanee, K.; Ramsiri, R. Implication of context-based science in middle school students: Case of learning heat. Int. J. Sci. Math. Technol. Learn. 2017, 24, 1–19.

- Prins, G.T.; Bulte, A.M.W.; Pilot, A. Evaluation of a design principle for fostering students’ epistemological views on models and modelling using authentic practices as contexts for learning in chemistry education. Int. J. Sci. Educ. 2011, 33, 1539–1569.

- Poikela, E. Developing criteria for knowing and learning at work: Towards context-based assessment. J. Workplace Learn. 2004, 16, 267–274.

- Ulusoy, F.M.; Onen, A.S. A research on the generative learning model supported by context-based learning. Eurasia J. Math. Sci. Technol. Educ. 2014, 10, 537–546.

- Rahayu, S.; Chandrasegaran, A.L.; Treagust, D.F.; Kita, M.; Ibnu, S. Understanding acid-base concepts: Evaluating the efficacy of a senior high school student-centred instructional program in Indonesia. Int. J. Sci. Math. Educ. 2011, 9, 1439–1458.

- Di Fuccia, D.; Galagovsky, L.; Pérgola, M.; Diaz, I.S. Chemie im Kontext: The Students’ View on its Adaption in Spain and Argentina—Two Case Studies. Sci. Educ. 2019, 9, 131–145.

- Ilhan, N.; Yildirim, A.; Yilmaz, S.S. The effect of context-based chemical equilibrium on grade 11 students’ learning, motivation and constructivist learning environment. Int. J. Environ. Sci. Educ. 2016, 11, 3117–3137.

- Kazeni, M.; Onwu, G. Comparative effectiveness of context-based and traditional approaches in teaching genetics: Student views and achievement. Afr. J. Res. Math. Sci. Technol. Educ. 2013, 17, 50–62.

- Ültay, N.; Çalik, M. Comparison of Different Teaching Designs of ‘Acids and Bases’ Subject. Eurasia J. Math. Sci. Technol. Educ. 2016, 12, 57–86.

- Bennett, J.; Lubben, F. Context-based chemistry: The Salters approach. Int. J. Sci. Educ. 2006, 28, 999–1015.

- Schwartz, A.T. Contextualized chemistry education: The American experience. Int. J. Sci. Educ. 2006, 28, 977–998.

- Hofstein, A.; Kesner, M. Industrial chemistry and school chemistry: Making chemistry studies more relevant. Int. J. Sci. Educ. 2006, 28, 1017–1039.

- Parchmann, I.; Gräsel, C.; Baer, A.; Nentwig, P.; Demuth, R.; Ralle, B.; the Chik Project Group. “Chemie im Kontext”: A symbiotic implementation of a context-based teaching and learning approach. Int. J. Sci. Educ. 2006, 28, 1041–1062.

- Bulte, A.M.W.; Westbroek, H.B.; de Jong, O.; Pilot, A. A Research Approach to Designing Chemistry Education using Authentic Practices as Contexts. Int. J. Sci. Educ. 2006, 28, 1063–1086.

- Mahaffy, P.G.; Holme, T.A.; Martin-Visscher, L.; Martin, B.E.; Versprille, A.; Kirchhoff, M.; McKenzie, L.; Towns, M. Beyond “Inert” Ideas to Teaching General Chemistry from Rich Contexts: Visualizing the Chemistry of Climate Change (VC3). J. Chem. Educ. 2017, 94, 1027–1035.

- Cigdemoglu, C.; Geban, O. Improving Students’ Chemical Literacy Level on Thermochemical and Thermodynamics Concepts through Context-Based Approach. Chem. Educ. Res. Pract. 2015, 16, 302–317.

- Ültay, N.; Durukan, Ü.G.; Ültay, E. Evaluation of the Effectiveness of Conceptual Change Texts in REACT Strategy. Chem. Educ. Res. Pract. 2015, 16, 22–38.

- Günter, T. The effect of the REACT strategy on students’ achievements with regard to solubility equilibrium: Using chemistry in contexts. Chem. Educ. Res. Pract. 2018, 19, 1287–1306.

- Putri, M.E.; Saputro, D.R.S. The effect of application of react learning strategies on mathematics learning achievements: Empirical analysis on learning styles of junior high school students. Int. J. Educ. Res. Rev. 2019, 4, 231–237.

- Sevian, H.; Dori, Y.J.; Parchmann, I. How does STEM context-based learning work: What we know and what we still do not know. Int. J. Sci. Educ. 2018, 40, 1095–1107.

- Holme, T.; Bretz, S.L.; Cooper, M.; Lewis, J.; Paek, P.; Pienta, N.; Stacy, A.; Stevens, R.; Towns, M. Enhancing the role of assessment in curriculum reform in chemistry. Chem. Educ. Res. Pract. 2010, 11, 92–97.

- Zoller, U.; Tsaparlis, G. Higher and Lower-Order Cognitive Skills: The Case of Chemistry. Res. Sci. Educ. 1997, 27, 117–130.

- Parchmann, I.; Broman, K.; Busker, M.; Rudnik, J. Context-Based Teaching and Learning on School and University Level. In Chemistry Education: Best Practices, Innovative Strategies, and New Technologies; Garcia-Martinez, J., Serrano, E., Eds.; Wiley-VCH: Weinheim, Germany, 2015; pp. 259–278.

- Reshetova, L.V.; Stozhko, D.K. Institutional Changes in the Macroregional Model of the Real Technological Development. Upr. Manag. 2015, 53, 33–37.

- Stozhko, D.K.; Bortnik, B.I.; Stozhko, N.Y. Managing the innovative educational environment of the university in the context of knowledge economy. In Proceedings of the 2nd International Scientific Conference on New Industrialization: Global, National, Regional Dimension (SICNI 2018), Ekaterinburg, Russia, 4–5 December 2018; pp. 422–426.

- Williams, P. Assessing context-based learning: Not only rigorous but also relevant. Assess. Eval. High. Educ. 2008, 33, 395–408.

- Broman, K.; Parchmann, I. Students’ application of chemical concepts when solving chemistry problems in different contexts. Chem. Educ. Res. Pract. 2014, 15, 516–529.

- Good, M.; Marshman, E.; Yerushalmi, E.; Singh, C. Physics teaching assistants’ views of different types of introductory problems: Challenge of perceiving the instructional benefits of context-rich and multiple-choice problems. Phys. Rev. Phys. Educ. Res. 2018, 14, 020120.

- Stozhko, N.; Bortnik, B.; Mironova, L.; Tchernysheva, A.; Podshivalova, E. Interdisciplinary project-based learning: Technology for improving student cognition. Res. Learn. Technol. 2015, 23, 27577.

- Stozhko, N.; Bortnik, B.; Tchernysheva, A.; Podshivalova, E. Context-based student research project work within the framework of the analytical chemistry course. Chemistry 2017, 26, 727–736.

- Bortnik, B.; Stozhko, N.; Pervukhina, I.; Tchernysheva, A.; Belysheva, G. Effect of virtual analytical chemistry laboratory on enhancing student research skills and practices. Res. Learn. Technol. 2017, 25, 1968.

- Prins, G.T.; Bulte, A.M.W.; Pilot, A. Designing context-based teaching materials by transforming authentic scientific modelling practices in chemistry. Int. J. Sci. Educ. 2018, 40, 1108–1135.

- Talanquer, V. Commonsense chemistry: A model for understanding students’ alternative conceptions. J. Chem. Educ. 2006, 83, 811–816.

- Broman, K. Chemistry: Content, Context and Choices. Towards Students’ Higher Order Problem Solving in Upper Secondary School. Ph.D. Thesis, Umeå universitet, Umeå, Sweden, 2015.

- Creswell, J.W. Research Design: Qualitative, Quantitative and Mixed Methods Approaches, 4th ed.; Sage Publications: Thousand Oaks, CA, USA, 2014.

- Starichenko, B.E.; Mamontova, M.Y.; Slepuhin, A.V. Metodika ispol’zovaniya iformasionno-kommunikatsionnykh tekhnologiy v uchebnom protsesse. Part 3. Komp’yuternyye tekhnologii diagnostiki uchebnykh dostizheniy. In Methodology of Using Information and Communication Technologies in Teaching. Computer Technologies for Diagnostics of Learning Achievements; USPU: Yekaterinburg, Russia, 2013. (In Russian)

- Stozhko, N.Y.; Tchernysheva, A.V.; Podshivalova, E.M.; Bortnik, B.I. Context-based chemistry lab work with the use of computer-assisted learning system. Chemistry 2016, 25, 67–276.

- Sullivan, G.M.; Feinn, R. Using Effect Size—Or Why the P Value Is Not Enough. J. Grad. Med. Educ. 2012, 4, 279–282.

- Broman, K.; Bernholt, S.; Parchmann, I. Analyzing task design and students’ responses to context-based problems through different analytical frameworks. Res. Sci. Technol. Educ. 2015, 33, 143–161.

- Sevian, H.; Hugi-Cleary, D.; Ngai, C.; Wanjiku, F.; Baldoria, J.M. Comparison of learning in two context-based university chemistry classes. Int. J. Sci. Educ. 2018, 40, 1239–1262.

- Zoller, U. Algorithmic, LOCS and HOCS (chemistry) exam questions: Performance and attitudes of college students. Int. J. Sci. Educ. 2002, 24, 185–203.

- Ranganathan, P.; Pramesh, C.S.; Buyse, M. Common pitfalls in statistical analysis: The perils of multiple testing. Perspect. Clin. Res. 2016, 7, 106–107.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).