Empirical Findings on Learning Success and Competence Development at Learning Factories: A Scoping Review

Abstract

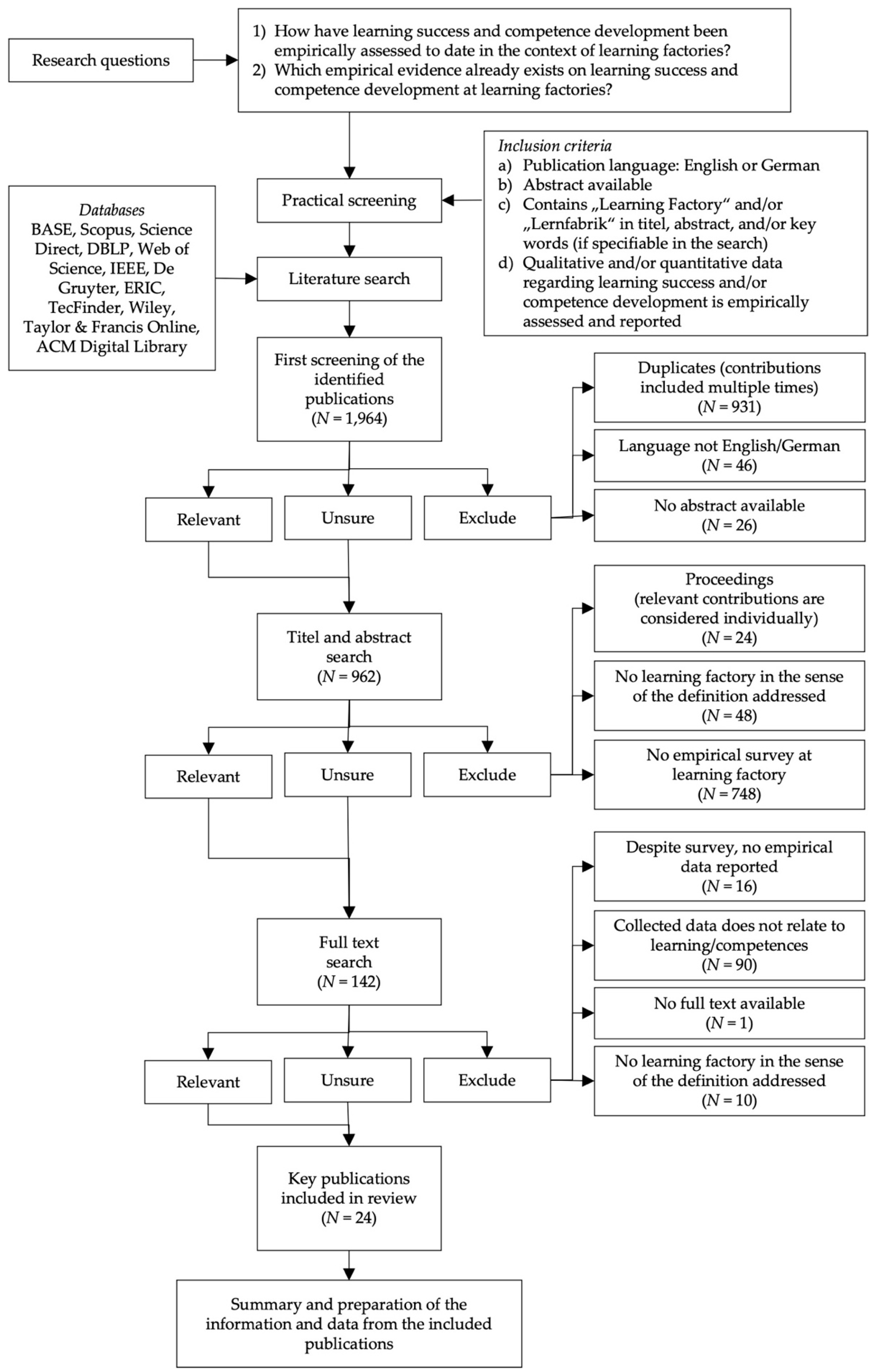

1. Introduction

Research Questions

- How have learning success and competence development been empirically assessed to date in the context of learning factories?

- Which empirical evidence already exists on learning success and competence development at learning factories?

2. Materials and Methods

3. Results

3.1. Characteristics of Publications and Studies

| Authors, Year (Source of Evidence) | Country (Type of Learning Factory) | Intervention (Duration) | Number and Origin of Participants | Type of Measurement (Measurement Time Points) | How Learning/Competences Assessed? | Findings |

|---|---|---|---|---|---|---|

| Morell de Ramirez, Velez-Arocho, Zayas-Castro, and Torres, 1998 [19] (Conference paper) | Puerto Rico (physical) | Seminar (one semester) | N = 181; students (multidisciplinary): n = 122, faculty: n = 14, industry: n = 42, other: n = 3 | Questionnaire for (self-)evaluation (post) | Questionnaire: 5 items on learning/competences (in the questionnaire for students), 5-point scale from “strongly agree” to “strongly disagree” | Responses that “strongly agree” and “agree”: • Communication skills emphasized: 89% of industry, 71% of faculty, and 80% of students • Teamwork skills emphasized: 93% of industry, 93% of faculty, and 97% of students • Better understanding of engineering: 78% of students • More confident in solving real life problems: 78% of students • More confident in their ability to teach themselves: 80% of students |

| Cachay, Wennemer, Abele, and Tenberg, 2012 [20] (Conference paper) | Germany (physical) | Trainings (4.5 h) Experimental group: 60 min. own experience at the learning factory Control group: 60 min. lesson with practical examples shown by instructor | N = 25 (students in engineering); experimental group: n = 16, control group: n = 9 | Knowledge test questionnaire (pre, post); practical application (post) | Knowledge test: Eight open questions regarding action-independent knowledge and 5 open questions regarding action-substantiating knowledge, answers for analysis evaluated on 5-point scale from 1 “no answer/not correct” to 5 “correct” Practical application: Time measurement, support evaluation | Knowledge test comparison post vs. pre: • Action-independent knowledge: experimental group improved in absolute difference by 27.5% more than control group • Action-substantiating knowledge: experimental group improved in absolute difference by 47.5% more than control group Practical application (working task at the learning factory): • Experimental group: divided into three groups, each took 10 to 30 min. without support • Control group: divided into two groups, each took approximately 60 min. with much support |

| Kesavadas, 2013 [21] (Conference paper) | USA (virtual) | Project work (14 weeks) | N = 38 (Bachelor’s and Master’s students in engineering) | Questionnaire for self-evaluation (post) | Questionnaire: Two items on learning/competences, 3-point scale from “fully agree” to “do not agree” | Responses that “fully agree” and “partially agree”: • Virtual Learning Factory helped to better understand the concepts taught in class: 42% fully agree, 47% partially agree • Virtual Factory project helped experience the progress better than the more traditional individual group/class project formats: 47% fully agree, 37% partially agree |

| Riffelmacher, 2013 [22] (Doctoral thesis) | Germany (physical, digital, and virtual) | Qualification (results based on several trainings; duration not specified) | N = approximately 13 (professionals from industries of variant-rich series production and with background in industrial engineering) | Questionnaire for self-evaluation (post, ex post after 6 months) | Questionnaire: Post 7 to 14 questions for each learning module, ex post 6 questions in total, 6-point scale from 1 “fulfilled” to 6 “not fulfilled” | Post questionnaire: • As far as reported, the mean values of the individual items ranged between M = 1.1 and M = 2.3. Mean values of relevant items from the ex post questionnaire: • “Have you personally used methods and tools to manage turbulence?”—M = 1.2 • “In order to manage turbulences, have you carried out the planning steps foreseen in the qualification concept that fall within your area of responsibility?”—M = 1.4 • “Were you able to transfer the content and approach to turbulence management into your daily work routine and thus apply it?”—M = 1.4 |

| Ahmad, Liukkunen, and Markkula, 2014 [23] (Conference paper) | Finland (physical Software Factory) | Project course (7 projects at the software factory; duration not specified) | N = 19 (Master’s students in engineering) | Questionnaire for self-evaluation (post) | Questionnaire: 8 items for improvement in eight competence areas, 5-point scale from 1 “strongly disagree” to 5 “strongly agree” | Reported mean values of the competences: • Effective task management—M = 3.68 • Solving complex problems—M = 3.58 • Sharing responsibilities—M = 3.74 • Developing a shared vision—M = 3.68 • Building a positive relationship—M = 3.84 • Negotiating with other groups—M = 3.89 • Use of rational argument to persuade others—M = 3.32 • Resolving conflict—M = 3.21 |

| Plorin, Jentsch, Hopf, and Müller, 2015 [24] (Conference paper) | Germany (physical and digital) | Trainings (different group compositions and contents from a module pool; duration not specified) | N = 31 (employees from various backgrounds) | Questionnaire for self-evaluation (pre, ex post 2 months after training) | Questionnaire: 12 knowledge and competence areas queried, 6-point scale from 1 “very good” to 6 “very bad” | Improvement of the mean values of the effectiveness of knowledge transfer in all queried areas: Environmental influences, energy policy, energy needs assessment of buildings, forms of energy in general, energy balance, energy generation, energy conversion, energy distribution, energy recovery, compressed air leaks, approaches to increase energy efficiency, energy management according to DIN ISO 50001 |

| Zinn, Güzel, Walker, Nickolaus, Sari, and Hedrich, 2015 [25] (Journal article) | Germany (physical and simulated) | Training (3 days) | N = 35 (trainees, dual students, inexperienced employees from mechatronics and service technology); Group 1: n = 7, Group 2: n = 13, Group 3: n = 5 | Questionnaire for self-evaluation (pre, post); knowledge test questionnaire (pre, post); practical application (post) | Questionnaire for self-evaluation: 37 items on how confident participants feel with regard to certain areas, 5-point scale from 1 “not confident at all” to 5 “very confident” Knowledge test: 37 open questions (partly adapted based on Zinke et al. [26] and Abele et al. [27]), answers for analysis evaluated Computer simulation: Processing 4 error cases, processing documented, + answer 5 competence items | Self-attributed professional knowledge: • Participants rate themselves significantly better after the training than before (p < 0.001, Cohen’s d = 0.73) Increase professional knowledge from pre to post (t-test): • Overall group: pre 48%, post 60% (p < 0.001, d = 0.71) • Group 1: pre 39%, post 52% (p < 0.001, d = 0.80) • Group 2: pre 54%, post 67% (p < 0.001, d = 0.92) • Group 3: pre 50%, post 60% (p < 0.01, d = 0.55) Increase in fault diagnosis competence from pre to post (t-test): • Overall group: pre 31%, post 46% (p < 0.001, d = 0.76) • Group 1: pre 29%, post 49% (p < 0.01, d = 1.1) • Group 2: pre 30%, post 35% (p = 0.305, d = 0.30) • Group 3: pre 35%, post 55% (p < 0.001, d = 1.0) |

| Granheim, 2016; Ogorodnyk, 2016 [15,16] (Master’s theses) | Norway (physical) | Case study (duration not specified) | N = 11 (Master’s students in engineering); Group 1: n = 6, Group 2: n = 5 | Practical application (continuously); group interview (post, ex post after 1 week) | Time measurement: How long did assembly 1 vs. assembly 2 take (between assembly cycles, participants could adjust assembly stations according to their own preferences) Group interviews: Open questions on knowledge and application, content evaluation of the answers | Time measurement: • Group 1 (one pair of roller skis): assembly cycle 1 (first roller ski) = 25 min., assembly cycle 2 (second roller ski) = 13 min. • Group 2 (two pairs of roller skis): assembly cycle 1 = 35 min. (first roller ski) assembly cycle 2 = 16 min. (three roller ski with approximately 5 min. each) Interviews post: Participants of both groups were able to answer all questions (e.g., changes/improvements related to the theory; What does kaizen mean to you now? Is it better to use pull or push?) Interviews ex post: Participants of both groups could answer all questions (What theoretical aspects do you remember?; What types of waste do you remember?; How did you apply this part of the theory in the activity?) |

| Henning, Hagedorn-Hansen, and von Leipzig, 2017 [28] (Journal article) | South Africa (physical) | Games (duration not specified) | N = 368 (students); Beer Game: n = 195, Lego Car Game: n = 78, Train Game: n = 12, Off-Roader LEGO Car Game: n = 11, The Fresh Connection: n = 72 | Questionnaire for self-evaluation (post) | Questionnaire: Knowledge estimation using knowledge dimension levels based on the revised Bloom’s taxonomy [29] with 4 levels: 1 “factual”, 2 “conceptual”, 3 “procedural”, 4 “metacognitive” (no information given on scales or items) | 70% of participants of Train Game chose levels 3 and 4 and over 90% of participants of all other games chose levels 3 and 4, indicating that the students have learned certain terms or theories through the experience |

| Makumbe, 2017; Makumbe, Hattingh, Plint, and Esterhuizen, 2018 [17,18] (Master’s thesis, Conference paper) | South Africa (physical) | Training (2 days plus coaching and implementation in the workplace) | N = 26 (mining employees); Group 1: n = 12 (engineers, production crew, business improvement specialists), Group 2: n = 14 (operations support services) | Knowledge test questionnaire (pre, post); observation (continuously); Interviews (ex post); practical application (ex post with two times of measurement) | Knowledge test: Pre 5 and post 11 open questions regarding the understanding of the 5 lean principles, answers for evaluation evaluated on 4-point scale from 1 “not understood” to 4 “well understood” Observation: By researchers using structured observation tables Interview: Unstructured open questions regarding knowledge and application Practical application: Implementation on the job, analysis of statistical process control charts | Knowledge test: • Group 1: significant improvement of 3 out of 5 lean principles, but improvement overall not significant (t-test) • Group 2: significant improvement of 4 out of 5 lean principles, improvement overall significant (t-test) Observations: • Support the results of the knowledge tests for both groups Interview ex post (both groups combined): • Participants still remembered the theoretical concepts they learned during the activity, and were able to define all of them and give examples of how they were applied during the activity • Participants indicated they could apply the knowledge they had acquired when needed as they knew how and where it could be used Process data on implementation in the company: • Group 1: Improvement resulted in reduction in variability, change in production is significant (t-test) • Group 2: No data on implementation |

| Glass, Miersch, and Metternich, 2018 [30] (Conference paper) | Germany (physical) | Practical exercises as part of a lecture (duration not specified) | N = 30 to 45 (Master’s students in engineering); no direct control group, but grade comparison with students who did not attend the intervention | Observation (continuously); questionnaire for self-evaluation (retrospective pre, post); exam grades (post) | Questionnaire: Self-assessment of the level of knowledge, items developed based on Erler et al. [31], 6-point scale from 1 “very good” to 6 “deficient” Observation: Depending on exercise, 25–40% of the participants, work samples during the exercises evaluated using the method of Schaper [32]; Exam grades | Questionnaire: • Yamazumi: Before the exercise the grades vary, and the ratings are spread. After the exercise, the grade two was selected more than 50% of the time. Grade one, two, and three together make up around 95% of all given grades. • Value-Stream-Mapping: improvement through the exercise is rated with over one whole grade point in the practical measures from pre M = 3.25 to post M = 2.10, in “evaluation and interpretation” with a half and in the social competences with a quarter of a grade point; t-test: overall improvement from pre to post is significant Observation: • Correlation with questionnaire data is 50% Exam grades: • 90% of the exam questions addressed in the practical exercises • t-tests: students, who attended at least 75% of the exercises, achieve more points than students, who did not visit the exercises; students who attended more than 75% of all exercises did not achieve a higher score on a task with no correlating exercise |

| Balve and Ebert, 2019 [33] (Conference paper) | Germany (physical) | Project work (15 weeks) | N = 45 (former Bachelor’s students in engineering) | Questionnaire for self-evaluation (ex post) | Questionnaire: Out of 42 competences participants asked to choose a maximum of 6 competences, that they believe were specifically strengthened using the learning factory | Overall ranking of competences of at least 30%: Organizational skills (47%), time management (42%), interdisciplinary thinking (36%), recognizing interrelations (31%), problem solving ability (31%); social competences were hardly selected |

| Reining, Kauffeld, and Herrmann, 2019 [34] (Conference paper) | Germany (physical) | Seminar with practical, research-based group work at the learning factory (one semester) | N = 8 (Master’s students in engineering); Group 1: n = 4, Group 2: n = 4 | Video data (continuously); questionnaire for self-evaluation (pre, post) | Video: Group work recorded, conversations divided into sense units and analyzed Questionnaire: Scale on affinity for technology (items negatively worded) adapted from Richter et al. [35], 5-point scale from 1 “not true at all” to 5 “very true” | Video: • Both groups: Interactions mostly address professional competences (M = 50%), followed by social competences (M = 32.2%), methodological competences (M = 6.9%), and self-competences (M = 3.8%) • Most sense units allocated to the criterion “technical knowledge, knowledge of science and mechanics” (30.7%), “analytical thinking” (10.1%), and “communication skills” (31.3%) • Differences in the distribution of addressed competences: a) between groups, b) depending on whether groups work theoretically in the seminar room, on the computer or practically at the learning factory Questionnaire: • Participants rated their affinity for technology post (M = 1.83, SD = 0.60) somewhat more positively than pre (M = 2.04, SD = 0.77) Overall: • Comparison with existing competence model for intervention: all competences of the model addressed in videos or positively assessed in questionnaire |

| Devika Raj, Venugopal, Thiede, Herrmann, and Sangwan, 2020 [36] (Conference paper) | India/Germany (physical) | No specific intervention (duration not specified) | N = 14 (students in engineering and instructors who have practical experience at a learning factory) | Interview (ex post) | Interview: Semi-structured to identify transversal competences that can be strengthened at the learning factory, recorded, transcribed, and coded with MAXQDA | Interview: • Learning factories provide an environment to develop four transversal competences: (1) teamwork (interaction, problem-solving, leadership), (2) communication (oral presentation, foster communication and interaction, cross-cultural communication), (3) creativity and innovation (idea generation, product generation, self-exposure), and (4) lifelong learning (reflection, acquiring and learning, initiating) • Learners were able to use transversal competences in all three phases (planning, execution, reflection) at the learning factory, although to varying degrees |

| Juraschek, Büth, Martin, Pulst, Thiede, and Herrmann, 2020 [37] (Conference paper) | Germany (physical) | GameJam (3 days) | N = 18 (no further information given) | Questionnaire for self-evaluation (pre, post) | Questionnaire: No information given on scales or items, 5-point scale from 1 “low consent” to 5 “high consent” | Cumulative self-assessment of relevant competences: Value increased from pre M = 3.0 to post M = 3.5 |

| Omidvarkarjan, Conrad, Herbst, Klahn, and Meboldt, 2020 [38] (Conference paper) | Switzerland (physical) | Training (2 days) | N = 7 (Bachelor’s and Master’s students in engineering) | Written feedback (post) | Written feedback: Participants put in writing their thoughts on their perceived learnings with regard to the agile principles, results were analyzed qualitatively | Number of mentions of learned agile principles, that are consistent with the principles taught during training: Frequent interactions = 3, test-driven development = 3, self-organizing teams = 4, iterative progression = 7, continuous improvement = 1, customer involvement = 2, accommodating change = 1, simplicity = 1 |

| Sieckmann, Petrusch, and Kohl, 2020 [39] (Conference paper) | Germany (physical) | Training (duration not specified) | N = 66 (Master’s students in engineering); 4 experimental groups, which differed in terms of problem (case study, own problem) and social form of interaction (small group, plenary): Group 1: n = 18, Group 2: n = 15, Group 3: n = 16, Group 4: n = 17 | Questionnaire for self-evaluation (pre, post); practical application (post) | Questionnaire: 1 item each for 7 lean methods, 5-point scale from 1 “unknown” to 5 “can moderate” Practical application: Application of A3 method in individual work, participants fill out a template for processing, which is evaluated | Questionnaire (evaluation of all participants): • Significant positive change in understanding of all elements of the learning unit from pre to post • A3 method: increase in reported ability to apply the method from pre 5.4% to post 66.2%, with an additional 21.6% confident in moderating the method • Ishikawa diagram: ability to apply and moderate increased from pre 67.5% to post over 90% • Must-criteria analysis: pre unknown to 87.7%, post 60% at least could apply the method Practical application: Mean values for practical problem solving depending on the previous experimental group Group 1: small group, own problem—M = 90.3% Group 2: small group, case study—M = 73.6% Group 3: plenum, own problem—M = 77.0% Group 4: plenum, case study—M = 68.0% |

| Adam, Hofbauer, and Stehling, 2021 [40] (Journal article) | Austria (physical) | Training (1.5 days) | N = 234 (employees of various backgrounds) | Questionnaire for self-evaluation (post) | Questionnaire: Three items on understanding of lean tools (scale not given), four items on challenges (4-point scale from “very simple” to “difficult”), 4 items on types of help (4-point scale from “very helpful” to “less helpful”) | • Post: lean tools understood by 47% to 74% of participants • Significant correlation between understanding and a) an alternation between theory and practice and b) a do-it-yourself approach (Chi2, both p = 0.00) • Over 50% of participants refuse to implement even the simplest lean tool; shop floor members have more doubts than management and office staff (Chi2, p = 0.00) • Significant correlation between the intention to transfer and: (a) understanding of content (Chi2, p = 0.00), (b) how easy lean tools were to apply in training (Chi2, p = 0.00), (c) participants’ higher prior lean experience (Chi2, p = 0.00) |

| Mahmood, Otto, Kuts, Terkaj, Modoni, Urgo, Colombo, Haidegger, Kovacs, and Stahre, 2021 [41] (Conference paper) | not specified (virtual) | Workshop (duration not specified) | N = 15 (students) | Questionnaire for self-evaluation (post) | Questionnaire: Evaluation of the achieved learning level and self-assessment, 5-point scale from 1 “very dissatisfied” to 5 “very satisfied” | Questionnaire: Evaluation of the reached learning level of methods and digital tools with “very satisfied” and “satisfied”: • Workflow definition: 80% • Performance evaluation: 70% • Virtual modelling: 60% • Self-evaluation: acquired competences: 80% |

| Roll and Ifenthaler, 2021 [42] (Journal article) | Germany (physical) | Vocational training (8 × 45 min) | N = 71 (trainees of electronic professions); experimental group 1 (much time at learning factory): n = 18, experimental group 2 (medium amount of time at learning factory): n = 24, control group (no time at learning factory): n = 21 | Knowledge test questionnaire (pre, post, ex post after 4 weeks) | Knowledge test: • (a) Multidisciplinary digital competences: pre 13, post 12, and ex post 12 open questions, responses analyzed for evaluation with the help of content analyses; (b) Subject-related competences: pre 7, post 6 and ex post 10 open questions, responses rated for evaluation on 5-point scale from 0 “answer blank” to 4 “complete and perfect answer” | Multidisciplinary digital competences: • No significant interaction effect of interaction level and time of survey on multidisciplinary digital competences (df = 4, SS = 10,091, H = 3.37, p = 0.50, η2 = 0.02) • Significant results of a small interaction effect of interaction level and time of survey on the competence dimension problem solving (df = 4, SS = 28,812, H = 9.66, p = 0.05, η2 = 0.05), control group scored lower than both experimental groups Subject-related competences: • Interaction effect of interaction level and time of survey on technical competences was significant and had a medium strength (df = 4, SS = 4436, H = 14.88, p = 0.01, η2 = 0.08), with control group scores mostly lower than both experimental groups’ scores • Experimental group 1 and experimental group 2 each had performance peaks at post measurement and improved significantly here compared to the pre survey (experimental group 1: Diff = −0.70, p = 0.00, r = 0.33; experimental group 2: Diff = −2.42, p < 0.00, r = 0.77). • Experimental group 2 improved significantly in the long term, with a large effect at ex post survey compared to pre survey (Diff = 1.10, p = 0.00, r = 0.60) • Experimental group 1 improved significantly in the ex post survey compared to the pre-survey, but with negligible effect size (Diff = −0.13, p = 0.00, r = 0.03) |

| Kleppe, Bjelland, Hansen, and Mork, 2022 [43] (Conference paper) | Norway (physical) | Project work (duration not specified) | N = 13 (Bachelor’s and Master’s students in engineering) | Questionnaire for self-evaluation (post) | Questionnaire: Three items on learning/competences, 5-point scale from 1 “strongly disagree” to 5 “strongly agree” | Number of participants (out of 13) who “strongly agree” and “agree” with statements: “Project work in the IdeaLab increased my understanding for connecting design and manufacturing”: n = 12 “Use of machines and equipment at the IdeaLab have taught me skills I would not have learned in traditional classroom settings”: n = 13 “Access to the IdeaLab have made it easier for me to solve cases and assignments given in the course”: n = 13 |

| Urgo, Terkaj, Mondellini, and Colombo, 2022 [44] (Journal article) | Italy (virtual) | Serious Game (duration not specified) | N = 60 (Bachelor’s students) [Number of responses per level: level 1: n = 60, level 2: n = 59, level 3: n = 21, as not all participants completed the game] | Questionnaire for self-evaluation (post); grades (post) | Questionnaire: • 1 item on perceived knowledge, 5-point scale from 1 “strongly disagree” to 5 “strongly agree” Grade: From A to F: calculated on the basis of answers to each level as well as an assessment of the students’ knowledge and skills by Moodle | Questionnaire: “After taking part to this experience, my knowledge on the topic is better than before.”: participants that answered with “agree” or “strongly agree”: Level 1: 72%, Level 2: 61%, Level 3: 47% Grades: Level 1: A + B = 63%, C = 20%, D + F = 17% Level 2: A + B = 53%, C = 10%, D + F = 37% Level 3: A + B = 43%, C = 33%, D + F = 24% |
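The practical-application means reported for Sieckmann et al. [39] in the table above come from a 2 × 2 design (social form × problem type). As a reading aid only, the following minimal sketch computes the marginal means per factor level from the four reported group means. The marginal means are unweighted (group sizes of 15 to 18 are ignored), are not reported in the source, and carry no inferential weight; the function name `marginal_mean` and the dictionary layout are illustrative assumptions.

```python
# Group means (%) for practical problem solving, as listed in the table
# for Sieckmann et al. [39]. Keys: (social form, problem type).
scores = {
    ("small group", "own problem"): 90.3,
    ("small group", "case study"): 73.6,
    ("plenum", "own problem"): 77.0,
    ("plenum", "case study"): 68.0,
}


def marginal_mean(cell_means, position, level):
    """Unweighted mean over all cells whose key has `level` at `position`.

    position 0 = social form, position 1 = problem type.
    Illustrative helper; not part of the reviewed study.
    """
    values = [v for key, v in cell_means.items() if key[position] == level]
    return sum(values) / len(values)


if __name__ == "__main__":
    for position, levels in ((0, ("small group", "plenum")),
                             (1, ("own problem", "case study"))):
        for level in levels:
            print(f"{level}: {marginal_mean(scores, position, level):.2f}%")
```

Read this way, both factors point in the same direction descriptively (small group above plenum, own problem above case study), which matches the ordering of the four group means in the table; whether these differences are statistically meaningful cannot be judged from the reported means alone.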

3.2. Assessment of Learning Success and Competence Development at Learning Factories

3.3. Empirical Findings on Learning Success and Competence Development at Learning Factories

3.4. Summary of the Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kauffeld, S.; Maier, G.W. Digitalisierte Arbeitswelt. Gr. Interakt. Organ. Z. Für Angew. Organ. 2020, 51, 1–4.

- Kauffeld, S.; Albrecht, A. Kompetenzen Und Ihre Entwicklung in Der Arbeitswelt von Morgen: Branchenunabhängig, Individualisiert, Verbunden, Digitalisiert? Gr. Interakt. Organ. Z. Für Angew. Organ. (GIO) 2021, 52, 1–6.

- Hulla, V.; Herstätter, M.; Moser, P.; Burgsteiner, D.; Ramsauer, H. Competency Models for the Digital Transformation and Digitalization in European SMEs and Implications for Vocational Training in Learning Factories and Makerspaces. Proc. Eur. Conf. Educ. Res. (ECER) 2021, IV, 98–107.

- Kipper, L.M.; Iepsen, S.; Dal Forno, A.J.; Frozza, R.; Furstenau, L.; Agnes, J.; Cossul, D. Scientific Mapping to Identify Competencies Required by Industry 4.0. Technol. Soc. 2021, 64, 101454.

- Blumberg, V.S.L.; Kauffeld, S. Kompetenzen Und Wege Der Kompetenzentwicklung in Der Industrie 4.0. Gr. Interakt. Organ. Z. Für Angew. Organ. (GIO) 2021, 52, 203–225.

- Abele, E. CIRP Encyclopedia of Production Engineering; Chatti, S., Tolio, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; ISBN 978-3-642-35950-7.

- Abele, E.; Metternich, J.; Tisch, M. Learning Factories; Springer International Publishing: Cham, Switzerland, 2019; ISBN 978-3-319-92260-7.

- Munn, Z.; Peters, M.D.J.; Stern, C.; Tufanaru, C.; McArthur, A.; Aromataris, E. Systematic Review or Scoping Review? Guidance for Authors When Choosing between a Systematic or Scoping Review Approach. BMC Med. Res. Methodol. 2018, 18, 143.

- Gusenbauer, M.; Haddaway, N.R. Which Academic Search Systems Are Suitable for Systematic Reviews or Meta-analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed, and 26 Other Resources. Res. Synth. Methods 2020, 11, 181–217.

- Arksey, H.; O’Malley, L. Scoping Studies: Towards a Methodological Framework. Int. J. Soc. Res. Methodol. Theory Pract. 2005, 8, 19–32.

- Peters, M.D.J.; Godfrey, C.; McInerney, P.; Munn, Z.; Tricco, A.C.; Khalil, H. Chapter 11: Scoping Reviews (2020 Version). In JBI Manual for Evidence Synthesis; Aromataris, E., Munn, Z., Eds.; JBI: Adelaide, Australia, 2020; Available online: https://synthesismanual.jbi.global (accessed on 30 September 2022).

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 Explanation and Elaboration: Updated Guidance and Exemplars for Reporting Systematic Reviews. BMJ 2021, 372, n160.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Waffenschmidt, S.; Knelangen, M.; Sieben, W.; Bühn, S.; Pieper, D. Single Screening versus Conventional Double Screening for Study Selection in Systematic Reviews: A Methodological Systematic Review. BMC Med. Res. Methodol. 2019, 19, 132.

- Ogorodnyk, O. Development of Educational Activity Based on Learning Factory in Order to Enhance Learning Experience. Master’s Thesis, Norwegian University of Science and Technology Gjøvik, Gjøvik, Norway, 2016.

- Granheim, M.V. Norway’s First Learning Factory—A Learning Outcome Case Study. Master’s Thesis, Norwegian University of Science and Technology Gjøvik, Gjøvik, Norway, 2016.

- Makumbe, S. The Effectiveness of Using Learning Factories to Impart Lean Principles to Develop Business Improvement Skills in Mining Employees. Master’s Thesis, University of the Witwatersrand, Johannesburg, South Africa, 2017.

- Makumbe, S.; Hattingh, T.; Plint, N.; Esterhuizen, D. Effectiveness of Using Learning Factories to Impart Lean Principles in Mining Employees. Procedia Manuf. 2018, 23, 69–74.

- Morell de Ramirez, L.; Velez-Arocho, J.I.; Zayas-Castro, J.L.; Torres, M.A. Developing and Assessing Teamwork Skills in a Multi-Disciplinary Course. In Proceedings of the FIE ’98. 28th Annual Frontiers in Education Conference. Moving from “Teacher-Centered” to “Learner-Centered” Education. Conference Proceedings (Cat. No.98CH36214), Tempe, AZ, USA, 4–7 November 1998; IEEE: New York, NY, USA, 1998; Volume 1, pp. 432–446.

- Cachay, J.; Wennemer, J.; Abele, E.; Tenberg, R. Study on Action-Oriented Learning with a Learning Factory Approach. Procedia Soc. Behav. Sci. 2012, 55, 1144–1153.

- Kesavadas, T. V-Learn-Fact: A New Approach for Teaching Manufacturing and Design to Mechanical Engineering Students. In Volume 5: Education and Globalization; American Society of Mechanical Engineers: San Diego, CA, USA, 2013; pp. 1–6.

- Riffelmacher, P.P. Konzeption Einer Lernfabrik Für Die Variantenreiche Montage. Doctoral Thesis, Universität Stuttgart, Stuttgart, Germany, 2013.

- Ahmad, M.O.; Liukkunen, K.; Markkula, J. Student Perceptions and Attitudes towards the Software Factory as a Learning Environment. In Proceedings of the 2014 IEEE Global Engineering Education Conference (EDUCON), Istanbul, Turkey, 3–5 April 2014; IEEE: New York, NY, USA, 2014; pp. 422–428.

- Plorin, D.; Jentsch, D.; Hopf, H.; Müller, E. Advanced Learning Factory (ALF)—Method, Implementation and Evaluation. Procedia CIRP 2015, 32, 13–18.

- Zinn, B.; Güzel, E.; Walker, F.; Nickolaus, R.; Sari, D.; Hedrich, M. ServiceLernLab—Ein Lern- Und Transferkonzept Für (Angehende) Servicetechniker Im Maschinen- Und Anlagenbau. J. Tech. Educ. 2015, 3, 116–149. [Google Scholar]

- Zinke, G.; Schenk, H.; Wasiljew, E. Berufsfeldanalyse Zu Industriellen Elektroberufen Als Voruntersuchung Zur Bildung Einer Möglichen Berufsgruppe Abschlussbericht. Abschlussbericht. Heft-Nr. 155; Bundesinstitut für Berufsbildung: Bonn, Germany, 2014. [Google Scholar]

- Abele, S.; Behrendt, S.; Weber, W.; Nickolaus, R. Berufsfachliche Kompetenzen von Kfz-Mechatronikern—Messverfahren, Kompetenzdimensionen Und Erzielte Leistungen (KOKO Kfz). In Technologiebasierte Kompetenzmessung in der Beruflichen Bildung. Ergebnisse aus der BMBF-Förderinitiative ASCOT; Oser, F., Landenberger, M., Beck, K., Eds.; WBV: Bielefeld, Germany, 2016. [Google Scholar]

- Henning, M.; Hagedorn-Hansen, D.; von Leipzig, K. Metacognitive Learning: Skills Development through Gamification at the Stellenbosch Learning Factory as a Case Study. S. Afr. J. Ind. Eng. 2017, 28, 105–112. [Google Scholar] [CrossRef] [Green Version]

- Krathwohl, D.R. A Revision of Bloom’s Taxonomy: An Overview. Theory Pract. 2002, 41, 212–218. [Google Scholar] [CrossRef]

- Glass, R.; Miersch, P.; Metternich, J. Influence of Learning Factories on Students’ Success—A Case Study. Procedia CIRP 2018, 78, 155–160.

- Erler, W.; Gerzer-Sass, A.; Nußhart, C.; Sass, J. Die Kompetenzbilanz. Ein Instrument Zur Selbsteinschätzung Und Beruflichen Entwicklung. In Handbuch der Kompetenzmessung; Erpenbeck, J., von Rosenstiel, L., Eds.; Schäffer-Poeschel: Stuttgart, Germany, 2003; pp. 167–179.

- Schaper, N. Arbeitsproben Und Situative Fragen Zur Messung Arbeitsplatzbezogener Kompetenzen. In Handbuch Kompetenzmessung; Erpenbeck, J., Rosenstiel, L., Grote, S., Sauter, W., Eds.; Schäffer-Poeschel: Stuttgart, Germany, 2017; pp. 523–537.

- Balve, P.; Ebert, L. Ex Post Evaluation of a Learning Factory—Competence Development Based on Graduates Feedback. Procedia Manuf. 2019, 31, 8–13.

- Reining, N.; Kauffeld, S.; Herrmann, C. Students’ Interactions: Using Video Data as a Mean to Identify Competences Addressed in Learning Factories. Procedia Manuf. 2019, 31, 1–7.

- Richter, T.; Naumann, J.; Groeben, N. The Computer Literacy Inventory (INCOBI): An Instrument for the Assessment of Computer Literacy and Attitudes toward the Computer in University Students of the Humanities and the Social Sciences. Psychol. Erzieh. Und Unterr. 2001, 48, 1–13.

- Devika Raj, P.; Venugopal, A.; Thiede, B.; Herrmann, C.; Sangwan, K.S. Development of the Transversal Competencies in Learning Factories. Procedia Manuf. 2020, 45, 349–354.

- Juraschek, M.; Büth, L.; Martin, N.; Pulst, S.; Thiede, S.; Herrmann, C. Event-Based Education and Innovation in Learning Factories—Concept and Evaluation from Hackathon to GameJam. Procedia Manuf. 2020, 45, 43–48.

- Omidvarkarjan, D.; Conrad, J.; Herbst, C.; Klahn, C.; Meboldt, M. Bender—An Educational Game for Teaching Agile Hardware Development. Procedia Manuf. 2020, 45, 313–318.

- Sieckmann, F.; Petrusch, N.; Kohl, H. Effectivity of Learning Factories to Convey Problem Solving Competencies. Procedia Manuf. 2020, 45, 228–233.

- Adam, M.; Hofbauer, M.; Stehling, M. Effectiveness of a Lean Simulation Training: Challenges, Measures and Recommendations. Prod. Plan. Control 2021, 32, 443–453.

- Mahmood, K.; Otto, T.; Kuts, V.; Terkaj, W.; Modoni, G.; Urgo, M.; Colombo, G.; Haidegger, G.; Kovacs, P.; Stahre, J. Advancement in Production Engineering Education through Virtual Learning Factory Toolkit Concept. Proc. Est. Acad. Sci. 2021, 70, 374.

- Roll, M.; Ifenthaler, D. Learning Factories 4.0 in Technical Vocational Schools: Can They Foster Competence Development? Empir. Res. Vocat. Educ. Train. 2021, 13, 20.

- Kleppe, P.S.; Bjelland, O.; Hansen, I.E.; Mork, O.-J. Idea Lab: Bridging Product Design and Automatic Manufacturing in Engineering Education 4.0. In Proceedings of the 2022 IEEE Global Engineering Education Conference (EDUCON), Tunis, Tunisia, 28–31 March 2022; IEEE: New York, NY, USA, 2022; pp. 195–200.

- Urgo, M.; Terkaj, W.; Mondellini, M.; Colombo, G. Design of Serious Games in Engineering Education: An Application to the Configuration and Analysis of Manufacturing Systems. CIRP J. Manuf. Sci. Technol. 2022, 36, 172–184.

- Erpenbeck, J.; von Rosenstiel, L.; Grote, S.; Sauter, W. (Eds.) Handbuch Kompetenzmessung: Erkennen, Verstehen Und Bewerten von Kompetenzen in Der Betrieblichen, Pädagogischen Und Psychologischen Praxis, 3rd ed.; Schäffer-Poeschel: Stuttgart, Germany, 2017; ISBN 978-3-7910-3511-6.

- Kauffeld, S. Kompetenzen Messen, Bewerten, Entwickeln—Ein Prozessanalytischer Ansatz Für Gruppen; Schäffer-Poeschel: Stuttgart, Germany, 2006; ISBN 3-7910-2508-2/978-3-7910-2508-7.

- Kauffeld, S. Das Kompetenz-Reflexions-Inventar (KRI)—Konstruktion Und Erste Psychometrische Überprüfung Eines Messinstrumentes. Gr. Interakt. Organ. Z. Für Angew. Organ. (GIO) 2021, 52, 289–310.

- Ugurlu, S.; Gerhard, D. Integrative Product Creation—Results from a New Course in a Learning Factory. In Proceedings of the 16th International Conference on Engineering and Product Design Education: Design Education and Human Technology Relations, E and PDE 2014, Enschede, The Netherlands, 4–5 September 2014; pp. 543–548, ISBN 978-1-904670-56-8.

- Andersen, A.-L.; Brunoe, T.D.; Nielsen, K. Engineering Education in Changeable and Reconfigurable Manufacturing: Using Problem-Based Learning in a Learning Factory Environment. Procedia CIRP 2019, 81, 7–12.

- Balve, P.; Albert, M. Project-Based Learning in Production Engineering at the Heilbronn Learning Factory. Procedia CIRP 2015, 32, 104–108.

- Ogorodnyk, O.; Granheim, M.; Holtskog, H.; Ogorodnyk, I. Roller Skis Assembly Line Learning Factory—Development and Learning Outcomes. Procedia Manuf. 2017, 9, 121–126.

- Grøn, H.G.; Lindgren, K.; Nielsen, I.H. Presenting the UCN Industrial Playground for Teaching and Researching Industry 4.0. Procedia Manuf. 2020, 45, 196–201.

- Gento, A.M.; Pimentel, C.; Pascual, J.A. Lean School: An Example of Industry-University Collaboration. Prod. Plan. Control 2021, 32, 473–488.

- Kauffeld, S.; Zorn, V. Evaluationen Nutzen—Ergebnis-, Prozess- Und Entwicklungsbezogen. Handb. Qual. Stud. Lehre Und Forsch. 2019, 67, 37–62.

- Kauffeld, S. Nachhaltige Personalentwicklung Und Weiterbildung; Springer: Berlin/Heidelberg, Germany, 2016; ISBN 978-3-662-48129-5.

- Section 5 of the Lower Saxony (Germany) Higher Education Act [Niedersächsisches Hochschulgesetz (NHG)] on Evaluation of Research and Teaching. 2007. Available online: https://www.nds-voris.de/jportal/?quelle=jlink&query=HSchulG+ND+%C2%A7+5&psml=bsvorisprod.psml&max=true (accessed on 30 September 2022).

- Teizer, J.; Chronopoulos, C. Learning Factory for Construction to Provide Future Engineering Skills beyond Technical Education and Training. In Proceedings of the Construction Research Congress 2022, Arlington, VA, USA, 9–12 March 2022; American Society of Civil Engineers: Reston, VA, USA, 2022; pp. 224–233.

- Tommasi, F.; Perini, M.; Sartori, R. Multilevel Comprehension for Labor Market Inclusion: A Qualitative Study on Experts’ Perspectives on Industry 4.0 Competences. Educ. Train. 2022, 64, 177–189.

| Characteristics | Number of Studies |
|---|---|
| Type of learning factory | |
| Physical | 16 |
| Simulated/virtual/digital | 3 |
| Physical and simulated/virtual/digital | 3 |
| Type of intervention | |
| Seminars, project work, lectures | 7 |
| Training, workshop, qualification, education | 9 |
| Vocational training | 1 |
| Game intervention | 3 |
| Case study | 1 |
| Not specified | 1 |
| Type of assessment on learning and competence development | |
| Self-assessment questionnaire | 16 |
| Practical application | 5 |
| Knowledge test | 4 |
| Interview | 3 |
| Observation | 2 |
| Grades | 2 |
| Videos | 1 |
| Written feedback | 1 |
| Experimental and control groups | |
| Experimental group(s) only and no control group | 20 |
| Both experimental and control groups | 2 (+1) |
| Number of participants | |
| Up to 15 | 7 |
| 16 to 30 | 4 |
| 31 to 50 | 5 |
| 51 to 100 | 3 |
| Over 100 | 3 |
| Background of participants | |
| Students | 16 |
| Trainees | 2 |
| Professionals | 6 |
| Stakeholders | 1 |
| Not specified | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Reining, N.; Kauffeld, S. Empirical Findings on Learning Success and Competence Development at Learning Factories: A Scoping Review. Educ. Sci. 2022, 12, 769. https://doi.org/10.3390/educsci12110769