Abstract

As the COVID-19 pandemic recedes, its legacy has been to disrupt universities across the world, most immediately in developing online adjuncts to face-to-face teaching. Behind these problems lie those of assessment, particularly traditional summative assessment, which has proved more difficult to implement. This paper models the current practice of assessment in higher education as influenced by ten factors, the most important of which are the emerging technologies of artificial intelligence (AI) and learning analytics (LA). Using this model and a SWOT analysis, the paper argues that the pressures of marketisation and demand for nontraditional and vocationally oriented provision put a premium on courses offering a more flexible and student-centred assessment. This could be facilitated through institutional strategies enabling assessment for learning: an approach that employs formative assessment supported by AI and LA, together with collaborative working in realistic contexts, to facilitate students’ development as flexible and sustainable learners. While literature in this area tends to focus on one or two aspects of technology or assessment, this paper aims to be integrative by drawing upon more comprehensive evidence to support its thesis.

1. Introduction

Artificial intelligence (AI) is the most recent of a number of emerging technologies with the potential to enable a new assessment model for universities. The aims of this paper are to examine this potential within the context of a changing higher education (HE) landscape and to consider how assessment for learning might be a better fit for future needs than the traditional assessment practice. Assessment for Learning (AfL) is an approach that employs collaborative working in authentic contexts to facilitate students’ development as flexible and sustainable learners. AfL is closely related to the approach of formative assessment, in which learning is assessed in situ on a continuing basis rather than summatively at the end of the learning process. In this paper, the two terms are used interchangeably. The paper begins by outlining the current HE assessment landscape and modelling it as influenced by ten factors. These have been grouped into three domains: commercial, technological and inertial, and are summarised in Table 1.

Table 1.

Ten Factors Influencing Assessment in HE.

A detailed explanation of the ten Factors follows in the next two sections. In brief, this paper argues that Factors A–G, driven by commercial and technological pressures, are putting a premium on institutions that can offer flexible courses with student-centred and performance-based assessment. These pressures will progressively erode the inertial Factors H–J, leading to a radical change in assessment practice.

This paper has several original features. Existing literature on new technologies for assessment tends to concentrate on a limited range of topics; by contrast, this paper aims to be integrative in drawing upon more comprehensive evidence to support its thesis. The paper is also innovative in presenting a conceptual model that relates external and internal factors key to the current and future development of assessment in HE and in its use of these factors in an analysis of the strengths, weaknesses, opportunities, and threats faced by the post-pandemic university. The paper will argue that in order to remain competitive in a rapidly changing market, universities must move from sole reliance on conventional warranting through summative assessment towards a flexible and integrative model employing AfL.

2. Commercial and Technological Pressures for Change

This section outlines and situates commercial and technological Factors A–G in relation to the supporting literature.

2.1. Factor A: Growth in Knowledge-Based Graduate Occupations

Changes in the content and function of university courses reflect changes in the graduate professions, which are becoming more transient, less focused on the acquisition of propositional knowledge, and more concerned with the creation of procedural knowledge.

The relevance and currency of many traditional HE disciplines are under threat. The distinction between propositional and procedural knowledge [1], also known as declarative and functional knowledge [2], has been widely discussed. Traditionally, the main purpose of university teaching was the transmission of propositional knowledge—knowing-what—leaving the acquisition of procedural knowledge—knowing-how—to professionals’ experience and application in the workplace. Although this orientation suited the twentieth century, twenty-first century professional roles have changed. Stein [3] notes the shrinking half-life of propositional knowledge accompanied by rapid growth in procedural ‘frontier knowledge’, and recent years have seen increasing pressure in many knowledge-intensive academic disciplines to keep their curricula current and relevant. The World Economic Forum’s report, The Future of Jobs [4] (p. 3), found that “in many industries and countries, the most in-demand occupations or specialties did not exist ten or even five years ago, and the pace of change is set to accelerate”. A later report [5] (p. 6) noted that “for those workers set to remain in their roles in the next five years, nearly 50% will need reskilling for their core skills”.

Dolan and Garcia [6] argue that a more global, complex, and transient twenty-first-century environment makes new demands on the managers of the future and on their induction as professionals. In the 2022 Horizon report [7], the digital economy is identified as an important trend. There is growing evidence of how the rise of the knowledge-intensive services sector is reshaping the demands and ways of working among the graduate professions [8,9]. This is characterised by self-managed careers [10] and short-term project-specific employment [11]. Lai and Viering [12] listed what they call twenty-first-century skills as: critical thinking, creativity, collaboration, metacognition, and motivation. McAfee and Brynjolfsson [13] see the development of soft skills and dispositions in emerging occupations as becoming of comparable importance to the propositional knowledge acquired in degree courses. The World Economic Forum report Jobs of Tomorrow [14] makes a similar point, identifying the emergence of seven key professional clusters, some involving new digital technologies and others reflecting the continuing importance of human interaction and cross-functional collaborative skills. Finally, in a review of 30 UK and international reports, the UK National Foundation for Educational Research [15] identifies the top five most frequently mentioned skills as: problem solving/decision making; critical thinking/analysis; communication; collaboration/cooperation; and creativity/innovation.

Against this consensus, it is difficult to defend the practices of traditional universities, in which an emphasis remains on the acquisition of (increasingly transient) propositional knowledge, an implicit assumption that it will be applied by lone professionals, and its assessment through the performance of academic exercises by individuals working in isolation.

2.2. Factor B: Competitive Pressures on the HE Market

The outreach of HE at national and global levels is responding to a market that shifts the focus from institutions towards student consumers; established universities also face increasing competition from private providers.

The traditional three- or four-year full-time undergraduate model is under threat from competition by cost and convenience. The 2022 Horizon report [7] identifies the cost and perceived value of university degrees as an important trend. The costs of a traditional three-year, full-time campus-based degree course have risen sharply. In the US, the average total cost of an undergraduate course in 2021 was 48,510 USD a year [16]. In the UK, the average undergraduate course costs 80,000 USD [17]. This price inflation appears to have encouraged increasingly consumerist expectations among students, and Harrison and Risler [18] see public universities in the US operating increasingly like corporations in order to compensate for declining funding. They discuss consumerism as associated with diminished student achievement and argue that educational quality is compromised when students are treated as customers to be satisfied rather than learners to be challenged. Similar findings come from the UK, where in a survey of undergraduates, Bunce et al. [19] found higher consumer orientation to be associated with lower academic performance. This effect was greater for students of science, technology, engineering, and mathematics, for whom tuition fees were higher.

Private universities and for-profit agencies are becoming more competitive with traditional institutions. Levy [20], of the Program for Research on Private Higher Education, reports that almost a third of higher education students globally are studying through private providers. Seventy percent of these are from countries with less developed higher education infrastructures. Qureshi and Khawaja [21] drew similar conclusions, noting that private HE providers have benefited from a shift in most countries from nationalisation to liberalisation, privatisation, and marketisation. Private provision has become dominant where the capacity of public higher education has been inadequate to satisfy rising demands. This is particularly evident in Asia and regions such as the Middle East, where the private sector is likely to soon overtake the public sector.

These competitive pressures present major challenges to established universities, not just in the provision of attendance models that meet twenty-first-century needs but also in flexible and relevant assessment practices—a topic that will be examined in the next section.

2.3. Factor C: Increasing Qualification Unbundling and Credit Transfer

The ‘unbundling’ of degree course modules into externally sold products is creating a credit transfer market that is more responsive to student demand and engagement.

One of the key technologies discussed in the 2022 Horizon report [7] is the use of microcredentials: ‘unbundled’ course units sold independently of the degrees of which they are a part. Although this is not a new development, universities are increasingly offering vocationally relevant short courses with flexible access requirements to cater to a more diverse student base. Pioneers of this trend include The Open University [22], established in the UK in 1969, and Western Governors University [23] in the USA, founded in 1997. However, these are specialist institutions; what is new is the mass entry of traditional mainstream providers into this market.

The transferability of free-standing courses within a modular system has precedent in the California universities’ model [24] and the European credit transfer and accumulation system [25]. This trend has accelerated and globalised through the burgeoning growth of information and communication technologies (ICT), enabling the arrival of massive open online courses (MOOCs) [26] and microcredentials [27,28,29], which have led to what Czerniewicz [30] calls an unbundling of HE. Swinnerton et al. [31] noted the emergence of online programme management companies that have sought partnerships with high-status institutions. Such developments threaten the traditional mainstream university. McCowan [32] argues that unbundling, with its emphasis on personalisation and employability, weakens universities’ synergies between teaching and research, particularly in the pursuit of basic research with long-term benefits. Furthermore, it undermines the mission of the academy to promote the public good and ensure equality of opportunity.

The wide availability of small course qualifications has exacerbated the problems of transferability and authenticity, for which one potential solution is open digital badges. A leading example is the open badges [33] system, described by Acclaim [34] (p. 3) as “Secure, verified, web-enabled credentials in the form of badges that contain metadata documenting the badge issuer, requirements, and evidence that complement traditional certifications by making them more transparent and easier to transport, share and verify”. Rather than a proprietary system, open badges define a format for the creation of microcredentials by institutions and warranting authorities. In this regard, the system supports the aggregation of badges into a user’s profile account “to organize their own achievements across issuers and learning experiences and broadcast their qualifications with employers, professional networks and others” [34] (p. 3). The Acclaim document lists ten US universities that employ this system, and The Open University’s OpenLearn initiative [35] in the UK offers over 80 courses warranted with the OU proprietary badge. However, the educational value of badges is more than just warranting; Cheng et al. [36] foresee the role of open digital badges as going beyond that of a microcredential and argue for their fuller integration into goal-setting to enhance learning experiences.

An international marketplace of millions of proprietary badges attracts the dangers of counterfeiting, and this is where blockchain technology offers greater security. Blockchain is a decentralised, public-facing, but tamper-proof digital ledger that provides a record of timestamped digital transactions. It was originally developed to support cryptocurrency, and its heavy environmental overhead has hitherto hampered widespread acceptance. In late 2022, the Ethereum blockchain system merged with a separate proof-of-stake technology, reducing its energy consumption by a claimed 99.9% [37] and opening up the potential for general application. The smart contracts feature of blockchain enables trusted transactions to be made without the need for mediation or central authority and in a way that secures them as traceable and irreversible. Williams [38] discusses the potential of this feature for the automated certification of student achievement through online activity, making the point that it enables procedural knowledge gains to be assessed in real time, including through engagement in simulated ‘real world’ contexts or through workplace activity. This formative assessment potential will be discussed later in the section on AfL.
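The tamper-evidence property of such a ledger can be illustrated with a minimal sketch. It is not an implementation of Ethereum or of any system cited here: it models only the hash-chaining of timestamped records and omits decentralisation, consensus, and smart contracts. The function names, record fields, and sample credentials are all hypothetical.

```python
import hashlib
import json


def record_hash(body: dict) -> str:
    """Return a SHA-256 digest of a record's canonical JSON form."""
    canonical = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def append_record(ledger: list, payload: dict, timestamp: str) -> None:
    """Append a timestamped record that is linked to the previous record's hash."""
    prev_hash = ledger[-1]["hash"] if ledger else "0" * 64
    body = {"payload": payload, "timestamp": timestamp, "prev_hash": prev_hash}
    ledger.append({**body, "hash": record_hash(body)})


def is_intact(ledger: list) -> bool:
    """Recompute every hash and link; any edited record breaks the chain."""
    prev_hash = "0" * 64
    for entry in ledger:
        body = {key: entry[key] for key in ("payload", "timestamp", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != record_hash(body):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical microcredential records (illustrative data only).
ledger = []
append_record(ledger, {"learner": "student-001", "credential": "Module A"},
              "2023-01-10T09:00:00Z")
append_record(ledger, {"learner": "student-001", "credential": "Module B"},
              "2023-03-02T14:30:00Z")

intact_before = is_intact(ledger)

# Simulate a counterfeit: alter an already-issued credential.
ledger[0]["payload"]["credential"] = "Module Z"
intact_after = is_intact(ledger)
```

In this sketch, the altered record no longer matches its stored hash, so the check fails. A real blockchain additionally replicates the ledger across many independent nodes, which is what makes rewriting the whole chain impractical.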

A recent development in blockchain is non-fungible tokens (NFTs). An analysis by Wu and Liu [39] identified several educational applications of NFTs in addition to microcredentials, including transcripts and records, content creation, and learning experiences. In the view of Sutikno and Aisyahrani [40], there will be an important role for NFTs in education alongside future possibilities involving decentralised autonomous organisations, Web 3.0, and an immersive 3D educational metaverse—all of which are likely to have significant impacts on traditional assessment practices.

2.4. Factor D: Growth in Competency-Based Education

Competency-based education offers a vocationally focused and more flexible alternative to traditional full-time campus-based degrees.

Skills-based learning is one of the important trends identified in the 2022 Horizon report [7]. Competency-based education (CBE) is one strategic response to the pressures for greater relevance and currency discussed in relation to Factor A and to the competitive pressures of Factor B. CBE emphasises the mastery by students of explicit learning objectives. Western Governors University [23] was an early implementer and is an important member of the competency-based education network of universities and colleges [41]; in the US, this includes California Community Colleges and the Texas Collaboratory, and in the UK, the London School of Economics. Lentz et al. [42] note how the advent of CBE in medical education in the early 2000s marked a paradigm shift in assessment. Various implementations of CBE range from a utilitarian skillset to what Sturgis [43] describes as a learning environment that provides timely and personalised support and formative feedback [44], with the aim being for students to develop and apply a broad set of skills and dispositions to foster critical thinking, problem-solving, communication, and collaboration [45].

Although CBE has proved very popular with older learners, typically seeking specific skills for career enhancement, it has attracted criticism for the narrowness of its educational focus [46,47]. In the view of the veteran author Tony Bates [48], the weaknesses of CBE are an objectivist approach to learning that makes it unsuitable for subject areas where it is more difficult to prescribe specific competencies and areas where social learning is important. On the other hand, its strengths include lower tuition fees and the flexibility of home-based or work-based study. It is these latter features that make CBE a challenge to many subject areas of the traditional university.

2.5. Factor E: Growth in Online Education and Trace Data of Student Activity

The COVID-19 pandemic has accelerated a trend towards greater use of online education, with growth in the volume of trace data evidencing student activity; however, many studies suggest the speed of change has resulted in poorer learning outcomes.

Pokhrel and Chhetri [49] (p. 133) describe the COVID-19 pandemic as “the largest disruption of education systems in human history, affecting nearly 1.6 billion learners in more than 200 countries”. According to the OECD [50], the effect of the pandemic was a shift from face-to-face teaching towards online delivery and engagement, and the 2022 Horizon report [7] notes the increasing employment of hybrid learning spaces beset by problems of unreliable technology and its inexpert use by academic staff, which has often led to disappointing results. A literature review by Basilotta-Gómez-Pablos et al. [51] of 56 articles indicates that many lecturers recognise their low or medium-low digital competence, especially in the evaluation of educational practice. In another review of the experiences of staff and students over the 2020–2022 period, Koh and Daniel [52] examined 36 articles, identifying coping strategies used by lecturers for: classroom replication; online practical skills training; online assessment integrity; and ways to increase student engagement. For students, the isolation of the home environment and online access challenges were considerable, especially for those with ineffective online participation. Over the same period, a quantitative survey by Karadağ et al. [53] of 30 universities in Turkey found similar problems of low student engagement but noted fewer issues in universities with developed distance education capacities. In a smaller-scale qualitative study of UK student perceptions during the pandemic conducted by Dinu et al. [54], there were positive as well as negative views. Some students saw greater opportunities in online working, but others found significant challenges; however, all students welcomed the greater flexibility of online study. Similar findings came from an international survey by Fhloinn and Fitzmaurice [55] of mathematics lecturers from 29 countries, where respondents reported their students struggling with technical issues, including poor internet connections and the problems of studying from home. Overall, there were lower levels of engagement compared to in-person lectures, and as Koh and Daniel [52] found, these particularly hampered less motivated students. Finally, in a large study by Arsenijević et al. [56] conducted in Russia and five Balkan countries, higher satisfaction levels were associated with the active organisation and management of students’ collaborative work. The need to maintain a strong social presence was found to be more important for students with lower academic performance, for whom blended learning was also more successful than a fully online delivery model.

In summary, the evidence examined points to the need for more radical and high-level institutional engagement with the move to online working. It requires more informed and responsive strategies by lecturers, more reliable communications infrastructure, and better preparation and resourcing for students.

The increased usage of universities’ learning management systems as a result of the pandemic has generated considerable student activity data associated with the learning process. The analysis of this for the purposes of assessment will be discussed in the next section.

2.6. Factor F: Increasing Employment of Learning Analytics

The large quantity of student activity data amassed in university learning management systems and elsewhere enables learning analytics and artificial intelligence (AI) processors to provide more informed formative assessment for teachers and learners.

Learning analytics (LA) is one of the important trends identified in the 2022 Horizon report [7]. The trace data of students’ activity via university learning management systems (LMS) and cloud storage repositories can be used to monitor, assess, and predict future achievement through LA. Long and Siemens [57] (p. 34) define this as “the measurement, collection, analysis, and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs”. According to the Society for Learning Analytics Research [58], developments in data capture and analysis have two main benefits. Firstly, they present evidence-based and timely feedback to students on their progress to date, plus formative assessment to support future learning [44]. Secondly, they provide evidence of effectiveness for educators, materials and course designers, and institutional managers. LA can be of many types: for example, descriptive analytics provide insight into the past; diagnostic analytics illuminate why things happened; and prescriptive analytics advise on possible outcomes [59]. The potential of LA to support assessment is discussed by Gašević et al. [45], who detail a number of practical approaches to assuring validity in measurement.

A full review of the substantial literature on LA is beyond the scope of this section, but some issues of particular relevance are addressed here.

An early implementation was at Purdue University in the USA, where LA provided automated real-time feedback to students. This was presented as traffic light signals showing ratings made by student success algorithms [60] from students’ grade records and usage patterns of the learning management system. The system informed early interventions by tutors to target academic support and improve student satisfaction and course retention.
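The general idea of combining grade records with LMS usage into a traffic light signal can be sketched as follows. This is an illustration only and does not reproduce the student success algorithm cited above: the weighting, the thresholds, the field names, and the sample data are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    student_id: str
    grade_percent: float        # current average grade, 0-100
    lms_logins_per_week: float  # simple proxy for LMS usage


def traffic_light(record: StudentRecord, cohort_mean_logins: float,
                  grade_weight: float = 0.7) -> str:
    """Combine grades and relative LMS activity into a red/amber/green signal.

    The weight and thresholds are hypothetical, chosen only for illustration.
    """
    if cohort_mean_logins > 0:
        # Activity relative to the cohort mean, capped at 1.0.
        activity = min(record.lms_logins_per_week / cohort_mean_logins, 1.0)
    else:
        activity = 0.0
    score = grade_weight * (record.grade_percent / 100) + (1 - grade_weight) * activity
    if score >= 0.65:
        return "green"
    if score >= 0.45:
        return "amber"
    return "red"


# Hypothetical cohort (illustrative data only).
cohort = [
    StudentRecord("s1", grade_percent=82, lms_logins_per_week=6),
    StudentRecord("s2", grade_percent=55, lms_logins_per_week=2),
    StudentRecord("s3", grade_percent=38, lms_logins_per_week=1),
]
signals = {s.student_id: traffic_light(s, cohort_mean_logins=5) for s in cohort}
```

With these assumed values, the three students are rated green, amber, and red respectively. A tutor-facing system would use such signals to prioritise early contact with students rated amber or red.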

Later applications of LA have employed more complex dashboard visualisations. Many studies suggest the most effective student feedback to be in the form of visual dashboards providing succinct, easily understood evidence and formative assessment advice [61,62]. Visual summaries are also useful to educators, as Papamitsiou and Economides [63] found in a study conducted with 32 Secondary Education teachers. Here, visualisation of the temporal dimension of students’ behaviour increased teachers’ awareness of students’ progress, possible misconceptions and task difficulty.

Ethical concerns over the surveillance potential of LA [64] have led to various privacy guidelines implemented by universities. However, the extensive literature review conducted by Tzimas and Demetriadis [65] indicates there remains a shortage of empirical evidence-based guidelines and codes of practice to monitor and evaluate LA ethics policies. This view is echoed in the 2022 Horizon report [7], which notes little progress in equity and inclusion over recent years.

Developing views of the scope of LA go beyond the rating of individual students’ performance to the assessment of their collaborative work. The discussion in relation to Factor A identified the growing importance of project teamwork and soft skills in the new graduate professions. In contrast, traditional assessment practices have focused on the performance of individual students, and their collaborative learning has been a lesser concern [66]. Social Learning Analytics (SLA) was developed to address this issue. SLA is defined by Ferguson and Buckingham Shum [67] (p. 22) as “a subset of learning analytics, which draws on the substantial body of work evidencing that new skills and ideas are not solely individual achievements, but are developed, carried forward, and passed on through interaction and collaboration”. Accordingly, Buckingham Shum and Deakin Crick [68] see SLA as embracing five components: learners’ collaborative networking; discourse analysis of textual exchanges; learner-generated content, for example, through collaborative bookmarking; disposition analytics, exploring learners’ intrinsic motivations; and context analytics concerning the range of contexts and locations in which learning takes place.

A recent development with relevance to SLA has been multi-modal learning analytics (MMLA), which employs AI to integrate the analysis of trace data from university LMS with that from other sources. Blikstein and Worsley [69] reviewed research literature on the use of video, logs, text, artefacts, audio, gestures, and biosensors to examine learning in realistic, ecologically valid, and social learning environments. For example, eye-tracking technology has been used to study small collaborative learning groups, where the frequency and duration of students’ joint visual attention correlate highly with the quality of group collaboration.

A contrasting interpretation of the potential of SLA goes beyond the relatively simple matters of providing students with more fine-grained feedback and improving institutions’ course retention. Buckingham Shum and Deakin Crick [70] address the mapping of the five SLA components to twenty-first century competencies. In a reflection on several papers, they comment on its interdisciplinary nature—embracing pedagogy, learning sciences, computation, technology, and assessment—and conceive SLA as operating on a number of levels, from individual students to institutions and beyond. For this complex system to interoperate, major changes will be necessary to transition from an institution-centric to a student-centric way of working. The value of LA in eliciting students’ conceptions of learning and thereby enhancing their learning is reported by Stanja et al. [71].

In summary, LA in its various forms is becoming a powerful tool for monitoring students’ learning and for providing real-time formative feedback—a feature that will be explored in more detail later in the discussion of AfL.

2.7. Factor G: Emergence of Large Language Model Generative AI

The recent emergence of large language model generative AI will destabilise the traditional monopoly of delivery and assessment enjoyed by universities.

AI has developed rapidly in the last 20 years. The 2022 Horizon report [7] identifies AI applications to LA as a key technology that has made considerable contributions to the function and versatility of LA and has transformative potential across HE. In 1997, IBM’s supercomputer Deep Blue beat the chess grandmaster Garry Kasparov, and in 2011, the IBM Watson system defeated champions of the television quiz show Jeopardy! Although these were significant achievements, the latest AI systems are more versatile by several orders of magnitude. Natural language processing and large language model generative AI (LLMG AI) can now employ statistical models of publicly available texts to generate output that is, in some cases, indistinguishable from that of humans [72].

The world’s largest online media corporations have raced to market with rival LLMG AI systems. ChatGPT (generative pretrained transformer) is a general-purpose chatbot released in 2022 by OpenAI [73] with funding from Microsoft Corporation to enhance its Bing search engine with a conversational interface. Google Corporation has similar plans for Bard, its AI system for Google Search [74]. Meta, the former Facebook Company, launched a similar product called Llama [75]; Baidu, based in China and one of the largest AI and Internet companies in the world, launched Ernie Bot; and the Apple Corporation is working on a similar development [76].

There is considerable concern about the success of LLMG AI agents in passing complex standardised tests. Benuyenah [77] reports that GPT-4 [78], released in 2023, passed the Uniform Bar Exam (UBE) with a score in the top 10% of human test takers. This standardised test is administered by the National Conference of Bar Examiners in the United States to test the knowledge and skills necessary to practise law. Similarly, a score of over 50% was achieved in the US Medical Licensing Examination (USMLE). Benuyenah [77] says this should be a matter of concern, not because an AI agent can pass these tests, but because they present an insufficiently complex challenge. One reaction has been attempts to ban educational chatbots [79]; another has been to upgrade plagiarism-detection software to better detect AI-generated text submitted by students. However, as Liu et al. [80] discuss, LLMG AI can be guided to evade AI-generated text detection. The more general issue of cheating is addressed in Section 5 of the paper.

3. Inertial Resistance to Change

This section outlines and situates the inertial Factors H–J in relation to supporting literature.

Inertial resistance to change has been the subject of study in business management literature. Gilbert [81] draws a distinction between two categories of organisational inertia: resource rigidity, a failure to change resource investment patterns, and routine rigidity, a failure to change the organisational processes that deploy those resources. He maintains that a strong perception of external threats helps reduce resource rigidity but simultaneously increases routine rigidity. Hence, threats to an organisation can cause the external appearance of adaptation through resource investment, but internally, the organisation may be doubling down on its core routines. Zhang et al. [82] made a similar finding in relation to information technology (IT) resources. Their large questionnaire survey in China found that although organisational inertia negatively influences an organisation’s agility, both IT exploration and IT exploitation are positively related to organisational agility, with IT exploitation being the dominant influence. As discussed earlier, increased routine rigidity and a doubling down on traditional assessment have been evident in many institutional reactions to LLMG AI.

The implications of these findings for assessment in HE are that the technological pressures discussed in relation to Factors A–G may have a strong potential to influence change in the expectations of external accreditation bodies and in the assessment practices of universities.

3.1. Factor H: Expectations of External Accreditation Bodies

External professional bodies that accredit entry qualifications for some occupations tend to be cautious towards assessment innovation.

The external accreditation of university degrees has developed considerably over the last fifty years, during which it has contributed to the quality and comparability of courses; however, there is growing evidence that its focus on adherence to standards may be proving a barrier to innovation. A positive view is taken by Cura and Alani [83], who argue that international professional bodies such as the Association of MBAs [84] have encouraged business schools around the world to improve and enhance the quality of education, and they point to the positive effects of accreditation on students and faculty members. However, in the field of teacher education in the US, Johnson [85] takes a contrasting view, contending that the National Council for Accreditation of Teacher Education lacks transparency in its personnel and operations. Its emphasis on conformance to standardised practices at the expense of a more holistic appreciation of quality, he says, diverts tutors from the principal task of helping their students become more effective teachers. Moreover, he finds scant evidence that the onerous inspection and validation process yields real benefits. Romanowski [86] also notes an absence of empirical research on the impact of accreditation on improvement across HE. He likens the view of universities towards external bodies to a form of idolatry rather than an interactive partnership with shared goals. A more nuanced analysis comes from Horn and Dunagan [87], who acknowledge the benefits of external accreditation but say this can come at the expense of innovation. Citing three US universities as examples, they comment on two considerations. The first is inconsistencies between accreditation teams in the interpretation of the same sets of rules. The second is an accreditation focus on inputs (such as institutions’ policy statements) rather than educational outcomes; in other words, assessing what is easier to assess. The resulting uncertainty inhibits institutions’ preparedness to innovate. Importantly, they note the emergence of non-HE commercial agencies operating outside the purview of accreditation systems that are eroding universities’ traditional monopoly in this student market. This mirrors the findings discussed earlier in relation to Factor B.

In summary, there is conflicting evidence as to whether professional accreditation bodies encourage or inhibit innovation. It might be that more vocationally focused subjects with greater contact with employers have different priorities than more academically oriented ones.

3.2. Factor I: Institutional Inertia around Assessment

Universities vary in the priority they assign to the management of assessments. For world-class institutions in an increasingly competitive market, reputation is key. In the Times Higher Education World University Rankings by Reputation 2022 [88], six US and four UK universities occupy the top ten positions, but competition is growing from other countries. These large universities attract high-achieving postgraduate students and the biggest research grants, and they benefit from considerable endowment income; so, as with external accreditation, the conservative emphasis on quality management can be a disincentive to innovation.

By contrast, the majority of universities are less financially secure. Many are smaller, their income from research and endowments is lower, and their business models are more reliant on undergraduate students. Moreover, as noted by the 2022 Horizon report [7], they have been subject to years of reductions in public funding. As a result, they are more exposed to Factors A–G as discussed above. For such universities, reputation management is a lower priority compared to financial management and institutional survival, where agility in adapting to externally imposed change is key.

There is evidence that academic staff view innovation in assessment differently than other areas of their practice. Deneen and Boud [89] analysed staff dialogues at a university embarking on a major attempt to change assessment practices. They found that resistance to change was not a unitary concept but differed by type and context. In particular, reaction to change in assessment practice was met by the most significant resistance and had limited success compared to areas such as reconstructing outcomes and enhancing learning activities. Two recent studies conducted during the move to greater online working necessitated by COVID-19 found similar conservatism around assessment. Koh and Daniel [52] reviewed 36 empirical articles describing strategies used by HE lecturers and students to maintain educational continuity. They found many limitations in adapting learning materials and student support, particularly for less engaged students. The greatest problems were encountered in designing and implementing robust assessment, and they recommend greater online dexterity in the development of institutional frameworks to ensure assessment equity. Slade et al. [90] also comment on assessment practices. In a survey of 70 lecturers in an Australian university, they found pedagogical interactions with students had been prioritised in the rapid pivot to online working, leaving the composition and relative weighting of assessment as an afterthought. In their view:

This speaks to a larger issue of assessment being separated from pedagogy in everyday teaching practice of university academics. … There is much work ahead to move beyond the mindset that assessment is what happens at designated times, particularly at the end of the semester, to test knowledge and award grades. (p. 602)

Pressures for change and strategies to reduce inertial drag on assessment will be examined later.

3.3. Factor J: Employers’ Conservatism on New Academic Practices and Awards

Many employers may prefer traditional to innovative academic courses and awards.

Societal acceptance of innovation is a slow process, and resistance takes many forms. Henry Ford, the pioneer of popular motoring, famously said, “If I had asked people what they wanted they would have said faster horses”. There is a natural tendency to interpret the novel in terms of the familiar, as evidenced by terms such as ‘horseless carriage’ and ‘wireless radio’. Similarly, many employers exhibit what Brinkman and Brinkman [91] call cultural lag in their attitudes towards educational innovation. As with the conservatism of academic staff discussed in the previous section, it may be that cognitive dissonance [92], manifesting in social and psychological conflicts, is the cause of resistance to alternative assessment and awards.

There may also be cognitive dissonance between some employers’ resistance to novel educational practices and what they expect of their graduate employees. As discussed earlier with respect to Factor A, soft skills and dispositions are of comparable importance to the propositional knowledge acquired in degree courses. According to Rolfe [93], the top five generic skills sought by employers are interpersonal skills, leadership skills, adaptability, initiative, and confidence. It will be argued later in the paper that these skills are better developed and assessed through innovative educational approaches than by conventional practice in the traditional university.

The next section will relate these ten factors to a SWOT analysis of assessment in HE.

4. SWOT Analysis of Assessment in the Traditional University

SWOT, the analysis of strengths, weaknesses, opportunities, and threats, has been employed in business education for several decades as a useful summarising tool [94]. In this section of the paper, it is used in the construction of the main argument: that the strengths and opportunities of the enabling Factors A–G will progressively erode the constraining Factors H–J to facilitate a greater adoption of AfL in mainstream HE practice. In Table 2, the ten factors are presented in a SWOT matrix, which also includes AfL as an Opportunity, and institutional reputation and warranting status as Strengths of the traditional university in the face of competition from the non-HE commercial agencies discussed in Factor B. Factor H appears as both a Strength and a Weakness, reflecting discussion in that section of the discipline-specific influence of professional accreditation bodies.

Table 2.

Strengths, Weaknesses, Opportunities, and Threats of the Ten Factors Influencing Assessment in HE.

The next section will relate this SWOT analysis and the ten factors to the practice of assessment in HE.

5. Two Functions of Assessment

Educational assessment is a complex and contested practice, and its nature and purpose are subject to contrasting implementations. A distinction is made in this paper between two very different realisations: assessment for warranting and assessment for learning. Assessment for warranting is the employment of summative and typically norm-referenced assessment to grade and warrant student performance—an orientation associated with the traditional university. AfL is defined as the employment of formative and typically criterion-referenced assessment to provide feedback on student and course performance. This section will discuss and compare the two approaches.

Assessment for warranting has many critics. A major claim is that the focus on end outcomes and quantification draws attention and resources away from the learning process. As Boud and Falchikov [95] (p. 3) contend, “Commonly, assessment focuses little on the processes of learning and on how students will learn after the point of assessment. In other words, assessment is not sufficiently equipping students to learn in situations in which teachers and examinations are not present to focus their attention. As a result, we are failing to prepare them for the rest of their lives”. Biggs [96] dubbed this a ‘backwash’ effect that influences strategic learners to focus only on what will gain them higher grades. A second claimed shortcoming of summative methods in assessment for warranting is the limited use of feedback. Well-constructed formative feedback has been shown to have high motivational value to enhance learning [97,98,99,100], but the high status accorded to examinations, and their scheduling after the end of the teaching and learning process, limits these benefits. These issues also relate to the successful use of formative feedback in CBE, as discussed earlier under Factor D. Sambell and Brown [101] review the literature critical of traditional examinations and summarise the arguments that, in addition to the lack of feedback, the task of writing performatively with pen and paper for a number of hours is a very narrow task with little relevance to future needs.

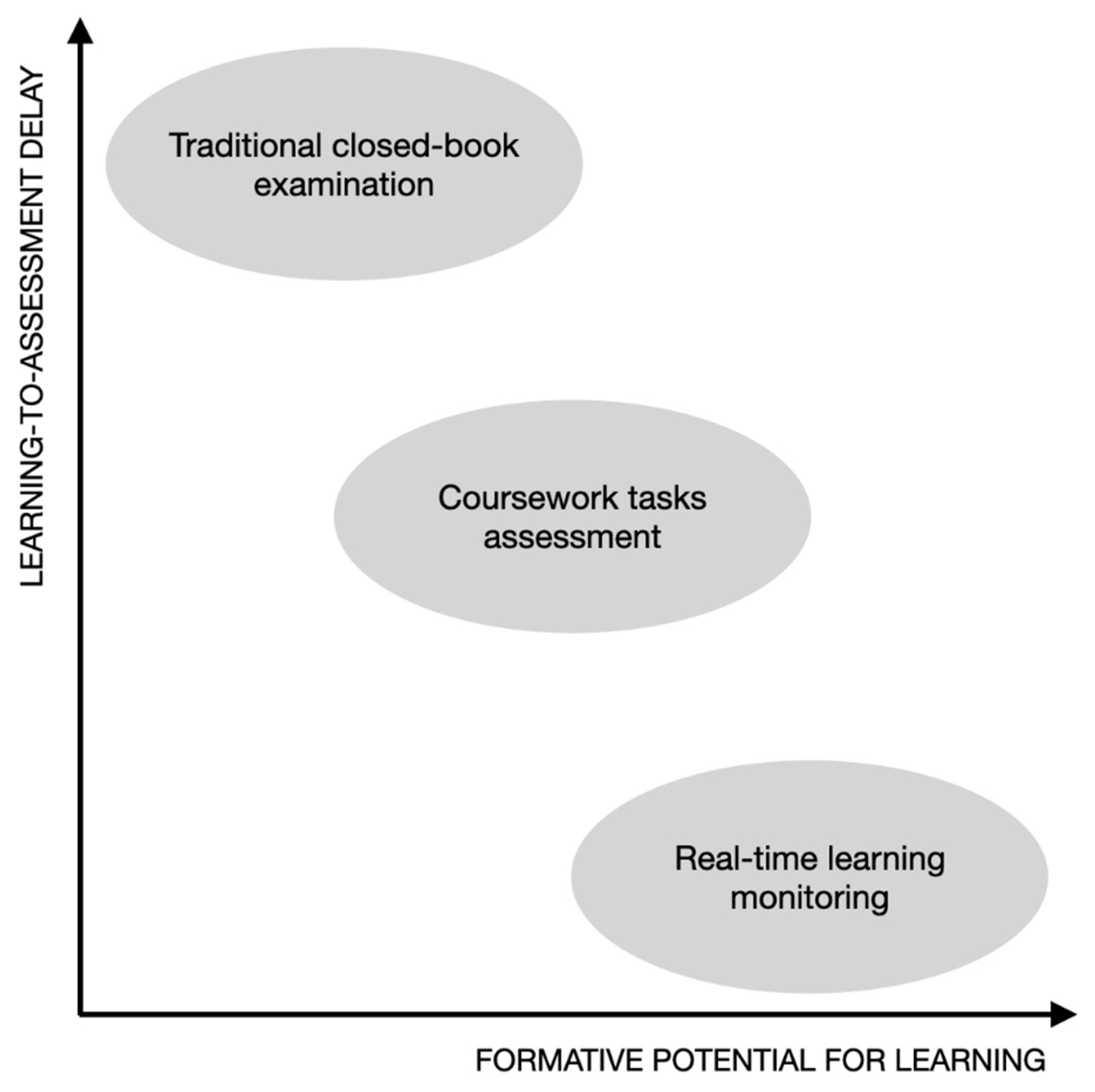

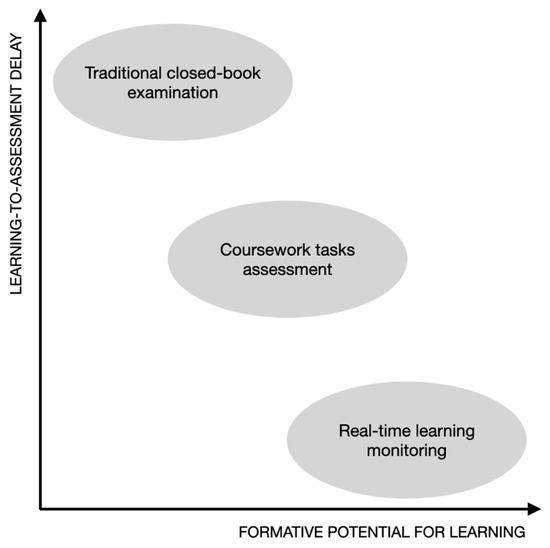

Figure 1 compares the relatively long learning-to-assessment delay of traditional examinations, administered typically at the end of modules or courses, with the real-time monitoring of learning that is now becoming possible with LA and the Socratic tutoring potential of AI, which will be detailed later. The formative feedback afforded by this approach can guide and enhance the learning process [44,102,103]. Coursework tasks, typically completed during modules or courses, occupy an intermediate position and can offer some degree of formative feedback.

Figure 1.

Locations of Assessment Methods on Dimensions of Learning-to-Assessment Delay and Formative Potential for Learning.

A third claimed shortcoming is that assessment for warranting treats numerical marks obtained from summative assessment as if they were valid indicators that provide the quality assurance confidence demanded for course certification. However, as wide variations exist across British universities in the proportions of ‘good honours’ degrees awarded in different academic subjects [104], the assumption of objectivity and comparability must be questioned. For Kahneman et al. [105], this variation can be explained as noise: a flaw in human judgement that results in “undesirable variability in judgments of the same problem”. Their book provides examples across many professions of the adverse effects of such judgements and goes on to recommend the use of AI systems to provide more reliable decision-making.

In the past, traditional examinations predominated because more interactive methods could not be conducted at scale. Later sections of the paper will discuss how digital technologies offer new ways to combine the acquisition of propositional knowledge with the real-time monitoring of learning and assessment at scale.

The rapid deployment of LLMG AI injects a new urgency into this debate, as many conventional types of assessment are susceptible to cheating by students who use it. Table 3 compares ten types of assessment, mainly summative, in terms of their potential for cheating. The left-hand column lists assessment types at risk; for example, ChatGPT-4 is well suited to generating conventional essays and reports but is far less adept at the creation of novel artefacts and solutions to original problems. Problem-based learning involving collaborative teamwork [106] has been located in the right-hand column of the table because, although AI could be used to support the team, this approach already necessitates access to information sources, and cheating could be made more difficult if team members were required to account for their individual contributions to the task. Generally, cheating with LLMG AI is more difficult in face-to-face activities such as practicals, performances, and collaborations. This also applies to conventional, proctored, closed-book examinations in which students have no access to external sources; by contrast, the potential for cheating in non-proctored open-book examinations is much higher. This might prompt universities to double down on conventional examinations in the manner discussed by Gilbert [81], although, as Selwyn et al. [107] argued, the proctoring of online examinations opens up the dangers of surveillance.

Table 3.

A Comparison of Assessment Types by Their Potential for Cheating with the Aid of LLMG AI.

In any case, this move would produce no solutions to the weaknesses and threats around Factors A–G. Conventional examinations and warranting have their place, but it is clear from the evidence discussed earlier that sole reliance upon this one method of assessment is becoming an inadequate strategy for current and future needs.

The implications of cheating with AI are profound, and as it would be impossible to ban the use of AI by students, the blanket use of summative assessment should be regarded as a weakness that must be transformed. This, in turn, raises questions as to the purposes of assessment and even the delivery and warranting of HE. These considerations strongly support the argument for universities to build on their strengths and opportunities, such as the assessment types in the right-hand column of Table 3, to bring AfL into mainstream practice, as will now be discussed.

Assessment for Learning

This section will examine the affordances of AfL, some arguments around authentic learning and authentic assessment, and the opportunities of e-assessment for AfL.

In contrast to Assessment for Warranting, the affordances of AfL offer a more flexible and sustainable match to the needs of knowledge-based graduate occupations. Wiliam [108] notes the close similarity between AfL and formative assessment, commenting on their operational definition in terms of teaching strategies and practical techniques to improve the quality of evidence on which educational decisions may be made. He also discusses many studies showing how attention to assessment as an integral part of teaching improves learning outcomes for students. Masters [103] (p. iv) goes further, contending that “The fundamental purpose of assessment is to establish where learners are in their learning at the time of assessment.” In this view, assessment must go beyond the institutional demands of examining and testing “towards one focused on the developmental needs of each student derived from evidence-gathering and observation with respect to empirically based learning progressions.” [103] (p. v). AfL is contrasted by Schellekens et al. [109] with the related notions of assessment as learning and assessment of learning, synthesising their common characteristics in terms of student-teacher roles and relationships, learning environment, and educational outcomes.

Proponents of AfL support the employment of authentic contexts for learning with collaborative, self- and peer assessment, which they argue have greater usefulness and relevance to emerging needs, including sustainable and lifelong learning. Price et al. [110] call for a shift in emphasis from summative to formative assessment, away from marks and grades towards evaluative feedback focused on intended learning outcomes. They also argue for students to become more actively engaged and take greater ownership of their learning. Similarly, Traxler [111] advocates authentic learning that involves or occurs in the ‘real world’, or with real-world problems that are relevant to the learner. This situated learning happens during an activity or in appropriate and meaningful contexts. Hence, it enables personalised learning, which considers the individual student and the accommodation of personal needs.

Authentic assessment is highly relevant to future employability, but its purpose can be seen as broader and more nuanced. Sambell and Brown [101] challenged the utilitarian view of Villarroel et al. [112] that the aim of authentic assessment is to replicate the tasks and performance requirements of the workplace. They argue (p. 13) that “if they are to become agents for change in their own lives and beyond, students need assessment which involves cognitive challenge, development of metacognitive capabilities, shaping of identity, building of confidence and supports a growth towards active citizenship”. This broader conceptualisation is shared by McArthur [113], who takes the view that the student’s relationship to vocationally oriented tasks must be seen from a wider societal perspective that goes beyond acceptance and perpetuation of the status quo.

A focus on the student as an active agent in their own learning is reflected in the holistic model of six affordances of AfL provided by Sambell et al. [114] (p. 5). In this, AfL:

- “is rich in formal feedback (e.g., tutor comment, self-review logs);

- is rich in informal feedback (e.g., peer review of drafts, collaborative project work);

- offers extensive confidence-building opportunities and practice;

- has an appropriate balance of summative and formative assessment;

- emphasises authentic and complex assessment tasks;

- develops students’ abilities to evaluate own progress, direct own learning”.

Summative assessment has a place in this model, but it is a minor one, with the emphasis being on what Hattie and Timperley [100] call feedforward. This concept is examined by Sadler et al. [115] in a literature review of 68 articles on feedforward practices. Here, they found the most important component of feedforward to be alignment and timing, comprising opportunities to gain feedback before summative assessment; the clarification of expectations through illustration and discussion; and feedback aimed at supporting students beyond their present course of study. This emphasis on providing future opportunities rather than recording past achievements draws another distinction between AfL and traditional assessment. AfL is one of six tenets underpinning the transforming assessment framework produced for the UK HE community by Advance HE [116]. The framework links innovative assessment and feedback practices with self- and peer assessment.

International case studies of AfL in practice report gains in student achievement, but caution that AfL adoption entails the development of teachers’ professional skills. Haugan et al. [117] found that replacing coursework assignments in an engineering degree in Norway with formative-only assessment prompted an increase in students’ study hours and improved academic performance. Chinese students also had an affirmative experience of AfL in a large survey by Gan et al. [118] across three universities. Here, students’ perceptions of AfL were found to be significantly positively correlated with a tendency to use a deep approach to learning. Other studies note that AfL adoption carries challenges. In a large action research project in Ireland, Brady et al. [119] identify lessons learned from the implementation of an online simulation for assessment purposes. They concluded that the project was successful but required major changes in educational practice for staff—including increased workload and new professional skills—and changes in the engagement model for students. The issue of professional development was central to a large experimental study of the impact on student achievement of an AfL teacher development programme at a university in the Netherlands [120]. The complex professional competencies addressed by this programme identified successful change strategies and resulted in a statistically significant improvement in student achievement.

The employment of e-assessment—digital technologies to enhance and extend assessment—reflects a cognate pedagogical orientation to that of AfL. Geoffrey Crisp’s pioneering e-Assessment Handbook [121] predated LA and AI developments, but advocated a close alignment of e-assessment with interactive learning and authentic assessment. These ideas were developed further by Crisp in a paper emphasising the importance of feedback through timely formative tasks [122]. In these, he noted the potential of e-assessment, proposing an integrative approach to assessment design to incorporate tasks that were diagnostic and formative, as well as integrative and summative.

More recent developments in the literature on e-assessment have considered it a catalyst for AfL. St-Onge et al. [123] interviewed thirty-one university teachers during the COVID-19 pandemic on their views on assessment. A major finding was that, while the pandemic revealed universities were ill prepared for the greater integration of e-assessment, it was nevertheless a tipping point to critically appraise and change assessment practices. This potential is also noted by O’Leary et al. [124], who echo Crisp [122] in their views on the transformative effects of facilitating the integration of formative activities with accountability. They see e-assessment as a tool to broaden the range of abilities and the scope of constructs that can be assessed, including complex twenty-first century skills in large-scale contexts, and innovations involving virtual reality simulations. An example of successful e-assessment in the workplace is discussed by Tepper et al. [125], who describe how Australian medical students use mobile phones to log their patient interactions and evidence of skill competency in clinical ePortfolios, a model with the potential for multiple workplace applications.

The remainder of this paper examines ways in which integrated strategies might be realised within the framework of AfL through a reappraisal of the purpose and practice of assessment in HE.

6. Towards a New Assessment Model for Universities

‘When the river freezes, we must learn to skate.’

This traditional Russian proverb supports an argument for seizing the opportunities of AfL, supported by Factors E, F, and G (summarised in Table 2), in response to the changing landscape of Factors A, B, C, and D. This section will first discuss how new technologies—principally AI—might be harnessed to this end, and second, how the close alignment of these opportunities with AfL makes it a more suitable assessment model for twenty-first-century universities than Assessment for Warranting.

6.1. New Study and Assessment Opportunities Enabled by AI

The advances in AI outlined in the discussion of Factor G can be seen as both opportunities and threats, but the contention of this paper is that a pivot away from Assessment for Warranting and towards AfL increases the opportunities while reducing the threats.

The educational potential of AI has long been anticipated. In their book, The Global Virtual University, Tiffin and Rajasingham [126] envisaged what they call JITAITs: just-in-time artificially intelligent tutors that would work with individual learners to provide personal tuition and act as assistants, information collators, and progress recorders. The authors see this as a Vygotskian approach in which the learner is supported, but not led or directed, by a ‘more knowledgeable other’, in a manner that promotes ‘next development’ [127]. Other commentators relate the potential of JITAITs to the Socratic method [128], in which the tutor poses appropriate questions to prompt the learner’s understanding.

Recent research indicates how LLMG AI might be employed as a tutor-assessor. Yildirim-Erbasli and Bulut [129] discussed the enhancement of learners’ assessment experiences using AI conversational agents to administer tasks and provide formative feedback. Similarly, a study by Pogorskiy and Beckmann [130] explored the effectiveness of a virtual learning assistant to enhance learners’ self-regulatory skills. Finally, Diwan et al. [131] reported research with the GPT-2 AI system to generate narrative fragments: interactive learning narratives and formative assessments as auxiliary learning content to facilitate students’ understanding.

In addition to the potential for Socratic tutoring, AI might also support collaborative teamwork, as discussed under Factor A. The information assistant and curator roles could extend to providing support for team communications and intelligently filtering just-in-time information for relevant team members. At Georgia Tech in the USA, Lee and Soylu [132] envisage the employment of AI in collaborative activities such as discussions or research and analysis, in which students are assessed—including potential peer evaluations—in the co-construction of knowledge and application of skills.

These new study and assessment opportunities enabled by AI are summarised in Table 4.

Table 4.

New Study and Assessment Opportunities Enabled by AI.

6.2. AfL with AI: Towards a New Assessment Model for Universities

There is a close alignment between the AI-enabled assessment opportunities in Table 4 and the employment of AfL. In both cases, assessment is contemporaneous with learning activities that can be situated in authentic contexts (Factors A and D); it is flexible and compatible with microcredentialing (Factors B and C); and it generates data suitable for use by LA (Factors E and F). This section will consider some ways in which a synergy between AI and AfL could enable a new assessment model for universities.

Studies on the use of AI with existing methods of enhancing assessment and feedback are beginning to emerge. Research by Hooda et al. [133] compared the performance of seven AI and machine learning algorithms on the UK Open University Learning Analytics dataset with the task of enhancing students’ learning outcomes. Although the study recognises many limitations, not least the complexities of implementing AI-enhanced LA in practice, it does provide ‘proof of concept’ that this strategy can be successful. A different approach is taken by Ikenna et al. [134], who compared six AI models for assisting the content analysis of instructors’ feedback, with feedback practices measured at the self, task, process, and self-regulation levels. Like the study by Hooda et al. [133], it identified a clear winner among the AI models and contributed to research in this emerging field. Tirado-Olivares et al. [135] reported a study in which text generated by ChatGPT was employed to enhance the critical thinking skills of preservice teachers to question sources, evaluate biases, and consider credibility. Comparable studies have been conducted on AI-assisted knowledge assessment techniques for adaptive learning environments [136] and in competency-based medical education, where Lentz et al. [42] make a similar observation to Hooda et al. [133] that the rapid growth of online education, coinciding with the increasing power of AI (Factors E and G), is enabling a shift from the assessment of learning towards AfL. However, as with many emerging developments in technology in which novelty can trump a full understanding of potential, there is the danger of exaggerated claims. Nemorin et al. [137] discuss four ways in which hype about AI is disseminated: as a panacea for economic growth; as an adjunct to the standardised testing of learning; as an international policy consensus; and as a source from which significant profits can be made by AI educational technology corporations.

A new assessment model for universities is now possible, in which existing e-assessment techniques might be employed alongside LA and AI to ‘square the circle’ between the demands placed on assessment by institutional managers and the educational benefits of improved formative assessment [138]. Recent studies are encouraging.

Sambell and Brown [101] (p. 11) see the aftermath of the pandemic as “a once-in-a-generation opportunity to reimagine assessment for good rather than simply returning to the status quo”. The goal, they argue, is to reconceptualise assessment and feedback practices in light of future needs. These needs and the ways in which they might be addressed will now be examined by considering synergies between AI and AfL. Writing at a time before the arrival of these technologies, an authoritative group of educationalists in Australia led by David Boud [139] advanced seven propositions for assessment reform in higher education, saying that assessment is most effective when:

- it is used to engage students in learning that is productive;

- it is used actively to improve student learning;

- students play a greater role as partners in learning and assessment;

- students are supported in the transition to university study;

- AfL is placed at the centre of curriculum planning;

- AfL is a focus for staff and institutional development;

- overall achievement and certification are based on assessments of integrated learning that richly portray students’ achievements.

It is apparent that in order for these propositions to be realised, a comprehensive reform of university practice would be necessary. For example, a ‘bolt-on’ of propositions 5 and 6 would be ineffective if proposition 7 were not addressed, and more active roles for students would be needed for the full benefits of AfL to be realised. In view of the institutional inertia around assessment (Factor I), university senior teams would need to be convinced of the competitive benefits of assessment reform before progress could be made. Koomen and Zoanetti [140] propose two planning tools to assist institutional policymakers in bridging the gap between assessment planning and organisational strategy. The first is an assessment planning and review framework: a high-level interdisciplinary tool, based upon existing HE models, to facilitate a valid and reliable assessment of eleven conceptual and operational assessment nodes. The second is an assessment-capability matrix, which maps the eleven nodes to institutional strategy concerns comprising workforce capability, technology platform, procurement and partnerships, and corporate services. While Koomen and Zoanetti [140] take a generic stance, Carless [141] focuses on how AfL might be scaled up to the institutional level. In this, four main AfL strategies are examined: productive assessment task design, effective feedback processes, the development of student understanding of quality, and activities where students make judgments. These are then related to institutional concerns of quality assurance, leadership, and incentives, the development of assessment literacy through professional development, and the potential of technology in enabling AfL. Most recently, a model to integrate AI across the curriculum has been developed at the University of Florida by Southworth et al. [142]. The authors argue that AI education, innovation, and literacy are set to become cornerstones of the HE curriculum. 
The goal of the ‘AI Across the Curriculum’ model at Florida is, through campus-wide investment in AI, to innovate curriculum and implement opportunities for student engagement within identified areas of AI literacy in ways that nurture interdisciplinary working while ensuring student career readiness.

Earlier sections of the paper have outlined the problems of assessment that face traditional universities in the aftermath of the pandemic. This final section has reviewed emerging strategies to envision AfL and AI as catalysts in the process of evolution, impelled by Factors A–G, from Assessment for Warranting to AfL.

7. Summary and Conclusions

This paper has examined the thesis that, in order to remain competitive in a rapidly changing market, universities must move away from sole reliance on traditional warranting with summative assessment towards a flexible and integrative model employing AfL. To support this argument, an original conceptual model has been employed, comprising ten external and internal factors that are key to the current and future development of assessment in HE. These factors, which are evidenced by reference to a wide range of recent and authoritative sources, have been incorporated into an analysis of the strengths, weaknesses, opportunities, and threats faced by the post-pandemic university in relation to assessment. From this, it is recommended that AfL, supported by AI and LA, should move into mainstream use as universities increasingly employ new technologies in their course delivery and adapt to a changing market.

Table 5 presents a summary comparison of AfL with traditional assessment for warranting against each of the commercial and technological domain Factors A–G; this consolidates the case that traditional assessment shows declining suitability for twenty-first-century needs. For Factors A and B, AfL shows greater compatibility with new occupations in the knowledge economy and is more quickly responsive to new assessment requirements. For Factors C and D, AfL is more compatible with the microcredentialing and continuing professional updating that reflect the growing practice of CBE. For Factors E and F, AfL is congruent with the aggregation and analysis of student activity data, providing formative feedback, and assessing collaboration in work-related environments. Finally, for Factor G, AI is a potential partner for AfL in supporting continuing authentic assessment and personalised Socratic tuition.

Table 5. Summary comparison of Assessment for Learning with traditional assessment in respect of Factors A–G.

Arguments for AfL are of two types: educational and pragmatic. For reasons discussed around Factors H, I, and J, the current emphasis on warranting by summative assessment is unlikely to be dislodged in the minds of institutional managers by arguments about its poor educational benefits. Similarly, calls for a change in the traditional role of lecturers, from “delivering expert knowledge” to being supportive “learning designers” fostering students’ knowledge creation (Deakin Crick and Goldspink [143] (p. 17)), have gone largely unheeded since the days of Socrates [144].

A more pragmatic and persuasive case to institutional managers is made by commercial and technological Factors A–G, particularly in respect of the global marketplace for prospective and continuing students with singular requirements. As HE courses are more widely promoted at a modular level, institutions are in an increasingly competitive market to provide experiences tailored to the circumstances and needs of individual students—and the potential of AI is a wildcard that could strongly influence expectations. It would be a clear competitive advantage for universities to attract and retain students by ensuring personalised experiences designed to equip their graduates with sustainable long-term skills for an unpredictable future; if high-status universities led this way, it is likely that many more would follow. However, as this direction is incompatible with a sole focus on assessment for warranting, a gradual transition towards AfL might be through blended assessment, with an emphasis on collaborative working in authentic environments.

In whatever way the move towards AfL might progress, it is crucial that institutional managers be well informed of these options—if they are to avoid the unfortunate experience of Hemingway’s character Mike in the novel The Sun Also Rises, to whom unexpected change came “gradually, then suddenly”.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Gibbons, M.; Limoges, C.; Nowotny, H.; Scott, P.; Schwartzman, S.; Trow, M. The New Production of Knowledge: The Dynamics of Science and Research in Contemporary Society; Sage: London, UK, 1994; Available online: https://www.researchgate.net/publication/225088790_The_New_Production_of_Knowledge_The_Dynamics_of_Science_and_Research_in_Contemporary_Societies (accessed on 1 August 2023).

- Biggs, J.B. Aligning Teaching and Assessment to Curriculum Objectives, Learning and Teaching Support Network, September 2003; Advance HE: York, UK, 2003; Available online: https://www.advance-he.ac.uk/knowledge-hub/aligning-teaching-and-assessment-curriculum-objectives (accessed on 1 August 2023).

- Stein, R.M. The Half-Life of Facts: Why Everything We Know Has an Expiration Date. Quant. Financ. 2014, 14, 1701–1703.

- World Economic Forum. The Future of Jobs: Employment, Skills and Workforce Strategy for the Fourth Industrial Revolution; World Economic Forum: Cologny, Switzerland, 2016; Available online: https://www3.weforum.org/docs/WEF_Future_of_Jobs.pdf (accessed on 1 August 2023).

- World Economic Forum. The Future of Jobs Report 2020; World Economic Forum: Cologny, Switzerland, 2020; Available online: https://www.weforum.org/reports/the-future-of-jobs-report-2020 (accessed on 1 August 2023).

- Dolan, S.L.; Garcia, S. Managing by Values. J. Manag. Dev. 2002, 21, 101–117.

- Pelletier, K.; McCormack, M.; Reeves, J.; Robert, J.; Arbino, N.; Al-Freih, M.; Dickson-Deane, C.; Guevara, C.; Koster, L.; Sánchez-Mendiola, M.; et al. 2022 EDUCAUSE Horizon Report, Teaching and Learning Edition; EDUCAUSE: Boulder, CO, USA, 2022; Available online: https://library.educause.edu/-/media/files/library/2022/4/2022hrteachinglearning.pdf (accessed on 1 August 2023).

- Castells, M. The Rise of the Network Society; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Gotsch, M.; Hipp, C. Measurement of innovation activities in the knowledge-intensive services industry: A trademark approach. Serv. Ind. J. 2012, 32, 2167–2184.

- Manyika, J.; Lund, S.; Singer, M.; White, O.; Berry, C. Digital Finance for All: Powering Inclusive Growth in Emerging Economies; McKinsey Global Institute: New York, NY, USA, 2016; Available online: https://www.findevgateway.org/sites/default/files/publications/files/mg-digital-finance-for-all-full-report-september-2016.pdf (accessed on 1 August 2023).

- Bersin, J. Catch the wave: The 21st-century career. Deloitte Rev. 2017, 21, 62–79. Available online: https://www2.deloitte.com/us/en/insights/deloitte-review/issue-21/changing-nature-of-careers-in-21st-century.html (accessed on 1 August 2023).

- Lai, E.R.; Viering, M.M. Assessing 21st Century Skills: Integrating Research Findings National Council on Measurement in Education; Pearson: London, UK, 2012; Available online: https://www.semanticscholar.org/paper/Assessing-21-st-Century-Skills-:-Integrating-on-in-Lai-Viering/d0a64ed0dcbd7ea9c88d8f7148189094e5340c02 (accessed on 1 August 2023).

- McAfee, A.; Brynjolfsson, E. Machine, Platform, Crowd: Harnessing Our Digital Future; W. W. Norton: New York, NY, USA, 2017; Available online: https://books.google.co.uk/books/about/Machine_Platform_Crowd_Harnessing_Our_Di.html?id=zh1DDQAAQBAJ&redir_esc=y (accessed on 1 August 2023).

- World Economic Forum. Jobs of Tomorrow: Mapping Opportunity in the New Economy; World Economic Forum: Cologny, Switzerland, 2020; Available online: https://www3.weforum.org/docs/WEF_Jobs_of_Tomorrow_2020.pdf (accessed on 1 August 2023).

- NFER. The Skills Imperative 2035: What Does the Literature Tell Us about Essential Skills Most Needed for Work? NFER: London, UK, 2022; Available online: https://www.nfer.ac.uk/the-skills-imperative-2035-what-does-the-literature-tell-us-about-essential-skills-most-needed-for-work/ (accessed on 1 August 2023).

- National Center for Education Statistics. Fast Facts: Tuition Costs of Colleges and Universities (76). Available online: https://nces.ed.gov/fastfacts/display.asp?id=76 (accessed on 1 August 2023).

- QS International. QS World University Rankings, Events & Careers Advice. Top Universities. Available online: https://www.topuniversities.com/ (accessed on 1 August 2023).

- Harrison, L.M.; Risler, L. The Role Consumerism Plays in Student Learning. Act. Learn. High. Educ. 2015, 16, 67–76.

- Bunce, L.; Baird, A.; Jones, S. The student-as-consumer approach in higher education and its effects on academic performance. Stud. High. Educ. 2017, 42, 1958–1978.

- Levy, D. Global Private Higher Education: An Empirical Profile of Its Size and Geographical Shape. High. Educ. 2018, 76, 701–715.

- Qureshi, F.H.; Khawaja, S. The Growth of Private Higher Education: Overview in The Context of Liberalisation, Privatisation, and Marketisation. Eur. J. Educ. Stud. 2021, 8, 9.

- The Open University. Available online: https://www.open.ac.uk/ (accessed on 1 August 2023).

- Western Governors University. Available online: https://www.wgu.edu/ (accessed on 1 August 2023).

- Master Plan for Higher Education in California, Office of the President, University of California, USA. 1960. Available online: https://www.ucop.edu/acadinit/mastplan/MasterPlan1960.pdf (accessed on 1 August 2023).

- European Credit Transfer and Accumulation System. ECTS. Available online: http://www7.bbk.ac.uk/linkinglondon/resources/apel-credit-resources/report_July2009_UKHEGuidanceCreditinEngland-ECTS.pdf (accessed on 1 August 2023).

- Mooc.org. MOOC.org|Massive Open Online Courses|An edX Site. Available online: https://www.mooc.org/ (accessed on 1 August 2023).

- Credentialate. The Unbundling of Education and What It Means—Edalex Blog. Edalex. 30 July 2023. Available online: https://www.edalex.com/blog/lens-on-educators-unbundling-education-what-it-means (accessed on 1 August 2023).

- Kumar, J.A.; Richard, R.J.; Osman, S.; Lowrence, K. Micro-Credentials in Leveraging Emergency Remote Teaching: The Relationship between Novice Users’ Insights and Identity in Malaysia. Int. J. Educ. Technol. High. Educ. 2022, 19, 18.

- Maina, M.F.; Ortiz, L.G.; Mancini, F.; Melo, M.M. A Micro-Credentialing Methodology for Improved Recognition of HE Employability Skills. Int. J. Educ. Technol. High. Educ. 2022, 19, 10.

- Czerniewicz, L. Unbundling and Rebundling Higher Education in an Age of Inequality. Educause, September 2018. Available online: https://er.educause.edu/articles/2018/10/unbundling-and-rebundling-higher-education-in-an-age-of-inequality (accessed on 1 August 2023).

- Swinnerton, B.; Coop, T.; Ivancheva, M.; Czerniewicz, L.; Morris, N.P.; Swartz, R.; Walji, S.; Cliff, A. The Unbundled University: Researching Emerging Models in an Unequal Landscape. In Research in Networked Learning; Springer: New York, NY, USA, 2020; pp. 19–34.

- McCowan, T. Higher Education, Unbundling, and the End of the University as We Know It. Oxf. Rev. Educ. 2017, 43, 733–748.

- IMS Open Badges. Available online: https://openbadges.org/ (accessed on 1 August 2023).

- Open Badges for Higher Education, Acclaim, Pearson.com. Available online: https://www.pearson.com/content/dam/one-dot-com/one-dot-com/ped-blogs/wp-content/pdfs/Open-Badges-for-Higher-Education.pdf (accessed on 1 August 2023).

- Delivering Free Digital Badges. The Open University. Available online: https://www.open.ac.uk/about/open-educational-resources/openlearn/delivering-free-digital-badges (accessed on 1 August 2023).

- Cheng, Z.; Watson, S.L.; Newby, T.J. Goal Setting and Open Digital Badges in Higher Education. TechTrends 2018, 62, 190–196.

- Ethereum. The Merge|Ethereum.org. ethereum.org. Available online: https://ethereum.org/en/upgrades/merge/ (accessed on 1 August 2023).

- Williams, P. Does Competency-Based Education with Blockchain Signal a New Mission for Universities? J. High. Educ. Policy Manag. 2018, 41, 104–117.

- Wu, C.-H.; Liu, C.-Y. Educational Applications of Non-Fungible Token (NFT). Sustainability 2022, 15, 7.

- Sutikno, T.; Aisyahrani, A.I.B. Non-Fungible Tokens, Decentralized Autonomous Organizations, Web 3.0, and the Metaverse in Education: From University to Metaversity. J. Educ. Learn. 2023, 17, 1–15.

- Competency-Based Education Network: Enabling CBE Growth. C-BEN. Available online: https://www.c-ben.org/ (accessed on 1 August 2023).

- Lentz, A.; Siy, J.O.; Carraccio, C. AI-Ssessment: Towards Assessment as a Sociotechnical System for Learning. Acad. Med. 2021, 96, S87–S88.

- Sturgis, C. Reaching the Tipping Point: Insights on Advancing Competency Education in New England. Competency Work. 2016. Available online: https://www.competencyworks.org/wp-content/uploads/2016/09/CompetencyWorks_Reaching-the-Tipping-Point.pdf (accessed on 1 August 2023).

- Black, P.; Wiliam, D. Classroom Assessment and Pedagogy. Assess. Educ. Princ. Policy Pract. 2018, 25, 551–575.

- Gašević, D.; Greiff, S.; Shaffer, D.W. Towards strengthening links between learning analytics and assessment: Challenges and potentials of a promising new bond. Comput. Hum. Behav. 2022, 134, 107304.

- Ralston, S.J. Higher Education’s Microcredentialing Craze: A Postdigital-Deweyan Critique. Postdigital Sci. Educ. 2020, 3, 83–101.

- Naranjo, N. Criticisms of the competency-based education (CBE) approach. In Social Work in the Frame of a Professional Competencies Approach; Springer Nature: New York, NY, USA, 2022.

- Bates, T. The Strengths and Weaknesses of Competency-Based Learning in a Digital Age. Online Learning and Distance Education Resources. 2014. Available online: https://www.tonybates.ca/2014/09/15/the-strengths-and-weaknesses-of-competency-based-learning-in-a-digital-age/ (accessed on 1 August 2023).