Abstract

This paper describes a robotic system that supports the remote teaching of technical drawing. The aim of the system is to enable a remote class of paper-based technical drawing, in which the students draw in a classroom and the teacher gives instructions from a remote location while checking their drawings on paper. The robotic system has a document camera for viewing the paper, a projector and a flat screen for projecting a cursor onto the paper, and a video conference system for communication between the teacher and the students. We conducted two experiments. The first experiment verified the usefulness of the projected cursor. Eight participants evaluated the comprehensibility of drawing-check instructions given with and without the projected cursor, and the results suggested that the cursor made the instructions more comprehensible. The second experiment was conducted in a real drawing class. We asked the students in the class to answer a questionnaire evaluating the robotic system. The results showed that the students had a good impression of the system (useful, easy to use, and fun). The contribution of our work is twofold. First, the system enables a teacher at a remote site to point to a part of the paper to enhance the interaction. Second, it enables both the students and the teacher to view the paper from their own viewpoints.

1. Introduction

The COVID-19 pandemic began in early 2020 and is still spreading worldwide [1]. Japan has experienced a large number of cases since 2020 [2]. Universities and other educational institutions have been taking infection control measures, including changing face-to-face classes to online classes [3]. The Japanese Ministry of Education, Culture, Sports, Science, and Technology (MEXT) announced the requirement for educational institutions to take appropriate measures to prevent infection and ensure learning opportunities through effective implementation of face-to-face and remote classes. Although most classes can be held online, conducting practical training classes online is difficult. There are several approaches to teaching such classes online. One approach is the virtual lab, which prepares all environments for experiments in virtual space [4]. Another approach is the remote lab, where a student remotely controls equipment in a real laboratory [5]. In addition, education using a telepresence robot can be delivered [6], where the teacher remotely controls a robot, and the robot and the students are in the same classroom.

This paper adopts the third approach, in which a robot is in a classroom with the students, and the teacher teaches the practice through the remote-controlled robot. Our study was conducted in a technical drawing class in which the teacher inspected each student’s drawing face-to-face. Our robot has the following functions: (1) providing face-to-face communication between the teacher and the students, (2) providing a method for the teacher to confirm a student’s drawing, and (3) providing a way for the teacher to point to the drawing paper.

The rest of the paper is organized as follows. Section 2 describes related work, such as virtual labs, remote labs, and teleoperated robots for education. Section 3 describes the objectives and requirements of the proposed system. Section 4 describes the details of the developed robotic system. Section 5 describes the evaluation experiments and the results of the real use of the system in a class. In Section 6, we summarize and discuss the results and remaining problems. Finally, in Section 7, we describe our conclusions and future research directions.

2. Related Work

2.1. Virtual and Remote Labs

As described in the introduction, there are several approaches to teaching practical classes online. There are at least two distinct purposes for online classes: the first is to let a teacher teach classes remotely, and the second is to allow students to take classes remotely.

The approach based on the virtual lab [4,7,8] achieves both purposes because this approach allows all equipment needed for the experiment to be reconstructed in a virtual space. Virtual labs at the beginning of the 2000s offered multimedia content, such as video clips and interactive content, in addition to texts and images [9]. Subsequently, virtual labs have incorporated simulations to provide students with the same experience as a real lab. For example, Tinkercad [10] is a virtual lab environment for building electric circuits with Arduino. Virtual labs have been developed not only for the engineering field but also in other areas, such as biology [11], chemistry [12,13], and even vocational education [14]. In general, the development of a virtual lab is not easy. To make the development easier, a modular virtual lab system was proposed [15].

Meanwhile, the remote lab is an approach that allows students to operate actual equipment in a laboratory remotely [5,16,17], such as in automation [18], chemistry [19], and physics [20].

Virtual and remote labs are often combined to provide a realistic lab experience at a low cost [21,22,23,24].

2.2. Remote Teaching Using Robots

Virtual and remote labs are approaches for students to participate in practical classes from a distance. Conversely, remote teaching is an approach where the teacher is in a distant place and teaches students using systems such as telepresence robots. For example, Okamura and Tanaka developed a remote teaching robot system for elderly people to teach children [25]. In addition, Zhang et al. developed a telepresence robot [26] that is remote-controlled by a teacher but can also detect students and navigate itself. Similarly, Bravo et al. developed robots that tell stories to the students for science education, where the robots’ motion could be operated remotely by a human operator [27]. Students are also candidates for the use of telepresence robots [28]. For example, Chavez et al. [29] developed a technological model for students in primary schools to attend classes using telepresence robots.

Telepresence robots are particularly used for language teaching [30]. For example, Kwon et al. developed a telepresence robot for English tutoring [6]. In their study, the pedagogical effect of telepresence robots was compared with that of autonomous robots. Similarly, Yun et al. developed a small mobile robot, EngKey, for remote English education [31]. The study suggested that telepresence robots operated by human teachers were preferred over autonomous robots [32].

2.3. Combination of Remote Labs and Robots

Remote labs and robots are often combined; however, in most cases, robots were used as the educational materials [33,34,35,36], and these works did not intend for the robots to be the teaching medium. On the other hand, as shown in the previous section, most telepresence robots used for teaching did not have functions for practical training (other than language teaching, which does not need any instruments).

One exception is the framework proposed by Tan et al. [37]. They proposed a “telepresence robot empowered smart lab” (TRESL), where a student operates a telepresence robot to manipulate equipment in a lab. The TRESL can be viewed as a combination of a remote lab and a telepresence robot. However, their concept model had only a limited ability to manipulate objects remotely.

3. Objectives and Requirements

From the literature surveyed in the previous section, we found no system that achieved actual deployment of remote teaching for practical training. Therefore, our research question is how to develop a robot that can actually be used for practical training.

Our work aims to develop a telepresence robot for remotely teaching practical training. As reviewed in the previous section, virtual and remote lab systems have been devised that let students experience practical training online, as have telepresence systems that enable teachers to lecture from a distance to students in a classroom. However, no system has enabled a teacher to give practical training to students in a remote classroom. Virtual and remote labs are used for self-learning, but some forms of training require one-to-one interaction between teachers and students. Additionally, conventional telepresence robots were intended for giving lectures or for interaction in language learning, and they cannot be used for practical training such as laboratory experiments.

This paper focuses on developing a robot for teaching a technical drawing class. Teaching technical drawing requires interaction between a teacher and a student [38]. Technical drawing can be taught online relatively easily using computer-aided design (CAD) tools [39]. However, the traditional style of technical drawing based on paper and pencil is reported to be better for developing students’ skills than CAD [40]. Therefore, it is important to provide a method of teaching paper-based technical drawing remotely during the COVID-19 pandemic.

When using telepresence robots for education, there are two possibilities for the use of robots. One case involves students operating the robots remotely, where the robots are in a classroom with a teacher and other students. The other case involves the teacher operating the robots remotely while all the students are in a classroom. Since technical drawing requires equipment such as a drafting table, a T-square, or a drafting machine, which are difficult to use in a student’s personal room, it is reasonable to expect that the students are in a drafting room. In addition, even when all students are in the same room, it is possible to maintain distance between them. However, when a teacher gives instruction to a student face-to-face, it is difficult to maintain distance between the teacher and the student. Therefore, we assume that the teacher is in a different location and gives instructions remotely.

To realize a system to teach technical drawing using the telepresence technique, we require the following functions:

- (1) The teacher and the student can communicate with each other with speech and images.

- (2) The teacher can confirm the drawing paper and drawing behavior on the paper.

- (3) The teacher can point to a specific part of the drawing paper.

All of these functions should be provided in real time. In addition, the system should move around the classroom so that no other staff are needed to set up the system. The results of (1), (2), and (3) are described in Section 5.1, Section 5.2 and Section 5.3, respectively.

4. Telepresence Robot for Remote Teaching of Practical Training

4.1. The Task

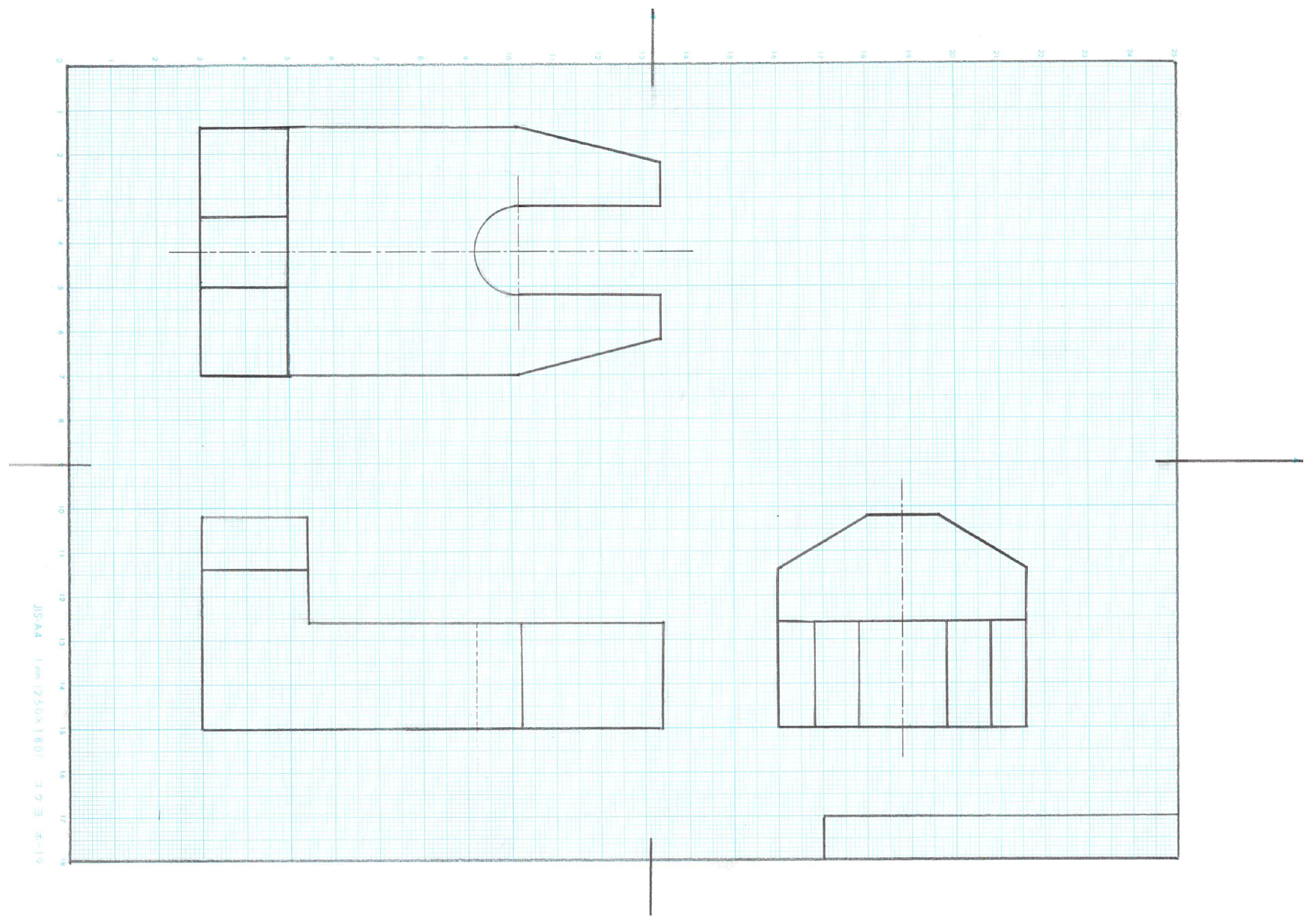

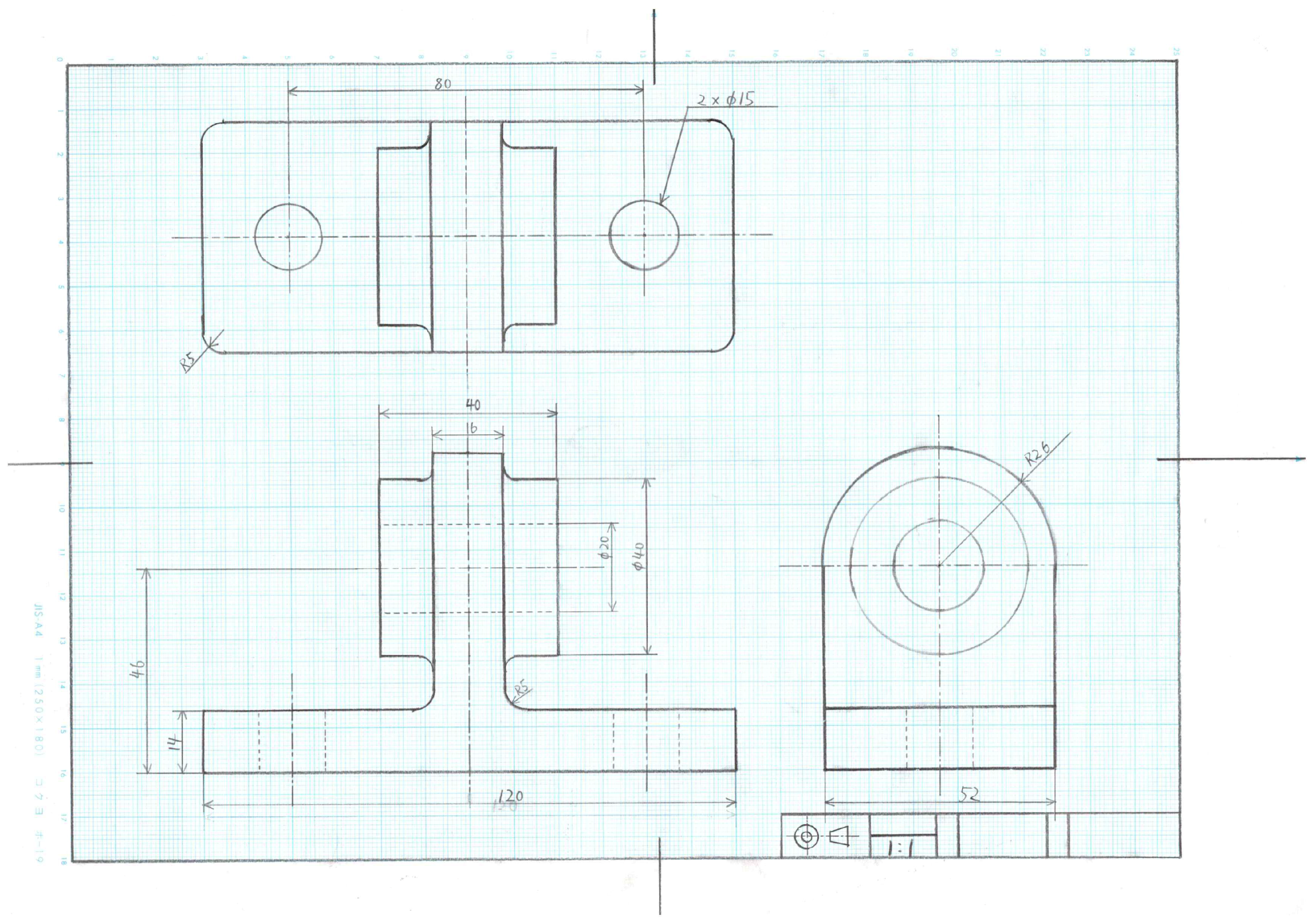

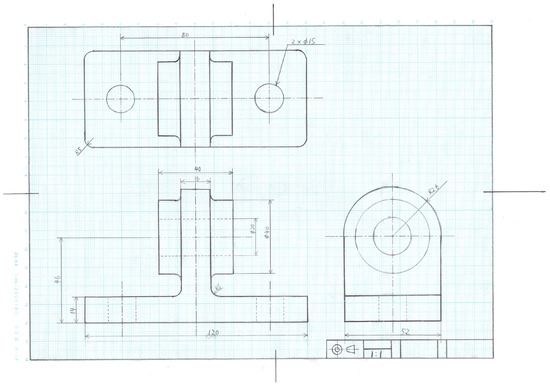

The task conducted by the teacher using the robot is to give instructions for drawing inspection. The material is a drawing of an object as a third-angle orthographic projection, as shown in Figure 1. The teacher asks questions about which parts of the drawing are incorrect, and the student is told to mark the incorrect parts.

Figure 1.

An example of drawing material. The material is a drawing of an object as a third-angle orthographic projection. The teacher asks questions about which parts of the drawing are incorrect, and the student is told to mark the incorrect parts.

Since the students in the class are likely beginners in design and drafting, the drawing inspection is used to point out drawing errors, such as shape errors and omissions of dimensions. Therefore, the robot needs to point out the errors in the drawings so that the students can intuitively understand the correction points.

For example, suppose that a dashed line is drawn for the center line instead of a chain line. If the instructor only says “Please draw a checkmark on the dashed line”, students who are not familiar with drawing will not understand which part is being pointed out. In the case of person-to-person instruction, the teacher can give an instruction both verbally by saying what to do and non-verbally by pointing to the part. As another example, suppose that the dimension of a part is incorrect. If the teacher indicates this face-to-face, they may say, “This dimension here is off by X mm”, while pointing to the part where the incorrect dimension is drawn. As shown in these examples, conversations often include directive words such as “here” and “that”.

Therefore, to conduct this task, the teacher and student face each other; the teacher instructs by pointing to the drawing; the student marks the drawing; and the teacher confirms the student’s behavior and the marked place by watching the drawing paper.

4.2. Overview of the System

We designed the system so that the requirements described in Section 3 are fulfilled. For requirements (1) and (2), we used two individual web conference systems: one was used for face-to-face communication, and the other one for confirmation of the drawing paper and pointing to it. Requirement (3) was resolved by projecting the mouse cursor from the backside of the drawing paper using a mobile projector.

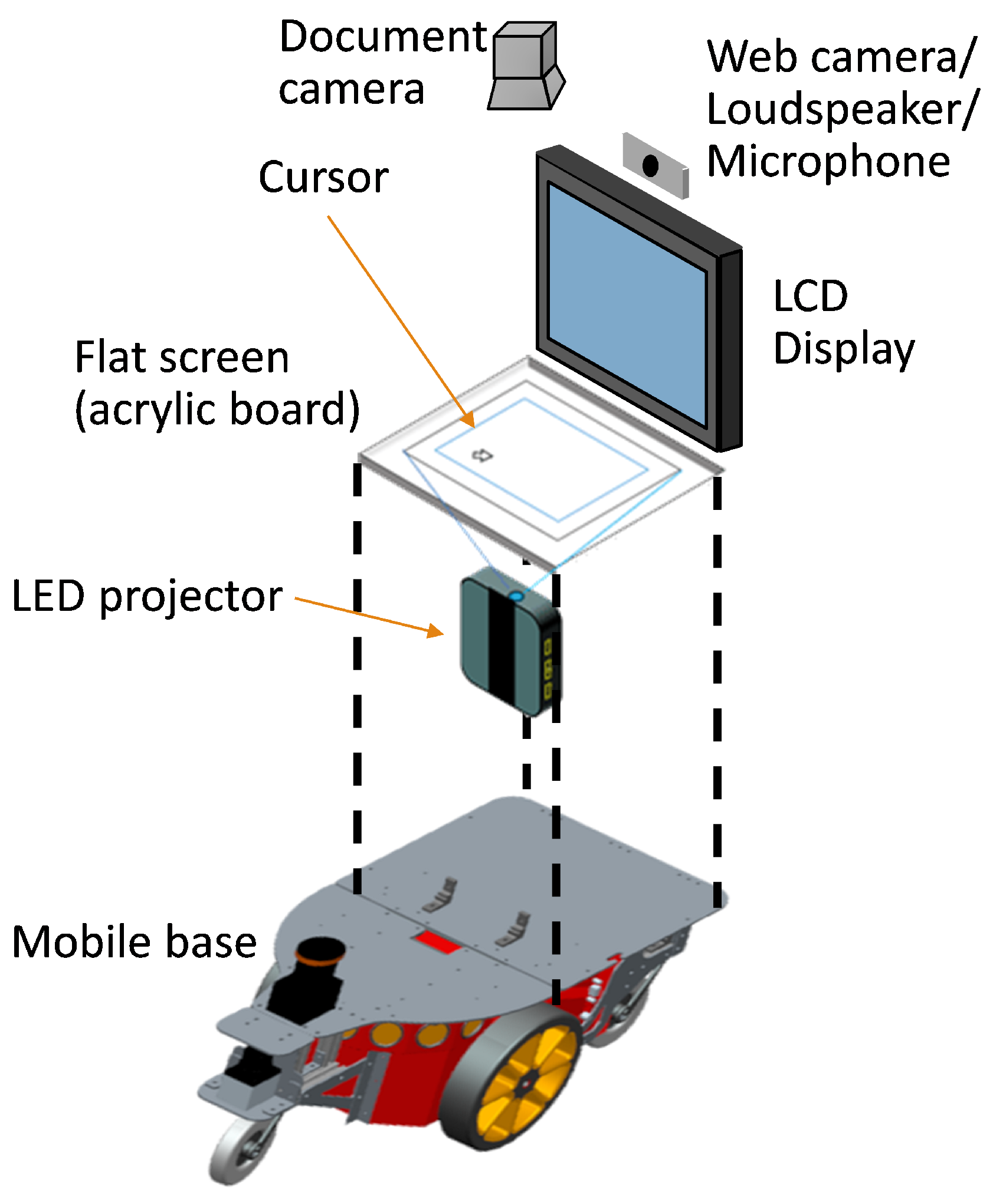

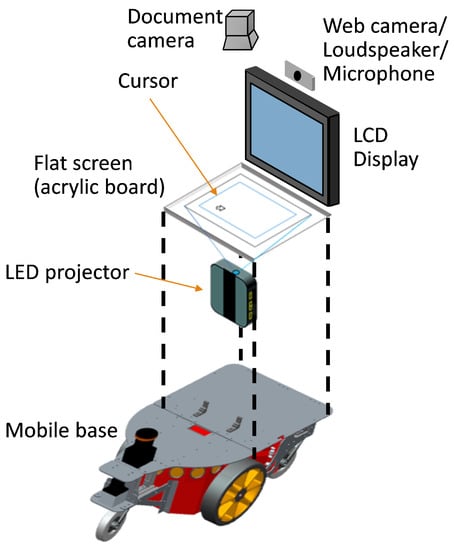

Figure 2 shows an overview of the proposed robotic system. The system is built on a mobile base, and a projector is built into the body. The mobile base is the same as the robot ASAHI [41]. The projector projects the cursor on the flat screen at the top of the system. A display, web camera, loudspeaker, and microphone are mounted on the system, and the document camera is mounted above the flat screen. Figure 3a,b shows the front and side views of the system.

Figure 2.

Structure of the proposed robot. The system is built on a mobile base, and a projector is built into the body. The projector projects the cursor on the flat screen at the top of the system. A display, web camera, loudspeaker, and microphone are mounted on the system, and the document camera is mounted above the flat screen.

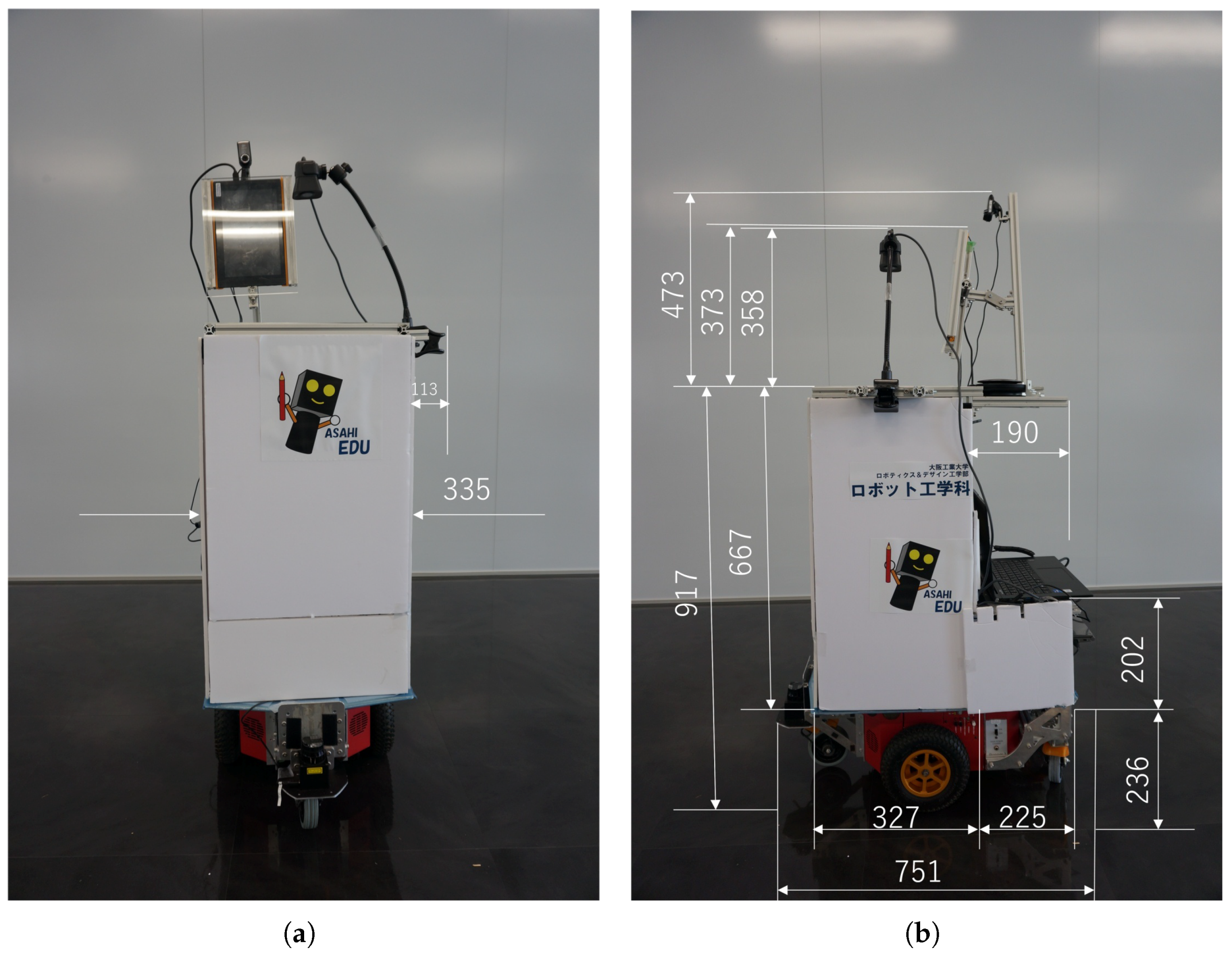

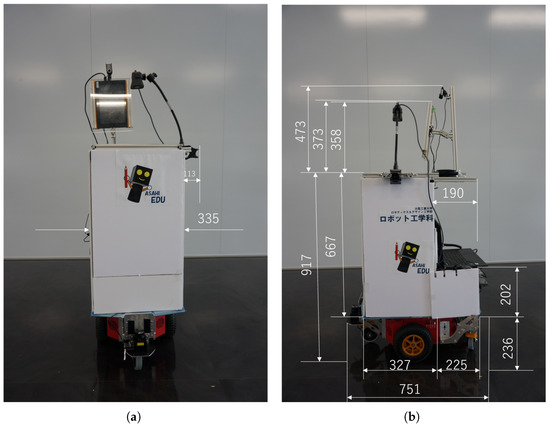

Figure 3.

The front and side views of the proposed system. Its height and weight are 1390 mm and 27.45 kg, respectively. (a) Front view. (b) Side view.

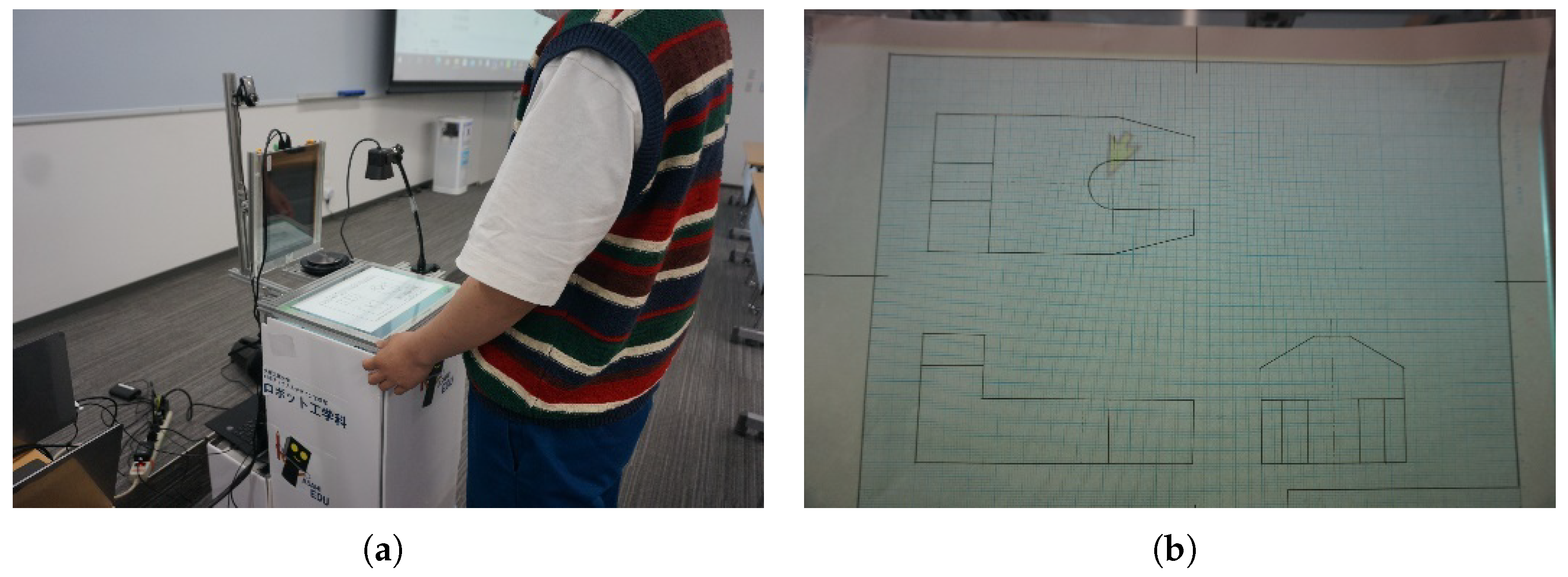

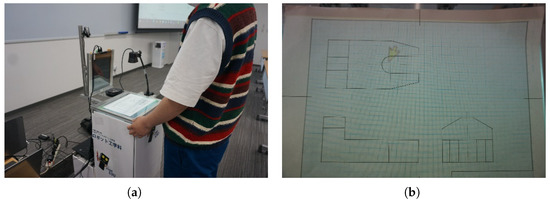

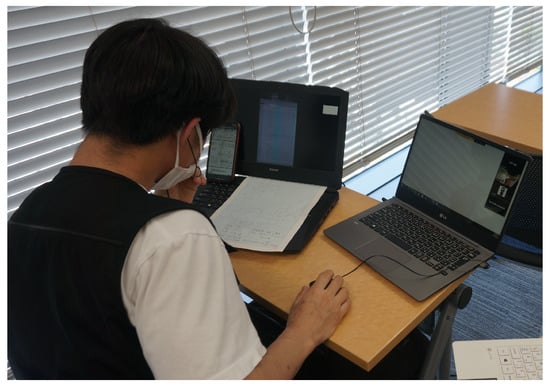

Figure 4 shows the student’s side of the system. A student puts a piece of drawing paper on the flat screen in the direction that they can read it. Then, the student converses with the teacher using the screen, microphone, web camera, and loudspeaker. When the student puts the paper on the screen, the projector projects a mouse cursor from the back of the paper so that the teacher can point to a specific part of the paper. The document camera captures the paper from above. Figure 5 shows the teacher’s side. The teacher uses two PCs to operate the system, where one PC (the right side) is used to confirm the paper and point to the paper and the other PC (the left side) is used to make conversation with the student. Specifications of the hardware are shown in Table 1.

Figure 4.

The student’s side. A student stands in front of the robot, puts the drawing paper on the flat screen, and converses with the teacher using the display, loudspeaker, and microphone. (a) A student puts the paper on the screen and has a conversation with the teacher. (b) The paper on the screen. A cursor is projected from the backside of the paper.

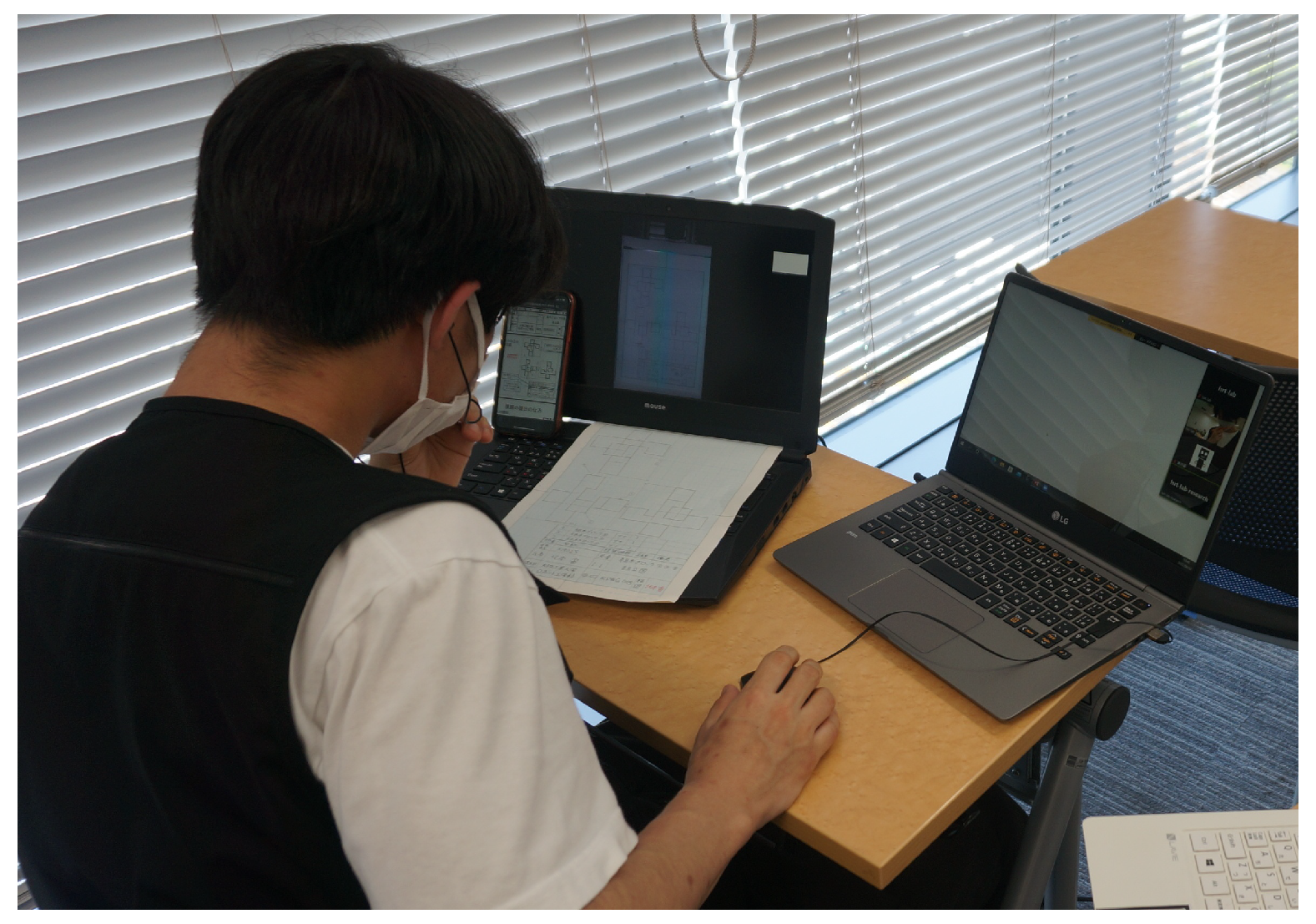

Figure 5.

The teacher’s side. The teacher uses two PCs: one for conversation with the student, and another for confirming and pointing to the student’s drawing paper.

Table 1.

The hardware specification.

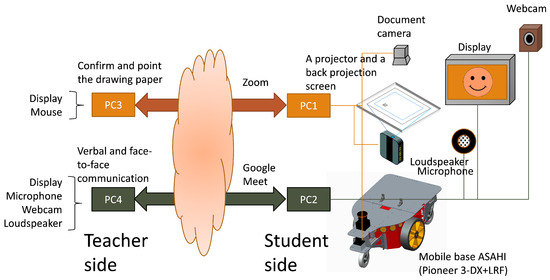

4.3. The Communication System

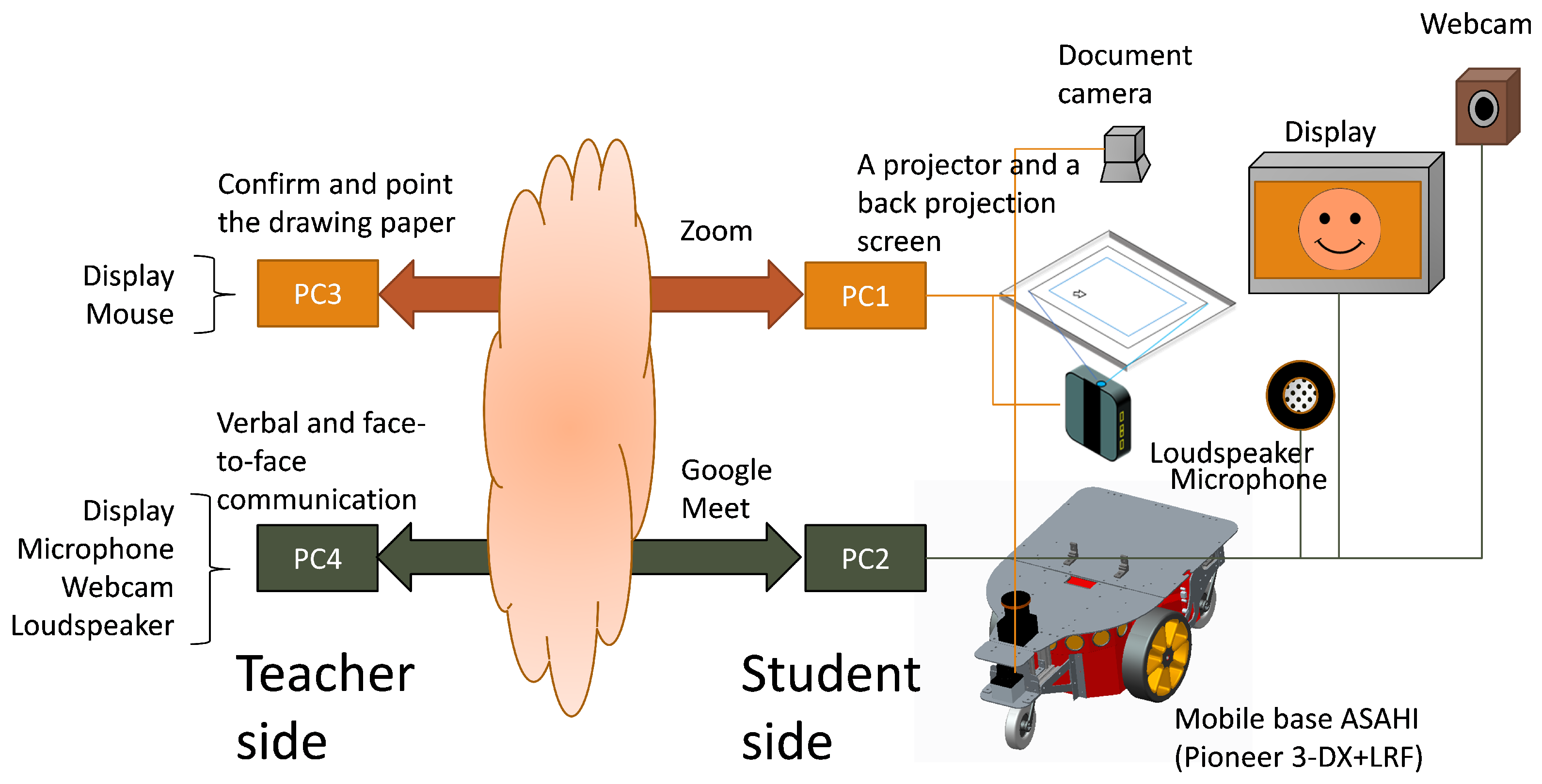

Figure 6 shows a diagram of the communication system. The robot is equipped with two PCs (PC1 and PC2), and the teacher uses two corresponding PCs (PC3 and PC4). The system has two communication channels between the teacher and the student. One channel (between PC1 and PC3) is used for capturing the drawing paper and pointing to it; it uses the web conference system Zoom for bidirectional image communication. The other channel (between PC2 and PC4) is used for conversation between the teacher and the student; it uses Google Meet as the conference system.

Figure 6.

A schematic diagram of the communication system. The system uses two channels of web-based conference systems (Zoom for confirming the drawing paper and Google Meet for conversation between the teacher and the student).

5. Experiments and Results

5.1. Overview

We carried out two experiments to investigate the usefulness of the proposed robot. First of all, we needed to confirm that the teacher’s pointing using back-projection could be properly perceived by students. Therefore, as a first experiment, we investigated whether the use of a mouse cursor projected on the flat screen was effective or not. The objective of the proposed system is to share the drawing paper between the teacher and the student and to enable the teacher to point to the specific part of the drawing from a remote site. This experiment investigated whether the developed system realized this objective. After the first experiment, we conducted a second experiment to investigate the total usefulness of the proposed system in an actual class.

Note that we conducted no comparison to any existing robotic system because no system that enables the remote teaching of technical drawing has yet been developed.

5.2. Verification of Projector-Based Pointing

In the first experiment, we employed two experimenters (experimenters A and B). Experimenter A explained the experiment to the participants and assisted them; experimenter B played the role of the teacher. Participants were recruited from among the third-year students of the university who had taken a drawing and drafting class in their second year. We chose eight participants from the applicants.

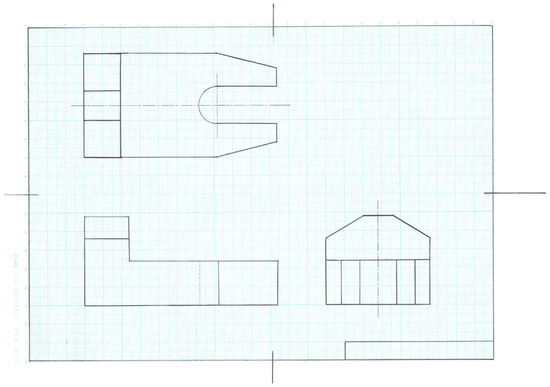

The procedure of the experiment was as follows. First, experimenter A showed the participant a drawing (shown in Figure 1) as an example and gave them the following instruction: “This drawing is an example of a third-angle orthographic projection. Please look at the front view. It lacks a hidden line. Please mark the incorrect part using a red pen”. After the instruction, experimenter A brought the participant to the robot and put the second drawing, shown in Figure 7, on the flat screen of the robot.

Figure 7.

The drawing used in the experiment. The students were told to find incorrect parts in the drawing and mark those parts.

Next, experimenter B talked with the participant through the robot. First, experimenter B instructed the participant to confirm the drawing for one minute. After that, experimenter B gave the following four tasks:

1. The dimension R5 is a diameter in the front view; it should be a radius. Please mark this part.

2. The side view lacks a dimension of 52 mm. Please mark this part.

3. In the top view, the dimensions of the holes are incorrectly written; it should be . Please mark this part.

4. The center lines are drawn as dashed lines in the top view; they should be chain lines. Please mark these parts.

We prepared two conditions, with and without a mouse cursor projected on the drawing. In the “with cursor” condition, a mouse cursor was projected from the back of the drawing paper (as shown in Figure 4b), while no cursor was used in the “without cursor” condition. The order and combination of the four tasks and the conditions were randomized.

After the session, the participants compared the two conditions. They evaluated the difference using a seven-point Likert scale, where −3 represented “the second condition was much more difficult than the first one to understand”, and 3 represented “the second condition was much easier than the first one to understand”. Since the order of the tested conditions was randomized, we converted all the data so that the “with cursor” condition came after the “without cursor” condition. In addition, we asked the participants to write the reason for their evaluation. Table 2 summarizes the conditions of the experiment.
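The conversion of the ratings described above can be sketched as follows. This is a minimal illustration, assuming the conversion amounts to negating a rating whenever the “with cursor” condition was presented first; the function name `normalize_rating` and the flag `with_cursor_first` are hypothetical and do not come from the study.

```python
def normalize_rating(rating: int, with_cursor_first: bool) -> int:
    """Re-express a 'second vs. first' rating as 'with cursor vs. without cursor'.

    rating: value on the seven-point scale (-3 .. 3), where a positive value
        means the second condition was easier to understand than the first.
    with_cursor_first: True if the participant saw the "with cursor"
        condition first (assumed bookkeeping flag, not from the paper).
    """
    if not -3 <= rating <= 3:
        raise ValueError("rating must be between -3 and 3")
    # If "with cursor" came first, a positive rating favours "without cursor",
    # so the sign is flipped to make positive always favour "with cursor".
    return -rating if with_cursor_first else rating


# Hypothetical example: the participant saw "with cursor" first and judged
# the second ("without cursor") condition harder to understand (-2).
print(normalize_rating(-2, with_cursor_first=True))  # prints 2
```

After this step, a positive value always means that the “with cursor” condition was easier to understand, regardless of the presentation order.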

Table 2.

Conditions of the first experiment.

Table 3a shows the evaluation result. As explained above, a positive number indicates that the participant evaluated the “with cursor” condition better than the “without cursor” condition. The average of the grade points was 1.1. We conducted a one-sided t-test to check whether the average grade point was larger than zero (Table 3b). The resulting p-value was 0.042, which showed that the “with cursor” condition was rated significantly better than the “without cursor” condition at the 5% significance level.

Table 3.

Result of verification experiment of the “with cursor” condition. The participants evaluated the “with cursor” condition in a seven-point Likert scale, where a positive number indicates that the “with cursor” condition was preferred. (a) Number of participants who chose a specific grade. The number in the second row is the number of participants who chose that value. (b) Result of t-test. According to the t-test, the average grade was significantly larger than zero at the 5% significance level.
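The one-sided test described above can be sketched as follows. This is only an illustration using the Python standard library: the ratings are hypothetical values for eight participants, not the data in Table 3a, and the names `one_sample_t` and `T_CRITICAL_DF7_5PCT` are introduced here for the example. Instead of computing a p-value, the sketch compares the t statistic with the standard one-sided 5% critical value for 7 degrees of freedom.

```python
import math
import statistics

# Hypothetical ratings for eight participants (NOT the data from Table 3a).
ratings = [1, 2, 0, 1, -1, 2, 1, 2]

# One-sided critical value of Student's t for 7 degrees of freedom at the
# 5% significance level (standard table value; valid only for n = 8).
T_CRITICAL_DF7_5PCT = 1.895


def one_sample_t(values, mu0=0.0):
    """Return the one-sample t statistic for H0: mean == mu0."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1)
    return (mean - mu0) / (sd / math.sqrt(n))


t_stat = one_sample_t(ratings)
significant = t_stat > T_CRITICAL_DF7_5PCT
print(f"t = {t_stat:.3f}, significant at 5% (one-sided): {significant}")
```

A statistics package that provides the t distribution would give the exact p-value; the critical-value comparison is used here only to keep the sketch free of external dependencies.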

5.3. Evaluation of the Total System

The second experiment was conducted within an actual technical drawing class at Osaka Institute of Technology. In this class, each student made a drawing of the specified object. After finishing the drawing, the student moved in front of the robot and put the drawing on the flat screen. The student and the teacher then began a session. The students and the teacher were in different rooms. After the session, the teacher asked the students to fill out a questionnaire. The answers to the questionnaire were optional, and the answers of the students who responded were analyzed.

The questionnaire contained three statements: (1) This robotic system was useful for training; (2) This robotic system was easy to understand; and (3) It was fun to use this system. The students rated these statements on a five-point Likert scale, from “5: Strongly agree” to “1: Strongly disagree”. Table 4 summarizes the conditions of the experiment.

Table 4.

Conditions of the second experiment.

The results of the questionnaire are shown in Table 5. These results show that the students felt that the robotic system was somewhat useful, easy, and fun.

Table 5.

Results of the questionnaire in the second experiment. The participants evaluated the proposed system on a five-point Likert scale, where 1 is the lowest and 5 the highest. The numbers from the second to sixth columns show the number of participants who chose that evaluation value, and the rightmost column is the average of the chosen grades.
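The average grade in the rightmost column of such a table is a count-weighted mean of the grades. A minimal sketch, assuming the counts are stored as a mapping from grade to number of respondents; the function name `likert_mean` and the example counts are hypothetical and are not the values in Table 5.

```python
def likert_mean(counts: dict) -> float:
    """Average grade from a mapping {grade: number of respondents}."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    # Each grade contributes in proportion to how many respondents chose it.
    return sum(grade * n for grade, n in counts.items()) / total


# Hypothetical counts for one statement (NOT the values in Table 5).
example_counts = {1: 0, 2: 1, 3: 4, 4: 10, 5: 5}
print(round(likert_mean(example_counts), 2))
```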

We also gathered free-form opinions from the participants. One student stated that the system was good because they did not need to view the drawing upside down, which happens in face-to-face instruction when the student and the teacher face each other and the paper is oriented so that the teacher can read it.

5.4. Actual Use in the Class

The proposed system was used in actual classes at Osaka Institute of Technology. First, we operated the robot in the class on 15 April 2021. Since this was the first time the robot was used, the inspector, who played the role of a teacher, inspected the drawing from a few meters away from the robot to be able to respond immediately in case of any trouble.

In 2022, face-to-face classes were held every week from 21 April to 2 June (14 classes). The system was used in 8 out of 14 classes. Although the number of participants varied from class to class, about 50 to 100 participants took part in the robot inspection in each class. A problem at the beginning of this period was that there was only one robot, so the students needed to wait for inspection because most of the students finished their drawings at a similar time. Therefore, we added two more learning support robots later to relieve congestion.

We introduced a reservation system for drawing inspection. After finishing the drawing, the students reserved an inspection using the reservation system. When the system called a student, that student went to the robot to have their drawing inspected. The reservation system was based on Microsoft Teams: a student entered their student ID in an Excel worksheet in the Teams class. We did not develop a custom reservation system, mainly for security reasons. There were several issues in the use of the reservation system and the robots. For example, we called the students by their numbers when their turn came, but some students did not notice the call because they were concentrating deeply on their drawings. Nevertheless, the inspection proceeded smoothly with the three robots.

In the last session, the inspection was performed completely remotely from another room. The operation was the same as when the inspector was in the same room, and we encountered no problems in the class. Several students misunderstood the orientation of the drawing paper and placed it upside down on the flat screen. This might happen because, when a student has their drawing checked by a teacher face-to-face, they place the paper so that the teacher can read it. In these cases, the teacher instructed the student to change the orientation of the paper.
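The reservation flow can be illustrated with a small queue. This is only a sketch under the assumption that students are called in first-come, first-served order; the actual system was an Excel worksheet in Microsoft Teams, and the class `InspectionQueue`, its methods, and the student IDs below are hypothetical.

```python
from collections import deque


class InspectionQueue:
    """First-come, first-served queue of student IDs waiting for inspection."""

    def __init__(self):
        self._waiting = deque()

    def reserve(self, student_id: str) -> None:
        # Ignore duplicate reservations from the same student.
        if student_id not in self._waiting:
            self._waiting.append(student_id)

    def call_next(self):
        """Return the next student ID to call, or None if nobody is waiting."""
        return self._waiting.popleft() if self._waiting else None


queue = InspectionQueue()
for sid in ["S001", "S002", "S003", "S004"]:  # hypothetical student IDs
    queue.reserve(sid)

# With three robots, the first three students can be called at once.
called = [queue.call_next() for _ in range(3)]
print(called)  # ['S001', 'S002', 'S003']
```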

6. Results and Discussion

In this section, we first summarize the research problems, their solutions, and the experimental results. The problem was how to develop a robotic system that enables remote teaching of practical training (technical drawing in this case). The solution was to create a robot equipped with a video-based communication system and an image-based communication system to confirm a drawing paper and point to the paper using a cursor.

We developed a robotic system and evaluated its usefulness through two evaluation experiments and actual use in classes, as described in the previous section. First, we confirmed the usefulness of bidirectional communication using mouse cursor projection and a document camera: instructions given with the cursor were rated as easier to understand than instructions given without it. Next, we evaluated the total system. The evaluation results were promising because of the relatively high evaluation value of usefulness. Finally, we used the system in actual classes.

The use of robots in classes in conventional studies has been limited to language education [30] or other special purposes [25], in which the robot only gave lectures and conversed with students. Thus, to our knowledge, our robot is the first attempt to apply a teleoperated robot to a practical exercise such as technical drawing. The results of the questionnaire and the experience in the actual class indicate that the proposed robotic system can be used for actual technical drawing classes.

There were a couple of problems we encountered in the actual use of the robot. Because the robot only provides communication through a camera and an LCD display, some students had difficulty understanding the instructions. These students could not check the designated part of the drawing and returned to their seats without completing the task in the first session. It may be beneficial if the robot could draw on the actual paper to demonstrate the task.

7. Conclusions

We described a teleoperated robotic system for a remote class of technical drawing. The proposed system allows a teacher to give instructions on paper-based technical drawing to the students in a classroom. The robot is equipped with a document camera to capture the student’s drawing paper, a flat screen, and a projector to project a cursor on the paper, as well as an online meeting tool for communication between the teacher and the student. From the experimental results, we confirmed that projecting a cursor on the paper using a projector is useful for a teacher to give instructions. The students’ opinions in the actual drawing class indicated that the system was useful in a class.

The contribution of our work is twofold. First, it enabled the teacher at a remote site to point to a part of the paper to enhance interaction. Because the cursor is projected from the back of the paper, it is not hidden by hands or arms resting on the paper, whereas a cursor projected from above may be occluded. Second, the developed system enabled both the student and the teacher to view the paper from their own viewpoints. When a student and a teacher interact face-to-face, the paper is inevitably upside-down from one point of view.

There are two problems and limitations in the current system. The first one is that the cursor is projected from the underside of the drawing paper. Therefore, the cursor is hard to see when the paper is thick. To solve this problem, the system could project the cursor from both the underside and from above using another device, such as a laser pointer. The second problem is that the teacher cannot draw lines or letters directly onto the paper, which is possible when teaching face-to-face. We can realize this function by adding a drawing mechanism, such as a pen plotter.

In the experiment, we did not use the mobility of the robot. Considering the actual use-case of the system, we need to convey the system from classroom to classroom, which needs another member of staff at the location if the system has no moving ability. Therefore, the ability of the robot to move is important. In fact, we conducted another experiment and confirmed that the operator could drive the robot between two classrooms. Additionally, we have developed a teleoperated robot that can be controlled from a remote site and modifies its movement automatically according to local observation [42]. This control method could contribute to the actual deployment of the proposed robot.

In addition to the current functionality of the robot, we are planning to add a few more functions to the robot, such as a robot avatar [41,43] for communication aid and a pen plotter to enable the teacher to draw lines on the paper.

Author Contributions

Conceptualization, Y.H. and A.I.; methodology, Y.H.; software, Y.H.; validation, Y.H.; formal analysis, A.I.; investigation, A.I.; resources, Y.H. and A.I.; data curation, A.I.; writing—original draft preparation, A.I.; writing—review and editing, A.I.; visualization, A.I.; supervision, A.I.; project administration, Y.H.; funding acquisition, Y.H. and A.I. All authors have read and agreed to the published version of the manuscript.

Funding

Part of this work was supported by Tough Cyberphysical AI Research Center, Tohoku University and JSPS KAKENHI JP20K04389.

Institutional Review Board Statement

This study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Life Science Ethics Committee of Osaka Institute of Technology (approval number 2021-16, 21 May 2021; approval number 2021-8-1, 25 March 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

Ryuichi Urita, Hironobu Wakabayashi, Rikuto Suzuki, and Sora Kitamoto contributed to conducting the experiments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- World Health Organization. COVID-19 Weekly Epidemiological Update, 84th ed.; World Health Organization: Geneva, Switzerland, 22 March 2022.

- Amengual, O.; Atsumi, T. COVID-19 pandemic in Japan. Rheumatol. Int. 2021, 41, 1–5. [Google Scholar] [CrossRef] [PubMed]

- Nae, N. Online learning during the pandemic: Where does Japan stand? Euromentor J. 2020, 11, 7–24. [Google Scholar]

- Lynch, T.; Ghergulescu, I. Review of virtual labs as the emerging technologies for teaching STEM subjects. In Proceedings of the INTED2017 Proceedings, 11th International Technology, Education and Development Conference, Valencia, Spain, 6–8 March 2017; pp. 6–8. [Google Scholar]

- de la Torre, L.; Sánchez, J.P.; Dormido, S. What remote labs can do for you. Phys. Today 2016, 69, 48–53. [Google Scholar] [CrossRef]

- Kwon, O.H.; Koo, S.Y.; Kim, Y.G.; Kwon, D.S. Telepresence robot system for English tutoring. In Proceedings of the 2010 IEEE Workshop on Advanced Robotics and Its Social Impacts, Seoul, Republic of Korea, 26–28 October 2010; pp. 152–155.

- Ray, S.; Srivastava, S. Virtualization of science education: A lesson from the COVID-19 pandemic. J. Proteins Proteom. 2020, 11, 77–80.

- Usman, M.; Suyanta; Huda, K. Virtual lab as distance learning media to enhance student’s science process skill during the COVID-19 pandemic. J. Phys. Conf. Ser. 2021, 1882, 012126.

- Huang, C. Virtual labs: E-learning for tomorrow. PLoS Biol. 2004, 2, e157.

- Abburi, R.; Praveena, M.; Priyakanth, R. TinkerCad–A Web Based Application for Virtual Labs to help Learners Think, Create and Make. J. Eng. Educ. Transform. 2021, 34, 535–541.

- Campbell, A.M.; Zanta, C.A.; Heyer, L.J.; Kittinger, B.; Gabric, K.M.; Adler, L. DNA microarray wet lab simulation brings genomics into the high school curriculum. CBE—Life Sci. Educ. 2006, 5, 332–339.

- Tatli, Z.; Ayas, A. Virtual laboratory applications in chemistry education. Procedia-Soc. Behav. Sci. 2010, 9, 938–942.

- Warning, L.A.; Kobylianskii, K. A choose-your-own-adventure-style virtual lab activity. J. Chem. Educ. 2021, 98, 924–929.

- Sasongko, W.D.; Widiastuti, I. Virtual lab for vocational education in Indonesia: A review of the literature. AIP Conf. Proc. 2019, 2194, 020113.

- Almaatouq, A.; Becker, J.; Houghton, J.P.; Paton, N.; Watts, D.J.; Whiting, M.E. Empirica: A virtual lab for high-throughput macro-level experiments. Behav. Res. Methods 2021, 53, 2158–2171.

- Chacon, J.; Vargas, H.; Farias, G.; Sanchez, J.; Dormido, S. EJS, JIL server, and LabVIEW: An architecture for rapid development of remote labs. IEEE Trans. Learn. Technol. 2015, 8, 393–401.

- Borish, V.; Werth, A.; Sulaiman, N.; Fox, M.F.; Hoehn, J.R.; Lewandowski, H. Undergraduate student experiences in remote lab courses during the COVID-19 pandemic. Phys. Rev. Phys. Educ. Res. 2022, 18, 020105.

- Pereira, F.; Felgueiras, C. Learning automation from remote labs in higher education. In Proceedings of the Eighth International Conference on Technological Ecosystems for Enhancing Multiculturality, Salamanca, Spain, 21–23 October 2020; pp. 558–562.

- Xie, C.; Li, C.; Ding, X.; Jiang, R.; Sung, S. Chemistry on the cloud: From wet labs to web labs. J. Chem. Educ. 2021, 98, 2840–2847.

- Matarrita, C.A.; Concari, S.B. Remote laboratories used in physics teaching: A state of the art. In Proceedings of the 13th International Conference on Remote Engineering and Virtual Instrumentation (REV), Madrid, Spain, 24–26 February 2016; pp. 385–390.

- De Jong, T.; Linn, M.C.; Zacharia, Z.C. Physical and virtual laboratories in science and engineering education. Science 2013, 340, 305–308.

- De Jong, T.; Sotiriou, S.; Gillet, D. Innovations in STEM education: The Go-Lab federation of online labs. Smart Learn. Environ. 2014, 1, 3.

- Grodotzki, J.; Ortelt, T.R.; Tekkaya, A.E. Remote and virtual labs for engineering education 4.0: Achievements of the ELLI project at the TU Dortmund University. Procedia Manuf. 2018, 26, 1349–1360.

- Trentsios, P.; Wolf, M.; Frerich, S. Remote Lab meets Virtual Reality–Enabling immersive access to high tech laboratories from afar. Procedia Manuf. 2020, 43, 25–31.

- Okamura, E.; Tanaka, F. A pilot study about remote teaching by elderly people to children over a two-way telepresence robot system. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 489–490.

- Zhang, M.; Duan, P.; Zhang, Z.; Esche, S. Development of telepresence teaching robots with social capabilities. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Pittsburgh, PA, USA, 9–15 November 2018; Volume 52064, p. V005T07A017.

- Bravo, F.A.; Hurtado, J.A.; González, E. Using robots with storytelling and drama activities in science education. Educ. Sci. 2021, 11, 329.

- Velinov, A.; Koceski, S.; Koceska, N. A review of the usage of telepresence robots in education. Balk. J. Appl. Math. Inform. 2021, 4, 27–40.

- Chavez, D.Y.; Romero, G.V.; Villalta, A.B.; Gálvez, M.C. Telepresence Technological Model Applied to Primary Education. In Proceedings of the 2020 IEEE XXVII International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 3–5 September 2020; pp. 1–4.

- van den Berghe, R.; Verhagen, J.; Oudgenoeg-Paz, O.; Van der Ven, S.; Leseman, P. Social robots for language learning: A review. Rev. Educ. Res. 2019, 89, 259–295.

- Yun, S.; Shin, J.; Kim, D.; Kim, C.G.; Kim, M.; Choi, M.T. Engkey: Tele-education robot. In Proceedings of the Social Robotics: Third International Conference, ICSR 2011, Amsterdam, The Netherlands, 24–25 November 2011; pp. 142–152.

- Edwards, A.; Edwards, C.; Spence, P.R.; Harris, C.; Gambino, A. Robots in the classroom: Differences in students’ perceptions of credibility and learning between “teacher as robot” and “robot as teacher”. Comput. Hum. Behav. 2016, 65, 627–634.

- Neamtu, D.V.; Fabregas, E.; Wyns, B.; De Keyser, R.; Dormido, S.; Ionescu, C.M. A remote laboratory for mobile robot applications. IFAC Proc. 2011, 44, 7280–7285.

- Casan, G.A.; Cervera, E.; Moughlbay, A.A.; Alemany, J.; Martinet, P. ROS-based online robot programming for remote education and training. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6101–6106.

- Casini, M.; Garulli, A.; Giannitrapani, A.; Vicino, A. A remote lab for experiments with a team of mobile robots. Sensors 2014, 14, 16486–16507.

- Bunse, C.; Wieck, T. Experiences in Developing and Using a Remote Lab in Teaching Robotics. In Proceedings of the 2022 IEEE German Education Conference (GeCon), Berlin, Germany, 11–12 August 2022; pp. 1–6.

- Tan, Q.; Denojean-Mairet, M.; Wang, H.; Zhang, X.; Pivot, F.C.; Treu, R. Toward a telepresence robot empowered smart lab. Smart Learn. Environ. 2019, 6, 1–19.

- Sharma, G.V.S.S.; Prasad, C.L.V.R.S.V.; Rambabu, V. Online machine drawing pedagogy—A knowledge management perspective through maker education in the COVID-19 pandemic era. Knowl. Process. Manag. 2021, 29, 231–241.

- Bernardes, M.; Oliveira, G. Teaching Practices of Computer-Aided Technical Drawing in the Time of COVID-19. In Proceedings of the 23rd International Conference on Engineering and Product Design Education (E and PDE 2021), Herning, Denmark, 9–10 September 2021.

- McLaren, S.V. Exploring perceptions and attitudes towards teaching and learning manual technical drawing in a digital age. Int. J. Technol. Des. Educ. 2008, 18, 167–188.

- Hiroi, Y.; Ito, A. ASAHI: OK for Failure—A Robot for Supporting Daily Life, Equipped with a Robot Avatar. In Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction, Tokyo, Japan, 3–6 March 2013; pp. 141–142.

- Kasai, Y.; Hiroi, Y.; Miyawaki, K.; Ito, A. Development of a Teleoperated Play Tag Robot with Semi-Automatic Play. In Proceedings of the 2022 IEEE/SICE International Symposium on System Integration (SII), Narvik, Norway, 9–12 January 2022; pp. 165–170.

- Hiroi, Y.; Ito, A.; Nakano, E. Evaluation of robot-avatar-based user-familiarity improvement for elderly people. KANSEI Eng. Int. 2009, 8, 59–66.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).