Comparing the Use of Two Different Approaches to Assess Teachers’ Knowledge of Models and Modeling in Science Teaching

Abstract

1. Introduction

2. Theoretical Backgrounds

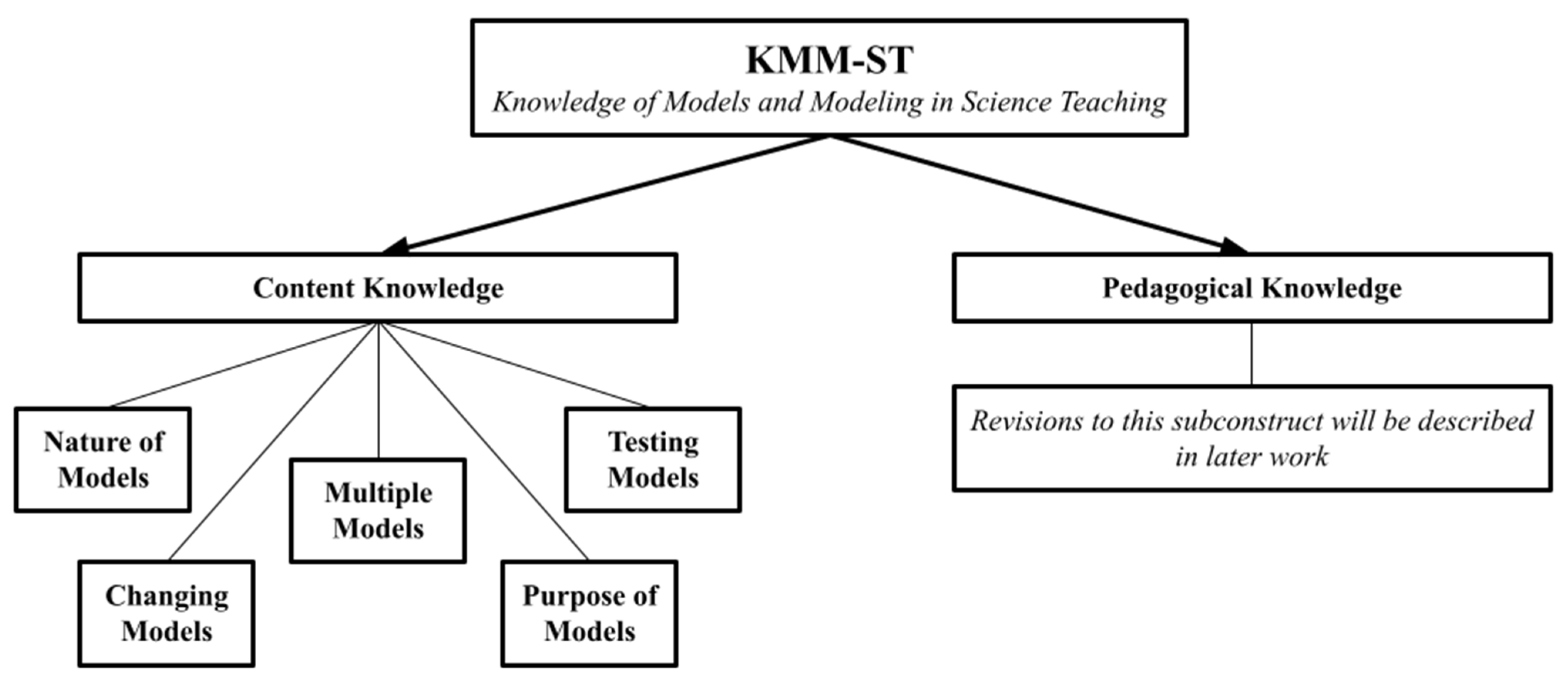

2.1. Conceptualizations of the KMM-ST Construct Used to Develop the 1st Version Survey

2.1.1. Content Knowledge for Models and Modeling

2.1.2. Pedagogical Knowledge for Models and Modeling

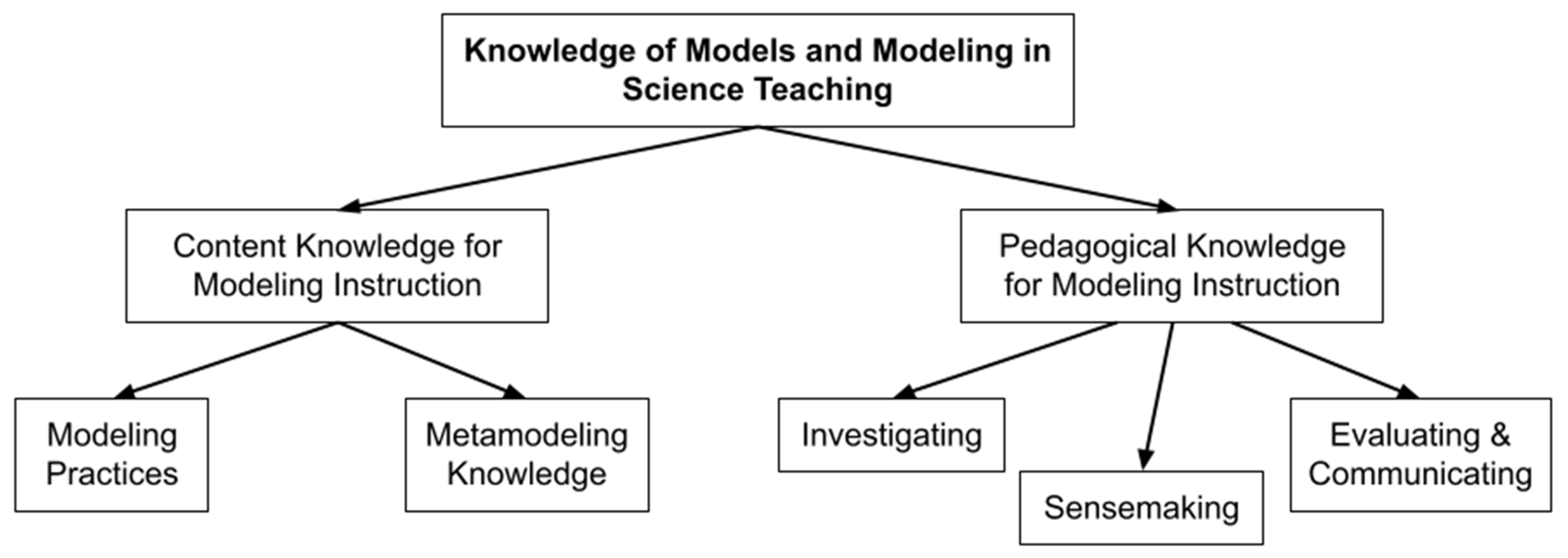

2.1.3. Initial KMM-ST Conceptual Framework

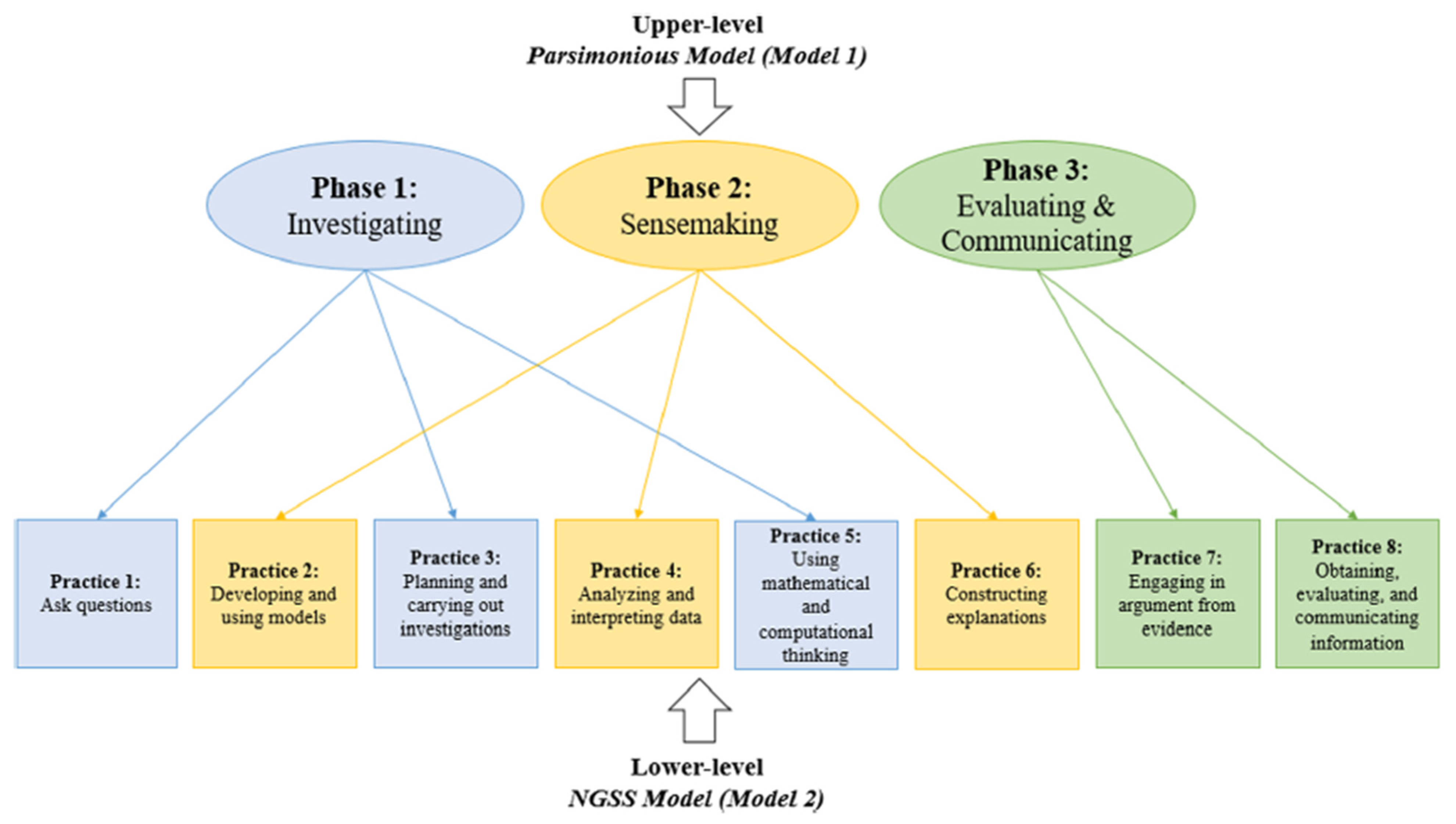

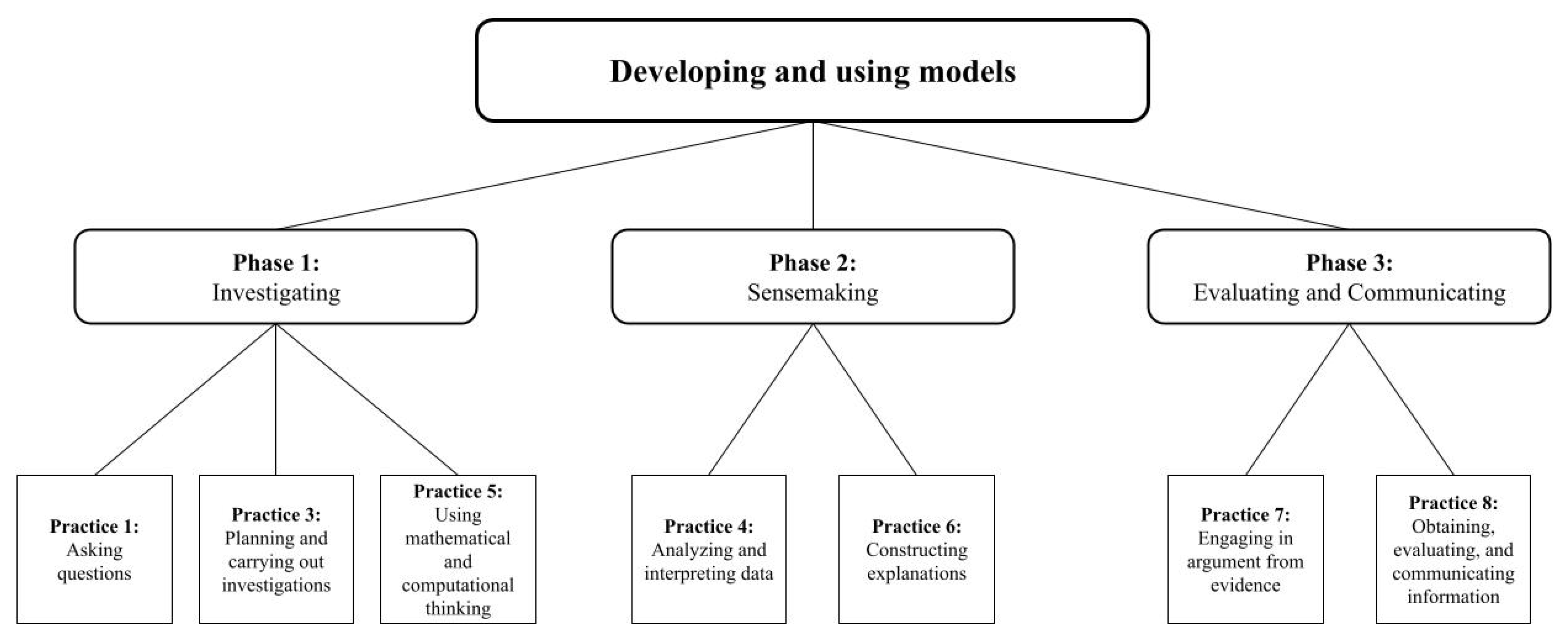

2.2. Conceptualizations of the KMM-ST Construct Used to Develop the 2nd Version of the Survey

Revised KMM-ST Conceptual Framework

3. Methods

3.1. The 1st Version of the KMM-ST Survey

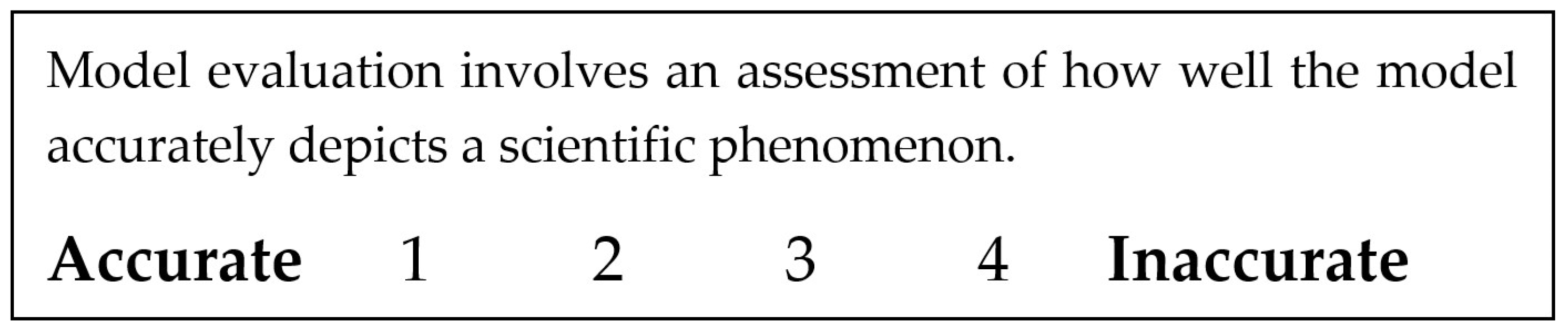

3.1.1. 1st Version Item Development and Content Validation

3.1.2. Administration

3.1.3. Construct Validation and Revision

3.2. The 2nd Version of the KMM-ST Survey

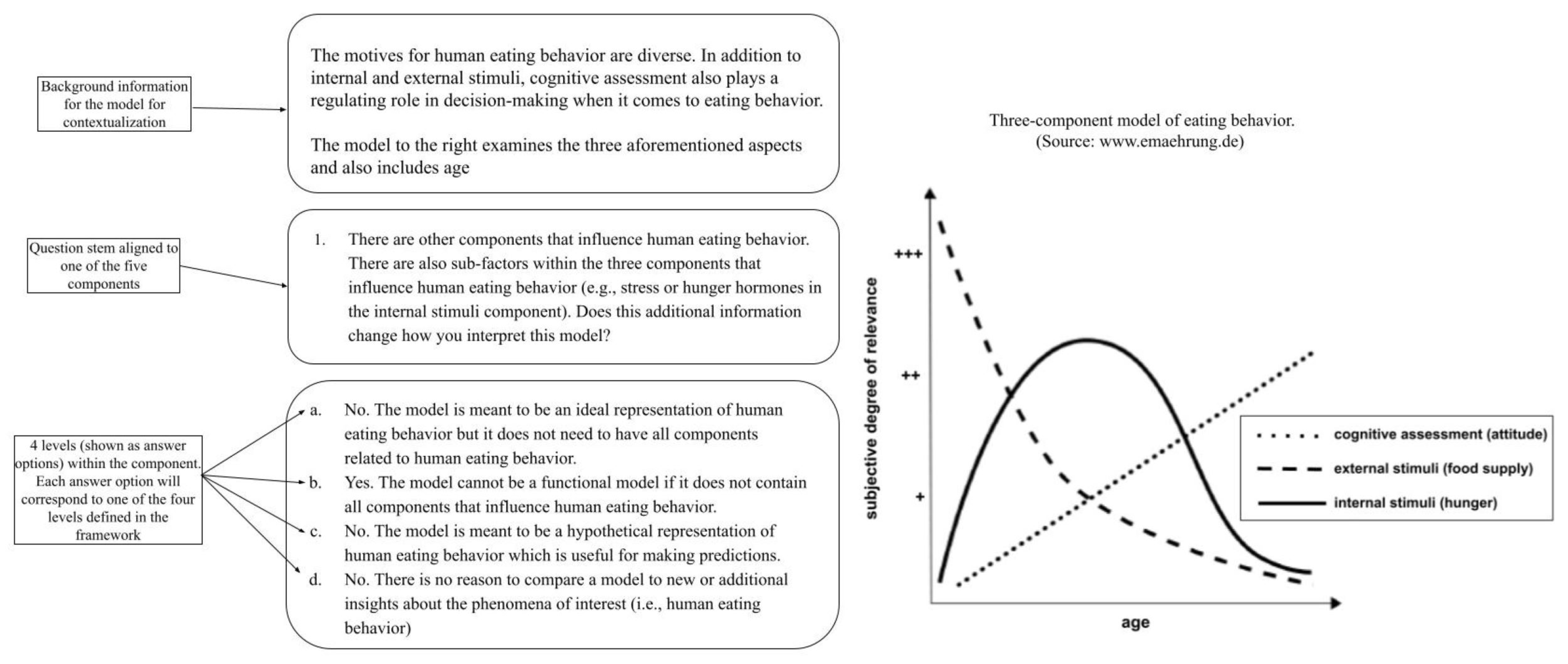

3.2.1. 2nd Version Item Development and Content Validation

3.2.2. Administration

3.2.3. Construct Validation and Revision

4. Results

4.1. Validation and Revision of the 1st Version of the KMM-ST Survey

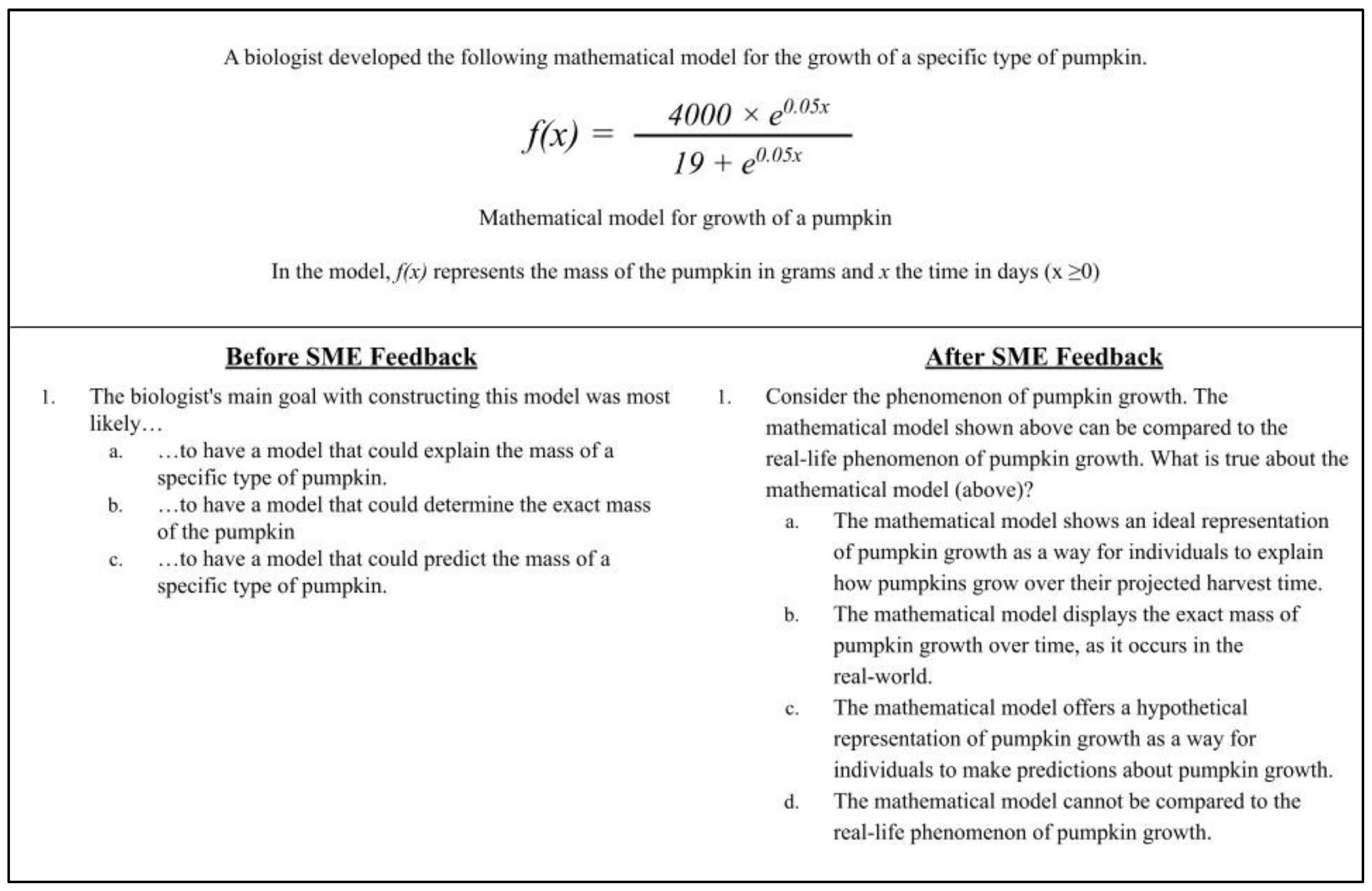

4.1.1. Content Validation through SME Consultation

4.1.2. Analysis of Responses to the 1st Version of the KMM-ST Survey

Descriptive Statistics

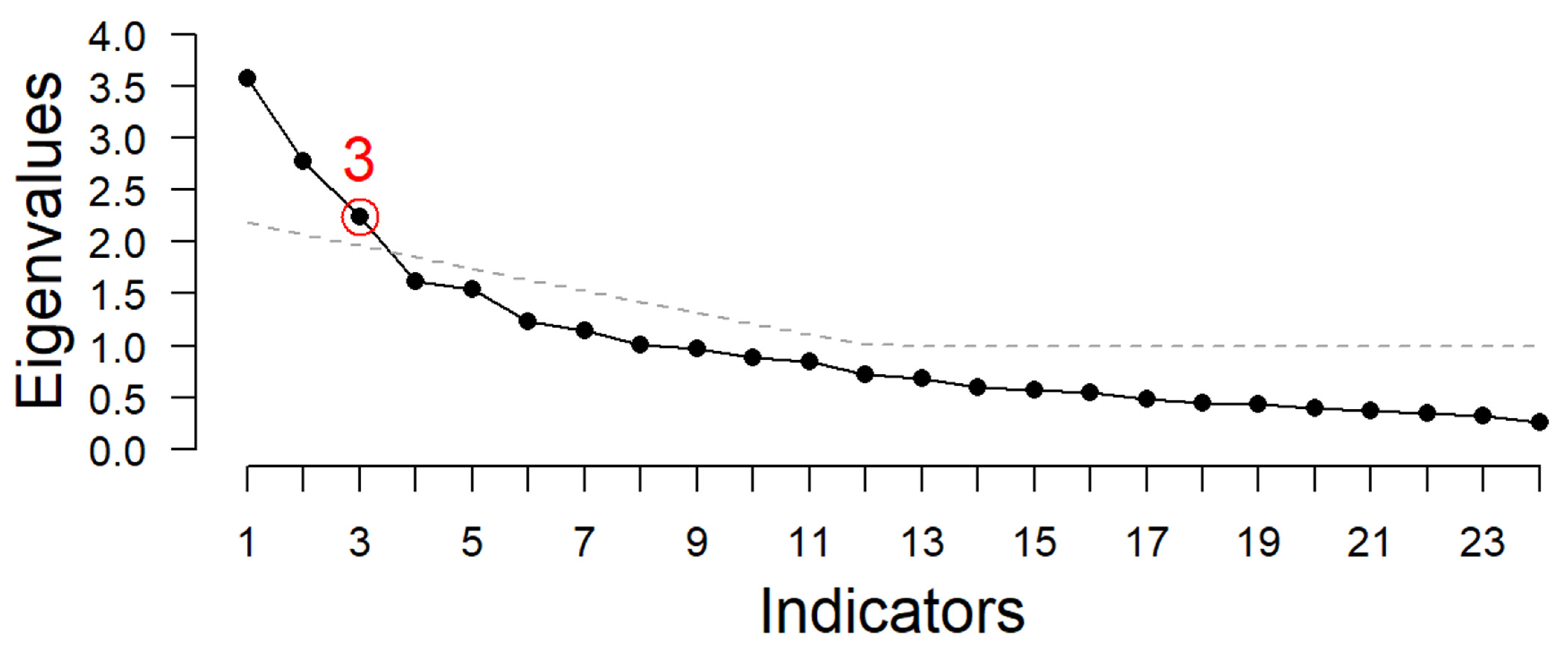

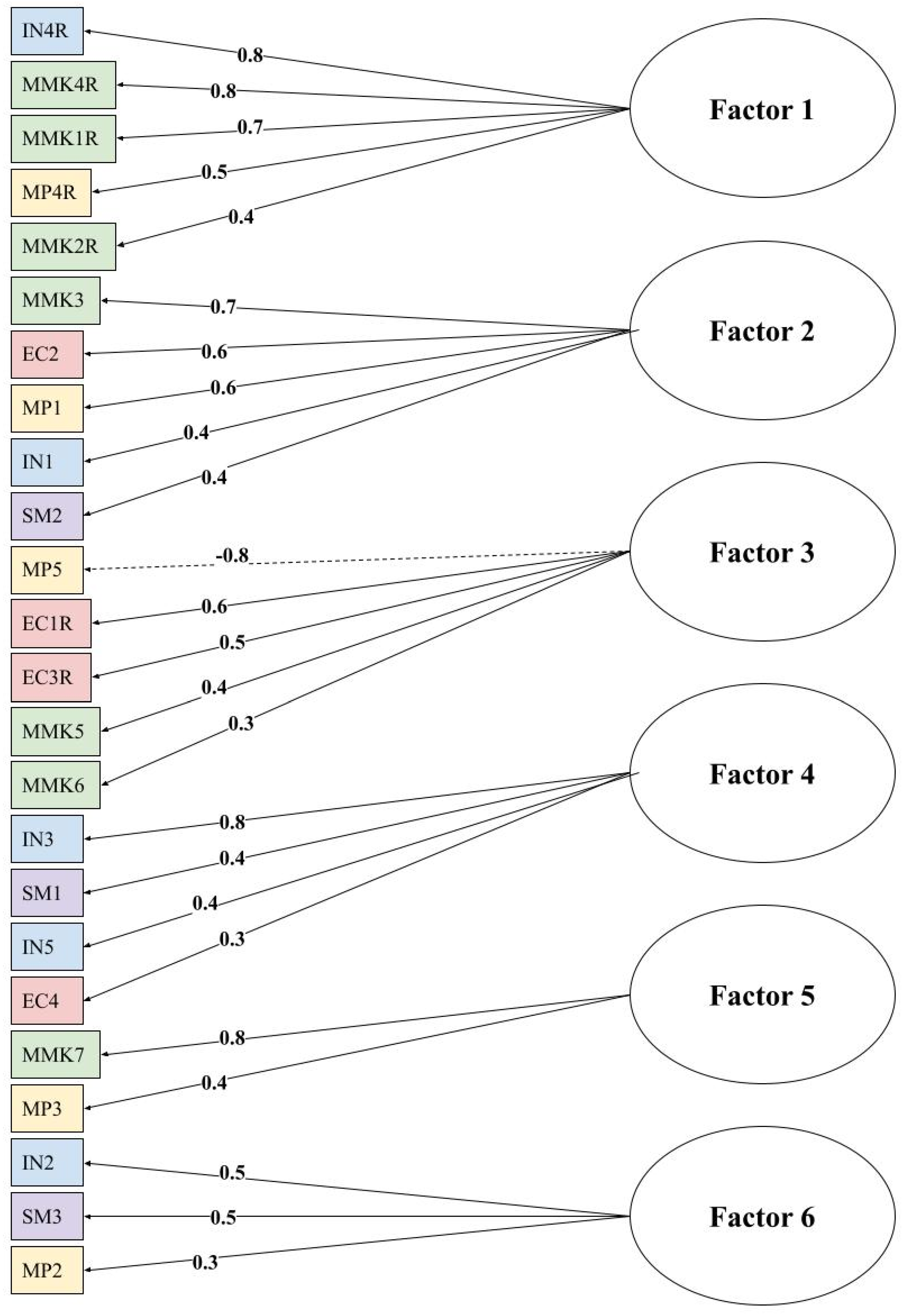

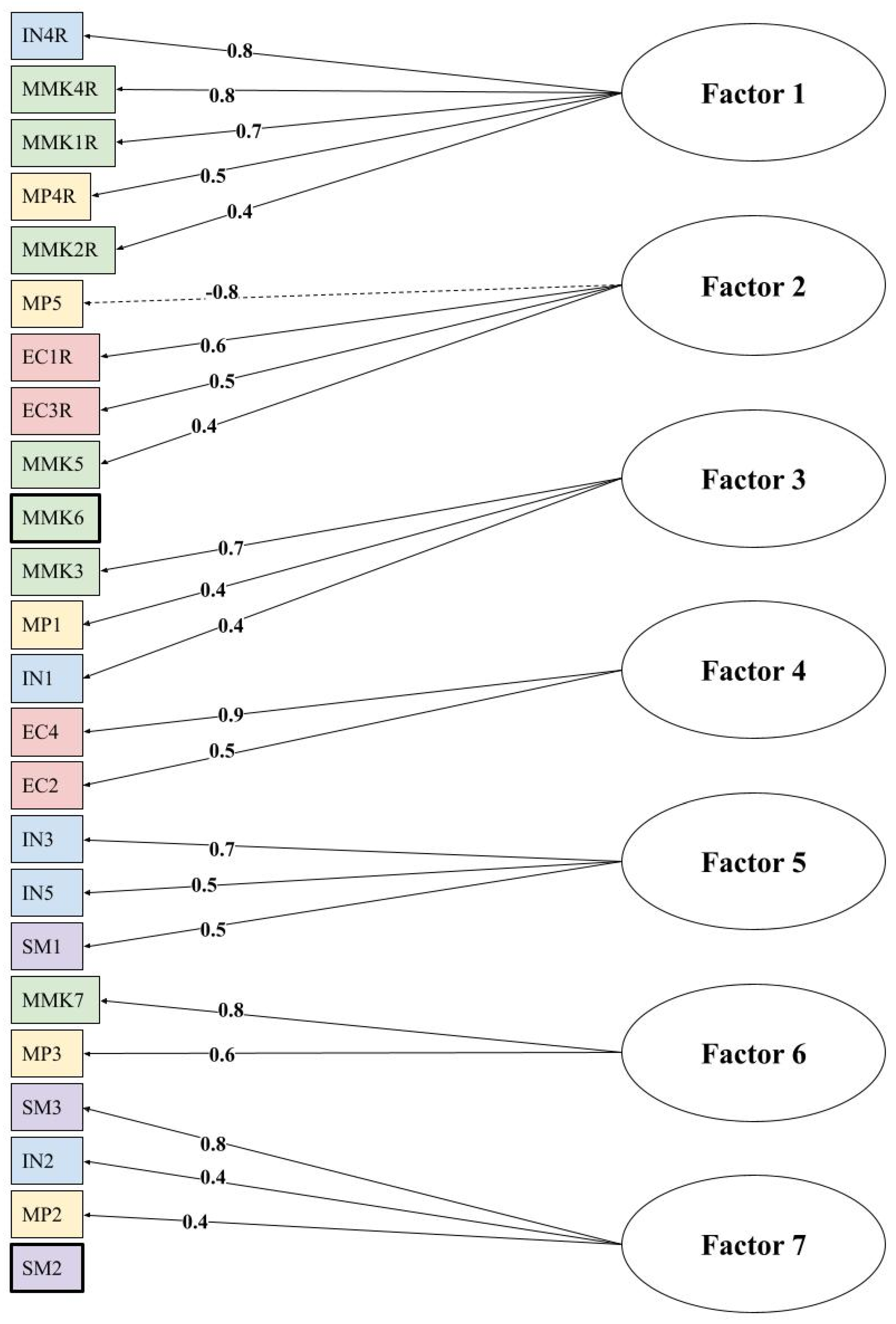

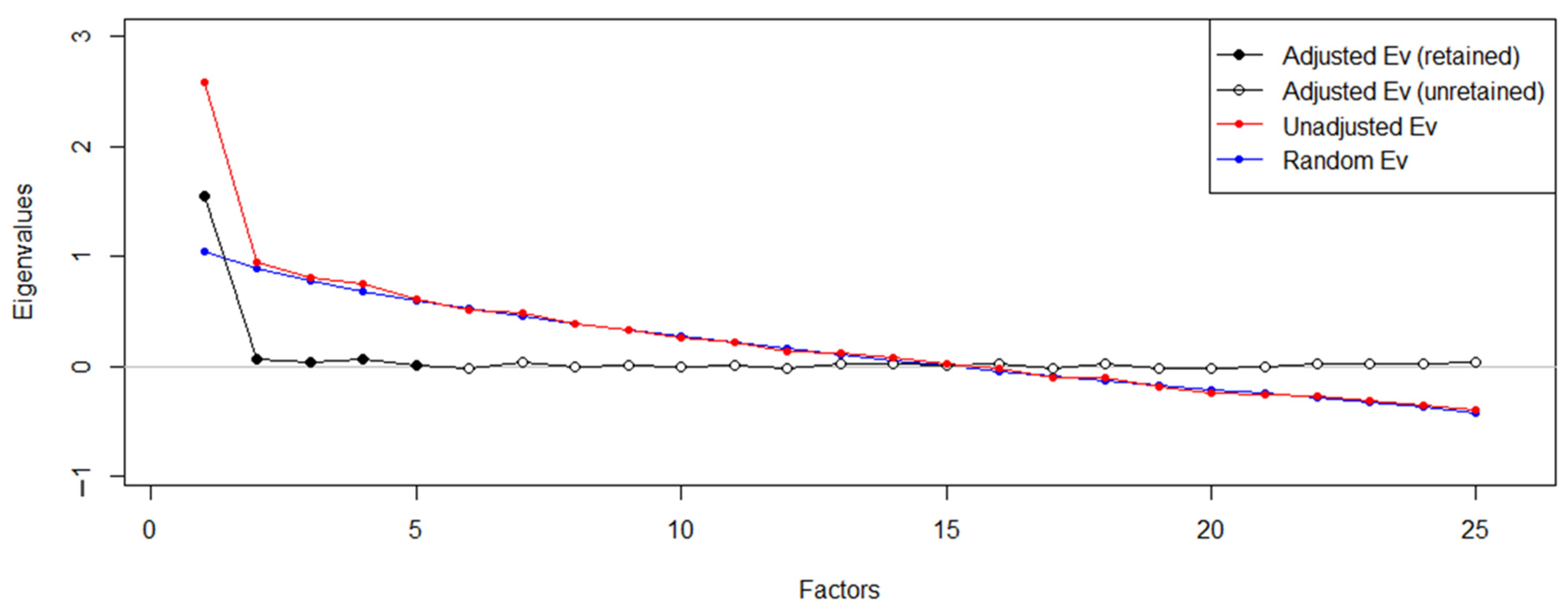

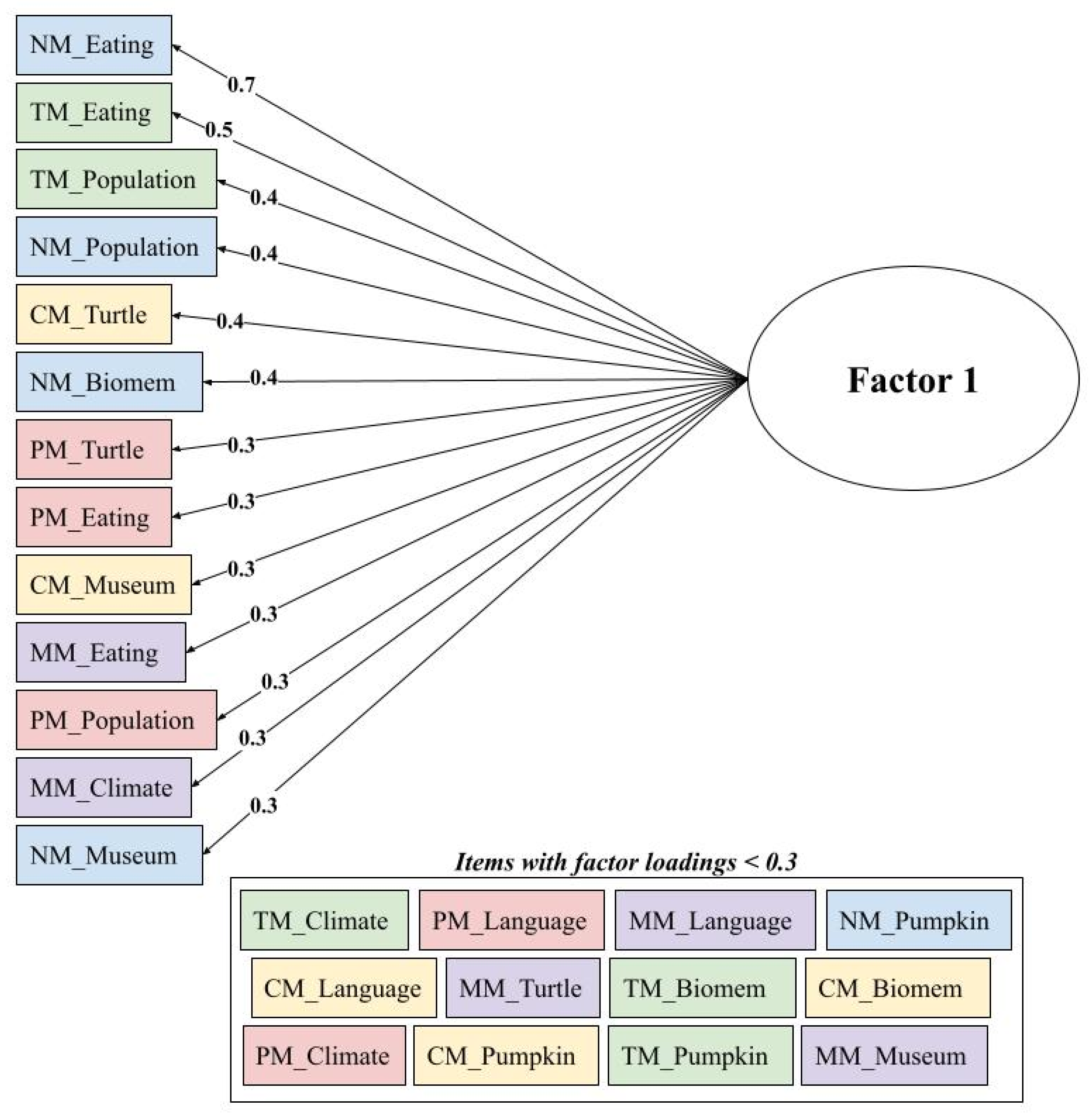

Results from Exploratory Factor Analysis

4.2. Validation and Revision of the 2nd Version of the KMM-ST Survey

4.2.1. Content Validation through SME Consultation

4.2.2. Analysis of Responses to the 2nd Version of the KMM-ST Survey

Descriptive Statistics and Item Analysis

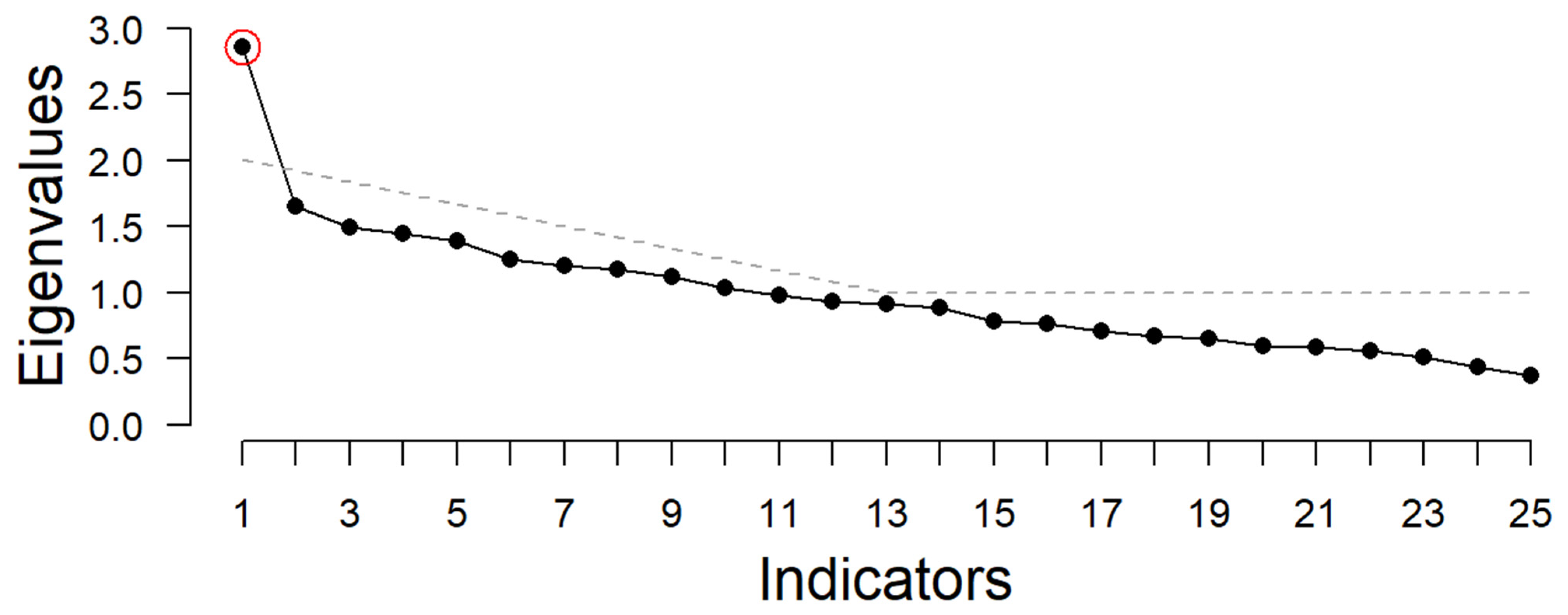

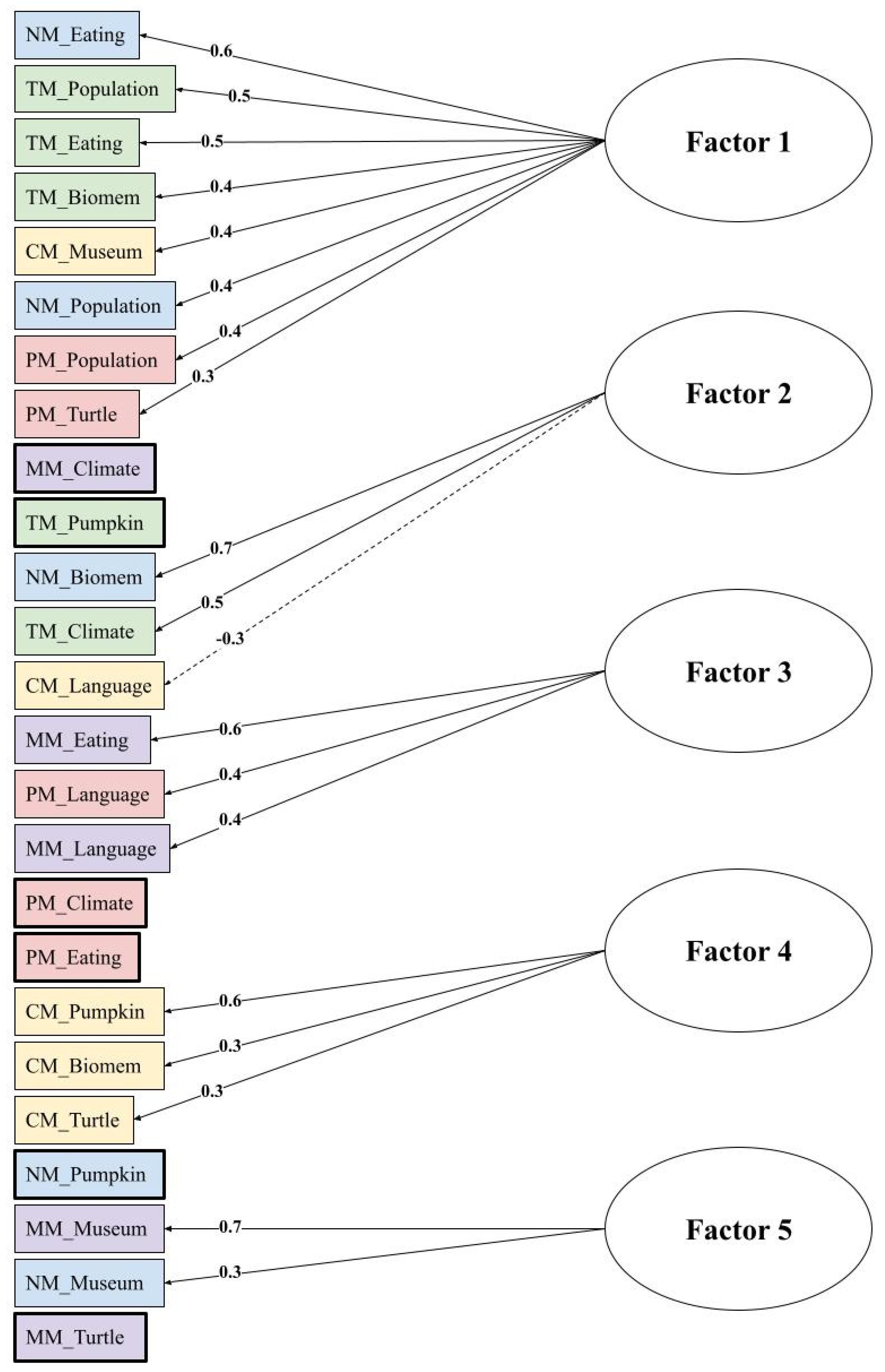

Results from Exploratory Factor Analysis

5. Discussion

5.1. Discussion of the Findings from the 1st Version of the KMM-ST Survey

5.2. Discussion of the Findings from the 2nd Version of the KMM-ST Survey

5.3. Limitations and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Aspect | Level I | Level II | Level III |
|---|---|---|---|
| Nature of models | Replication of the original | Idealized representation of the original | Theoretical reconstruction of the original |
| Multiple models | Different model objects | Different foci on the original | Different hypotheses about the original |
| Purpose of models | Describing the original | Explaining the original | Predicting something about the original |
| Testing models | Testing the model object | Comparing the model and the original | Testing hypotheses about the original |
| Changing models | Correcting defects of the model object | Revising due to new insights | Revising due to the falsification of hypotheses about the original |

| Item | Mean | Subscale Mean | Domain Mean | Total Mean Score |
|---|---|---|---|---|
| MP1 | 0.92 | MP items: 4.43 (of 5) | All CK items: 10.21 (of 12) | 20.34 (of 24) |
| MP2 | 0.99 | | | |
| MP3 | 0.92 | | | |
| MP4R | 0.70 | | | |
| MP5 | 0.90 | | | |
| MMK1R | 0.93 | MMK items: 5.78 (of 7) | | |
| MMK2R | 0.45 | | | |
| MMK3 | 0.97 | | | |
| MMK4R | 0.89 | | | |
| MMK5 | 0.76 | | | |
| MMK6 | 0.85 | | | |
| MMK7 | 0.93 | | | |
| IN1 | 0.65 | IN items: 4.35 (of 5) | All PK items: 10.13 (of 12) | |
| IN2 | 0.93 | | | |
| IN3 | 0.97 | | | |
| IN4R | 0.81 | | | |
| IN5 | 0.99 | | | |
| SM1 | 0.99 | SM items: 2.92 (of 3) | | |
| SM2 | 0.97 | | | |
| SM3 | 0.96 | | | |
| EC1R | 0.47 | EC items: 2.86 (of 4) | | |
| EC2 | 0.96 | | | |
| EC3R | 0.44 | | | |
| EC4 | 0.99 | | | |
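The subscale, domain, and total means in the table above appear to be sums of the item means (for example, the five MP item means add up to 4.43). The following is a minimal Python sketch of that arithmetic, with values copied from the table. The grouping of MP and MMK under CK, and of IN, SM, and EC under PK, is read off the table layout, and the variable names are illustrative only.

```python
# Item means copied from the table above, grouped by subscale.
item_means = {
    "MP": [0.92, 0.99, 0.92, 0.70, 0.90],
    "MMK": [0.93, 0.45, 0.97, 0.89, 0.76, 0.85, 0.93],
    "IN": [0.65, 0.93, 0.97, 0.81, 0.99],
    "SM": [0.99, 0.97, 0.96],
    "EC": [0.47, 0.96, 0.44, 0.99],
}

# Assumed grouping of subscales into the two knowledge domains,
# inferred from how the table aligns the CK and PK means.
domains = {"CK": ["MP", "MMK"], "PK": ["IN", "SM", "EC"]}

# Sum item means within each subscale, rounding to two decimals as in the table.
subscale_totals = {name: round(sum(vals), 2) for name, vals in item_means.items()}

# Sum subscale totals within each domain, then across domains.
domain_totals = {
    domain: round(sum(subscale_totals[s] for s in subs), 2)
    for domain, subs in domains.items()
}
total_score = round(sum(domain_totals.values()), 2)

print(subscale_totals)
print(domain_totals)
print(total_score)
```

Under these assumptions the computed sums agree with the reported values (4.43, 5.78, 4.35, 2.92, 2.86; 10.21 and 10.13; 20.34), which suggests each item was scored 0 or 1 and the item mean is the proportion of correct responses.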

| Model | RMSEA | TLI | BIC |
|---|---|---|---|
| Two-Factor Model | 0.09 | 0.52 | −649 |
| Three-Factor Model | 0.07 | 0.68 | −645 |
| Four-Factor Model | 0.06 | 0.76 | −606 |
| Five-Factor Model | 0.05 | 0.84 | −563 |
| Six-Factor Model | 0.04 | 0.90 | −513 |
| Seven-Factor Model | 0.01 | 0.99 | −470 |

| Item Name | Mean (SD) | Endorsed Level 0 | Endorsed Level 1 | Endorsed Level 2 | Endorsed Level 3 |
|---|---|---|---|---|---|
| CM_Museum | 2.49 (0.84) | 7 (4.9%) | 12 (8.3%) | 29 (20.1%) | 96 (66.7%) |
| CM_Turtle | 2.09 (0.79) | 2 (1.3%) | 33 (23.0%) | 59 (41.0%) | 50 (34.7%) |
| CM_Language | 1.73 (0.96) | 7 (4.9%) | 69 (47.9%) | 24 (16.7%) | 44 (30.5%) |
| CM_Biomem | 1.88 (0.89) | 17 (11.8%) | 16 (11.1%) | 79 (54.9%) | 32 (22.2%) |
| CM_Pumpkin | 1.72 (0.91) | 1 (0.7%) | 82 (56.9%) | 18 (12.5%) | 43 (29.9%) |
| NM_Museum | 2.37 (0.79) | 0 (0.0%) | 28 (19.4%) | 35 (24.3%) | 81 (56.3%) |
| NM_Eating | 2.33 (0.72) | 2 (1.4%) | 15 (10.4%) | 60 (41.7%) | 67 (46.5%) |
| NM_Biomem | 2.63 (0.60) | 3 (2.1%) | 0 (0.0%) | 44 (30.6%) | 97 (67.4%) |
| NM_Pumpkin | 2.56 (0.62) | 1 (0.7%) | 7 (4.9%) | 46 (31.9%) | 90 (62.5%) |
| NM_Population | 2.45 (0.66) | 3 (2.1%) | 4 (2.7%) | 62 (43.1%) | 75 (52.1%) |
| MM_Museum | 2.42 (0.79) | 3 (2.1%) | 18 (12.5%) | 39 (27.1%) | 84 (58.3%) |
| MM_Turtle | 1.75 (0.71) | 1 (0.7%) | 56 (38.9%) | 65 (45.1%) | 22 (15.3%) |
| MM_Language | 2.17 (0.81) | 2 (1.4%) | 31 (21.5%) | 52 (36.1%) | 59 (41.0%) |
| MM_Eating | 2.38 (0.64) | 1 (0.7%) | 9 (6.3%) | 69 (47.9%) | 65 (45.1%) |
| MM_Climate | 2.50 (0.73) | 0 (0.0%) | 20 (13.9%) | 32 (22.2%) | 92 (63.9%) |
| PM_Turtle | 2.38 (0.70) | 2 (1.4%) | 12 (8.3%) | 60 (41.7%) | 70 (48.6%) |
| PM_Language | 2.65 (0.62) | 1 (0.7%) | 8 (5.6%) | 32 (22.2%) | 103 (71.5%) |
| PM_Eating | 2.56 (0.67) | 2 (1.4%) | 8 (5.6%) | 42 (29.1%) | 92 (63.9%) |
| PM_Climate | 2.01 (0.66) | 0 (0.0%) | 31 (21.5%) | 81 (56.3%) | 32 (22.2%) |
| PM_Population | 2.45 (0.87) | 6 (4.2%) | 18 (12.5%) | 25 (17.4%) | 95 (65.9%) |
| TM_Eating | 2.45 (0.76) | 1 (0.7%) | 21 (14.6%) | 34 (23.6%) | 88 (61.1%) |
| TM_Climate | 2.22 (0.66) | 2 (1.4%) | 13 (9.0%) | 81 (56.3%) | 48 (33.3%) |
| TM_Biomem | 2.19 (0.60) | 1 (0.7%) | 12 (8.3%) | 90 (62.5%) | 41 (28.5%) |
| TM_Pumpkin | 2.52 (0.74) | 2 (1.4%) | 15 (10.4%) | 33 (22.9%) | 94 (65.3%) |
| TM_Population | 2.83 (0.49) | 2 (1.4%) | 1 (0.7%) | 17 (11.8%) | 124 (86.1%) |
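Each item mean and standard deviation in the table above can be recovered from the four endorsement counts, treating the endorsed level (0 to 3) as the item score. The sketch below illustrates this for two rows. It assumes the sample standard deviation (n − 1 denominator), which the table does not state, and the helper name `mean_sd_from_counts` is hypothetical.

```python
import math


def mean_sd_from_counts(counts):
    """Return (mean, sample SD) for scores 0..k given the count at each level.

    Assumes the score of a response equals the index of the level endorsed
    and that the SD uses the n - 1 denominator (an assumption, not stated
    in the table).
    """
    n = sum(counts)
    mean = sum(level * c for level, c in enumerate(counts)) / n
    sum_sq = sum(c * (level - mean) ** 2 for level, c in enumerate(counts))
    sd = math.sqrt(sum_sq / (n - 1))
    return mean, sd


# Endorsement counts for Levels 0-3, copied from the table above.
cm_museum = [7, 12, 29, 96]
tm_population = [2, 1, 17, 124]

for name, counts in [("CM_Museum", cm_museum), ("TM_Population", tm_population)]:
    mean, sd = mean_sd_from_counts(counts)
    print(f"{name}: n = {sum(counts)}, mean = {mean:.2f}, SD = {sd:.2f}")
```

Both rows sum to 144 responses, and under these assumptions the computed values round to the reported 2.49 (0.84) and 2.83 (0.49).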

| Model | RMSEA | TLI | BIC |
|---|---|---|---|
| One-Factor Model | 0.03 | 0.83 | −1062 |
| Two-Factor Model | 0.02 | 0.90 | −981 |
| Three-Factor Model | 0.01 | 0.96 | −900 |
| Four-Factor Model | 0.00 | 1.06 | −826 |
| Five-Factor Model | 0.00 | 1.10 | −745 |

| Factor (Cronbach’s Alpha) | Item | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Communality |
|---|---|---|---|---|---|---|
| Factor 1 (α = 0.63) | TM_Population | 0.68 | | | | 0.47 |
| | TM_Eating | 0.65 | | | | 0.47 |
| | TM_Biomem | 0.63 | | | | 0.62 |
| | NM_Eating | 0.62 | | | | 0.59 |
| | NM_Population | 0.53 | | | | 0.41 |
| Factor 2 (α = 0.35) | MM_Language | | 0.72 | | | 0.54 |
| | MM_Eating | | 0.70 | | | 0.52 |
| Factor 3 (α = 0.43) | CM_Pumpkin | | | 0.81 | | 0.68 |
| | CM_Biomem | | | 0.74 | | 0.57 |
| Factor 4 (α = 0.27) | PM_Climate | | | | 0.77 | 0.69 |
| | PM_Population | | | | 0.68 | 0.57 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Carroll, G.; Park, S. Comparing the Use of Two Different Approaches to Assess Teachers’ Knowledge of Models and Modeling in Science Teaching. Educ. Sci. 2023, 13, 405. https://doi.org/10.3390/educsci13040405
