Development and Evaluation of Digital Learning Tools Promoting Applicable Knowledge in Economics and German Teacher Education

Abstract

1. Relevance

- (I) To investigate the effects of the digital teaching-and-learning tools on applicable pedagogical knowledge (PK) among trainee teachers in a pre-post-test;

- (II) To investigate the subjective ratings of the digital teaching-and-learning tools reported by students and instructors.

2. Applicable Pedagogical Knowledge

- (1)

- (2)

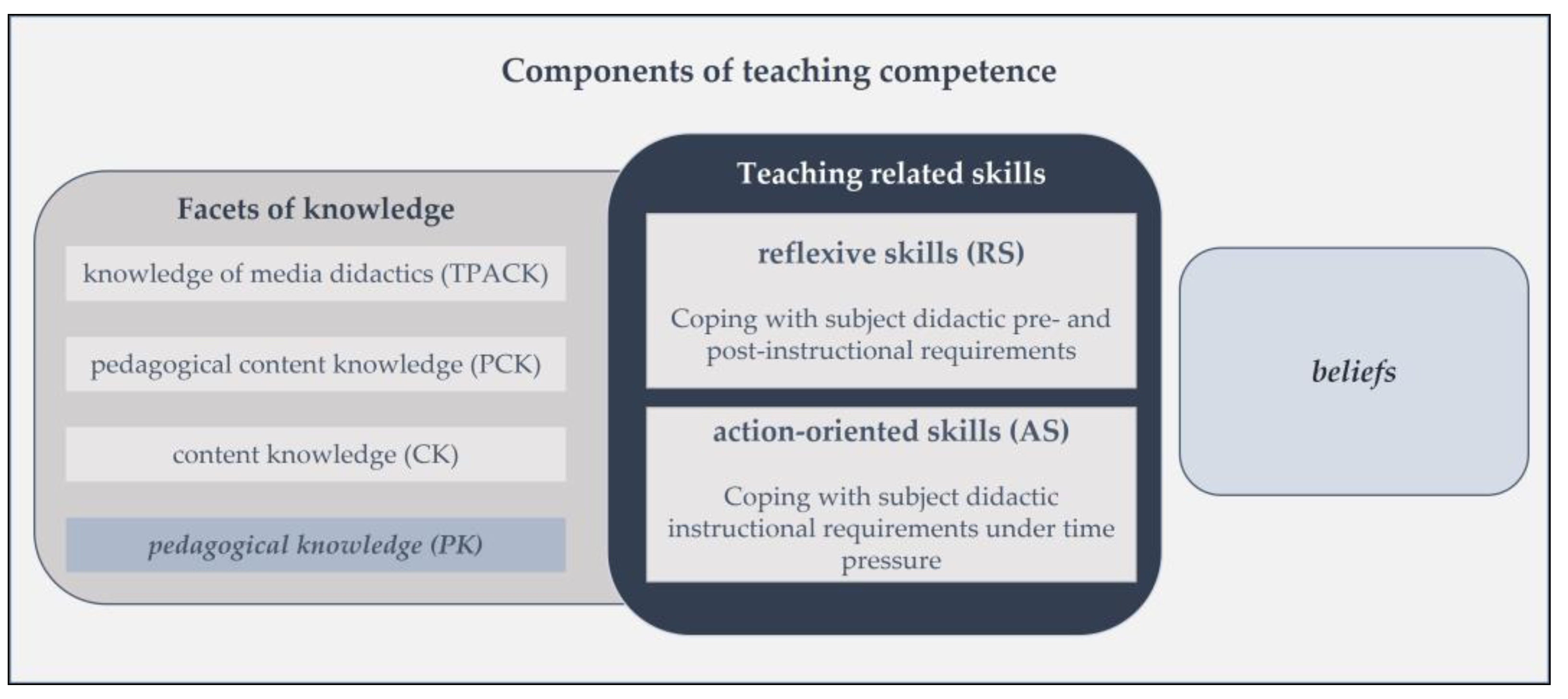

- These dispositions may determine the level of situation-specific skills [2,4]. In this context, PK can be considered the basis, alongside content knowledge (CK), for developing pedagogical content knowledge (PCK) and technological pedagogical content knowledge (TPACK). PK can be understood as the knowledge of processes, practices, and methods of learning and teaching [32,33,34]. According to Koehler and Mishra ([33], p. 64), “this generic form of knowledge applies to understanding how students learn, general classroom management skills, lesson planning, and student assessment.” This is in line with Voss and colleagues ([35], p. 953), who define general PK as “the knowledge needed to create and optimize teaching-learning situations”.

- (3)

- Furthermore, situation-specific skills can be differentiated according to the two major professional demands in teaching practice, both of which build on the professional knowledge base (CK, PK, PCK, and TPACK): action-related skills (AS) in the classroom, and reflection skills (RS) before and after teaching a class (see Figure 1).

3. Promoting Applicable Pedagogical Knowledge in Teacher Education—Current Developments and Instructional Designs

3.1. Current Challenges for Instructional Designs

3.2. Design of the New Digital Tools

4. Topics of the New Digital Teaching-and-Learning Tools and Their Application in Teacher Education

4.1. Focal Topics

4.1.1. Digital Tool: ‘Professional Communication in Classrooms’

4.1.2. Digital Tool: ‘Multilingualism in Classrooms’

4.2. Application and Evaluation of the New Tools in a Quasi-Experimental Study

5. Methods

5.1. Study Design

5.2. Instruments

5.2.1. Instrument on Professional Communication in Classrooms

5.2.2. Instrument on Multilingualism in Classrooms

5.3. Sample Description

5.4. Statistical Procedure

6. Results

6.1. Changes in PK Facets (RQ 1)

6.2. Usage Behavior and Its Influence on Changes in PK (RQ 2)

6.3. Usability and Usefulness from the Students’ Perspectives (RQ 3)

6.4. Usability and Usefulness from the Instructors’ Perspective (RQ 4)

7. Discussion

7.1. Summary and Interpretation of the Findings

7.2. Limitations and Outlook

7.3. Implications for Research and Practice

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Minea-Pic, A. Innovating teachers’ professional learning through digital technologies. In OECD Education Working Papers; OECD Publishing: Paris, France, 2020; Volume 237.
2. Mason, L.; Boldrin, A.; Ariasi, N. Searching the Web to learn about a controversial topic: Are students epistemically active? Instr. Sci. 2010, 38, 607–633.
3. Yu, R.; Gadiraju, U.; Holtz, P.; Rokicki, M.; Kemkes, P.; Dietze, S. Predicting User Knowledge Gain in Informational Search Sessions. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 75–84.
4. Laurillard, D. Rethinking University Teaching: A Conversational Framework for the Effective Use of Learning Technologies; Routledge: Milton Park, UK; Abingdon, UK; Oxfordshire, UK, 2002.
5. Spooner-Lane, R.; Tangen, D.; Mercer, K.L.; Hepple, E.; Carrington, S. Building intercultural competence one “patch” at a time. Educ. Res. Int. 2013, 2013, 394829.
6. Aljawarneh, S.A. Reviewing and exploring innovative ubiquitous learning tools in higher education. J. Comput. High. Educ. 2020, 32, 57–73.
7. Biggs, J.; Tang, C. Teaching for Quality Learning at University, 4th ed.; McGraw-Hill: New York, NY, USA, 2011; ISBN 9780335242757.
8. Ribeiro, L.R.C. The Pros and Cons of Problem-Based Learning from the Teacher’s Standpoint. J. Univ. Teach. Learn. Pract. 2011, 8, 34–51.
9. UNESCO. Education: From Disruption to Recovery. Available online: https://en.unesco.org/covid19/educationresponse (accessed on 21 March 2023).
10. Mulenga, E.M.; Marbán, J.M. Is COVID-19 the Gateway for Digital Learning in Mathematics Education? Contemp. Educ. Technol. 2020, 12, ep269.
11. Zawacki-Richter, O. The current state and impact of COVID-19 on digital higher education in Germany. Hum. Behav. Emerg. Technol. 2021, 3, 218–226.
12. Kuzmanovic, M.; Martic, M.; Popovic, M.; Savic, G. A new approach to evaluation of university teaching considering heterogeneity of students’ preferences. Int. J. High. Educ. Res. 2013, 66, 153–171.
13. Woods, R.; Baker, J.D.; Hopper, D. Hybrid structures: Faculty use and perception of web-based courseware as a supplement to face-to-face instruction. Internet High. Educ. 2004, 7, 281–297.
14. Bond, M.; Marín, V.I.; Dolch, C.; Bedenlier, S.; Zawacki-Richter, O. Digital transformation in German higher education: Student and teacher perceptions and usage of digital media. Int. J. Educ. Technol. High. Educ. 2018, 15, 1–20.
15. Breen, P. Developing Educators for The Digital Age: A Framework for Capturing Knowledge in Action; University of Westminster Press: London, UK, 2018.
16. Fitchett, P.G.; McCarthy, C.J.; Lambert, R.G.; Boyle, L. An examination of US first-year teachers’ risk for occupational stress: Associations with professional preparation and occupational health. Teach. Teach. 2018, 24, 99–118.
17. Keller-Schneider, M.; Zhong, H.F.; Yeung, A.S. Competence and challenges in professional development: Teacher perceptions at different stages of career. J. Educ. Teach. 2020, 46, 36–54.
18. Cooper, J.M. Classroom Teaching Skills; Wadsworth Cengage Learning: Belmont, CA, USA, 2010; ISBN-13: 978-1-133-60276-7.
19. Kersting, N.B.; Sutton, T.; Kalinec-Craig, C.; Stoehr, K.J.; Heshmati, S.; Lozano, G.; Stigler, J.W. Further exploration of the classroom video analysis (CVA) instrument as a measure of usable knowledge for teaching mathematics: Taking a knowledge system perspective. ZDM 2016, 48, 97–109.
20. Oser, F.; Salzmann, P.; Heinzer, S. Measuring the competence-quality of vocational teachers: An advocatory approach. Empir. Res. Vocat. Educ. Train. 2009, 1, 65–83.
21. DeLoach, S.B.; Perry-Sizemore, E.; Borg, M.O. Creating quality undergraduate research programs in economics: How, when, where (and why). Am. Econ. 2012, 57, 96–110.
22. Branch, J.W.; Burgos, D.; Serna, M.D.A.; Ortega, G.P. Digital Transformation in Higher Education Institutions: Between Myth and Reality. In Radical Solutions and eLearning. Lecture Notes in Educational Technology; Burgos, D., Ed.; Springer: Berlin/Heidelberg, Germany, 2020; pp. 41–50.
23. Ugur, N.G. Digitalization in higher education: A qualitative approach. Int. J. Technol. Educ. Sci. 2020, 4, 18–25.
24. Falloon, G. From digital literacy to digital competence: The teacher digital competency (TDC) framework. Educ. Tech. Res. Dev. 2020, 68, 2449–2472.
25. van Lier, L. Action-based Teaching, Autonomy and Identity. Innov. Lang. Learn. Teach. 2007, 1, 46–65.
26. Piccardo, E.; North, B. The Action-Oriented Approach: A Dynamic Vision of Language Education; Multilingual Matters: Bristol, UK, 2019.
27. Weinert, F.E. Concept of competence: A conceptual clarification. In Defining and Selecting Key Competencies; Rychen, D.S., Salganik, L.H., Eds.; Hogrefe & Huber: Bern, Switzerland, 2001; pp. 45–65.
28. Hakim, A. Contribution of Competence Teacher (Pedagogical, Personality, Professional Competence and Social) On the Performance of Learning. Int. J. Eng. Sci. 2015, 4, 1–12.
29. König, J. Teachers’ Pedagogical Beliefs. Definition and Operationalisation, Connections to Knowledge and Performance, Development and Change; Waxmann: Münster, Germany, 2012.
30. Blömeke, S.; Gustafsson, J.-E.; Shavelson, R.J. Beyond dichotomies: Competence viewed as a continuum. Z. Für Psychol. 2015, 223, 3–13.
31. Gregoire, M. Is it a challenge or a threat? A dual-process model of teachers’ cognition and appraisal processes during conceptual change. Educ. Psychol. Rev. 2003, 15, 147–179.
32. Shulman, L.S. Those who understand: Knowledge growth in teaching. Educ. Res. 1986, 15, 4–14.
33. Koehler, M.J.; Mishra, P. What is technological pedagogical content knowledge? Contemp. Issues Technol. Teach. Educ. 2009, 9, 60–70.
34. Chai, C.; Koh, J.; Tsai, C.-C. A Review of Technological Pedagogical Content Knowledge. J. Educ. Technol. Soc. 2013, 16, 31–51.
35. Voss, T.; Kunter, M.; Baumert, J. Assessing Teacher Candidates’ General Pedagogical/Psychological Knowledge: Test Construction and Validation. J. Educ. Psychol. 2011, 103, 952–969.
36. Heinze, A.; Dreher, A.; Lindmeier, A.; Niemand, C. Akademisches versus schulbezogenes Fachwissen—Ein differenzierteres Modell des fachspezifischen Professionswissens von angehenden Mathematiklehrkräften der Sekundarstufe [Academic versus school-based professional knowledge—A more sophisticated model of the subject-specific professional knowledge of prospective secondary mathematics teachers]. Z. Für Erzieh. 2016, 19, 329–349.
37. Applegate, J.L. Adaptive communication in educational contexts: A study of teachers’ communicative strategies. Commun. Educ. 2009, 29, 158–170.
38. De Corte, E. Constructive, Self-Regulated, Situated, and Collaborative Learning: An Approach for the Acquisition of Adaptive Competence. J. Educ. 2020, 192, 33–47.
39. Morris-Rothschild, B.K.; Brassard, M.K. Teachers’ conflict management styles: The role of attachment styles and classroom management efficacy. J. Sch. Psychol. 2006, 44, 105–121.
40. Brühwiler, C.; Vogt, F. Adaptive teaching competency. Effects on quality of instruction and learning outcomes. J. Educ. Res. 2020, 1, 119–142.
41. Vogt, F.; Rogalla, M. Developing adaptive teaching competency through coaching. Teach. Teach. Educ. 2009, 25, 1051–1060.
42. Bell, S.R. Power, territory, and interstate conflict. Confl. Manag. Peace Sci. 2017, 34, 160–175.
43. Morine-Dershimer, G.; Kent, T. The complex nature and sources of teachers’ pedagogical knowledge. In Examining Pedagogical Content Knowledge; Gess-Newsome, J., Lederman, N.G., Eds.; Springer: Berlin/Heidelberg, Germany, 1999; pp. 21–50.
44. Schipper, T.; Goei, S.L.; de Vries, S.; van Veen, K. Professional growth in adaptive teaching competence as a result of Lesson Study. Teach. Teach. Educ. 2017, 68, 289–303.
45. Huang, K.; Lubin, I.A.; Ge, X. Situated learning in an educational technology course for pre-service teachers. Teach. Teach. Educ. 2011, 27, 1200–1212.
46. Renkl, A. Toward an instructionally oriented theory of example-based learning. Cogn. Sci. 2014, 38, 1–37.
47. Catalano, A. The effect of a situated learning environment in a distance education information literacy course. J. Acad. Librariansh. 2015, 41, 653–659.
48. Nuthall, G. Relating classroom teaching to student learning: A critical analysis of why research has failed to bridge the theory-practice gap. Harv. Educ. Rev. 2004, 74, 273–306.
49. Allen, J.M. Valuing practice over theory: How beginning teachers re-orient their practice in the transition from the university to the workplace. Teach. Teach. Educ. 2009, 25, 647–654.
50. Korthagen, F.A.; Loughran, J.J.; Russell, T. Developing fundamental principles for teacher education programs and practices. Teach. Teach. Educ. 2006, 22, 1020–1041.
51. Dicke, T.; Elling, J.; Schmeck, A.; Leutner, D. Reducing reality shock: The effects of classroom management skills training on beginning teachers. Teach. Teach. Educ. 2015, 48, 1–12.
52. Walstad, W.B.; Salemi, M.K. Results from a Faculty Development Program in Teaching Economics. J. Econ. Educ. 2011, 42, 283–293.
53. Codreanu, E.; Sommerhoff, D.; Huber, S.; Ufer, S.; Seidel, T. Between authenticity and cognitive demand: Finding a balance in designing a video-based simulation in the context of mathematics teacher education. Teach. Teach. Educ. 2020, 95, 1–12.
54. Schaeper, H. Development of competencies and teaching–learning arrangements in higher education: Findings from Germany. Stud. High. Educ. 2009, 34, 677–697.
55. Cullen, R.; Harris, M.; Hill, R.R. The Learner-Centered Curriculum: Design and Implementation; John Wiley & Sons: Hoboken, NJ, USA, 2012; ISBN 978-1-118-17102-8.
56. Loyens, S.M.; Magda, J.; Rikers, R.M. Self-directed learning in problem-based learning and its relationships with self-regulated learning. Educ. Psychol. Rev. 2008, 20, 411–427.
57. Loeng, S. Self-directed learning: A core concept in adult education. Educ. Res. Int. 2020, 2020, 3816132.
58. Pourshafie, T.; Murray-Harvey, R. Facilitating problem-based learning in teacher education: Getting the challenge right. J. Educ. Teach. 2013, 39, 169–180.
59. Merrill, M.D. First principles of instruction. Educ. Technol. Res. Dev. 2002, 50, 43–49.
60. Seidel, T.; Stürmer, K. Modeling and Measuring the Structure of Professional Vision in Pre-Service Teachers. Am. Educ. Res. J. 2014, 51, 739–771.
61. van Es, E.A.; Sherin, M.G. Learning to Notice: Scaffolding New Teachers’ Interpretations of Classroom Interactions. J. Technol. Teach. Educ. 2002, 10, 571–596.
62. Ataizi, M. Situated Cognition. In Encyclopedia of the Sciences of Learning; Seel, N.M., Ed.; Springer Science & Business Media: Boston, MA, USA, 2012; pp. 3082–3084. ISBN 978-1-4419-1428-6.
63. Hirsch, S.E.; Kennedy, M.J.; Haines, S.J.; Thomas, C.N.; Alves, K.D. Improving preservice teachers’ knowledge and application of functional behavioral assessments using multimedia. Behav. Disord. 2015, 41, 38–50.
64. Kuhn, C.; Alonzo, A.C.; Zlatkin-Troitschanskaia, O. Evaluating the pedagogical content knowledge of pre- and in-service teachers of business and economics to ensure quality of classroom practice in vocational education and training. Empir. Res. Vocat. Educ. Train. 2016, 8, 1–18.
65. Alonzo, A.C.; Kobarg, M.; Seidel, T. Pedagogical content knowledge as reflected in teacher-student interactions: Analysis of two video cases. J. Res. Sci. Teach. 2012, 49, 1211–1239.
66. Pal, D.; Patra, S. University Students’ Perception of Video-Based Learning in Times of COVID-19: A TAM/TTF Perspective. Int. J. Hum. Comput. Interact. 2021, 37, 903–921.
67. Chen, G.; Chan, C.K.; Chan, K.K.; Clarke, S.N.; Resnick, L.B. Efficacy of video-based teacher professional development for increasing classroom discourse and student learning. J. Learn. Sci. 2020, 29, 642–680.
68. Gaudin, C.; Chalies, S. Video viewing in teacher education and professional development: A literature review. Educ. Res. Rev. 2015, 16, 41–76.
69. Almara’beh, H.; Amer, E.F.; Sulieman, A. The effectiveness of multimedia learning tools in education. Int. J. 2015, 5, 761–764.
70. Wadill, D. Action E-Learning: An Exploratory Case Study of Action Learning Applied Online. Hum. Resour. Dev. Int. 2006, 9, 157–171.
71. Brückner, S.; Saas, H.; Reichert-Schlax, J.; Zlatkin-Troitschanskaia, O.; Kuhn, C. Digitale Medienpakete zur Förderung handlungsnaher Unterrichtskompetenzen [Digital media packages for the promotion of action-oriented teaching skills]. Bwp@ Berufs-und Wirtsch.–Online 2021, 40, 1–25.
72. Dadvand, B.; Behzadpoor, F. Pedagogical knowledge in English language teaching: A lifelong-learning, complex-system perspective. Lond. Rev. Educ. 2020, 18, 107–126.
73. Brown, D.F. The Significance of Congruent Communication in Effective Classroom Management. Clear. House A. J. Educ. Strateg. Issues Ideas 2005, 79, 12–15.
74. Pecore, J.L. Beyond beliefs: Teachers adapting problem-based learning to preexisting systems of practice. Interdiscip. J. Probl. Based Learn. 2013, 7, 7–33.
75. Richards, K. ‘Being the teacher’: Identity and classroom conversation. Appl. Linguist. 2006, 27, 51–77.
76. Zimmerman, D.H. Discoursal identities and social identities. In Identities in Talk; Antaki, C., Widdicombe, S., Eds.; Sage: Thousand Oaks, CA, USA, 1998; pp. 87–106.
77. Lindner, K.-T.; Nusser, L.; Gehrer, K.; Schwab, S. Differentiation and Grouping Practices as a Response to Heterogeneity–Teachers’ Implementation of Inclusive Teaching Approaches in Regular, Inclusive and Special Classrooms. Front. Psychol. 2021, 12, 1–16.
78. Gibbons, P. Scaffolding Language, Scaffolding Learning: Teaching Second Language Learners in the Mainstream Classroom; Heinemann: Portsmouth, NH, USA, 2002.
79. Hammond, J.; Gibbons, P. What is scaffolding. Teach. Voices 2005, 8, 8–16.
80. Müller, A. Profession und Sprache: Die Sicht der (Zweit-)Spracherwerbsforschung [Profession and language: The view of (second) language acquisition research]. In Kindheit und Profession—Konturen und Befunde eines Forschungsfeldes [Childhood and Profession—Contours and Findings of a Field of Research]; Betz, T., Cloos, P., Eds.; Beltz Juventa: Weinheim, Germany, 2014; pp. 66–83.
81. Hopp, H.; Thoma, D.; Tracy, R. Sprachförderkompetenz pädagogischer Fachkräfte [Language promotion competence of pedagogical staff]. Z. Für Erzieh. 2010, 13, 609–629.
82. Eberhardt, A.; Witte, A. Professional Development of Foreign Language Teachers: The Example of the COMENIUS Project Schule im Wandel (School Undergoing Change). Teanga 2016, 24, 34–43.
83. Okoli, A.C. Relating Communication Competence to Teaching Effectiveness: Implication for Teacher Education. J. Educ. Pract. 2017, 8, 150–154.
84. Kuh, G.D.; Kinzie, J.; Buckley, J.A.; Bridges, B.K.; Hayek, J.C. What Matters to Student Success: A Review of the Literature; National Postsecondary Education Cooperative: Washington, DC, USA, 2006.
85. Gardner, M.M.; Hickmott, J.; Ludvik, M.J.B. Demonstrating Student Success: A Practical Guide to Outcomes-Based Assessment of Learning and Development in Student Affairs; Stylus Publishing, LLC: Sterling, VA, USA, 2012.
86. Aristovnik, A.; Keržič, D.; Tomaževič, N.; Umek, L. Demographic determinants of usefulness of e-learning tools among students of public administration. Interact. Technol. Smart Educ. 2016, 13, 289–304.
87. Turner, W.D.; Solis, O.J.; Kincade, D.H. Differentiating instruction for large classes in higher education. Int. J. Teach. Learn. High. Educ. 2017, 29, 490–500.
88. Leiner, D.J. SoSci Survey, Version 3.1.06. Computer Software. 2019. Available online: https://www.soscisurvey.de (accessed on 5 May 2023).
89. Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 1999, 6, 1–55.
90. Marsh, H.W.; Hau, K.T.; Wen, Z. In search of golden rules: Comment on hypothesis-testing approaches to setting cutoff values for fit indexes and dangers in overgeneralizing Hu and Bentler’s (1999) findings. Struct. Equ. Model. 2004, 11, 320–341.
91. Stata Corp. Stata Statistical Software: Release 16; StataCorp LLC: College Station, TX, USA, 2019.
92. Ledermüller, K.; Fallmann, I. Predicting learning success in online learning environments: Self-regulated learning, prior knowledge and repetition. Z. Für Hochsch. 2017, 12, 79–99.
93. Ezziane, Z. Information Technology Literacy: Implications on Learning and Teaching. Educ. Technol. Soc. 2007, 10, 175–191.
94. Educational Testing Services [ETS]. Digital Transformation. A Framework for ICT Literacy. Available online: https://www.ets.org/Media/Research/pdf/ICTREPORT.pdf (accessed on 21 March 2023).
95. Anthonysamy, L.; Choo, A. Investigating Self-Regulated Learning Strategies for Digital Learning Relevancy. Malays. J. Learn. Instr. 2021, 18, 29–64.
96. Jhangiani, R.S. The Philosophy and Practices That Are Revolutionizing Education and Science; Ubiquity Press: London, UK, 2017.

| Variable | Complete Sample, n = 63 | Group A (M), nA = 16 | Group B (C), nB = 47 | Differences between the Groups * |
|---|---|---|---|---|
| Gender, male, n/N (%) | 11/63 (17.46%) | 7/16 (43.75%) | 4/47 (8.51%) | 0.001 |
| Age, M ± SD | 23.98 (±4.039) | 25.00 (±3.033) | 23.63 (±4.307) | 0.246 |
| UEQ grade, M ± SD | 2.36 (±0.624) | 2.18 (±0.529) | 2.43 (±0.647) | 0.179 |
| Study phase, master’s program, n/N (%) | 32/63 (50.79%) | 16/16 (100%) | 16/47 (34.04%) | <0.001 |
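The group differences on the categorical rows of the sample table can be approximately reproduced from the reported counts. The following is a minimal sketch that assumes a Pearson chi-square test without continuity correction; the test actually used is not stated in the material shown here, and the function name `chi_square_2x2` is hypothetical. Under that assumption, the gender row yields a p-value that rounds to the reported 0.001.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Assumption: this is an illustrative test choice, not confirmed by the source.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Gender row: 7 of 16 male in Group A, 4 of 47 male in Group B.
chi2, p = chi_square_2x2(7, 16 - 7, 4, 47 - 4)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

The same call with the study-phase counts (16 of 16 versus 16 of 47) gives a p-value below 0.001, in line with the last row of the table.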

| Content | Measurement Point | Group A (M) | Group B (C) | Differences between Groups, p |
|---|---|---|---|---|
| Score PK on conversation, M ± SD | t1 | 3.67 (± 1.291) | 3.05 (± 1.290) | 0.191 |
| | t2 | 4.00 (± 1.464) | 3.65 (± 1.325) | 0.396 |
| Differences between time points, p | p | 0.371 | 0.011 | |
| Score PK on multilingualism, M ± SD | t1 | 6.38 (± 2.705) | 9.20 (± 1.651) | <0.001 |
| | t2 | 9.19 (± 2.007) | 9.59 (± 1.317) | 0.369 |
| Differences between time points, p | p | 0.002 | 0.130 | |

| Variables | Answer Options | Group A (M), n/N (%) | Group A (M), Difference Score L, M ± SD | Group B (C), n/N (%) | Group B (C), Difference Score C, M ± SD |
|---|---|---|---|---|---|
| Frequency | At least several times a week | 0/16 (0.00%) | - | 12/47 (25.53%) | 1.36 ± 1.433 |
| | Up to once a week | 16/16 (100.00%) | 2.81 ± 3.167 | 35/47 (74.47%) | 0.34 ± 1.428 |
| Duration | <90 min | 8/16 (50.00%) | 1.13 ± 2.748 | 19/47 (40.43%) | 0.61 ± 1.650 |
| | >90–120 min | 8/16 (50.00%) | 4.50 ± 2.726 | 28/47 (59.57%) | 0.60 ± 1.384 |
| Learning form | Alone | 16/16 (100.00%) | 2.81 ± 3.167 | 45/47 (97.83%) | 0.55 ± 1.452 |
| | With others | 0/16 (0%) | - | 1/47 (2.17%) | 3.00 ± 0.000 |

| Variable | Groups | M ± SD | Differences between Groups, p |
|---|---|---|---|
| Comparison of duration groups | | | |
| Frequency: overall | <90 min | 0.61 (±1.649) | 0.981 |
| | >90 min | 0.60 (±1.384) | |
| Frequency: at least several times a week | <90 min | 2.67 (±1.528) | 0.059 |
| | >90 min | 0.88 (±1.126) | |
| Frequency: up to once a week | <90 min | 0.20 (±1.373) | 0.601 |
| | >90 min | 0.47 (±1.505) | |
| Comparison of frequency groups | | | |
| Duration: overall | At least several times a week | 1.36 (±1.433) | 0.048 |
| | Up to once a week | 0.34 (±1.428) | |
| Duration: <90 min | At least several times a week | 2.67 (±1.528) | 0.013 |
| | Up to once a week | 0.20 (±1.373) | |
| Duration: >90 min | At least several times a week | 0.88 (±1.126) | 0.507 |
| | Up to once a week | 0.47 (±1.505) | |

F(3, 39) = 2.88, p = 0.048, R² = 0.181

| Usage Behavior | ß | B | SE | p |
|---|---|---|---|---|
| Frequency of use, at least several times a week | 0.349 | 1.170 | 0.497 | 0.024 |
| Duration, >90 min | 0.103 | −0.306 | 0.442 | 0.492 |
| Social form, with others | 0.297 | 2.890 | 1.430 | 0.050 * |
| Constant | | 1.586 | 0.529 | 0.005 |
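The model-fit line of this regression can be cross-checked with the standard identity that links the overall F statistic of a linear regression to its R²: F = (R²/k) / ((1 − R²)/df_resid), where k is the number of predictors. The sketch below applies it to the reported values; the function name `f_from_r2` is hypothetical, and small deviations are expected because R² is reported to three decimals only.

```python
def f_from_r2(r2: float, k: int, df_resid: int) -> float:
    """Overall F statistic of a linear regression, recovered from R-squared.

    k is the number of predictors (numerator df); df_resid is the residual df.
    """
    return (r2 / k) / ((1.0 - r2) / df_resid)


# Usage-behavior model: F(3, 39) = 2.88 with R² = 0.181 as reported.
f_usage = f_from_r2(0.181, k=3, df_resid=39)

# Subjective-evaluation model: F(15, 27) = 2.08 with R² = 0.536 as reported.
f_ratings = f_from_r2(0.536, k=15, df_resid=27)

print(f"usage behavior: F = {f_usage:.2f}")
print(f"subjective evaluations: F = {f_ratings:.2f}")
```

Both recomputed values agree with the reported F statistics up to rounding, which suggests the degrees of freedom and R² values in the two regression tables are internally consistent.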

| Variable | Item | Answer Options | Group A (M), M ± SD | Group B (C), M ± SD |
|---|---|---|---|---|
| Overall evaluation of the tool | | | | |
| Overall satisfaction | Overall satisfaction with tool. | 1 (fully satisfied) to 5 (not satisfied/deficient) | 2.00 ± 0.756 | 2.48 ± 1.151 |
| Usefulness | I found the tool useful for my (future) work as a teacher. | 1 (not at all) to 4 (full agreement) | 3.07 ± 0.704 | 3.23 ± 0.859 |
| Innovative addition | The tool is an innovative addition to the curriculum. | 1 (not at all) to 4 (full agreement) | 3.20 ± 0.775 | 2.95 ± 0.914 |
| Relevance of content | The contents are highly relevant for me. | 1 (not at all) to 4 (full agreement) | 2.87 ± 0.640 | 3.18 ± 0.691 |
| Recommendation | Would you recommend the tool to other students? | 1 (not at all) to 4 (full agreement) | 3.13 ± 0.640 | 3.07 ± 0.950 |
| Added value | Overall, the tool was very well done and represents an added value in my studies. | 1 (not at all) to 4 (full agreement) | 3.13 ± 0.516 | 3.20 ± 0.851 |
| Motivational facets | | | | |
| Arousing interest | The tool aroused my interest and attention. | 1 (not at all) to 4 (full agreement) | 2.93 ± 0.704 | 3.02 ± 0.876 |
| Enjoyable | I enjoyed working with the tool. | 1 (not at all) to 4 (full agreement) | 3.13 ± 0.834 | 2.73 ± 0.872 |
| Working with the tool | | | | |
| Self-regulated learning | The tool enables self-regulated learning. | 1 (not at all) to 4 (full agreement) | 3.73 ± 0.458 | 3.59 ± 0.542 |
| Clarity of goals | The goals of the tool became clear to me. | 1 (not at all) to 4 (full agreement) | 3.33 ± 0.724 | 3.36 ± 0.718 |
| Intuitive usage | The tool enabled simple intuitive use. | 1 (not at all) to 4 (full agreement) | 3.33 ± 0.724 | 3.39 ± 0.655 |
| Impact of the tool | | | | |
| Concrete actions learned | I have learned not only facts but also concrete actions. | 1 (not at all) to 4 (full agreement) | 2.53 ± 0.640 | 3.09 ± 0.802 |
| Expanded knowledge | My professional knowledge was expanded. | 1 (not at all) to 4 (full agreement) | 3.20 ± 0.561 | 3.32 ± 0.829 |
| Successful performance | I consider my performance successful. | 1 (not at all) to 4 (full agreement) | 2.80 ± 0.561 | 2.89 ± 0.538 |
| Learning progress | How would you rate your learning progress with the tool? | 1 (very high) to 5 (very low) | 2.67 ± 0.617 | 2.30 ± 0.765 |

F(15, 27) = 2.08, p = 0.047, R² = 0.536

| Subjective Evaluations | ß | B | SE | p |
|---|---|---|---|---|
| Overall satisfaction *, not satisfied/deficient | −0.445 | −0.579 | 0.320 | 0.081 |
| Usefulness, fully agree | −0.505 | −0.870 | 0.644 | 0.188 |
| Innovative addition, fully agree | −0.048 | −0.078 | 0.503 | 0.878 |
| Relevance of content, fully agree | −0.041 | −0.086 | 0.376 | 0.820 |
| Recommendation, fully agree | −1.134 | −1.77 | 0.513 | 0.002 |
| Added value, fully agree | −0.380 | −0.655 | 0.628 | 0.306 |
| Arousing interest, fully agree | 0.057 | 0.097 | 0.435 | 0.826 |
| Enjoyable, fully agree | 0.270 | 0.454 | 0.418 | 0.287 |
| Self-regulated learning, fully agree | −0.252 | −0.685 | 0.450 | 0.181 |
| Clarity of goals, fully agree | 0.247 | 0.508 | 0.457 | 0.275 |
| Intuitive usage, fully agree | 0.214 | 0.483 | 0.575 | 0.408 |
| Concrete actions learned, fully agree | −0.917 | −1.703 | 0.458 | 0.001 |
| Expanded knowledge, fully agree | 0.982 | 1.750 | 0.601 | 0.007 |
| Successful performance, fully agree | 0.047 | 0.136 | 0.463 | 0.771 |
| Learning progress *, very low | −0.783 | −1.503 | 0.550 | 0.011 |
| Constant | | 12.916 | 3.964 | 0.003 |
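The p-values in the coefficient rows can be related to B and SE through the usual t ratio, t = B/SE, evaluated against a t distribution with the residual degrees of freedom. The sketch below assumes ordinary two-sided t tests with 27 residual degrees of freedom (taken from F(15, 27)); this is a plausibility check under that assumption, not a description of the authors' software output. The helper names `t_pdf` and `two_sided_p` are hypothetical, and the integration uses only the Python standard library.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value via Simpson's rule on the density between 0 and |t|."""
    upper = abs(t)
    if upper == 0.0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3)


DF_RESIDUAL = 27  # assumption: residual df taken from F(15, 27)

# (B, SE) pairs copied from three rows of the table above.
coefficients = {
    "Recommendation": (-1.77, 0.513),
    "Concrete actions learned": (-1.703, 0.458),
    "Expanded knowledge": (1.750, 0.601),
}

p_values = {name: two_sided_p(b / se, DF_RESIDUAL)
            for name, (b, se) in coefficients.items()}
for name, p_value in p_values.items():
    print(f"{name}: p = {p_value:.3f}")
```

Numerical integration is used here only to keep the example free of third-party dependencies; a statistics library would give the same values directly.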

| Items | Open Responses |
|---|---|
| This was particularly well done. | |
| This should be revised/Potential for improvement. | |

| Instructor No. | Positive Feedback | Critical Feedback |
|---|---|---|
| 1 | [The digital media tool] was very well prepared and informative, the benefit given. Especially the explanatory videos [...] were well received for self-education. The examples in the videos were also very appropriate. | The reflection prompt at the end was rated by students as problematic for self-regulated learning, many [students] wished for discussions in the group and a more detailed application of the contents. |
| 2 | The participation of the students in the processing of the digital media tools can be assessed as quite positive. The tool is overall helpful. [The digital media tools are] a very nice opportunity for interested and committed students further engage with the contents as a self-learning offer. Videos [...] also address interesting interactions in the classroom. | The tools could be extended, or complementary tools could be developed on other relevant aspects. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reichert-Schlax, J.; Zlatkin-Troitschanskaia, O.; Frank, K.; Brückner, S.; Schneider, M.; Müller, A. Development and Evaluation of Digital Learning Tools Promoting Applicable Knowledge in Economics and German Teacher Education. Educ. Sci. 2023, 13, 481. https://doi.org/10.3390/educsci13050481
