Abstract

This paper presents a bibliometric systematic review on model-based learning analytics (MbLA), which enable a coupling between teachers and intelligent systems to support the learning process. This is achieved through systems that make their models of student learning and instruction transparent to teachers. We use bibliometric network analysis and topic modelling to explore the synergies between the related research groups and the main research topics considered in the 42 reviewed papers. Network analysis depicts an early-stage community, made up of several research groups, mainly from the fields of learning analytics and intelligent tutoring systems, which have had little explicit and implicit collaboration but do share a common core literature. The resulting topics from the topic modelling can be grouped into those related to teacher practices, such as awareness and reflection, learning orchestration, or assessment frameworks, and those related to the technology used to open up the models to teachers, such as dashboards or adaptive learning architectures. Moreover, results show that research in MbLA has taken an individualistic approach to student learning and instruction, neglecting social aspects and elements of collaborative learning. To advance research in MbLA, future research should focus on hybrid teacher–AI approaches that foster the partnership between teachers and technology to support the learning process, involve teachers in the development cycle from an early stage, and follow an interdisciplinary approach.

1. Introduction

The application of intelligent technologies (e.g., artificial intelligence, or AI) in domains where experts have to make professional decisions is currently an open research topic [1]. There is already some agreement that, instead of replacing humans, intelligent systems could be better used to enhance experts’ capabilities by automating parts of the tasks that they perform. In the context of Technology-Enhanced Learning (TEL), similar proposals are being advanced by research on “hybrid human–AI technologies” [2,3,4]. However, an open research question is how teachers and technology can work together in different learning environments to support student learning. While the traditional approach taken by intelligent tutoring systems (ITS) has been to focus on the technology that enables autonomous decisions, recent research in learning analytics (LA) has also tried to involve teachers in the decision loop, especially in cases where they are better suited to make decisions about students’ learning [2,5].

Nevertheless, a system-level perspective on how technology and teachers influence their respective decisions when supporting the learning process is still lacking. One way of modelling this teacher–technology interplay would be to focus on the knowledge used by each of them, as well as on how this knowledge is exchanged between teachers and technology when supporting student learning practices. This approach is similar to the way in which knowledge structures can mediate professional learning and knowledge creation in the workplace by supporting the practices of experts and novices, as well as their interactions [6]. Applying this idea to the dual context of teachers and technology, we could consider that there exists a tight coupling between teacher cognition (i.e., the teacher’s understanding of a student’s learning process, and the knowledge behind their instructional decisions) and the way in which the intelligent system operates (i.e., how it evaluates students’ learning and knowledge, and how it chooses possible learning trajectories). For instance, an ITS might implement a hierarchical model of interrelated knowledge elements to diagnose a student’s current knowledge and then adapt the tasks that the student will have to perform. It is conceivable that a system and a teacher can influence each other, especially if we consider that teachers also apply a similar logic when reasoning about teaching (e.g., by being guided by specific knowledge models). We refer to this situation, in which knowledge models are responsible for the coupling between teachers and intelligent learning systems, as model-based learning analytics (MbLA). Additionally, MbLA is an attempt to respond to recent calls for LA that is more firmly rooted in theory [7,8].
In this context, in addition to using theory to guide the analyses, MbLA would also require theory to be embedded into the LA solution as a computational model that enables autonomous decisions [9,10].

Realising MbLA is a highly complex and interdisciplinary endeavour that requires tight collaboration across disciplinary boundaries. Learning scientists need to be involved to specify models of learning through which learning can be evidenced in the classroom. Computer and data scientists need to help make sense of the abundance of learning traces available in current digital systems. Experts on human–computer interaction (HCI) need to find ways to support human sensemaking of the results of the analyses. Finally, domain experts and educational practitioners need to be involved to validate the approaches. Managing this kind of interdisciplinarity is a general challenge in TEL [11], but it is even more of an issue when a tight coupling needs to be created between the teacher’s understanding of learning and the models used by intelligent systems. See [12] for an example of a coupling between an open model of a web tutoring system and teachers’ expertise about their students and the related learning activities. In our previous work, we conducted a systematic literature review on MbLA, guided by several qualitative analyses that aimed to identify the types of models that have been used, the contexts in which they have been used, and the benefits that their usage has had on teaching and learning practices [13]. The results of this previous research indicate that the lack of integrated interdisciplinary research may be a major roadblock to the realisation of MbLA. Specifically, we identified only 42 papers that used a model of teaching and learning and that also provided the results of the automated analyses to teachers.
Excluded papers (especially the ones that were manually excluded in the last round of the filtering process) were either lacking a model of teaching and learning (indicating a possible lack of collaboration or alignment with work from learning sciences) or did not include teachers in the loop (who are the main domain experts in this context).

The current paper aims to further explore the nature and dynamics of the research community that has produced MbLA solutions. Our main goals are to uncover community-level elements that have enabled the implementation of successful MbLA solutions, and to identify constraints or research gaps that could open future research avenues. A community of practice (such as the research community under investigation) consists of a social structure and a common knowledge (or epistemic) base. Accordingly, we conducted several bibliometric analyses to understand the social structure of the community, and topic-based analyses of the 42 previously identified papers to explore the main themes studied in MbLA. Concretely, we examined the structure of the research community through bibliometric network analyses of co-authorship, co-citations, cited literature, and cited publishing venues. To discover research topics that might be relevant to the implementation of MbLA (and that we might have understated or overlooked in our previous analyses, owing both to their qualitative nature and to the different research questions that guided them), we considered both explicit and implicit topics found in the 42 papers. We identified explicit topics through the network of the main keywords chosen by the authors of the papers. Implicit topics were identified automatically using Latent Dirichlet Allocation, a machine learning algorithm for topic modelling. Finally, we used Epistemic Network Analysis [7] to visualise the relations between the identified latent topics and the models of teaching and learning used in the systems presented in the papers under review (discussed in detail in Section 2). Based on the results of these analyses, we propose a set of guidelines that could help the LA community move forward in addressing hybrid settings in which teachers collaborate with intelligent systems in education.

The rest of the paper is structured as follows: Section 2 presents the related work; Section 3 describes the methodology followed for the bibliometric systematic review, the guiding research questions, and the data analysis methods; Section 4 presents the main findings; Section 5 discusses the implications from the results, gives an outlook of future research, and considers the limitations of this study; Section 6 concludes the paper.

2. Related Literature Reviews

Several literature reviews exist that have focused on the use of intelligent learning systems by teachers. These reviews can be grouped into LA- and ITS-related. LA-related reviews have mainly focused on the interactions of teachers and dashboards. For instance, a review on monitoring for awareness and reflection in blended learning concluded that there had been little focus on teacher practices, as well as on explaining the theoretical models behind the LA solutions [14]. In a similar fashion, a review on LA dashboards found that while teachers have been the main targeted stakeholders, there has been little focus on understanding the underlying models to explain students’ learning behaviour [15]. A more recent review on teachers’ interaction with AI applications concluded that while AI has the potential to help teachers to make instructional and assessment decisions, current implementations usually employ data from a single source, which might be insufficient to model the complexity of the learning process or to guide effective learning interventions [16].

ITS-related reviews have mainly focused on opening learning models up to teachers. For instance, existing reviews on open learner models call for multi-dimensional models of learners that can provide learning experiences tailored to the needs of individual learners [17,18], as well as for further research exploring in depth how teachers use the models [19]. A systematic review on open learner models and LA dashboards, which focused on the synergies between the two fields, concluded that learners who had access to their models demonstrated increased agency over their data, as well as awareness of their knowledge and needs [20]. These results emphasise the importance of enabling teachers and students to provide their own complementary input to LA processes.

In conclusion, MbLA solutions in which knowledge models enable an interplay between teachers and intelligent systems are in their infancy. The feedback provided to teachers is mainly based on mirroring information, often without a strong theoretical framing, and thus offers little actionable insight. At the same time, there is a need for solutions that enable second-order adaptation, in which LA systems partially offload teachers’ scaffolding and decision making by helping teachers to understand the learning processes of individual students, which could lead to better instructional decisions [5]. In our previous work, we aimed to contribute to research on the interaction between teachers and the models of teaching and learning used by intelligent systems by conducting a systematic review on MbLA. Concretely, we focused on the types of models that have been used, the contexts in which they have been used, and the benefits that their usage has had for teaching and learning practices [13]. We found that the models used to enable a teacher–system coupling could be clustered into five groups.

- Domain models that provide a model of the domain of instruction (e.g., computational thinking), the relation between the learning tasks, and the knowledge needed to solve each task. See, for instance, an example of a teacher dashboard that displays the correlation between student responses, questions, and course resources [21].

- Learner models that provide a representation of a student’s knowledge over time. Predictive systems, for instance, have widely been guided by learner models: Ref. [22] used machine learning guided by students’ course activity to identify students at risk of not submitting the next assignment.

- Instructional models that include rules on how to adapt the instruction based on the domain model and the current knowledge of a student. See [23] for an example of a system that supports the regulation of learning by providing cognitive and behavioural feedback to teachers and learners.

- Collaborative models that model how collaborative learning should be conducted (e.g., through a collaborative learning script that is computational and open to teachers, such as in [24]).

- Social models that include information about the social structure of a group of students (see, for instance, [25]).

Most of the 42 papers under review used a combination of domain and learner models, suggesting that instructional decisions were usually left to teachers [13]. In the current paper, we complement the previous qualitative analyses with quantitative analyses that explore the research community that has produced the MbLA solutions, by focusing on its structure and the main research topics that have been considered. We will further make use of the models discussed above in our analyses when modelling the associations between them and the latent topics discovered in the papers under review.

3. Methodology

In this section, we first describe the bibliometric systematic review process. Next, we present the research questions that derive from our goal of understanding the dynamics inside the community that has implemented MbLA. This is followed by the data analysis methods used to respond to them.

3.1. Bibliometric Systematic Review Process

We followed the guidelines for conducting a systematic review proposed by Kitchenham and Charters [26]. Concretely, we started by defining several search queries that included keywords related to the following:

- Research fields inquiring about learning models designed to be transparent to teachers (i.e., open learner models and LA);

- Socio-technical contexts where MbLA have been applied (e.g., intelligent tutors or adaptive learning);

- The targeted stakeholders (e.g., teachers, instructors).

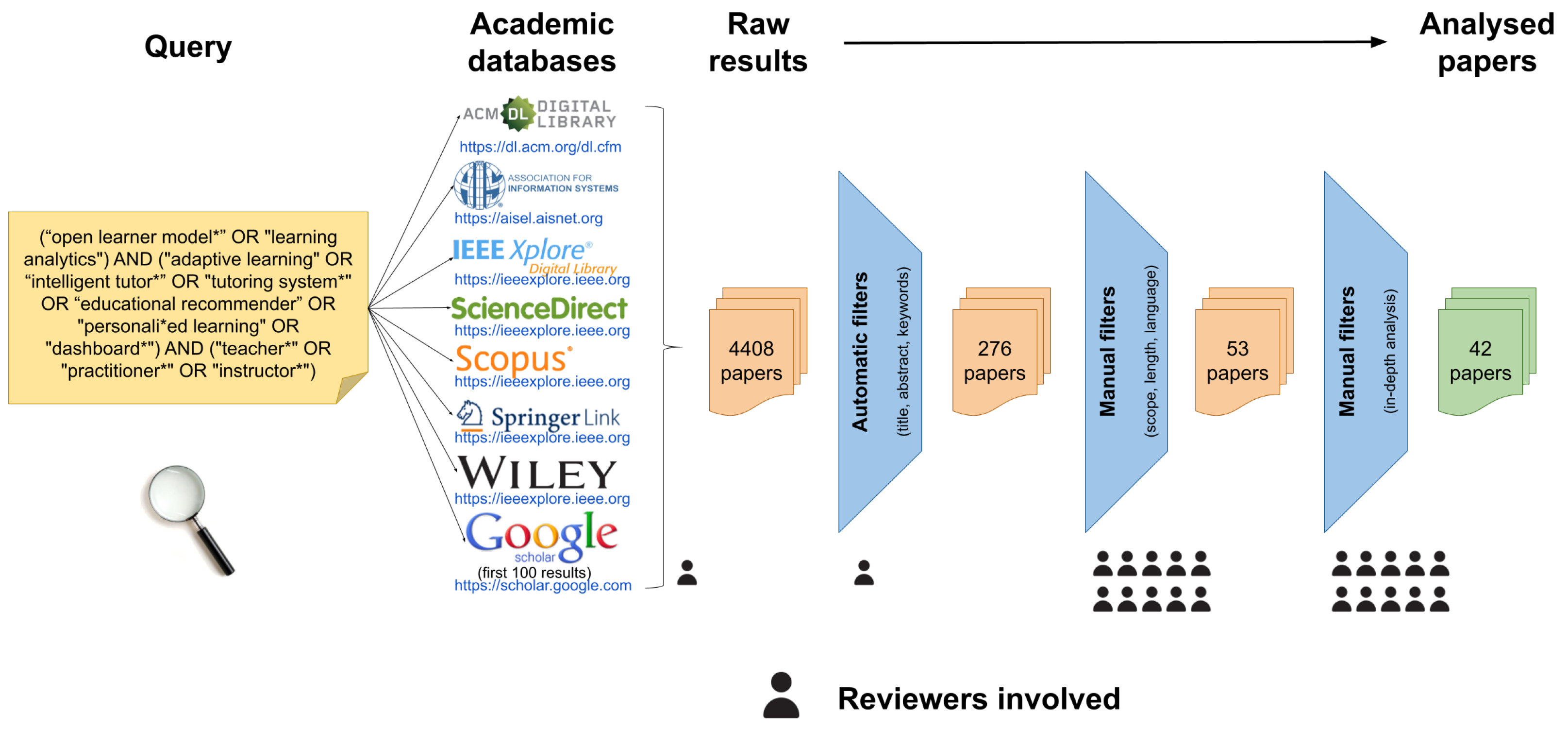

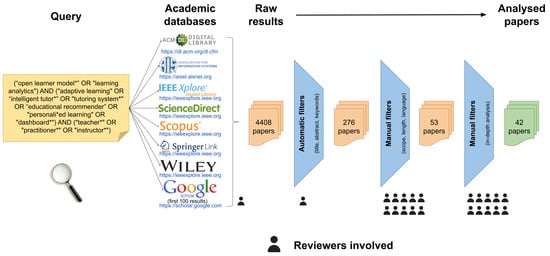

Next, we applied the queries (on 21 December 2020) to seven databases, all pertinent to research in TEL, as shown in Figure 1. To identify relevant grey literature (e.g., project deliverables), we also included the first 100 results from Google Scholar. We compared the output of each query in terms of the total number of papers returned and whether the results included papers that we had previously identified as relevant for this research. The selected query, which returned a total of 4408 papers, was (“learning analytics” OR “open learner model*”) AND (“intelligent tutor*” OR “adaptive learning” OR “tutoring system*” OR “educational recommender” OR “personali*ed learning” OR “dashboard*”) AND (“teacher*” OR “practitioner*” OR “instructor*”).
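To make the Boolean structure of the selected query explicit, the following Python sketch evaluates it against a piece of text (for instance, a record’s title, abstract, and keywords). It is illustrative only: the helper names are hypothetical, and treating `*` as “zero or more word characters” is an assumption, since each database implements truncation and phrase matching in its own way.

```python
import re

# The query as an AND of OR-groups; each string is one quoted phrase.
QUERY = [
    ["learning analytics", "open learner model*"],
    ["intelligent tutor*", "adaptive learning", "tutoring system*",
     "educational recommender", "personali*ed learning", "dashboard*"],
    ["teacher*", "practitioner*", "instructor*"],
]


def term_to_regex(term):
    """Compile a phrase with '*' wildcards into a case-insensitive regex.

    Assumption: '*' matches zero or more word characters (e.g., 'personali*ed'
    matches both 'personalised' and 'personalized').
    """
    parts = [re.escape(part) for part in term.split("*")]
    return re.compile(r"\b" + r"\w*".join(parts) + r"\b", re.IGNORECASE)


COMPILED = [[term_to_regex(term) for term in group] for group in QUERY]


def matches_query(text):
    """True if every OR-group has at least one phrase occurring in `text`."""
    return all(any(rx.search(text) for rx in group) for group in COMPILED)
```

Applied to the concatenated title, abstract, and keywords of each record, a predicate of this kind corresponds to the field-restricted filtering step described in the following paragraph.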

Figure 1.

Stages of the bibliometric systematic review.

We then automatically removed duplicates from the resulting pool of papers, obtaining 3590 unique documents. To identify papers in which our keywords played a pertinent role, and to standardise the process (as different databases apply different filtering criteria), we automatically filtered the papers by applying the search query only to their main parts (i.e., title, abstract, and keywords), obtaining 276 papers. Next, we manually screened the remaining papers to identify cases where models were designed to be transparent to teachers. Ten reviewers (all researchers in TEL) manually checked the papers in two stages. In the first stage, two randomly assigned reviewers separately checked the abstract (and, if needed, the full text) of each paper to determine whether it was written in English and within the scope of the review, resulting in 53 papers. In the second stage, after a second random assignment, two reviewers separately checked the entire content of each paper to determine whether it met the following criteria:

- It includes empirical work or uses case scenarios involving a model designed to be transparent to teachers;

- It presents an implemented and pedagogically grounded technological solution;

- Teachers are part of the target group of the proposed system/intervention in the paper;

- It describes the transparent model;

- The technological solution includes a computational model (i.e., autonomously used by a system), which is also interpretable by teachers (i.e., uses a meaningful pedagogical/psychological model of student learning).

Cases of disagreement during the manual screening phases were discussed among all the reviewers until reaching an agreement. The entire process of automatic and manual filtering resulted in a pool of 42 selected papers and is illustrated in Figure 1.
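The automatic deduplication step (4408 to 3590 records) can be sketched as follows. The paper does not state which fields were used to detect duplicates, so matching on DOI or on a normalised title is an assumption, and the record fields `doi` and `title` are hypothetical.

```python
import re
import unicodedata


def normalise_title(title):
    """Lower-case, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records):
    """Keep the first record for each DOI or normalised title (hypothetical fields)."""
    seen_dois, seen_titles, unique = set(), set(), []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalise_title(rec.get("title") or "")
        # A record counts as a duplicate if either key has been seen before.
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        unique.append(rec)
    return unique
```

Checking both keys matters because the same paper may carry a DOI in one database and lack it in another.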

3.2. Data Analysis Methods

The methodology of the in-depth manual screening of the papers and the corresponding results are reported in [13]. In the current paper, we report on the following research questions (RQs) that derive from our goals (discussed in Section 1):

- RQ1. What are the synergies of the research community that has inquired about MbLA?

- RQ2. What are the main research topics that have been considered?

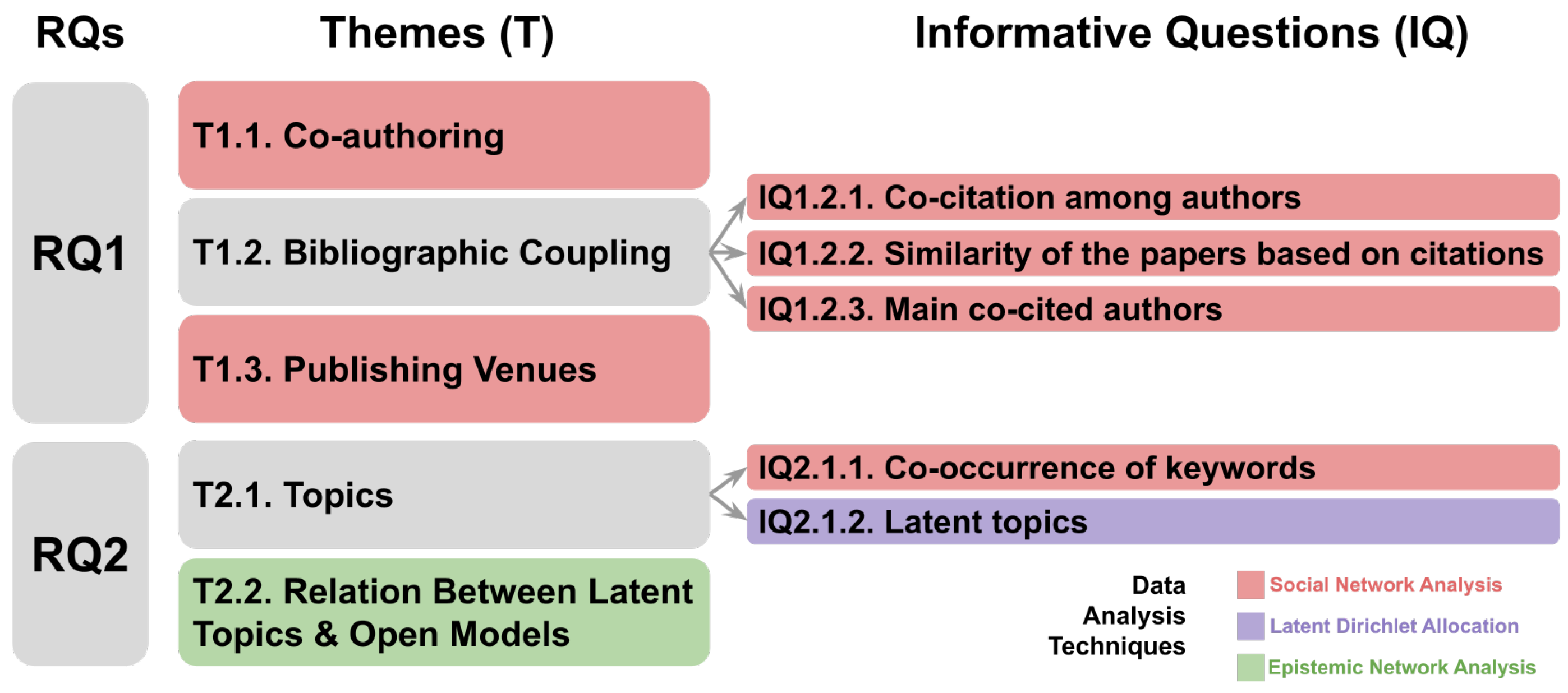

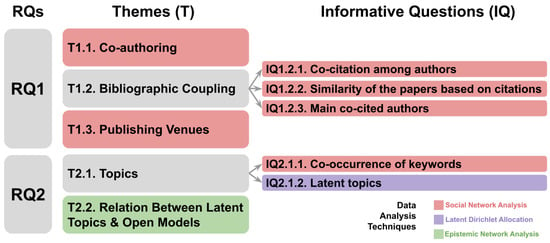

We further divided each RQ into themes (T) and, when necessary, into informative questions (IQ), as shown in Figure 2. To respond to each T/IQ, we conducted the following analyses.

Figure 2.

Research questions (RQ), themes (T), informative questions (IQ), and data analysis techniques (represented by the colours).

- Social Network Analyses (SNA; in red in Figure 2), which are methods used to map and analyse the connections between individuals or groups in a given network [27]. We used them in RQ1 to examine the synergy between the authors of the papers under review in terms of (a) co-authoring (T1.1); (b) bibliographic coupling (T1.2), where we explored the network of co-citations among the authors of the papers under review in IQ1.2.1, the similarity of the papers based on the co-cited literature in IQ1.2.2, and the network of the main co-cited authors in IQ1.2.3; and (c) publishing venues (T1.3). We also used network analysis in RQ2 to identify explicit research topics through the (d) network of co-occurrence of keywords (IQ2.1.1). We used VOSviewer https://www.vosviewer.com (accessed on 1 April 2023) to create the network visualisations. As suggested by [27], we applied fractional counting to obtain a more accurate representation of the strength of the relationships between the elements being visualised (such as papers or authors). For instance, in the case of co-citations, this method weights the co-citation relationships between the papers by dividing the count of each citation by the total number of citations in the paper.

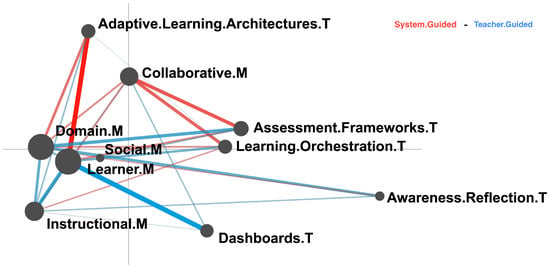

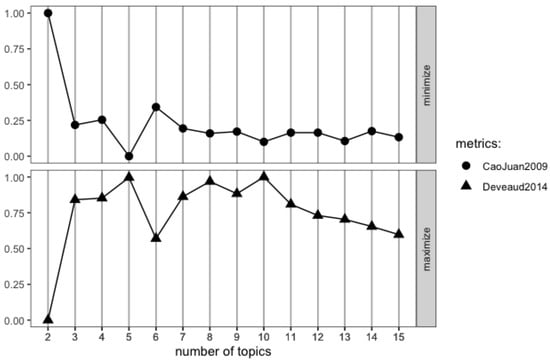

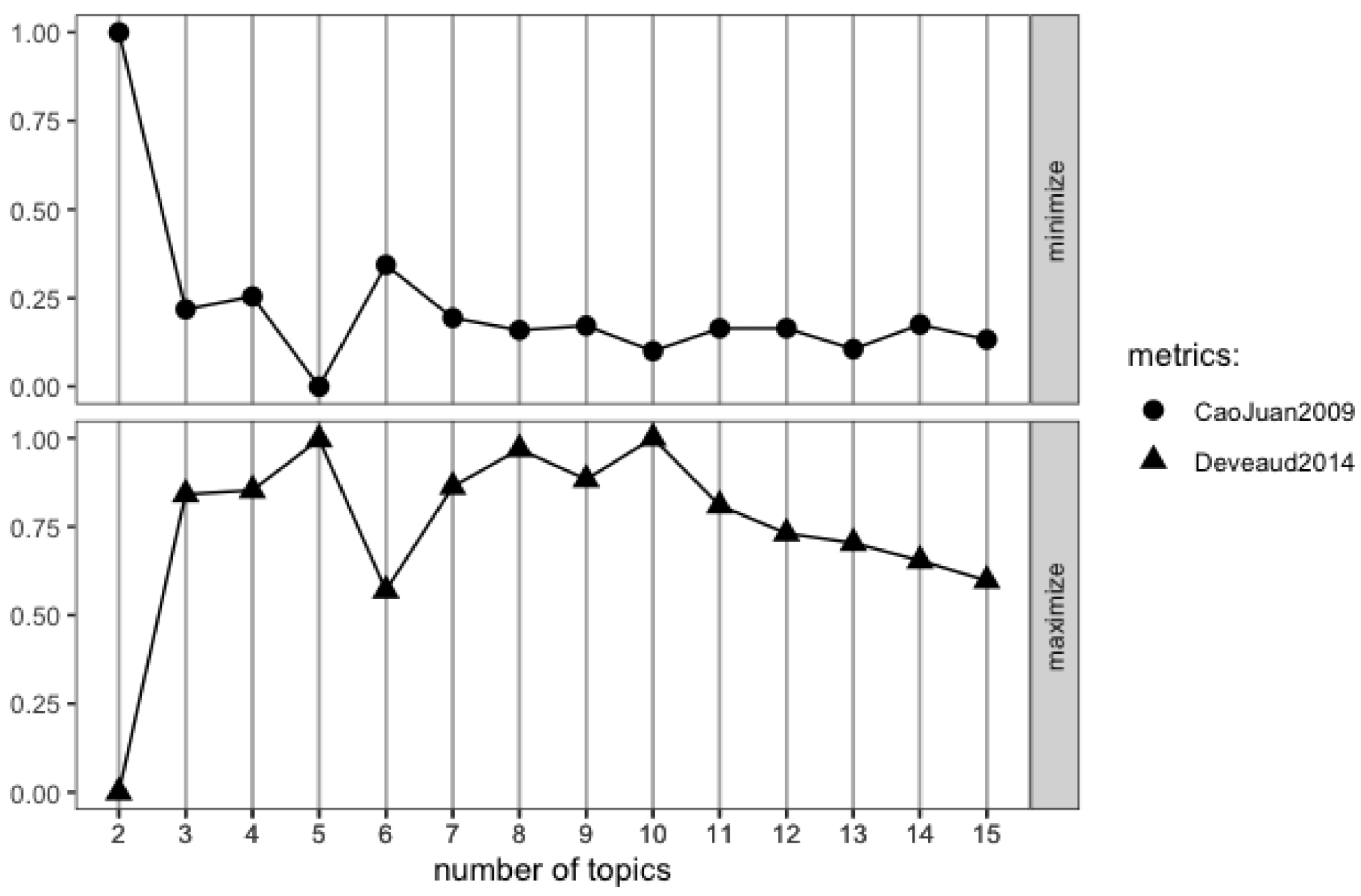

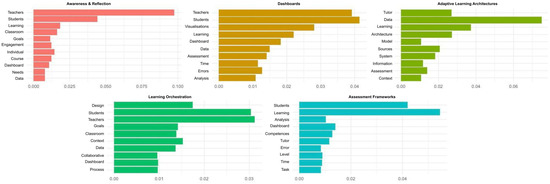

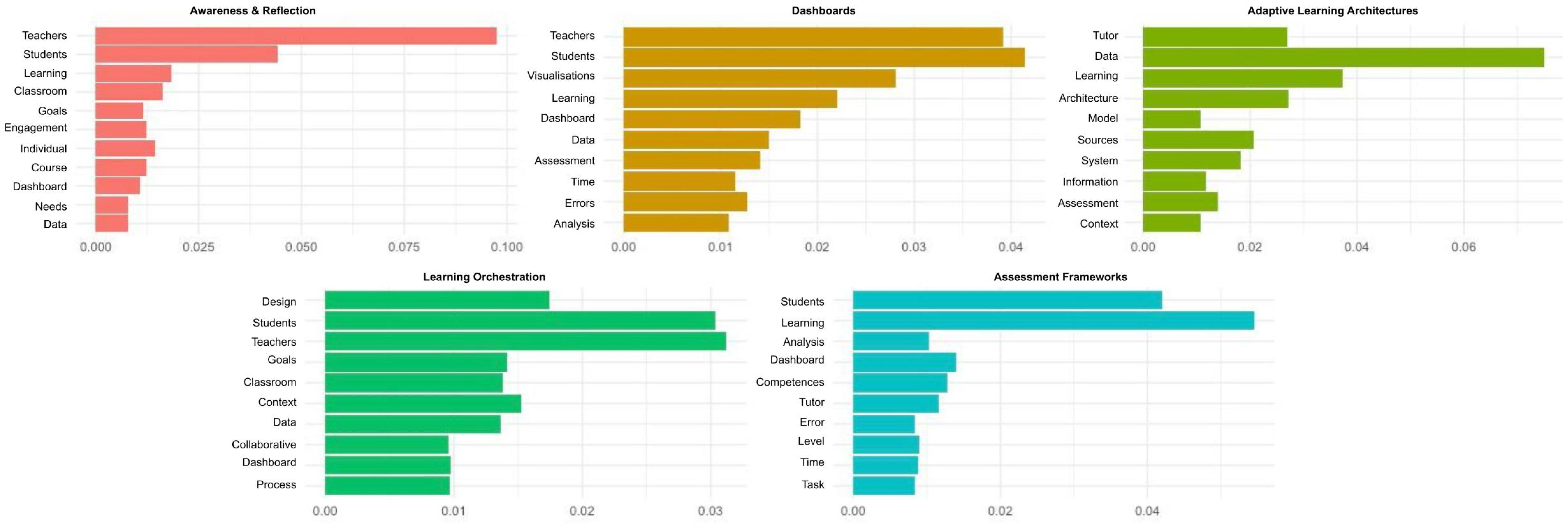

- Latent Dirichlet Allocation (LDA) (in purple in Figure 2), a generative statistical model used to discover the most important topics in our dataset of textual documents and to determine which documents belong to which topics. LDA assumes that each document is a mixture of a small number of topics, each of which is a probability distribution over the words of a predetermined vocabulary [28]. We used LDA in RQ2 to automatically explore the latent topics found in the 42 papers under review (IQ2.1.2). As input, we used the textual content of the papers, excluding the authors’ details and bibliographies. LDA requires the number of topics to be discovered to be specified as input. Since this number was not known beforehand, we used two metrics proposed by [29,30] to determine the optimal number of topics; applying these metrics indicated that five was the optimal number (see Figure A1 in Appendix B.1). To interpret the topics, two of the authors of this paper manually checked the most salient terms per topic (further discussed in Section 4, as well as in Figure A2 in Appendix B.2) and the content of the papers that were principally connected to each topic.

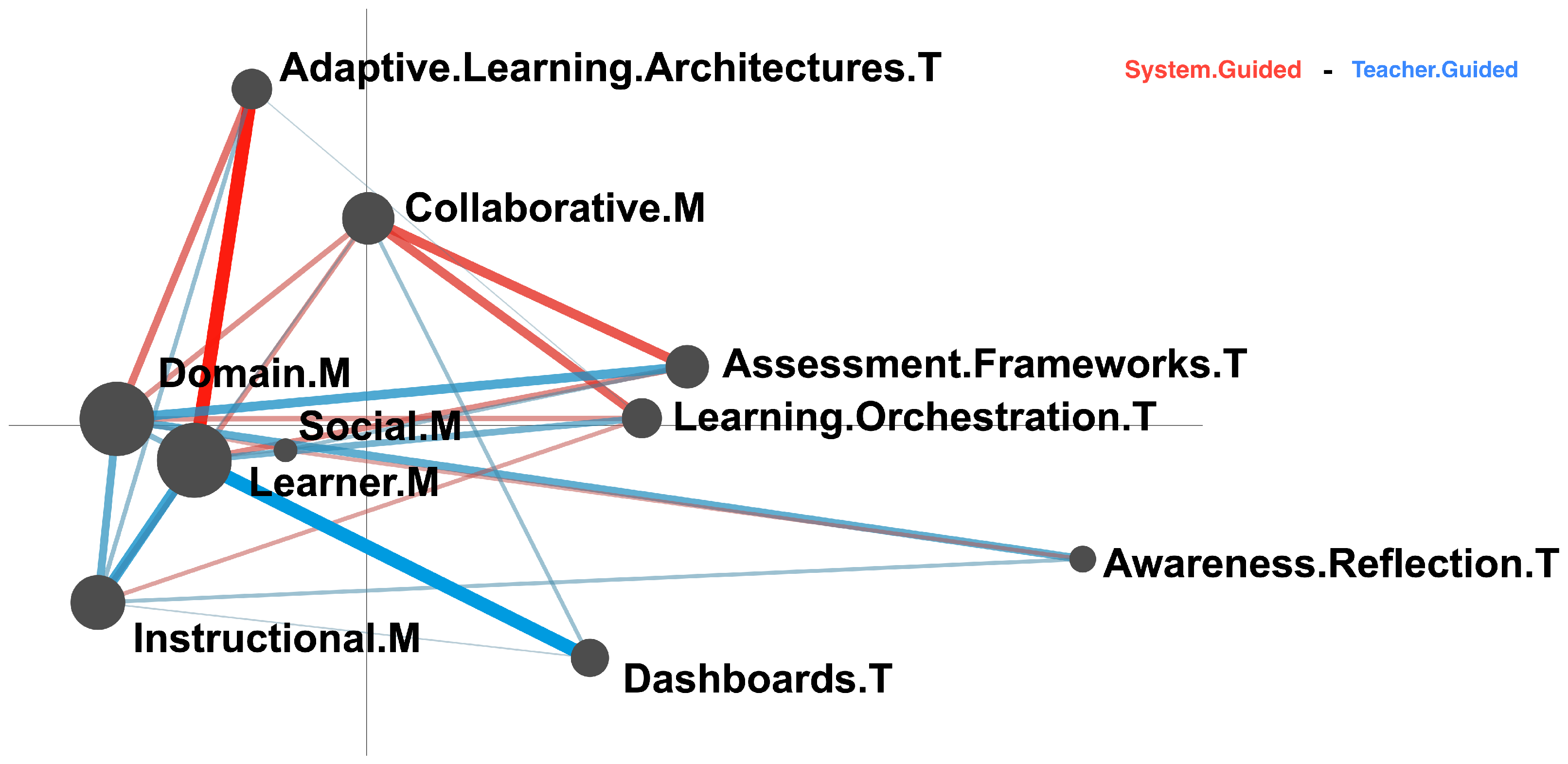

- Epistemic Network Analysis (ENA) (in green in Figure 2), usually applied to visually represent the connections between the codes of a coding scheme, mainly in discourse analysis but also beyond [7]. In our context, we considered each paper as a conversation between the authors and the research community. We used ENA in T2.2 to explore the connections between the topics identified using LDA (see Section 4 for a description of each topic) and the types of models used by the technological systems proposed in the 42 papers under review to generate the teacher feedback (discussed previously in Section 2). Furthermore, to better visualise the connections between models, topics, and the types of systems proposed in the papers, we created two ENA networks and subtracted one from the other: one was based on papers where the proposed intelligent system played the primary role in adapting the learning process, and the other on papers where teachers were the primary agents of adaptation (further discussed in Section 4). We implemented LDA and ENA in R, using the topicmodels package https://cran.r-project.org/web/packages/topicmodels/index.html (accessed on 1 April 2023). In the SNA and ENA figures, the sizes of the nodes and ties correspond, respectively, to the importance of a node (e.g., a paper) and the strength of a connection.
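For readers unfamiliar with LDA, the following Python sketch fits the model by collapsed Gibbs sampling on tokenised documents. It is not the authors’ pipeline (they used the R topicmodels package, which offers both VEM and Gibbs estimation), and the hyperparameters `alpha=0.1`, `beta=0.01`, the iteration count, and the function name are illustrative assumptions. It returns the per-document topic mixtures (θ) and per-topic word distributions (φ) on which the interpretation of topics relies.

```python
import random


def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """Fit LDA by collapsed Gibbs sampling.

    docs: list of token lists. Returns (theta, phi, vocab): theta[d][k] is the
    topic mixture of document d, phi[k][v] the probability of vocab[v] in topic k.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), n_topics
    ndk = [[0] * K for _ in docs]      # tokens of each topic in each document
    nkw = [[0] * V for _ in range(K)]  # occurrences of each word in each topic
    nk = [0] * K                       # total tokens assigned to each topic
    z = []                             # current topic of every token

    def update(d, k, wi, delta):
        ndk[d][k] += delta
        nkw[k][wi] += delta
        nk[k] += delta

    # Random initial assignment of topics to tokens.
    for d, doc in enumerate(docs):
        z.append([rng.randrange(K) for _ in doc])
        for w, k in zip(doc, z[d]):
            update(d, k, index[w], +1)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wi = index[w]
                update(d, z[d][i], wi, -1)  # remove the token's current assignment
                # Full conditional p(z_i = k | all other assignments), unnormalised.
                weights = [(ndk[d][k] + alpha) * (nkw[k][wi] + beta) / (nk[k] + V * beta)
                           for k in range(K)]
                z[d][i] = rng.choices(range(K), weights=weights)[0]
                update(d, z[d][i], wi, +1)

    theta = [[(ndk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    phi = [[(nkw[k][v] + beta) / (nk[k] + V * beta) for v in range(V)]
           for k in range(K)]
    return theta, phi, vocab
```

In a study like this one, `docs` would hold the tokenised full texts (without author details and bibliographies) and `n_topics` would be set to the value selected by the model-selection metrics, here five.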

4. Results

This section presents the results organised by RQ, T, and IQ. As a general overview, of the 42 papers under review, 16 (38%) were journal articles, 23 (55%) conference papers, and 3 (7%) workshop papers. Although we did not restrict the search in time, the resulting papers were published between 2004 and 2021. Table A1 in Appendix A includes the full list of papers under review.

4.1. The Synergies Inside the Research Community (RQ1)

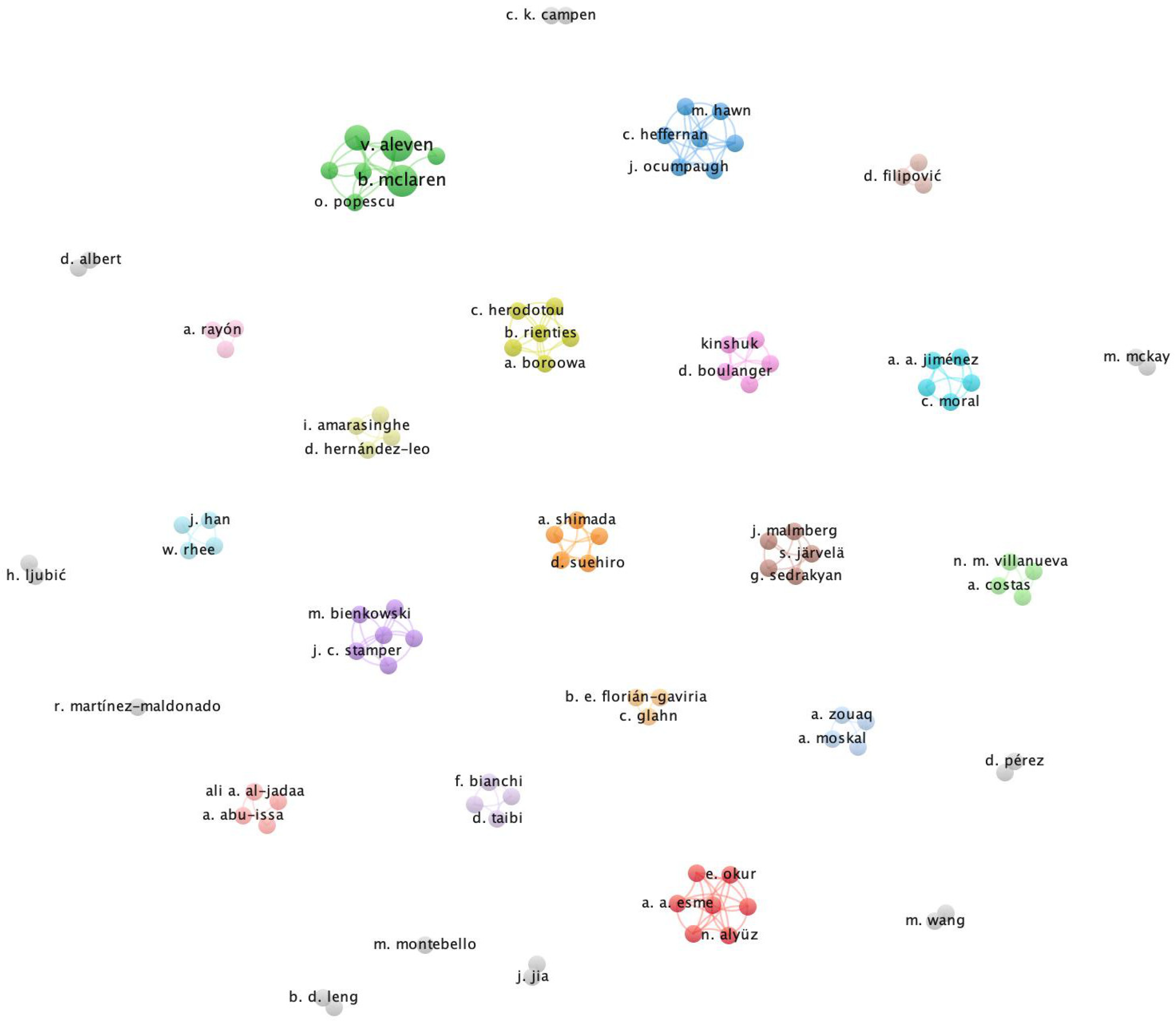

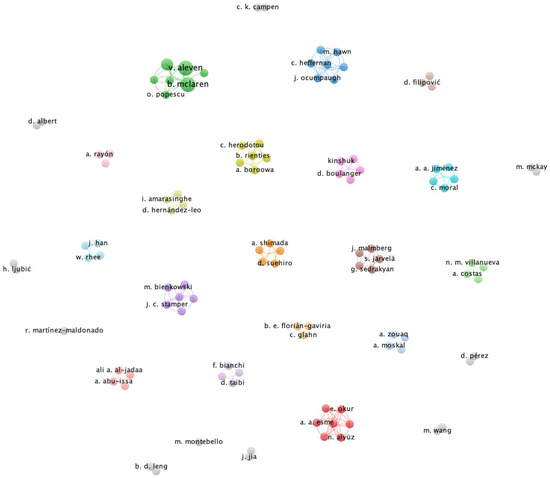

T1.1. Co-authorship. The co-authoring network shows several disconnected research groups that have independently inquired about MbLA (represented in different colours in Figure 3). We might deduce from this network that, although MbLA is in its infancy, interest in it is already broad in TEL; had the interest been narrower, the network would only have included a small number of interconnected authors.

Figure 3.

Network of the co-authorship of the papers under review.
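The observation that the co-authorship network splits into several disconnected research groups amounts to counting the connected components of the co-authorship graph. A minimal union-find sketch in Python, assuming each paper is given as a list of author names (the function name and the names in the test are hypothetical), could look as follows:

```python
from itertools import combinations


def coauthorship_groups(papers):
    """Connected components of the co-authorship graph, largest first.

    papers: iterable of author lists, one list per paper. Two authors are
    linked if they co-authored at least one paper.
    """
    parent = {}

    def find(a):
        parent.setdefault(a, a)
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving keeps trees shallow
            a = parent[a]
        return a

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for authors in papers:
        for a in authors:
            find(a)  # register every author, including sole authors
        for a, b in combinations(authors, 2):
            union(a, b)

    groups = {}
    for a in parent:
        groups.setdefault(find(a), set()).add(a)
    return sorted(groups.values(), key=len, reverse=True)
```

Each returned set corresponds to one colour-coded research group in a figure such as Figure 3; a single large set would instead indicate a tightly interconnected community.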

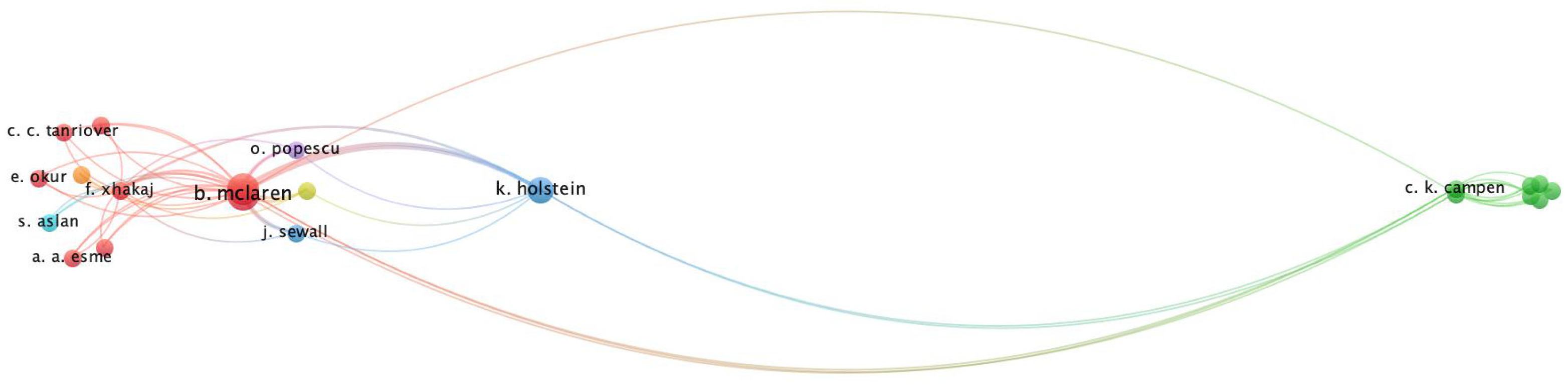

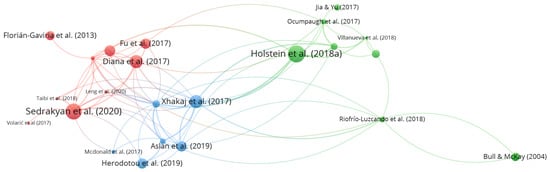

T1.2. Bibliographic coupling. Apart from explicit collaborations between the authors (i.e., co-authoring), we also looked at networks that provided insights into potential implicit alignments among the papers under review. For instance, regarding IQ1.2.1 (co-citation among the authors), Figure 4 shows that only a small number of authors have referenced each other, and they are usually the ones who have also co-authored together. This might suggest that the different research groups that have inquired about MbLA need to be more aware of each other’s work.

Figure 4.

Co-citation among authors of the papers under review, showing only the 21 authors that appear connected. The relatedness among the authors is specified based on the number of times they cite each other.

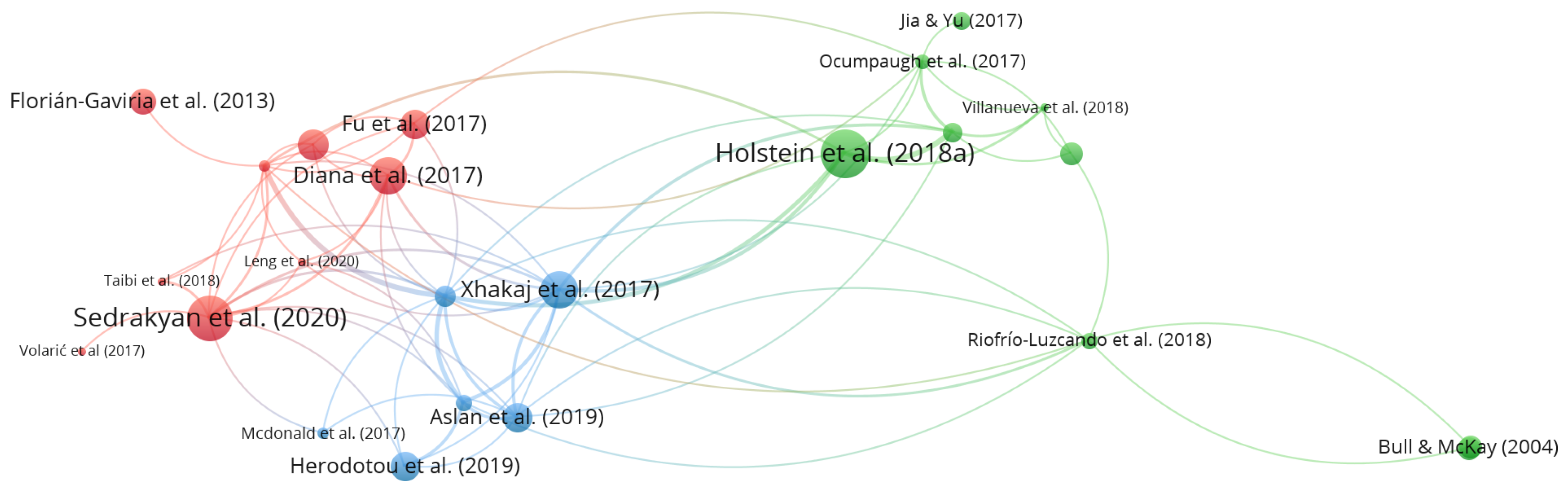

Concerning IQ1.2.2 (similarity of the papers based on the citations that they share), Figure 5 shows that most of the papers under review are connected, suggesting that they share at least a common core literature. Figure 5 also reveals three main clusters. On inspection, the green cluster contained mainly ITS papers, where the main role of adapting and supporting the learning process was played by the system (using a pedagogical/psychological model). In these papers, teachers were also involved, usually by having access to the logic used by the model, and in specific cases they could even override the decisions taken by the system (see, for instance, [31]). The red papers came mainly from the field of LA. In these cases, the analyses of students’ data were guided by a pedagogical/psychological model of student learning, but teachers had to decide how to intervene. The blue cluster in the middle corresponds to papers describing cases where teachers and intelligent systems work in tandem to support the learning process. As an example, Ref. [32] proposed a teacher dashboard to be used together with an ITS, aiming to help teachers to design and later orchestrate learning activities that involved the ITS.

Figure 5.

Similarity of the papers based on the body of citations that they share, showing 36 papers (out of 42) that appear connected. Colours represent papers mainly related to ITS (green), LA (red), or both of them (blue).
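Figure 5 links two papers when they cite common works, and “36 papers (out of 42) that appear connected” corresponds to the papers with at least one such link. The Python sketch below computes raw coupling strengths (numbers of shared references); the function name, the input format, and the `min_shared` threshold are assumptions, and the figure itself was produced with VOSviewer, which additionally applied the fractional counting described in Section 3.2.

```python
from itertools import combinations


def coupling_network(references, min_shared=1):
    """Bibliographic coupling: link two papers by the number of references they share.

    references: dict mapping paper id -> iterable of cited works.
    Returns (links, connected): links maps (p, q) -> shared count, and
    connected is the set of papers with at least one link.
    """
    refsets = {paper: set(refs) for paper, refs in references.items()}
    links = {}
    for p, q in combinations(sorted(refsets), 2):
        shared = len(refsets[p] & refsets[q])
        if shared >= min_shared:
            links[(p, q)] = shared
    connected = {paper for pair in links for paper in pair}
    return links, connected
```

Papers absent from `connected` are the isolated nodes that a figure such as Figure 5 would omit.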

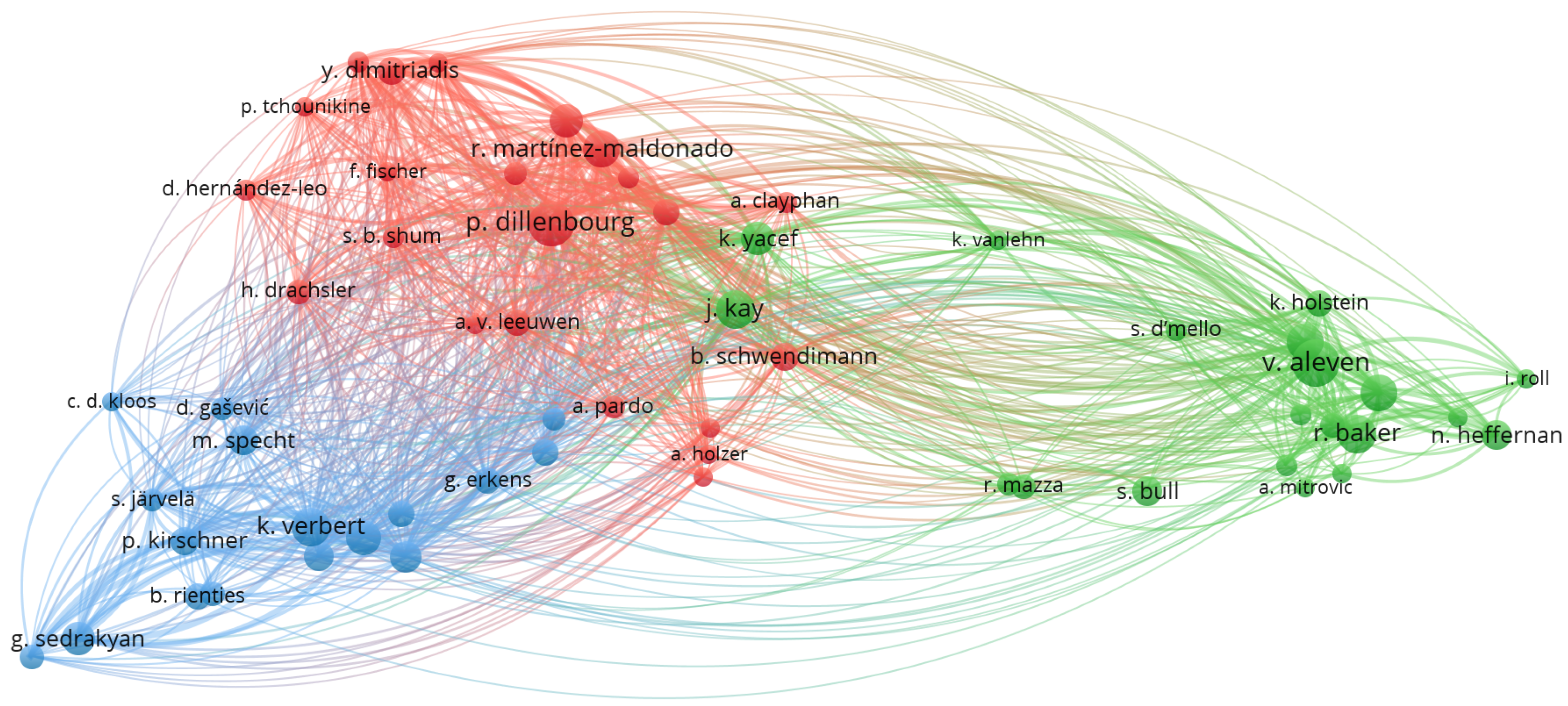

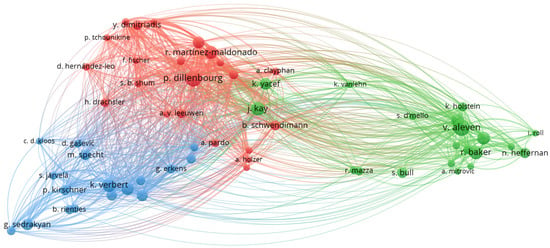

Regarding IQ1.2.3 (main co-cited authors), we further explored who the main authors in the identified common core literature are. While still interconnected, the cited authors can again be clustered into those mainly cited by LA papers (in red and blue in Figure 6) and those mainly cited by ITS papers (in green in Figure 6). The red cluster includes authors with a stronger focus on collaborative learning, while the blue cluster includes authors who have focused on more individualistic aspects (e.g., self-regulated or personalised learning).

Figure 6.

Main co-cited authors, as found in the references of the papers under review. With green, on the right side, authors connected mostly with ITS. With red and blue, on the left, authors connected mostly with LA research. The red cluster includes authors related to collaborative learning, while the blue cluster includes authors that have focused on individualistic aspects (e.g., self-regulated learning).
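Section 3.2 states that fractional counting was applied to the co-citation networks. One common formulation, which we understand to be the one documented for VOSviewer, gives each co-citation link produced by a citing paper with n distinct references a weight of 1/(n - 1), so that every cited item receives a total weight of 1 from each citing paper. The Python sketch below implements that formulation; it is an assumption about the exact normalisation, not a reproduction of the authors’ computation, and the function name is hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def fractional_cocitation(reference_lists):
    """Co-citation link weights under fractional counting.

    reference_lists: one list of cited items (e.g., cited authors) per citing paper.
    A paper citing n distinct items contributes 1/(n - 1) to each of its
    n(n - 1)/2 co-citation links, so each cited item gains a total weight of 1.
    """
    weights = defaultdict(float)
    for refs in reference_lists:
        items = sorted(set(refs))
        n = len(items)
        if n < 2:
            continue  # a single reference creates no co-citation link
        for a, b in combinations(items, 2):
            weights[(a, b)] += 1.0 / (n - 1)
    return dict(weights)
```

Compared with full counting (one unit per link), this prevents papers with very long reference lists from dominating the co-citation map.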

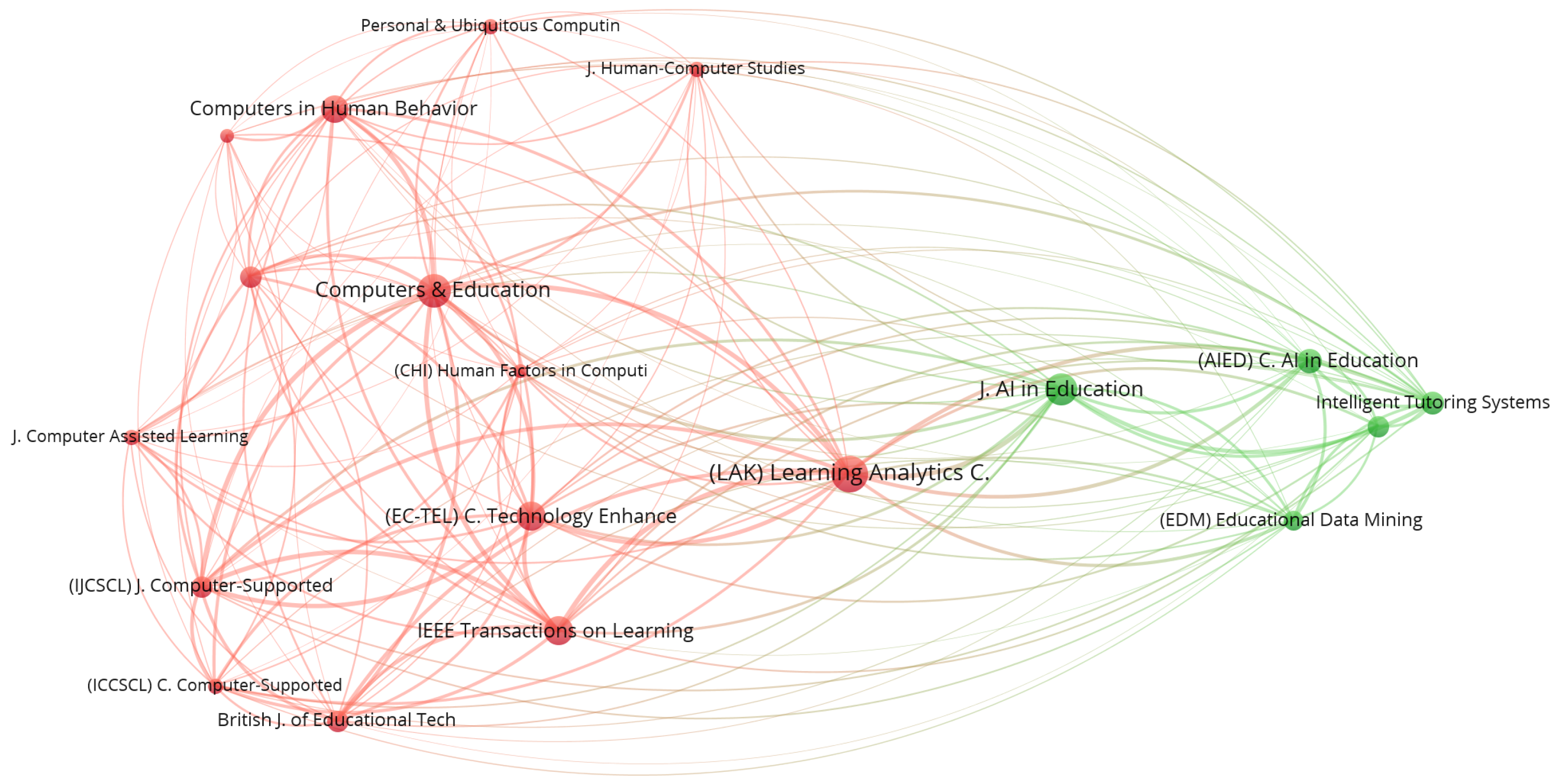

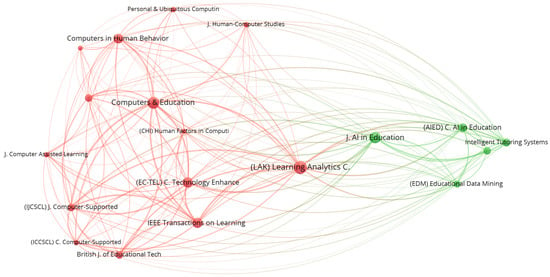

Publishing venues (T1.3). A similar picture appears when considering the main publishing venues that are co-cited in the papers under review. We can again notice two distinct clusters of venues, cited mainly by LA and ITS papers and represented, respectively, in red and green in Figure 7. While the extremes of this figure show mainly the community-oriented venues that have been targeted, there are also common channels where these communities meet, such as the Learning Analytics and Knowledge (LAK) conference.

Figure 7.

The main publishing venues co-cited by the papers under review. With green, venues targeted by the ITS community; with red, the ones targeted by LA.

4.2. Main Research Topics (RQ2)

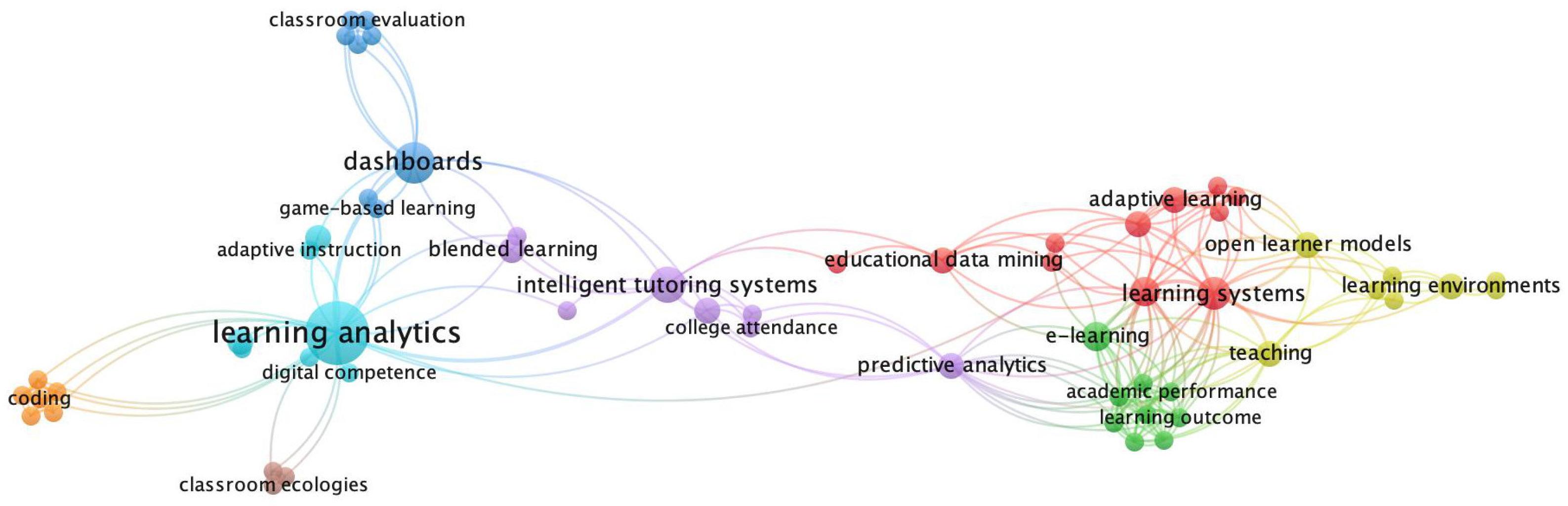

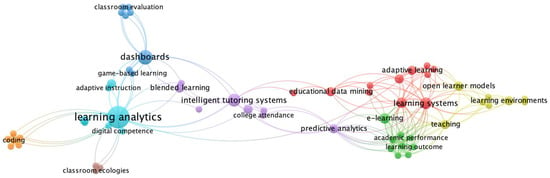

Topics (T2.1). We examined both explicit and implicit topics found in the papers under review, represented, respectively, by the keywords that the authors explicitly used in their papers and by the latent topics resulting from LDA. Regarding IQ2.1.1 (co-occurrence of keywords), apart from the obvious nodes (e.g., LA, ITS, adaptive learning), the emphasis seems to have been on several specific settings (e.g., game-based learning or blended learning), as well as on specific student practices and skills (e.g., digital competences, academic performance, college attendance, or coding). Dashboards constituted an important element of the technological context that made the models available to teachers (see Figure 8). Interestingly, few keywords explicitly referred to teacher practices, despite teachers being one of the main targeted stakeholders in the solutions proposed in the papers under review.

Figure 8.

Network of the most common keywords explicitly co-used in the papers under review.

Regarding IQ2.1.2 (latent topics found in the papers), Table 1 shows the five topics resulting from LDA, two illustrative examples from the papers that were mainly connected to each topic, the ten most salient terms per topic, and the number of papers per topic. See Figure A2 in Appendix B.2 and the online version of this table https://bit.ly/MbLALDA (accessed on 1 April 2023) for a visualisation of the most salient terms per topic and a short description of each paper connected to a topic.
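How the salient terms and per-topic paper counts can be derived from a fitted LDA model is sketched below in Python. Two assumptions are involved: terms are ranked by their plain probability within each topic (the online visualisation may instead use a saliency or relevance score), and each paper is counted under its highest-probability topic. The latter is consistent with the counts in Table 1 summing to 42 (9 + 6 + 10 + 8 + 9), but the paper does not state the assignment rule explicitly; the function names are hypothetical.

```python
def top_terms(phi, vocab, n=10):
    """The n highest-probability terms of each topic (phi[k][v] = p(vocab[v] | topic k))."""
    return [[vocab[v] for v in sorted(range(len(vocab)), key=lambda v: -row[v])[:n]]
            for row in phi]


def papers_per_topic(theta):
    """Count papers by their dominant topic (the argmax of each row of theta)."""
    counts = [0] * len(theta[0])
    for row in theta:
        counts[max(range(len(row)), key=row.__getitem__)] += 1
    return counts
```

Ties are broken by vocabulary order in `top_terms` and by the lowest topic index in `papers_per_topic`.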

In contrast to the network of keywords, here we can notice two topics connected to teacher practices, namely Learning Orchestration and Awareness and Reflection. These two topics, together with Dashboards, corroborate the results of our qualitative analyses, in which we found that dashboards were the main tool used to provide information from the open learner models to teachers, and that the main purpose of feeding data back to teachers has been to support pedagogical adaptations (e.g., by supporting teachers to provide personal guidance to students or to improve their learning designs) [13]. Interestingly, LDA also highlighted the importance of two topics that went unnoticed in our qualitative analyses. The topic Adaptive Learning Architectures represents the technical infrastructure that supported the MbLA solutions proposed in the papers. For instance, Ref. [33] presents an architecture that supports the assessment of competence-based learning in blended learning environments, intended to enable teachers to better adapt their learning designs to students’ needs. Another example is a system that integrated feedback from students, parents, and teachers into the open model of a specific student [12]. Apart from the technical infrastructure, MbLA need to be rooted in learning theory, which is represented by the topic Assessment Frameworks. The most common assessment frameworks were competence frameworks that guided the assessment of student knowledge. These were usually predefined and implemented in the design of the intelligent system (see the examples in Table 1), but there were also cases in which teachers could change them or implement their own assessment framework (see, for instance, [34]). See Table A1 in Appendix A for the full list of papers under review and their corresponding latent topics.

Table 1.

LDA topics with illustrative examples from the pool of papers under review, the ten most salient terms per topic, the number of papers per topic, and the number of papers that included each type of model.


| LDA Topic | Examples from the Papers | Ten Most Salient Terms | No. Papers | Models |
|---|---|---|---|---|
| Awareness and Reflection | Ref. [35] proposed a system that can capture the behaviour of students in the classroom when learning programming and that consequently helps teachers to improve their learning materials. Ref. [21] presented a system that analyses students’ written responses in order to provide insights into student conceptions and that can inform teacher actions. | teachers, students, learning, classroom, goals, engagement, individual, course, dashboard, needs. | 9 | |
| Dashboards | Ref. [36] presented a dashboard that provides adaptive support for collaborative argumentation in a face-to-face context. Ref. [23] introduced a dashboard that helps teachers to provide feedback to students on how to improve their learning behaviour and cognitive processes, guided by analytics informed by a process-oriented feedback model. | students, teachers, visualisations, learning, dashboard, data, assessment, time, errors, analysis. | 6 | |
| Adaptive Learning Architectures | Ref. [33] presents an architecture that supports the assessment of competence-based learning in blended learning environments, intended to be used to enable teachers to better adapt their learning designs to students’ needs. Ref. [12] proposed a system that integrated feedback from students, parents, and teachers into the open model of a specific student. | data, learning, tutor, architecture, sources, system, assessment, model, information, context. | 10 | |
| Learning Orchestration | Ref. [37] presented the design and evaluation of a teacher orchestration dashboard to enhance collaboration in the classroom. Ref. [32] explored how an ITS dashboard affects teachers’ decision making when orchestrating. | teachers, students, design, context, data, goals, classroom, dashboard, process, collaborative. | 8 | |
| Assessment Frameworks | Ref. [38] presented an implementation of a competency assessment framework in university settings, called SCALA. Ref. [39] proposed an assessment framework for serious games that informs teachers on the competences acquired by learners. | learning, students, dashboard, competences, tutor, analysis, level, time, errors, task. | 9 | |

T2.2. Relations between the latent topics and the models used to provide the feedback to teachers.

Table 1, under the column ‘Models’, shows the different types of models connected to each LDA topic and the number of papers in which each model was used. We also used ENA to visualise the relations between the latent topics mentioned in IQ2.1.2 and the five main types of models that we identified in our previous qualitative analyses. See Section 2 for an explanation of each model group and Table A1 in Appendix A for the full list of papers under review and their corresponding models. In the two-dimensional space of Figure 9, the horizontal and vertical axes appear to place the more technology-oriented topics (e.g., Adaptive Learning Architectures) and the more teacher-oriented ones (e.g., Awareness and Reflection) on opposite sides. The models, generally placed in the middle of the visualisation, can be thought of as the links between the two sides. Furthermore, as Figure 9 and Table 1 show, there appears to be a stronger connection between domain models (and, to a lesser extent, learner models) and topics such as Awareness and Reflection or Learning Orchestration. This suggests that teacher practices in the papers under review were mainly guided by models of the domain of instruction and, to a lesser extent, by models representing the knowledge of the students. In contrast, automatic decisions taken by the system (represented by topics such as Adaptive Learning Architectures or Assessment Frameworks) were mainly based on students’ current knowledge (i.e., learner models). Overall, MbLA have been mainly based on learner, domain, and instructional models. This is a known trend in intelligent systems [40], as these models capture the essence of instructional decisions; nevertheless, it is notable that there was no connection between the Adaptive Learning Architectures topic and social or collaborative models.
These results highlight that current MbLA systems have taken a rather individualistic view of student learning and instruction, while neglecting aspects that also make learning a social process. Indeed, Table 1 and Figure 9 show that collaborative and social models (which were underrepresented in our pool of papers) have weak ties regardless of the topic.

Figure 9.

ENA visualisation of the relations between the different models used to provide feedback to teachers and the latent topics. The visualisation results from subtracting two networks, based respectively on papers focused on teacher-guided and system-guided learning.

In our previous work, we classified the papers under review into two groups: those in which the proposed systems played the primary role in adapting the learning process, and those in which the teachers were the primary agents of adaptation (see Table A1 in Appendix A for more details). When building the ENA visualisation, we created a separate network for each of these two groups of papers and subtracted one from the other (shown in red and blue, respectively, in Figure 9). Unsurprisingly, the network of papers focused on system-guided learning displayed a higher level of interconnectedness involving the topics Adaptive Learning Architectures and Assessment Frameworks, as well as models such as domain and social models (evidenced by stronger red connections in the subtracted network in Figure 9). By contrast, the network of papers focused on teacher-guided learning showed stronger connections among most of the models and involving topics such as Dashboards or Awareness and Reflection (shown in blue in Figure 9).
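
The subtraction of the two group networks described above can be illustrated with a simplified sketch. For illustration only, it assumes that each paper is one unit whose codes are its models and its main LDA topic (as listed in Table A1); it builds a binary co-occurrence vector per paper, normalises it to unit length, averages the vectors per group, and subtracts the group means edge by edge. Actual ENA tools also apply dimensional reduction and position the nodes in the plotted space, which this sketch omits. The function names are hypothetical, and the small subset of papers used here will not reproduce Figure 9.

```python
from itertools import combinations
import math


def unit_network(codes, code_list):
    """Normalised binary co-occurrence vector over all code pairs for one unit.

    Simplification: a unit is one paper, and two codes co-occur if both were
    assigned to that paper (the whole paper acts as a single stanza).
    """
    pairs = combinations(code_list, 2)
    vec = [1.0 if a in codes and b in codes else 0.0 for a, b in pairs]
    norm = math.sqrt(sum(v * v for v in vec))
    # Scale to unit length so papers with many codes do not dominate the mean.
    return [v / norm for v in vec] if norm > 0 else vec


def mean_network(units, code_list):
    """Average the normalised unit networks of one (non-empty) group."""
    vectors = [unit_network(u, code_list) for u in units]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def subtract_networks(group_a, group_b, code_list):
    """Edge-wise difference of two group mean networks.

    Positive weights: connection stronger in group_a; negative: stronger in group_b.
    """
    pairs = list(combinations(code_list, 2))
    a = mean_network(group_a, code_list)
    b = mean_network(group_b, code_list)
    return {pair: wa - wb for pair, wa, wb in zip(pairs, a, b)}


# Illustrative subset of Table A1: each paper coded with its models and main topic.
CODES = ["Learner", "Domain", "Collaborative", "Social",
         "Adaptive Learning Architectures", "Dashboards"]
system_guided = [
    {"Learner", "Domain", "Adaptive Learning Architectures"},  # Al-Jadaa et al. [12]
    {"Learner", "Domain", "Adaptive Learning Architectures"},  # Bull and McKay [31]
]
teacher_guided = [
    {"Domain", "Dashboards"},         # de Leng and Pawelka [57]
    {"Collaborative", "Dashboards"},  # Han et al. [36]
]

difference = subtract_networks(system_guided, teacher_guided, CODES)
for pair, weight in sorted(difference.items(), key=lambda kv: -abs(kv[1]))[:3]:
    print(pair, round(weight, 3))
```

Normalising each unit before averaging mirrors the intuition behind ENA's comparison of groups: what is compared is the relative pattern of connections within each paper, not the raw number of codes a paper happens to carry.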

5. Discussion

Regarding RQ1 (the synergies within the community investigating MbLA), we identified several research groups that have investigated MbLA. In this context, these groups have had little explicit or implicit interaction, as seen from the disconnected co-authorship network in Figure 3 and from the fact that only a core group of authors have co-cited each other, as shown in Figure 4. We also noted that LA and ITS are two specific fields within the broader context of TEL that can be considered the main advocates of MbLA. Indeed, these two fields share a common body of literature in this context, which could be considered the core literature of MbLA (as seen in the network of the main co-cited authors in Figure 4 and in the similarity of the papers based on their citations in Figure 5). Successful MbLA implementation will require further explicit alignment between these two fields, which are clearly complementary. On the one hand, LA provides good practices on how LA-guided systems can inform and enhance teaching and learning practices [14,35,41,42,43]. On the other hand, ITS research provides examples of how a system can adapt a learning activity to students’ needs and of the role that a teacher can play in this process [5,32,44]. In the short term, common venues, such as workshops organised around this topic, might serve as a starting point for this alignment.

Concerning RQ2 (the main research topics), our results showed that research on MbLA has mainly focused on technological solutions that target teachers’ awareness and reflection about the learning process, as well as on enabling teachers to intervene (i.e., in relation to learning orchestration). This confirms the results of our qualitative analyses, which showed that our set of papers had a stronger focus on guidance, as opposed to mirroring, which had been the main focus of previous LA reviews (see, for instance, [14,15]). Not surprisingly, dashboards have been the main tool used to open the learner models up to teachers in both ITS and LA (as seen in the network of keywords in Figure 8 and the latent topics in Table 1). Altogether, the topics that we identified could be considered the basic prerequisites that make MbLA possible, either from a technical point of view (i.e., through system architectures and dashboards, which are guided by specific pedagogical frameworks) or by targeting the main teaching practices (i.e., those related to design and orchestration). Nevertheless, future research should take a step further and improve the partnership between teachers and intelligent systems to support the learning process [2,3,5]. On the one hand, this means that future research could focus on systems that not only allow teachers to modify their models (as in [31], where teachers had the option to override the decisions of the system) but that can also improve over time by learning from continuous teacher input. This second type of teacher–system interaction was missing from our pool of papers. On the other hand, teachers would also benefit from systems that move beyond transparency and assist teacher decision making by providing guidance.
For instance, a starting point could be AI systems that support teachers when designing and orchestrating a learning activity (as in [43], where the system provides teachers with real-time feedback on the learning design that they are creating). However, implementing AI-supported systems carries risks. For instance, biased training data could distort the decisions or suggestions made by the system. Moreover, many of the most powerful AI models are black boxes whose decision making is not transparent. Therefore, the future systems that we advocate should not only be trained on diverse and representative data but should also use interpretable algorithms whenever possible. Recent advances in machine learning that help to make black-box models more interpretable might also be useful in this context [45].
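
The teacher–system interaction that this paragraph identifies as missing (a system that lets teachers override its model and also learns from those overrides) can be made more concrete with a hypothetical sketch. It is not drawn from any reviewed system: the class, method names, and parameters are illustrative assumptions. Ability estimates use a simple Elo-style update (Elo-based learner models also appear in the reviewed papers, e.g., [52]), and each teacher override both sets the overridden student's estimate and shifts a per-skill correction shared by all students.

```python
import math


class TeacherCorrectableModel:
    """Hypothetical learner model that teachers can override and that learns from overrides."""

    def __init__(self, k=0.4, correction_rate=0.5):
        self.k = k                            # step size of the Elo-style update
        self.correction_rate = correction_rate
        self.theta = {}                       # (student, skill) -> system estimate
        self.offset = {}                      # skill -> correction learned from teachers

    def ability(self, student, skill):
        """Effective ability: system estimate plus the teacher-derived correction."""
        return self.theta.get((student, skill), 0.0) + self.offset.get(skill, 0.0)

    def p_correct(self, student, skill, difficulty=0.0):
        """Probability of a correct answer under a logistic link."""
        return 1.0 / (1.0 + math.exp(-(self.ability(student, skill) - difficulty)))

    def record_answer(self, student, skill, correct, difficulty=0.0):
        """Elo-style update from one observed answer."""
        error = (1.0 if correct else 0.0) - self.p_correct(student, skill, difficulty)
        key = (student, skill)
        self.theta[key] = self.theta.get(key, 0.0) + self.k * error

    def teacher_override(self, student, skill, teacher_value):
        """Teacher sets this student's ability; the shared skill-level correction
        moves part of the way towards the teacher's judgement."""
        discrepancy = teacher_value - self.ability(student, skill)
        self.offset[skill] = self.offset.get(skill, 0.0) + self.correction_rate * discrepancy
        # The overridden student ends up exactly at the teacher's value.
        self.theta[(student, skill)] = teacher_value - self.offset[skill]


model = TeacherCorrectableModel()
model.record_answer("s1", "fractions", correct=True)
model.teacher_override("s1", "fractions", teacher_value=1.0)
print(model.ability("s1", "fractions"), model.ability("s2", "fractions"))
```

A shared per-skill correction is only one possible way for a system to learn from teacher input; logging overrides as labelled data for later retraining would be another. Either way, keeping the correction as a separate, inspectable term is in line with the preference for interpretable algorithms expressed above.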

In our pool of papers, domain, learner, and instructional models predominated. Domain models have mainly guided teacher reflection practices, while a combination of learner, domain, and instructional models has been used to guide the adaptive learning decisions taken by intelligent systems (as seen in Figure 9). This suggests that current MbLA systems have taken an individualistic approach to student learning and instruction, while neglecting aspects that make learning a social process. To enable a successful teacher–AI partnership, MbLA systems should take a step further and also include elements from social and collaborative models.

Helping teachers to adopt and use MbLA systems in a meaningful way could improve the support that they provide to students, for example, by offering better and more personalised feedback or by supporting students’ self-regulated learning [31,46,47,48]. For MbLA to be successfully adopted in teaching practice, it is essential to consider that teachers already struggle to adopt new technological and pedagogical practices, both because of the new skills that these require and because of their heavy workloads [49]. To facilitate this adoption, future research should explore the pedagogical aspects of implementing MbLA in different learning contexts. Moreover, training is one of the common approaches used to support teachers’ professional development. While teacher training could promote the adoption of MbLA, it is expensive and its effects are limited in time [45]. Therefore, future research should also focus on systems that include enough intelligent guidance that they not only require less training but are also perceived as useful by teachers. To accomplish this aim, teachers (together with learners) should be an essential part of the development process from an early stage, for instance, through a participatory design approach, as suggested by recent initiatives on human-centred LA [24,49,50]. Only a few of the papers under review took such an approach, as also reflected in the absence of related topics in our analyses. A good example related to MbLA is [32], which followed a participatory design approach to design a teacher dashboard intended to be used with an ITS.

The limitations of this study relate to the systematic review methodology and to the methods used for the analyses. Our query may have omitted relevant keywords, which could have caused us to miss relevant work. To minimise this effect, we experimented with different queries before choosing one (see Section 3). Relevant research might also have been published since we compiled our list of papers under review. We might also have missed relevant work published in other databases or in languages other than English, since publication in English was one of our inclusion criteria. The small number of papers under review (42) is a limitation for LDA. Nevertheless, even with a small number of documents, LDA can still provide useful insights into the latent topics of the papers under review in systematic reviews [51]. Although this study was mainly quantitative, we also performed manual qualitative analyses when necessary. For instance, interpreting the LDA results involved subjective judgement, which we tried to mitigate by involving two researchers in the process and by discussing the results among all the co-authors.

6. Conclusions

We started this paper by emphasising that MbLA call for a challenging interdisciplinary approach that not only requires input from different fields (i.e., learning sciences, computer science, HCI, and domain experts) but also needs to create a tight coupling between teachers’ understanding of learning and the models implemented in intelligent systems. The results of our bibliometric review revealed that research on MbLA is still at an early stage, with few examples rooted in interdisciplinary approaches (such as participatory design studies that integrate input from stakeholders from different fields). Nevertheless, our review provides a pool of existing good practices on how to achieve this coupling of teachers and intelligent systems to support the learning process, through system architectures, guiding assessment frameworks, and dashboards.

The focus of the papers under review on guiding teachers, as opposed to simply mirroring, is indeed a step in the right direction. The next step, which would truly enable a teacher–technology partnership to support learning practices, would be to focus on systems that provide higher-order guidance to teachers (e.g., through AI) and that also improve their model of learning and instruction over time (i.e., by learning from continuous teacher input). To achieve this goal, future research in MbLA should move beyond the individualistic approach to student learning and instruction seen in our results and also consider the social nature of learning (e.g., social structures or patterns of collaborative learning).

Author Contributions

Conceptualisation, G.P. and T.L.; methodology, quantitative data analysis, and writing of the manuscript, G.P. All the authors equally contributed to the systematic review process. All authors have read and agreed to the published version of the manuscript.

Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 856954 (SEIS Project, http://seis.tlu.ee, accessed on 1 April 2023) and from the Estonian Research Council (grant PRG1634, Model-based Learning Analytics for Fostering Students’ Higher-order Thinking Skills, https://www.etis.ee/Portal/Projects/Display/701a49da-bfc9-4f68-b224-2f9722478274, accessed on 1 April 2023).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

List of papers under review, with the corresponding types of models used in the systems that they proposed, the main LDA topics, and whether the learning activity was mainly teacher- vs. system-guided.

| Paper | Models | Main LDA Topic | Teacher vs. System Guidance |
|---|---|---|---|
| Abdi et al. [52] | Learner, Domain | Adaptive Learning Architectures | Teacher |
| Al-Jadaa et al. [12] | Learner, Domain | Adaptive Learning Architectures | System |
| Aleven et al. [53] | Learner, Domain | Learning Orchestration | Teacher |
| Amarasinghe et al. [37] | Instructional, Collaborative | Learning Orchestration | System |
| Aslan et al. [54] | Learner, Collaborative | Learning Orchestration | Teacher |
| Balaban et al. [55] | Learner | Assessment Frameworks | System |
| Boulanger et al. [56] | Learner | Dashboards | Teacher |
| Bull and McKay [31] | Learner, Domain | Adaptive Learning Architectures | System |
| Calvo-Morata et al. [39] | Collaborative | Assessment Frameworks | System |
| de Leng and Pawelka [57] | Domain | Dashboards | Teacher |
| Diana et al. [25] | Domain, Social | Awareness and Reflection | System |
| Ebner and Schön [58] | Learner, Domain | Assessment Frameworks | Teacher |
| Florian-Gaviria et al. [59] | Learner | Assessment Frameworks | System |
| Fouh et al. [60] | Domain | Awareness and Reflection | Teacher |
| Fu et al. [61] | Domain | Awareness and Reflection | Teacher |
| Guenaga et al. [38] | Learner, Domain | Assessment Frameworks | Teacher |
| Han et al. [36] | Collaborative | Dashboards | Teacher |
| Hardebolle et al. [35] | Domain | Awareness and Reflection | Teacher |
| Herodotou et al. [22] | Learner | Learning Orchestration | Teacher |
| Holstein et al. [44] | Learner, Domain, Instructional | Learning Orchestration | Teacher |
| Holstein et al. [62] | Learner | Adaptive Learning Architectures | Teacher |
| Jia and Yu [63] | Learner | Adaptive Learning Architectures | System |
| Johnson et al. [34] | Learner | Assessment Frameworks | Teacher |
| Kickmeier-Rust and Albert [64] | Learner, Domain | Assessment Frameworks | Teacher |
| Lazarinis & Retalis [65] | Learner, Domain, Instructional | Adaptive Learning Architectures | Teacher |
| Martinez-Maldonado [41] | Domain, Collaborative | Learning Orchestration | System |
| McDonald et al. [21] | Domain | Awareness and Reflection | Teacher |
| Molenaar and Knoop-van Campen [66] | Learner, Domain | Learning Orchestration | Teacher |
| Montebello [67] | Learner | Adaptive Learning Architectures | Teacher |
| Ocumpaugh et al. [68] | Learner | Adaptive Learning Architectures | Teacher |
| Pérez-Marín and Pascual-Nieto [69] | Learner, Domain | Awareness and Reflection | System |
| Riofrío-Luzcando et al. [70] | Learner, Domain | Assessment Frameworks | Teacher |
| Rudzewitz et al. [71] | Learner, Domain, Instructional | Awareness and Reflection | Teacher |
| Ruiz-Calleja et al. [72] | Social | Assessment Frameworks | Teacher |
| Sedrakyan et al. [23] | Learner, Domain, Instructional | Dashboards | Teacher |
| Taibi et al. [73] | Learner, Domain, Social | Awareness and Reflection | Teacher |
| Villamañe et al. [33] | Learner, Domain | Adaptive Learning Architectures | System |
| Villanueva et al. [74] | Learner, Domain, Instructional | Adaptive Learning Architectures | Teacher |
| Volarić and Ljubić [75] | Learner, Domain | Dashboards | Teacher |
| Xhakaj et al. [32] | Learner, Domain | Learning Orchestration | System |
| Yoo and Jin [76] | Learner | Dashboards | Teacher |
| Zhu and Wang [77] | Learner, Instructional | Awareness and Reflection | Teacher |
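
The per-topic paper counts reported in Table 1, and the observation in the results that the Adaptive Learning Architectures topic has no connection to social or collaborative models, can be cross-checked against Table A1. The sketch below transcribes Table A1 by hand (paper names kept as comments) and tallies papers per main topic, model types per topic, and teacher- vs. system-guided papers. The counts it produces are derived only from the main topic of each paper in Table A1; the function name `tally` is illustrative, and the sketch is not the analysis pipeline used in this study.

```python
from collections import Counter, defaultdict

ALA = "Adaptive Learning Architectures"
AR = "Awareness and Reflection"
AF = "Assessment Frameworks"
DB = "Dashboards"
LO = "Learning Orchestration"

# (models, main LDA topic, guidance), transcribed by hand from Table A1
PAPERS = [
    ("Learner, Domain", ALA, "Teacher"),                 # Abdi et al. [52]
    ("Learner, Domain", ALA, "System"),                  # Al-Jadaa et al. [12]
    ("Learner, Domain", LO, "Teacher"),                  # Aleven et al. [53]
    ("Instructional, Collaborative", LO, "System"),      # Amarasinghe et al. [37]
    ("Learner, Collaborative", LO, "Teacher"),           # Aslan et al. [54]
    ("Learner", AF, "System"),                           # Balaban et al. [55]
    ("Learner", DB, "Teacher"),                          # Boulanger et al. [56]
    ("Learner, Domain", ALA, "System"),                  # Bull and McKay [31]
    ("Collaborative", AF, "System"),                     # Calvo-Morata et al. [39]
    ("Domain", DB, "Teacher"),                           # de Leng and Pawelka [57]
    ("Domain, Social", AR, "System"),                    # Diana et al. [25]
    ("Learner, Domain", AF, "Teacher"),                  # Ebner and Schön [58]
    ("Learner", AF, "System"),                           # Florian-Gaviria et al. [59]
    ("Domain", AR, "Teacher"),                           # Fouh et al. [60]
    ("Domain", AR, "Teacher"),                           # Fu et al. [61]
    ("Learner, Domain", AF, "Teacher"),                  # Guenaga et al. [38]
    ("Collaborative", DB, "Teacher"),                    # Han et al. [36]
    ("Domain", AR, "Teacher"),                           # Hardebolle et al. [35]
    ("Learner", LO, "Teacher"),                          # Herodotou et al. [22]
    ("Learner, Domain, Instructional", LO, "Teacher"),   # Holstein et al. [44]
    ("Learner", ALA, "Teacher"),                         # Holstein et al. [62]
    ("Learner", ALA, "System"),                          # Jia and Yu [63]
    ("Learner", AF, "Teacher"),                          # Johnson et al. [34]
    ("Learner, Domain", AF, "Teacher"),                  # Kickmeier-Rust and Albert [64]
    ("Learner, Domain, Instructional", ALA, "Teacher"),  # Lazarinis & Retalis [65]
    ("Domain, Collaborative", LO, "System"),             # Martinez-Maldonado [41]
    ("Domain", AR, "Teacher"),                           # McDonald et al. [21]
    ("Learner, Domain", LO, "Teacher"),                  # Molenaar and Knoop-van Campen [66]
    ("Learner", ALA, "Teacher"),                         # Montebello [67]
    ("Learner", ALA, "Teacher"),                         # Ocumpaugh et al. [68]
    ("Learner, Domain", AR, "System"),                   # Pérez-Marín and Pascual-Nieto [69]
    ("Learner, Domain", AF, "Teacher"),                  # Riofrío-Luzcando et al. [70]
    ("Learner, Domain, Instructional", AR, "Teacher"),   # Rudzewitz et al. [71]
    ("Social", AF, "Teacher"),                           # Ruiz-Calleja et al. [72]
    ("Learner, Domain, Instructional", DB, "Teacher"),   # Sedrakyan et al. [23]
    ("Learner, Domain, Social", AR, "Teacher"),          # Taibi et al. [73]
    ("Learner, Domain", ALA, "System"),                  # Villamañe et al. [33]
    ("Learner, Domain, Instructional", ALA, "Teacher"),  # Villanueva et al. [74]
    ("Learner, Domain", DB, "Teacher"),                  # Volarić and Ljubić [75]
    ("Learner, Domain", LO, "System"),                   # Xhakaj et al. [32]
    ("Learner", DB, "Teacher"),                          # Yoo and Jin [76]
    ("Learner, Instructional", AR, "Teacher"),           # Zhu and Wang [77]
]


def tally(papers):
    """Count papers per topic, model types per topic, and guidance type."""
    papers_per_topic = Counter(topic for _, topic, _ in papers)
    models_per_topic = defaultdict(Counter)
    for models, topic, _ in papers:
        models_per_topic[topic].update(m.strip() for m in models.split(","))
    guidance = Counter(g for _, _, g in papers)
    return papers_per_topic, models_per_topic, guidance


per_topic, models_by_topic, guidance = tally(PAPERS)
for topic, n in per_topic.most_common():
    print(f"{topic}: {n} papers, models {dict(models_by_topic[topic])}")
print(dict(guidance))
```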

Appendix B

Appendix B.1

Figure A1.

Results from two metrics proposed by [29,30] to determine the optimal number of topics, which guided us to choose five as the most appropriate number.
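
The caption above refers to two metrics for choosing the number of topics [29,30]. As we understand them, the metric of [29] favours topic solutions whose topic-word distributions are least similar to one another (mean pairwise cosine similarity, to be minimised), while that of [30] favours solutions that maximise the divergence between topics. The sketch below computes simplified versions of both from a topic-word matrix `phi` (K topics × V terms). It is not the implementation used in this study: the divergence is approximated with the standard Jensen–Shannon divergence, and the exact formulations in [29,30] and in tools implementing them may differ. The function names are illustrative.

```python
import numpy as np


def _row_normalise(phi):
    """Turn a K x V matrix of topic-word weights into probability distributions."""
    phi = np.asarray(phi, dtype=float)
    return phi / phi.sum(axis=1, keepdims=True)


def cao_2009(phi):
    """Mean pairwise cosine similarity between topics (lower suggests a better K)."""
    phi = _row_normalise(phi)
    unit = phi / np.linalg.norm(phi, axis=1, keepdims=True)
    similarity = unit @ unit.T
    upper = np.triu_indices(phi.shape[0], k=1)  # each unordered topic pair once
    return float(similarity[upper].mean())


def deveaud_2014(phi, eps=1e-12):
    """Mean pairwise Jensen-Shannon divergence between topics (higher suggests a better K).

    Simplification: the standard JSD is used; requires at least two topics.
    """
    phi = _row_normalise(np.asarray(phi, dtype=float) + eps)  # eps avoids log(0)
    k = phi.shape[0]
    divergences = []
    for i in range(k):
        for j in range(i + 1, k):
            p, q = phi[i], phi[j]
            m = 0.5 * (p + q)
            divergences.append(0.5 * np.sum(p * np.log(p / m))
                               + 0.5 * np.sum(q * np.log(q / m)))
    return float(np.mean(divergences))
```

In practice, `phi` could be obtained for each candidate number of topics from, for example, the row-normalised `components_` attribute of a fitted scikit-learn `LatentDirichletAllocation` model; the two curves are then compared across candidate values, as in Figure A1.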

Appendix B.2

Figure A2.

Most salient terms for each topic resulting from the LDA.
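
Figure A2 and Table 1 report the most salient terms per topic, but this section does not state how salience was computed. One common choice, assumed here for illustration only, is the term "relevance" ranking popularised by the LDAvis tool, which interpolates between a term's probability within a topic and its lift over the term's overall frequency. The sketch below ranks terms this way; the function name `top_terms` and its parameters are hypothetical.

```python
import numpy as np


def top_terms(phi, vocab, term_freq, n=10, lam=1.0):
    """Rank the n most relevant terms per topic.

    relevance(w, k) = lam * log p(w|k) + (1 - lam) * log(p(w|k) / p(w)).
    lam = 1 ranks by within-topic probability only; lower lam favours terms
    that are distinctive for the topic. phi must be strictly positive.
    """
    phi = np.asarray(phi, dtype=float)
    phi = phi / phi.sum(axis=1, keepdims=True)   # p(w|k)
    p_w = np.asarray(term_freq, dtype=float)
    p_w = p_w / p_w.sum()                        # marginal p(w)
    relevance = lam * np.log(phi) + (1.0 - lam) * np.log(phi / p_w)
    return [[vocab[i] for i in np.argsort(-row, kind="stable")[:n]]
            for row in relevance]
```

With `lam=1.0`, very frequent words (such as "learning" or "students", which appear in several rows of Table 1) tend to rank highly in many topics; lowering `lam` pushes such shared terms down in favour of topic-specific ones.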

References

- Poquet, O.; De Laat, M. Developing capabilities: Lifelong learning in the age of AI. Br. J. Educ. Technol. 2021, 52, 1695–1708.

- Baker, R.S. Stupid tutoring systems, intelligent humans. Int. J. Artif. Intell. Educ. 2016, 26, 600–614.

- Cukurova, M.; Kent, C.; Luckin, R. Artificial intelligence and multimodal data in the service of human decision-making: A case study in debate tutoring. Br. J. Educ. Technol. 2019, 50, 3032–3046.

- Holstein, K.; Aleven, V.; Rummel, N. A conceptual framework for human–AI hybrid adaptivity in education. In Proceedings of the International Conference on Artificial Intelligence in Education, Ifrane, Morocco, 6–10 July 2020; pp. 240–254.

- Holstein, K.; McLaren, B.M.; Aleven, V. Intelligent tutors as teachers’ aides: Exploring teacher needs for real-time analytics in blended classrooms. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 257–266.

- Ley, T. Knowledge structures for integrating working and learning: A reflection on a decade of learning technology research for workplace learning. Br. J. Educ. Technol. 2020, 51, 331–346.

- Shaffer, D.; Ruis, A. Epistemic network analysis: A worked example of theory-based learning analytics. In Handbook of Learning Analytics; SOLAR: Ellicott City, MD, USA, 2017.

- Knight, S.; Shum, S.B. Theory and learning analytics. In Handbook of Learning Analytics; SOLAR: Ellicott City, MD, USA, 2017; pp. 17–22.

- Baker, R.S.; Clarke-Midura, J.; Ocumpaugh, J. Towards general models of effective science inquiry in virtual performance assessments. J. Comput. Assist. Learn. 2016, 32, 267–280.

- Seitlinger, P.; Bibi, A.; Uus, Õ.; Ley, T. How working memory capacity limits success in self-directed learning: A cognitive model of search and concept formation. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, Frankfurt, Germany, 23–27 March 2020; pp. 53–62.

- Pammer-Schindler, V.; Wild, F.; Fominykh, M.; Ley, T.; Perifanou, M.; Soule, M.V.; Hernández-Leo, D.; Kalz, M.; Klamma, R.; Pedro, L.; et al. Interdisciplinary doctoral training in technology-enhanced learning in Europe. Front. Educ. 2020, 5, 150.

- Al-Jadaa, A.A.; Abu-Issa, A.S.; Ghanem, W.T.; Hussein, M.S. Enhancing the intelligence of web tutoring systems using a multi-entry based open learner model. In Proceedings of the Second International Conference on Internet of Things, Data and Cloud Computing, Cambridge, UK, 22–23 March 2017; pp. 1–5.

- Ley, T.; Tammets, K.; Pishtari, G.; Chejara, P.; Kasepalu, R.; Khalil, M.; Saar, M.; Tuvi, I.; Väljataga, T.; Wasson, B. Towards a Partnership of Teachers and Intelligent Learning Technology: A Systematic Literature Review of Model-based Learning Analytics. J. Comput. Assist. Learn. 2023, in press.

- Rodríguez-Triana, M.J.; Prieto, L.P.; Vozniuk, A.; Boroujeni, M.S.; Schwendimann, B.A.; Holzer, A.; Gillet, D. Monitoring, awareness and reflection in blended technology enhanced learning: A systematic review. Int. J. Technol. Enhanc. Learn. 2017, 9, 126–150.

- Schwendimann, B.A.; Rodriguez-Triana, M.J.; Vozniuk, A.; Prieto, L.P.; Boroujeni, M.S.; Holzer, A.; Gillet, D.; Dillenbourg, P. Perceiving learning at a glance: A systematic literature review of learning dashboard research. IEEE Trans. Learn. Technol. 2016, 10, 30–41.

- Celik, I.; Dindar, M.; Muukkonen, H.; Järvelä, S. The Promises and Challenges of Artificial Intelligence for Teachers: A Systematic Review of Research. TechTrends 2022, 66, 616–630.

- Abyaa, A.; Khalidi Idrissi, M.; Bennani, S. Learner modelling: Systematic review of the literature from the last 5 years. Educ. Technol. Res. Dev. 2019, 67, 1105–1143.

- Desmarais, M.C.; Baker, R.S.D. A review of recent advances in learner and skill modeling in intelligent learning environments. User Model. User Adapt. Interact. 2012, 22, 9–38.

- Bull, S. There are open learner models about! IEEE Trans. Learn. Technol. 2020, 13, 425–448.

- Bodily, R.; Kay, J.; Aleven, V.; Jivet, I.; Davis, D.; Xhakaj, F.; Verbert, K. Open learner models and learning analytics dashboards: A systematic review. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, Sydney, NSW, Australia, 7–9 March 2018; pp. 41–50.

- McDonald, J.; Bird, R.; Zouaq, A.; Moskal, A.C.M. Short answers to deep questions: Supporting teachers in large-class settings. J. Comput. Assist. Learn. 2017, 33, 306–319.

- Herodotou, C.; Hlosta, M.; Boroowa, A.; Rienties, B.; Zdrahal, Z.; Mangafa, C. Empowering online teachers through predictive learning analytics. Br. J. Educ. Technol. 2019, 50, 3064–3079.

- Sedrakyan, G.; Malmberg, J.; Verbert, K.; Järvelä, S.; Kirschner, P.A. Linking learning behavior analytics and learning science concepts: Designing a learning analytics dashboard for feedback to support learning regulation. Comput. Hum. Behav. 2020, 107, 105512.

- Amarasinghe, I.; Michos, K.; Crespi, F.; Hernández-Leo, D. Learning analytics support to teachers’ design and orchestrating tasks. J. Comput. Assist. Learn. 2022.

- Diana, N.; Eagle, M.; Stamper, J.; Grover, S.; Bienkowski, M.; Basu, S. An instructor dashboard for real-time analytics in interactive programming assignments. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 272–279.

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering. 2007. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 1 April 2023).

- Perianes-Rodriguez, A.; Waltman, L.; Van Eck, N.J. Constructing bibliometric networks: A comparison between full and fractional counting. J. Informetr. 2016, 10, 1178–1195.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Cao, J.; Xia, T.; Li, J.; Zhang, Y.; Tang, S. A density-based method for adaptive LDA model selection. Neurocomputing 2009, 72, 1775–1781.

- Deveaud, R.; SanJuan, E.; Bellot, P. Accurate and effective latent concept modeling for ad hoc information retrieval. Doc. Numérique 2014, 17, 61–84.

- Bull, S.; McKay, M. An open learner model for children and teachers: Inspecting knowledge level of individuals and peers. In International Conference on Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2004; pp. 646–655.

- Xhakaj, F.; Aleven, V.; McLaren, B.M. Effects of a teacher dashboard for an intelligent tutoring system on teacher knowledge, lesson planning, lessons and student learning. In European Conference on Technology Enhanced Learning; Springer: Berlin, Germany, 2017; pp. 315–329.

- Villamañe, M.; Álvarez, A.; Larrañaga, M.; Caballero, J.; Hernández-Rivas, O. Supporting Competence-based Learning in Blended Learning Environments. In Proceedings of the LASI-SPAIN, Santa Cruz de Tenerife, Spain, 17–20 April 2018; pp. 6–16.

- Johnson, M.; Cierniak, G.; Hansen, C.; Bull, S.; Wasson, B.; Biel, C.; Debus, K. Teacher approaches to adopting a competency based open learner model. In Proceedings of the 21st International Conference on Computers in Education, Bali, Indonesia, 18–22 November 2013; Asia-Pacific Society for Computers in Education.

- Hardebolle, C.; Jermann, P.; Pinto, F.; Tormey, R. Impact of a learning analytics dashboard on the practice of students and teachers. In Proceedings of the SEFI 47th Annual Conference, Budapest, Hungary, 16–19 September 2019.

- Han, J.; Kim, K.H.; Rhee, W.; Cho, Y.H. Learning analytics dashboards for adaptive support in face-to-face collaborative argumentation. Comput. Educ. 2021, 163, 104041.

- Amarasinghe, I.; Hernandez-Leo, D.; Michos, K.; Vujovic, M. An actionable orchestration dashboard to enhance collaboration in the classroom. IEEE Trans. Learn. Technol. 2020, 13, 662–675.

- Guenaga, M.; Longarte, J.K.; Rayon, A. Standardized enriched rubrics to support competeney-assessment through the SCALA methodology and dashboard. In Proceedings of the 2015 IEEE Global Engineering Education Conference (EDUCON), Tallinn, Estonia, 18–20 March 2015; pp. 340–347.

- Calvo-Morata, A.; Alonso-Fernández, C.; Pérez-Colado, I.J.; Freire, M.; Martínez-Ortiz, I.; Fernandez-Manjon, B. Improving Teacher Game Learning Analytics Dashboards through ad-hoc Development. J. Univers. Comput. Sci. 2019, 25, 1507–1530.

- Shute, V.; Towle, B. Adaptive e-learning. Educ. Psychol. 2003, 38, 105–114.

- Martinez-Maldonado, R. A handheld classroom dashboard: Teachers’ perspectives on the use of real-time collaborative learning analytics. Int. J. Comput. Support. Collab. Learn. 2019, 14, 383–411.

- Pishtari, G.; Rodríguez-Triana, M.J.; Sarmiento-Márquez, E.M.; Pérez-Sanagustín, M.; Ruiz-Calleja, A.; Santos, P.; Prieto, L.P.; Serrano-Iglesias, S.; Väljataga, T. Learning design and learning analytics in mobile and ubiquitous learning: A systematic review. Br. J. Educ. Technol. 2020, 51, 1078–1100.

- Pishtari, G.; Rodríguez-Triana, M.J.; Prieto, L.P.; Ruiz-Calleja, A.; Väljataga, T. What kind of learning designs do practitioners create for mobile learning? Lessons learnt from two in-the-wild case studies. J. Comput. Assist. Learn. 2022.

- Holstein, K.; McLaren, B.M.; Aleven, V. Student learning benefits of a mixed-reality teacher awareness tool in AI-enhanced classrooms. In Proceedings of the Artificial Intelligence in Education: 19th International Conference, AIED 2018, London, UK, 27–30 June 2018; Part I; pp. 154–168.

- Pishtari, G.; Prieto, L.P.; Rodríguez-Triana, M.J.; Martinez-Maldonado, R. Design analytics for mobile learning: Scaling up the classification of learning designs based on cognitive and contextual elements. J. Learn. Anal. 2022, 9, 236–252. [Google Scholar] [CrossRef]

- Lim, L.A.; Dawson, S.; Gašević, D.; Joksimović, S.; Pardo, A.; Fudge, A.; Gentili, S. Students’ perceptions of, and emotional responses to, personalised learning analytics-based feedback: An exploratory study of four courses. Assess. Eval. High. Educ. 2021, 46, 339–359. [Google Scholar] [CrossRef]

- Pérez-Sanagustín, M.; Sapunar-Opazo, D.; Pérez-Álvarez, R.; Hilliger, I.; Bey, A.; Maldonado-Mahauad, J.; Baier, J. A MOOC-based flipped experience: Scaffolding SRL strategies improves learners’ time management and engagement. Comput. Appl. Eng. Educ. 2021, 29, 750–768. [Google Scholar] [CrossRef]

- Abdi, S.; Khosravi, H.; Sadiq, S.; Gasevic, D. Complementing educational recommender systems with open learner models. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, Frankfurt, Germany, 23–27 March 2020; pp. 360–365. [Google Scholar] [CrossRef]

- Pishtari, G.; Rodríguez-Triana, M.J.; Väljataga, T. A multi-stakeholder perspective of analytics for learning design in location-based learning. Int. J. Mob. Blended Learn. 2021, 13, 1–17. [Google Scholar] [CrossRef]

- Buckingham Shum, S.; Ferguson, R.; Martinez-Maldonado, R. Human-centred learning analytics. J. Learn. Anal. 2019, 6, 2. [Google Scholar] [CrossRef]

- Pishtari, G.; Sarmiento-Márquez, E.M.; Tammets, K.; Aru, J. The Evaluation of One-to-One Initiatives: Exploratory Results from a Systematic Review. In European Conference on Technology Enhanced Learning; Springer: Cham, Switzerland, 2022; pp. 310–323.

- Abdi, S.; Khosravi, H.; Sadiq, S.; Gasevic, D. A multivariate Elo-based learner model for adaptive educational systems. arXiv 2019.

- Aleven, V.; Xhakaj, F.; Holstein, K.; McLaren, B.M. Developing a Teacher Dashboard for Use with Intelligent Tutoring Systems. 2016, pp. 15–23. Available online: https://www.semanticscholar.org/paper/Developing-a-Teacher-Dashboard-For-Use-with-Systems-Aleven-Xhakaj/1a530f2cb088129bcab99bfebac82222fa9b05a9 (accessed on 1 April 2023).

- Aslan, S.; Alyuz, N.; Tanriover, C.; Mete, S.E.; Okur, E.; D’Mello, S.K.; Arslan Esme, A. Investigating the impact of a real-time, multimodal student engagement analytics technology in authentic classrooms. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

- Balaban, I.; Filipovic, D.; Peras, M. CRISS: A Cloud Based Platform for Guided Acquisition, Evaluation and Certification of Digital Competence. Int. Assoc. Dev. Inf. Soc. 2019.

- Boulanger, D.; Seanosky, J.; Guillot, R.; Kumar, V.S. Breadth and Depth of Learning Analytics. In Innovations in Smart Learning; Springer: Singapore, 2017; pp. 221–225.

- de Leng, B.; Pawelka, F. The use of learning dashboards to support complex in-class pedagogical scenarios in medical training: How do they influence students’ cognitive engagement? Res. Pract. Technol. Enhanc. Learn. 2020, 15, 14.

- Ebner, M.; Schön, M. Why learning analytics in primary education matters. Bull. Tech. Comm. Learn. Technol. 2013, 15, 14–17.

- Florian-Gaviria, B.; Glahn, C.; Gesa, R.F. A software suite for efficient use of the European qualifications framework in online and blended courses. IEEE Trans. Learn. Technol. 2013, 6, 283–296.

- Fouh, E.; Farghally, M.; Hamouda, S.; Koh, K.H.; Shaffer, C.A. Investigating Difficult Topics in a Data Structures Course Using Item Response Theory and Logged Data Analysis. In Proceedings of the International Educational Data Mining Society, Raleigh, NC, USA, 29 June–2 July 2016.

- Fu, X.; Shimada, A.; Ogata, H.; Taniguchi, Y.; Suehiro, D. Real-time learning analytics for C programming language courses. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 280–288.

- Holstein, K.; Yu, Z.; Sewall, J.; Popescu, O.; McLaren, B.M.; Aleven, V. Opening up an intelligent tutoring system development environment for extensible student modeling. In Proceedings of the Artificial Intelligence in Education: 19th International Conference, AIED 2018, London, UK, 27–30 June 2018; Part I; pp. 169–183.

- Jia, J.; Yu, Y. Online learning activity index (OLAI) and its application for adaptive learning. In Proceedings of the Blended Learning, New Challenges and Innovative Practices: 10th International Conference, ICBL 2017, Hong Kong, China, 27–29 June 2017; pp. 213–224.

- Kickmeier-Rust, M.D.; Albert, D. Learning analytics to support the use of virtual worlds in the classroom. In Proceedings of the International Workshop on Human-Computer Interaction and Knowledge Discovery in Complex, Unstructured, Big Data, Maribor, Slovenia, 1–3 July 2013; pp. 358–365.

- Lazarinis, F.; Retalis, S. Analyze me: Open learner model in an adaptive web testing system. Int. J. Artif. Intell. Educ. 2007, 17, 255–271.

- Molenaar, I.; Knoop-van Campen, C. Teacher dashboards in practice: Usage and impact. In Proceedings of the Data Driven Approaches in Digital Education: 12th European Conference on Technology Enhanced Learning, EC-TEL 2017, Tallinn, Estonia, 12–15 September 2017; pp. 125–138.

- Montebello, M. Assisting education through real-time learner analytics. In Proceedings of the 2018 IEEE Frontiers in Education Conference (FIE), San Jose, CA, USA, 3–6 October 2018; pp. 1–7.

- Ocumpaugh, J.; Baker, R.S.; San Pedro, M.O.; Hawn, M.A.; Heffernan, C.; Heffernan, N.; Slater, S.A. Guidance counselor reports of the ASSISTments college prediction model (ACPM). In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 479–488.

- Pérez-Marín, D.; Pascual-Nieto, I. Showing automatically generated students’ conceptual models to students and teachers. Int. J. Artif. Intell. Educ. 2010, 20, 47–72.

- Riofrío-Luzcando, D.; Ramírez, J.; Moral, C.; de Antonio, A.; Berrocal-Lobo, M. Visualizing a collective student model for procedural training environments. Multimed. Tools Appl. 2019, 78, 10983–11010.

- Rudzewitz, B.; Ziai, R.; Nuxoll, F.; De Kuthy, K.; Meurers, D. Enhancing a Web-based Language Tutoring System with Learning Analytics. In Proceedings of the EDM (Workshops), Montréal, QC, Canada, 2 July 2019; pp. 1–7.

- Ruiz-Calleja, A.; Dennerlein, S.; Ley, T.; Lex, E. Visualizing Workplace Learning Data with the SSS Dashboard. In Proceedings of the CrossLAK, Edinburgh, UK, 25–29 April 2016; pp. 79–86.

- Taibi, D.; Bianchi, F.; Kemkes, P.; Marenzi, I. A learning analytics dashboard to analyse learning activities in interpreter training courses. In Proceedings of the Computer Supported Education: 10th International Conference, CSEDU 2018, Funchal, Madeira, Portugal, 15–17 March 2018, Revised Selected Papers; pp. 268–286.

- Villanueva, N.M.; Costas, A.E.; Hermida, D.F.; Rodríguez, A.C. Simplify ITS: An intelligent tutoring system based on cognitive diagnosis models and spaced learning. In Proceedings of the 2018 IEEE Global Engineering Education Conference (EDUCON), Santa Cruz de Tenerife, Spain, 17–20 April 2018; pp. 1703–1712.

- Volarić, T.; Ljubić, H. Learner and course dashboards for intelligent learning management systems. In Proceedings of the 2017 25th International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 21–23 September 2017; pp. 1–6.

- Yoo, M.; Jin, S.H. Development and evaluation of learning analytics dashboards to support online discussion activities. Educ. Technol. Soc. 2020, 23, 1–18.

- Zhu, Q.; Wang, M. Team-based mobile learning supported by an intelligent system: Case study of STEM students. Interact. Learn. Environ. 2020, 28, 543–559.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).