1. Introduction

The need for specialised information is increasing with each passing year. Advances in technology combined with the demand for greater productivity, service expansion, and training are some of the factors driving the development of technical writing. There is also a rising demand in the market for technical translation due to increasing business globalisation. In fact, in 2022, the global market for language services was estimated to be worth USD 60.68 billion and is expected to reach USD 96.21 billion by the end of 2032. Furthermore, as a wide range of industries, including engineering, law, medicine, and software, require translation, particularly technical translation, large corporations often spend 0.25 to 2.5% of their annual revenue on this service [1].

Translating technical documents requires specialised knowledge, which explains the need for qualified technical translators in the market. Higher-education institutions (HEI) play a relevant role in translator training. High-quality training is essential for students to understand that technical translation requires a deep understanding of the terminology and concepts of a specific field, as well as of the context in which the text was created. In addition, training helps develop several areas of competence of crucial importance for a translator, namely, technical competence regarding computer-assisted translation (CAT) and other translation technologies such as machine translation (MT), in order to meet the increasing demands of the translation market [2]. In fact, the MT market is expected to reach a compound annual growth rate (CAGR) of 7.1% in the 2022–2027 period [3].
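As a rough aid for reading the market figures quoted above, the sketch below shows how a compound annual growth rate relates a start value to an end value. It assumes a ten-year horizon (2022 to 2032) for the language-services estimate, which is our reading of the dates and not a figure given by the cited sources; the function names `cagr` and `project` are illustrative only.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Language-services market: USD 60.68 billion (2022) to USD 96.21 billion (2032).
# Assumption: the interval is treated as exactly ten years.
implied_rate = cagr(60.68, 96.21, 10)
print(f"Implied CAGR of the language-services market: {implied_rate:.1%}")

# MT market: a 7.1% CAGR over 2022-2027, applied to an index of 100 in 2022.
# The index value is arbitrary; the source gives no absolute market size.
mt_index_2027 = project(100.0, 0.071, 5)
print(f"MT market index in 2027 (2022 = 100): {mt_index_2027:.1f}")
```

Under these assumptions, the language-services estimate corresponds to growth of a little under 5% per year, somewhat below the rate quoted for the MT market.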

Translator training consequently entails having a deep grasp of the areas of competence needed from translation students and of the tasks/activities that may help them develop that competence. Using conventional translation assignments, which can be carried out by NMT in a matter of seconds, is no longer the most useful way to develop students’ translation competence.

Given this context, a study was carried out to confirm whether students do, in fact, use MT/NMT, as well as to determine how teachers can best take this reality into account in both translator instruction and assessment and overall competence development. In other words, this article has a two-fold purpose:

- To provide insight into the current use of MT by technical-translation students at ISCAP;

- To put forward a set of teaching and assessment activities for the translation classroom that is able to incorporate MT.

The set of activities put forward is intended to cope with the difficulties faced by teachers as a result of students’ frequent use of NMT. This frequent use, as shown by the questionnaire described in this article, makes it challenging for teachers to understand students’ real level of proficiency. The introduction of indirect activities in translation classes will hopefully allow for a more objective assessment of students’ competence.

2. Theoretical Background

2.1. Technical Translation and Translation Tools

Technical texts are found in a wide range of contexts and include different text types that, though perhaps not identified as technical straightaway, may be technical in nature. A descriptive text may be technical, as may a narrative or even an argumentative text. Though Jodie Byrne’s classification is adopted for pedagogical purposes (see Section 5.1.4), there are a number of ways of classifying technical texts. Those typologies may focus on the type of information or the degree of specialisation contained in those texts.

According to Pringle and O’Keefe, it is possible to categorise different types of information within a technical text into the following types: interface information, reference information, conceptual information, and procedural information [4]. Interface information identifies what a particular piece of information looks like visually, reference information indicates what a particular function is, procedural information refers to how to use that particular function, and conceptual information explains the circumstances under which function X is a better choice than function Y. However, the process of creating content is neither straightforward nor limited to a particular type of information. As a result, it is equally crucial to deal with procedural information and conceptual information while developing content for technical writing, which makes its production more challenging. In fact, as argued by Pringle and O’Keefe, creating this kind of information requires a considerably more thorough understanding of the product than simply recording actions [4].

Moreover, the degree of specialisation of the information presented in a technical text may vary. Cabré et al. pointed out that a technical text’s degree of specialisation may be classified into the following three levels: high, medium, and low [5]. These classifications, while still considering the author of the technical text as a specialist, also accommodate the text’s target audience and any necessary variations tailored to that audience, which may comprise specialists, students, or the general public.

In short, regardless of the level of specialisation, it is always important to make sure that technical writing is straightforward, exact, detailed, and accurate to manage its specialised language and deliver its content effectively. For the same reasons, it is equally crucial that translations of technical texts show similar characteristics to preserve their primary goal.

When translating technical texts, the main objective is to facilitate the communication of technical knowledge across language barriers, accurately reproducing both its semantic content in the target language and the technical precision and clarity of the source text. This calls for in-depth familiarity with the subject matter, as well as with the source and target languages. Technical translation is crucial, as it enables businesses and organisations to interact with partners and customers worldwide, increase their market share, and disseminate vital technical knowledge to a global audience. Additionally, accurate technical translations can aid in avoiding potential misunderstandings, mistakes, and legal problems.

While raising students’ awareness of these issues, technical-translator training involves not only becoming acquainted with a variety of text types but also making use of the most suitable set of tools available for a translation task. Consequently, students’ learning process involves being conversant with computer-assisted translation (CAT) tools—such as Trados or MemoQ, to name but a few—and with MT tools, particularly since their development in the artificial-intelligence (AI) domain, which led to NMT.

2.2. Neural Machine Translation

MT focuses on the development of programmes that allow a computer to perform translation operations from one natural language to another. As pointed out by Bowker and Ciro [6], MT is an area of research and development in which computational linguists make use of software to translate texts from one natural language to another. Of an interdisciplinary nature, MT brings together knowledge from various scientific areas such as computer science, linguistics, computational linguistics, and translation studies, among others. As a result, in MT or unassisted translation, the system translates the entire text without the translator’s intervention.

Although MT is part of the history of translation, its importance has recently grown significantly, especially after the rise of artificial intelligence (AI) [2]. The most recent developments in this field began in 2016 and led to the development of neural machine translation (NMT) [7].

NMT is an artificial-intelligence-based technology that uses deep neural networks [8] to automatically translate text from one language to another. The NMT system is trained on a large set of sentence pairs in the source and target languages, which are used to construct an artificial neural network that can learn the patterns of word and phrase translations. Its most remarkable feature is the ability to actually learn, in an “end-to-end” model, the mapping of the source text associated with the output text [9]. This enables the system to tackle more complex issues such as syntax and grammar and to provide more fluent translations than earlier MT systems, such as conventional phrase-based translation systems.

Due to the introduction of AI to NMT systems, the quality of MT outputs has significantly increased [10]. This has implications not only for professional translation but also in the classroom, as students start to shift their attention to MT results rather than to other translation strategies. Although MT seems to be helpful, it also raises some concerns, as pointed out by Liu et al.: “as learners are still at the stage of acquiring translation competence, placing heavy reliance on these tools may impede learners’ development as independent and competent translators” [2] (p. 2). In addition, the high fluency of NMT outputs makes errors less conspicuous and therefore more difficult to detect, especially by less experienced students [11].

Despite the challenges posed by MT, it certainly has an important role in translation students’ instruction. In fact, the integration of MT into CAT tools is an example of how MT enhances, rather than competes with, the productivity of human translators [11].

Given the significant improvements that MT presents, post-editing (PE) has been getting more attention. The term “post-editing” gained more relevance in the world of translation when MT systems started to produce translation outcomes that were seen as “good enough” to be edited and turned into quality translated texts. Thus, it may look as if MT has assumed a significant role in the translation process and PE in the revision process [12].

Typically, and according to the literature, proficient users of MT (translators or experienced translation students) choose from three options, depending on the text’s goal, life cycle, and target audience: “(1) not correcting the MT output at all, (2) applying light corrections to ensure the understandability of the text or (3) applying a full post-editing which requires linguistic as well as stylistic changes to achieve a text of publishable quality that reads well and does not contain any errors” [13] (p. 2). Though the level of PE may vary, it would be naive to believe that MT can be trusted without some level of post-editing in order to check for contextual and colloquial appropriateness, as well as any remaining lexical and grammatical problems [2] or terminological accuracy. It seems, however, that most students are aware of this, as seen in Section 4. The awareness shown by students makes their deliberate use of MT even more challenging to assess.

2.3. Related Work on Machine-Translation Use

The published studies on the use of MT, especially those published after 2016 that contemplated the advances made in the area of NMT, are especially aimed at professional translators and/or other language-service providers [14,15,16]. Most of them showed that respondents were already using MT at some point in their translation workflow, whereas others claimed that they were planning to use it in the near future.

Surveys on MT usage among translation students, however, are noticeably scarce. When targeting students, those studies are more focused on MT usage for foreign-language learning [17,18,19]. Closer to our perspective, a 2022 article focused on translation students from Hong Kong, exploring translation learners’ and instructors’ perceptions of the usefulness and influence of MT on translation-competence acquisition, as well as of the need to incorporate MT training into the translation curriculum [2]. As the authors of the present study acknowledge the importance of including MT in the curriculum (as one of the topics taught in the course units dealing with computer-assisted translation tools, in both the BA and MA programmes; the structures of ISCAP’s BA and MA programmes in translation can be found at https://www.iscap.ipp.pt/cursos/licenciatura/561 and https://www.iscap.ipp.pt/cursos/mestrado/580, respectively, accessed on 12 April 2022), new layers were added when dealing with a sample of Portuguese translation students. Since data concerning the use of MT by translation students were scarce, a questionnaire was administered to confirm whether our Portuguese students were using CAT tools and/or MT as a core tool for their translation assignments, as well as to understand students’ perceived satisfaction regarding these tools and their perception of their usefulness (Section 3).

3. Methods and Procedures

3.1. Sample and Participants

As one of the main purposes of this study was to analyse translation students’ usage and perceptions of translation tools, a survey was conducted during the 2022–2023 school year targeting technical-translation students from ISCAP, a Portuguese HEI. The potential sample included 114 students: 90 students enrolled in the bachelor’s degree (BA) in Translation and Administrative Assistance (third-year students) and 24 students enrolled in the master’s degree (MA) in Specialised Translation and Interpreting (first-year students).

The respondents involved in this study made up a convenience sample, which is a non-probability sampling method [20]. The use of a convenience sample for this study was justified by the need to focus on a specific population with particular characteristics (translation students), as well as by the difficulty of expanding the research to other universities offering translation courses.

The final sample consisted of 64 participants out of the 114 students, which means that 56% of the total enrolled students participated in this study.
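The participation figures above, together with the per-programme respondent counts reported in Section 4 (45 BA and 19 MA students), can be turned into response rates with a few lines of arithmetic. The following is only an illustrative sketch; the dictionary and function names are ours and are not part of the study’s instruments.

```python
# Enrolment figures from Section 3.1 and respondent counts from Section 4.
enrolled = {"BA": 90, "MA": 24}
responded = {"BA": 45, "MA": 19}


def response_rate(respondents, population):
    """Share of the target population that answered, as a fraction."""
    if population <= 0:
        raise ValueError("population must be positive")
    return respondents / population


# Per-programme rates and the overall rate across both programmes.
rates = {p: response_rate(responded[p], enrolled[p]) for p in enrolled}
overall = response_rate(sum(responded.values()), sum(enrolled.values()))

for programme, rate in rates.items():
    print(f"{programme}: {rate:.1%}")
print(f"Overall: {overall:.1%}")
```

Breaking the rate down by programme makes it easier to see that MA students answered in a noticeably higher proportion than BA students, which is worth keeping in mind when the two groups are compared.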

3.2. Data-Collection Instruments

To clearly confirm whether the translation students were, in fact, using translation tools regularly and to understand which ones were preferred, dimensions such as actual use, perceived satisfaction, and perceived usefulness were analysed through the questions asked. An online questionnaire was made available at the beginning of the second semester of 2022–2023. Students were asked to answer anonymously and voluntarily through the LimeSurvey platform.

Students were involved in this research only in the second semester, since only then would most of them have had a chance to engage with previous translation-related subjects and to develop an opinion about the translation techniques they found most effective. Students from the BA had already taken Technical Translation I (EN–PT), whereas students from the MA had had Economic Translation (EN–PT and ES–PT or FR–PT, RU–PT, or DE–PT) in their first semester. This prior experience is believed to be crucial to making students more aware of their preferences while working on translation projects.

As previously mentioned, the questionnaire was anonymised, because anonymous questionnaires can help reduce social-desirability bias [21], which occurs when respondents provide answers that they believe are more socially acceptable or desirable than their actual beliefs or behaviours. Anonymous respondents do not have to worry about what their peers, teachers, or other stakeholders may think of their answers, and therefore feel encouraged to deliver honest answers. In this case, anonymity was thought to foster transparency among students, who may have been hesitant at first to admit that they frequently use MT for their translation assignments, fearing that their responses might have a negative impact on their grades.

The questions in the questionnaire were categorised into three theoretical dimensions (Table 1) regarding user experience in general.

The questionnaire had 16 questions in total, although, depending on the answers, not all of the questions were shown to the respondents. Conditional branching was implemented, and only 13 questions were shown to every respondent, regardless of their previous answers. The questionnaire was brief, taking under 5 min, because it was essential to ensure the students’ motivation to answer it. Longer questionnaires have been linked to higher perceived burden, reduced participation, and changes in data quality in traditional cross-sectional studies, as stated by Eisele et al. and supported in previous research [22].

The questions implemented in the questionnaire were divided into demographic questions (Q1 to Q3) and specific questions (Q4 to Q9). The specific questions were as follows (Table 2):

All questions (except question Q8a) were closed-ended questions, some of which used Likert scales, as will be shown when analysing the results in Section 4.

The questionnaire was first subjected to a pilot test as a trial run for the study with a sample of eight students (second-year students from the bachelor’s degree in Translation and Administrative Assistance). Though also made up of translation students, this group of students was selected because they were not expected to participate in the final questionnaire. After answering the questionnaire, the respondents of the pilot test were encouraged to participate in a discussion to share their opinions about the survey. The sole topic that came up in the course of the discussion was linked to question Q9, which at first offered the following options: (1) All types; (2) All types except creative texts; (3) Technical; (4) Scientific; (5) Legal and Other. As suggested by the students, “I have never thought about it” was added to the selection as a sixth option. Regarding the other questions, no issues were raised.

4. Results and Findings

The questionnaire focused on the first main objective of this study, i.e., to provide insight into the current use of MT among translation students. For that purpose, it was developed and distributed among technical-translation students. The questionnaire was answered by 45 students from the BA in Translation and Administrative Assistance and 19 students from the MA in Specialised Translation and Interpreting, with ages ranging from 23 to 30 (average age 25), as shown in Table 3.

The following questions were developed based on three dimensions: actual use, perceived satisfaction, and perceived usefulness.

4.1. Actual Use of Translation Tools

The students were asked about how often they use CAT tools (such as Trados) and MT tools (such as Google Translate and DeepL) to complete their translation assignments. Answers were anchored to a six-point frequency Likert scale (Never: 0% of my assignments; Rarely: up to 25% of my assignments; Sometimes: between 25% and 50% of my assignments; Often: between 50% and 75% of my assignments; Very often: more than 75% of my assignments; Always: all of my assignments). The results are presented in Table 4.

Overall, the frequency of use of CAT tools was much lower (x̄ = 2.91) both for BA and MA students compared to the average use of MT tools (x̄ = 5.05). Considering both groups, it is worth noticing that MA students tended to use MT tools (x̄ = 5.53) more often than BA students (x̄ = 4.84), and that BA students were those who used CAT tools more frequently (x̄ = 3.09). A pair of chi-squared tests, however, revealed that these differences in the use of CAT tools (χ²(5) = 3.452; p = 0.631) and MT tools (χ²(4) = 9.039; p = 0.060) between the two programmes were not statistically significant.
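For readers who wish to retrace the figures in this paragraph, the sketch below shows two small checks. It assumes that the six frequency options were coded from 1 (Never) to 6 (Always), which is our inference from the reported means and is not stated explicitly. The first function pools the BA and MA means, weighted by the number of respondents in each group; the second computes the upper-tail probability of a chi-squared statistic for an integer number of degrees of freedom using standard closed-form series, as an alternative to a statistics package. The function names are illustrative.

```python
import math


def pooled_mean(groups):
    """Weighted mean of group means; `groups` is a list of (n, mean) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total_n


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-squared variable, integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + 2*phi(sqrt(x)) * sum_r x^(r - 1/2) / (1*3*...*(2r - 1))
    phi = math.exp(-half) / math.sqrt(2.0 * math.pi)
    term, total = math.sqrt(x), 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    return math.erfc(math.sqrt(half)) + 2.0 * phi * total


# MT use: 45 BA respondents (mean 4.84) and 19 MA respondents (mean 5.53).
print(f"Pooled MT mean: {pooled_mean([(45, 4.84), (19, 5.53)]):.2f}")

# p-values for the two chi-squared statistics reported above.
print(f"CAT tools: p = {chi2_sf(3.452, 5):.3f}")
print(f"MT tools:  p = {chi2_sf(9.039, 4):.3f}")
```

Because the group means are themselves rounded to two decimals, the pooled value can differ from the reported overall mean in the last digit.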

The students were provided with a list of potential motivations for the use of CAT and MT tools, as well as with an optional field where they could add others. The results are presented in Table 5.

Regarding the reasons why students choose to use CAT tools, the answers were evenly distributed, and no option stood out with a statistically significant difference. On the other hand, among the reasons for using MT, some options were more relevant, namely, the speed associated with MT, as well as the fact that it works as a starting point for translation. In addition, most BA and MA students did not consider that MT provides better outputs than they do.

The reasons added by the students in the “Others” field mentioned the fact that CAT tools allow translators to keep the original format of the source documents and that MT also plays a relevant role in offering alternative translation options that they had not previously considered.

Table 6 shows that the students who do not use MT tools were fewer than those who do not use CAT tools.

Other specific reasons for not using CAT tools were related to the difficult access to Trados, as the licence was only available while at school.

4.2. Perceived Satisfaction

Perceived satisfaction was evaluated essentially by the need to post-edit the machine-translated texts before handing them in. In general, students tended to be careful before submitting the text to the MT by reading the source text more than once (73.4%), trying to identify beforehand passages of the text that would need more revision (45%), and scanning the output for spelling mistakes, punctuation, etc. (35.9%), as shown in Table 7.

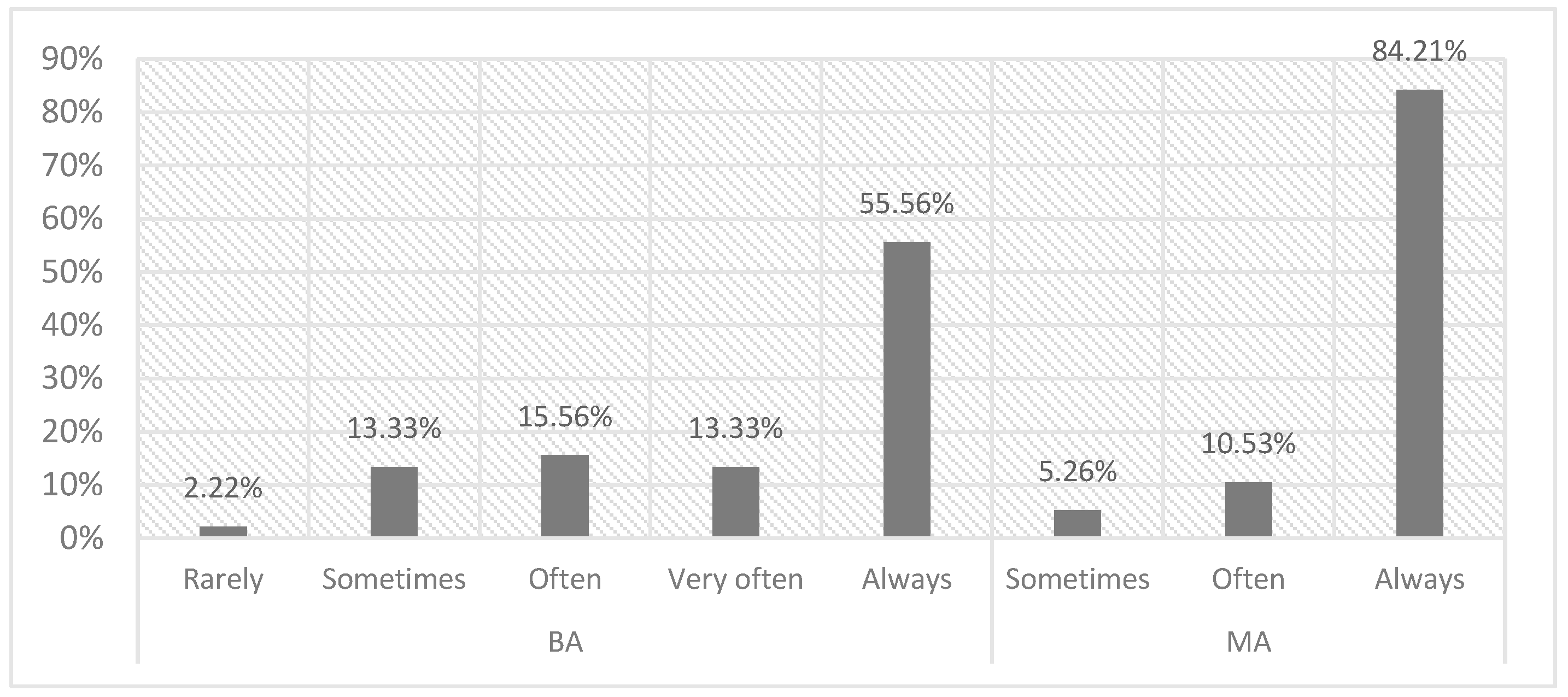

The majority of students claimed to post-edit the translated texts, as presented in Figure 1. The overall frequency tended to be higher for MA students, although there was no statistical significance (χ²(4) = 5.704; p = 0.222).

When asked about the reasons why they tended to post-edit or not, the students revealed that the need to improve fluency (12.5%) and to confirm the accuracy of the semantic information (12.5%) were the main drivers, particularly for BA students. Correcting terminology and verifying grammar, however, were also relevant aspects for both groups (Table 8).

As previously stated, PE was a frequent task, and only a negligible number of students indicated that the quality of MT texts had improved significantly, that the MT output was reliable, or that their linguistic difficulties prevented them from doing a better job than the tool.

4.3. Perceived Usefulness

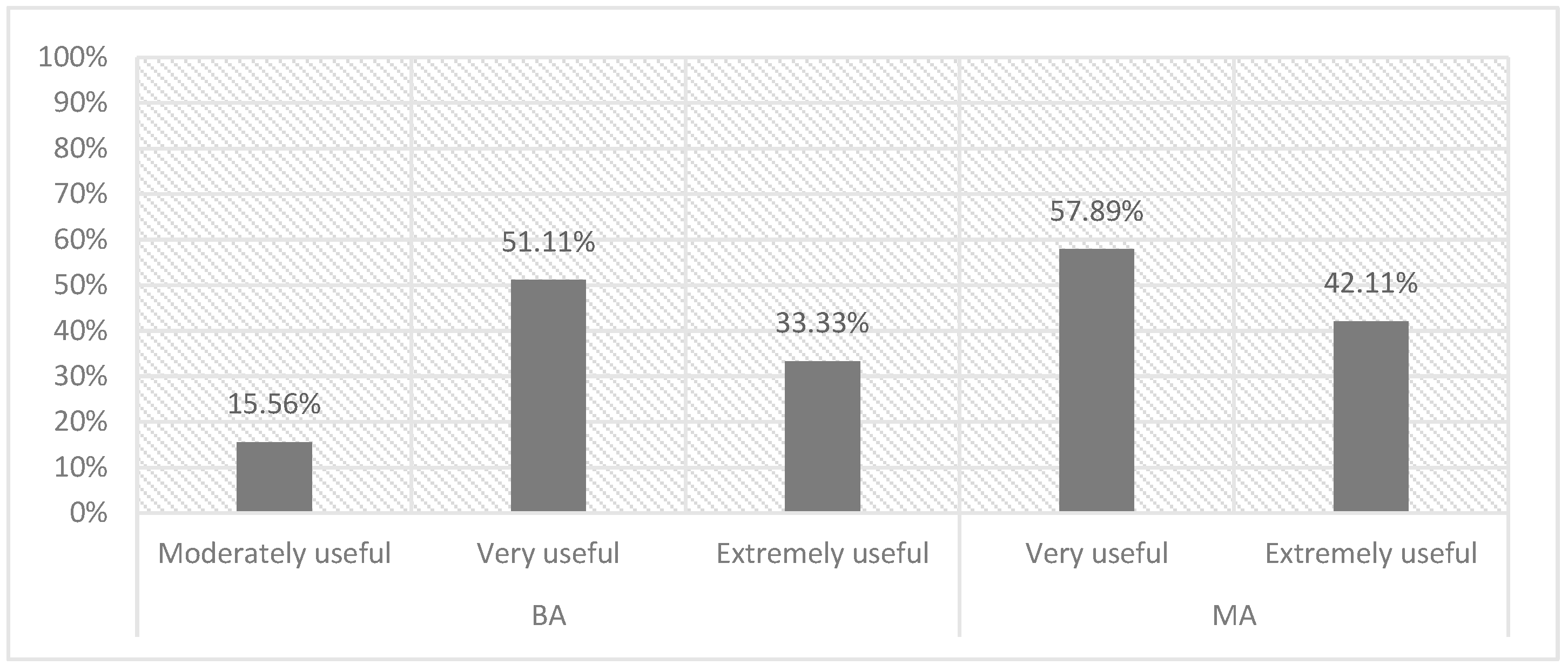

Students were also asked about the perceived usefulness of machine-generated translations, both in general and for different text types. In general (Figure 2), students tended to find machine-generated translations very or extremely useful. MA students revealed higher perceived usefulness, though without statistical significance (χ²(4) = 3.357; p = 0.187).

The main reasons why students believed that machine-generated translations are very or extremely useful were classified according to the categories presented in Table 9. Some students selected more than one option.

Regarding the use of machine-generated translations per text type, students tended to believe that it is more useful for technical (46.9%), scientific (21.9%), and non-creative texts (20.3%), although a relevant fraction of students (21.9%) had never thought about this issue, especially BA students (Table 10). The perceived usefulness of machine-generated translations was higher for non-creative texts (26.7%) among BA students and higher for technical (52.6%) and scientific (36.8%) texts among MA students.

BA students also identified other types of use in which machine-generated translations can be useful, such as individual words and plain texts.

As shown by the questionnaire conducted among translation students (BA and MA at ISCAP), students seemed to favour the use of MT in their translation exercises, focusing their work mostly on post-editing tasks rather than exploring other translation strategies and complementary resources.

In the next section, translator teaching and assessment is revisited considering the findings of this survey. With the goal of accommodating MT as a regular working resource in the classroom, more relevance is given to pre- and post-translation activities in order to identify and help develop each student’s proficiency and skills.

5. Proposed Teaching and Assessment Activities

Until not long ago, many translation teachers resisted the introduction of (at the time) new technologies such as computer-assisted translation (CAT) tools, translation memories, and terminology-management systems [23] on the grounds that those tools failed to show students’ actual command of both the target and source languages and hindered the development of their translation competence. Before that, in the 1990s, spelling and grammar checkers met with similar opposition.

Today, such efforts to keep technology out of the translation classroom certainly seem futile. As the market expects translation graduates to be conversant with a wide array of CAT tools, higher-education translation programmes have indeed incorporated them and include them as mandatory skills to be mastered by aspiring translators, in line with the areas of competence defined by the EMT competence framework, which itemise a number of areas of technology competence, including the command of corpus-based CAT and quality-assurance tools, among others [24]. Fighting students’ use of MT may well be another lost battle. As shown by the results of the questionnaire, students do use it for all types of translation assignments, even adopting it before mastering CAT tools. Moreover, they may be expected to rely still more heavily on machine-generated translations in the near future as the efficiency of NMT systems continues to grow. In this scenario, it is necessary to reconsider the teacher’s approach to instruction and assessment, as a traditional translation assignment can, for the most part, be performed by a machine in a couple of seconds. The prevalent learning-by-doing method must therefore include, rather than translation proper, a wide variety of activities both in and out of the classroom, as well as allow more room for learning by thinking.

It has long been acknowledged that mere translation assignments, i.e., asking students to translate a text, do not guarantee the acquisition of translation competence. In addition, as it does not provide enough information about the translation process followed by students to reach a particular result [25] (the strategies used, the choices made, the possible solutions considered and left out), student assessment based on translated texts fails to take into account the diverse areas of competence and skills making up translation competence. There are, in fact, a number of “indirect tasks”, understood as “complementary tasks that make it possible to assess some of the important skills involved in translation competence, which cannot be assessed by a simple text translation exercise” [26] (p. 239; our translation), currently used by translation teachers, such as pre-translation (explaining the source text, identifying translation problems, whether linguistic or extralinguistic, etc.) and post-translation (error detection, accounting for specific translation choices, explaining the documentation process followed, among others) activities.

The following activities are aimed at third-year students of the BA in Translation and Administrative Assistance and at first-year students of the MA in Specialised Translation and Interpreting, both at ISCAP, though they can be adapted to other translation programmes. Both groups of students attend mandatory course units dealing with computer-assisted translation tools and technical translation. Using texts with varying levels of complexity [5], depending on students’ expected expertise, indirect tasks carried out in the classroom focus on pre- and post-translation activities considered the most suitable for the development and assessment of translation competence, even when MT is used to translate the entire source text.

5.1. Pre-Translation Tasks

Pre-translation tasks go well beyond processing the source text to optimise MT output. In fact, as efficient pre-editing involves some degree of manipulation of the source text (such as correcting punctuation or simplifying syntactic structures), thorough understanding of the text becomes crucial to keeping its semantic information unchanged. As pointed out by Lonsdale [27], a number of translation mistakes derive not only from inadequate reformulation of the text in the target language but also from lack of understanding of the source text, which may be caused by insufficient pragmatic or extra-linguistic knowledge, or even by weak reading skills. This point still holds true today, as computers can translate large amounts of text in seconds, but so far they “do not understand a text the way humans can” [28] (p. 223), a fact at the root of most mistranslations found in machine-translated texts.

It is therefore agreed that, in order to produce “adequate translations of homogeneous quality” [28] (p. 223), thorough reading and analysis of the source text is essential to identify its linguistic and extralinguistic characteristics, particularly those that may pose a challenge for translation. Its importance was acknowledged by the questionnaire respondents, and pre-translation tasks may therefore involve a number of different activities to make students aware of those characteristics, such as intensive reading [29], summarisation, and gist translation [30], among others. Below, four different pre-translation tasks are described, as they are considered the most efficient for the translation classroom, i.e., explaining the source text, pre-editing, spotting cultural references, and identifying text-type characteristics in the source and target languages.

5.1.1. Explaining the Source Text

Less formal and time-consuming than summarising or gist translation, explaining the source text (ST) is a form of very loose paraphrasing in which students provide an oral account (in their mother tongue, which is usually the target language) of its main contents or arguments. This activity is based on Jay Lemke’s argument that “we need to be able to make sense of the text, to read it meaningfully […]. To comprehend it, we need to be able to paraphrase it, restate it in our own words, and translate its meanings into the more comfortable patterns of spoken language” [31] (p. 136). Although more than 70% of the respondents to the survey claimed to read the source text more than once (see Table 7), actual understanding of a text does not depend exclusively on the number of readings; one way to be sure is being able to explain it to others.

In particularly challenging texts, the explanation may be prompted by means of comprehension questions, as well as by giving students missing parts of the text to be inserted in the right places or by asking them to provide short titles for each paragraph. As students are usually acquainted with these types of exercises (they are widely used in foreign-language learning), they may work as ice breakers and lead to the actual purpose of the activity, i.e., informal oral paraphrasing of the text. In addition, this activity not only encourages the development of interpersonal skills through peer and group collaboration and assessment but also activates a number of areas of translation competence, as defined by the EMT’s competence framework.

5.1.2. Pre-Editing

As defined by Vieira [11] (p. 323), pre-editing “consists of ridding the source text of features that are known to pose a challenge for MT” in order to reduce the need for interventions in the post-editing stage. Pre-editing may therefore go from the correction of basic mistakes in the ST (such as spelling, punctuation, and grammar) to more complex issues, such as sentence length, syntactic structure, and the distribution of different parts of speech. As technical communication, however, has the essential goal of conveying objective, factual information in a clear, concise, and useful manner, syntactic and stylistic complexity tends to be low [32]. This is not the same as saying that technical texts are plain or devoid of linguistic identity [33], which is why the translation of these texts involves more than just dealing with terminology, even though novice translators usually identify the frequency of specialised terminology as one of the most challenging features of technical translation.

The use of translation memories and specialised glossaries (either provided by the client or built by translators themselves), as well as consultation with subject-matter specialists, makes up the usual “tips” pointed out by the industry to deal with specialised terminology (see, for instance, webpages such as Comtec (http://clearwordstranslations.com/language/es/overcome-challenges-technical-translations/, accessed on 5 April 2023) or STAR (https://www.star-ts.com/translation-faq/what-is-terminology/, accessed on 5 April 2023)). However, as technical and scientific knowledge and innovation expand (a new feature in a car, a new drug or treatment), new specialised lexical items are constantly being coined, and translators must be able to spot them in the ST, as well as to find or suggest equivalents in the target language. Though terminology extractors are available, whether integrated in CAT tools such as Wordbee or as free online tools (TerMine, topia.termextract, and others), the translator’s capacity to identify specialised terminology in an ST may be developed through the analysis of comparable corpora, composed of authentic texts produced under comparable situations in the target culture [34].

Able to provide “more abundant and reliable information to the translator than traditional reference tools, such as dictionaries and parallel texts” [35] (p. ix), comparable corpora offer more contextual information on the meaning and usage of a term or expression, making them useful for finding the most suitable translation equivalent. In addition, “they provide information about text and discourse structure, text-type conventions, etc., and [are] a source of data on translation strategies” [36] (p. 170), a characteristic that becomes particularly useful in Section 5.1.4. As the analysis of corpora is a time-consuming task, students may be introduced to this activity by having access to a limited number of comparable texts provided by the instructor, which they must contrast with the ST to be translated. Students are then expected not only to identify the specialised terminology present in the ST but also to suggest possible translation equivalents in the target language. The creation of a dynamic table located in a shared cloud folder makes it easier to retrieve those equivalents once they are validated at a later stage.

5.1.3. Spotting Cultural References

Although the cultural variability of technical texts is rarely high, or, at any rate, not high enough to make the ST unsuitable for MT [37], the translation of technical texts “is not confined solely to a linguistic microcosm of textual relations. Rather, it constitutes an act of intercultural communication, one whose prime objective is an explicit transfer of knowledge” [38] (p. 206). Technical texts may therefore contain a variety of cultural references, i.e., specific textually actualised lexical items [39] denoting the tangible or intangible manifestations of a particular community and its members.

There are several strategies for the translation of these lexical items, from cultural borrowing to the use of functional or cultural equivalents, as direct, word-for-word translation often results in mistranslations or even nonsense. Before any transfer can be attempted, however, cultural references must be spotted by the students, an ability that depends on their knowledge of the source culture, their backgrounds, and their subjective interpretation of the text [40]. Using that plurality of views to the advantage of the class, a collaborative activity was developed in which students are asked to identify possible cultural references in the ST and explain them to their classmates. This helps students learn to identify translation challenges in advance, which is particularly relevant considering that this practice was followed by fewer than half of the respondents (see Table 7). A dynamic table accessible to all of the students through a shared cloud folder is then created, in which they are asked to enter each cultural lexical item and a brief explanation or description in separate columns. For pedagogical purposes, cultural references can be loosely classified as belonging to the material, institutional, or social culture [41]. This classification can be used as a prompt, if necessary, by asking students to identify an item related to material culture (e.g., a dish) or to institutional culture. The dynamic table created by the students can be retrieved during the post-translation stage in order to discuss a suitable equivalent for an item that the machine has failed to account for.

5.1.4. Identifying Text-Type Characteristics in the Source and Target Languages

Due to its simplicity, we follow, for pedagogical purposes, Jody Byrne’s classification of “typical technical documents” into proposals, reports, instructions, and software documentation [33] (pp. 50–54), as it offers clear-cut boundaries between broad-gauged groups of technical texts. Depending on the language pair, text-type characteristics and norms may vary considerably, not only in their structure but also in their grammatical and syntactic features (use of verb tenses, preference for active or passive voice, prevalence of nouns over verbs, use of personal pronouns, to name but a few), texture (use of cohesive devices, such as sentence connectors), degree of formality, or even ideological aspects such as the use of gender-neutral language. Being aware of the norms for writing a particular technical text in the target language is crucial to producing an adequate translation.

Though more time-consuming than providing students with a checklist of characteristics to follow, asking them to make some generalisations from text analysis allows them to activate their powers of observation and inductive reasoning. The comparable texts used in Section 5.1.2, provided they belong to the same text type, may be used for this activity. More inexperienced students often need some initial prompts, such as drawing their attention to specific passages, or more direct instructions (asking them to look for some specific feature in the text, for example). At the end of the activity, a checklist is produced, which is validated and applied during the post-translation tasks.

5.2. Post-Translation Tasks

Students in the BA in Translation and Administrative Assistance and the MA in Specialised Translation and Interpreting acquire basic post-editing competence and skills during course units on computer-assisted translation tools, as “much of the preliminary content associated with post-editing is technical and focused on interaction with algorithmic machines” [42] (p. 196). Consequently, post-translation tasks begin after the raw MT output has undergone light post-editing [43], i.e., the correction of grammar and spelling mistakes and of terminological errors, in order to obtain an intelligible translated text that accurately represents the content of the source text.

The following activities were designed for students to obtain translated texts of publishable quality as defined by TAUS [44], i.e., similar or equal to a text produced by a human translator. The main steps identified comprise cloze exercises, paraphrasing, monolingual checks, and comparing alternative versions, though, as explained below, they may be reduced as the students’ level of expertise grows.

5.2.1. Cloze Exercises

A canonical activity in foreign-language acquisition, the cloze, or fill-in-the-blank exercise, is a type of exercise wherein a passage or sentence is presented with certain words or phrases removed and learners are tasked with filling in the blanks. Due to its versatility and the role it plays in vocabulary building and comprehension practice, the cloze has long been used in translator and interpreter training [45] to assess the student’s mastery in the source and/or target language. The cloze, however, can also be used to develop students’ awareness of alternative versions for a target text, as well as their paraphrasing abilities.

By presenting one or more versions of the same translated text, in addition to the machine-translation output, the cloze may prompt students to use voluntary shifts [46] and modulations [47] to complete the texts, such as changes in word class and sentence structure, active/passive voice, collocations, and others. The cloze is also useful as a preliminary activity leading to the next step, paraphrasing, though it may be skipped at more advanced levels, namely, students pursuing a master’s degree. As MT has reduced efficiency at handling specialised language (unless the system’s database is properly stocked with the necessary vocabulary), fill-in-the-blank activities may also be used to call students’ attention to terminological issues in technical and scientific fields, encouraging them to conduct additional searches in parallel and comparable texts and other sources.
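For instructors who prepare such exercises from post-edited MT output, the gapping step can be automated. The following is only a minimal sketch under stated assumptions: the function name `make_cloze`, the blank marker, and the sample sentence are hypothetical and are not part of the activity as designed in this study; the list of items to remove (for instance, specialised terms) is assumed to be chosen by the instructor.

```python
import re


def make_cloze(text, targets, blank="_____"):
    """Replace whole-word occurrences of the target items with numbered blanks.

    Returns the gapped text and the answer key in order of appearance.
    `targets` is an instructor-supplied list of words or phrases (hypothetical input).
    """
    if not targets:
        return text, []
    # Longest targets first, so multi-word terms take priority over their parts.
    ordered = sorted(targets, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in ordered) + r")\b",
        flags=re.IGNORECASE,
    )
    answers = []

    def gap(match):
        # Record the removed item, then emit a numbered blank in its place.
        answers.append(match.group(0))
        return f"({len(answers)}) {blank}"

    return pattern.sub(gap, text), answers


if __name__ == "__main__":
    sample = "Tighten the bolt before the torque wrench is removed. The bolt must not be reused."
    gapped, key = make_cloze(sample, ["bolt", "torque wrench"])
    print(gapped)
    print(key)
```

The answer key is kept separate from the gapped text so that students can first propose their own solutions and only then compare them with the removed items or with alternative versions.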

5.2.2. Paraphrasing

Considered a form of intralingual translation [48], paraphrasing may be defined as rewording without loss of meaning and is one of the areas of translation competence listed by the EMT competence framework [24]. In the context of translation, paraphrasing requires not only understanding, analysing, and evaluating the information found in the source material (now the target text) but also a careful selection and organisation of the information. The survey results also revealed that students tended to use MT for that purpose, since some of the responses classified as “MT as a method of providing other translation choices” (see Table 9) actually referred to the fact that MT is able to provide them with alternative translations to the ones they have. However, that is not the proper method for honing paraphrase competence, which is why it is a type of activity that requires more formal training.

There are numerous downloadable and online paraphrasing tools that students may and do use, such as InstaText, Quillbot, Rephraser, Wordtune, and a long list of others, as well as paraphrasing apps (like PrepostSEO or Enzipe). NMT systems are also capable of paraphrase generation, as pointed out by the questionnaire respondents. Though they prove useful, students’ ability to paraphrase without electronic aids should be developed. In this activity, less experienced learners are given a variety of prompts as they progress from sentence to paragraph level. These prompts may be either positive or negative, i.e., elements that they must use (the beginning of a sentence/paragraph, a word class, merging or splitting sentences/paragraphs, etc.) or avoid (linking verbs, subordinate clauses, gender-marked words, among other prompts). If carried out individually, this activity leads to the production of fairly different versions, which are compared after the monolingual check.

5.2.3. Monolingual Check

Whereas light post-editing is performed at the segment level, with source and target texts presented side by side in the CAT tool, the monolingual check (assuming that the semantic content of the ST has been preserved) is carried out on the entire target text, as taking into account the text as a whole makes it possible to assess its adequacy to the norms and conventions applicable to that particular text type in the target language. The TT is therefore examined according to the checklist produced in Section 5.1.4, making the necessary adaptations in the text structure, as well as in the use of cohesion devices (addition or elimination of sentence connectors and correction in the frequency of repetitions, for example). The necessary degree of formality is also analysed, in addition to possible grammatical and syntactic adjustments.

The dynamic table created in Section 5.1.3 is now retrieved, and the most suitable strategies are discussed and compared in order to deal with the cultural references in the ST, from glossing or replacing them with cultural equivalents to omitting them altogether. It is important that students realise that the choice of strategy is text-specific; for this purpose, a set of alternative translation strategies may also be discussed by changing the target audience or by introducing a different type of text containing the same reference in the source language.

In addition to improving the adequacy of the target text, the monolingual check raises learners’ awareness of the variety of requirements for a good translation, promotes “advanced levels of target language attainment” [49] (p. 191), and encourages students to handle the target text as an autonomous entity that has to work and be effective in the target language.

5.2.4. Comparing Alternative Versions

As a result of the activities carried out in Section 5.2.2 and Section 5.2.3, all students have, at this point, produced their own individual target texts, which they hand in through the Moodle platform. After the files have been anonymised, they are all made available to the class, who examine them, locating and describing the differences between them [3]. In the course of the activity, the author of the target text under examination is invited to identify him/herself, describe the strategies followed, and account for their choices. The purpose of this activity is not to choose one version over the others but rather to encourage the reconstruction of the entire process leading to each target text.
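Locating the differences between two anonymised versions can be supported by a simple word-level comparison before the class discussion. The sketch below uses Python’s standard `difflib` module; the function name `describe_differences` and the sample sentences are hypothetical, and the output is meant only as a starting point for the students’ own description of the differences, not as a substitute for it.

```python
import difflib


def describe_differences(version_a, version_b):
    """List word-level differences between two target texts.

    Each item is (operation, words_in_a, words_in_b), where the operation is
    'replace', 'delete', or 'insert' as reported by difflib.
    """
    a_words = version_a.split()
    b_words = version_b.split()
    # autojunk=False keeps frequent words (e.g. articles) in the comparison.
    matcher = difflib.SequenceMatcher(a=a_words, b=b_words, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        changes.append((tag, " ".join(a_words[i1:i2]), " ".join(b_words[j1:j2])))
    return changes


if __name__ == "__main__":
    first = "Press the red button to start the pump"
    second = "Press the green button to start the pump"
    for change in describe_differences(first, second):
        print(change)
```

A word-level comparison only shows where two versions diverge; explaining why they diverge (a shift in word class, a change of voice, a different collocation) remains the task of the students and of the author of each version.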

Even at the risk of fostering over-editing, thorough post-translation activities aim at developing and consolidating core translation competence, such as good language and writing skills, analytic and critical skills, and documentation skills, among others. As concluded by Yamada [49] (p. 102), “NMT+PE requires almost the same competence as ordinary human translation. Therefore, translation training is necessary for students to be able to shift their attention to the right problems.” On the other hand, if it is true that “students or novice translators might tend to accept errors (calques) because they have become used to MT […], or they might accept NMT proposals because the text reads fluently, even where there are mistranslations” [50] (p. 226), those core areas of competence prove to be as necessary as they have always been.

6. Conclusions

This study aimed to provide insight into the current use of NMT among technical-translation students and to put forward teaching and assessment activities for translation classes that can integrate the current NMT use.

Although the size of the sample does not allow the results to be generalised, they suggest a growing preference for the use of machine translation. The questionnaire completed by ISCAP’s translation students confirmed their deep reliance on MT software to complete translation tasks. Students are eager users of MT, particularly since the development of NMT, and rely heavily on it to perform translation assignments, a fact that poses challenges for translation teaching and assessment.

Quality translator teaching and assessment is important for learners to become full-fledged translators able to succeed as language-service providers. Improving attention to detail, identifying one’s own strengths and weaknesses, and providing targeted feedback to support one’s learning set students on the right path to achieving that goal.

In the age of NMT, teachers therefore need to implement other strategies that go beyond mere translation exercises that can be performed in a matter of seconds by using machine translation. Those strategies will hopefully contribute to gaining more insight into students’ skills and provide them with more meaningful strategies that help them to properly reflect on their translation tasks.

In this context, this study put forward a sequence of activities intended to fill a gap in the literature, related to translation students’ training and assessment in the NMT era. These activities are also expected to open the debate about the best practices required to integrate the most recent technological advancements into the translation classroom through the adjustment of training and assessment strategies in order to encourage students’ acquisition and development of relevant skills.

This set of activities needs to be implemented and tested in the coming course years to confirm the efficacy of secondary tasks alone in the development of translation skills in contrast with more traditional translation assignments. By focusing on different pre- and post-translation tasks, such as those presented in this article, future research will hopefully reveal students’ strengths and weaknesses, as well as provide valuable insights into learners’ translation proficiency and their ability to understand and convey complex ideas, such as those usually contained in technical texts.

Author Contributions

Conceptualization, C.T., L.T. and S.R.; methodology, C.T. and L.O.; formal analysis, C.T., L.T. and L.O.; investigation, C.T., L.T., S.R. and L.O.; resources, C.T., L.T., S.R. and L.O.; data curation, C.T. and L.T.; writing—original draft preparation, C.T. and L.T.; writing—review and editing, C.T., L.T., L.O. and S.R.; visualization, L.O.; supervision, C.T.; project administration, C.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research has received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Fact.MR End-Use Industry – Global Market Insights 2022 to 2032. Available online: https://www.factmr.com/report/language-services-market (accessed on 2 February 2023).

2. Liu, K.; Kwok, H.L.; Liu, J.; Cheung, A.K.F. Sustainability and Influence of Machine Translation: Perceptions and Attitudes of Translation Instructors and Learners in Hong Kong. Sustainability 2022, 14, 6399.

3. Mordor Intelligence Machine Translation Market Analysis – Industry Report – Trends, Size & Share. Available online: https://www.mordorintelligence.com/industry-reports/machine-translation-market (accessed on 4 February 2023).

4. Pringle, A.S.; O’Keefe, S. Technical Writing 101: A Real-World Guide to Planning and Writing Technical Documentation, 3rd ed.; Scriptorium Pub. Services: Research Triangle Park, NC, USA, 2009; ISBN 978-0-9704733-6-3.

5. Cabré, M.T.; da Cunha, I.; SanJuan, E.; Torres-Moreno, J.-M.; Vivaldi, J. Automatic Specialized vs. Non-Specialized Text Differentiation: The Usability of Grammatical Features in a Latin Multilingual Context. In Languages for Specific Purposes in the Digital Era; Bárcena, E., Read, T., Arús, J., Eds.; Educational Linguistics; Springer International Publishing: Cham, Switzerland, 2014; Volume 19, pp. 223–241. ISBN 978-3-319-02221-5.

6. Bowker, L.; Ciro, J.B. Machine Translation and Global Research: Towards Improved Machine Translation Literacy in the Scholarly Community, 1st ed.; Emerald Publishing: Bingley, UK, 2019; ISBN 978-1-78756-722-1.

7. Lee, S.-M. The Effectiveness of Machine Translation in Foreign Language Education: A Systematic Review and Meta-Analysis. Comput. Assist. Lang. Learn. 2023, 36, 103–125.

8. Castilho, S.; Moorkens, J.; Gaspari, F.; Calixto, I.; Tinsley, J.; Way, A. Is Neural Machine Translation the New State of the Art? Prague Bull. Math. Linguist. 2017, 108, 109–120.

9. Wu, Y.; Schuster, M.; Chen, Z.; Le, Q.V.; Norouzi, M.; Macherey, W.; Krikun, M.; Cao, Y.; Gao, Q.; Macherey, K.; et al. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. arXiv 2016, arXiv:1609.08144.

10. Jooste, W.; Haque, R.; Way, A. Knowledge Distillation: A Method for Making Neural Machine Translation More Efficient. Information 2022, 13, 88.

11. Nunes Vieira, L. Post-Editing of Machine Translation. In The Routledge Handbook of Translation and Technology; O’Hagan, M., Ed.; Routledge: Abingdon, UK, 2019; pp. 319–335. ISBN 978-1-138-23284-6.

12. Carmo, F.; Moorkens, J. Differentiating Editing, Post-Editing and Revision. In Translation Revision and Post-Editing; Koponen, M., Mossop, B., Robert, I., Scocchera, G., Eds.; Routledge: London, UK, 2020; pp. 35–49. ISBN 978-1-00-309696-2.

13. Vardaro, J.; Schaeffer, M.; Hansen-Schirra, S. Translation Quality and Error Recognition in Professional Neural Machine Translation Post-Editing. Informatics 2019, 6, 41.

14. Gaspari, F.; Almaghout, H.; Doherty, S. A Survey of Machine Translation Competences: Insights for Translation Technology Educators and Practitioners. Perspectives 2015, 23, 333–358.

15. Farrell, M. Do Translators Use Machine Translation and If so, How? Results of a Survey Held among Professional Translators. 2022. Available online: https://www.researchgate.net/profile/Michael-Farrell-34/publication/368363793_Do_translators_use_machine_translation_and_if_so_how_Results_of_a_survey_held_among_professional_translators/links/642c1ee74e83cd0e2f8dc52d/Do-translators-use-machine-translation-and-if-so-how-Results-of-a-survey-held-among-professional-translators.pdf (accessed on 1 May 2023).

16. CIOL Insights: Building Bridges|CIOL (Chartered Institute of Linguists). Available online: https://www.ciol.org.uk/ciol-insights-building-bridges-0 (accessed on 10 March 2023).

17. Deng, X.; Yu, Z. A Systematic Review of Machine-Translation-Assisted Language Learning for Sustainable Education. Sustainability 2022, 14, 7598.

18. Kelly, R.; Hou, H. Empowering Learners of English as an Additional Language: Translanguaging with Machine Translation. Lang. Educ. 2022, 36, 544–559.

19. Beiler, I.R.; Dewilde, J. Translation as Translingual Writing Practice in English as an Additional Language. Mod. Lang. J. 2020, 104, 533–549.

20. Etikan, I. Comparison of Convenience Sampling and Purposive Sampling. AJTAS 2016, 5, 1.

21. Larson, R.B. Controlling Social Desirability Bias. Int. J. Mark. Res. 2019, 61, 534–547.

22. Eisele, G.; Vachon, H.; Lafit, G.; Kuppens, P.; Houben, M.; Myin-Germeys, I.; Viechtbauer, W. The Effects of Sampling Frequency and Questionnaire Length on Perceived Burden, Compliance, and Careless Responding in Experience Sampling Data in a Student Population. Assessment 2022, 29, 136–151.

23. Bowker, L. Computer-Aided Translation Technology: A Practical Introduction; University of Ottawa Press: Ottawa, ON, Canada, 2012; ISBN 978-0-7766-1567-7.

24. European Masters in Translation EMT Competence Framework. Available online: https://commission.europa.eu/news/updated-version-emt-competence-framework-now-available-2022-10-21_en (accessed on 10 April 2023).

25. Galán-Mañas, A.; Hurtado Albir, A. Competence Assessment Procedures in Translator Training. Interpret. Transl. Train. 2015, 9, 63–82.

26. Martínez, N. Évaluation et Didactique de la Traduction le cas de la Traduction dans la Langue Étrangère; Universitat Autònoma de Barcelona: Bellaterra, Spain, 2001; ISBN 978-84-699-7850-4.

27. Beeby Lonsdale, A. Teaching Translation from Spanish to English: Worlds beyond Words; Didactics of translation series; University of Ottawa Press: Ottawa, ON, Canada, 1996; ISBN 978-0-7766-0399-5.

28. Schmitt, P.A. Translation 4.0 – Evolution, Revolution, Innovation or Disruption? Leb. Sprachen 2019, 64, 193–229.

29. Nuttall, C.E. Teaching Reading Skills in a Foreign Language; Macmillan books for teachers; 12. print.; Hueber: Ismaning, Germany, 2014; ISBN 978-1-4050-8005-7.

30. Ihnatenko, V. Main Principles of Teaching Gist and Abstract Translation. In Proceedings of the Materials of the XVI International Scientific and Practical Conference, Sheffield, UK, 28 February–7 March 2020; Science and Education Ltd.: Sheffield, UK, 2020; Volume 6, ISBN 978-966-8736-05-6.

31. Lemke, J.L. Making Text Talk. Theory Pract. 1989, 28, 136–141.

32. Rus, D. Technical Communication as Strategic Communication. Characteristics of the English Technical Discourse. Procedia Technol. 2014, 12, 654–658.

33. Byrne, J. Technical Translation: Usability Strategies for Translating Technical Documentation; Springer: Dordrecht, The Netherlands, 2006; ISBN 978-1-4020-4652-0.

34. Snell-Hornby, M. Translation Studies: An Integrated Approach; John Benjamins Publishing Company: Amsterdam, The Netherlands, 1988; ISBN 978-90-272-2056-1.

35. Aston, G. Foreword. In Benjamins Translation Library; Beeby, A., Rodríguez Inés, P., Sánchez-Gijón, P., Eds.; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2009; Volume 82, pp. ix–x. ISBN 978-90-272-2426-2.

36. Olohan, M. Introducing Corpora in Translation Studies; Routledge: Abingdon, UK, 2004; ISBN 978-1-134-49221-3.

37. King, P. Small and Medium-Sized Enterprise (SME) Translation Service Provider as Technology User: Translation in New Zealand. In The Routledge Handbook of Translation and Technology; Routledge: Abingdon, UK, 2019; ISBN 978-1-315-31125-8.

38. Folaron, D. Technology, Technical Translation and Localization. In The Routledge Handbook of Translation and Technology; Routledge: Abingdon, UK, 2019; ISBN 978-1-315-31125-8.

39. Aixela, J.F. Culture Specific Items in Translation. In Translation, Power, Subversion; Vidal, M.C.A., Ed.; Topics in translation; Multilingual Matters: Clevedon, UK; Philadelphia, PA, USA, 1996; pp. 52–78. ISBN 978-1-85359-351-2.

40. González Davies, M.; Scott-Tennent, C. A Problem-Solving and Student-Centred Approach to the Translation of Cultural References. Meta 2005, 50, 160–179.

41. Tallone, L. (Ed.) Do Texto ao Contexto – Novas Propostas Pedagógicas para a Tradução Técnica, em Quatro Línguas; De Facto Editores: Santo Tirso, Portugal, 2020; ISBN 978-989-8557-74-2.

42. Konttinen, K.; Salmi, L.; Koponen, M. Revision and Post-Editing Competences in Translator Education. In Translation Revision and Post-Editing; Koponen, M., Mossop, B., Robert, I.S., Scocchera, G., Eds.; Routledge: London, UK; New York, NY, USA, 2020; pp. 187–202. ISBN 978-1-00-309696-2.

43. Hu, K.; Cadwell, P. A Comparative Study of Post-Editing Guidelines. In Proceedings of the 19th Annual Conference of the European Association for Machine Translation, Riga, Latvia, 30 May–1 June 2016; pp. 34206–34353.

44. Massardo, I.; van der Meer, J.; O’Brien, S.; Hollowood, F.; Aranberri, N.; Drescher, K. MT Post-Editing Guidelines; TAUS Signature Editions: Amsterdam, The Netherlands, 2016.

45. Lambert, S. The Cloze Technique as A Pedagogical Tool for the Training of Translators and Interpreters. Target 1992, 4, 223–236.

46. Catford, J.C. A Linguistic Theory of Translation: An Essay in Applied Linguistics, 5th ed.; Language and language learning; Oxford Univ. Press: Oxford, UK, 1978; ISBN 978-0-19-437018-9.

47. Vinay, J.-P.; Darbelnet, J. Stylistique Comparée du Français et de l’Anglais: Méthode de Traduction; Bibliothèque de stylistique comparée; Nouvelle éd. revue et corr.; Didier: Paris, France, 1977; ISBN 978-0-7750-0469-4.

48. Brower, R.A. (Ed.) Roman Jakobson on Linguistic Aspects of Translation. In On Translation; Harvard University Press: Harvard, MA, USA, 1959; pp. 232–239. ISBN 978-0-674-73161-5.

49. Yamada, M. Language Learners and Non-Professional Translators as Users. In The Routledge Handbook of Translation and Technology; Routledge: Abingdon, UK, 2019; ISBN 978-1-315-31125-8.

50. Guerberof Arenas, A.; Moorkens, J. Machine Translation and Post-Editing Training as Part of a Master’s Programme. J. Spec. Transl. 2019, 31, 217–238.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).