1. Introduction

Judging contests train students to conduct logical analyses and enable them to develop independent thinking and apply it to the materials being judged [1]. Soil judging is a practical, in-person, hands-on educational activity organized yearly at various levels [2,3]. Future Farmers of America (FFA) and 4-H (America’s largest youth development organization) conduct programs for high-school-level soil judging, while the North American Colleges and Teachers of Agriculture (NACTA) association organizes a college-level contest. Likewise, the Soil Science Society of America (SSSA) and the American Society of Agronomy (ASA) organize separate college-level contests at the regional (fall) and national (spring) levels [4]. Under the SSSA-ASA format, US states are divided into seven regions (Regions I to VII). Each region hosts a regional competition in the fall (the number of participating teams varies by region) to determine which teams qualify for the national contest held each spring [4]. Soil judging contests such as these provide platforms for training undergraduate students to identify, describe, and interpret the physical, chemical, hydrological, and biological characteristics of soils and to understand how soils are classified.

Participating in experiential learning activities such as soil judging helps students succeed in multiple ways. Studies have documented several benefits of hands-on and co-operative learning activities like soil judging contests. For example, soil judging contests motivate students’ learning by having them work both independently and collaboratively as a team, promote student engagement through coach–student interactions, and help students explore, describe, and interpret soils in situ [2,5,6]. Rees and Johnson (2020) [4] reported that in-person soil judging contests help students understand location-specific soils and develop positive attitudes toward soil science. In addition, soil judging contests increase students’ critical thinking, confidence, and interpersonal and problem-solving skills [3,4,5], each of which is essential for pursuing soil-related careers [7]. Further, the team judging portion of the contest and the opportunity to interact with students and coaches from other universities can create a co-operative learning environment that helps students learn and solve problems in real time by working with their peers, improves their communication skills, and prepares them to work as team members [8]. In essence, soil judging contests allow students to learn about the various soil types in a given region and to interact with peers from their own university and other participating schools [3,5,6]. The framework of the SSSA-ASA regional and national contests is the same, but the format of individual soil judging contests may differ. For example, in the fall of 2019, Region IV organized an intensive week-long in-person soil judging contest with multiple days of field practice and a final day of competition at the end of the week. In contrast, Region VII (constrained by limited resources, long-distance travel, and potentially very remote contest sites) used virtual contests in which the physical soil samples used for competition were mailed to participating teams.

Researchers have evaluated soil judging competition formats such as these over time and made recommendations to improve them. For in-person competitions in the field, the authors of [9] recommended providing many horizons and depths during soil judging and including more learning experiences alongside the contest to improve the students’ learning experience. Cooper [5] tested group judging, which was implemented nationally in 2003 [10]. He reported that 96% of students wanted to continue group judging, which has since become one of the sections students enjoy most in current competition formats. The authors of [6] indicated that soil judging concepts should be modified to expose students to, and teach, observational, descriptive, and critical thinking skills that they can apply to real-world experiences. Likewise, [6] reported that 70% of participating students agreed or strongly agreed that jumble judging (in which students were randomly assigned to teams containing members from different universities) should be part of future contests, and 54% of students cited jumble judging among the best parts of the contest. Major challenges of virtual learning can include the inability to conduct traditional laboratory exercises and field trips, low student engagement, and reduced instructor interaction [11,12]. However, others have reported that virtual laboratories and field exercises can be as effective as traditional lab exercises and demonstrations [12,13,14]. More recently, evaluations of virtual soil judging contests indicate that this format can increase the diversity of soils that student competitors see and improve overall accessibility for students [15]. Furthermore, virtual soil judging contests and materials can be adopted by smaller universities, or by universities that lack traditional soil- or agriculture-specific departments, to provide interested students with the training and educational resources needed to prepare for soil judging contests. In this way, new approaches to soil judging contests can expand the reach of soil science education and improve the discipline’s ability to prepare a science-trained workforce.

In 2020, the National Collegiate Soils Contest (NCSC) canceled the national soil judging contest scheduled at Ohio State University due to the COVID-19 pandemic. This cancellation forced many regions to cancel, reschedule, or redesign their regular soil judging contests for 2020. These decisions were critical for student learning and development, given that the regional contest is often a student’s first contest [10]; cancellations likely delayed students’ learning because they could not apply and test their knowledge of soils in situ. Therefore, in response to the broad-scale shift to remote learning prompted by the COVID-19 pandemic, we developed a virtual soil judging contest using previously available soil profile data for soils in SSSA-ASA Region IV to keep students engaged in soil judging and to continue their learning and engagement with soil science. The objectives of this study were (i) to quantify students’ and coaches’ perspectives on virtual soil judging contests and (ii) to determine whether a virtual soil judging contest can be a viable alternative to in-person contests. The data were collected from the students and coaches who participated in the virtual regional (Region IV) contest.

2. Materials and Methods

The SSSA-ASA Region IV competition includes participants from the states of Texas, Oklahoma, Arkansas, Louisiana, and Mississippi. For the 2020 virtual soil judging contest, six universities agreed to participate (University of Arkansas, Texas A&M-College Station, Texas A&M-Kingsville, West Texas A&M University, Texas Tech University, and Tarleton State University).

2.1. Contest Preparation

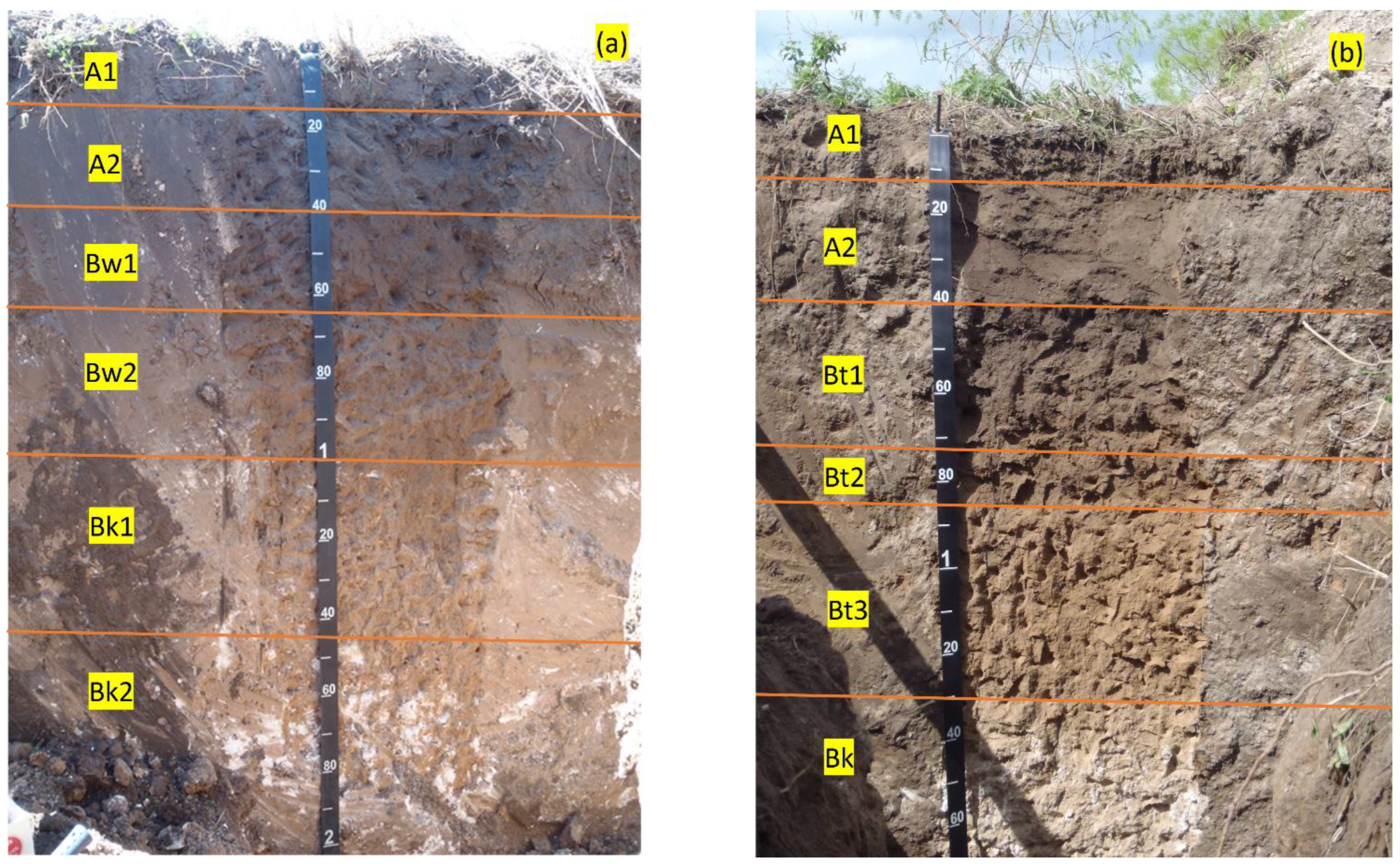

Soil profile pictures used in the contest were obtained from Natural Resources Conservation Service (NRCS) archives and were provided by Gary Harris (NRCS soil scientist). For the competition, we used a total of 15 soil profile pictures representing typical South Texas soils. Of these, 10 were used for practice, consistent with the region’s historical in-person contest, and the remaining five were used for the official contest.

The soil profile pictures were compared with the corresponding United States Department of Agriculture (USDA) National Soil Information System (NASIS) pedon descriptions to describe soil morphological, physical, and chemical characteristics and to develop the keys for the competition. The NASIS information was used as a reference or baseline from which to develop the soil profile morphological characteristics used for the contest (https://nasis.sc.egov.usda.gov/NasisReportsWebSite/limsreport.aspx?report_name=WEB-Masterlist, accessed on 10 September 2019). For example, soil horizon depth, soil color, lower boundary, and boundary distinctness were modified based on the soil profile pictures rather than copied directly from the NASIS pedon description. Several USDA-NRCS soil scientists served as independent reviewers who compared and revised the modified morphological characteristics. Likewise, for soil texture and soil chemical properties, such as soil pH, organic carbon, base saturation, sodium adsorption ratio (SAR), and calcium carbonate equivalent (CCE), we used the National Cooperative Soil Survey (NCSS) primary soil characterization database (https://ncsslabdatamart.sc.egov.usda.gov/, accessed on 12 September 2019). Actual landscape pictures were available for some soil profiles. When landscape pictures were unavailable, we used Google Maps to assess the landscape of the 10 soil profiles used in the virtual practice round.

Practice materials were sent to participating universities two weeks before the competition to ensure adequate team preparation time, so most students had two weeks to review them. A coaches meeting was held via Zoom® to clarify any questions about the practice materials. Two representative soil profile pictures used in the virtual contest are provided (Figure 1a,b).

Because students could not describe morphology without physical samples, we modified the typical face-to-face scorecard and provided students with the minimum soil morphological and site information (e.g., percent sand and silt, soil color, structure, slope gradient, hillslope profile, and aerial landscape pictures) needed to complete the soil interpretation and soil taxonomy sections. A sample scorecard developed for the event, which could serve as a reference for similar virtual contests in the future, is provided (Figure 2).

The remote soil judging competition was hosted on Friday, 30 October 2020, by Texas A&M University-Kingsville. We held a 90 min coaches meeting on Wednesday, 27 October, to discuss the logistics of the contest. On competition day, we used five soil profile pictures for the contest: four for individual judging and one for team judging, consistent with a face-to-face competition. The competition materials were emailed to all coaches two hours before the competition to allow enough time to print and distribute them to student competitors. We then connected coaches and participating students on a video call via Zoom. Students were given 45 min to complete each soil pit, consistent with an in-person contest. The coaches scanned and returned their students’ scorecards immediately after students completed each virtual soil profile. Coaches from one university graded the scorecards of another university’s students so that no coach graded their own students. A coach from a non-participating university helped verify the final scorecards. The results were announced on 6 November 2020 via a Zoom meeting.

2.2. Data Acquisition and Analysis

Post-contest survey questions were designed to assess both student learning and the coaches’ experiences in a virtual soil judging contest. The post-contest student survey contained 15 questions addressing the contest’s quality and format, students’ self-evaluation of their understanding after the contest, and the feasibility of virtual contests in the future (Table 1 and Table 2). The survey for coaches included 16 questions addressing the quality of the contest, their students’ ability to apply their knowledge, the student learning experience, the coaches’ preparation for the virtual contest compared with past face-to-face contests, and time (Table 3 and Table 4). The surveys were distributed electronically via SurveyMonkey™ from 30 October to 15 November 2020. All responses were evaluated on a five-point Likert scale [16]: strongly disagree (1), disagree (2), neither agree nor disagree (3), agree (4), and strongly agree (5). Sample sizes of n = 31 for students and n = 6 for coaches were obtained, representing response rates of 86% and 100%, respectively.

We calculated the mean, mode, and standard deviation (SD) of the collected responses. We believe that reporting the mode and SD adequately characterizes the distribution of responses. The range (the difference between the highest and lowest values) is less informative on a bounded 1-to-5 scale: a single outlying respondent can produce a large range, whereas such a response has a smaller effect on the SD and generally no effect on the mode.
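To make the reasoning about summary statistics concrete, the following Python sketch computes the mean, mode, SD, and range for one hypothetical Likert item, with and without a single outlying response. The response values and the function name `summarize_likert` are illustrative assumptions rather than the study’s data, and the use of the sample (n − 1) SD is likewise an assumption, since the choice of SD formula is not stated above.

```python
import statistics


def summarize_likert(responses):
    """Return mean, mode, sample SD, and range for 1-5 Likert responses."""
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("Likert responses must be integers from 1 to 5")
    return {
        "mean": statistics.mean(responses),
        "mode": statistics.mode(responses),  # most frequent response
        "sd": statistics.stdev(responses),   # sample SD (n - 1 denominator)
        "range": max(responses) - min(responses),
    }


# Hypothetical responses to one question from 10 respondents (not study data).
typical = [4, 4, 5, 4, 4, 5, 4, 3, 4, 5]
# Same responses, except the last respondent answered "strongly disagree" (1).
with_outlier = typical[:-1] + [1]

for label, data in (("typical", typical), ("with outlier", with_outlier)):
    s = summarize_likert(data)
    print(f"{label}: mean={s['mean']:.2f} mode={s['mode']} "
          f"sd={s['sd']:.2f} range={s['range']}")
```

With these hypothetical values, the script prints mean=4.20, mode=4, sd=0.63, range=2 for the typical set and mean=3.80, mode=4, sd=1.14, range=4 with the outlier: the mode is unchanged, while the range jumps to 4, the largest value possible on a 1-to-5 scale.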

4. Discussion

In this study, we assessed the potential, feasibility, and benefits of virtual soil judging contests during the COVID-19 pandemic using a survey administered to both students and coaches. Because of the high response rates (86% of students and 100% of coaches), we were able to complete statistical analyses of the surveys, but the small sample sizes (n = 31 and n = 6) may limit the interpretation of the results and their extrapolation to other regions or soil judging contexts. Based on the survey responses, Region IV students gained valuable soil judging experience. They were satisfied with the contest’s quality, clarity of instruction, format, pre-event communications, and accuracy in measuring their actual soil judging knowledge and skills (Table 3). Interestingly, students enjoyed the flexibility of the virtual contest compared with the traditional in-person contest, which is typically intense and lasts a whole week (Table 4). Web-based, online, and virtual learning opportunities have been shown to provide desired flexibility to students [17,18], suggesting that virtual learning platforms will likely continue to grow even after the COVID-19 pandemic. Thus, the virtual soil judging contest used in this study has the potential to continue in some form in the future (e.g., providing additional training, judging, and contest interpretation experiences prior to in-person regional and national contests).

However, the virtual format posed challenges for testing students’ skills. For example, soil morphological characteristics, such as soil texture, color, structure, and effervescence, and site characteristics, such as slope gradient, were difficult to describe in the virtual contest, so we provided this information to the students during the contest. Owen et al. [3] also indicated that virtual soil judging contests did not allow students to gain significant learning experience in describing and identifying soil texture and soil structure or in recognizing indicators of high water tables. Further, students mentioned that staying focused and demonstrating their understanding and skills in the virtual contest was challenging.

The study indicates that students prefer face-to-face in-person contests to virtual soil judging contests (Table 2), perhaps because of difficulties identifying some soil characteristics from profile pictures, the lack of interaction with peers, and a limited ability to demonstrate their skills during the virtual contest. The authors of [18] reported similar results in an upper-division pedology class, where students favored in-person field experiences over remote delivery options. In addition, in-person and hands-on learning is difficult to replicate virtually [2,19], which might limit students’ hands-on field experience and learning opportunities [4]. Digging fresh soil pits and providing higher-quality pictures of freshly exposed profiles (3-D images, if possible) may have improved the students’ experience with the virtual contest. Further, Owen et al. [3] recommended providing filmed videos of profile descriptions as examples during contest preparation to partially replicate and enhance the hands-on experience.

Participants in the Region IV virtual soil judging contest indicated that they enjoyed the flexibility of the virtual contest. Students in Region V similarly reported enjoying the scheduling flexibility of their virtual contest [3]. Participants in this study also reported that the virtual format did not affect their focus. This corroborates the findings of [3], in which students reported fewer distractions and disturbances during the virtual contest than during typical in-person contests, creating a favorable learning environment.

This study also includes coaches’ perspectives on the virtual soil judging contest, the first ever hosted in Region IV, so the coaches’ experience and feedback on this new format should benefit future virtual contests. The study indicates that coaches were satisfied with the format of the event and with the clarity and quality of the instruction given to students during the virtual contest. However, coaches were neutral or slightly skeptical about how accurately the virtual contest measured students’ soil judging knowledge and skills (Table 4). Like the students, the coaches preferred face-to-face to virtual soil judging contests. The coaches also indicated that they prepared more for the virtual contest than for in-person contests, possibly because it was their first virtual contest in Region IV and they spent additional time familiarizing themselves with the online logistics and the newly developed guidelines for the virtual contest. Hill et al. [20] documented a significant benefit of workshops for coaches in familiarizing them with the soils near the contest site and the descriptive techniques used in the contest area. Thus, coaches (especially those unfamiliar with virtual contests) might benefit from workshops or other resources that familiarize them with the virtual format, so that they can more effectively help students prepare for future contests.

The coaches also indicated that they might select a hybrid option (some virtual and some face-to-face practice) if it could be implemented with well-developed guidelines. This question was not included in the student survey, because some participants were first-time participants in soil judging and were not sufficiently familiar with the face-to-face contest. A hybrid option may therefore be a way forward, as it could reduce travel requirements and save universities money. For instance, the traditional format of regional soil judging, which takes place face-to-face over a whole week, could be shortened to three days of face-to-face meetings (two days of practice and one day of contest) if some practice were conducted virtually.

Further, the virtual soil judging contest can increase accessibility for participants. For example, the guidelines we used in the contest could be applied to available profile pictures to develop practice materials for training students in soil science. This offers an opportunity to establish a cost-effective learning platform, as an alternative to face-to-face in-person training, for future generations of soil scientists and for communities with limited resources. The virtual soil judging format we developed can also be adapted for small schools that lack soil- or agriculture-specific departments (and whose students are therefore unfamiliar with soil judging) but are interested in starting a soil judging program [21], providing their students with the necessary skills and experience. Virtual reality field trips to soil judging sites and online videos could help provide future remote learning and soil judging opportunities.

5. Conclusions

The overall results suggest that virtual soil judging using archived profile pictures could serve as an alternative way to train students. However, coaches preferred face-to-face contests because the virtual format lacks hands-on activities for assessing soil texture, color, and other soil characteristics, including landscape information. Prompted by the COVID-19 pandemic, this was one of the first virtual soil judging contests of its kind, and it offered a completely new approach to soil judging for both coaches and students. Components of the virtual competition approach (e.g., soil profile pictures and associated data; landscape photographs) can still be used to augment training resources for students (including new students from universities without a history of soil judging), who are often geographically limited in the types and diversity of soil pits they can practice on before competition, especially if coaches from different universities share example soil profile data (as illustrated above). Given the coaches’ neutral responses regarding potential hybrid soil judging events, such material could be further improved and used to design hybrid contests for situations in which face-to-face events are impossible (e.g., during a pandemic) or uneconomical because face-to-face contests generally last four to five days (given limited university resources or restrictions, long-distance travel, limited time availability, and remotely located and spread-out regions). Hybrid contests may also offer an economical way to gain additional judging experience before face-to-face contests. The design and implementation of hybrid events are therefore a fruitful area for future pedagogical research in soil science (e.g., development of virtual reality 3-D soil pits).