Teachers’ Evaluation of the Usability of a Self-Assessment Tool for Mobile Learning Integration in the Classroom

Abstract

1. Introduction

2. Evaluating and Ensuring Usability in Educational Technology

- Provide training and support: give educators and students the training and support they need to use the tool effectively [29].

3. Mobile Learning: Learning and Methodology

- Flexibility: mobile learning allows students to learn at their own pace and according to their own schedule, which can be useful for students who need more time or who have other commitments outside of school.

- Accessibility: with mobile learning, students can access educational materials from anywhere, which can be useful for students who live in remote areas or who have limited access to traditional educational resources.

- Motivation: mobile devices can be used to create interactive and attractive learning experiences, which can help keep students interested and motivated.

- Meaningful learning: mobile devices can be used to provide authentic, contextualized learning experiences, which can help students apply what they have learned in real-world contexts.

- Technical problems: mobile devices can be prone to technical problems, such as connectivity problems or software failures, which can interrupt the learning process.

- Distraction: mobile devices can be a source of distraction for students, especially if not used properly.

- Cognitive load: mobile learning can be cognitively demanding if students are required to perform multiple tasks or switch between different applications or activities.

- Teacher confidence: teachers may lack confidence in their ability to use mobile devices effectively in the classroom, which may limit the potential benefits of this technology.

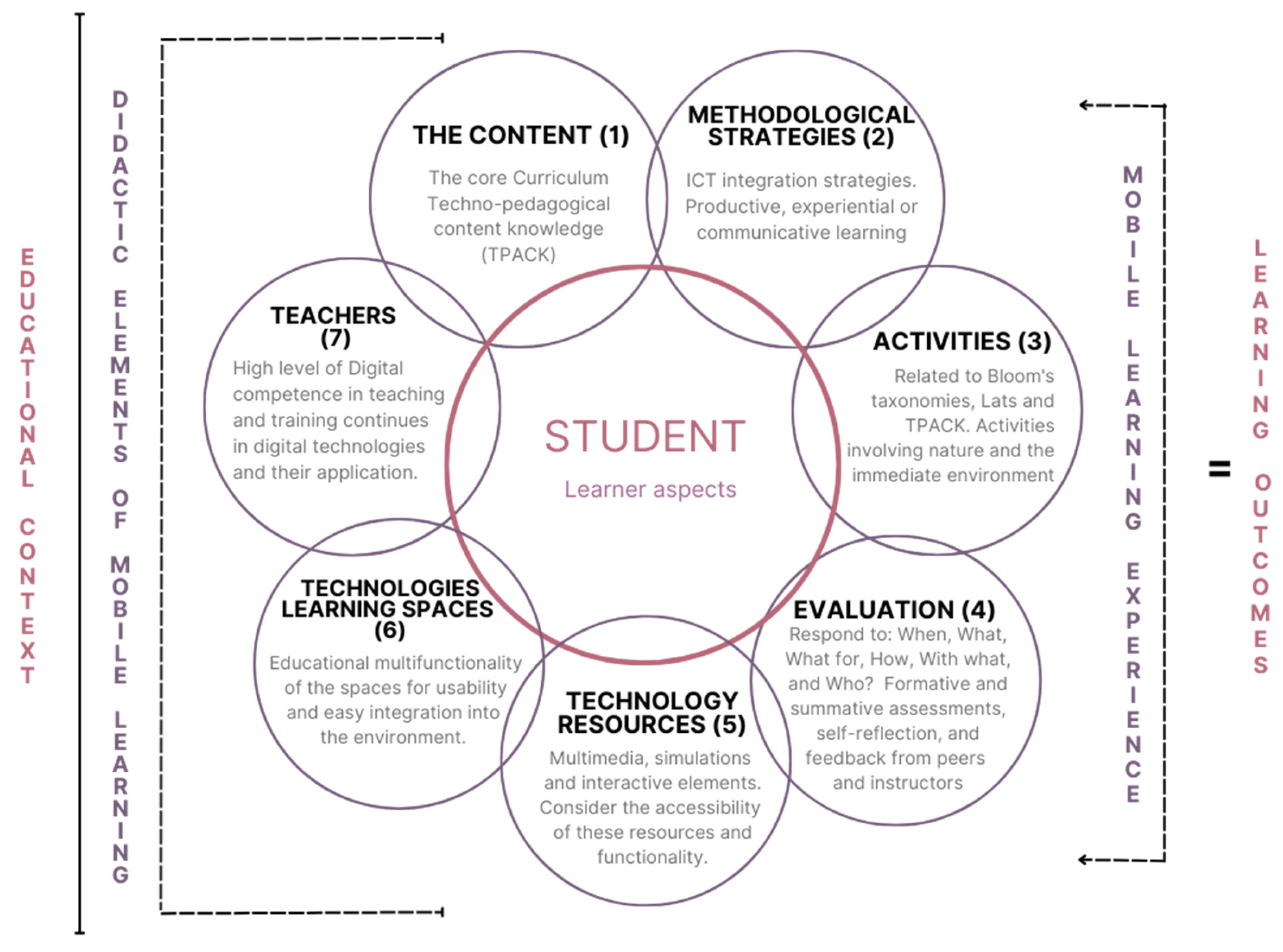

4. Self-Evaluation Tool for Design Activities with Mobile Devices

- The content: Refers to what students will learn and how the teacher can transform the content into techno-pedagogical knowledge. Related questions include knowledge of the educational framework, availability of educational resources of scientific value, and mastery of the content by the teacher.

- Methodological strategies: Focuses on strategies that promote meaningful learning and the incorporation of mobile devices. This includes the selection of strategies that encourage the acquisition and production of knowledge with mobile devices and the proposal of productive and experiential activities.

- Activities: Deals with the selection and design of appropriate activities to work with the content in a meaningful way. This includes consideration of realistic applicability, rationality in the type of activities, student diversity, and design based on taxonomies of cognitive, procedural, and attitude domains.

- Evaluation: Reviews the type of evaluation that respects the student’s learning process based on the use of mobile devices. This includes questions such as when, what, why, how, with what, and who to evaluate, and evaluation of both the creation process and the final product.

- Mobile resources: Focuses on the selection of optimal technology and resources for pedagogical usability. This includes consideration of technological functionality, motivation, and accessibility.

- Technological learning spaces: Refers to the characteristics of spaces that enhance learning with mobile devices. This includes the analysis, design, and preparation of the spaces, the organizational function of the technological space, and the proposal of activities that can be carried out in different places.

- The teacher: Focuses on the teacher’s level of digital competence and the role he or she must play to enhance learning with mobile devices.

5. Methodology

5.1. Participants

5.2. Instruments and Process of Collecting the Information

- I think I would use this self-assessment tool frequently.

- I find this self-assessment tool unnecessarily complex.

- I think the self-assessment tool was easy to use.

- I think I would need the help of a person with technical knowledge to use this self-assessment tool.

- The functions of this self-assessment tool are well integrated.

- I think the self-assessment tool is very inconsistent.

- I imagine that most people would learn to use this self-assessment tool very quickly.

- I find the self-assessment tool very difficult to use.

- It gives me confidence when I use this self-assessment tool.

- I needed to learn a lot before I could use this self-assessment tool.
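The ten statements above follow the System Usability Scale pattern of alternating positively and negatively worded items. The source does not show how responses were converted into a score. As a minimal sketch, assuming the conventional SUS scoring (odd items contribute the response minus 1, even items contribute 5 minus the response, and the sum is multiplied by 2.5), one respondent's score could be computed as follows. The function name and the sample answers are hypothetical.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent.

    `responses` holds the ten answers in questionnaire order,
    each an integer from 1 (strongly disagree) to 5 (strongly agree).
    Assumes the conventional SUS scoring; not taken from the source.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for position, response in enumerate(responses, start=1):
        if position % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += response - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - response
    # Each item contributes 0-4, so the raw sum is 0-40; scale to 0-100.
    return total * 2.5


# Hypothetical respondent: agrees with positive items, disagrees with negative ones.
example_answers = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example_answers))
```

Reversing the even items before summing is what lets a single number summarise both kinds of statement.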

6. Results

6.1. Block 1: Usability of the Pedagogic Content

6.2. Block 2: System Usability Scale (SUS)

7. Discussion

- Description and appropriateness of the self-assessment tool: Items 1 and 2 show high acceptance of the description and suitability of the self-assessment tool, with facility indices of 81.02% and 80.34%, respectively. This suggests that teachers find the tool understandable and suitable for their purposes.

- Increased use of mobile devices: Item 3, which asks whether teachers have increased the number of mobile activities in the classroom, has a facility index of 75.25%. The standard deviation of 19.06%, the highest in the block, indicates variability in responses, which could reflect differences in technology adoption among teachers due to a complex combination of social, technological, political, and pedagogical factors. Implementation and support strategies should therefore be sensitive to contextual and personal differences, and the tool should remain flexible enough to accommodate this variability, so that technology can be integrated effectively in various educational environments.

- Reflection and improvement in education: Items 4 and 5 highlight how the self-assessment tool has encouraged reflection on educational topics (78.31%) and helped improve mobile activities (76.61%). The discrimination efficiency of these items (64.90% and 76.39%) suggests that teachers using the self-assessment tool tend to be more aware of effective pedagogical practices and are more inclined to adapt and improve their teaching methods.

- Feedback and training needs: Items 6 and 7 show that the feedback provided has been useful in identifying training needs (78.31%) and providing necessary training resources (80.00%). This underlines the importance of the personalized feedback provided in the tool for teachers, as they have had the option to improve in the weaker areas identified.

- Perception of the tool’s continuous utility: Item 8, with a facility index of 81.69%, indicates a strong belief in the continuous usefulness of the self-assessment tool. This suggests a positive perception of its long-term value in teaching practice, which matches the tool’s purpose: teachers can use it whenever they need it, either to get started in designing activities with mobile devices or to review and improve their proposals.

- In general, it is important to note that the use of mobile devices in education for teaching is still relatively new and may require a learning curve for some teachers [56]. Effective deployment of mobile devices in the classroom requires a clear understanding of how technology can enhance the learning experience [57] and a capacity to design and carry out effective activities that make use of digital technologies [58].

- Addressing generational and experience diversity: The research revealed significant variability in usability scores according to teachers’ ages and educational stages. Younger teachers and more experienced teachers perceived the tool’s usability more positively than teachers aged 34 to 42. In addition, the early stages of education showed a more heterogeneous usability experience. This variability could be attributed to differences in teachers’ prior exposure to mobile technology and digitization in the classroom. It is therefore crucial to consider these differences in the design and implementation of the tool, offering guidance and support tailored to the needs and experiences of different age groups and educational stages.

- Enhance items with positive scores: The study results highlighted several positive attributes of the tool, such as ease of use, trust generated, and function integration. These positive aspects not only reflect the effectiveness of the tool but can also be used to enhance its usefulness. For example, ease of use, reflected in SUS item 3, “I think the self-assessment tool was easy to use”, with an overall mean (M) of 3.11, could be promoted as a key feature in the promotion and adoption of the tool among teachers [50,51]. Furthermore, the confidence generated by the tool could be used to encourage greater experimentation and creativity in the application of mobile technologies in the classroom.

- Develop strategies to turn “passive” teachers into “promoters”: The Net Promoter Score (NPS) revealed that 36.4% of teachers were “passive”, suggesting neutral perceptions of the self-assessment tool. Although these teachers expressed no major concerns, their neutrality indicates room for improvement. Strategies for this group could include identifying their specific needs and concerns, offering personalized training and support, and highlighting the benefits and successes of the tool in similar contexts. Converting these “passive” teachers into “promoters” could have a significant impact on the adoption and success of the tool in a broader educational context.

- Promoting training and technical support: Some items on the SUS scale highlighted the perception of complexity and the need for technical assistance in the use of the tool. These findings underline the importance of providing continuous training and support to teachers. Training could include practical workshops, online tutorials, and self-directed learning resources. Technical support could be offered within the same project, “Pla Mòbils.edu”, through training and follow-up mentors. The combination of training and technical support could not only address concerns related to perceived complexity but also enhance the confidence and competence of teachers in the use of the tool.

- In addition, the integration of mobile devices into education is a complex and dynamic process that requires a deep understanding of how technology, content, and pedagogy interact. As reflected in the usability of the evaluated self-assessment tool, teachers require continuous training and support in integrating mobile devices and in aligning the technology with educational and curricular objectives [59,60]. Furthermore, the diversity in the perception and adoption of the self-assessment tool, especially in terms of age and experience, reflects the complexity of integrating technology into education. Adoption can be influenced by factors such as confidence in technology, attitude towards innovation, and prior exposure to technology, which calls for differentiated training and support that meet the needs and expectations of different groups of teachers.

- Finally, the usability of the proposed self-assessment tool has been positively demonstrated through the SUS test. The tool can be used in the classroom as a valuable strategy to enhance the integration of mobile devices in education, offering teachers effective guidance and support on their way to teaching enriched with digital technologies [61,62].
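The discussion above reports the share of “passive” teachers from the Net Promoter Score but does not show how the groups are derived. The following is a minimal sketch, assuming the conventional NPS rules (a 0 to 10 rating, with 9 to 10 counted as promoters, 7 to 8 as passives, and 0 to 6 as detractors, and the score defined as the percentage of promoters minus the percentage of detractors). The function name and the ratings are hypothetical and are not the study's data.

```python
def net_promoter_summary(ratings):
    """Summarise 0-10 recommendation ratings using conventional NPS groups.

    Assumed cut-offs (standard NPS convention, not stated in the source):
    promoters 9-10, passives 7-8, detractors 0-6.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must lie between 0 and 10")

    n = len(ratings)
    promoters = sum(1 for r in ratings if r >= 9)
    passives = sum(1 for r in ratings if 7 <= r <= 8)
    detractors = sum(1 for r in ratings if r <= 6)

    return {
        "promoters_pct": 100 * promoters / n,
        "passives_pct": 100 * passives / n,
        "detractors_pct": 100 * detractors / n,
        # Passives count towards n but not towards the score itself.
        "nps": 100 * (promoters - detractors) / n,
    }


# Hypothetical ratings from ten teachers.
sample_ratings = [10, 9, 9, 8, 7, 7, 8, 6, 3, 10]
summary = net_promoter_summary(sample_ratings)
print(summary)
```

Because passives dilute the score without adding to it, moving a teacher from passive to promoter raises the NPS directly, which is the rationale for targeting that group.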

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- UNESCO. Desglosar el Objetivo de Desarrollo Sostenible 4: Educación 2030. 2017. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000246300_spa (accessed on 17 April 2023).

- UNESCO. Género, Medios & TIC: Nuevos Enfoques de Investigación, Educación & Capacitación; French, L., Vega Montiel, A., Padovani, C., Eds.; UNESCO: Paris, France, 2021; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000375656 (accessed on 22 February 2023).

- McGarr, O.; Johnston, K. Exploring the Evolution of Educational Technology Policy in Ireland: From Catching-Up to Pedagogical Maturity. Educ. Policy 2019, 35, 089590481984359. [Google Scholar] [CrossRef]

- Guillén-Gámez, F.D.; Mayorga-Fernández, M.J. Prediction of Factors That Affect the Knowledge and Use Higher Education Professors from Spain Make of ICT Resources to Teach, Evaluate and Research: A Study with Research Methods in Educational Technology. Educ. Sci. 2020, 10, 276. [Google Scholar] [CrossRef]

- Forkosh-Baruch, A.; Phillips, M.; Smits, A. Reconsidering teachers’ pedagogical reasoning and decision making for technology integration as an agenda for policy, practice and research. Educ. Technol. Res. Dev. 2021, 69, 2209–2224. [Google Scholar] [CrossRef]

- Martínez-Pérez, S.; Cabero-Almenara, J.; Barroso-Osuna, J.; Palacios-Rodríguez, A. T-MOOC for Initial Teacher Training in Digital Competences: Technology and Educational Innovation. Front. Educ. 2022, 7, 846998. [Google Scholar] [CrossRef]

- Rikala, J. Designing a Mobile Learning Framework for a Formal Educational Context. Ph.D. Thesis, University of Jyväskylä, Jyväskylä, Finland, 2015. Available online: https://bit.ly/3IBzSW2 (accessed on 8 October 2019).

- Sun, J.C. Gaps, guesswork, and ghosts lurking in technology integration: Laws and policies applicable to student privacy. Br. J. Educ. Technol. 2023, 54, 1604–1618. [Google Scholar] [CrossRef]

- Tirado-Morueta, R.; García-Ruíz, R.; Hernando-Gómez, Á.; Contreras-Pulido, P.; Aguaded-Gómez, J.I. The role of teacher support in the acquisition of digital skills associated with technology-based learning activities: The moderation of the educational level. Res. Pract. Technol. Enhanc. Learn. 2023, 18, 010. [Google Scholar] [CrossRef]

- Mountford-Zimdars, A.; Moore, J.; Shiner, R. Enhancing Widening Participation Evaluation through the development of a self-assessment tool for practitioners: Learning from the Standards of Evaluation Practice (Phase 2) project 2017–2019. Widening Particip. Lifelong Learn. 2020, 22, 44–66. [Google Scholar] [CrossRef]

- Baena-Morales, S.; Martinez-Roig, R.; Hernández-Amorós, M.J. Sustainability and Educational Technology—A Description of the Teaching Self-Concept. Sustainability 2020, 12, 10309. [Google Scholar] [CrossRef]

- Achahod, S. Towards reflection and action on the development of a hybrid learning model to promote the adoption of mobile devices in schools. In Proceedings of the 11th International Conference on Education and New Learning Technologies 2019, Palma, Spain, 1–3 July 2019; pp. 8274–8279. [Google Scholar] [CrossRef]

- Dorouka, P.; Papadakis, S.; Kalogiannakis, M. Tablets and apps for promoting robotics, mathematics, STEM education and literacy in early childhood education. Int. J. Mob. Learn. Organ. 2020, 14, 255. [Google Scholar] [CrossRef]

- Haga, S. Integrating Mobile-Assisted Language Learning: Teacher Beliefs in Japanese EFL Higher Education. In Proceedings of the 2nd International Conference on New Approaches in Education, Icnaeducation, Oxford, UK, 27–29 March 2020; Available online: https://www.doi.org/10.33422/2nd.icnaeducation.2020.03.147 (accessed on 15 November 2022).

- Siani, A. BYOD strategies in higher education: Current knowledge, students’ perspectives, and challenges. New Dir. Teach. Phys. Sci. 2017, 12. [Google Scholar] [CrossRef]

- Taharim, N.F.; Lokman, A.M.; Hanesh, A.; Aziz, A.A. Feasibility study on the readiness, suitability, and acceptance of M-Learning AR in learning History. Nucleation Atmos. Aerosols. 2016, 1705, 020009. [Google Scholar] [CrossRef]

- Carrera, X.; Coiduras, J.; Lazaro, J.L.; Pérez, F. La competencia digital docente: Definición y formación del profesorado. In ¿Cómo Abordar la Educación Del Futuro? Conceptualización, Desarrollo y Evaluación Desde la Competencia Digital Docente; Gisbert, M., Esteve, V., Lázaro, J.L., Eds.; Ediciones Octaedro: Las Gabias, Spain, 2019; pp. 59–78. [Google Scholar]

- Castañeda, L.; Esteve-Mon, F.M.; Adell, J.; Prestridge, S. International insights about a holistic model of teaching competence for a digital era: The digital teacher framework reviewed. Eur. J. Teach. Educ. 2021, 45, 493–512. [Google Scholar] [CrossRef]

- Wang, Y.; Hu, W. Analysis about serious game innovation on mobile devices. In Proceedings of the IEEE/ACIS 16th International Conference on Computer and Information Science (ICIS), Wuhan, China, 24–26 May 2017; pp. 627–630. [Google Scholar] [CrossRef]

- Pribeanu, C.; Gorghiu, G.; Lamanauskas, V.; Slekiene, V. Use of mobile technology in the teaching/learning process—Opportunities and barriers. In ELearning and Software for Education; ELSE: Bucharest, Romania, 2021; Volume 1, pp. 376–383. [Google Scholar] [CrossRef]

- Gao, Y. A Survey Study on the Application of Modern Educational Technology in English Major College Teaching in the Age of 5G Communication. Theory Pract. Lang. Stud. 2021, 11, 202. [Google Scholar] [CrossRef]

- Tengku Sharif, T.I.S.; Mohamad Noor, M.Y.; Omar, S.R.; Seong, T.K. Humanising mobile online esl blended learning model. J. Nusant. Stud. 2022, 7, 473–494. [Google Scholar] [CrossRef]

- Balanyà Rebollo, J.; De Oliveira, J.M. Los elementos didácticos del aprendizaje móvil: Condiciones en que el uso de la tecnología puede apoyar los procesos de enseñanza y aprendizaje. Edutec. Rev. Electrón. Tecnol. Educ. 2022, 80. [Google Scholar] [CrossRef]

- Hall, R.; Atkins, L.; Fraser, J. Defining a self-evaluation digital literacy framework for secondary educators: The DigiLit Leicester project. Res. Learn. Technol. 2014, 22, 21440. [Google Scholar] [CrossRef]

- Liang, B.; Gregory, M.A.; Li, S. Latency Analysis for Mobile Cellular Network uRLLC Services. J. Telecommun. Digit. Econ. 2022, 10, 39–57. [Google Scholar] [CrossRef]

- Salloum, R.G.; Theis, R.P.; Pbert, L.; Gurka, M.J.; Porter, M.; Lee, D.; Shenkman, E.A.; Thompson, L.A. Stakeholder Engagement in Developing an Electronic Clinical Support Tool for Tobacco Prevention in Adolescent Primary Care. Children 2018, 5, 170. [Google Scholar] [CrossRef]

- Van Nuland, S.E.; Eagleson, R.; Rogers, K.A. Educational software usability: Artifact or Design? Anat. Sci. Educ. 2016, 10, 190–199. [Google Scholar] [CrossRef]

- Nielsen, J.; Levy, J. Measuring usability—Preference vs. performance. Commun. ACM 1994, 37, 66–75. [Google Scholar] [CrossRef]

- Choo, S.; Kim, J.Y.; Jung, S.Y.; Kim, S.; Kim, J.E.; Han, J.S.; Kim, S.; Kim, J.H.; Kim, J.; Kim, Y.; et al. Development of a Weight Loss Mobile App Linked With an Accelerometer for Use in the Clinic: Usability, Acceptability, and Early Testing of its Impact on the Patient-Doctor Relationship. JMIR MHealth UHealth 2016, 4, e24. [Google Scholar] [CrossRef] [PubMed]

- Dekhane, S.; Tsoi, M.Y.; Johnson, C. Mobile Application Development by Students to Support Student Learning. In Mobile and Blended Learning Innovations for Improved Learning Outcomes; IGI Global: Hershey, PA, USA, 2020. [Google Scholar] [CrossRef]

- Manohar, P.; Acharya, S.; Wu, P.Y.; Ansari, A.; Schilling, W. Case Study Based Educational Tools for Teaching Software V&V Course at Undergraduate Level. American Society for Engineering Education. In Proceedings of the 122nd ASEE Annual Conference & Exposition, Seattle, WA, USA, 14–17 June 2015. [Google Scholar]

- Zhao, M.; Larson, J.; Jordan, M. Design and Development: NSF Engineering Research Centers Unite: Developing and Testing a Suite of Instruments to Enhance Overall Education Program Evaluation. In Proceedings of the ASEE Annual Conference, Virtual, 19–26 July 2021; American Society for Engineering Education: Washington, DC, USA, 2021. [Google Scholar]

- Plomp, T.; Nieveen, N. An Introduction to Educational Design Research SLO • Netherlands institute for curriculum development. In Proceedings of the seminar conducted at the East China Normal University, Shanghai, China, 23–26 November 2007. [Google Scholar]

- Lehtonen, D. Constructing a design framework and design methodology from educational design research on real-world educational technology development. EDeR Educ. Des. Res. 2021, 5, 38. [Google Scholar] [CrossRef]

- Chuenyindee, T.; Montenegro, L.D.; Ong, A.K.S.; Prasetyo, Y.T.; Nadlifatin, R.; Ayuwati, I.D.; Sittiwatethanasiri, T.; Robas, K.P.E. The perceived usability of the learning management system during the COVID-19 pandemic: Integrating system usability scale, technology acceptance model, and task-technology fit. Work 2022, 73, 1–18. [Google Scholar] [CrossRef] [PubMed]

- Emil, R.; Kaburuan, J.L. Evaluation of User Experience on Digital Learning Platform Website Using System Usability Scale. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 1595–1606. [Google Scholar] [CrossRef]

- Santágueda Villanueva, M.; LLopis Nebot, M.Á.; Esteve Mon, F.M. A mobile application for working on university service learning: Usability, adequacy and perceptions of usefulness. Edutec. Rev. Electrón. Tecnol. Educ. 2021, 78, 22–37. [Google Scholar] [CrossRef]

- Sari, R.P.; Henim, S.R. The application of system usability scale method to measure the usability of electronic learning system (e-learning) of politeknik caltex riau. ILKOM J. Ilm. 2021, 13, 266–271. [Google Scholar] [CrossRef]

- Criollo-C, S.; Lujan-Mora, S.; Jaramillo-Alcazar, A. Advantages and Disadvantages of M-Learning in Current Education. In Proceedings of the 2018 IEEE World Engineering Education Conference (EDUNINE), Buenos Aires, Argentina, 11–14 March 2018. [Google Scholar] [CrossRef]

- Palalas, A.; Wark, N. A Framework for Enhancing Mobile Learner-Determined Language Learning in Authentic Situational Contexts. Int. J. Comput.-Assist. Lang. Learn. Teach. 2020, 10, 83–97. [Google Scholar] [CrossRef]

- Lai, C. Trends of mobile learning: A review of the top 100 highly cited papers. Br. J. Educ. Technol. 2019, 51, 721–742. [Google Scholar] [CrossRef]

- El-Sofany, H.F.; El-Haggar, N. The Effectiveness of Using Mobile Learning Techniques to Improve Learning Outcomes in Higher Education. Int. J. Interact. Mob. Technol. (IJIM) 2020, 14, 4. [Google Scholar] [CrossRef]

- Naciri, A.; Baba, M.A.; Achbani, A.; Kharbach, A. Mobile Learning in Higher Education: Unavoidable Alternative during COVID-19. Aquademia 2020, 4, ep20016. [Google Scholar] [CrossRef]

- Kiat, L.B.; Ali, M.B.; Abd Halim, N.D.; Ibrahim, H.B. Augmented Reality, Virtual Learning Environment and Mobile Learning in education: A comparison. In Proceedings of the 2016 IEEE Conference on E-Learning, E-Management and E-Services (IC3e), Langkawi, Malaysia, 10–12 October 2016. [Google Scholar] [CrossRef]

- Diacopoulos, M.M.; Crompton, H. A systematic review of mobile learning in social studies. Comput. Educ. 2020, 154, 103911. [Google Scholar] [CrossRef]

- Moya, S.; Camacho, M. Developing a Framework for Mobile Learning Adoption and Sustainable Development. Technol. Knowl. Learn. 2021, 28, 727–744. [Google Scholar] [CrossRef]

- Lotero-Echeverri, G. Capacidades de los docentes para la incorporación de estrategias m-learning en sus procesos de enseñanza y aprendizaje. Estudio de un caso colombiano. Saber Cienc. Lib. 2021, 16, 220–232. [Google Scholar] [CrossRef]

- Ehrlinger, J.; Johnson, K.; Banner, M.; Dunning, D.; Kruger, J. Why the unskilled are unaware: Further explorations of (absent) self-insight among the incompetent. Organ. Behav. Hum. Decis. Process. 2008, 105, 98–121. [Google Scholar] [CrossRef] [PubMed]

- Karaman, P. The Impact of Self-assessment on Academic Performance: A Meta-analysis Study. Int. J. Res. Educ. Sci. 2021, 7, 1151–1166. [Google Scholar] [CrossRef]

- Sailer, M.; Stadler, M.; Schultz-Pernice, F.; Schöffmann, C.; Paniotova, V.; Husagic, L.; Fischer, F. Technology-related teaching skills and attitudes: Validation of a scenario-based self-assessment instrument for teachers. Comput. Hum. Behav. 2021, 115, 106625. [Google Scholar] [CrossRef]

- Balanyà Rebollo, J.; Minelli De Oliveira, J. The crux of mobile learning: Key aspects in teaching with mobile devices. International Conferences Mobile Learning. In Proceedings of the International Association for Development of the Information Society (IADIS), Virtual, 3–5 March 2021. [Google Scholar]

- Lehrmann, A.L.; Skovbjerg, H.M.; Arnfred, S.J. Design-based research as a research methodology in teacher and social education—A scoping review. EDeR Educ. Des. Res. 2022, 6, 54. [Google Scholar] [CrossRef]

- Fabila Echauri, A.M.; Minami, H.; Izquierdo Sandoval, M.J. La Escala de Likert en la evaluación docente: Acercamiento a sus características y principios metodológicos. Perspect. Docentes 2012, 50, 31–40. [Google Scholar]

- Brooke, J. SUS: A retrospective. JUX J. User Exp. 2013, 8, 29–40. [Google Scholar]

- Lewis, J.R. Usability: Lessons Learned… and Yet to Be Learned. Int. J. Hum.-Comput. Interact. 2014, 30, 663–684. [Google Scholar] [CrossRef]

- Keiningham, T.L.; Cooil, B.; Andreassen, T.W.; Aksoy, L. A Longitudinal Examination of Net Promoter and Firm Revenue Growth. J. Mark. 2007, 71, 39–51. [Google Scholar] [CrossRef]

- Riaza, B.; Rodríguez, A. Students’ Perception of the Integration of Mobile Devices as Learning Tools in Pre-Primary and Primary Teacher Training Degrees. In Mobile Devices in Education: Breakthroughs in Research and Practice; Information Resources Management Association; IGI Global: Hershey, PA, USA, 2020; pp. 374–391. [Google Scholar] [CrossRef]

- Marques, M.M.; Pombo, L. The Impact of Teacher Training Using Mobile Augmented Reality Games on Their Professional Development. Educ. Sci. 2021, 11, 404. [Google Scholar] [CrossRef]

- Nikolopoulou, K. Mobile devices in early childhood education: Teachers’ views on benefits and barriers. Educ. Inf. Technol. 2021, 26, 3279–3292. [Google Scholar] [CrossRef]

- Henriksen, D.; Mishra, P.; Creely, E.; Henderson, M. The Role of Creative Risk Taking and Productive Failure in Education and Technology Futures. TechTrends 2021, 65, 602–605. [Google Scholar] [CrossRef]

- Estrada, B.; Zapata, C. Definición de un meta-modelo para el diseño de aplicaciones de software educativo basado en usabilidad y conocimiento pedagógico. Inf. Tecnol. 2022, 33, 35–48. [Google Scholar] [CrossRef]

- Clark, R.C.; Mayer, R.E.; Thalheimer, W. E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. Perform. Improv. 2003, 42, 41–43. [Google Scholar] [CrossRef]

| Items Block 1 (Analysis of the Questionnaire Structure) | Facility Index | Standard Deviation | Discrimination Index | Discrimination Efficiency |
|---|---|---|---|---|
| 1.- I consider that the characteristics presented in the self-assessment tool are sufficiently descriptive. | 81.02% | 15.50% | 47.97% | 56.53% |
| 2.- I think the list of characteristics of each element of the self-assessment checklist is adequate. | 80.34% | 12.59% | 40.54% | 53.00% |
| 3.- As a teacher registered in the “Pla Mòbils.edu” I have increased the number of activities that I propose in the classroom with mobile devices. | 75.25% | 19.06% | 43.49% | 45.85% |
| 4.- Utilizing the self-assessment tool has encouraged me to examine educational issues that I had not previously considered when designing mobile activities. | 78.31% | 12.48% | 55.64% | 64.90% |
| 5.- I consider that using the self-assessment tool has helped me to improve my mobile activities. | 76.61% | 10.60% | 62.36% | 76.39% |
| 6.- The feedback provided by the self-assessment tool has helped me to identify training needs. | 78.31% | 14.99% | 59.98% | 66.31% |
| 7.- I believe that the feedback from the self-assessment questionnaire has provided me with the necessary training resources to improve my level in the use of mobile devices in education. | 80.00% | 14.38% | 54.82% | 60.44% |
| 8.- I think it would be beneficial to have the self-assessment tool so that I can utilise it whenever necessary. | 81.69% | 14.04% | 48.42% | 53.60% |

| Items Block 2 SUS (Results: 5-Point Likert Scale Item Scores) | 1 | 2 | 3 | 4 | 5 | Total Items (M) |
|---|---|---|---|---|---|---|
| 1. (+) I think I would use this self-assessment tool frequently. | 3.68 | 3.70 | 3.71 | 3.71 | 3.71 | 3.70 |
| 2. (−) I consider this self-assessment tool to be unnecessarily complex. | 2.21 | 2.22 | 2.22 | 2.21 | 2.24 | 2.22 |
| 3. (+) I think the self-assessment tool was easy to use. | 3.85 | - | 3.89 | 3.89 | 3.91 | 3.11 |
| 4. (−) I think I would need help from a person with technical knowledge to use this self-assessment tool. | 2.10 | 2.11 | 2.12 | 2.10 | 2.11 | 2.11 |
| 5. (+) The functionality of this self-assessment tool is highly integrated. | - | 3.79 | 3.78 | 3.81 | 3.80 | 3.04 |
| 6. (−) I think the self-assessment tool is very inconsistent. | 2 | 2 | 2.01 | 2 | - | 1.60 |
| 7. (+) I imagine that most people would learn to use this self-assessment tool very quickly. | - | 3.67 | 3.70 | 3.70 | 3.76 | 2.97 |
| 8. (−) I consider this self-assessment tool very difficult to use. | 1.96 | 1.96 | 1.98 | 1.97 | - | 1.57 |
| 9. (+) It gives me confidence when I use this self-assessment tool. | 0 | 3.73 | 3.72 | 3.72 | 3.75 | 2.98 |
| 10. (−) I needed to learn many things before I was able to use this self-assessment tool. | 2.22 | 2.22 | 2.23 | 2.24 | 2.29 | 2.24 |
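The source does not state how the Total Items (M) column was obtained. As a hedged check, the sketch below tests one reading: that each total is the sum of the five column values divided by five, with a blank cell ("-") counted as zero. Under that assumption all ten printed totals are reproduced, which would mean rows with a blank cell have a total lower than the mean of their available values. The helper names are hypothetical; the numbers are copied from the table.

```python
# Column values per SUS item (None marks a "-" cell) and the printed total (M).
TABLE = {
    1: ([3.68, 3.70, 3.71, 3.71, 3.71], 3.70),
    2: ([2.21, 2.22, 2.22, 2.21, 2.24], 2.22),
    3: ([3.85, None, 3.89, 3.89, 3.91], 3.11),
    4: ([2.10, 2.11, 2.12, 2.10, 2.11], 2.11),
    5: ([None, 3.79, 3.78, 3.81, 3.80], 3.04),
    6: ([2, 2, 2.01, 2, None], 1.60),
    7: ([None, 3.67, 3.70, 3.70, 3.76], 2.97),
    8: ([1.96, 1.96, 1.98, 1.97, None], 1.57),
    9: ([0, 3.73, 3.72, 3.72, 3.75], 2.98),
    10: ([2.22, 2.22, 2.23, 2.24, 2.29], 2.24),
}


def mean_blank_as_zero(cells):
    """Mean over all five columns, counting a blank cell as 0 (assumed reading)."""
    return sum(0 if c is None else c for c in cells) / len(cells)


def mean_of_available(cells):
    """Mean over the cells that actually hold a value."""
    present = [c for c in cells if c is not None]
    return sum(present) / len(present)


# Items whose printed total matches the blank-as-zero reading to two decimals.
reproduced = [
    item
    for item, (cells, printed) in TABLE.items()
    if abs(round(mean_blank_as_zero(cells), 2) - printed) < 1e-9
]

for item, (cells, printed) in TABLE.items():
    print(item, printed, f"{mean_of_available(cells):.3f}")
```

For item 3, for example, the mean of the four available values is 3.885, while the printed total is 3.11. Whether the blank cells represent missing data or true zeros cannot be determined from the table alone.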

| | Sum of Squares | df | Mean Squares | F | p |
|---|---|---|---|---|---|
| Educational Stage | 1340.55 | 4 | 335.14 | 3.65 | 0.007 |
| Residual | 20,470.28 | 223 | 91.79 | | |
| Total | 21,810.83 | 227 | | | |
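The entries of the ANOVA table are linked by simple arithmetic: each mean square is a sum of squares divided by its degrees of freedom, and F is the ratio of the two mean squares. The sketch below recomputes those quantities from the printed sums of squares as a consistency check. The variable names are hypothetical, and the p-value is not recomputed because that needs the F distribution, which is outside the Python standard library.

```python
# Values copied from the ANOVA table above.
ss_stage, df_stage = 1340.55, 4          # Educational Stage (between groups)
ss_residual, df_residual = 20470.28, 223  # Residual (within groups)


def mean_square(sum_of_squares, degrees_of_freedom):
    """Mean square = sum of squares / degrees of freedom."""
    return sum_of_squares / degrees_of_freedom


ms_stage = mean_square(ss_stage, df_stage)
ms_residual = mean_square(ss_residual, df_residual)

# F statistic: between-groups mean square over residual mean square.
f_statistic = ms_stage / ms_residual

# The Total row should equal the sum of the two rows above it.
ss_total = ss_stage + ss_residual
df_total = df_stage + df_residual

print(f"MS stage    = {ms_stage:.2f}")
print(f"MS residual = {ms_residual:.2f}")
print(f"F           = {f_statistic:.2f}")
print(f"SS total    = {ss_total:.2f}, df total = {df_total}")
```

The recomputed values agree with the printed mean squares, F, and totals to two decimal places.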

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Balanyà Rebollo, J.; De Oliveira, J.M. Teachers’ Evaluation of the Usability of a Self-Assessment Tool for Mobile Learning Integration in the Classroom. Educ. Sci. 2024, 14, 1. https://doi.org/10.3390/educsci14010001
