Abstract

This study evaluated the effectiveness of the neurodiversity training modules of 'Teaching and Mental Health', a mental health literacy program for primary school staff offered over a three-year period (2013–2015). Using archival data, this study aimed to identify how much teachers learned, how confident they were about using this newly acquired knowledge, and how well the training met their learning needs. It also aimed to explore the relationship between knowledge and confidence, and how satisfaction indicators affected this relationship. Key ethical considerations of neurodiversity training and outcome measurement are discussed. A pre-test/post-test survey design was used with 99 primary school teachers from the Brisbane region in Australia. Analyses included three paired-samples t-tests, descriptive statistics, a linear regression analysis, and a mediation analysis. Knowledge and confidence increased significantly from baseline, and mean satisfaction ratings were high. Knowledge was a significant predictor of confidence, and collaborative presenter ratings partially mediated this relationship. We propose that future delivery of the program should invest in improving presenter effectiveness.

1. Introduction

Children with neurodevelopmental disorders experience difficulties ranging from dysregulation of physiological and sensory systems to sleep disturbances and emotional dysregulation [1]. Neurodevelopmental disorders are associated with poor mental health, and both are linked to reduced quality of life, academic difficulties, and problems with emotions, socialisation, and behaviour [2,3]. These difficulties can make it hard for neurodiverse children to cope in mainstream schools and often require substantial adjustments and support in the classroom. In Australia, the current estimated prevalence of autism diagnosis is 1.3% by age 12, while the prevalence of ADHD diagnosis is approximately 7.4% among children aged 4–17 years [4,5].

In 2016, approximately 13% of all Australian children aged 4–11 years experienced a documented mental disorder (~6% excluding ADHD) [5]. These figures are consistent with other epidemiological studies estimating that 10–20% of children worldwide are affected by clinical levels of mental illness or behavioural disorders [6]. Children with neurodevelopmental disabilities are far more likely to have mental health difficulties than their neurotypical peers: an Australian study found that 48.8% of school-aged children with one neurodevelopmental disability, and 71.3% of those with two or more, had co-occurring mental health conditions [3].

Mental illness and developmental disorders impose a substantial cost on the quality of life of individuals and their families, and a substantial financial cost on the community. The Australian Institute of Health and Welfare's (2022) report on burden of disease identified mental health conditions and respiratory diseases as the largest contributors to disease burden for children aged 5–14 [7]. Mental health issues have been estimated to cost the Australian economy approximately USD 60 billion a year in direct care costs, lost productivity, and indirect costs [8]. The World Economic Forum recently estimated that mental illness represents approximately 35% of the global economic burden of non-communicable diseases, and forecast that by 2030 mental health conditions would cost almost USD one trillion annually in lost productivity [9]. Across recent literature, close to half of all lifetime mental disorders are estimated to begin during childhood, highlighting the importance of addressing mental health concerns in school settings worldwide [10].

Stephenson and colleagues carried out a longitudinal study identifying the main barriers to inclusion for autistic students [11]. The most commonly reported factors for students and parents were that educators had unrealistic expectations, were unsupportive, and lacked understanding of autism. Students and parents also commonly stated that the school did not understand their needs and used inappropriate strategies and curricula [11]. Given these barriers, rising identification rates of neurodiversity, inclusion policies across Australia that mean most classrooms will already include a neurodivergent child, and the long-term cost of mental health conditions, mental health literacy (MHL) for education staff has become a critical need in the school environment [12,13].

Early intervention for neurodivergent individuals is a well-established critical factor for improved long-term outcomes [14,15,16]. School attendance makes up a considerable proportion of a child's waking hours each week, which places education staff in a unique position to recognise potential mental health concerns and offers valuable early opportunities for intervention support, adjustment, and referral [17,18]. The education environment can lead the way and play a key role in promoting and improving youth MHL in the community [19]. Without appropriate MHL knowledge and skills, schools cannot adequately support early intervention, and educators cannot comply with disability, educational, and ethical standards [15,16,20]. Education staff need access to cost-effective, evidence-based MHL programs to deliver high-quality learning environments that comply with the Disability Standards for Education 2005, as well as state and federal education policies, procedures, and codes of conduct [21,22,23]. Evaluations of MHL training that includes neurodiversity literacy for primary school educators are therefore imperative.

This pilot study evaluates the effectiveness of the neurodiversity training modules that form part of an MHL program for primary school educators offered over a three-year period (2013–2015). The full program consisted of twelve 90 min face-to-face training sessions, each covering a different MHL topic. This study examines three of these modules: Autism Spectrum Disorders, Attention Deficits and Hyperactivity, and Sensory Processing and Learning [24,25]. It measures training outcomes in terms of knowledge gained, confidence gained, and training satisfaction. Knowledge gain indicates how well information was transferred, while confidence offers insight into future motivation to use that knowledge: increasing educators' perceived ability to implement strategies and identify student needs strengthens their positive outcome expectations, which in turn increases motivation to engage in the behaviour [26]. While it is useful to show that increases in knowledge predict increases in confidence, a deeper understanding of what influences this relationship, and how it can be improved, is particularly valuable. This study therefore examines the mediating effect of collaborative teaching on the relationship between knowledge and confidence, with the aim of improving the future efficacy of autism, ADHD, and sensory MHL training.

1.1. Literature Review Summary

Professional development training for educators on autism spectrum disorder (ASD), sensory processing disorder (SPD), and attention-deficit/hyperactivity disorder (ADHD) has been evaluated by many studies. The literature search was conducted in the PsycINFO, Google Scholar, and Education Resources Information Center (ERIC) databases. Most of the relevant studies identified were case studies, leaving seven notable articles; in total, 17 articles reported quantitative educator outcomes of professional development on neurodevelopmental disorders for primary school educators (3 for ASD, none for SPD, and 14 for ADHD). These studies were conducted in diverse locations (Alegre, Brazil; Kaduna, Nigeria; Qalqilya, Egypt; Karachi, Pakistan; Sydney, Australia; New York State, USA; Małopolska, Poland) and consistently demonstrated significant knowledge gains post-training [27,28,29,30,31]. Syed and colleagues also reported that knowledge gains were largely maintained at 6-month follow-up [30], while Lasisi and colleagues [28] and Shehata and colleagues [29] found that training also improved educator attitudes and beliefs about students with ADHD.

Three studies measured both educator confidence and knowledge gain: one on ADHD training by Latouche and Gascoigne [32] in Sydney, Australia, and two on ASD training, by Corona and colleagues [33] in New York State, USA, and by Kossewska and colleagues [34] in Małopolska, Poland. All three studies reported significant post-training increases in educator knowledge and confidence; in addition, Corona and colleagues [33] found that knowledge did not predict confidence. Latouche and Gascoigne conducted a randomised controlled trial, reporting large and moderate group-by-time effects for knowledge and confidence gains, respectively, and these effects remained significant at 1-month follow-up [32].

1.2. Summary and Aims

Most of the existing research on neurodiversity training for educators measured knowledge, confidence, and attitudes; no studies investigated presenter or collaborative training factors, and none investigated underlying variables that may affect training outcomes. To address these gaps, this study evaluates knowledge and confidence gains and explores the reasons for training efficacy in teacher professional development. Specifically, it examines the effect of collaborative training satisfaction ratings on the relationship between educators' knowledge and confidence gains.

This study aims to answer the following questions:

- Do educators feel they have improved their mental health literacy knowledge from completing the neurodiversity modules?

- Do educators feel more confident with understanding, supporting, and identifying the mental health needs of students with neurodiversity?

- How much did educators learn from this training?

- Does perceived knowledge predict confidence?

- How does the quality of collaborative training delivery impact the relationship between perceived knowledge and confidence?

2. Materials and Methods

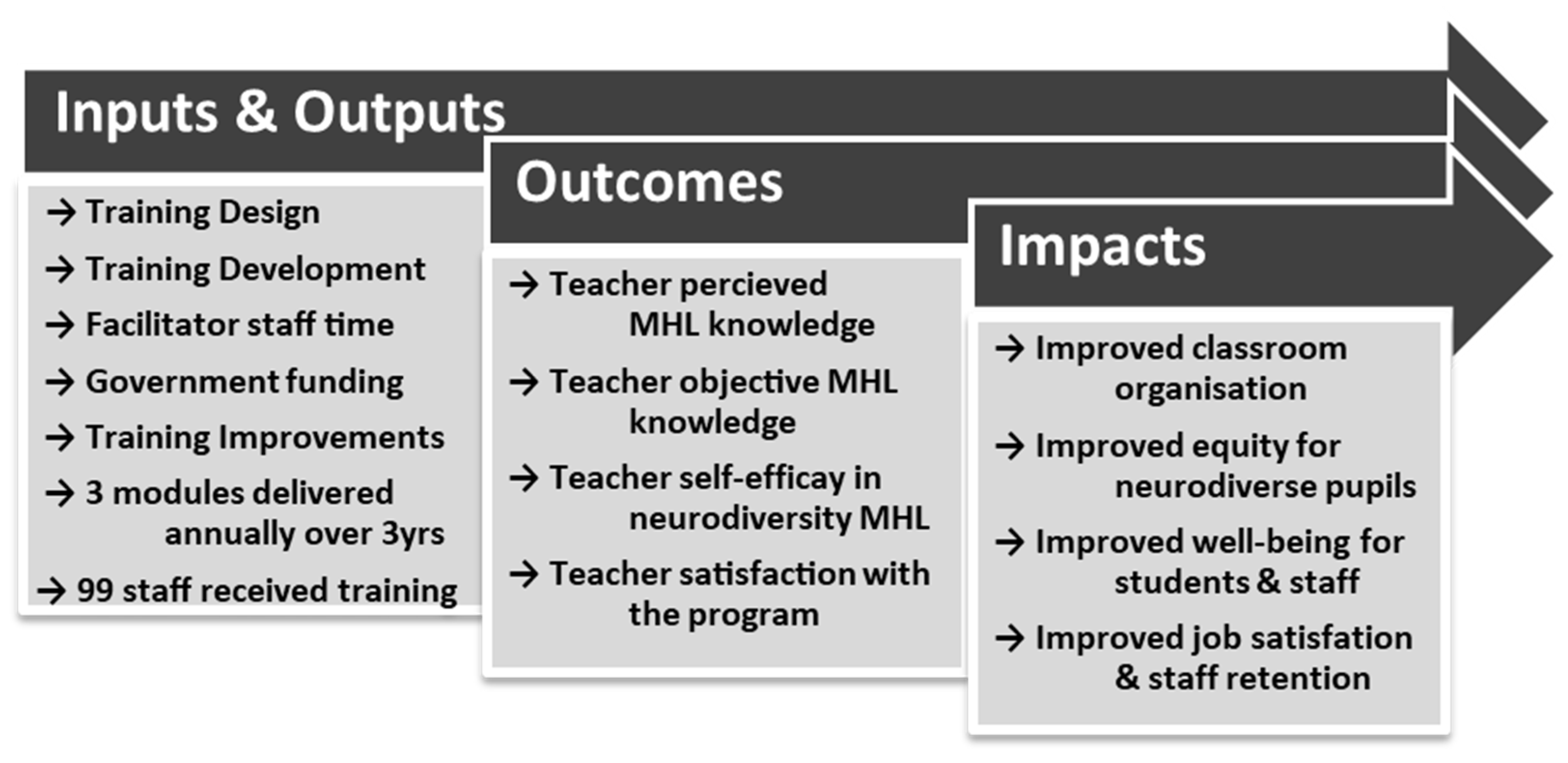

The Teaching and Mental Health training modules were developed in partnership between the government-funded Child and Youth Mental Health Services (CYMHS) and specialist, publicly funded behaviour support teams. The program was a pilot developed in response to requests from public primary school educators in and around Southeast Queensland, Australia. Ethics approval was granted by the University Human Research Ethics Committee (HREC; approval number H22REA132). A simplified logic model for the MHL program is presented in Figure 1.

Figure 1.

Program evaluation logic model.

The full MHL program offered 12 optional, topic-based modules, three of which (the modules evaluated here) presented MHL for neurodivergent students. Each module covered a specific topic and included two speakers: a speaker from the public Child and Youth Mental Health Services, who presented a 45 min 'framework for understanding' covering psychoeducation and mental health concerns, and a speaker from the specialist behaviour support team, who presented a 45 min 'framework of practice'. The 'framework of practice' included case studies illustrating the application of the key principles of the 'framework for understanding'. The content of each module was developed collaboratively by the presenters and the research team, and the program features were designed to help educators address specific learning needs [24]. The training was provided at no cost, to improve accessibility for participants who would otherwise have needed approval for government funding to attend [35].

2.1. Participants

The participants were education staff from a variety of primary schools in Southeast Queensland, Australia. The sample comprised 99 participants who attended the autism, sensory, and ADHD modules in 2013, 2014, and 2015; 81% were teachers, 12% were support teachers, 4% were teacher aides, and 3% were school leaders (principal or deputy principal). Participants were aged 22 to 59 years (M = 39.47, SD = 8.34). Approximately 96% of the sample identified as women and 4% as men. Participants' years of experience working in education ranged from 0 to 30 years (M = 10.8, SD = 6.68). Most participants were from state primary schools, as this is where the program was advertised. Participants were recruited using posters displayed in staffrooms across public primary schools in Brisbane, Southeast Queensland; posters were chosen over social media because of ethical concerns about using social media to recruit participants [36]. The only inclusion criterion was employment in a primary school setting.

2.2. Materials

Registration for each module was completed via email before training and collected participants' demographic data and their responses to the pre-training surveys. At the end of each training session, participants completed the post-training survey in exchange for a completion certificate. After the post-training surveys were completed, a single-page handout summarising the training content was provided. Four demographic items were collected (age, gender, role, and years of experience) to describe the background characteristics of the participants.

2.3. Pre-Training Measures

The pre-training survey asked participants to create a non-identifiable participant ID using numbers and letters from their birth month, their mother's maiden name, and their birthday. The pre-training survey also included a pre-knowledge measure of six true-or-false questions about the module topic, with the response options true, false, and guess or do not know. The final component of the pre-training survey was two questions rated on a 5-point Likert-style scale: 'How much prior knowledge do you feel you have in this topic?' (1 = no prior knowledge, 2 = little prior knowledge, 3 = a moderate amount, 4 = a considerable amount, and 5 = lots of prior knowledge), and 'How confident are you about this topic?' (1 = not confident, 2 = a little confident, 3 = moderately confident, 4 = considerably confident, and 5 = very confident).

2.4. Post-Training Measures

The post-training survey asked participants to re-enter their participant ID in the same format as the pre-training survey so that pre- and post-training data could be matched. The post-training survey then included six additional true-or-false questions (questions 7–12), again relating to the module attended, with the same response options (true, false, and guess or do not know). The next section assessed what participants thought they had learned, using a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Two statements were rated: (1) 'I have better knowledge on which to base my decisions and actions' and (2) 'I am confident that I will be able to use the ideas presented in this training to change how I work' (confidence rating).
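The ID-matching and knowledge-scoring steps described in Sections 2.3 and 2.4 can be sketched as follows. This is an illustration under stated assumptions, not the original team's procedure: the exact ID recipe is not reported, so the hypothetical helper `make_participant_id` builds a code from the two-digit birth month, the first two letters of the mother's maiden name, and the two-digit day of birth; the scoring rule in `score_knowledge` (1 point per correct answer, 0 for an incorrect answer or 'guess or do not know') is likewise an assumption.

```python
# Hypothetical sketch: the ID recipe and the scoring rule are assumptions.
DONT_KNOW = "guess or do not know"


def make_participant_id(birth_month: int, mothers_maiden_name: str, birth_day: int) -> str:
    """Hypothetical self-generated ID: two-digit month, first two letters of the
    mother's maiden name (upper case), two-digit day of birth."""
    return f"{birth_month:02d}{mothers_maiden_name[:2].upper()}{birth_day:02d}"


def score_knowledge(responses: list[str], answer_key: list[bool]) -> int:
    """Assumed scoring: 1 point per correct true/false answer; an incorrect
    answer or 'guess or do not know' scores 0. Responses are assumed to be
    the lower-case strings 'true', 'false', or DONT_KNOW."""
    score = 0
    for response, correct in zip(responses, answer_key):
        if response == DONT_KNOW:
            continue  # no credit for declining to answer
        if (response == "true") == correct:
            score += 1
    return score


def match_pre_post(pre: dict[str, dict], post: dict[str, dict]) -> dict[str, tuple[dict, dict]]:
    """Pair pre- and post-training records that share the same participant ID;
    IDs present in only one survey are left unmatched."""
    return {pid: (pre[pid], post[pid]) for pid in sorted(pre.keys() & post.keys())}
```

For example, `make_participant_id(3, "Smith", 14)` yields `"03SM14"`. Matching on a self-generated code keeps the surveys non-identifiable, at the cost of losing any participant who enters the code inconsistently across the two surveys.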

2.5. Satisfaction Measures

The original study collected multiple measures of presenter and training satisfaction; however, this evaluation assesses only specific ratings of trainer satisfaction. The post-training survey used a 5-point Likert scale ranging from 1 (low) to 5 (high). Educators rated the training presenters on their level of knowledge in the content area, the consistency between content and objectives, and how 'effective and helpful' they were. The questionnaire was designed by the original development team based on Kirkpatrick's Model [24]. Internal consistency was high, with a Cronbach's alpha of 0.82 for the 4-item presenter satisfaction ratings. Educators also rated training satisfaction on six factors relating to the content, training methods, and venue, using the same 5-point Likert scale. Venue was excluded because of large amounts of missing data; reliability for the remaining 5-item overall satisfaction ratings was very high (Cronbach's alpha = 0.96).
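The reliability coefficients above are Cronbach's alpha values, computed as α = k/(k − 1) × (1 − Σ item variances / variance of total scores) for k items. The sketch below applies this standard formula to item-level scores. It assumes complete data (one score per respondent on every item) and uses sample variances; how missing responses were handled in the original analysis is not described here.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of scores from the same respondents.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    item_var_sum = sum(variance(item) for item in items)
    # Each respondent's total score across all k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    return k / (k - 1) * (1 - item_var_sum / variance(totals))
```

Two limiting cases make the formula concrete: items that rise and fall together perfectly give α = 1, while items whose covariances cancel out give α = 0.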

3. Results

A sensitivity analysis was performed in G*Power (version 3.1.9.7) for a two-tailed matched-pairs t-test with a type I error rate of 0.05. The smallest detectable effect size was d = 0.37. Data analyses were performed in IBM SPSS Statistics (version 28.0.1.1 (15)) with the PROCESS macro (version 4.2). A paired-samples test was performed, followed by a mediation analysis. Little's missing completely at random (MCAR) test, using expectation-maximisation estimated means, was significant, χ²(129, N = 99) = 191.71, p < 0.001, indicating that the data were not missing completely at random. Multiple imputation, rather than mean replacement, was used for all variables with missing values to ensure consistency and efficiency [37,38]. To increase accuracy and avoid creating values not present on the original response scales, the aggregate of five imputations was used, rounded to the nearest whole number. Using Field's chi-squared table [38], the maximum Mahalanobis distance for the data set, χ²(12) = 48.69, was substantially higher than the critical chi-squared value (21.03 at p = 0.05), indicating potential multivariate outliers and concerns with multivariate normality. The Kolmogorov–Smirnov test was significant for all variables at p < 0.001. As skewness and kurtosis were not extreme, and the mediation analysis requires parametric tests, all analyses were performed with bootstrapping [37,38].
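A sensitivity analysis asks what the smallest effect is that a test could reliably detect, given the sample size and error rates. The sketch below uses a normal approximation for a two-tailed paired-samples test. The power level used in the original G*Power run is not reported, so the `power=0.95` default here is an assumption; G*Power itself uses the noncentral t distribution, so its exact answers differ slightly from this approximation.

```python
from math import sqrt
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution


def min_detectable_dz(n_pairs: int, alpha: float = 0.05, power: float = 0.95) -> float:
    """Smallest paired-samples effect size (d_z) detectable by a two-tailed test.

    Normal approximation: d_z = (z_{1 - alpha/2} + z_{power}) / sqrt(n).
    The power default of 0.95 is an assumption, not a reported setting.
    """
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    z_power = _Z.inv_cdf(power)
    return (z_alpha + z_power) / sqrt(n_pairs)


def approx_power(n_pairs: int, dz: float, alpha: float = 0.05) -> float:
    """Approximate power of a two-tailed paired test for effect size d_z."""
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    shift = dz * sqrt(n_pairs)
    # Probability of rejecting in either tail when the true effect is d_z.
    return _Z.cdf(shift - z_alpha) + _Z.cdf(-shift - z_alpha)
```

With `n_pairs=99` and the assumed power of 0.95, `min_detectable_dz` returns roughly 0.36, close to the reported d = 0.37, and `approx_power(99, 0.33)` gives roughly 0.91, in line with the post hoc power of 0.90 reported in Section 3.2.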

3.1. Participants

Approximately 96% of participants were female. Participants' ages ranged from 22 to 59 years (M = 39.48, SD = 8.38), and their years of experience ranged from 0 to 30 years (M = 10.8, SD = 6.68). Of the 99 participants who attended the training modules, 81% were teachers, 4% were teacher aides, 3% were school leaders, and 12% were support staff (specialist inclusion support practitioners working in schools).

3.2. Pre- and Post-Training Paired Samples Test

To test research questions 1, 2, and 3, two-tailed paired-samples t-tests were used to evaluate change in knowledge and confidence. All three measures of knowledge and confidence improved significantly; the results are reported in Table 1. Unsurprisingly, the effect size for the objective measure of knowledge gain was smaller than for self-rated gains, t(98) = −3.12, p = 0.002, d = 0.33, 95% CI [−0.61, −0.14]. These results suggest that the MHL neurodiversity training was effective in increasing the knowledge and confidence of primary school educators. A post hoc power analysis was performed in G*Power (version 3.1.9.7): with 99 participants, an effect size of 0.33, and α = 0.05, the achieved power was 0.90.
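The paired-samples statistics reported in Table 1 can be illustrated with a short sketch on hypothetical scores. It computes the paired t statistic (df = n − 1) and Cohen's d_z (the mean difference divided by the standard deviation of the differences), plus a percentile bootstrap confidence interval for the mean difference, echoing the bootstrapping described at the start of Section 3. Two assumptions apply: the paper does not state which variant of d it reports, and d_z is only one common choice for paired designs; and the sketch computes differences as post minus pre, so gains appear positive, whereas the negative t values reported above suggest the opposite ordering.

```python
import random
from math import sqrt
from statistics import mean, stdev


def paired_t_and_dz(pre: list[float], post: list[float]) -> tuple[float, float]:
    """Paired-samples t statistic (df = n - 1) and Cohen's d_z for post - pre."""
    diffs = [b - a for a, b in zip(pre, post)]
    sd = stdev(diffs)  # sample SD of the differences
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    dz = mean(diffs) / sd
    return t, dz


def bootstrap_mean_diff_ci(pre, post, n_boot=5000, level=0.95, seed=1):
    """Percentile bootstrap CI for the mean post - pre difference.

    Participants (i.e. their difference scores) are resampled with replacement.
    """
    rng = random.Random(seed)
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    boot_means = sorted(mean(rng.choices(diffs, k=n)) for _ in range(n_boot))
    lower = boot_means[int((1 - level) / 2 * n_boot)]
    upper = boot_means[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper
```

For example, with hypothetical scores `pre = [2, 3, 3, 4, 2]` and `post = [3, 4, 4, 5, 4]`, `paired_t_and_dz` returns t ≈ 6.0 and d_z ≈ 2.68. Note that d_z equals t / √n, so for a fixed effect it does not grow with sample size, whereas t does.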

Table 1.

Descriptive statistics and t-test results for knowledge and confidence.

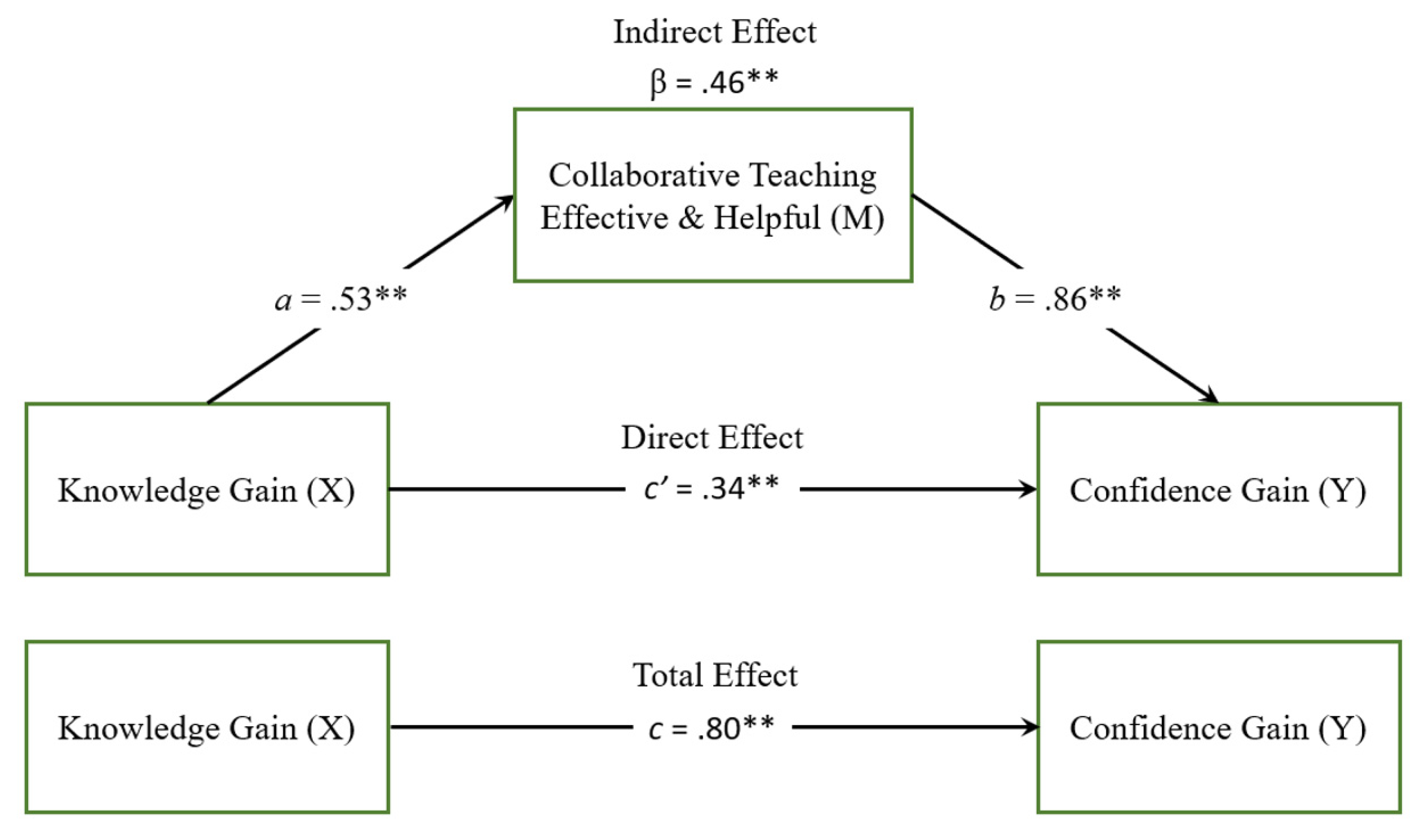

3.3. Mediation Model and Linear Regression Analysis

A linear regression analysis was conducted to explore research questions 4 and 5. The overall regression was significant, R² = 0.63, F(1, 97) = 161.47, p < 0.001, and post-knowledge significantly predicted post-confidence, β = 0.79, p < 0.001. Mediation analysis using the PROCESS macro for SPSS, which incorporates the Sobel test and bootstrapping to analyse complex effects, offers a valuable tool for developing new theoretical perspectives [39]. A mediation analysis was used to identify the mediating effect of 'effective and helpful' collaborative teaching on the relationship between knowledge and confidence gain. PROCESS Model 4 was used, with the combined 'collaborative' score (ratings of how helpful and effective the presenters were) as the mediator. Age was included as a covariate to control for an approximation of maturity and life-experience-related factors. The total effect of post-knowledge on post-confidence was significant (c: β = 0.80, t = 12.67, p < 0.001). The mediation analysis results are presented in Figure 2 and Table 2.
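PROCESS Model 4 estimates a simple mediation model with ordinary least squares and a percentile bootstrap confidence interval for the indirect effect. The sketch below reproduces that logic in plain Python for illustration only: it is not the PROCESS macro, it reports unstandardised coefficients (the paper also reports a completely standardised indirect effect), and it enters covariates such as age in both the mediator and the outcome equations, as PROCESS does by default. The function names are hypothetical.

```python
import random


def ols(y: list[float], predictors: list[list[float]]) -> list[float]:
    """OLS coefficients [intercept, b_1, ..., b_p], solved from the normal
    equations (X'X) beta = X'y by Gauss-Jordan elimination with partial pivoting."""
    rows = [[1.0, *obs] for obs in zip(*predictors)]
    p = len(rows[0])
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(p)
    ]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[i][p] for i in range(p)]


def simple_mediation(x, m, y, covariates=()):
    """Unstandardised paths of a simple mediation model X -> M -> Y.
    Covariates enter both the mediator and the outcome equations."""
    covs = [list(c) for c in covariates]
    a = ols(m, [x, *covs])[1]            # a: X -> M
    outcome = ols(y, [x, m, *covs])
    direct, b = outcome[1], outcome[2]   # c': X -> Y given M; b: M -> Y given X
    total = ols(y, [x, *covs])[1]        # c: X -> Y
    return {"a": a, "b": b, "indirect": a * b, "direct": direct, "total": total}


def _take(values, idx):
    """Select the resampled cases from one variable."""
    return [values[i] for i in idx]


def bootstrap_indirect_ci(x, m, y, covariates=(), n_boot=5000, level=0.95, seed=1):
    """Percentile bootstrap CI for the indirect effect a*b, resampling cases."""
    rng = random.Random(seed)
    n = len(x)
    covs = [list(c) for c in covariates]
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        res = simple_mediation(_take(x, idx), _take(m, idx), _take(y, idx),
                               [_take(c, idx) for c in covs])
        estimates.append(res["indirect"])
    estimates.sort()
    lower = estimates[int((1 - level) / 2 * n_boot)]
    upper = estimates[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper
```

Because the same covariates enter every equation, the OLS estimates satisfy total = direct + indirect exactly, which is a useful check on any reimplementation. The mediation is judged significant when the bootstrap interval for the indirect effect excludes zero.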

Figure 2.

Mediation model of post-training knowledge, collaborative presenter ratings, and post-training confidence. Note. Standard errors are based on bootstrapping with 5000 samples. n = 99. The dashed line illustrates a non-significant direct effect; solid lines indicate paths through the mediator. * p < 0.05, ** p < 0.01.

Table 2.

Mediation pathways, indirect, direct, and total effects.

Mediation analysis revealed strong partial mediation: the direct effect of post-knowledge on post-confidence remained significant (b = 0.34, t = 5.51, p < 0.001), and the indirect effect of post-knowledge on post-confidence through the collaborative teaching rating was also significant (b = 0.46, p < 0.001). The completely standardised indirect effect of post-knowledge on post-confidence was β = 0.46, SE = 0.06, 95% CI [0.33, 0.57]. This suggests that the mediator 'effective collaborative training' accounted for approximately 51% of the variance in participants' post-training confidence ratings (R² = 0.51), whereas the total proportion of variance in post-confidence scores explained without the mediator was 83% (R² = 0.83).
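In a simple mediation model estimated by ordinary least squares, the total effect decomposes exactly into the direct and indirect effects, provided the same covariates (here, assuming the PROCESS default, age) enter every equation. Applying this identity to the unstandardised estimates reported above gives a quick consistency check:

```latex
\begin{aligned}
c &= c' + ab \\
  &= 0.34 + 0.46 = 0.80
\end{aligned}
```

This agrees with the total effect of post-knowledge on post-confidence (0.80) reported in Section 3.3.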

4. Discussion

The current literature largely supports the use of professional development training sessions to meet the learning needs of educators. Educators' objective knowledge and subjective confidence improved significantly in all the studies reviewed, although most failed to report effect sizes [27,28,29,30,32,33,34]. Latouche and Gascoigne's study [32] was the only randomised controlled trial, and it found significant medium and large effect sizes for confidence and knowledge gains, respectively. The first three research aims of this study investigated improvements in educator confidence and in subjective and objective knowledge. Consistent with most of the existing literature, this study found large significant effects for subjective knowledge and confidence ratings, and a moderate significant effect for objective knowledge.

Several explanations for the substantial difference in effect size between objective and subjective knowledge are considered. First, educators scored relatively high on objective pre-knowledge (M = 2.8, SD = 1.2), suggesting that educators were already well informed on these topics, that the questions were too easy, or both. Second, educators rated their perceived pre-knowledge lower than their measured pre-knowledge (M = 2.4, SD = 0.6 compared to M = 2.8, SD = 1.2). Given the tendency for people to overestimate their ability, this suggests that the pre-knowledge questions used in this study were likely pitched too low [40,41]. Third, actual and perceived post-knowledge scores differed (M = 3.2, SD = 1.4 compared to M = 4, SD = 0.8), suggesting that educators substantially overestimated their knowledge, that the questions did not capture the breadth of knowledge obtained, or both. Given that only six pre-knowledge and six post-knowledge questions were asked, and that people tend to overestimate their ability, a combination of both is likely [40,41].

Although the available descriptions of these studies suggested or implied collaboratively designed training, none were collaboratively delivered. The present study found a much larger positive effect for teacher confidence gain, t(98) = −13.85, p < 0.001, d = 1.38, 95% CI [−1.78, −1.33]. Together with the mediating effect of effective collaborative delivery on the knowledge–confidence relationship, this suggests that high-quality collaborative teaching may explain the difference in confidence effects found by this study. Taken together, these findings suggest that educators' knowledge of neurodivergent MHL likely improved as a result of the training. This study supports the existing literature while providing an additional Australian perspective on the benefits of MHL and neurodiversity training for primary school educators (e.g., Schimke and colleagues' 2022 study [42]). The existing literature largely focuses on knowledge gain (outcome measurements) and reduction of symptoms (impact measurements). None of the literature identified by this study evaluated complex effects, or evaluated knowledge, confidence, and satisfaction in the same study. The authors acknowledge that while this study complements the findings of other published works, the benefits of teacher training in MHL for neurodivergent students are not yet conclusive.

Addressing research aim 4, we found that perceived knowledge gain predicted perceived confidence gain (β = 0.79, p < 0.001). While Corona and colleagues [33] found that knowledge did not predict confidence, a study of educators' perceptions of ADHD by Bussing and colleagues found that it did [43]. In a study of university students, Boswell [44] found that the relationship between knowledge gain and confidence gain strengthened as knowledge gain increased, suggesting that the more students learned, the greater their gain in confidence. The possibility of an exponential relationship was considered and analysed, but was not significant in this study. Nevertheless, consistent with Bussing and colleagues' and Boswell's studies, our results support the conclusion that perceived knowledge gain can have a large and significant impact on confidence gain in the relevant subject area.

To our knowledge, no studies evaluating professional development training have reported or explored complex effects related to knowledge or confidence gain; however, we found some similarities with studies in the related field of co-teaching in special education. Whereas this study investigated in-service professional development training of adult educators, Stefanidis and colleagues [45] conducted a meta-analysis exploring the effectiveness of co-teaching in special education and the factors moderating its outcomes. They found that the pooled standardised effect size from 28 studies was close to zero (g = −0.12; SE = 0.09; 95% CI [−0.31, 0.08]; p = 0.215), and that the moderating factors, publication date and state vs. non-state evaluation methods, had very small effect sizes [45]. These results differ from the overall themes found in our literature review on educator MHL training. However, of the studies considered, only the present study's training was explicitly delivered collaboratively.

Combining knowledge, confidence, and presenter satisfaction ratings in one study enabled exploration of the underlying factors affecting the relationship between knowledge and confidence. Examining the mediating effect of effective collaborating presenters, we found that the direct effect of knowledge on confidence remained significant with a reduced effect size (b = 0.34, t = 5.51, p < 0.001), while collaborative presenter ratings partially mediated the relationship between knowledge and confidence (standardised indirect effect β = 0.46, SE = 0.06, 95% CI [0.33, 0.57]). Therefore, knowledge indirectly accounted for approximately 51% of the increase in confidence through the ratings for effective collaborating presenters (R² = 0.51).

4.1. Theoretical Implications

Given the importance of increasing educators' confidence with neurodivergent MHL and support strategies, understanding and identifying the factors associated with positive outcomes prompts consideration of, and connections to, several theories. The type of confidence measured by this study, namely educators' confidence in their ability to use the strategies learned, aligns well with Bandura's [26] description of self-efficacy, which has been shown in meta-analyses to have a considerable impact on motivation and commitment [46,47]. Bandura's self-efficacy theory highlights the effect of vicarious learning and confidence on an individual's motivation, suggesting that presenter efficacy and confidence may influence participants' efficacy and confidence, leading to differences in participant motivation [26]. Instructional System Design (ISD) theory proposes that training must offer strong intrinsic or extrinsic motivation, and providing the 'framework for understanding' may provide this motivation [48,49].

Steingut and colleagues [50] conducted a meta-analysis of 23 studies exploring the effects of rationale provision on motivation and performance, and found that providing a rationale enhances both. In a moderation analysis, 'subjective task value' (d = 0.33, p < 0.001) and 'autonomy' (d = 0.40, p < 0.001) were the largest moderators of the overall effect of rationale on motivation and performance [50]. In the present study, the 'framework for understanding' offered a comprehensive 90 min rationale, and all participants self-selected into the training. Steingut and colleagues' findings, together with the present study, support the theory that comprehensive rationale provision, where participants have autonomy over participation, can have a dramatic positive impact on learning and confidence.

4.2. Ethical and Practical Implications and Limitations

A review of the literature suggests that longer training is not consistently associated with better results, and this study complements these findings: the three 90 min training sessions in the Teaching and Mental Health program on autism, ADHD, and sensory processing were highly effective in increasing teachers' knowledge and confidence. Furthermore, collaborative presentation may be an important mediating factor, and identifying such factors shows which aspects of a training program can be improved for the largest gains in confidence, implementation, and student wellbeing [39,42,51]. In addition, while ongoing professional development is essential for educators to stay up to date with the latest pedagogical knowledge and techniques, the training evaluated in this study was developed in response to educator needs. Core subjects in Australian education degrees do not mandate training in special education, MHL, or trauma-informed care, and internationally such training is minimal or absent [52,53,54,55]. Given the need to better prepare educators to support the mental health needs of their students, the results of this study and similar future research may have important implications for the development of university and other tertiary programs for pre-service educators.

This study has several practical limitations. First, the repeated-measures (pre-test/post-test) design, without a control group, limits the generalisability of the results: improvement due to time and chance cannot be quantified and therefore cannot be separated from improvement due to training [56]. Second, the ethical implications of teacher training must be considered. Training must be designed so that the recommended approaches and strategies align with the values held by lived-experience members of neurodivergent communities and do not cause harm or distress to students or educators. Long-term educator and student impact measures, such as educator, student, and parent satisfaction, classroom harmony, and improvements in student and educator wellbeing, were not collected in this study. Ethically valid impact measures would have provided considerable information about the long-term benefits of the Teaching and Mental Health program.

4.3. Additional Limitations

Because the Teaching and Mental Health program was a pilot, and because it aimed to meet educators’ specific learning needs, participation in the training was optional, and the sample was therefore a convenience sample. Training modules were offered as individual courses, and educators chose which to attend based on their interests or personal learning requirements. Participants who attend mandatory training have been found to have very different attitudes and motivations from those who choose to attend, which affects the generalisability of results [57]. Participants were educators in Queensland, Australia, and most came from public primary schools. Convenience sampling, the restriction to a single geographical region, and the lack of representation of private school educators limit the generalisability of the results of this evaluation.

In addition, knowledge gain was measured with only six questions before and after each training module, which may have reduced the reliability of the results. Finally, the use of archival data imposes several limitations. The original research and data collection were not designed to test a particular theory, so retrospective analysis requires an innovative approach to identify potential value within existing data and may address research questions that the original research team did not consider or plan. The study is also limited by missing data. Teacher pre-knowledge ratings had 14.1% missing cases, which were not missing completely at random. Although the missing values were replaced using multiple imputation, an accepted replacement method, imputed values remain only close approximations of the missing cases and therefore contribute error [30,31].
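The error contributed by imputation can be made concrete. When multiple imputation is used, the estimates from the m imputed datasets are usually combined with Rubin’s rules, under which the total variance is T = W̄ + (1 + 1/m)B, where W̄ is the average within-imputation variance and B is the between-imputation variance. The sketch below assumes that pooling scheme (the text does not state how the imputed datasets were combined), and the five estimates are invented for illustration, not taken from this study.

```python
import math
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool one parameter across m imputed datasets using Rubin's rules.

    estimates: point estimate from each imputed dataset
    variances: squared standard error from each imputed dataset
    """
    m = len(estimates)
    if m < 2 or m != len(variances):
        raise ValueError("need at least two imputations with matching variances")
    q_bar = mean(estimates)          # pooled point estimate
    w_bar = mean(variances)          # average within-imputation variance
    b = variance(estimates)          # between-imputation variance (m - 1 denominator)
    t = w_bar + (1 + 1 / m) * b      # total variance carried into inference
    return q_bar, w_bar, b, t, math.sqrt(t)


# Hypothetical mean pre-training knowledge scores from m = 5 imputed datasets
# (illustrative numbers only, not results from this study)
est = [3.10, 3.25, 3.05, 3.20, 3.15]
var = [0.010, 0.011, 0.009, 0.010, 0.010]

q_bar, w_bar, b, t, se = pool_rubin(est, var)
print(f"pooled estimate = {q_bar:.3f}, pooled SE = {se:.4f}")
print(f"within-imputation variance = {w_bar:.4f}, between-imputation variance = {b:.5f}")
```

Because B is never negative, T is never smaller than W̄: the more the imputed datasets disagree, the wider the pooled standard error becomes, which is how imputation error is carried into the final inference.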

4.4. Directions for Future Research

Cluster randomised controlled trials replicating the current study would remove selection bias and reduce sources of error. Randomly allocating participants, or groups of participants, allows probability theory to be used to assess whether differences in post-intervention outcomes are likely to be due to chance [58]. Cluster randomisation is particularly important in the teaching environment. If individual teachers were randomised, results could be contaminated within schools, because trained teachers may collaborate and share strategies with colleagues in the control group. Cluster randomisation reduces this risk by grouping educators by school and randomising each school cluster rather than individual educators [59].
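The school-level allocation described above can be sketched in a few lines of Python. The teacher and school identifiers are hypothetical, and the simple half-and-half split of schools is an assumption; real trials often stratify or match schools before randomising.

```python
import random
from collections import defaultdict


def cluster_randomise(teacher_school, seed=2024):
    """Randomise whole schools (clusters) to 'training' or 'control'.

    teacher_school: mapping of teacher ID -> school ID (hypothetical IDs).
    Returns a mapping of teacher ID -> arm, so every teacher inherits
    their school's allocation and no school is split across arms.
    """
    schools = sorted(set(teacher_school.values()))
    rng = random.Random(seed)  # seeded so the allocation can be reproduced and audited
    rng.shuffle(schools)
    half = len(schools) // 2   # with an odd number of schools, control gets the extra one
    school_arm = {s: ("training" if i < half else "control") for i, s in enumerate(schools)}
    return {t: school_arm[s] for t, s in teacher_school.items()}


# Hypothetical example: 12 teachers spread across 4 schools
teachers = {f"T{i:02d}": f"School-{'ABCD'[i % 4]}" for i in range(12)}
allocation = cluster_randomise(teachers)

# Check that each school's teachers all landed in the same arm
arms_by_school = defaultdict(set)
for teacher, arm in allocation.items():
    arms_by_school[teachers[teacher]].add(arm)
for school, arms in sorted(arms_by_school.items()):
    print(school, sorted(arms))
```

Because teachers in the same school tend to respond similarly, a cluster trial generally needs more participants than an individually randomised one; the usual inflation factor (design effect) is 1 + (k − 1)ρ, where k is the average cluster size and ρ is the intracluster correlation.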

Mandatory MHL or neurodiversity training for educators would likely be counterproductive and at odds with adult learning theory [60]. However, combining cluster randomisation with delivering the training at the designated schools could improve generalisability by making the training more accessible. Given existing requirements in Australia for educators to participate in ongoing professional development, making MHL training more convenient is likely to increase attendance [61,62]. Future studies should implement integrated and streamlined evaluation measures to reduce the risk of missing data and ensure that all measures use the same scale. A larger sample would also provide a buffer, allowing systematically missing data to be trimmed without substantially reducing statistical power [38].

Future studies would be improved by measuring impacts, specifically by including independently assessed educator implementation fidelity and ethically valid measures of student outcomes. Including ethically valid measures of the benefits of training for neurodivergent students and for classroom harmony at multiple time-points would make a considerable contribution to this field of research. Long-term improvements to the classroom environment and to student outcomes are the ultimate aim of educator training, and measuring them with ethically valid instruments would considerably improve the validity and value of research findings.

Future research exploring the complex effects of collaborative training and rationale provision, evaluated in conjunction with implementation fidelity and ethical outcome measures, would provide valuable insights into program efficacy and inform the design of future programs. Finally, future studies should include primary schools from multiple Australian states and territories and from the private, denominational, and public sectors. A sample that better represents Australia’s multicultural and socioeconomically diverse society would improve the generalisability of findings. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

4.5. Conclusions

This evaluation found that the co-designed and collaboratively delivered MHL program increased educator confidence and knowledge. Collecting multiple measures in one study made it possible to explore complex effects, offering new insights into the factors underlying the relationship between knowledge gain and confidence gain. Ratings of the collaborative mental health presenters’ efficacy and helpfulness partially mediated the relationship between teacher knowledge and confidence gain. Through the ‘framework for understanding’, the mental health presenters gave participants a rationale for learning and engaging with the training. Presenter efficacy and confidence may influence participant efficacy and confidence, leading to differences in participant motivation; more research is needed to test these hypotheses. This study highlights the need for neurodiversity training design and evaluation to emphasise a social model of disability, promoting support for neurodiverse individuals and avoiding methods that promote restrictive practices, segregation, or an excessive focus on symptoms. These findings also highlight the need for MHL training to be co-designed and delivered collaboratively, to ensure a strengths-based, solutions-focused, and contextually relevant program that supports educators of neurodivergent students.
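What “partially mediated” means can be illustrated with a simple mediation model: the total effect c of X on Y splits into a direct effect c′ and an indirect effect a × b that runs through the mediator M, and partial mediation means both parts are non-zero. The sketch below uses simulated data, with X standing in for knowledge gain, M for presenter ratings, and Y for confidence gain; the coefficients are assumptions chosen for illustration, and this is not a reproduction of the study’s own analysis.

```python
import random


def slope(x, y):
    """Simple OLS slope of y on x (with intercept)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def two_predictor_slopes(x, m, y):
    """OLS slopes of y on x and m together, solving the 2x2 normal equations on centred data."""
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    xc = [v - mx for v in x]
    mc = [v - mm for v in m]
    yc = [v - my for v in y]
    sxx = sum(v * v for v in xc)
    smm = sum(v * v for v in mc)
    sxm = sum(p * q for p, q in zip(xc, mc))
    sxy = sum(p * q for p, q in zip(xc, yc))
    smy = sum(p * q for p, q in zip(mc, yc))
    det = sxx * smm - sxm ** 2
    c_prime = (smm * sxy - sxm * smy) / det  # direct effect of X, controlling for M
    b = (sxx * smy - sxm * sxy) / det        # effect of M, controlling for X
    return c_prime, b


# Simulated data (assumed effect sizes, not study data)
rng = random.Random(42)
n = 500
x = [rng.gauss(0, 1) for _ in range(n)]                               # X: knowledge gain
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]                          # M: presenter rating
y = [0.3 * xi + 0.4 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]   # Y: confidence gain

c = slope(x, y)                              # total effect of X on Y
a = slope(x, m)                              # path X -> M
c_prime, b = two_predictor_slopes(x, m, y)   # direct effect and path M -> Y
print(f"total c = {c:.3f}, direct c' = {c_prime:.3f}, indirect a*b = {a * b:.3f}")
```

In ordinary least squares the decomposition c = c′ + a × b holds exactly within a sample, so a shrinking but still non-zero direct effect is the signature of partial mediation; formal tests of the indirect effect (for example, bootstrapped confidence intervals) are needed before drawing inferences.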

Author Contributions

Conceptualization, R.T., G.K. and E.C.F.; methodology, G.K.; validation, R.T. and E.C.F.; formal analysis, R.T. and E.C.F.; investigation, G.K.; resources, G.K.; data curation, R.T.; writing—original draft preparation, R.T. and G.K.; writing—review and editing, G.K. and E.C.F.; visualization, R.T.; supervision, E.C.F.; project administration, G.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the University of Southern Queensland’s Human Research Ethics Committee (HREC; approved H22REA132, Approval date: 8 June 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author in accordance with the privacy regulations of the participating industry partners.

Conflicts of Interest

The authors declare no conflict of interest.

References

- McLean, S. Supporting Children with Neurodiversity; Child Family Community Australia, Australian Institute of Family Studies: Melbourne, Australia, 2022.

- Eapen, V. Developmental and mental health disorders: Two sides of the same coin. Asian J. Psychiatry 2014, 8, 7–11.

- Boulton, K.A.; Guastella, A.J.; Hodge, M.-A.; Demetriou, E.A.; Ong, N.; Silove, N. Mental health concerns in children with neurodevelopmental conditions attending a developmental assessment service. J. Affect. Disord. 2023, 335, 264–272.

- Nielsen, T.C.; Nassar, N.; Boulton, K.A.; Guastella, A.J.; Lain, S.J. Estimating the prevalence of autism spectrum disorder in New South Wales, Australia: A data linkage study of three routinely collected datasets. J. Autism Dev. Disord. 2024, 54, 1558–1566.

- Lawrence, D.; Johnson, S.; Hafekost, J.; Boterhoven de Haan, K.; Sawyer, M.; Ainley, J.; Zubrick, S.R. The Mental Health of Children and Adolescents: Report on the Second Australian Child and Adolescent Survey of Mental Health and Wellbeing; Department of Health: Canberra, Australia, 2015.

- Kieling, C.; Baker-Henningham, H.; Belfer, M.; Conti, G.; Ertem, I.; Omigbodun, O.; Rohde, L.A.; Srinath, S.; Ulkuer, N.; Rahman, A. Child and adolescent mental health worldwide: Evidence for action. Lancet 2011, 378, 1515–1525.

- AIHW. Australia’s Children; CWS 69; Australian Institute of Health and Welfare (AIHW): Canberra, Australia, 2020.

- RANZCP. The Economic Cost of Serious Mental Illness and Comorbidities in Australia and New Zealand; RANZCP: Melbourne, Australia, 2016.

- Bloom, D.E.; Cafiero, E.; Jané-Llopis, E.; Abrahams-Gessel, S.; Bloom, L.R.; Fathima, S.; Feigl, A.B.; Gaziano, T.; Hamandi, A.; Mowafi, M. The Global Economic Burden of Noncommunicable Diseases; Program on the Global Demography of Aging: Geneva, Switzerland, 2012.

- Kessler, R.C.; Amminger, G.P.; Aguilar-Gaxiola, S.; Alonso, J.; Lee, S.; Üstün, T.B. Age of onset of mental disorders: A review of recent literature. Curr. Opin. Psychiatry 2007, 20, 359–364.

- Stephenson, J.; Browne, L.; Carter, M.; Clark, T.; Costley, D.; Martin, J.; Williams, K.; Bruck, S.; Davies, L.; Sweller, N. Facilitators and barriers to inclusion of students with autism spectrum disorder: Parent, teacher, and principal perspectives. Australas. J. Spec. Incl. Educ. 2021, 45, 1–17.

- Goodsell, B.; Lawrence, D.; Ainley, J.; Sawyer, M.; Zubrick, S.; Maratos, J. Child and Adolescent Mental Health and Educational Outcomes. An Analysis of Educational Outcomes from Young Minds Matter: The Second Australian Child and Adolescent Survey of Mental Health and Wellbeing; 1740523857; The University of Western Australia: Crawley, Australia, 2017.

- Lundström, S.; Taylor, M.; Larsson, H.; Lichtenstein, P.; Kuja-Halkola, R.; Gillberg, C. Perceived child impairment and the ‘autism epidemic’. J. Child Psychol. Psychiatry 2022, 63, 591–598.

- Eldevik, S.; Hastings, R.P.; Hughes, J.C.; Jahr, E.; Eikeseth, S.; Cross, S. Meta-analysis of early intensive behavioral intervention for children with autism. J. Clin. Child Adolesc. Psychol. 2009, 38, 439–450.

- Shephard, E.; Zuccolo, P.F.; Idrees, I.; Godoy, P.B.; Salomone, E.; Ferrante, C.; Sorgato, P.; Catao, L.F.; Goodwin, A.; Bolton, P.F. Systematic review and meta-analysis: The science of early-life precursors and interventions for attention-deficit/hyperactivity disorder. J. Am. Acad. Child Adolesc. Psychiatry 2022, 61, 187–226.

- Virués-Ortega, J. Applied behavior analytic intervention for autism in early childhood: Meta-analysis, meta-regression and dose–response meta-analysis of multiple outcomes. Clin. Psychol. Rev. 2010, 30, 387–399.

- Feeney-Kettler, K.A.; Kratochwill, T.R.; Kaiser, A.P.; Hemmeter, M.L.; Kettler, R.J. Screening young children’s risk for mental health problems: A review of four measures. Assess. Eff. Interv. 2010, 35, 218–230.

- Loades, M.E.; Mastroyannopoulou, K. Teachers’ recognition of children’s mental health problems. Child Adolesc. Ment. Health 2010, 15, 150–156.

- Kickbusch, I.; World Health Organization; Pelikan, J.M.; Apfel, F. Health Literacy. The Solid Facts; WHO Regional Office for Europe: Copenhagen, Denmark, 2013.

- Jennings, P.A.; Greenberg, M.T. The prosocial classroom: Teacher social and emotional competence in relation to student and classroom outcomes. Rev. Educ. Res. 2009, 79, 491–525.

- Anderson, M.; Werner-Seidler, A.; King, C.; Gayed, A.; Harvey, S.B.; O’Dea, B. Mental health training programs for secondary school teachers: A systematic review. Sch. Ment. Health 2019, 11, 489–508.

- Yamaguchi, S.; Foo, J.C.; Nishida, A.; Ogawa, S.; Togo, F.; Sasaki, T. Mental health literacy programs for school teachers: A systematic review and narrative synthesis. Early Interv. Psychiatry 2020, 14, 14–25.

- Yates, B.T. Research on improving outcomes and reducing costs of psychological interventions: Toward delivering the best to the most for the least. Annu. Rev. Clin. Psychol. 2020, 16, 125–150.

- Krishnamoorthy, G. Teaching and Mental Health: Mental Health Literacy for Educators, Training Evaluation Report; Mater Child And Youth Mental Health Service: Inala, Australia, 2014.

- Bowyer, M.; Fein, E.C.; Krishnamoorthy, G. Teacher mental health literacy and child development in Australian primary schools: A program evaluation. Educ. Sci. 2023, 13, 329.

- Bandura, A. Self-efficacy: Toward a unifying theory of behavioral change. Psychol. Rev. 1977, 84, 191.

- Aguiar, A.P.; Kieling, R.R.; Costa, A.C.; Chardosim, N.; Dorneles, B.V.; Almeida, M.R.; Mazzuca, A.C.; Kieling, C.; Rohde, L.A. Increasing Teachers’ Knowledge About ADHD and Learning Disorders: An Investigation on the Role of a Psychoeducational Intervention. J. Atten. Disord. 2014, 18, 691–698.

- Lasisi, D.; Ani, C.; Lasebikan, V.; Sheikh, L.; Omigbodun, O. Effect of attention-deficit–hyperactivity-disorder training program on the knowledge and attitudes of primary school teachers in Kaduna, North West Nigeria. Child Adolesc. Psychiatry Ment. Health 2017, 11, 1–8.

- Shehata, A.; Mahrous, E.; Farrag, E.; Hassan, Z. Effectiveness of structured teaching program on knowledge, attitude, and management strategies among teachers of primary school toward children with attention deficit hyperactivity disorder. IOSR J. Nurs. Health Sci. 2016, 5, 29–37.

- Syed, E.U.; Hussein, S.A. Increase in teachers’ knowledge about ADHD after a week-long training program: A pilot study. J. Atten. Disord. 2010, 13, 420–423.

- Ward, R.J.; Bristow, S.J.; Kovshoff, H.; Cortese, S.; Kreppner, J. The effects of ADHD teacher training programs on teachers and pupils: A systematic review and meta-analysis. J. Atten. Disord. 2022, 26, 225–244.

- Latouche, A.P.; Gascoigne, M. In-service training for increasing teachers’ ADHD knowledge and self-efficacy. J. Atten. Disord. 2019, 23, 270–281.

- Corona, L.L.; Christodulu, K.V.; Rinaldi, M.L. Investigation of school professionals’ self-efficacy for working with students with ASD: Impact of prior experience, knowledge, and training. J. Posit. Behav. Interv. 2017, 19, 90–101.

- Kossewska, J.; Bombińska-Domżał, A.; Cierpiałowska, T.; Lubińska-Kościółek, E.; Niemiec, S.; Płoszaj, M.; Preece, D.R. Towards inclusive education of children with Autism Spectrum Disorder. The impact of teachers’ autism-specific professional development on their confidence in their professional competences. Int. J. Spec. Educ. 2021, 36, 27–35.

- Hardy, I. The Politics of Teacher Professional Development: Policy, Research and Practice; Routledge: Brighton, UK, 2012.

- Hokke, S.; Hackworth, N.J.; Bennetts, S.K.; Nicholson, J.M.; Keyzer, P.; Lucke, J.; Zion, L.; Crawford, S.B. Ethical considerations in using social media to engage research participants: Perspectives of Australian researchers and ethics committee members. J. Empir. Res. Hum. Res. Ethics 2020, 15, 12–27.

- Fein, E.C.; Gilmour, J.; Machin, T.; Hendry, L. Statistics for Research Students: An Open Access Resource with Self-Tests and Illustrative Examples; University of Southern Queensland: Queensland, Australia, 2022.

- Field, A. Discovering Statistics Using IBM SPSS Statistics; Sage Publications Limited: London, UK, 2024.

- Abu-Bader, S.; Jones, T.V. Statistical mediation analysis using the Sobel test and Hayes SPSS PROCESS macro. Int. J. Quant. Qual. Res. Methods 2021, 24, 1918–1927.

- Lee, E.J. Biased self-estimation of maths competence and subsequent motivation and achievement: Differential effects for high- and low-achieving students. Educ. Psychol. 2021, 41, 446–466.

- Mabe, P.A.; West, S.G. Validity of self-evaluation of ability: A review and meta-analysis. J. Appl. Psychol. 1982, 67, 280.

- Schimke, D.; Krishnamoorthy, G.; Ayre, K.; Berger, E.; Rees, B. Multi-tiered culturally responsive behavior support: A qualitative study of trauma-informed education in an Australian primary school. Front. Educ. 2022, 7, 866266.

- Bussing, R.; Gary, F.A.; Leon, C.E.; Garvan, C.W.; Reid, R. General Classroom Teachers’ Information and Perceptions of Attention Deficit Hyperactivity Disorder. Behav. Disord. 2002, 27, 327–339.

- Boswell, S.S. Undergraduates’ Perceived Knowledge, Self-Efficacy, and Interest in Social Science Research. J. Eff. Teach. 2013, 13, 48–57.

- Stefanidis, A.; King-Sears, M.E.; Strogilos, V.; Berkeley, S.; DeLury, M.; Voulagka, A. Academic achievement for students with and without disabilities in co-taught classrooms: A meta-analysis. Int. J. Educ. Res. 2023, 120, 102208.

- Chesnut, S.R.; Burley, H. Self-efficacy as a predictor of commitment to the teaching profession: A meta-analysis. Educ. Res. Rev. 2015, 15, 1–16.

- Stajkovic, A.D.; Luthans, F. Self-efficacy and work-related performance: A meta-analysis. Psychol. Bull. 1998, 124, 240.

- Reigeluth, C.M. Instructional-Design Theories and Models: A New Paradigm of Instructional Theory, Volume II; Routledge: Brighton, UK, 2013.

- Reigeluth, C.M.; Myers, R.D.; Lee, D. The learner-centered paradigm of education. In Instructional-Design Theories and Models, Volume IV; Routledge: Brighton, UK, 2016; pp. 5–32.

- Steingut, R.R.; Patall, E.A.; Trimble, S.S. The effect of rationale provision on motivation and performance outcomes: A meta-analysis. Motiv. Sci. 2017, 3, 19.

- Bellamy, T.; Krishnamoorthy, G.; Ayre, K.; Berger, E.; Machin, T.; Rees, B.E. Trauma-informed school programming: A partnership approach to culturally responsive behavior support. Sustainability 2022, 14, 3997.

- Hess, K.L.; Morrier, M.J.; Heflin, L.J.; Ivey, M.L. Autism treatment survey: Services received by children with autism spectrum disorders in public school classrooms. J. Autism Dev. Disord. 2008, 38, 961–971.

- Morrier, M.J.; Hess, K.L.; Heflin, L.J. Teacher training for implementation of teaching strategies for students with autism spectrum disorders. Teach. Educ. Spec. Educ. 2011, 34, 119–132.

- Autism Spectrum Australia. Australian Government Initial Teacher Education Review: Autism Spectrum Australia (Aspect) Submission; Autism Spectrum Australia: Chatswood, Australia, 2021.

- Collier, S.; Trimmer, K.; Krishnamoorthy, G. Roadblocks and enablers for teacher engagement in professional development opportunities aimed at supporting trauma-informed classroom pedagogical practice. J. Grad. Educ. Res. 2022, 3, 51–65.

- McLeod, S. Controlled Experiment. Available online: https://www.simplypsychology.org/controlled-experiment.html (accessed on 26 October 2023).

- Ricci, F.; Chiesi, A.; Bisio, C.; Panari, C.; Pelosi, A. Effectiveness of occupational health and safety training: A systematic review with meta-analysis. J. Workplace Learn. 2016, 28, 355–377.

- Cook, A.J.; Delong, E.; Murray, D.M.; Vollmer, W.M.; Heagerty, P.J. Statistical lessons learned for designing cluster randomized pragmatic clinical trials from the NIH Health Care Systems Collaboratory Biostatistics and Design Core. Clin. Trials 2016, 13, 504–512.

- Hemming, K.; Girling, A. Cluster randomised trials: Useful for interventions delivered to groups. BJOG: Int. J. Obstet. Gynaecol. 2018, 126, 340.

- Short, A. Mandatory pedagogical training for all staff in higher education by 2020—A great idea or bridge too far. J. Eur. High. Educ. Area 2015, 3, 1–21.

- Alqarni, A.S. Technology as a Motivational Factor for Using Online Professional Development for Saudi Teachers. Int. J. Comput. Sci. Netw. Secur. 2022, 22, 455–460.

- Mann, D.; Mann, B. Professional Development. Available online: http://www.aussieeducator.org.au/teachers/professionaldevelopment.html (accessed on 14 March 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).