The Mediating Role of Generative AI Self-Regulation on Students’ Critical Thinking and Problem-Solving

Abstract

1. Introduction

2. Literature Review and Hypothesis Development

2.1. The Impact of AI on Skill Development

2.2. The Mediating Role of Self-Regulation

3. Method

3.1. Instrument and Sample

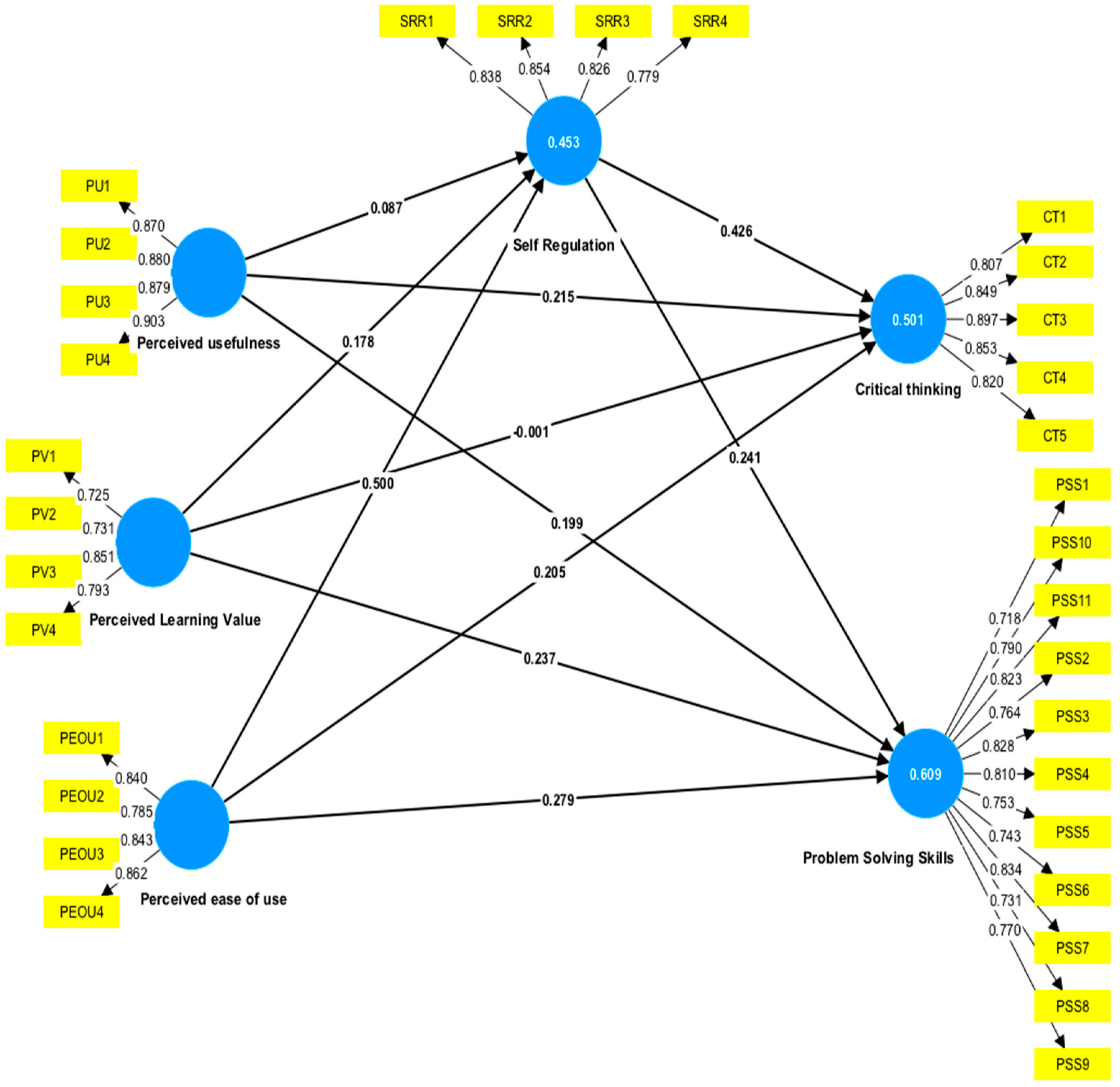

3.2. Model Evaluation

4. Results

5. Discussion

5.1. The Impact of GenAI on Employability Development

5.2. Results of the Mediating Role of Self-Regulation

5.3. Theoretical and Practical Implications

5.4. Limitation and Future Direction

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- O’Dea, X. Generative AI: Is it a paradigm shift for higher education? Stud. High. Educ. 2024, 49, 1–6.

- Molenaar, I.; de Mooij, S.; Azevedo, R.; Bannert, M.; Järvelä, S.; Gašević, D. Measuring self-regulated learning and the role of AI: Five years of research using multimodal multichannel data. Comput. Hum. Behav. 2023, 139, 107540.

- Essien, A.; Bukoye, O.T.; O’Dea, X.; Kremantzis, M. The influence of AI text generators on critical thinking skills in UK business schools. Stud. High. Educ. 2024, 49, 865–882.

- Lodge, J.M.; de Barba, P.; Broadbent, J. Learning with generative artificial intelligence within a network of co-regulation. J. Univ. Teach. Learn. Pract. 2023, 20.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Alfadda, H.A.; Mahdi, H.S. Measuring students’ use of Zoom application in language course based on the technology acceptance model (TAM). J. Psycholing. Res. 2021, 50, 883–900.

- Maheshwari, G. Factors influencing entrepreneurial intentions the most for university students in Vietnam: Educational support, personality traits or TPB components? Edu. Train. 2021, 63, 1138–1153.

- Penkauskienė, D.; Railienė, A.; Cruz, G. How is critical thinking valued by the labour market? Employer perspectives from different European countries. Stud. High. Educ. 2019, 44, 804–815.

- Paul, R.; Elder, L. The Miniature Guide to Critical Thinking Concepts and Tools; Rowman & Littlefield: Lanham, MD, USA, 2019.

- Ennis, R.H. Critical thinking across the curriculum: A vision. Topoi 2018, 37, 165–184.

- Davies, M.; Barnett, R. (Eds.) The Palgrave Handbook of Critical Thinking in Higher Education; Palgrave Macmillan US: New York, NY, USA, 2015.

- Bailey, D.; Almusharraf, N.; Hatcher, R. Finding Satisfaction: Intrinsic Motivation for Synchronous and Asynchronous Communication in the Online Language Learning Context; Springer: New York, NY, USA, 2021; p. 3.

- Walter, Y. Embracing the future of artificial intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. Int. J. Educ. Technol. High. Educ. 2024, 21, 15.

- Farrokhnia, M.; Banihashem, S.K.; Noroozi, O.; Wals, A. A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov. Educ. Teach. Int. 2023, 61, 460–474.

- Sun, G.H.; Hoelscher, S.H. The ChatGPT storm and what faculty can do. Nurse Educ. 2023, 48, 119–124, advance online publication.

- Musi, E.; Carmi, E.; Reed, C.; Yates, S.; O’Halloran, K. Developing misinformation immunity: How to reason-check fallacious news in a human–computer interaction environment. Soc. Media Soc. 2023, 9.

- Cam, E.; Kiyici, M. The impact of robotics assisted programming education on academic success, problem solving skills and motivation. JETOL 2022, 5, 47–65.

- Urban, M.; Děchtěrenko, F.; Lukavský, J.; Hrabalová, V.; Švacha, F.; Brom, C.; Urban, K. ChatGPT improves creative problem-solving performance in university students: An experimental study. Comput. Educ. 2024, 215, 105031.

- Schunk, D.H.; Zimmerman, B.J. (Eds.) Handbook of Self-Regulation of Learning and Performance; Taylor & Francis: Abingdon, UK, 2018.

- Beckman, K.; Apps, T.; Bennett, S.; Dalgarno, B.; Kennedy, G.; Lockyer, L. Self-regulation in open-ended online assignment tasks: The importance of initial task interpretation and goal setting. Stud. High. Educ. 2021, 46, 821–835.

- Lau, J.Y. Metacognitive Education: Going Beyond Critical Thinking. In The Palgrave Handbook of Critical Thinking in Higher Education; Davies, M., Barnett, R., Eds.; Palgrave Macmillan US: New York, NY, USA, 2015; pp. 373–389.

- Winne, P.H. Leveraging Big Data to Help Each Learner and Accelerate Learning Science. Teach. Coll. Rec. Voice Scholarsh. Educ. 2017, 119, 1–24.

- Greene, J.A.; Azevedo, R. A theoretical review of Winne and Hadwin’s model of self-regulated learning: New perspectives and directions. Rev. Educ. Res. 2007, 77, 334–372.

- Seufert, T. The interplay between self-regulation in learning and cognitive load. Educ. Res. Rev. 2018, 24, 116–129.

- Zhou, X.; Schofield, L. Using social learning theories to explore the role of generative artificial intelligence (AI) in collaborative learning. JLDHE 2024, 30.

- Zhou, X.; Zhang, J.J.; Chen, C. Unveiling students’ experiences and perceptions of artificial intelligence usage in higher education. J. Univ. Teach. Learn. Pract. 2024, 21.

- Pinto, P.H.R.; de Araujo, V.M.U.; Junior, C.D.S.F.; Goulart, L.L.; Beltrão, J.V.C.; Aguiar, G.S.; Avelino, E.L. Assessing the psychological impact of generative AI on data science education: An exploratory study. Preprints 2023, 2023120379.

- Li, K. Determinants of college students’ actual use of AI-based systems: An extension of the technology acceptance model. Sustainability 2023, 15, 5221.

- Al-Abdullatif, A.M. Modeling Students’ Perceptions of Chatbots in Learning: Integrating Technology Acceptance with the Value-Based Adoption Model. Educ. Sci. 2023, 13, 1151.

- Alves, H. The measurement of perceived value in higher education: A unidimensional approach. Serv. Ind. J. 2011, 31, 1943–1960.

- Zimmerman, B.J. Attaining Self-Regulation: A Social Cognitive Perspective. In Handbook of Self-Regulation; Boekaerts, M., Pintrich, P.R., Zeidner, M., Eds.; Elsevier: Amsterdam, The Netherlands, 2000; pp. 13–39.

- Pintrich, P.R. A Manual for the Use of the Motivated Strategies for Learning Questionnaire (MSLQ); U.S. Department of Education: Washington, DC, USA, 1991.

- Rosenbaum, M. A schedule for assessing self-control behaviors: Preliminary findings. Behav. Ther. 1980, 11, 109–121.

- Kang, H. Sample size determination and power analysis using the G*Power software. J. Educ. Eval. Health Prof. 2021, 18.

- Muthén, B.; Kaplan, D.; Hollis, M. On structural equation modeling with data that are not missing completely at random. Psychometrika 1987, 52, 431–462.

- Bentler, P.M.; Speckart, G. Models of attitude–behavior relations. Psychol. Rev. 1979, 86, 452.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Hu, L.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 1999, 6, 1–55.

- Ghotbi, N.; Ho, M.T.; Mantello, P. Attitude of college students towards ethical issues of artificial intelligence in an international university in Japan. AI Soc. 2022, 37, 283–290.

- Chan, C.K.Y.; Hu, W. Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 43.

- Rudolph, J.; Tan, S.; Tan, S. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 2023, 6, 342–363.

| Variables | Items | Source |
|---|---|---|
| Perceived Usefulness | 1. Using GenAI improves my performance in my study. | Davis (1989) [5] |
| | 2. Using GenAI in my study increases my productivity. | |
| | 3. Using GenAI enhances my effectiveness in my study. | |
| | 4. I find GenAI to be useful in my study. | |
| Perceived Learning Value | 1. The experience I have gained in using GenAI will help me get a good job. (Future goals) | Alves (2011) [30] |
| | 2. Taking into consideration the price I pay for using GenAI (fees, charges, etc.), I believe GenAI provides quality service. (Trade-off price/quality) | |
| | 3. Compared with other learning-supporting software, I consider that I receive quality service for the price that I pay for GenAI. (Comparison with alternatives) | |
| | 4. I feel happy about my choice of GenAI tools. (Emotion) | |
| Perceived Ease of Use | 1. My interaction with GenAI is clear and understandable. | Davis (1989) [5] |
| | 2. Interacting with GenAI does not require a lot of my mental effort. | |
| | 3. I find GenAI to be easy to use. | |
| | 4. I find it easy to get GenAI to do what I want it to do. | |
| Self-Regulation | When I use GenAI to read for this course, I make up questions to help focus my reading. | Pintrich (1991) [32] |
| | If the materials provided by GenAI are difficult to understand, I am able to change the way I read the material. | |
| | I try to change the way I use GenAI for study in order to fit the course requirements and instructors’ teaching style. | |
| | When I use GenAI, I try to think through a topic and decide what I am supposed to learn from it rather than just reading it over. | |
| Critical Thinking | 1. I often find myself questioning things I read from GenAI to decide if I find them convincing. | Pintrich (1991) [32] |
| | 2. When a theory, interpretation or conclusion is presented in GenAI, I try to decide if there is good supporting evidence. | |
| | 3. I treat GenAI content as a starting point and try to develop my own ideas about it. | |
| | 4. I try to play around with ideas of my own related to what I am learning in GenAI. | |
| | 5. Whenever I read an assertion or conclusion generated by GenAI, I think about possible alternatives. | |
| Problem Solving | 1. When I use GenAI to do my learning tasks, I think about the less boring parts of the task and the reward that I will receive once I am finished. | Rosenbaum (1980) [33] |
| | 2. When I have to do something that is anxiety arousing for me, I try to visualize how I will overcome my anxieties while doing it with GenAI. | |
| | 3. When I am faced with a difficult problem, I try to approach its solution in a systematic way using GenAI. | |
| | 4. When I find that I have difficulties in concentrating on my learning, I look for ways to increase my concentration with GenAI. | |
| | 5. When I plan to learn with GenAI, I remove all the things that are not relevant to my learning. | |
| | 6. When I use GenAI to get rid of a bad habit, I first try to find out all the factors that maintain this habit. | |
| | 7. When I find it difficult to settle down and do a certain task, I use GenAI to help me look for ways to settle down. | |
| | 8. GenAI tools help me to finish a learning task I have to do and then start doing the things I really like. | |
| | 9. Facing the need to make a decision I usually find out all the possible alternatives with the help of GenAI instead of deciding quickly and spontaneously. | |
| | 10. I usually plan my work with GenAI when faced with a number of things to do. | |
| | 11. If I find it difficult to concentrate on a certain task, I use GenAI to help me divide the job into smaller segments. | |

| Details | | Respondents | Percentage |
|---|---|---|---|
| Gender | Female | 148 | 67% |
| | Male | 75 | 33% |
| Age | 18–21 years | 121 | 54.26% |
| | 22–25 years | 62 | 27.80% |
| | 26–30 years | 18 | 8.07% |
| | Over 30 years | 22 | 9.87% |
| Academic Level | Undergraduate | 155 | 69.51% |
| | Master’s | 65 | 29.15% |
| | PhD | 3 | 1.35% |

| | Cronbach’s Alpha | Composite Reliability (rho_a) | Composite Reliability (rho_c) | Average Variance Extracted (AVE) |
|---|---|---|---|---|
| Critical thinking | 0.900 | 0.901 | 0.926 | 0.716 |
| Perceived learning value | 0.782 | 0.797 | 0.858 | 0.603 |
| Perceived ease of use | 0.854 | 0.867 | 0.901 | 0.694 |
| Perceived usefulness | 0.906 | 0.910 | 0.934 | 0.780 |
| Problem-solving skills | 0.935 | 0.937 | 0.944 | 0.608 |
| Self-regulation | 0.843 | 0.843 | 0.895 | 0.680 |
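The reliability figures in the table above can be screened against cutoffs in a few lines. The following is a minimal sketch, not the authors' procedure: the cutoffs (0.70 for Cronbach's alpha and composite reliability, 0.50 for AVE) are commonly used conventions assumed here, since this excerpt does not state which thresholds were applied, and the names `RELIABILITY`, `THRESHOLDS`, and `below_threshold` are illustrative.

```python
# Values transcribed from the reliability table above.
RELIABILITY = {
    "Critical thinking":        {"alpha": 0.900, "rho_a": 0.901, "rho_c": 0.926, "ave": 0.716},
    "Perceived learning value": {"alpha": 0.782, "rho_a": 0.797, "rho_c": 0.858, "ave": 0.603},
    "Perceived ease of use":    {"alpha": 0.854, "rho_a": 0.867, "rho_c": 0.901, "ave": 0.694},
    "Perceived usefulness":     {"alpha": 0.906, "rho_a": 0.910, "rho_c": 0.934, "ave": 0.780},
    "Problem-solving skills":   {"alpha": 0.935, "rho_a": 0.937, "rho_c": 0.944, "ave": 0.608},
    "Self-regulation":          {"alpha": 0.843, "rho_a": 0.843, "rho_c": 0.895, "ave": 0.680},
}

# ASSUMPTION: conventional cutoffs, not taken from the paper.
THRESHOLDS = {"alpha": 0.70, "rho_a": 0.70, "rho_c": 0.70, "ave": 0.50}


def below_threshold(table, thresholds):
    """Return (construct, metric, value) triples that fall below their cutoff."""
    return [
        (construct, metric, value)
        for construct, metrics in table.items()
        for metric, value in metrics.items()
        if value < thresholds[metric]
    ]


if __name__ == "__main__":
    failures = below_threshold(RELIABILITY, THRESHOLDS)
    print("Constructs below cutoff:", failures or "none")
```

Under these assumed cutoffs, every construct in the table clears all four criteria; the lowest values are those of perceived learning value.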

| | Critical Thinking | Perceived Learning Value | Perceived Ease of Use | Perceived Usefulness | Problem-Solving Skills | Self-Regulation |
|---|---|---|---|---|---|---|
| Critical thinking | 0.846 | | | | | |
| Perceived learning value | 0.480 | 0.776 | | | | |
| Perceived ease of use | 0.578 | 0.580 | 0.833 | | | |
| Perceived usefulness | 0.493 | 0.644 | 0.459 | 0.883 | | |
| Problem-solving skills | 0.591 | 0.654 | 0.663 | 0.584 | 0.780 | |
| Self-regulation | 0.650 | 0.525 | 0.644 | 0.432 | 0.631 | 0.825 |
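The matrix above has the layout used for the Fornell–Larcker criterion: each diagonal entry is the square root of a construct's AVE, and discriminant validity is indicated when that entry exceeds every correlation in its row and column. The sketch below checks both properties against the reported numbers. It is a hedged illustration only; the function names are hypothetical, and the AVE values are transcribed from the reliability table.

```python
import math

# Lower triangle of the matrix above; the last entry of each row is the diagonal.
# Row order: critical thinking, perceived learning value, perceived ease of use,
# perceived usefulness, problem-solving skills, self-regulation.
LOWER = [
    [0.846],
    [0.480, 0.776],
    [0.578, 0.580, 0.833],
    [0.493, 0.644, 0.459, 0.883],
    [0.591, 0.654, 0.663, 0.584, 0.780],
    [0.650, 0.525, 0.644, 0.432, 0.631, 0.825],
]

# AVE values from the reliability table, in the same construct order.
AVE = [0.716, 0.603, 0.694, 0.780, 0.608, 0.680]


def fornell_larcker_violations(lower):
    """Return (row, col, r) for correlations that reach either construct's diagonal."""
    violations = []
    for i, row in enumerate(lower):
        for j in range(i):
            r = row[j]
            if r >= lower[i][i] or r >= lower[j][j]:
                violations.append((i, j, r))
    return violations


def max_diagonal_gap(lower, ave):
    """Largest absolute difference between a diagonal entry and sqrt(AVE)."""
    return max(abs(lower[i][i] - math.sqrt(a)) for i, a in enumerate(ave))


if __name__ == "__main__":
    print("Violations:", fornell_larcker_violations(LOWER) or "none")
    print("Max |diagonal - sqrt(AVE)|:", round(max_diagonal_gap(LOWER, AVE), 4))
```

With the reported values, no correlation reaches the diagonal of either construct involved, and the diagonals agree with the square roots of the reported AVEs up to rounding of the published three-decimal figures.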

| | Critical Thinking | Perceived Learning Value | Perceived Ease of Use | Perceived Usefulness | Problem-Solving Skills | Self-Regulation |
|---|---|---|---|---|---|---|
| Critical thinking | | | | | | |
| Perceived learning value | 0.549 | | | | | |
| Perceived ease of use | 0.645 | 0.682 | | | | |
| Perceived usefulness | 0.543 | 0.760 | 0.514 | | | |
| Problem-solving skills | 0.641 | 0.755 | 0.729 | 0.630 | | |
| Self-regulation | 0.746 | 0.623 | 0.745 | 0.490 | 0.707 | |
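The second matrix has an empty diagonal, which is the usual layout for heterotrait–monotrait (HTMT) ratios; that reading is an assumption, since the table carries no caption in this excerpt. A common screening rule flags any ratio at or above 0.85 (a more lenient variant uses 0.90). The sketch below applies that rule to the reported values; the cutoff and the name `htmt_exceeding` are assumptions for illustration, not taken from the paper.

```python
# Pairwise ratios transcribed from the matrix above, keyed by (row, column) construct.
HTMT = {
    ("Perceived learning value", "Critical thinking"): 0.549,
    ("Perceived ease of use", "Critical thinking"): 0.645,
    ("Perceived ease of use", "Perceived learning value"): 0.682,
    ("Perceived usefulness", "Critical thinking"): 0.543,
    ("Perceived usefulness", "Perceived learning value"): 0.760,
    ("Perceived usefulness", "Perceived ease of use"): 0.514,
    ("Problem-solving skills", "Critical thinking"): 0.641,
    ("Problem-solving skills", "Perceived learning value"): 0.755,
    ("Problem-solving skills", "Perceived ease of use"): 0.729,
    ("Problem-solving skills", "Perceived usefulness"): 0.630,
    ("Self-regulation", "Critical thinking"): 0.746,
    ("Self-regulation", "Perceived learning value"): 0.623,
    ("Self-regulation", "Perceived ease of use"): 0.745,
    ("Self-regulation", "Perceived usefulness"): 0.490,
    ("Self-regulation", "Problem-solving skills"): 0.707,
}


def htmt_exceeding(ratios, cutoff=0.85):
    """Return the construct pairs whose ratio is at or above the cutoff.

    ASSUMPTION: 0.85 is a conventional conservative cutoff, not the paper's stated one.
    """
    return {pair: value for pair, value in ratios.items() if value >= cutoff}


if __name__ == "__main__":
    print("Pairs at or above 0.85:", htmt_exceeding(HTMT) or "none")
    print("Largest ratio:", max(HTMT.values()))
```

The largest reported ratio is 0.760 (perceived usefulness with perceived learning value), so no pair is flagged under either cutoff.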

| Hypotheses | Original Sample (O) | Sample Mean (M) | Standard Deviation (STDEV) | T Statistics (\|O/STDEV\|) | p Values | Results |
|---|---|---|---|---|---|---|
| H1a: Perceived usefulness → critical thinking | 0.215 | 0.213 | 0.082 | 2.638 | 0.008 | Support |
| H1b: Perceived learning value → critical thinking | −0.001 | −0.001 | 0.082 | 0.017 | 0.986 | Reject |
| H1c: Perceived ease of use → critical thinking | 0.205 | 0.205 | 0.080 | 2.580 | 0.010 | Support |
| H2a: Perceived usefulness → problem-solving skills | 0.199 | 0.201 | 0.064 | 3.121 | 0.002 | Support |
| H2b: Perceived learning value → problem-solving skills | 0.237 | 0.238 | 0.072 | 3.280 | 0.001 | Support |
| H2c: Perceived ease of use → problem-solving skills | 0.279 | 0.281 | 0.066 | 4.202 | 0.000 | Support |
| H3a: Perceived usefulness → self-regulation → critical thinking | 0.037 | 0.040 | 0.033 | 1.130 | 0.259 | Reject |
| H3b: Perceived learning value → self-regulation → critical thinking | 0.076 | 0.075 | 0.041 | 1.841 | 0.066 | Reject |
| H3c: Perceived ease of use → self-regulation → critical thinking | 0.213 | 0.215 | 0.050 | 4.230 | 0.000 | Support |
| H4a: Perceived usefulness → self-regulation → problem-solving skills | 0.021 | 0.021 | 0.018 | 1.150 | 0.250 | Reject |
| H4b: Perceived learning value → self-regulation → problem-solving skills | 0.043 | 0.043 | 0.027 | 1.610 | 0.107 | Reject |
| H4c: Perceived ease of use → self-regulation → problem-solving skills | 0.120 | 0.120 | 0.036 | 3.375 | 0.001 | Support |
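The T Statistics column is defined in its header as |O/STDEV|, and the p values follow from that statistic. The sketch below recomputes both for any row of the table. It rests on two assumptions that are not stated in this excerpt: p values are two-tailed, and a standard normal approximation is close enough to the reference distribution actually used. Because the table reports O and STDEV to three decimals, a recomputed t can differ slightly from the published one.

```python
import math


def t_statistic(estimate, std_dev):
    """|O / STDEV|, as defined in the table header."""
    return abs(estimate) / std_dev


def two_tailed_p(t):
    """Two-tailed p value under a standard normal approximation (ASSUMPTION)."""
    return math.erfc(t / math.sqrt(2))


def is_supported(p, alpha=0.05):
    """ASSUMPTION: hypotheses are judged at the 5% significance level."""
    return p < alpha


if __name__ == "__main__":
    # H2c row: O = 0.279, STDEV = 0.066, reported t = 4.202.
    print("Recomputed t from rounded inputs:", round(t_statistic(0.279, 0.066), 3))
    # H1a row: reported t = 2.638, reported p = 0.008.
    p = two_tailed_p(2.638)
    print("p for t = 2.638:", round(p, 3), "supported:", is_supported(p))
```

For the H2c row the recomputed statistic is about 4.23 against a reported 4.202, a gap consistent with rounding of the inputs. The Support and Reject labels in the table are consistent with a 0.05 threshold: for example, H3b at p = 0.066 is rejected while H1c at p = 0.010 is supported.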

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, X.; Teng, D.; Al-Samarraie, H. The Mediating Role of Generative AI Self-Regulation on Students’ Critical Thinking and Problem-Solving. Educ. Sci. 2024, 14, 1302. https://doi.org/10.3390/educsci14121302
