Recommender Systems for Teachers: A Systematic Literature Review of Recent (2011–2023) Research

Abstract

1. Introduction

- What is the extent of interest in research on RSs for teachers, as expressed by the volume and other features of recent publications?

- What are the research aims, research questions and approaches adopted for the design and development of RSs?

- In which educational settings or contexts are RSs employed and evaluated?

- What are the methods, algorithms and tools employed for the generation of recommendations?

- What are the RS quality evaluation methods and tools and the evaluation results obtained?

- What is the impact of the use of RSs and their endorsement by researchers and teachers?

2. Research Methodology and Selection Procedure

2.1. Research Methodology

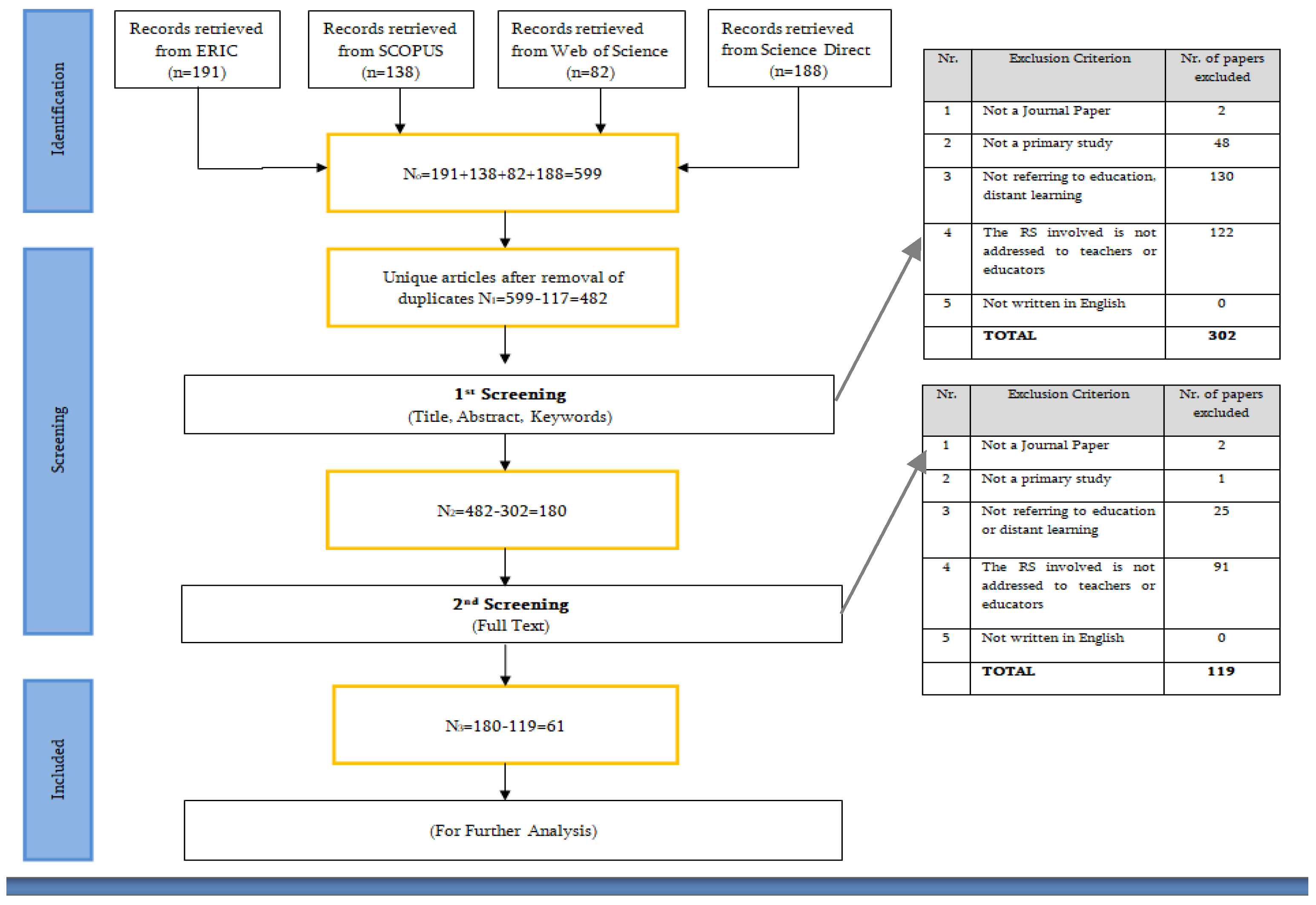

2.2. Selection Procedure

- Not a journal paper (e.g., article in conference proceedings, book, patent, technical report, thesis, etc.);

- Not a primary study (e.g., review or meta-analysis);

- Not referring to e-learning or distance learning;

- The RS involved is not addressed to teachers or educators;

- Not an English-language publication.
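
The exclusion criteria above can be read as a sequence of checks applied to each retrieved article. The following is a minimal sketch of that reading, not the authors' actual tooling: the `Record` fields, the function names, and the rule that an article is assigned to the first criterion it fails are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Record:
    """Hypothetical metadata for one retrieved article (field names are assumed)."""
    title: str
    is_journal_paper: bool
    is_primary_study: bool
    about_elearning: bool
    addressed_to_teachers: bool
    language: str


# The five exclusion criteria, in the order in which they are listed.
EXCLUSION_CRITERIA: List[Tuple[int, str, Callable[[Record], bool]]] = [
    (1, "Not a journal paper", lambda r: not r.is_journal_paper),
    (2, "Not a primary study", lambda r: not r.is_primary_study),
    (3, "Not referring to e-learning or distance learning", lambda r: not r.about_elearning),
    (4, "RS not addressed to teachers or educators", lambda r: not r.addressed_to_teachers),
    (5, "Not an English-language publication", lambda r: r.language != "English"),
]


def first_exclusion(record: Record) -> Optional[int]:
    """Return the number of the first criterion that excludes the record, or None."""
    for number, _label, applies in EXCLUSION_CRITERIA:
        if applies(record):
            return number
    return None


def screen(records: List[Record]) -> List[Record]:
    """Keep only the records that no exclusion criterion applies to."""
    return [r for r in records if first_exclusion(r) is None]
```

Assigning each excluded article to a single criterion keeps per-criterion counts additive, which is what a per-criterion tally of excluded articles requires.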

3. Analysis and Results

3.1. RQ1: What Is the Extent of Interest in Research on RSs for Teachers, as Expressed by the Volume and Other Features of Recent Publications?

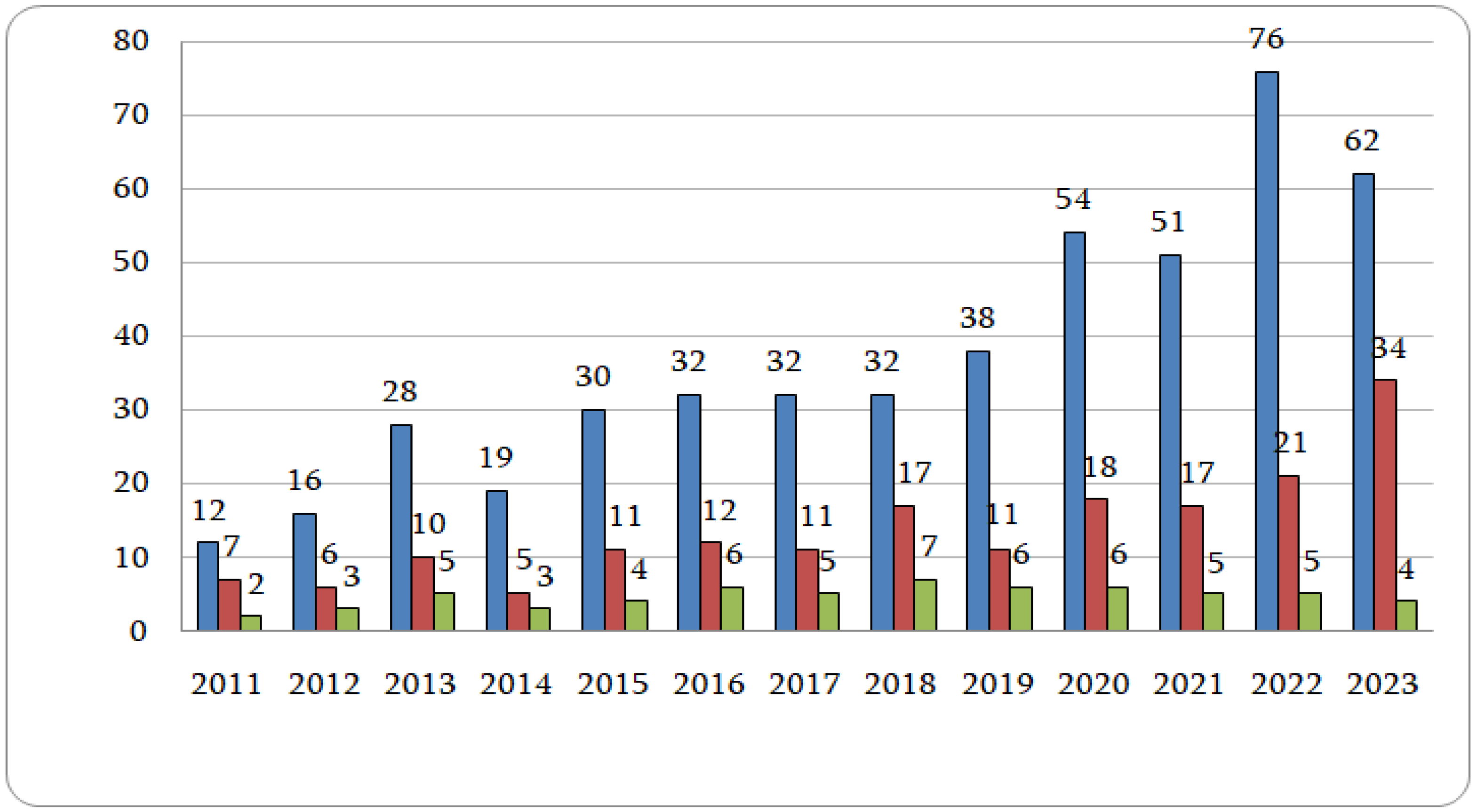

3.1.1. Evolution of the Number of Publications on RSs for Teachers over Time

3.1.2. Number of Authors per Publication

3.1.3. Journals That Host Relevant Publications

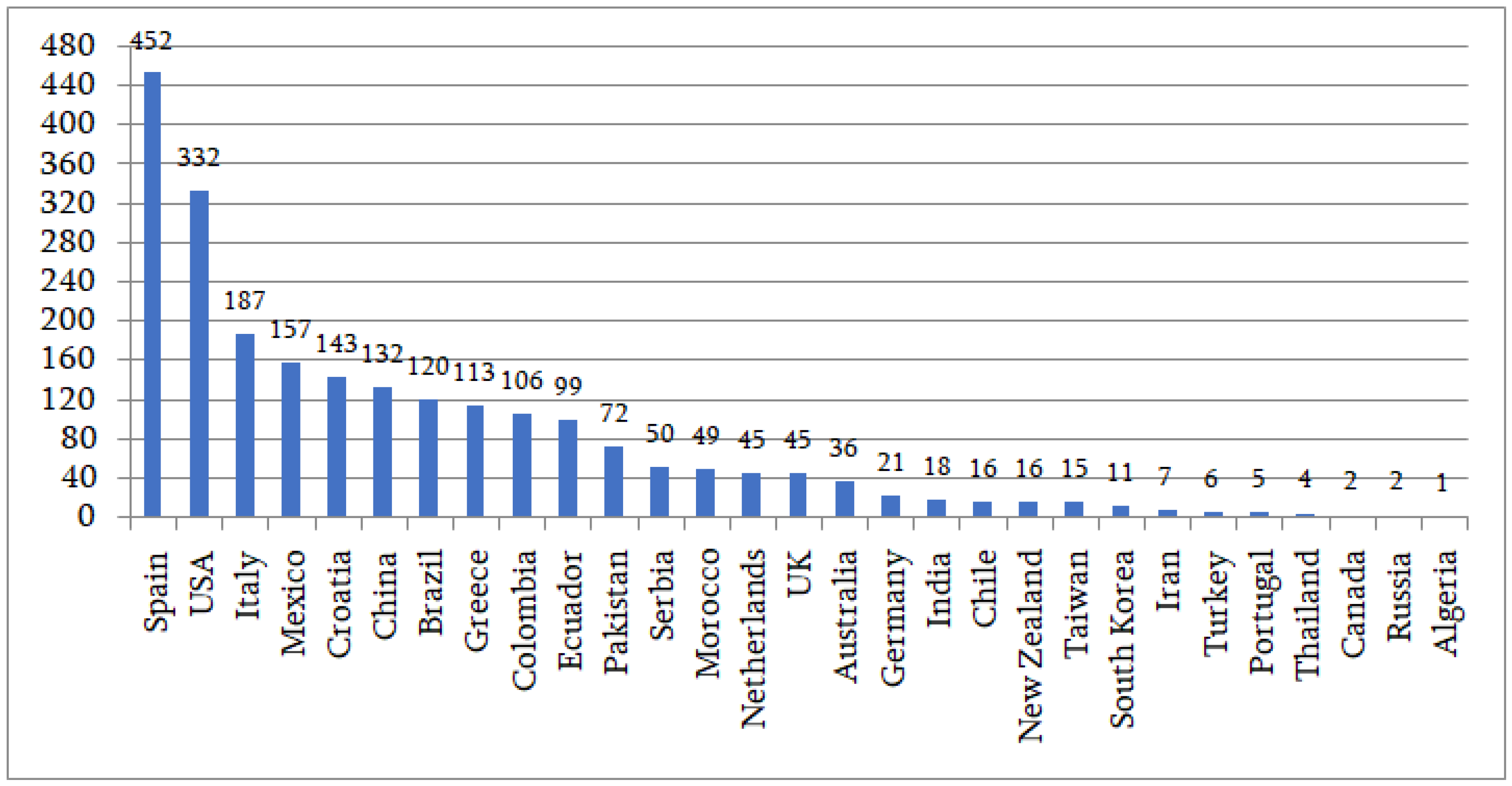

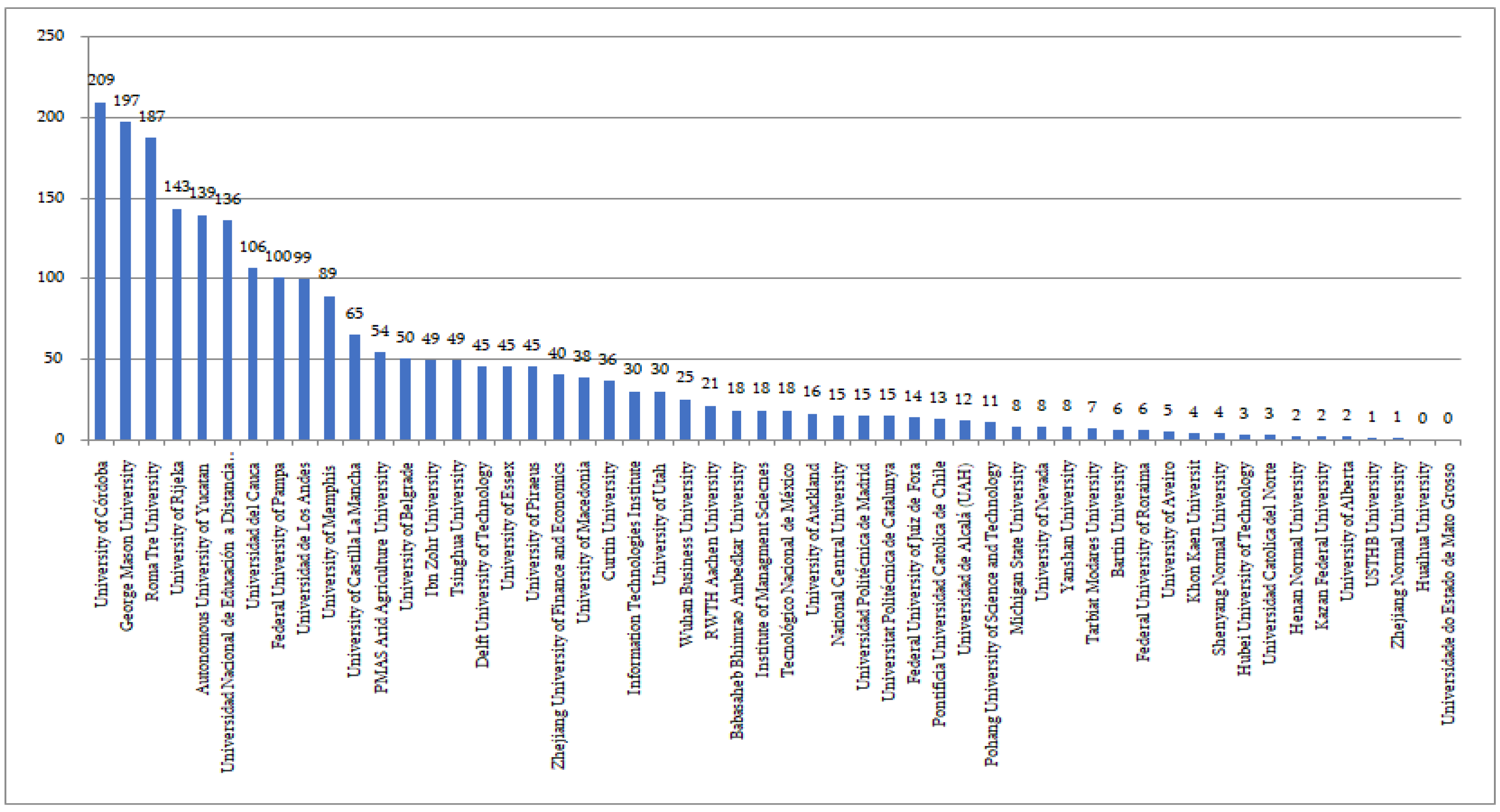

3.1.4. Geographic Distribution of Research on RSs for Teachers

3.2. RQ2: What Are the Aims of the Recommendations and the Aims of Research on RSs, as Expressed by the Respective Research Questions?

3.2.1. The Identification of the Major Aims of the Generated Recommendations

3.2.2. Aims of Research on RSs, as Expressed through the Respective Research Questions

3.3. RQ3: In Which Educational Settings or Contexts Are RSs Employed and Evaluated?

3.4. RQ4: What Are the Filtering Methods, Algorithms and Tools Employed for the Generation of Recommendations?

3.4.1. Filtering Methods Adopted in the Design and Development of the RS

3.4.2. Algorithms and Tools Employed for the Generation of the Recommendations

3.5. RQ5: What Are the RS Quality Evaluation Methods and Tools and the Evaluation Results Obtained?

3.5.1. Research Methodology (Experimental Plan) Used for Evaluation of the Proposed RS

3.5.2. The Characteristics of the Sample Used for Evaluation of the Proposed RS

3.5.3. RS Evaluation Results Reported in the Reviewed Publications

3.6. RQ6: What Is the Impact of the Use of RSs and Their Endorsement by Researchers and Teachers?

4. Discussion

5. Conclusions–Further Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Uta, M.; Felfernig, A.; Le, V.-M.; Tran, T.N.T.; Garber, D.; Lubos, S.; Burgstaller, T. Knowledge-based recommender systems: Overview and research directions. Front. Big Data 2024, 7, 1304439. [Google Scholar] [CrossRef] [PubMed]

- Aggarwal, C.C. Recommender Systems: The Textbook, 1st ed.; Springer: Cham, Switzerland, 2016. [Google Scholar]

- Souabi, S.; Retbi, A.; Khalidi Idrissi, M.; Bennani, S. Recommendation Systems on E-Learning and Social Learning: A Systematic Review. Electron. J. e-Learn. 2021, 19, 5. [Google Scholar] [CrossRef]

- Ricci, F.; Rokach, L.; Shapira, B. Recommender Systems Handbook, 2nd ed.; Springer: New York, NY, USA, 2015. [Google Scholar]

- Schwartz, B. The Paradox of Choice. Why More Is Less, 1st ed.; HarperCollins: New York, NY, USA, 2004. [Google Scholar]

- Manouselis, N.; Drachsler, H.; Verbert, K.; Duval, E. Recommender Systems for Learning, 1st ed.; Springer: New York, NY, USA, 2013. [Google Scholar]

- Khanal, S.S.; Prasad, P.W.C.; Alsadoon, A.; Maag, A. A Systematic Review: Machine Learning Based Recommendation Systems for E-Learning. Educ. Inf. Technol. 2020, 25, 2635–2664. [Google Scholar] [CrossRef]

- Yanes, N.; Mostafa, A.M.; Ezz, M.; Almuayqil, S.N. A machine learning-based recommender system for improving students learning experiences. IEEE Access 2020, 8, 201218–201235. [Google Scholar] [CrossRef]

- Bodily, R.; Verbert, K. Review of Research on Student-Facing Learning Analytics Dashboards and Educational Recommender Systems. IEEE Trans. Learn. Technol. 2017, 10, 405–418. [Google Scholar] [CrossRef]

- Deschênes, M. Recommender systems to support learners’ Agency in a Learning Context: A systematic review. Int. J. Educ. Technol. High. Educ. 2020, 17, 50. [Google Scholar] [CrossRef]

- Sandoussi, R.; Hnida, M.; Daoudi, N.; Ajhoun, R. Systematic Literature Review on Open Educational Resources Recommender Systems. Int. J. Interact. Mob. Technol. (iJIM) 2022, 16, 44–77. [Google Scholar] [CrossRef]

- Tarus, J.; Niu, Z.; Mustafa, G. Knowledge-based recommendation: A review of ontology-based recommender systems for e-learning. Artif. Intell. Rev. 2018, 50, 21–48. [Google Scholar] [CrossRef]

- George, G.; Lal, A.M. Review of ontology-based recommender systems in e-learning. Comput. Educ. 2019, 142, 103642. [Google Scholar] [CrossRef]

- Rahayu, N.W.; Ferdiana, R.; Kusumawardani, S.S. A systematic review of ontology use in E-learning recommender system. Comput. Educ. Artif. Intell. 2022, 3, 100047. [Google Scholar] [CrossRef]

- Khalid, A.; Lundqvist, K.; Yates, A. Recommender Systems for MOOCs: A Systematic Literature Survey (January 1, 2012–July 12, 2019). Int. Rev. Res. Open Distrib. Learn. 2020, 21, 255–291. [Google Scholar] [CrossRef]

- Gonzalez Camacho, L.A.; Alves-Souza, S.N. Social network data to alleviate cold-start in recommender system: A systematic review. Inf. Process. Manag. 2018, 54, 529–544. [Google Scholar] [CrossRef]

- Alhijawi, B.; Kilani, Y. The recommender system: A survey. Int. J. Adv. Intell. Paradig. 2020, 15, 229–251. [Google Scholar] [CrossRef]

- Zhang, S.; Yao, L.; Sun, A.; Tay, Y. Deep Learning Based Recommender System: A Survey and New Perspectives. ACM Comput. Surv. 2019, 52, 1–38. [Google Scholar] [CrossRef]

- Da’u, A.; Salim, N. Recommendation system based on deep learning methods: A systematic review and new directions. Artif. Intell. Rev. 2020, 53, 2709–2748. [Google Scholar] [CrossRef]

- Liu, T.; Wu, Q.; Chang, L.; Gu, T. A review of deep learning-based recommender system in e-learning environments. Artif. Intell. Rev. 2022, 55, 5953–5980. [Google Scholar] [CrossRef]

- Erdt, M.; Fernández, A.; Rensing, C. Evaluating Recommender Systems for Technology Enhanced Learning: A Quantitative Survey. IEEE Trans. Learn. Technol. 2015, 8, 326–344. [Google Scholar] [CrossRef]

- Pai, M.; McCulloch, M.; Gorman, J.D.; Pai, N.; Enanoria, W.; Kennedy, G.; Tharyan, P.; Colford, J. Systematic reviews and meta-analyses: An illustrated, step-by-step guide. Natl. Med. J. India 2004, 17, 86–95. [Google Scholar] [PubMed]

- Kitchenham, B.A. Procedures for Undertaking Systematic Reviews (Report No. TR-SE 0401); Report No. 0400011T.1; Computer Science Department, Keele University: Keele, UK; National ICT: Eversleigh, Australia, 2004. [Google Scholar]

- Kitchenham, B.A.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Report No. EB-SE 2007-001; School of Computer Science and Mathematics, Keele University: Keele, UK; University of Durham: Durham, UK, 2007. [Google Scholar]

- Kitchenham, B.A.; Brereton, P. A systematic review of systematic review process research in software engineering. Inf. Softw. Technol. 2013, 55, 2049–2075. [Google Scholar] [CrossRef]

- Rethlefsen, M.L.; Kirtley, S.; Waffenschmidt, S.; Ayala, A.P.; Moher, D.; Page, M.J.; Koffel, J.B. PRISMA-S: An extension to the PRISMA statement for reporting literature searches in systematic reviews. J. Med. Libr. Assoc. 2021, 109, 174–200. [Google Scholar] [CrossRef]

- Dhahri, M.; Khribi, M.K. A Review of Educational Recommender Systems for Teachers. In Proceedings of the 18th International Conference on Cognition and Exploratory Learning in Digital Age (CELDA 2021), Virtual, 13–15 October 2021. [Google Scholar]

- Liu, C.; Zhang, H.; Zhang, J.; Zhang, Z.; Yuan, P. Design of a Learning Path Recommendation System Based on a Knowledge Graph. Int. J. Inf. Commun. Technol. Educ. (IJICTE) 2023, 19, 1–18. [Google Scholar] [CrossRef]

- Tahir, S.; Hafeez, Y.; Abbas, M.; Nawaz, A.; Hamid, B. Smart Learning Objects Retrieval for E-Learning with Contextual Recommendation based on Collaborative Filtering. Educ. Inf. Technol. 2022, 27, 8631–8668. [Google Scholar] [CrossRef]

- Yao, D.; Deng, X.; Qing, X. A Course Teacher Recommendation Method Based on an Improved Weighted Bipartite Graph and Slope One. IEEE Access 2022, 10, 129763–129780. [Google Scholar] [CrossRef]

- Liu, H.; Sun, Z.; Qu, X.; Yuan, F. Top-aware recommender distillation with deep reinforcement Learning. Inf. Sci. 2021, 576, 642–657. [Google Scholar] [CrossRef]

- Ma, Z.H.; Hwang, W.Y.; Shih, T.K. Effects of a peer tutor recommender system (PTRS) with machine learning and automated assessment on vocational high school students’ computer application operating skills. J. Comput. Educ. 2020, 7, 435–462. [Google Scholar] [CrossRef]

- Gordillo, A.; López-Fernández, D.; Verbert, K. Examining the Usefulness of Quality Scores for Generating Learning Object Recommendations in Repositories of Open Educational Resources. Appl. Sci. 2020, 10, 4638. [Google Scholar] [CrossRef]

- Poitras, E.; Mayne, Z.; Huang, L.; Udy, L.; Lajoie, S. Scaffolding Student Teachers’ Information-Seeking Behaviours with a Network-Based Tutoring System. J. Comput. Assist. Learn. 2019, 35, 731–746. [Google Scholar] [CrossRef]

- Mimis, M.; El Hajji, M.; Es-saady, Y.; Guejdi, A.O.; Douzi, H.; Mammass, D. A framework for smart academic guidance using educational data mining. Educ. Inf. Technol. 2019, 24, 1379–1393. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, Y.; Xu, H.; Qin, Z.; Zha, H. Adversarial distillation for efficient recommendation with external knowledge. ACM Trans. Inf. Syst. 2019, 37, 1–28. [Google Scholar] [CrossRef]

- Fazeli, S.; Drachsler, H.; Bitter-Rijpkema, M.; Brouns, F.; Van der Vegt, W.; Sloep, P.B. User-Centric Evaluation of Recommender Systems in Social Learning Platforms: Accuracy is Just the Tip of the Iceberg. IEEE Trans. Learn. Technol. 2018, 11, 294–306. [Google Scholar] [CrossRef]

- Wongthongtham, P.; Chan, K.Y.; Potdar, V.; Abu-Salih, B.; Gaikwad, S.; Jain, P. State-of-the-Art Ontology Annotation for Personalised Teaching and Learning and Prospects for Smart Learning Recommender Based on Multiple Intelligence and Fuzzy Ontology. Int. J. Fuzzy Syst. 2018, 20, 1357–1372. [Google Scholar] [CrossRef]

- Almohammadi, K.; Hagras, H.; Alzahrani, A.; Alghazzawi, D.; Aldabbagh, G. A type-2 fuzzy logic recommendation system for adaptive teaching. Soft Comput. 2017, 21, 965–979. [Google Scholar] [CrossRef]

- Knez, T.; Dlab, M.H.; Hoic-Bozic, N. Implementation of group formation algorithms in the ELARS recommender system. Int. J. Emerg. Technol. Learn. (iJET) 2017, 12, 198–207. [Google Scholar] [CrossRef] [Green Version]

- Hoic-Bozic, N.; Holenko Dlab, M.; Mornar, V. Recommender System and Web 2.0 Tools to Enhance a Blended Learning Model. IEEE Trans. Educ. 2016, 59, 39–44. [Google Scholar] [CrossRef]

- Khadiev, K.; Miftakhutdinov, Z.; Sidikov, M. Collaborative filtering approach in adaptive learning. Int. J. Pharm. Technol. 2016, 8, 15124–15132. [Google Scholar]

- Zervas, P.; Sergis, S.; Sampson, D.G.; Fyskilis, S. Towards Competence-Based Learning Design Driven Remote and Virtual Labs Recommendations for Science Teachers. Technol. Knowl. Learn. 2015, 20, 185–199. [Google Scholar] [CrossRef]

- Zapata, A.; Menéndez, V.H.; Prieto, M.E.; Romero, C. Evaluation and selection of group recommendation strategies for collaborative searching of learning objects. Int. J. Hum.-Comput. Stud. 2015, 76, 22–39. [Google Scholar] [CrossRef]

- Pedro, L.; Santos, C.; Almeida, S.F.; Ramos, F.; Moreira, A.; Almeida, M.; Antunes, M.J. The SAPO Campus Recommender System: A Study about Students’ and Teachers’ Opinions. Res. Learn. Technol. 2014, 22, 22921. [Google Scholar] [CrossRef]

- Thaiklang, S.; Arch-Int, N.; Arch-Int, S. Learning resources recommendation framework using rule-based reasoning approach. J. Theor. Appl. Inf. Technol. 2014, 69, 68–76. [Google Scholar]

- Cobos, C.; Rodriguez, O.; Rivera, J.; Betancourt, J.; Mendoza, M.; Leon, E.; Herrera-Viedma, E. A hybrid system of pedagogical pattern recommendations based on singular value decomposition and variable data attributes. Inf. Process. Manag. 2013, 49, 607–625. [Google Scholar] [CrossRef]

- García, E.; Romero, C.; Ventura, S.; De Castro, C. A collaborative educational association rule mining tool. Internet High. Educ. 2011, 14, 77–88. [Google Scholar] [CrossRef]

- Andrade, T.; Almeira, C.; Barbosa, J.; Rigo, S. Recommendation System model integrated with Active Methodologies, EDM, and Learning Analytics for dropout mitigation in Distance Education. Rev. Latinoam. Tecnol. Educ.-RELATEC 2023, 22, 185–205. [Google Scholar]

- Pereira, F.D.; Rodrigues, L.; Henklain, M.H.O.; Freitas, H.; Oliveira, D.F.; Cristea, A.I.; Carvalho, L.; Isotani, S.; Benedict, A.; Dorodchi, M.; et al. Toward Human–AI Collaboration: A Recommender System to Support CS1 Instructors to Select Problems for Assignments and Exams. IEEE Trans. Learn. Technol. 2023, 16, 457–472. [Google Scholar] [CrossRef]

- Kang, S.; Hwang, J.; Kweon, W.; Yu, H. Item-side ranking regularized distillation for recommender system. Inf. Sci. 2021, 580, 15–34. [Google Scholar] [CrossRef]

- Ali, S.; Hafeez, Y.; Abbas, M.A.; Aqib, M.; Nawaz, A. Enabling remote learning system for virtual personalized preferences during COVID-19 pandemic. Multimed. Tools Appl. 2021, 80, 33329–33355. [Google Scholar] [CrossRef] [PubMed]

- Gao, J.; Yue, X.G.; Hao, L.; Crabbe, M.J.C.; Manta, O.; Duarte, N. Optimization Analysis and Implementation of Online Wisdom Teaching Mode in Cloud Classroom Based on Data Mining and Processing. Int. J. Emerg. Technol. Learn. (iJET) 2021, 16, 205–218. [Google Scholar] [CrossRef]

- Bulut, O.; Cormier, D.C.; Shin, J. An Intelligent Recommender System for Personalized Test Administration Scheduling with Computerized Formative Assessments. Front. Educ. 2020, 5, 572612. [Google Scholar] [CrossRef]

- De Medio, C.; Limongelli, C.; Sciarrone, F.; Temperini, M. MoodleREC: A recommendation system for creating courses using the Moodle e-learning Platform. Comput. Hum. Behav. 2020, 104, 106168. [Google Scholar] [CrossRef]

- Li, J.; Ye, Z. Course Recommendations in Online Education Based on Collaborative Filtering Recommendation Algorithm. Complexity 2020, 2020, 6619249. [Google Scholar] [CrossRef]

- Graesser, A.C.; Hu, X.; Nye, B.D.; VanLehn, K.; Kumar, R.; Heffernan, C.; Heffernan, N.; Woolf, B.; Olney, A.M.; Rus, V.; et al. ElectronixTutor: An Intelligent Tutoring System with Multiple Learning Resources for Electronics. Int. J. STEM Educ. 2018, 5, 15. [Google Scholar] [CrossRef]

- Karga, S.; Satratzemi, M. A Hybrid Recommender System Integrated into LAMS for Learning Designers. Educ. Inf. Technol. 2018, 23, 1297–1329. [Google Scholar] [CrossRef]

- Afridi, A.H. Stakeholders Analysis for Serendipitous Recommenders system in Learning Environments. Procedia Comput. Sci. 2018, 130, 222–230. [Google Scholar] [CrossRef]

- Revilla Muñoz, O.; Alpiste Penalba, F.; Fernández Sánchez, J. The Skills, Competences, and Attitude toward Information and Communications Technology Recommender System: An online support program for teachers with personalized recommendations. New Rev. Hypermedia Multimed. 2016, 22, 83–110. [Google Scholar] [CrossRef]

- Bozo, J.; Alarcon, R.; Peralta, M.; Mery, T.; Cabezas, V. Metadata for recommending primary and secondary level learning resources. JUCS—J. Univers. Comput. Sci. 2016, 22, 197–227. [Google Scholar]

- Sergis, S.; Sampson, D.G. Learning Object Recommendations for Teachers Based on Elicited ICT Competence Profiles. IEEE Trans. Learn. Technol. 2016, 9, 67–80. [Google Scholar] [CrossRef]

- Zapata, A.; Menéndez, V.H. A framework for recommendation in learning object repositories: An example of application in civil engineering. Adv. Eng. Softw. 2013, 56, 1–14. [Google Scholar] [CrossRef]

- Berkani, L.; Chikh, A.; Nouali, O. Using hybrid semantic information filtering approach in communities of practice of E-learning. J. Web Eng. 2013, 12, 383–402. [Google Scholar]

- Cechinel, C.; Sicilia, M.Á.; Sánchez-Alonso, S.; García-Barriocanal, E. Evaluating collaborative filtering recommendations inside large learning object repositories. Inf. Process. Manag. 2013, 49, 34–50. [Google Scholar] [CrossRef]

- Peiris, K.D.A.; Gallupe, R.B. A Conceptual Framework for Evolving, Recommender Online Learning Systems. Decis. Sci. J. Innov. Educ. 2012, 10, 389–412. [Google Scholar] [CrossRef]

- Zhu, Z.; Sun, Y. Personalized information push system for education management based on big data mode and collaborative filtering algorithm. Soft Comput. 2023, 27, 10057–10067. [Google Scholar] [CrossRef]

- Tong, W.; Wang, Y.; Su, Q.; Hu, Z. Digital twin campus with a novel double-layer collaborative filtering recommendation algorithm framework. Educ. Inf. Technol. 2022, 27, 11901–11917. [Google Scholar] [CrossRef]

- Dias, L.L.; Barrere, E.; De Souza, J.F. The impact of semantic annotation techniques on content-based video lecture recommendation. J. Inf. Sci. 2021, 47, 740–752. [Google Scholar] [CrossRef]

- Rawat, B.; Dwivedi, S.K. Discovering Learners’ Characteristics through Cluster Analysis for Recommendation of Courses in E-Learning Environment. Int. J. Inf. Commun. Technol. Educ. (IJICTE) 2019, 15, 42–66. [Google Scholar] [CrossRef]

- Karga, S.; Satratzemi, M. Using Explanations for Recommender Systems in Learning Design Settings to Enhance Teachers’ Acceptance and Perceived Experience. Educ. Inf. Technol. 2019, 24, 2953–2974. [Google Scholar] [CrossRef]

- Peralta, M.; Alarcon, R.; Pichara, K.; Mery, T.; Cano, F.; Bozo, J. Understanding learning resources metadata for primary and secondary education. IEEE Trans. Learn. Technol. 2018, 11, 456–467. [Google Scholar] [CrossRef]

- Liu, L.; Liang, Y.; Li, W. Dynamic assessment and prediction in online learning: Exploring the methods of collaborative filtering in a task recommender system. Int. J. Technol. Teach. Learn. 2017, 13, 103–117. [Google Scholar]

- Dabbagh, N.; Fake, H. Tech Select Decision Aide: A Mobile Application to Facilitate Just-in-Time Decision Support for Instructional Designers. TechTrends 2017, 61, 393–403. [Google Scholar] [CrossRef]

- Aguilar, J.; Valdiviezo-Diaz, P.; Riofrio, G. A general framework for intelligent recommender systems. Appl. Comput. Inform. 2017, 13, 147–160. [Google Scholar] [CrossRef]

- Sweeney, M.; Rangwala, H.; Lester, J.; Johri, A. Next-Term Student Performance Prediction: A Recommender Systems Approach. J. Educ. Data Min. 2016, 8, 22–50. [Google Scholar]

- Niemann, K.; Wolpers, M. Creating Usage Context-based Object Similarities to Boost Recommender Systems in Technology Enhanced Learning. IEEE Trans. Learn. Technol. 2015, 8, 274–285. [Google Scholar] [CrossRef]

- Santos, O.C.; Boticario, J.G. User-centred design and educational data mining support during the recommendations elicitation process in social online learning environments. Expert Syst. 2015, 32, 293–311. [Google Scholar] [CrossRef]

- Kortemeyer, G.; Droschler, S.; Pritchard, D.E. Harvesting latent and usage-based metadata in a course management system to enrich the underlying educational digital library: A case study. Int. J. Digit. Libr. 2014, 14, 1–15. [Google Scholar] [CrossRef] [Green Version]

- Anaya, A.R.; Luque, M.; García-Saiz, T. Recommender system in collaborative learning environment using an influence diagram. Expert Syst. Appl. 2013, 40, 7193–7202. [Google Scholar] [CrossRef]

- Sevarac, Z.; Devedzic, V.; Jovanovic, J. Adaptive neuro-fuzzy pedagogical recommender. Expert Syst. Appl. 2012, 39, 9797–9806. [Google Scholar] [CrossRef]

- Ferreira-Satler, M.; Romero, F.P.; Menendez-Dominguez, V.H.; Zapata, A.; Prieto, E. Fuzzy ontologies-based user profiles applied to enhance e-learning activities. Soft Comput. 2012, 16, 1129–1141. [Google Scholar] [CrossRef]

- Alinaghi, T.; Bahreininejad, A. A multi-agent question-answering system for E-learning and collaborative learning environment. Int. J. Distance Educ. Technol. (IJDET) 2011, 9, 23–39. [Google Scholar] [CrossRef] [Green Version]

- Caglar-Ozhan, S.; Altun, A.; Ekmekcioglu, E. Emotional patterns in a simulated virtual classroom supported with an affective recommendation system. Br. J. Educ. Technol. 2022, 53, 1724–1749. [Google Scholar] [CrossRef]

- López, M.B.; Alor-Hernández, G.; Sánchez-Cervantes, J.L.; Paredes-Valverde, M.A.; Salas-Zárate, M.D.P. EduRecomSys: An Educational Resource Recommender System Based on Collaborative Filtering and Emotion Detection. Interact. Comput. 2020, 32, 407–432. [Google Scholar] [CrossRef]

- Poitras, E.G.; Fazeli, N.; Mayne, Z.R. Modeling Student Teachers’ Information-Seeking Behaviors While Learning with Network-Based Tutors. J. Educ. Technol. Syst. 2018, 47, 227–247. [Google Scholar] [CrossRef]

- Clemente, J.; Yago, H.; Pedro-Carracedo, J.; Bueno, J. A proposal for an adaptive Recommender System based on competences and ontologies. Expert Syst. Appl. 2022, 208, 118171. [Google Scholar] [CrossRef]

- Song, B.; Li, X. The Research of Intelligent Virtual Learning Community. Int. J. Mach. Learn. Comput. 2019, 9, 621–628. [Google Scholar] [CrossRef]

- Schafer, J.B.; Frankowski, D.; Herlocker, J.; Sen, S. Collaborative Filtering Recommender Systems. In The Adaptive Web. Lecture Notes in Computer Science, 1st ed.; Brusilovsky, P., Kobsa, A., Nejdl, W., Eds.; Springer: Heidelberg, Germany, 2007; Volume 4321, pp. 291–324. [Google Scholar]

- Adomavicius, G.; Tuzhilin, A. Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions. IEEE Trans. Knowl. Data Eng. 2005, 17, 734–749. [Google Scholar] [CrossRef]

- Pérez-Almaguer, Y.; Yera, R.; Alzahrani, A.A.; Martínez, L. Content-based group recommender systems: A general taxonomy and further improvements. Expert Syst. Appl. 2021, 184, 115444. [Google Scholar] [CrossRef]

- da Silva, F.L.; Slodkowski, B.K.; da Silva, K.K.A.; Cazella, S.C. A systematic literature review on educational recommender systems for teaching and learning: Research trends, limitations and opportunities. Educ. Inf. Technol. 2023, 28, 3289–3328. [Google Scholar] [CrossRef]

- Urdaneta-Ponte, M.C.; Mendez-Zorrilla, A.; Oleagordia-Ruiz, I. Recommendation Systems for Education: Systematic Review. Electronics 2021, 10, 1611. [Google Scholar] [CrossRef]

| Database | Keywords | Articles (Retrieved) | Duplicates (Excluded) | Articles (Remaining) |
|---|---|---|---|---|
| ERIC | Recommendation System(s) OR Recommender System(s) | 191 | 12 | 179 |
| Scopus | (Recommendation System(s) OR Recommender System(s)) AND (Teacher OR Educator) | 138 | 9 | 129 |
| Web of Science | (Recommendation System(s) OR Recommender System(s)) AND (Teacher OR Educator) | 82 | 74 | 8 |
| Science Direct | (Recommendation System(s) OR Recommender System(s)) AND (Teacher OR Educator) | 188 | 22 | 166 |
| Total | | 599 | 117 | 482 |

| Nr | Exclusion Criterion | 1st Screening {Title, Abstract, Keywords}: ERIC | 1st Screening: Scopus | 1st Screening: Web of Science | 1st Screening: Science Direct | 2nd Screening {Full Text}: ERIC | 2nd Screening: Scopus | 2nd Screening: Web of Science | 2nd Screening: Science Direct |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Not a journal paper (e.g., article in conference proceedings, book, etc.) | 0 | 0 | 2 | 0 | 0 | 0 | 2 | 0 |
| 2 | Not a primary study (e.g., review or meta-analysis) | 21 | 5 | 0 | 22 | 0 | 1 | 0 | 0 |
| 3 | Not referring to e-learning or distance learning | 19 | 29 | 0 | 82 | 4 | 10 | 1 | 10 |
| 4 | The RS involved is not addressed to teachers or educators | 81 | 11 | 0 | 30 | 33 | 42 | 1 | 15 |
| 5 | Not an English-language publication | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | Total Excluded | 121 | 45 | 2 | 134 | 37 | 53 | 4 | 25 |
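
The counts in the search and screening tables can be chained to trace how the retrieved articles reduce to the set finally reviewed. The sketch below only re-does that arithmetic with the numbers reported in the two tables; the variable names are illustrative, and the intermediate figure after the first screening is derived here rather than quoted from the text.

```python
# Counts copied from the search table and the screening table of this review.
retrieved = {"ERIC": 191, "Scopus": 138, "Web of Science": 82, "Science Direct": 188}
duplicates = {"ERIC": 12, "Scopus": 9, "Web of Science": 74, "Science Direct": 22}
excluded_first = {"ERIC": 121, "Scopus": 45, "Web of Science": 2, "Science Direct": 134}
excluded_second = {"ERIC": 37, "Scopus": 53, "Web of Science": 4, "Science Direct": 25}


def total(counts: dict) -> int:
    """Sum a per-database count over all four databases."""
    return sum(counts.values())


# Step 1: remove duplicates from the retrieved articles.
after_deduplication = total(retrieved) - total(duplicates)
# Step 2: first screening on title, abstract and keywords.
after_first_screening = after_deduplication - total(excluded_first)
# Step 3: second screening on the full text.
after_second_screening = after_first_screening - total(excluded_second)

print(after_deduplication, after_first_screening, after_second_screening)
```

The final figure agrees with the total of 61 publications used throughout the result tables.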

| Number of Authors | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| >5 | 10 | 16.39 |
| 5 | 8 | 13.12 |
| 4 | 12 | 19.67 |
| 3 | 17 | 27.87 |
| 2 | 13 | 21.31 |
| 1 | 1 | 1.64 |
| Total | 61 | 100.00 |

| Number of Publications Hosted | Number of Journals | Journal Titles (in Alphabetic Order within Each Cell) |
|---|---|---|
| 5 | 2 | Education and Information Technologies; IEEE Transactions on Learning Technologies |
| 3 | 2 | Expert Systems with Applications; Soft Computing |
| 2 | 4 | International Journal of Emerging Technologies in Learning; Information Sciences; International Journal of Information and Communication Technology Education; Information Processing & Management |
| 1 | 37 | Journal of Computers in Education; Computers in Human Behavior; IEEE Access; Advances in Engineering Software; Applied Computing and Informatics; Applied Sciences; British Journal of Educational Technology; Complexity; Decision Sciences Journal of Innovative Education; Expert Systems; Frontiers in Education; IEEE Transactions on Education; Interacting with Computers; International Journal of Fuzzy Systems; International Journal of Human-Computer Studies; International Journal of Machine Learning and Computing; International Journal of Pharmacy and Technology; International Journal of STEM Education; International Journal of Technology in Teaching and Learning; International Journal on Digital Libraries; Journal of Computer Assisted Learning; Journal of Educational Data Mining; Journal of Educational Technology Systems; Journal of Information Science; Journal of Theoretical and Applied Information Technology; JUCS—Journal of Universal Computer Science; Multimedia Tools and Applications; New Review of Hypermedia and Multimedia; Research in Learning Technology; Technology, Knowledge and Learning; TechTrends; The Internet and Higher Education; ACM Transactions on Information Systems; International Journal of Distance Education Technologies; Revista Latinoamericana de Tecnologia Educativa (RELATEC); Journal of Web Engineering; Institute of Management Sciences. |
| Total | 45 | 100% |

| Continent | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| Asia | 20 | 32.79 |
| Europe | 19 | 31.14 |
| The Americas | 18 | 29.51 |
| Oceania | 2 | 3.28 |
| Africa | 2 | 3.28 |
| Total | 61 | 100.00 |

| Recommendation Aims | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| Improve Teaching Practices | 20 | 32.79 |
| Personalized Recommendations for Users (including Teachers) | 15 | 24.59 |
| Personalized Search/Recommendation for Learning Objects (LOs) | 14 | 22.95 |
| Personalized Recommendations for Teachers | 10 | 16.39 |
| Personalized Recommendations for Social Navigation | 2 | 3.28 |
| Total | 61 | 100.00 |

| Research Aims, as Expressed in the Research Questions Posed | Nr. of Publications (abs. nr.) | Nr. of Publications (%) | References to Reviewed Publications |
|---|---|---|---|
| 1. Improvement of RS efficiency/quality/accuracy | 21 | 34.42 | [28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48] |
| 2. Personalization in the RS | 18 | 29.51 | [49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66] |
| 3. Technology-specific RS | 17 | 27.87 | [67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83] |
| 4. Affective/emotional aspects in RS | 3 | 4.92 | [84,85,86] |
| 5. RS based on teachers’ ICT profiles/competences/skills/attitudes | 2 | 3.28 | [87,88] |
| Total | 61 | 100.00 | |

(a)

| Educational Setting or Context | Number of Publications (Absolute Number) | Number of Publications (Percentage over 61) |
|---|---|---|
| Educational Environments | 37 | 60.65 |
| Decision-Support Systems or Frameworks | 19 | 31.14 |
| Educational Tool Collections | 15 | 24.59 |
| Repositories | 10 | 16.39 |

(b)

| Educational Setting or Context | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| Educational Environments | 29 | 47.54 |
| Decision-Support Systems or Frameworks | 9 | 14.75 |
| Educational Tool Collections | 3 | 4.92 |
| Repositories | 0 | 0.00 |
| Educational Environments and Decision-Support Systems or Frameworks | 2 | 3.28 |
| Educational Environments and Educational Tool Collections | 6 | 9.84 |
| Educational Environments and Repositories | 0 | 0.00 |
| Decision-Support Systems or Frameworks and Educational Tool Collections | 2 | 3.28 |
| Decision-Support Systems or Frameworks and Repositories | 6 | 9.84 |
| Educational Tool Collections and Repositories | 4 | 6.55 |
| Total | 61 | 100.00 |
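
Parts (a) and (b) of the table above appear to describe the same 61 publications in two ways: (b) places each publication in exactly one row (a single setting or a pair of settings), while (a) counts a publication once for every setting it involves. Under that reading, which is an assumption drawn from the numbers rather than from an explicit statement, the counts in (a) can be recovered from (b), as this short sketch shows (the variable names are illustrative).

```python
EE = "Educational Environments"
DSS = "Decision-Support Systems or Frameworks"
ETC = "Educational Tool Collections"
REP = "Repositories"

# Part (b): every publication appears in exactly one row.
exclusive_counts = {
    (EE,): 29, (DSS,): 9, (ETC,): 3, (REP,): 0,
    (EE, DSS): 2, (EE, ETC): 6, (EE, REP): 0,
    (DSS, ETC): 2, (DSS, REP): 6, (ETC, REP): 4,
}

# Part (a): a publication counts once for each setting it involves.
per_setting = {}
for settings, publications in exclusive_counts.items():
    for setting in settings:
        per_setting[setting] = per_setting.get(setting, 0) + publications

for setting, publications in per_setting.items():
    print(f"{setting}: {publications}")
```

Because publications that span two settings are counted twice in (a), its rows sum to more than 61, which is why that part reports percentages "over 61" and has no total row.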

| Filtering Method | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| Collaborative Filtering | 26 | 42.62 |
| Content-Based Filtering | 13 | 21.30 |
| Hybrid Filtering | 22 | 36.07 |
| Total | 61 | 100.00 |

| Algorithms | Used in nr. of Papers (Absolute Number) | Used in nr. of Papers (Percentage) |
|---|---|---|
| (A) Supervised Learning Algorithms | | |
| Ranking algorithms: kNN (9), Personal Rank Algorithm (1), instance-based classifier—IBK, a form of kNN (1), Item-kNN (1), Scoring Algorithms-Page Rank (1), Search Ranking Algorithm (1), Ranking Algorithm (query based) for Text Documents (1), Item-side ranking regularized distillation (1), MostPop Algorithm (1) | 17 | 15.32 |
| Text Mining—NLP algorithms: NLP (1), Text Mining and Topics Retrieval Algorithms–Latent Dirichlet Allocation, Matrix Factorization (4), Singular Value Decomposition-SVD (6), Factorization Machine (1), Key-phrase Extraction Algorithm-KEA (1), Text Pre-processing (1), Latent factor-based method (1), Latent Factors Model-BPRMF (1) | 16 | 14.41 |
| Tree and Graph algorithms: Decision Tree (6), Random Forest (3), C4.5 Algorithm (J48) (3), Algorithm 1: Available Set of previous and current Similar Multi-perspective preferences (1), Graph-searching algorithms (Dijkstra’s Shortest Path First (1), Breadth First Search (Graph Search Algorithm) (1)), influence diagrams (IDs) (1) | 16 | 14.41 |
| ANN and Factorization algorithms: Artificial Neural Networks–MLP (4), Deep Neural Networks—DNN (1), Convolutional Neural Networks—CNN (3), KERAS Neural Network deep learning library with TensorFlow (1), RNN-LSTM (1), Neural Matrix Factorization-NeuMF (1) | 11 | 9.91 |
| Classification algorithms: Naïve Bayes (5), Support Vector Machines—SVMs (1), LogLikelihood Algorithm (1) | 7 | 6.31 |
| Association Rule algorithms: Rule—Induction Algorithm (1), Apriori algorithm (2), Ripper Algorithm (2) | 5 | 4.51 |
| Filtering Algorithms: Filtering (Collaborative (1), Content-based (1), Hybrid (1)) | 3 | 2.70 |
| Evolutionary Computing algorithms: Genetic Algorithms (1), Particle Swarm Optimization (1) | 2 | 1.80 |
| Meta-Algorithms: Adaboost (1) | 1 | 0.90 |
| Total Supervised | 78 | 70.27 |
| (B) Unsupervised Learning Algorithms | | |
| K-means-family of algorithms: k-means (2), k-means++ (3), Fuzzy c-means (1), Expectation–Maximization—EM (2), Top-N (3), Affinity Propagation (1), Compatibility Degree Algorithm (1) | 13 | 11.72 |
| Other clustering/grouping algorithms: Clustering Algorithm (1), Algorithm 1– Calculating group sizes (1), Algorithm 2—Forming homogeneous groups (1), Algorithm 3—Forming heterogeneous groups (1) | 4 | 3.60 |
| Model-driven–Performance Criterion Optimization algorithms: Random Stochastic Gradient Descent Regression—SGD (1), simple weighted summation average (1), Complex weighted summation average (1), Personalized Linear Multiple Regression—PLMR (1) | 4 | 3.60 |
| Total Unsupervised | 21 | 18.92 |
| (C) Algorithms used are not reported | 12 | 10.81 |
| Total cases of algorithm use | 111 | 100.00 |
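
kNN is the single most frequently reported algorithm in the table above (9 uses), and collaborative filtering is the most common filtering method among the reviewed publications (26 of 61). To make the combination concrete, here is a minimal sketch of user-based kNN collaborative filtering. It is a generic textbook formulation, not the method of any reviewed publication; the teacher names, learning-object identifiers and ratings are made-up toy data, and the function names are illustrative.

```python
from math import sqrt
from typing import Dict, Optional

Ratings = Dict[str, Dict[str, float]]  # user -> {item -> rating}


def cosine_similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity of two sparse rating vectors (0.0 if no shared items)."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def predict(ratings: Ratings, user: str, item: str, k: int = 2) -> Optional[float]:
    """Predict a rating as the similarity-weighted mean over the k nearest raters."""
    neighbours = [
        (cosine_similarity(ratings[user], other_ratings), other_ratings[item])
        for other, other_ratings in ratings.items()
        if other != user and item in other_ratings
    ]
    neighbours.sort(key=lambda pair: pair[0], reverse=True)
    top = [(sim, rating) for sim, rating in neighbours[:k] if sim > 0]
    if not top:
        return None  # nobody similar has rated this item
    return sum(sim * rating for sim, rating in top) / sum(sim for sim, _ in top)


# Toy data (hypothetical): teachers rating learning objects on a 1-5 scale.
toy_ratings: Ratings = {
    "teacher_a": {"lo1": 5, "lo2": 3},
    "teacher_b": {"lo1": 5, "lo2": 3, "lo3": 4},
    "teacher_c": {"lo1": 1, "lo2": 5, "lo3": 2},
}

print(predict(toy_ratings, "teacher_a", "lo3", k=2))
```

With `k=1` the prediction follows the single most similar teacher; larger values of `k` blend in less similar neighbours, which smooths the estimate at the cost of personalization.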

| Problem Addressed | Number of Publications (Absolute Number) | Number of Publications (Percentage over 61) |
|---|---|---|
| Prediction | 28 | 45.90 |
| Classification | 25 | 40.98 |
| Identification | 22 | 36.06 |
| Clustering | 16 | 26.23 |
| Detection | 9 | 14.75 |

| Experimental Design | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| Quasi experiment | 47 | 77.05 |
| Case study | 8 | 13.11 |
| Pure experiment | 4 | 6.56 |
| No evaluation/not reported | 2 | 3.28 |
| Total | 61 | 100.00 |

| No. of Individuals/Items | Teachers | Students | Users in General |
|---|---|---|---|
| [1–20) | 8 | 5 | 7 |
| [20–40) | 7 | 8 | 1 |
| [40–60) | 2 | 2 | 0 |
| [60–80) | 2 | 2 | 3 |
| [80–100) | 0 | 0 | 1 |
| [100–120) | 0 | 3 | 0 |
| [120–140) | 1 | 0 | 0 |
| [140–… | 1 | 6 | 5 |
| Not reported | 7 | 6 | 6 |
| Total | 28 | 32 | 23 |

| Number of Items | Learning Objects | Movies, etc. |
|---|---|---|
| [1–500) | 8 | 6 |
| [500–1000) | 1 | 1 |
| [1000–1500) | 2 | 1 |
| [1500–2000) | 2 | 2 |
| [2000–2500) | 0 | 2 |
| [2500–3000) | 1 | 0 |
| [3000–3500) | 1 | 0 |
| [3500–… | 0 | 8 |
| Not reported | 5 | 3 |
| Total | 20 | 23 |

| Evaluation Results | Number of Publications (Absolute Number) | Number of Publications (Percentage) |
|---|---|---|
| Positive | 47 | 77.05 |
| Neutral | 14 | 22.95 |
| Negative | 0 | 0.00 |
| Total | 61 | 100.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Siafis, V.; Rangoussi, M.; Psaromiligkos, Y. Recommender Systems for Teachers: A Systematic Literature Review of Recent (2011–2023) Research. Educ. Sci. 2024, 14, 723. https://doi.org/10.3390/educsci14070723
