Safety, Identity, Attitude, Cognition, and Capability: The ‘SIACC’ Framework of Early Childhood AI Literacy

Abstract

1. Introduction

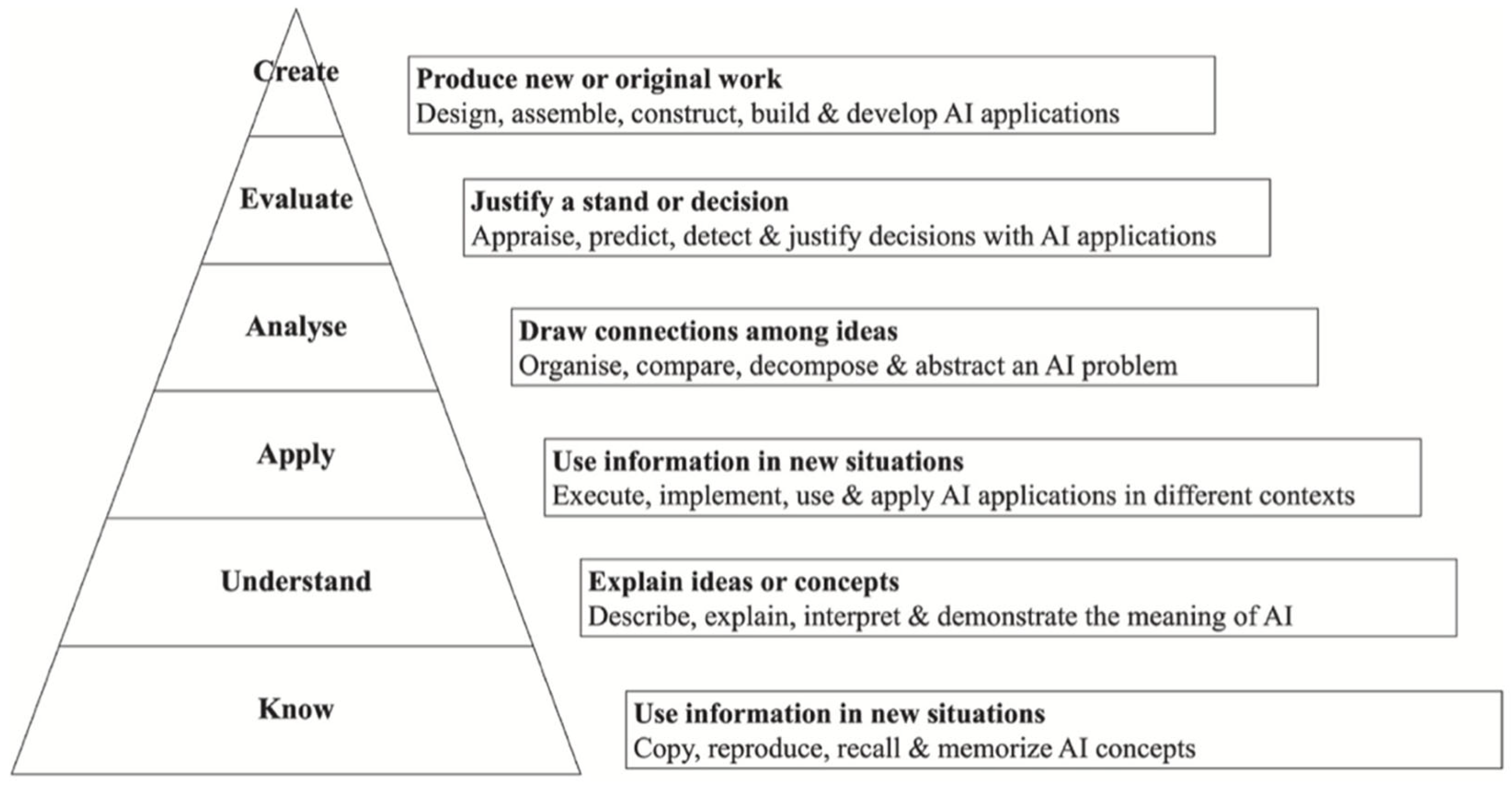

1.1. The Definitions of AI Literacy

1.2. The Constructs of AI Literacy

1.3. The Context of This Study

- What is the definition of young children’s AI literacy, according to Chinese experts?

- What constructs are identified by Chinese experts as central to young children’s AI literacy?

2. Method

2.1. Grounded Theory Approach Based on Expert Interview

2.2. Participants

2.3. Data Collection

2.4. Data Analysis

3. Findings and Discussion

3.1. The Definition of Young Children’s AI Literacy

Young children’s AI literacy means being ethically and appropriately capable of interacting with, utilizing, and controlling AI in their daily lives.

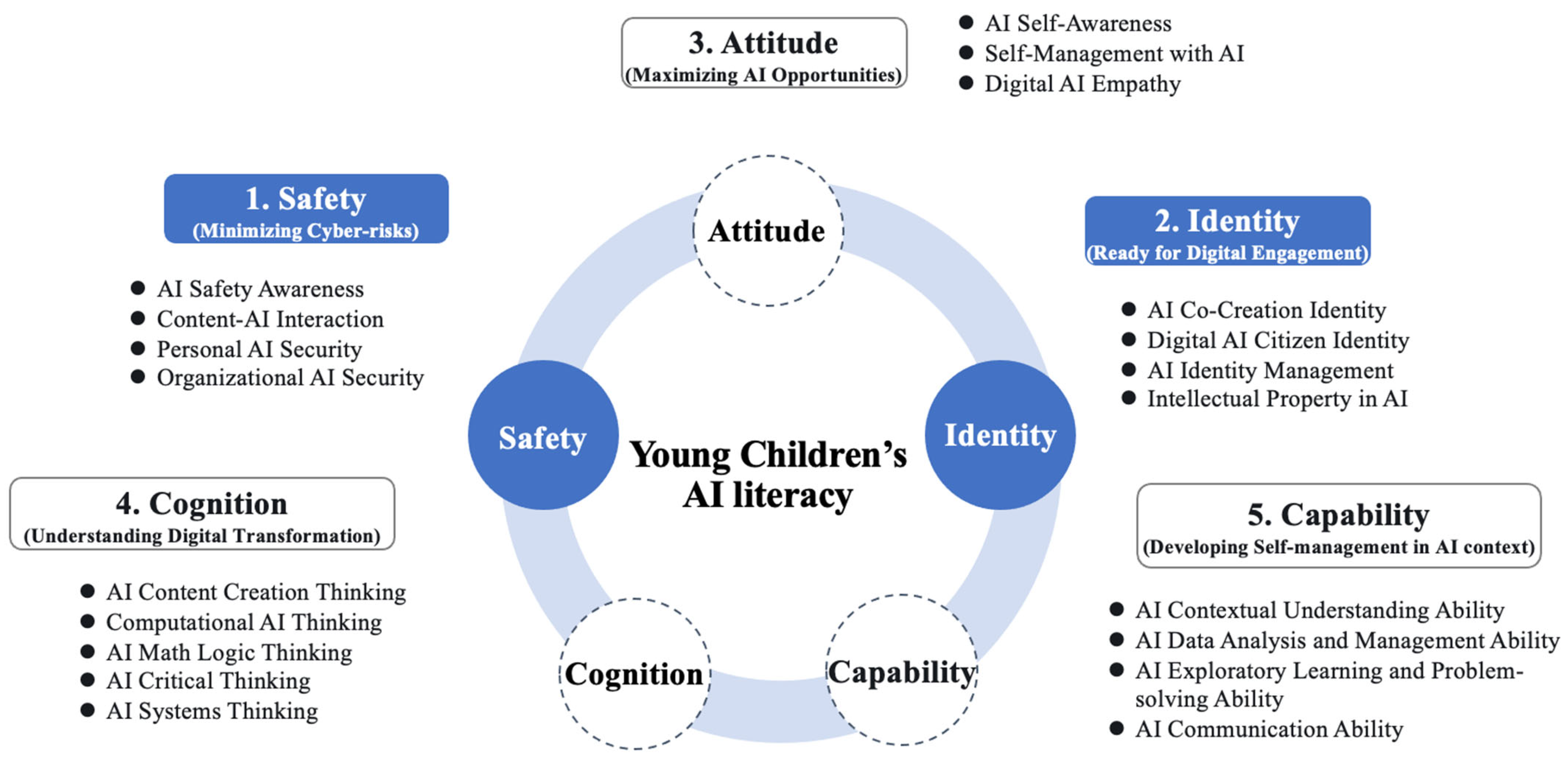

3.2. The Construct of Young Children’s AI Literacy

3.2.1. Dimension 1: Safety

1. AI Safety Awareness

Young children need a sense of AI alertness. They should know which of the things they encounter contain AI and which do not. Since AI can fabricate information, lose control, or “go crazy”, it carries risks. If young children are unaware of AI’s presence, they might make mistakes or even be deceived and exploited by AI. Therefore, children need to understand the external forms that AI takes, even though this understanding is complex and challenging to acquire.

2. Content-AI Interaction

As principals, we value cultivating children’s early AI literacy, especially in content creation and communication. We offer a range of activities for interacting with AI tools, such as using AI drawing programs and holding conversations with robots. All of these activities aim to help children understand the functions of AI and learn to create in collaboration with it.

3. Personal AI Security

In our kindergarten, we are committed to creating a safe learning environment. We use stories and role-playing games to help children understand how to protect themselves when interacting with AI: for example, not providing personal information to unknown software, and knowing that not every online request should be answered. Our goal is to nurture children into little experts in information security who learn, through games, how to protect their data and privacy.

4. Organizational AI Security

Our kindergarten operates at the practical level, so making good use of AI is very important. Isn’t it said that AI will control humans in the future? Such matters must first be addressed among the adults in the kindergarten, such as teachers and caregivers, and then passed on to the young children so that they acquire the correct values. Machines are meant to serve humans, and AI should not be developed to the point of violating ethical norms merely to raise its level of intelligence. AI should be used to support and enhance children’s learning experience rather than becoming the center of learning.

3.2.2. Dimension 2: Identity

1. AI Co-Creation Identity

In constructing an AI co-creation identity, we need to explore the role and boundaries of AI together with young children. This is not just a technical implementation but a process of shaping values. We must guide children to understand how their interaction and symbiotic relationship with AI will affect their identity, behavior, and decision-making in the digital world.

2. Digital AI Citizen Identity

I believe that Digital AI Citizen Identity is about not only understanding AI technology but also knowing how to play a responsible role in a digital society. Our children are digital natives; their early exposure to technology and AI profoundly shapes their cognitive and behavioral patterns. Therefore, we must focus on cultivating their correct understanding of AI technology, including its benefits and potential risks, and of how to use AI safely and effectively in daily life. As educators and technology experts, our joint responsibility is to ensure that they are well prepared as citizens of the digital age.

3. AI Identity Management

4. Intellectual Property in AI

In the field of AI, protecting the intellectual property of young children is crucial. We must ensure that AI applications do not infringe upon children’s creative thinking and original expression. At the same time, we must develop educational tools that stimulate children’s creativity while respecting and protecting their original ideas.

We need to research and propose policies that ensure the sensible use of AI in early childhood education while protecting children’s intellectual property. We should promote an environment that leverages the advantages of AI and simultaneously respects and protects the original thinking of young children.

3.2.3. Dimension 3: Attitude

1. AI Self-Awareness

The right attitude is that we can neither wholly trust AI nor completely reject it. We should have an objective and comprehensive understanding of AI, which involves recognizing its benefits, acknowledging its drawbacks, and weighing its pros and cons so that we can use it to enhance and advance human knowledge.

2. Self-Management with AI

Regarding attitude, from a technical standpoint it is necessary to explain to young children the working principles and capabilities of AI. Children need to understand that while AI may appear intelligent, it is still limited by the rules set by programmers and is just a set of programs. This understanding helps young children maintain appropriate expectations when interacting with AI without over-relying on it.

That is the young child’s world and their own understanding. Telling them that AI is fake, just a program, would confuse them, and it is unnecessary for children aged three to six. They are at an imaginative stage of thinking, in which things we adults find incredible are possible. Telling them that AI is just a program would diminish their imagination.

One child in our kindergarten said, ‘I love kindergarten, but when I am sick and cannot come, I plan to send my robot to replace me’. This imagination would no longer exist if they knew AI was just a program.

3. Digital AI Empathy

Young children view AI entirely differently from us adults. We adults have experienced the transition from a world without AI to one with AI, so we need to adopt an accepting attitude toward it. Children, however, do not face this issue of change and acceptance. As members of Generation Alpha, they are born into the world as it is; for them the concept of change does not exist, and there is instead an inherent digital AI empathy.

AI is no longer just a machine to interact with; its design increasingly aims to mimic human behavior and emotions. In this process, young children need to maintain their capacity for love, that is, a disposition towards love, which helps them develop a more comprehensive and responsible attitude in the digital world.

Digital AI empathy is not a one-way street from young children to AI but a complex network of interactions. Adults’ attitudes toward AI directly affect young children’s attitudes toward AI. Adults with negative attitudes toward AI tend to forbid young children from using it, which fundamentally changes the children’s attitudes. The extent to which adults should be involved in young children’s use of AI is a question worth exploring. We should not simply tell young children that AI is just a program, as this might stifle their imagination and creativity; however, we also need to tell them that AI can make mistakes and is not perfect.

3.2.4. Dimension 4: Cognition

1. AI Content Creation Thinking

We emphasize the importance of AI Content Creation Thinking in the cognitive development of young children’s AI literacy. We must cultivate children’s understanding of, and innovative thinking about, AI-generated content. AI can create various educational materials, but it is equally important to teach children how to assess the quality and applicability of this content. This is not only about technological education but also about maintaining analytical and innovative thinking in a world where technology is constantly evolving.

2. Computational AI Thinking

3. AI Math Logic Thinking

As an essential aspect of the cognitive development of AI literacy, AI Math Logic Thinking should be actively integrated into young children’s daily development. Through simple mathematical games and interactive AI tools, we encourage children to explore the fun of mathematics while developing their logical thinking. Such learning not only helps children grow in mathematics but also lays a solid foundation for their cognitive development in a digital and intelligent world.

4. AI Critical Thinking

5. AI Systems Thinking

AI Systems Thinking is vital in helping children understand the interactions and dependencies between systems in the digital world. This way of thinking allows them to better understand the comprehensiveness and complexity of AI, as well as the underlying logic of why AI evolves so quickly.

3.2.5. Dimension 5: Capability

1. AI Contextual Understanding Ability

AI insight is a crucial ability for young children; it involves innate aptitude and individual differences, but it also relates to acquired experience and upbringing. Reflecting on myself, I feel that my distinctive trait is an insight that runs five to ten years ahead of others’, allowing me to always be one step ahead in keenly foreseeing future issues. This kind of insight is especially critical. For instance, if a child can discern the core of a problem and pinpoint it accurately, they can then communicate with AI and manage a variety of interactions with it.

In discussing young children’s AI literacy abilities, I consider ‘AI Scene Perception’ a key factor. We must understand that AI is not only a collection of programs and algorithms but also an entity embedded in children’s daily lives. Children must learn to identify and understand the AI elements in their environment, whether in intelligent toys or online learning tools. This perceptual ability is the foundation for their understanding of, and adaptation to, a technology-driven world. We should develop corresponding educational tools and curricula to help children cultivate this ability, enabling them to navigate an AI-rich world more comfortably.

Developing AI contextual understanding ability means guiding children to understand AI’s applications and impact in different contexts and to discern its applicability and limitations. The focus is on cultivating an understanding of AI’s functions and boundaries, as well as the ability to adapt and apply AI in diverse environments.

2. AI Data Analysis and Management Ability

In this data-driven era, children must learn how to extract and interpret data from AI systems. This is not just about the data itself but, more importantly, about converting data into meaningful information and decisions. We should develop tools suitable for children to help them grasp the basic concepts and practices of data analysis.

In kindergarten, simple games and activities can guide young children in learning how to process and analyze data. For instance, using smart toys to collect information and then guiding children to do basic categorization and analysis of this information is not only interesting but also provides them with initial data handling experience.

AI systems are not flawless and may display errors or abnormal behaviors. Educating children to identify and handle these anomalies is very important. This involves technical skills, the cultivation of safety awareness, and a sense of responsibility.

AI Data Analysis and Management Ability should be a holistic concept, including data understanding, information processing, and response to anomalies. Through simulation activities and interactive learning, we can allow children to practice these skills in real-life scenarios to enhance their ability to interpret AI-generated data and troubleshoot anomalies or errors in AI systems.

3. AI Exploratory Learning and Problem-solving Ability

In AI learning, cultivating children’s ability to ask questions is crucial. Children should learn how to pose meaningful questions to AI systems, not just seeking information but also enhancing the depth of knowledge and understanding. Asking questions is the starting point of exploratory learning and the key to driving innovation and deep understanding.

Our educational goal is to encourage children to engage in self-directed AI learning. In this process, children learn to set their learning objectives and independently explore AI tools and concepts. Such autonomy promotes personalized learning and lays the foundation for children to confidently explore more complex AI environments in the future.

4. AI Communication Ability

The key to AI communication ability lies in educating children to interact effectively with AI systems. This involves not only inputting commands and interpreting information but also understanding AI feedback and adjusting communication methods to optimize the interaction. We must develop educational tools that enable children to learn AI communication through hands-on practice.

In an AI environment, we must also emphasize the communication skills between people. AI can serve as a tool to enhance children’s social skills, such as through team-based AI projects where children learn to express their ideas, understand others’ perspectives, and cooperate effectively.

Children’s interaction with AI is based on norms jointly formulated by adult society, educational experts, and AI specialists (AI interaction protocol). We need to teach children how to internalize these norms and transform them into practical communication skills while emphasizing interpersonal communication skills.

3.3. General Discussion

3.3.1. The Interconnected Five Dimensions

3.3.2. The Uniqueness of the SIACC Model

4. Conclusions, Limitations, and Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | Title | Location | Research Field or Key Characteristics |
|---|---|---|---|
| Expert A | Chair Professor | Hong Kong, China | Digital Transformation in Early Childhood Education; Educational Policy; Early Childhood Education; Curriculum Theory; Pragmatics; Psycholinguistics; Cognitive Psychology |
| Expert B | Vice Dean and Professor | Shanghai, China | Digital Parenting; Digital Pedagogy; Early Childhood Mathematics Education |
| Expert C | Secretary General and Professor | Shanghai, China | Computer Science and Technology; Communication and Information Engineering |
| Expert D | Principal | Shanghai, China | Shanghai Education Digital Technology Benchmark Preschool; nationally recognized principal |
| Expert E | Principal | Shanghai, China | Shanghai Education Digital Technology Benchmark Preschool |
| Expert F | Director | Shanghai, China | Head of the Preschool Teaching and Research Department, Xuhui District, Shanghai |
| Expert G | Special-Grade Teacher and Director | Shanghai, China | Deputy Director of the Early Childhood Education Information Department, Shanghai Municipal Education Commission Information Center |

| Core Theme | Refined Theme |
|---|---|
| Safety | AI Safety Awareness |
| | Content-AI Interaction |
| | Personal AI Security |
| | Organizational AI Security |
| Identity | AI Co-Creation Identity |
| | Digital AI Citizen Identity |
| | AI Identity Management |
| | Intellectual Property in AI |
| Attitude | AI Self-Awareness |
| | Self-Management with AI |
| | Digital AI Empathy |
| Cognition | AI Content Creation Thinking |
| | Computational AI Thinking |
| | AI Math Logic Thinking |
| | AI Critical Thinking |
| | AI Systems Thinking |
| Capability | AI Contextual Understanding Ability |
| | AI Data Analysis and Management Ability |
| | AI Exploratory Learning and Problem-solving Ability |
| | AI Communication Ability |

| Name | Each Expert’s Definition |
|---|---|
| Expert A | Young children’s AI literacy means being capable of interacting with, controlling, and utilizing AI in their daily lives. |
| Expert B | Young children’s AI literacy can be defined as their capability to understand the basic functions of AI and ethically use AI applications in their daily lives. |
| Expert C | Young children’s AI literacy means appropriately understanding the basic ideas behind smart machines like robots and computer programs. It includes recognizing how these machines can help us in simple tasks and learning to interact with them in a safe and kind way. |
| Expert D | Young children’s AI literacy means ethically and appropriately using AI in their daily lives. |
| Expert E | Young children’s AI literacy refers to the ability to recognize and use simple AI tools in their surroundings. This involves identifying everyday technology that has AI, like interactive toys or learning apps, and understanding how to use them responsibly and ethically. |
| Expert F | Young children’s AI literacy can be defined as the early stage of understanding and engaging with AI. It focuses on familiarizing young children with the concept of artificial intelligence through interactive and age-appropriate examples, fostering an awareness of how AI is a part of their daily life and encouraging a thoughtful and ethical approach to its use. |
| Expert G | Young children’s AI literacy means young children making wise decisions while using, creating, and controlling technology. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, W.; He, H.; Gao, M.; Li, H. Safety, Identity, Attitude, Cognition, and Capability: The ‘SIACC’ Framework of Early Childhood AI Literacy. Educ. Sci. 2024, 14, 871. https://doi.org/10.3390/educsci14080871
