Evaluating an Artificial Intelligence (AI) Model Designed for Education to Identify Its Accuracy: Establishing the Need for Continuous AI Model Updates

Abstract

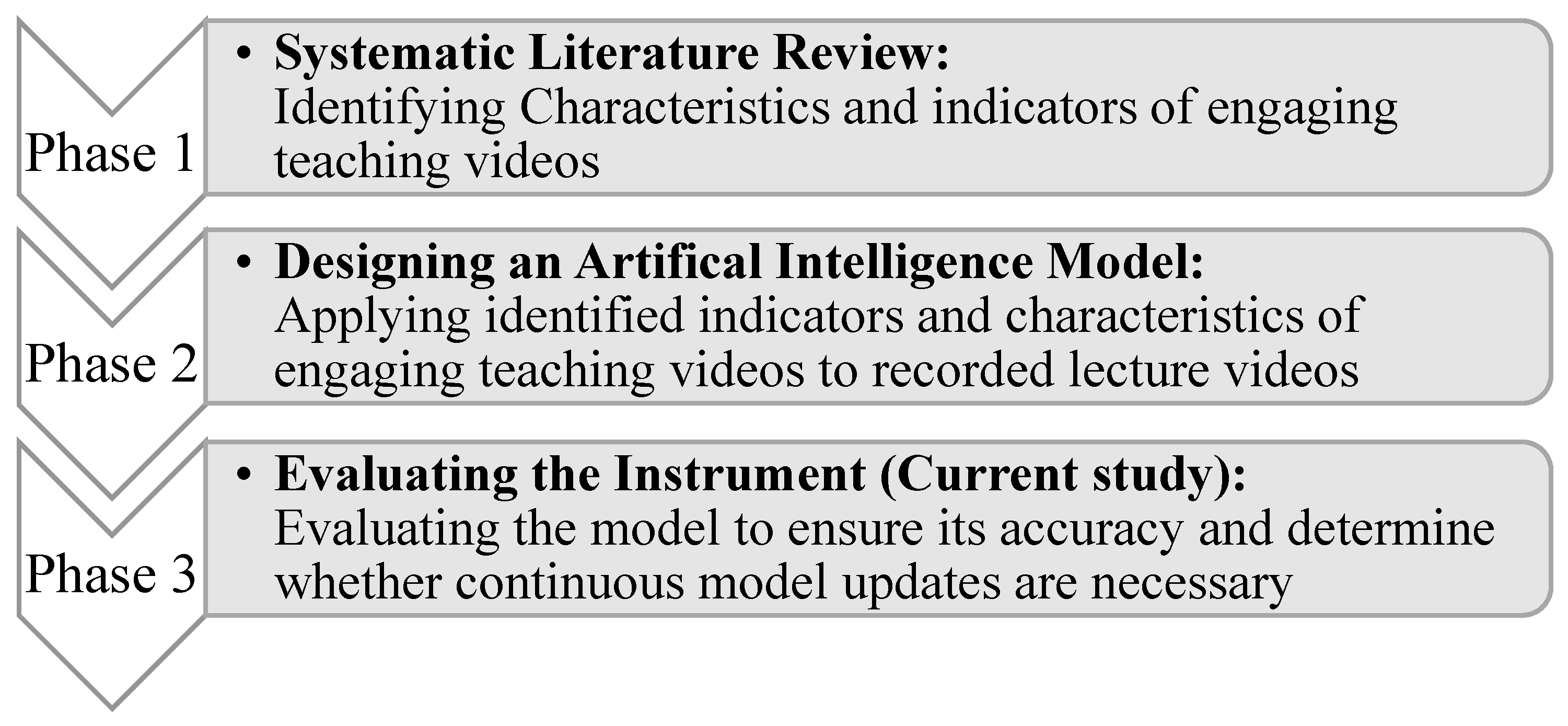

1. Introduction

“How accurately can an AI model generate a report for characteristics and indicators of engaging teaching videos based on teachers’ behaviours and movements?” (RQ1)

“Why is it important to continuously update the AI model designed to enhance online learning and teaching?” (RQ2)

By addressing these questions, this research aims to contribute to the ongoing effort to accurately and sustainably integrate AI into online learning.

2. Background

2.1. Previous Phases

2.2. Evaluation Methods in Education

2.3. Evaluation Methods in AI

3. Methods

- AI process

- Data pre-processing

- Deep learning model

3.1. Data Collection

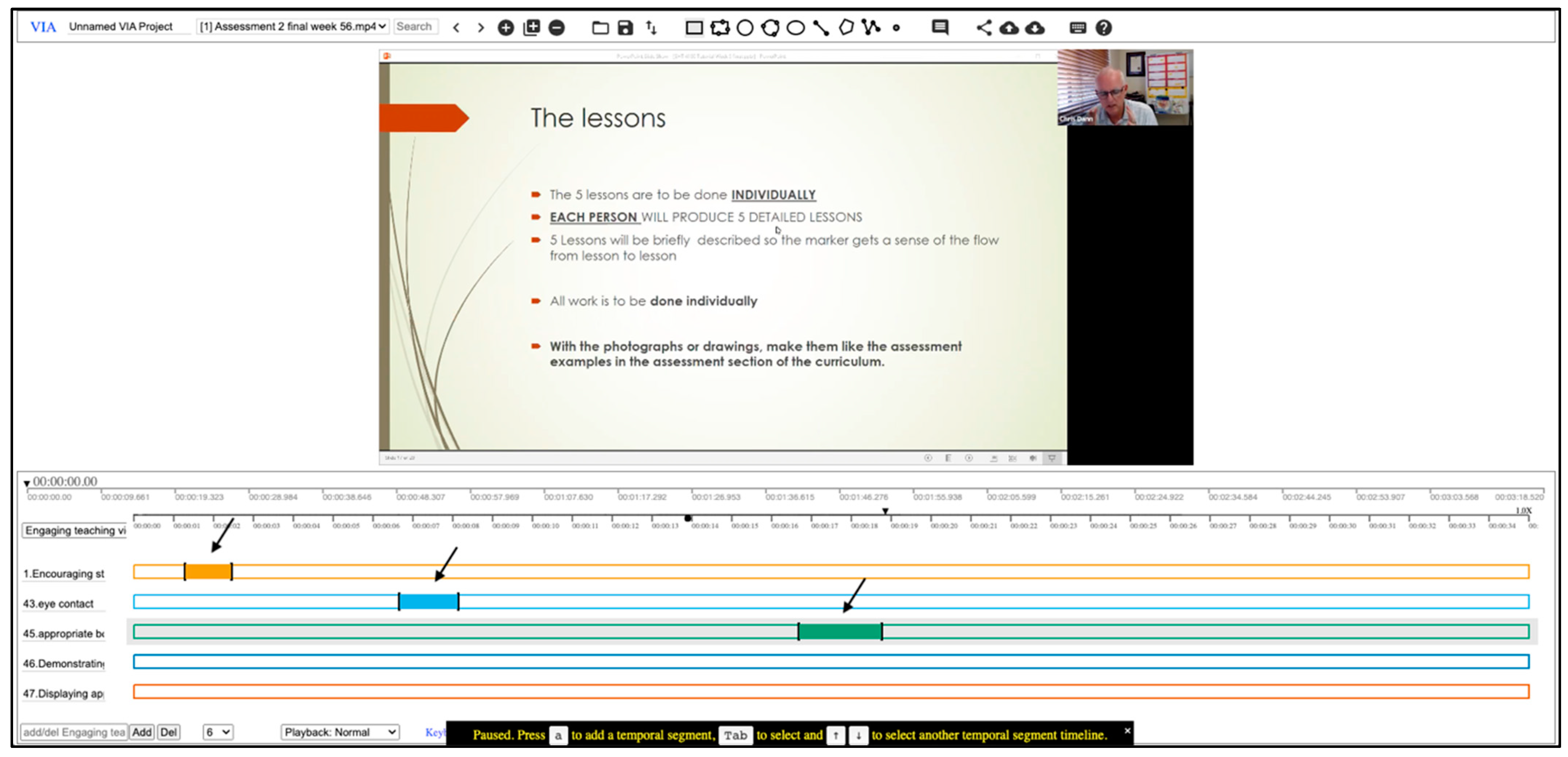

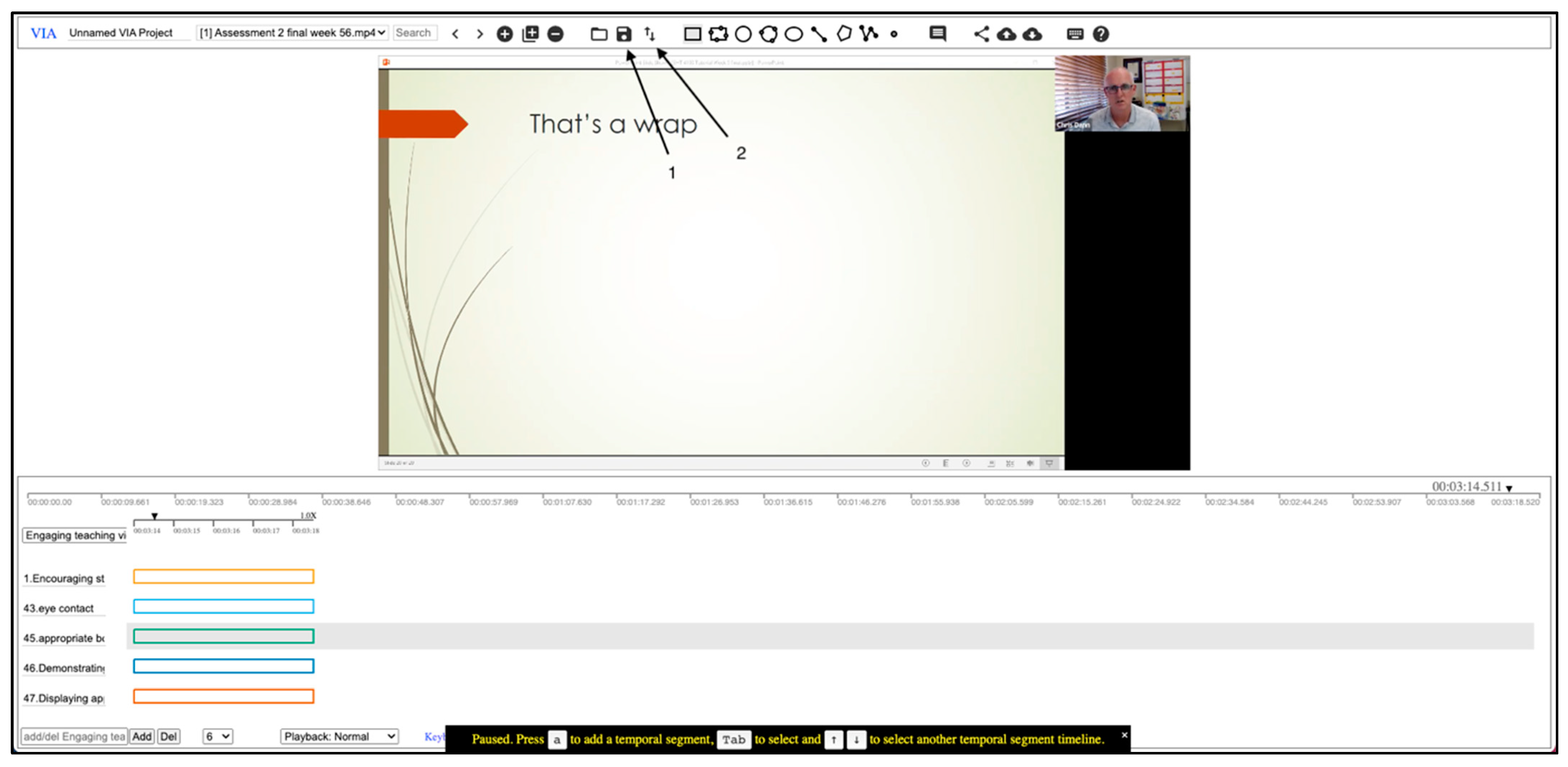

3.2. Video Analysis

3.2.1. Expert Involvement

3.2.2. AI Reports

3.3. Data Analysis

4. Results

4.1. Explanation of Findings

4.1.1. Video 1 Results

4.1.2. Video 2 Results

5. Discussion

5.1. Exploration of Research Findings

5.2. Implications

6. Limitations and Future Directions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| COVID-19 | Coronavirus Disease 2019 |
| DBR | Design-based Research |
| VIA | VGG Image Annotator |

Appendix A

Appendix A.1

| Main Theme | Characteristics | Indicators |
|---|---|---|
| Teachers’ Behaviours | Encouraging Active Participation | |
| | Establishing Teacher Presence | |
| | Establishing Social Presence | |
| | Establishing Cognitive Presence | |
| | Questions and Feedback | |
| | Displaying Enthusiasm | |
| | Establishing Clear Expectations | |
| | Demonstrating Empathy | |
| | Demonstrating Professionalism | |
| Teachers’ Movements | Using Nonverbal Cues | |
| Use of Technology | Using Technology Effectively | |

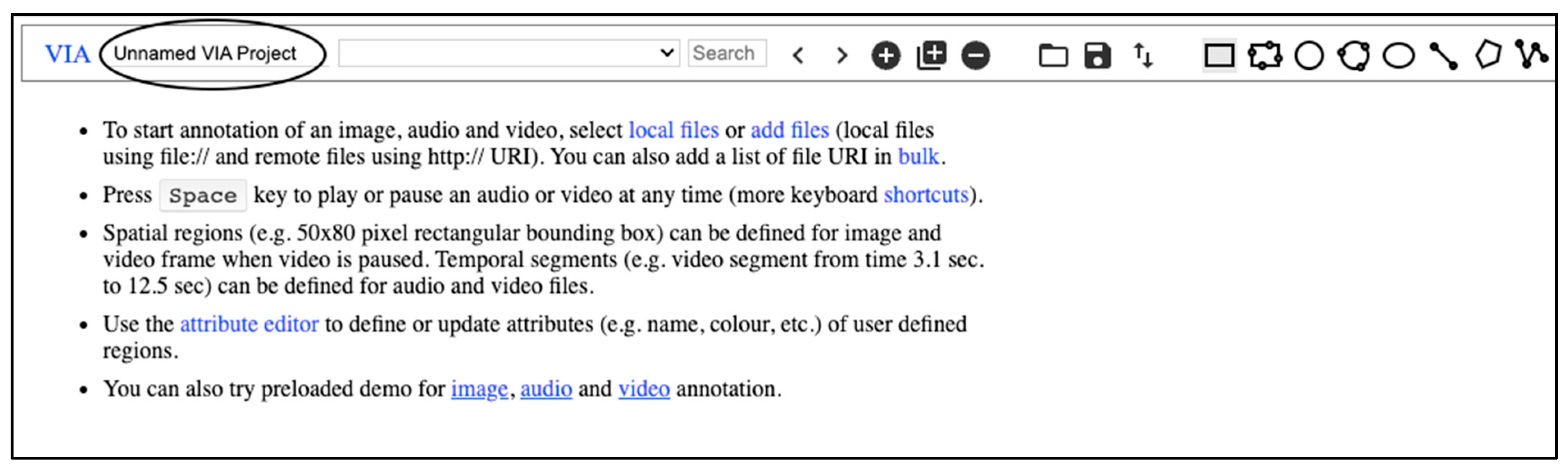

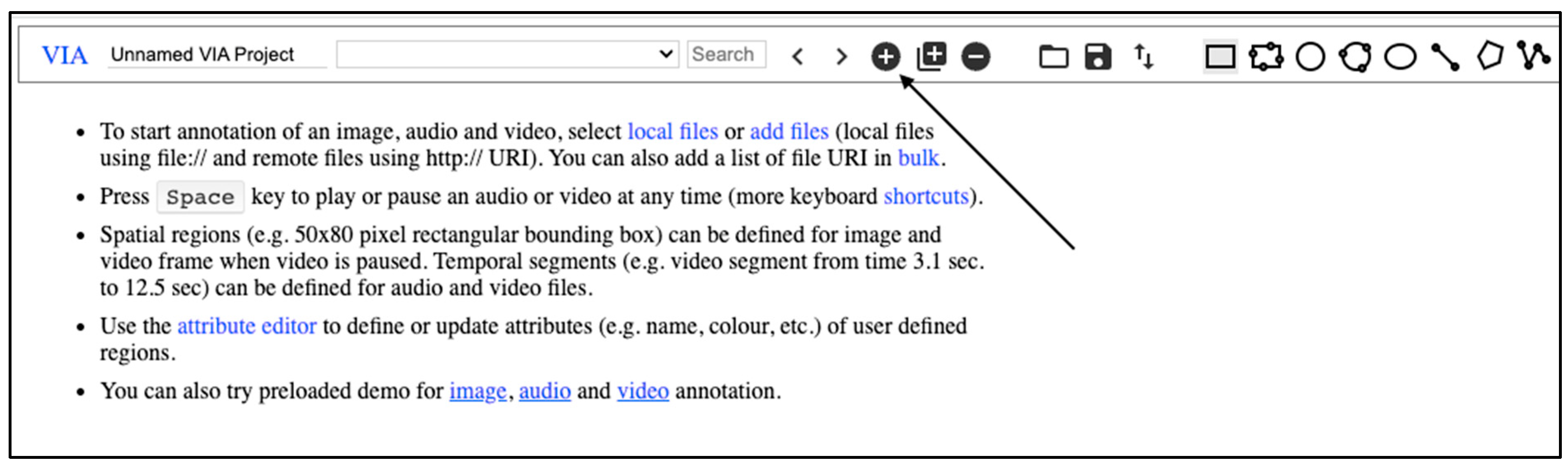

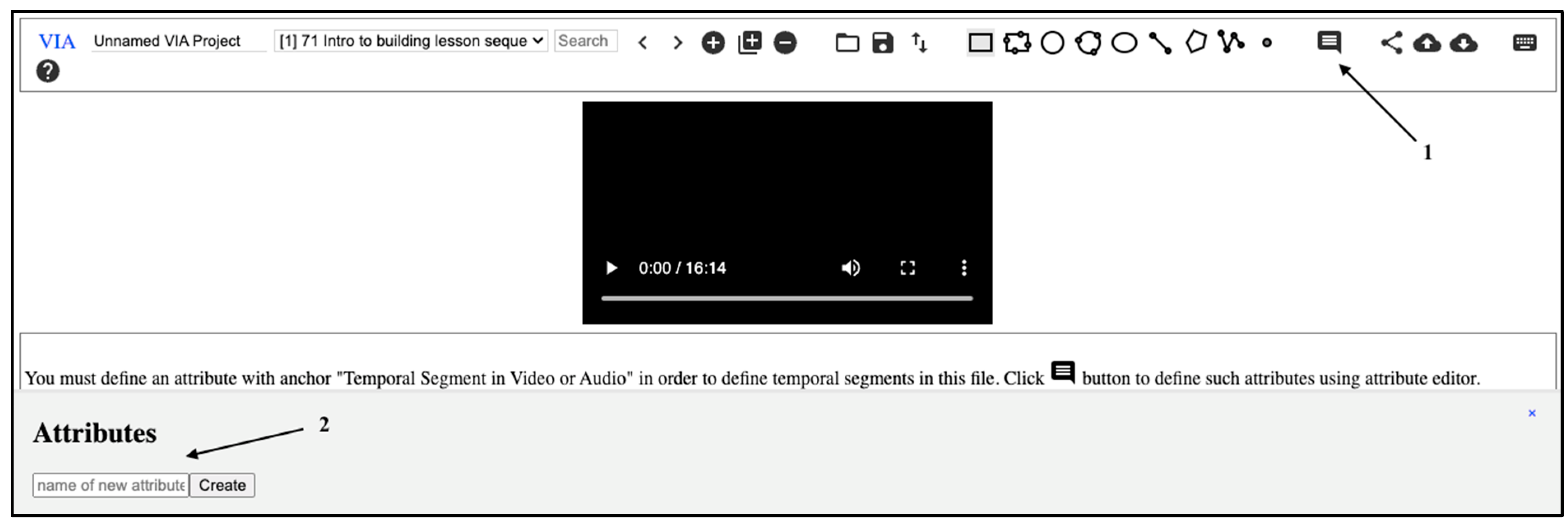

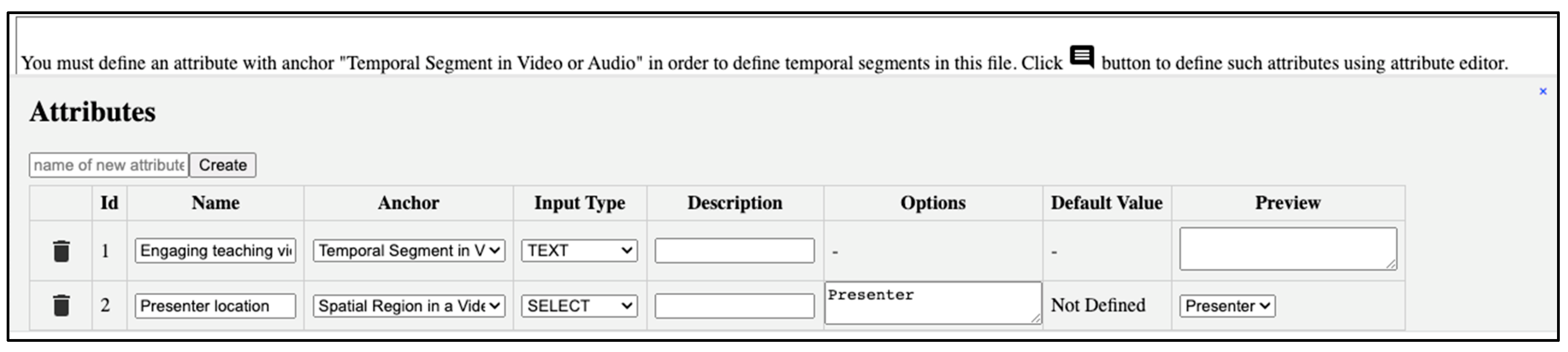

Appendix A.2. Manual Video Annotation Procedure

| Indicators | Description |
|---|---|
| | Teachers to engage students in discussions or debates to attract their interest and motivate a deeper understanding |
| | Teachers to ask for students’ participation in active learning methods by sharing their perceptions, knowledge, and ideas |
| | Teachers to create a safe and open environment that allows students to ask their questions, to enhance the student interaction experience |
| | Teachers to create opportunities for students to interact with each other through group activities or collaborative work |
| | Teachers to construct a welcoming and efficient online learning environment by fostering regular and meaningful communication with students and providing meaningful answers to students’ enquiries |
| | Teachers to provide students with various learning resources, videos, etc., to increase students’ active participation |
| | Teachers to be clear and detailed in communicating the instructions, expectations, roles, and responsibilities, to show commitment to meeting the course goals |
| | Teachers to clearly outline and communicate the topics and instructions to increase student engagement in online learning |
| | Teachers to read and respond to perceived restlessness by using appropriate changes in tone of voice or changes in direction |
| | Teachers to maintain appropriate facial expressions such as smiling and nodding |
| | Teachers to maintain eye contact with students in online learning |
| | Teachers to maintain appropriate body language in the online classroom |
| | Teachers to increase the value of the online learning experience by enabling class recording, which allows students to access classroom sessions from the comfort of their home and to review them afterwards |
| | Teachers to assure students of their presence and positively impact student engagement and satisfaction by communicating in real-time through a chat, camera, microphone, and screen sharing |
| | Teachers to vary the presentation media (e.g., videos, slides, note sharing, etc.) to capture students’ attention and foster engagement |

| Characteristics | Indicators |
|---|---|
| Encouraging Active Participation | |
| Establishing Teacher Presence | |
| Establishing Clear Expectations | |
| Demonstrating Empathy | |
| Using Nonverbal Cues | |
| Using Technology Effectively | |

| Video 1 | AI Model | Expert 1 | Expert 2 |
|---|---|---|---|
| Segment 0 | 1 | 1 | 14 |
| Segment 1 | 6 | 8 | 6 |
| Segment 2 | 6 | 8 | 6 |
| Segment 3 | 14 | 8 | 14 |
| Segment 4 | 1 | 14 | 8 |
| Segment 5 | 15 | 7 | 15 |
| Segment 6 | 7 | 7 | No identified indicator |
| Segment 7 | 5 | 9 | No identified indicator |
| Segment 8 | 2 | 8 | No identified indicator |
| Segment 9 | 5 | 9 | No identified indicator |
| Segment 10 | 9 | 9 | No identified indicator |
| Segment 11 | 5 | 9 | No identified indicator |
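
As an illustration of how agreement on nominal indicator codes such as those in the Video 1 table can be quantified, the following minimal sketch computes Cohen's kappa for the AI Model and Expert 1 columns. The function name `cohen_kappa` and the variable names are hypothetical and are not taken from the study; the sketch uses only Video 1, so its result is not expected to match the kappa reported for the full evaluation.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of nominal codes."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(rater_a)
    # Observed agreement: share of segments where both raters chose the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Indicator codes copied from the Video 1 table (Segments 0 to 11).
ai_video1 = [1, 6, 6, 14, 1, 15, 7, 5, 2, 5, 9, 5]
expert1_video1 = [1, 8, 8, 8, 14, 7, 7, 9, 8, 9, 9, 9]

kappa_video1 = cohen_kappa(ai_video1, expert1_video1)
print(round(kappa_video1, 2))
```

Here the two raters agree on 3 of 12 segments (observed agreement 0.25) against a chance agreement of 0.0625, which gives a kappa of 0.2 for this single video.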

| Video 2 | AI Model | Expert 1 | Expert 2 |
|---|---|---|---|
| Segment 0 | 1 | 14 | 15 |
| Segment 1 | 10 | 8 | 15 |
| Segment 2 | 5 | 7 | 5 |
| Segment 3 | 5 | 4 | 5 |
| Segment 4 | 1 | 7 | 2 |
| Segment 5 | 12 | 12 | 4 |
| Segment 6 | 5 | 7 | 2 |
| Segment 7 | 10 | 12 | 10 |
| Segment 8 | 5 | 7 | 7 |
| Segment 9 | 7 | 12 | 7 |
| Segment 10 | 1 | 12 | 1 |
| Segment 11 | 1 | 12 | No identified indicator |
| Segment 12 | 5 | 9 | No identified indicator |
| Segment 13 | 1 | 12 | No identified indicator |
| Segment 14 | 1 | 12 | No identified indicator |
| Segment 15 | 9 | 7 | No identified indicator |
| Segment 16 | 5 | 7 | No identified indicator |
| Segment 17 | 14 | 15 | No identified indicator |
| Segment 18 | 5 | 12 | No identified indicator |
| Segment 19 | 14 | 12 | No identified indicator |
| Segment 20 | 1 | 9 | No identified indicator |
| Segment 21 | 14 | 15 | No identified indicator |
| Segment 22 | 1 | 1 | No identified indicator |
| Segment 23 | 5 | 12 | No identified indicator |
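
Because Expert 2 left many segments without an identified indicator, any comparison with that column has to decide how to handle those rows. The sketch below counts raw matches between the AI Model and Expert 2 for Video 2 while skipping the unrated segments. Treating "No identified indicator" as missing (rather than as a disagreement) is an assumption made here for illustration; the source does not state how these rows were handled, and `raw_agreement` is a hypothetical helper.

```python
def raw_agreement(codes_a, codes_b):
    """Return (matches, rated) over segments where both raters gave a code.

    A value of None stands for "No identified indicator" and is skipped.
    This missing-data rule is an illustrative assumption, not the study's.
    """
    rated_pairs = [
        (a, b) for a, b in zip(codes_a, codes_b)
        if a is not None and b is not None
    ]
    matches = sum(a == b for a, b in rated_pairs)
    return matches, len(rated_pairs)


# Indicator codes copied from the Video 2 table (Segments 0 to 23).
ai_video2 = [1, 10, 5, 5, 1, 12, 5, 10, 5, 7, 1,
             1, 5, 1, 1, 9, 5, 14, 5, 14, 1, 14, 1, 5]
# Expert 2 coded Segments 0 to 10 only; the remaining 13 are unrated.
expert2_video2 = [15, 15, 5, 5, 2, 4, 2, 10, 7, 7, 1] + [None] * 13

matches, rated = raw_agreement(ai_video2, expert2_video2)
print(f"{matches} of {rated} rated segments match")
```

Under this rule the AI Model and Expert 2 match on 5 of the 11 segments that Expert 2 coded. Counting the 13 unrated segments as disagreements instead would lower the agreement rate considerably, so the choice should be stated whenever such figures are reported.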

| Statistical Measure | AI Tool vs. Expert 1 | AI Tool vs. Expert 2 | Interpretation |
|---|---|---|---|
| Cohen’s Kappa | 0.09 | 0.07 | Slight agreement |
| Bland–Altman Analysis | | | |
| - Mean Difference | 4.92 | 2.24 | Moderate variability in differences |
| - Standard Deviation of Differences | 4.55 | 6.18 | |
| - 95% Limits of Agreement | (−4.00, 13.84) | (−9.87, 14.35) | |
| Intraclass Correlation Coefficient (ICC2k) | 0.45 | 0.45 | Moderate reliability |
| Pearson Correlation Coefficient | 0.09 | −0.02 | Weak linear relationship |
| Spearman Correlation Coefficient | 0.09 | −0.10 | Weak rank-order relationship |
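
The 95% limits of agreement in the table can be checked against the reported mean difference and standard deviation. The sketch below assumes the conventional Bland–Altman formula, mean difference ± 1.96 × standard deviation of the differences; the source does not state the multiplier, but the tabulated limits are consistent with it. The function name `limits_of_agreement` is hypothetical.

```python
def limits_of_agreement(mean_diff, sd_diff, z=1.96):
    """Bland-Altman limits: mean difference plus or minus z times the SD.

    z = 1.96 is the conventional multiplier for 95% limits; it is assumed
    here rather than taken from the source.
    """
    half_width = z * sd_diff
    return (mean_diff - half_width, mean_diff + half_width)


# Mean difference and SD of differences as reported in the table.
expert1_limits = limits_of_agreement(4.92, 4.55)
expert2_limits = limits_of_agreement(2.24, 6.18)

print([round(x, 2) for x in expert1_limits])
print([round(x, 2) for x in expert2_limits])
```

Both results round to the limits given in the table, (−4.00, 13.84) for Expert 1 and (−9.87, 14.35) for Expert 2, so the three Bland–Altman rows are internally consistent.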

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Verma, N.; Getenet, S.; Dann, C.; Shaik, T. Evaluating an Artificial Intelligence (AI) Model Designed for Education to Identify Its Accuracy: Establishing the Need for Continuous AI Model Updates. Educ. Sci. 2025, 15, 403. https://doi.org/10.3390/educsci15040403