Evaluating Competency Development and Academic Outcomes: Insights from Six Semesters of Data-Driven Analysis

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

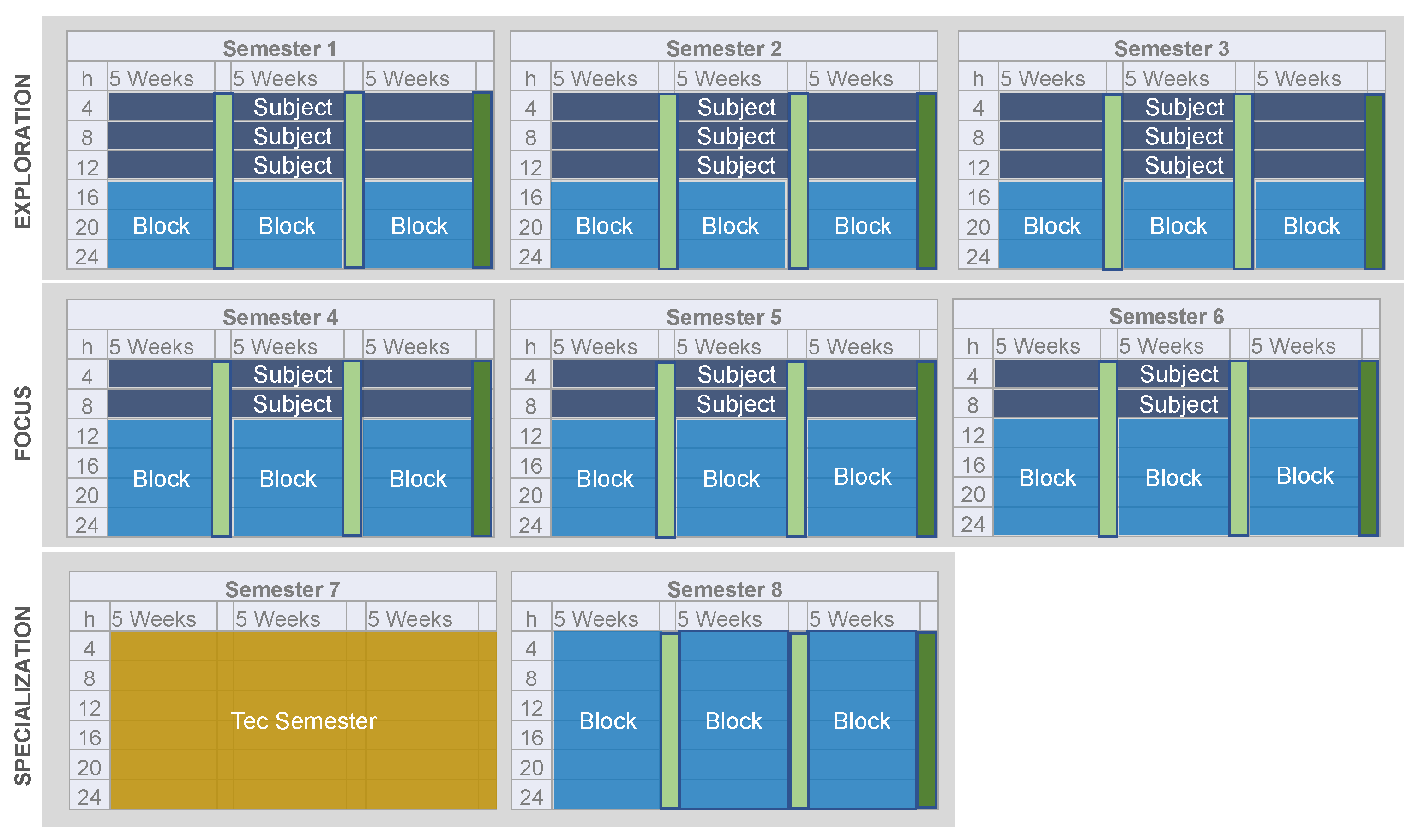

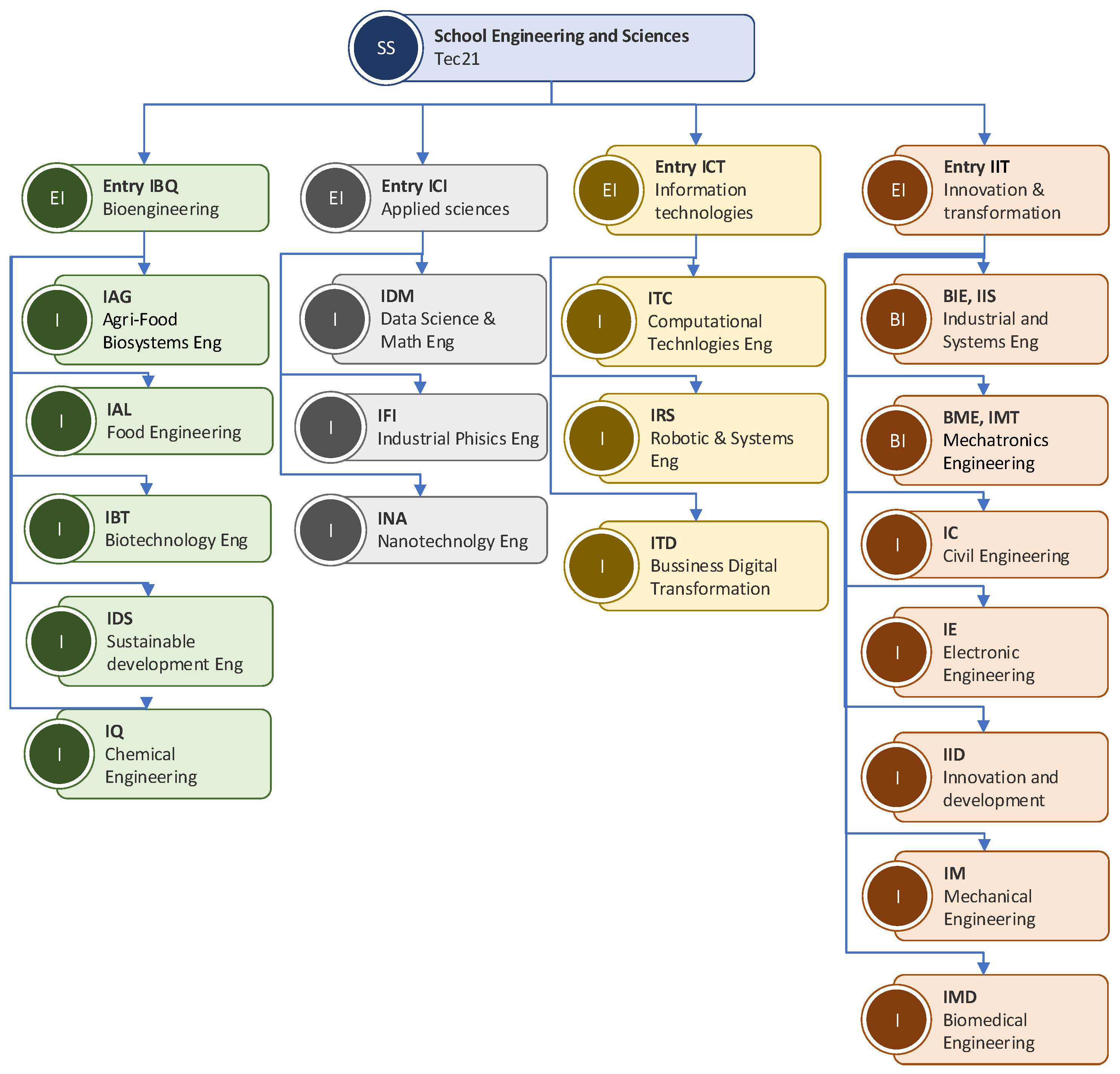

3.1. The Tec21 Pedagogical Model and the Structure of Tec’s STEM Programs

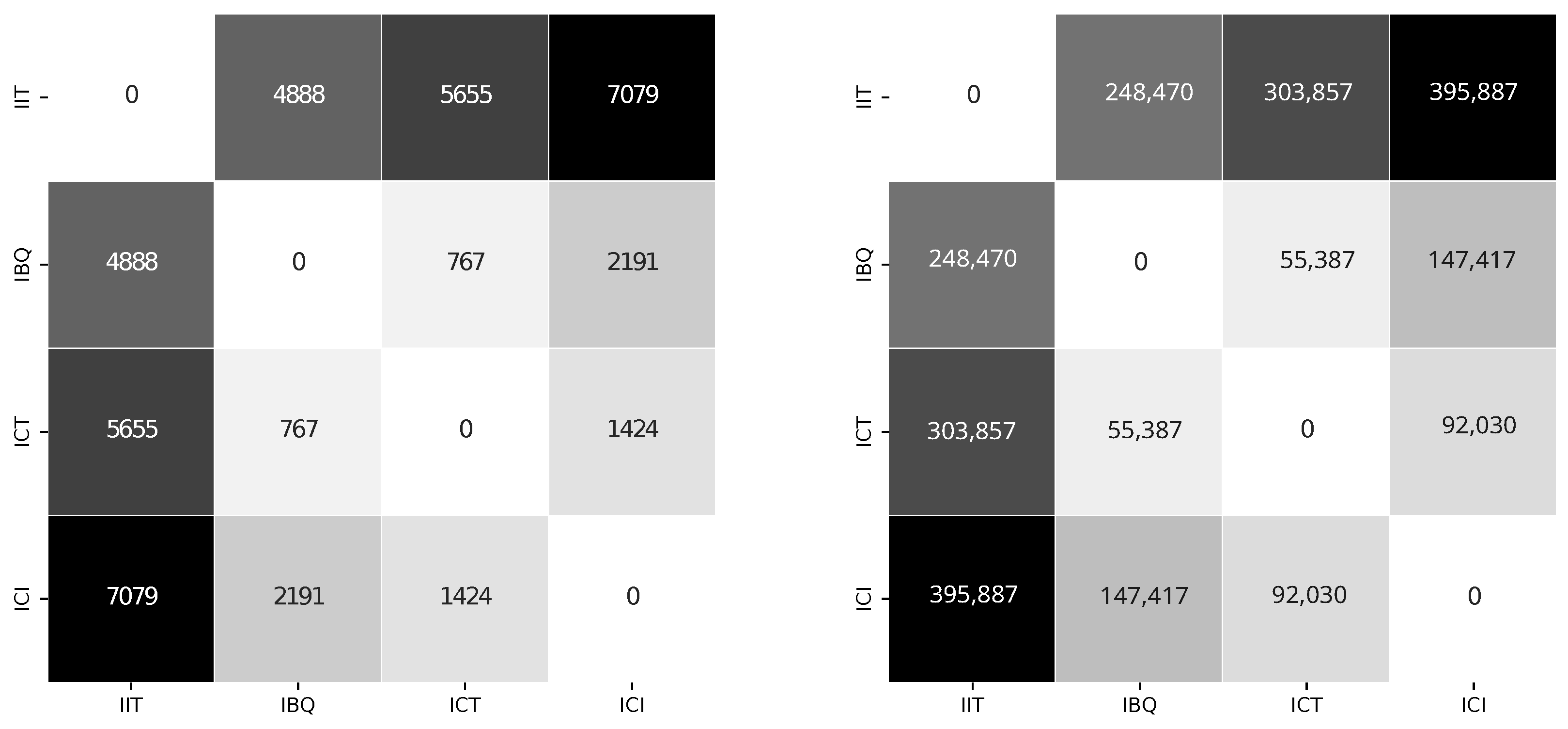

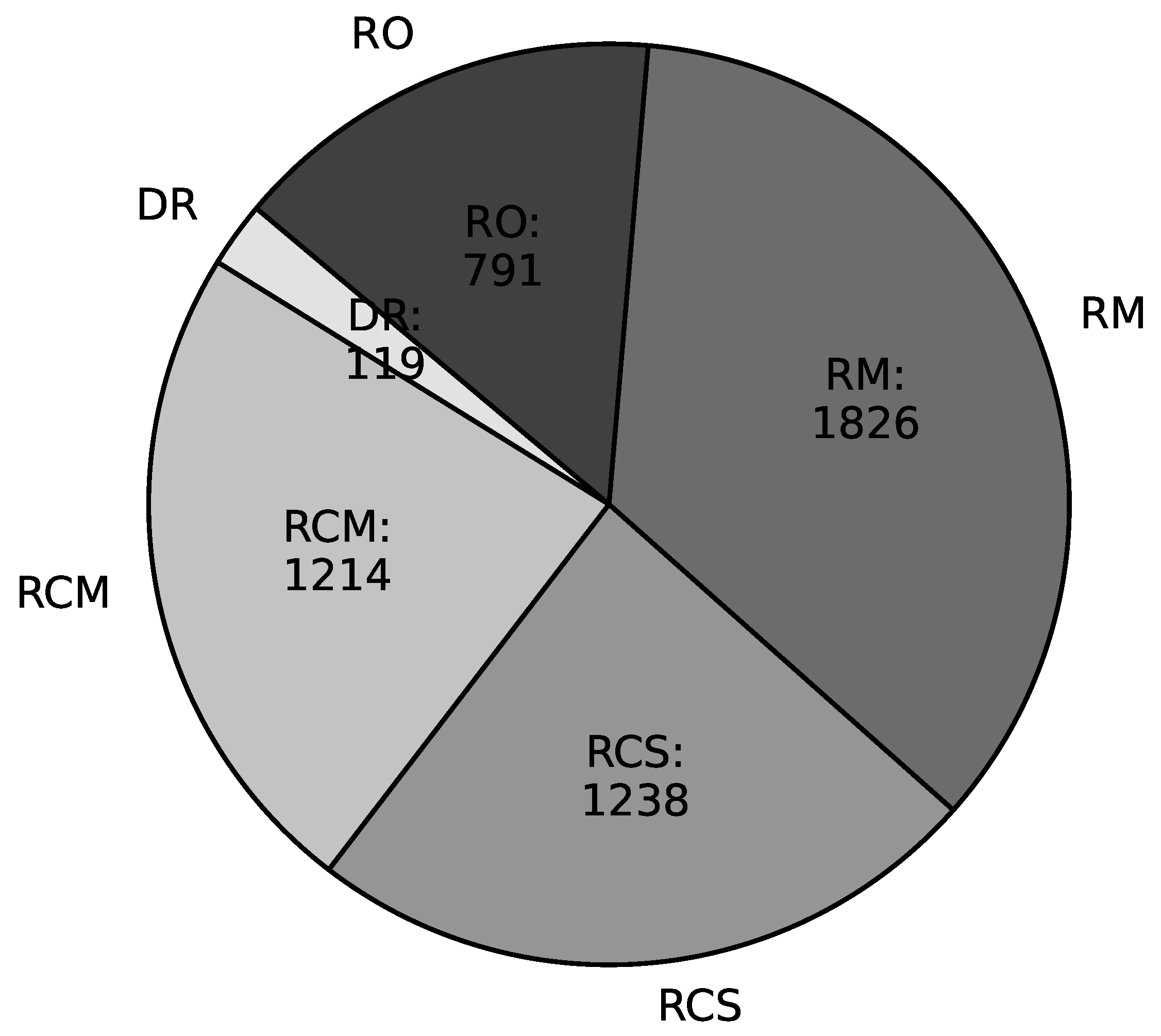

3.2. Database and Sample Description

4. Results and Discussion

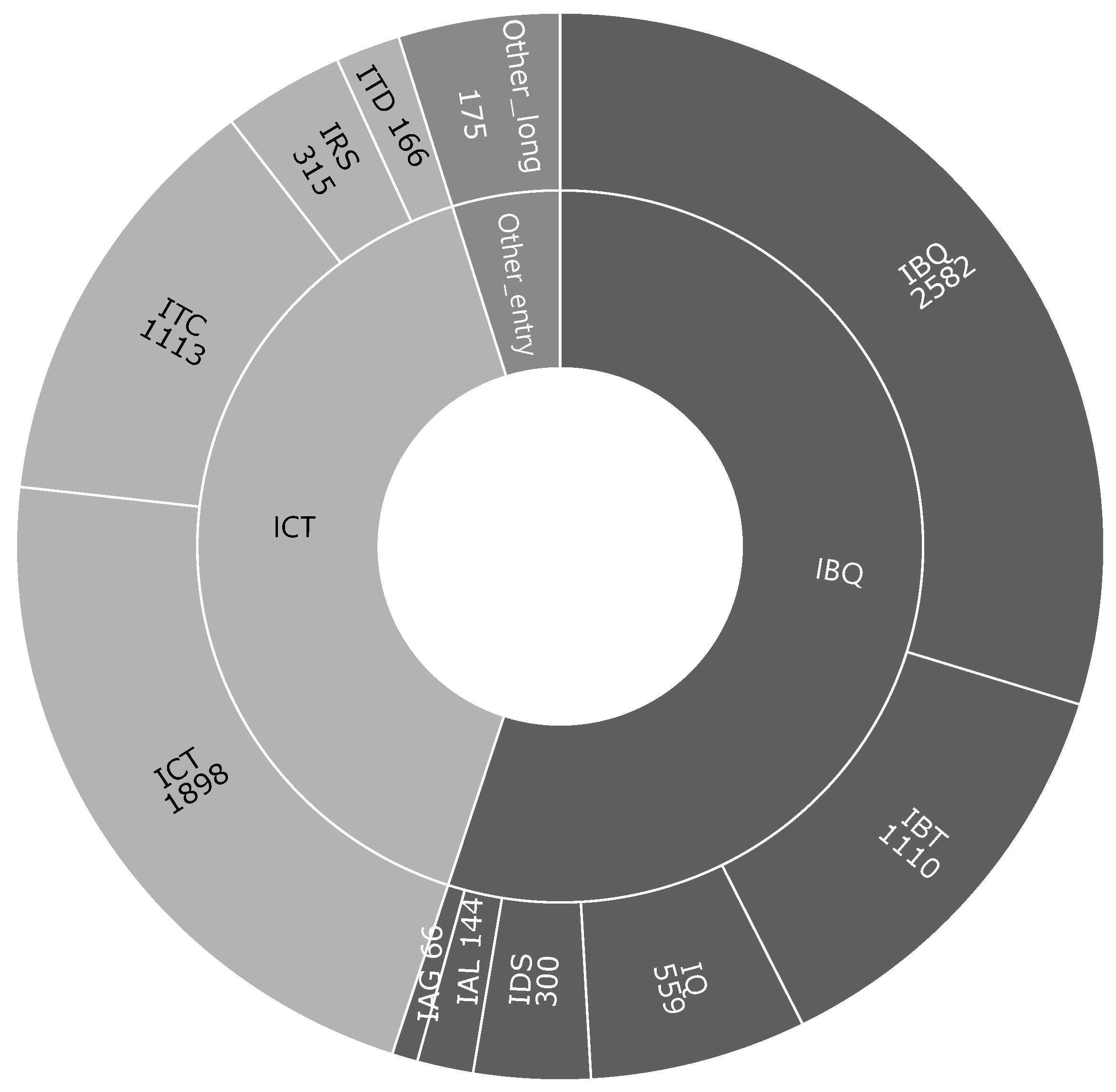

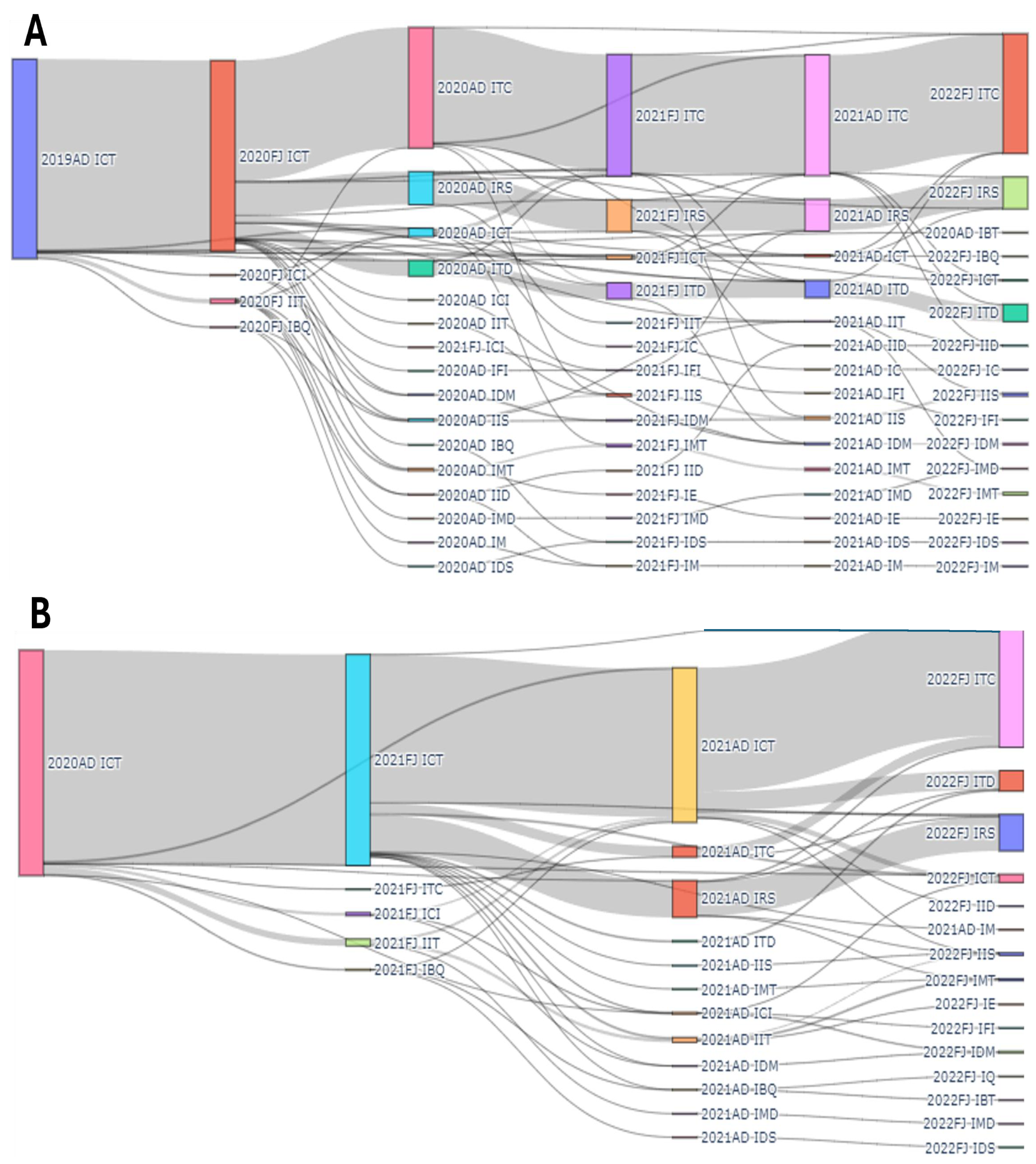

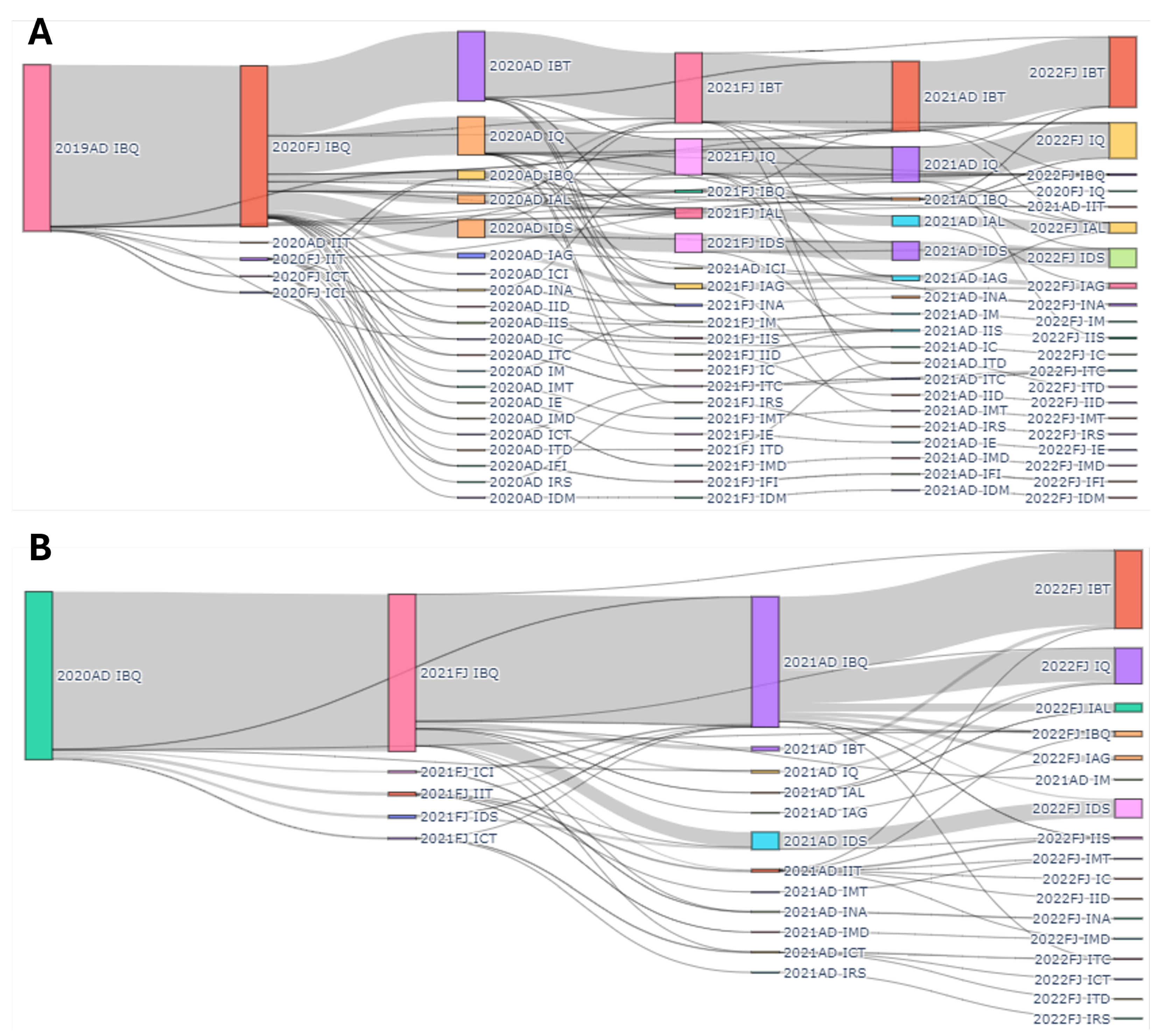

4.1. Entry Program or Long-Term Program? An Analysis of Student Performance

Comparison of Academic Performance

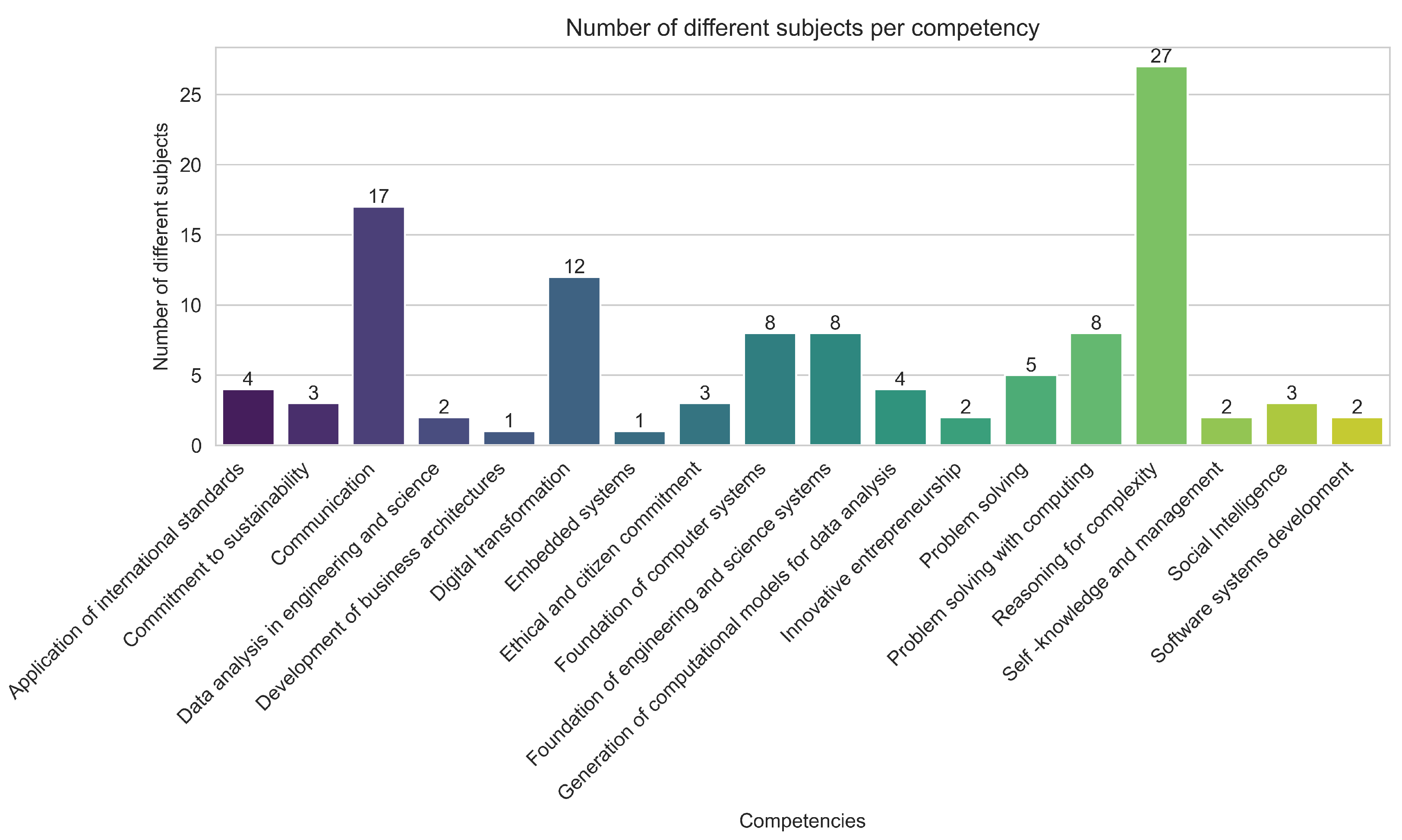

4.2. An Analysis of Competency Difficulty

4.3. Analysis of Competency Development Against Type and Grade of the Formative Unit

Student Workload Versus Failure Rate

4.4. Limitations

5. Conclusions and Directions for Further Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AD | August to December |

| CBE | Competency-Based Education |

| FJ | February to June |

| FTE | Full-Time Equivalent |

| HE | Higher Education |

| IBQ | Bioengineering and Chemical Process |

| ICT | Computer Science and Information Technologies |

| ID | Identification |

| ITC | B.S. in Computer Science and Technology |

| RCM | Mexico City Region |

| RCS | Central/South Region |

| RM | Monterrey Region |

| RO | West Region |

| SD | Standard Deviation |

| STEM | Science, Technology, Engineering, and Mathematics |

Appendix A. List of Competencies by Type

Appendix B. Feature Description by Categories

| Variable | Domain | Description |

|---|---|---|

| student.id | Numeric | Unique identifier for each student |

| term_period.id | Categorical | Academic period of the evaluation, where the first four digits indicate the year and the last two characters indicate the starting and ending months (FJ for February–June, AD for August–December), e.g., 2019AD is August–December 2019 |

| student.age | Numeric | Student’s age in the academic period |

| student.nationality | Categorical | Student’s nationality |

| student.isForeign | Boolean | True if their student residence is in the same city as the campus, False otherwise |

| student_origSchool.TEC | Boolean | Indicates whether the student studied in a high school that belongs to Tec de Monterrey |

| campus.region_name | Categorical | Code of the region of the enrollment campus: RM Monterrey Region, RO Western Region, RCS Central/South Region, RCM Mexico City Region, DR Other Regions |

| student.cohort.id | Numeric | Year of admission (cohort) of the student |

| student.isWoman | Boolean | Student’s gender, which is coded as True if the student is a woman and False if the student is a man. |

| semesters_from.enrollment | Numeric | Counts how many academic periods have passed since the student enrolled |

| enrollment_period.id | Categorical | Academic period where the student.id appears first in the data (2019FJ, 2020AD, etc.) |

| student.subject_sem_enrolled | Numeric | Counts how many training units the student has enrolled in during the current academic period |
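The term identifiers in the table above follow a fixed pattern, which makes variables such as `semesters_from.enrollment` straightforward to derive. The Python sketch below parses a `term_period.id` such as `2019AD` and counts the academic periods elapsed since enrollment. It assumes only the two regular periods listed in the Abbreviations (FJ and AD), ordered FJ before AD within a year, and it counts the enrollment term as 0; the function names are hypothetical and are not taken from the authors' code.

```python
import re

# Term IDs: a four-digit year followed by a two-letter period code
# (FJ = February-June, AD = August-December). Only these two regular
# periods are assumed; any other code is rejected.
TERM_PATTERN = re.compile(r"^(\d{4})(FJ|AD)$")
TERM_MONTHS = {"FJ": ("February", "June"), "AD": ("August", "December")}


def parse_term(term_id: str) -> tuple[int, str]:
    """Split a term ID such as '2019AD' into (2019, 'AD')."""
    match = TERM_PATTERN.match(term_id)
    if match is None:
        raise ValueError(f"unrecognized term ID: {term_id!r}")
    return int(match.group(1)), match.group(2)


def term_index(term_id: str) -> int:
    """Map a term to a sequential integer (FJ precedes AD within a year)."""
    year, code = parse_term(term_id)
    return 2 * year + (0 if code == "FJ" else 1)


def semesters_from_enrollment(enrollment_id: str, current_id: str) -> int:
    """Academic periods elapsed since enrollment; 0 in the enrollment term.

    Starting the count at 0 is an assumption made for illustration.
    """
    return term_index(current_id) - term_index(enrollment_id)


print(parse_term("2019AD"))                           # (2019, 'AD')
print(semesters_from_enrollment("2019AD", "2021FJ"))  # 3
```

A student first seen in 2019AD and evaluated in 2021FJ has passed through 2020FJ and 2020AD, hence the count of 3 under this convention.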

| Variable | Domain | Description |

|---|---|---|

| student.status_desc | Categorical | Description of the student’s academic status |

| student.isConditioned | Boolean | Whether the student is in conditional academic status |

| program.major_id | Categorical | Acronym of the academic program of the student |

| student.semester_desc | Categorical | Description of the semester of the student |

| student.lastTerm_gpa | Numeric | Student’s last semester average |

| student.term_gpa_program | Numeric | Global average of the student’s academic program for the closing of the consulted academic term |

| student.fte | Numeric | Proportion of the scheduled number of training units in which the student is enrolled during the academic period, where 1 indicates exactly the scheduled number of training units, values below 1 indicate fewer training units, and values above 1 indicate more training units than scheduled for the academic period |

| subject.longName | Categorical | Training unit’s name |

| subject.tec21Type_desc | Categorical | Type of educational unit in the Tec21 model |

| subject.type_desc | Categorical | Description of the type of training unit |

| group.isVirtual | Boolean | Indicates whether the classes in this group are taught virtually |

| group.isEnglishLanguage | Boolean | Indicates whether the training unit is taught in English |

| student_grades.final_numeric | Numeric | Student’s final grade in the training unit |

| group.modality | Categorical | Group’s modality |

| group.period | Categorical | Each semester has three periods of five weeks (1, 2, 3) |

| group.hasEvaluationInst | Boolean | Whether the educational unit has an evaluation instrument |

| group.duration_weeks | Numeric | Duration in weeks of the educational unit |

| group.size | Numeric | Count of student.id in the same group during the academic period |

| program.isAvenue | Boolean | Indicates whether the academic program is an entry program. Entry programs are initial academic programs spanning the first three academic periods, allowing students to explore various programs related to a specific knowledge area |

| Variable | Domain | Description |

|---|---|---|

| competency.desc | Categorical | Competency name according to the list in Appendix A |

| competency.type | Categorical | Type of competency: transversal, area, or disciplinary |

| sub-competency.desc | Categorical | Sub-competency name |

| sub-competency.level_required | Categorical | Level to be developed in the sub-competency: A, B, or C, where A is the simplest and C the hardest. Only one level is required for a sub-competency and subject |

| sub-competency.equivalent_key | Categorical | A code describing the sub-competency, composed of the academic program, the ID of the competency, the ID of the sub-competency within the competency, and L, the sub-competency level required. Some equivalent sub-competencies have different codes; thus, they can be unified depending on the purpose of the study |

| sub-competency.observed_count | Numeric | Count of how many times the current sub-competency has been assessed as “Observed” or “Approved” for the current student |

| sub-competency.notobserved_count | Numeric | Count of how many times the current sub-competency has been assessed as “Not Observed” or “Failed” for the current student |

| sub-competency.level_assigned | Boolean | Evaluation assigned by the professor for the current student and sub-competency, coded as True for “Observed” and False for “Not Observed” |
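The two count variables are tallies of `sub-competency.level_assigned`: each professor evaluation contributes one True ("Observed") or False ("Not Observed") value, and the counts accumulate those values per student and sub-competency. A minimal Python sketch of that aggregation follows, assuming evaluations arrive as `(student_id, sub_competency_key, level_assigned)` tuples; the tuple layout, the function names, and the example keys are hypothetical. Summing the same tallies over all students gives False/True/Total summaries per sub-competency.

```python
from collections import Counter

# Each record: (student_id, sub_competency_key, level_assigned), where
# level_assigned is True for "Observed" and False for "Not Observed".
# The key strings used below are hypothetical placeholders.
Record = tuple[int, str, bool]


def count_per_student(records: list[Record]) -> dict[tuple[int, str], tuple[int, int]]:
    """Return {(student_id, key): (observed_count, notobserved_count)}."""
    observed: Counter = Counter()
    not_observed: Counter = Counter()
    for student_id, key, assigned in records:
        target = observed if assigned else not_observed
        target[(student_id, key)] += 1
    pairs = set(observed) | set(not_observed)
    # Counter returns 0 for missing keys, so an absent outcome counts as zero.
    return {pair: (observed[pair], not_observed[pair]) for pair in pairs}


def summarize_per_key(records: list[Record]) -> dict[str, tuple[int, int, int]]:
    """Return {key: (false_count, true_count, total)} across all students."""
    summary: dict[str, list[int]] = {}
    for _, key, assigned in records:
        counts = summary.setdefault(key, [0, 0])
        counts[1 if assigned else 0] += 1
    return {key: (f, t, f + t) for key, (f, t) in summary.items()}


records = [
    (1, "self_knowledge-A", True),
    (1, "self_knowledge-A", False),
    (1, "self_knowledge-A", True),
    (2, "self_knowledge-A", False),
    (2, "diversity-A", True),
]
print(count_per_student(records)[(1, "self_knowledge-A")])  # (2, 1)
print(summarize_per_key(records)["self_knowledge-A"])       # (2, 2, 4)
```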

Note 1: https://ifelldh.tec.mx/en/calls/competencies (accessed on 10 April 2025).


| Program | Cohort | ITC | IRS | ITD | Other | Dropout STEM |
|---|---|---|---|---|---|---|
| ICT | 2019 | 57.096 | 15.385 | 8.234 | 6.176 | 13.109 |
| ICT | 2020 | 57.500 | 15.326 | 8.696 | 7.065 | 11.413 |

| Program | Cohort | IBT | IQ | IDS | Other | Dropout STEM |
|---|---|---|---|---|---|---|
| IBQ | 2019 | 40.015 | 20.503 | 10.823 | 14.482 | 14.177 |
| IBQ | 2020 | 43.776 | 20.217 | 10.526 | 12.865 | 12.615 |

| Program | Cohort | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| ICT | 2019 | 17.01 | 79.52 | 2.60 | 0.54 | 0.22 | 0.11 |
| ICT | 2020 | 12.39 | 27.94 | 58.04 | 1.63 | - | - |
| IBQ | 2019 | 11.88 | 83.85 | 3.43 | 0.69 | 0.15 | 0.00 |
| IBQ | 2020 | 12.10 | 18.89 | 67.44 | 1.57 | - | - |

| Entry Program | Semesters Completed | Dropout STEM | IRS | ITC | ITD | Other |
|---|---|---|---|---|---|---|
| ICT | 1 | 48.34 | 11.07 | 20.30 | 3.69 | 16.61 |
| ICT | 2 | 7.87 | 24.52 | 55.70 | 7.97 | 3.94 |
| ICT | 3 | 3.05 | 1.61 | 80.29 | 12.01 | 3.05 |

| Entry Program | Semesters Completed | Dropout STEM | IBT | IDS | IQ | Other |
|---|---|---|---|---|---|---|
| IBQ | 1 | 60.26 | 7.62 | 10.60 | 4.30 | 17.22 |
| IBQ | 2 | 9.33 | 40.26 | 16.33 | 21.22 | 12.87 |
| IBQ | 3 | 3.73 | 57.51 | 2.21 | 25.15 | 11.41 |
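Each row of the tables above sums to 100 up to rounding (for example, 48.34 + 11.07 + 20.30 + 3.69 + 16.61 = 100.01), which is consistent with reading the values as row percentages: the share of each cohort, or of each group of students by semesters completed, that ends up in each destination. The Python sketch below shows how such row percentages could be derived from raw counts and how a published row can be checked; the function names and the counts in the example are hypothetical, not taken from the study.

```python
def row_percentages(counts: dict[str, int], digits: int = 2) -> dict[str, float]:
    """Convert destination counts for one cohort or group into percentages."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("row has no students")
    return {dest: round(100 * n / total, digits) for dest, n in counts.items()}


def sums_to_100(row: list[float], tol: float = 0.05) -> bool:
    """Check that a published row adds up to 100 within a rounding tolerance."""
    return abs(sum(row) - 100) <= tol


# Hypothetical counts for one group of students.
example = {"Dropout STEM": 12, "ITC": 60, "IRS": 18, "ITD": 6, "Other": 4}
print(row_percentages(example))
# {'Dropout STEM': 12.0, 'ITC': 60.0, 'IRS': 18.0, 'ITD': 6.0, 'Other': 4.0}

# ICT students who completed one semester (values from the table above).
print(sums_to_100([48.34, 11.07, 20.30, 3.69, 16.61]))  # True
```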

| Campus Region | Avg Grade | Set | Approved Ratio |

|---|---|---|---|

| DR | 93.429467 | Complete | 0.950221 |

| DR | 92.646617 | Partial | 0.949588 |

| RCM | 94.022748 | Complete | 0.978515 |

| RCM | 92.055155 | Partial | 0.946551 |

| RCS | 93.549451 | Complete | 0.959267 |

| RCS | 91.987231 | Partial | 0.914206 |

| RM | 93.855562 | Complete | 0.950907 |

| RM | 91.955947 | Partial | 0.952878 |

| RO | 94.796176 | Complete | 0.932837 |

| RO | 91.636012 | Partial | 0.926436 |
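The table above, like the subject-type table that follows, reports an average grade and an approved ratio per group and data set. A hedged Python sketch of that kind of grouped summary is shown below. It assumes each input row holds a campus region, a set label ("Complete" or "Partial"), a final grade, and a Boolean approval flag, and that the approved ratio is the share of rows flagged as approved; this section does not state the exact definition, so the row layout, the ratio definition, and the function name are all assumptions.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical row layout: (campus_region, set_label, final_grade, approved).
Row = tuple[str, str, float, bool]


def summarize_by_group(rows: list[Row]) -> dict[tuple[str, str], tuple[float, float]]:
    """Return {(region, set_label): (average_grade, approved_ratio)}."""
    grades: dict[tuple[str, str], list[float]] = defaultdict(list)
    approvals: dict[tuple[str, str], list[bool]] = defaultdict(list)
    for region, set_label, grade, approved in rows:
        grades[(region, set_label)].append(grade)
        approvals[(region, set_label)].append(approved)
    # sum() over Booleans counts the True values.
    return {
        key: (mean(grades[key]), sum(approvals[key]) / len(approvals[key]))
        for key in grades
    }


rows = [
    ("RM", "Complete", 90.0, True),
    ("RM", "Complete", 100.0, False),
    ("RO", "Partial", 80.0, True),
]
print(summarize_by_group(rows))
# {('RM', 'Complete'): (95.0, 0.5), ('RO', 'Partial'): (80.0, 1.0)}
```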

| Subject Type | Avg Grade | Set | Approved Ratio |

|---|---|---|---|

| Block | 92.121894 | Complete | 0.946806 |

| Block | 90.153518 | Partial | 0.947961 |

| Subject | 92.382627 | Complete | 0.974444 |

| Subject | 90.170732 | Partial | 0.947870 |

| Tec week | 99.301826 | Complete | 0.902334 |

| Tec week | 99.194415 | Partial | 0.702470 |

| Sub-Competency Level | Approved Ratio | Set | Unique Student Count |

|---|---|---|---|

| A | 0.964370 | Complete | 501 |

| A | 0.930930 | Partial | 480 |

| B | 0.965177 | Complete | 501 |

| B | 0.966134 | Partial | 479 |

| C | 1.000000 | Partial | 1 |

| Competency Type | Approved Ratio | Set | Unique Student Count |

|---|---|---|---|

| Area | 0.963002 | Complete | 501 |

| Area | 0.951998 | Partial | 480 |

| Disciplinary | 0.883252 | Complete | 491 |

| Disciplinary | 1.000000 | Partial | 1 |

| General education | 0.958172 | Complete | 501 |

| General education | 0.908418 | Partial | 480 |

| Subject | Subject Type | SD | Avg | Fail | Pass | Sub-Competencies |

|---|---|---|---|---|---|---|

| Object-Oriented Programming | Subject | 15.94 | 88.48 | 34 | 663 | Problem evaluation, decision making, cutting-edge technologies |

| Object-Oriented Computational Thinking | Subject | 15.50 | 86.12 | 67 | 679 | Application of standards and norms, demonstration of the functioning of systems in engineering and sciences, cutting-edge technologies, problem evaluation, decision making, implementation of actions |

| Mathematical Thinking | Subject | 14.66 | 83.39 | 78 | 680 | Explanation of the functioning of systems in engineering and sciences, scientific thought, understanding others’ codes, problem evaluation |

| Computational Thinking for Engineering | Subject | 13.27 | 88.11 | 40 | 762 | Application of standards and norms, demonstration of the functioning of systems in engineering and sciences, cutting-edge technologies, problem evaluation, decision making, implementation of actions |

| Computational Modeling Applying Conservation Laws | Block | 13.12 | 85.64 | 42 | 609 | Scientific thought, demonstration of the functioning of systems in engineering and sciences, explanation of the functioning of systems in engineering and sciences, systemic thinking, written language, problem evaluation, decision making, implementation of actions |

| Computational Biology Analysis | Subject | 13.09 | 90.49 | 26 | 687 | Scientific thought, understanding others’ codes, determination of patterns, application of sustainability principles |

| Fundamentals of Biological Systems | Subject | 12.91 | 85.54 | 3 | 46 | Explanation of the functioning of systems in engineering and sciences, demonstration of the functioning of systems in engineering and sciences, scientific thought, digital culture, wellness and self-regulation |

| Ecological Processes for Human Development | Subject | 12.91 | 85.32 | 2 | 45 | Application of standards and norms, scientific thought, digital culture, recognition and empathy, application of sustainability principles |

| Foundation of the Structure and Transformation of Matter | Subject | 12.21 | 84.44 | 62 | 761 | Explanation of the functioning of systems in engineering and sciences, scientific thought, problem evaluation |

| Computational Modeling of Movement | Block | 11.85 | 86.17 | 44 | 594 | Scientific thought, demonstration of the functioning of systems in engineering and sciences, explanation of the functioning of systems in engineering and sciences, systemic thinking, written language, problem evaluation, decision making, implementation of actions |

| Sub-Competency | Level | Failed | Approved | Approved/Failed Ratio |

|---|---|---|---|---|

| Problem evaluation | A | 594 | 6689 | 11.261 |

| Demonstration of the functioning of systems in engineering and sciences | A | 255 | 4771 | 18.71 |

| Scientific thought | A | 546 | 4692 | 8.593 |

| Decision making | A | 293 | 3896 | 13.30 |

| Explanation of the functioning of systems in engineering and sciences | A | 316 | 3612 | 11.43 |

| Implementation of actions | A | 185 | 3305 | 17.865 |

| Cutting-edge technologies | A | 131 | 2947 | 22.496 |

| Understanding others’ codes | A | 221 | 2273 | 10.285 |

| Critical thinking | A | 200 | 2017 | 10.085 |

| Explanation of the functioning of systems in engineering and sciences | B | 103 | 2013 | 19.544 |
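The last column of the table above equals Approved divided by Failed (for example, 6689 / 594 ≈ 11.261), so lower values mean more failures per approval. The short Python sketch below reproduces three rows from the table and picks the sub-competency with the lowest ratio; the function name is illustrative.

```python
# (Failed, Approved) counts copied from the table above.
FAILED_APPROVED = {
    "Problem evaluation (A)": (594, 6689),
    "Scientific thought (A)": (546, 4692),
    "Cutting-edge technologies (A)": (131, 2947),
}


def approved_to_failed(failed: int, approved: int) -> float:
    """Approved evaluations per failed evaluation (infinite if none failed)."""
    if failed == 0:
        return float("inf")
    return approved / failed


ratios = {
    name: round(approved_to_failed(failed, approved), 3)
    for name, (failed, approved) in FAILED_APPROVED.items()
}
hardest = min(ratios, key=ratios.get)

print(ratios)
# {'Problem evaluation (A)': 11.261, 'Scientific thought (A)': 8.593, 'Cutting-edge technologies (A)': 22.496}
print(hardest)  # Scientific thought (A)
```

Among all ten rows of the table, Scientific thought (level A) also has the lowest ratio (8.593).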

| Subject | Men | Women | Fail Men | Fail Women | Women (%) | Women Among Failures (%) |

|---|---|---|---|---|---|---|

| Object-Oriented Programming | 563 | 135 | 31 | 3 | 19.34 | 8.82 |

| Object-Oriented Computational Thinking | 603 | 149 | 55 | 12 | 19.81 | 17.91 |

| Mathematical Thinking I | 606 | 157 | 69 | 9 | 20.58 | 11.54 |

| Computational Thinking for Engineering | 639 | 163 | 34 | 6 | 20.32 | 15.00 |

| Computational Modeling Applying Conservation Laws | 526 | 129 | 36 | 6 | 19.69 | 14.29 |

| Computational Biology Analysis | 571 | 142 | 26 | 0 | 19.92 | 0.00 |

| Fundamentals of Biological Systems | 38 | 11 | 3 | 0 | 22.45 | 0.00 |

| Ecological Processes for Human Development | 36 | 11 | 0 | 2 | 23.40 | 100.00 |

| Foundation of the Structure and Transformation… | 654 | 169 | 54 | 8 | 20.53 | 12.90 |

| Computational Modeling of Movement | 517 | 121 | 41 | 3 | 18.97 | 6.82 |

| Intermediate Mathematical Modeling | 563 | 140 | 25 | 1 | 19.91 | 3.85 |

| Statistic Analysis | 567 | 140 | 15 | 1 | 19.80 | 6.25 |

| Engineering and Science Modeling | 680 | 172 | 22 | 2 | 20.19 | 8.33 |

| Mathematics and Data Science for Decision Making | 43 | 10 | 1 | 0 | 18.87 | 0.00 |

| Computational Modeling of Electrical Systems | 512 | 132 | 16 | 0 | 20.50 | 0.00 |
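The two percentage columns of the table above can be recomputed from the counts: Women (%) is women divided by all enrolled students, and the last column is female failures divided by all failures (for Object-Oriented Programming, 135 / 698 ≈ 19.34% and 3 / 34 ≈ 8.82%). Comparing the two shows whether women are over- or under-represented among failures relative to enrollment. A minimal Python sketch, with an illustrative function name:

```python
def gender_shares(men: int, women: int, fail_men: int, fail_women: int) -> tuple[float, float]:
    """Return (women % of enrolled, women % of failures), rounded to 2 decimals.

    Returning 0.0 for the second value when a subject has no failures at all
    is an arbitrary convention of this sketch.
    """
    enrolled_share = 100 * women / (men + women)
    total_fail = fail_men + fail_women
    fail_share = 100 * fail_women / total_fail if total_fail else 0.0
    return round(enrolled_share, 2), round(fail_share, 2)


# Object-Oriented Programming row: 563 men, 135 women, 31 and 3 failures.
enrolled, failed = gender_shares(563, 135, 31, 3)
print(enrolled, failed)  # 19.34 8.82
print("under-represented among failures" if failed < enrolled else "not under-represented")
```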

| Subject | Avg | Fail | Pass | Sub-Competencies |

|---|---|---|---|---|

| Foundation of the Structure and Transformation of Matter | 73.62 | 32 | 75 | Explanation of the functioning of systems in engineering and sciences, scientific thought, problem evaluation |

| Mathematical Thinking | 67.51 | 29 | 45 | Explanation of the functioning of systems in engineering and sciences, scientific thought, understanding others’ codes, problem evaluation |

| Object-Oriented Computational Thinking | 69.96 | 26 | 43 | Application of standards and norms, demonstration of the functioning of systems in engineering and sciences, cutting-edge technologies, problem evaluation, decision making, implementation of actions |

| Computational Modeling of Movement | 71.58 | 26 | 41 | Scientific thought, demonstration of the functioning of systems in engineering and sciences, explanation of the functioning of systems in engineering and sciences, systemic thinking, written language, problem evaluation, decision making, implementation of actions |

| Engineering and Science Modeling | 80.12 | 20 | 95 | Explanation of the functioning of systems in engineering and sciences, problem evaluation, self-knowledge |

| Computational Thinking for Engineering | 75.82 | 20 | 76 | Application of standards and norms, demonstration of the functioning of systems in engineering and sciences, cutting-edge technologies, problem evaluation, decision making, implementation of actions |

| Computational Modeling Applying Conservation Laws | 70.59 | 15 | 40 | Scientific thought, demonstration of the functioning of systems in engineering and sciences, explanation of the functioning of systems in engineering and sciences, systemic thinking, written language, problem evaluation, decision making, implementation of actions |

| Object-Oriented Programming | 62.59 | 9 | 13 | Problem evaluation, decision making, cutting-edge technologies |

| Computational Biology Analysis | 68.73 | 7 | 16 | Scientific thought, understanding others’ codes, determination of patterns, application of sustainability principles |

| Intermediate Mathematical Modeling | 79.04 | 5 | 20 | Problem evaluation, understanding others’ codes, explanation of the functioning of systems in engineering and sciences |

| Sub-Competency | Level | Evaluations | Ranking |

|---|---|---|---|

| Systemic thinking | A | 16 | 11 |

| Scientific thought | A | 13 | 3 |

| Written language | A | 10 | 13 |

| Understanding others’ codes | A | 10 | 8 |

| Problem evaluation | A | 10 | 1 |

| Critical thinking | A | 10 | 9 |

| Demonstration of the functioning of systems in engineering and sciences | A | 8 | 2 |

| Cutting-edge technologies | A | 7 | 7 |

| Decision making | A | 7 | 4 |

| Explanation of the functioning of systems in engineering and sciences | A | 7 | 5 |

| Digital culture | A | 6 | 21 |

| Implementation of actions | A | 6 | 6 |

| Explanation of the functioning of systems in engineering and sciences | B | 4 | 10 |

| Application of standards and norms | A | 4 | 12 |

| Wellness and self-regulation | A | 3 | 22 |

| Sub-Competency | Level | Failed |

|---|---|---|

| Problem evaluation | A | 367 |

| Scientific thought | A | 297 |

| Demonstration of the functioning of systems in engineering and sciences | A | 196 |

| Explanation of the functioning of systems in engineering and sciences | A | 192 |

| Decision making | A | 182 |

| Implementation of actions | A | 90 |

| Cutting-edge technologies | A | 89 |

| Critical thinking | A | 79 |

| Systemic thinking | A | 67 |

| Understanding others’ codes | A | 56 |

| Written language | A | 37 |

| Explanation of the functioning of systems in engineering and sciences | B | 34 |

| Application of standards and norms | A | 26 |

| Application of sustainability principles | A | 3 |

| Innovation | A | 1 |

| Implementation of actions | B | 1 |

| Competencies | Evaluated Sub-Competencies | Ranking |

|---|---|---|

| Application of international standards | Application of standards and norms—A, application of sustainability principles—A, application of sustainability principles—B, application of standards and norms | 12, 14, 36, 37 |

| Commitment to sustainability | Application of standards and norms—A, application of sustainability principles—A | 12, 14 |

| Communication | Understanding others’ codes—A, written language—A, oral language—A | 8, 13, 38 |

| Data analysis in engineering and science | Interpretation of variables—A, development of scenarios—A, development of scenarios—B, interpretation of variables—B | 17, 19, 20, 18 |

| Development of business architectures | Requirements analysis—A, incorporation of technology—A | 31, 33 |

| Digital transformation | Digital culture—A, cutting-edge technologies—A | 21, 7 |

| Embedded systems | Managing technological design projects—A | 39 |

| Ethical and citizen commitment | Recognition and empathy—A, citizen commitment for social transformation—A | 23, 40 |

| Foundation of computer systems | Demonstration of the functioning of systems in engineering and sciences—A, explanation of the functioning of systems in engineering and sciences—A, explanation of the functioning of systems in engineering and sciences—B, demonstration of the functioning of systems in engineering and sciences—B | 2, 5, 10, 27 |

| Foundation of engineering and science systems | Explanation of the functioning of systems in engineering and sciences—A, demonstration of the functioning of systems in engineering and sciences—A, explanation of the functioning of systems in engineering and sciences—B | 5, 2, 10 |

| Generation of computational models for data analysis | Determination of patterns—A, determination of patterns—B, interpretation of variables—B, development of scenarios—B | 16, 34, 18, 20 |

| Innovative entrepreneurship | Innovation—A | 26 |

| Problem solving | Problem evaluation—A, decision making—A, implementation of actions—A | 1, 4, 6 |

| Problem solving with computing | Problem evaluation—A, decision making—A, implementation of actions—A, problem evaluation—B, decision making—B, implementation of actions—B | 1, 4, 6, 30, 29, 25 |

| Reasoning for complexity | Scientific thought—A, systemic thinking—A, critical thinking—A | 3, 11, 9 |

| Self-knowledge and management | Wellness and self-regulation—A, self-knowledge—A | 22, 15 |

| Social intelligence | Diversity—A, collaboration—A | 24, 41 |

| Software systems development | Definition of software requirements—A, application of software methodologies—A, computer project management—A | 28, 32, 35 |

| Program | Semester | Mean 2019 | Weighted Mean 2019 | Mean 2020 | Weighted Mean 2020 |

|---|---|---|---|---|---|

| ICT | 1 | 85.197 | 85.512 | 89.671 | 90.185 |

| ICT | 2 | 89.697 | 89.870 | 89.986 | 89.874 |

| ICT | 3 | 88.488 | 88.583 | 90.345 | 90.578 |

| ICT | 4 | 70.502 | 70.788 | 82.094 | 81.707 |

| ICT | 5 | 66.775 | 66.570 | - | - |

| ICT | 6 | 85.485 | 83.921 | - | - |

| IBQ | 1 | 86.377 | 86.889 | 90.355 | 90.810 |

| IBQ | 2 | 89.926 | 89.430 | 91.154 | 91.099 |

| IBQ | 3 | 86.524 | 85.662 | 89.409 | 88.580 |

| IBQ | 4 | 83.256 | 82.572 | 81.973 | 82.249 |

| IBQ | 5 | 83.535 | 82.228 | - | - |

| IBQ | 6 | 86.731 | 86.944 | - | - |

| Subject | SD | Avg | Fail | Pass | Sub-Competencies |

|---|---|---|---|---|---|

| Week 18 Engineering—1 | 18.33 | 96.52 | 29 | 805 | Self-knowledge |

| Week 18 Engineering—2 | 13.69 | 98.09 | 15 | 769 | Self-knowledge |

| Entrepreneurship with Purpose | 17.22 | 96.94 | 21 | 665 | Conscious entrepreneurship |

| Turn Your Wellness On | 10.51 | 98.88 | 4 | 353 | Wellness and self-regulation |

| My Selfie Today | 17.81 | 96.74 | 6 | 178 | Self-knowledge |

| A Trip to My Interior | 19.99 | 95.86 | 6 | 139 | Self-knowledge |

| Me, You, Others, Us | 12.04 | 98.54 | 2 | 135 | Diversity |

| Meaningful Theater | 14.67 | 97.81 | 2 | 89 | Innovation |

| Induction to the Social Service | 28.81 | 91.30 | 2 | 21 | Recognition and empathy |

| 18th Week All Entries | 47.05 | 69.57 | 7 | 16 | Self-knowledge |

| Week 18 Multientry Exploration—3 | 22.36 | 95.00 | 1 | 19 | Self-knowledge |

| Semester | Subject | SD | Avg | Fail | Pass | Sub-Competencies |

|---|---|---|---|---|---|---|

| 4 | Week 18 Engineering ICT—4 | 6.50 | 99.57 | 2 | 466 | Self-knowledge |

| 5 | Week 18 Focus ITC—5 | 15.12 | 97.66 | 10 | 418 | Self-knowledge |

| 3 | Induction to the Social Service | 13.77 | 98.07 | 4 | 203 | Recognition and empathy |

| 5 | Next Level: Competitive Programming Expert | 10.19 | 98.95 | 2 | 189 | Collaboration, computational algorithm optimization |

| 3 | CS Tool—Mastering Programming | 0.00 | 100.00 | 0 | 139 | Application of standards and norms |

| 5 | Web World Connecting | 12.72 | 98.36 | 2 | 120 | Cutting-edge technologies, software component development |

| 5 | Diversity in a Globalized World | 20.07 | 95.83 | 5 | 115 | Diversity |

| 6 | One Click Away from your Professional Life | 12.86 | 98.33 | 2 | 118 | Self-knowledge |

| 4 | CS Tool—Mastering Programming | 16.01 | 97.39 | 3 | 112 | Application of standards and norms |

| 4 | CS Tool—Mastering Analytics | 9.76 | 99.05 | 1 | 104 | Interpretation of variables |

| 6 | Web World Connecting | 10.23 | 98.95 | 1 | 94 | Software component development, cutting-edge technologies |

| 4 | Ikigai: Building your Dreams | 14.51 | 97.87 | 2 | 92 | Wellness and self-regulation |

| 6 | Diversity in a Globalized World | 27.80 | 91.67 | 7 | 77 | Diversity |

| 5 | CS Tool—Mastering Programming | 10.98 | 98.80 | 1 | 82 | Application of standards and norms |

| 3 | CS Tool—Mastering Analytics | 26.35 | 92.59 | 6 | 75 | Interpretation of variables |

| 3 | Diversity in a Globalized World | 11.32 | 98.72 | 1 | 77 | Diversity |

| 4 | Multigenerational Leadership | 19.73 | 96.00 | 3 | 72 | Effectiveness in negotiation |

| 4 | The Art of Emotion | 11.55 | 98.67 | 1 | 74 | Understanding others’ codes |

| 5 | CS Tool—Mastering Analytics | 11.55 | 98.67 | 1 | 74 | Interpretation of variables |

| 3 | Multigenerational Leadership | 0.00 | 100.00 | 0 | 75 | Effectiveness in negotiation |

| 4 | The Role of Integrity in Practicing my Profession | 16.44 | 97.26 | 2 | 71 | Integrity |

| 4 | Diversity in a Globalized World | 20.69 | 95.59 | 3 | 65 | Diversity |

| 4 | Me, You, Others, Us | 12.22 | 98.51 | 1 | 66 | Diversity |

| 6 | CS Tool—Mastering Programming | 24.58 | 93.65 | 4 | 59 | Application of standards and norms |

| Sub-Competency | Level | False | True | Total |

|---|---|---|---|---|

| Self-knowledge | A | 835 | 1196 | 2031 |

| Conscious entrepreneurship | A | 24 | 666 | 690 |

| Wellness and self-regulation | A | 10 | 361 | 371 |

| Diversity | A | 6 | 150 | 156 |

| Innovation | A | 3 | 105 | 108 |

| Recognition and empathy | A | 2 | 27 | 29 |

| Application of standards and norms | A | 4 | 11 | 15 |

| Interpretation of variables | A | 0 | 8 | 8 |

| Collaboration | A | 0 | 7 | 7 |

| Understanding others’ codes | A | 0 | 7 | 7 |

| Effectiveness in negotiation | A | 1 | 6 | 7 |

| Digital culture | A | 1 | 2 | 3 |

| Sub-Competency | Level | False | True | Total |

|---|---|---|---|---|

| Self-knowledge | A | 491 | 933 | 1424 |

| Diversity | A | 16 | 562 | 578 |

| Application of standards and norms | A | 20 | 547 | 567 |

| Wellness and self-regulation | A | 13 | 414 | 427 |

| Collaboration | A | 7 | 360 | 367 |

| Interpretation of variables | A | 6 | 294 | 300 |

| Effectiveness in negotiation | A | 5 | 239 | 244 |

| Computational algorithm optimization | B | 1 | 225 | 226 |

| Recognition and empathy | A | 9 | 216 | 225 |

| Software component development | A | 3 | 214 | 217 |

| Cutting-edge technologies | A | 3 | 214 | 217 |

| Understanding others’ codes | A | 4 | 208 | 212 |

| Citizen commitment for social transformation | B | 4 | 158 | 162 |

| Integrity | B | 5 | 146 | 151 |

| Conscious entrepreneurship | A | 10 | 63 | 73 |

| Wellness and self-regulation | B | 2 | 32 | 34 |

| Digital culture | A | 0 | 8 | 8 |

| Innovation | A | 1 | 6 | 7 |

| Self-knowledge | B | 0 | 2 | 2 |

|  | student.fte | suspensions_count |

|---|---|---|

| student.fte | 1.000000 | −0.035677 |

| suspensions_count | −0.035677 | 1.000000 |

| fte_category | student_grades.final_numeric_afterAdjustment |

|---|---|

| Above 1 | 87.186581 |

| Below 1 | 88.042601 |

| Exactly 1 | 90.308793 |
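The grouping above splits students by whether `student.fte` is below, exactly at, or above 1 (the scheduled load, per Appendix B) and compares average final grades; the column name indicates grades after an adjustment that this section does not describe. A Python sketch of that grouping follows; the function names, the floating-point tolerance used to decide "Exactly 1", and the sample rows are assumptions.

```python
from collections import defaultdict
from statistics import mean


def fte_category(fte: float, tol: float = 1e-9) -> str:
    """Bucket a full-time-equivalent load relative to the scheduled load of 1."""
    if abs(fte - 1.0) <= tol:
        return "Exactly 1"
    return "Above 1" if fte > 1.0 else "Below 1"


def mean_grade_by_fte(rows: list[tuple[float, float]]) -> dict[str, float]:
    """rows: (student.fte, final grade) pairs -> mean grade per FTE category."""
    groups: dict[str, list[float]] = defaultdict(list)
    for fte, grade in rows:
        groups[fte_category(fte)].append(grade)
    return {category: mean(grades) for category, grades in sorted(groups.items())}


# Hypothetical rows, not data from the study.
sample = [(1.0, 92.0), (1.0, 88.0), (0.75, 85.0), (1.25, 80.0), (1.25, 84.0)]
print(mean_grade_by_fte(sample))
# {'Above 1': 82.0, 'Below 1': 85.0, 'Exactly 1': 90.0}
```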


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Solera, E.; Menasalvas, E.; Martín, M.; Zorrilla, M.; Valdés-Ramírez, D.; Zavala, G.; Monroy, R. Evaluating Competency Development and Academic Outcomes: Insights from Six Semesters of Data-Driven Analysis. Educ. Sci. 2025, 15, 513. https://doi.org/10.3390/educsci15040513
