Mapping the Evaluation of Problem-Oriented Pedagogies in Higher Education: A Systematic Literature Review

Abstract

1. Introduction

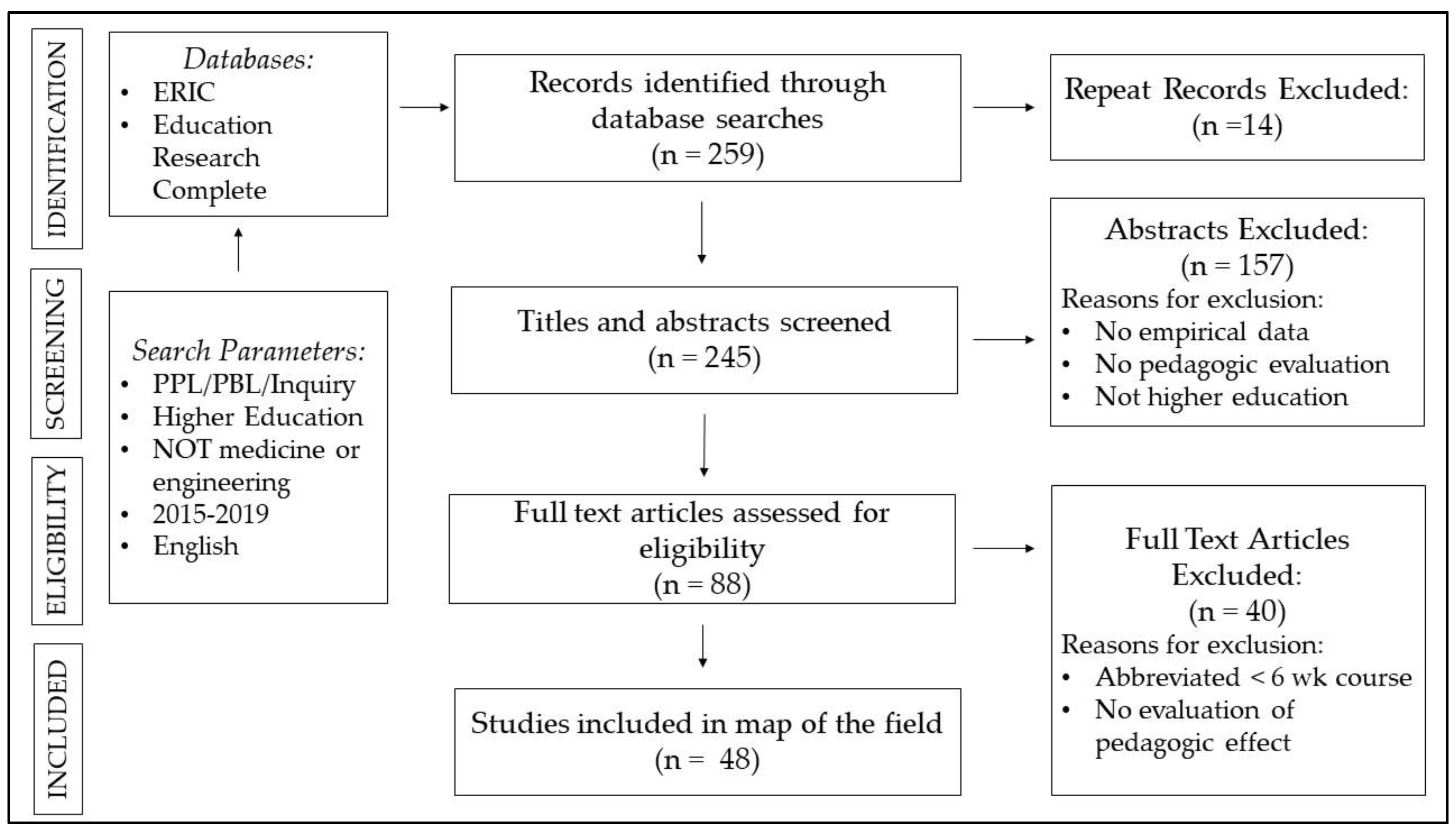

2. Method: A Systematic Literature Review

2.1. Search Methods

2.2. Screening Criteria

- Began with a complex situation, ‘wicked’ problem, or authentic challenge;

- Necessitated groupwork as an integral aspect of the learning design;

- Were sustained over the course of a semester (twelve to sixteen weeks).

2.3. Analysis

3. Results

3.1. Methodological Approaches and Common Methods

3.2. Scale and Scope

3.3. Evaluation Foci

4. Discussion

4.1. Prioritisation of Qualification Outcomes

4.2. Limitations in Scale and Scope

4.3. Accountability and Enhancement?

4.4. Limitations and Further Research

5. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- The Bologna Process and the European Higher Education Area. Available online: https://ec.europa.eu/education/policies/higher-education/bologna-process-and-european-higher-education-area_en (accessed on 19 February 2019).

- About Higher Education Policy. Available online: https://ec.europa.eu/education/policies/higher-education/relevant-and-high-quality-higher-education_en (accessed on 19 February 2019).

- Development of Skills. Available online: https://ec.europa.eu/education/policies/european-policy-cooperation/development-skills_en (accessed on 19 February 2019).

- European Higher Education Area. Paris Communiqué. Available online: http://www.ehea.info/Upload/document/ministerial_declarations/EHEAParis2018_Communique_final_952771.pdf (accessed on 19 February 2019).

- Benham Rennick, J. Learning that makes a difference: Pedagogy and practice for learning abroad. Teach. Learn. Inq. 2015, 3, 71–88.

- Savin-Baden, M. Using problem-based learning: New constellations for the 21st Century. J. Excell. Coll. Teach. 2014, 25, 1–24. Available online: https://core.ac.uk/download/pdf/42594749.pdf (accessed on 25 March 2019).

- Andersen, A.S.; Kjeldsen, T.H. Theoretical Foundations of PPL at Roskilde University. In The Roskilde Model: Problem-Oriented Learning and Project Work; Andersen, A.S., Heilesen, S.B., Eds.; Springer: Cham, Switzerland, 2015.

- Savin-Baden, M. Problem-Based Learning in Higher Education: Untold Stories; The Society for Research into Higher Education & Open University Press: Buckingham, UK, 2000.

- Yusof, K.M.; Hassan, S.A.H.S.; Jamaludin, M.Z.; Harun, N.F. Cooperative Problem-based Learning (CPBL): Framework for Integrating Cooperative Learning and Problem-based Learning. Procedia Soc. Behav. Sci. 2012, 56, 223–232.

- Shavelson, R.; Zlatkin-Troitschanskaia, O.; Mariño, J. International performance assessment of learning in higher education (iPAL): Research and development. In Assessment of Learning Outcomes in Higher Education: Cross National Comparisons and Perspectives; Zlatkin-Troitschanskaia, O., Toepper, M., Pant, H.A., Lautenbach, C., Kuhn, C., Eds.; Springer International Publishing AG: Cham, Switzerland, 2018.

- Firn, J. ‘Capping off’ the development of graduate capabilities in the final semester unit for biological science students: Review and recommendations. J. Univ. Teach. Learn. Pract. 2015, 12, 1–16. Available online: http://ro.uow.edu.au/jutlp/vol12/iss3/3 (accessed on 11 August 2018).

- Standards and Guidelines for Quality Assurance in the European Higher Education Area (ESG). Available online: https://enqa.eu/wp-content/uploads/2015/11/ESG_2015.pdf (accessed on 5 May 2019).

- Manatos, M.; Rosa, M.; Sarrico, C. The perceptions of quality management by universities’ internal stakeholders: Support, adaptation or resistance? In The University as a Critical Institution; Deem, R., Eggins, H., Eds.; Sense Publishers: Rotterdam, The Netherlands, 2017.

- Evans, C.; Howson, C.K.; Forsythe, A. Making sense of learning gain in higher education. High. Educ. Pedagog. 2018, 3, 1–45.

- Biesta, G. Good Education in an Age of Measurement: Ethics, Politics, Democracy; Routledge: New York, NY, USA, 2010.

- Biesta, G. Good Education in an age of measurement: On the Need to Reconnect with the Question of Purpose in Education. Educ. Assess. Evaluat. Account. 2009, 21, 33–46.

- Peters, M. Global university rankings: Metrics, performance, governance. Educ. Philos. Theory 2019, 51, 5–13.

- Jin, J.; Bridges, S. Qualitative research in PBL in health sciences education: A review. Interdiscip. J. Probl. Based Learn. 2016, 10, 1–28.

- Fink, A. Conducting Research Literature Reviews: From the Internet to Paper, 3rd ed.; SAGE Publications Ltd.: Thousand Oaks, CA, USA, 2010.

- Booth, A.; Papaioannou, D.; Sutton, A. Systematic Approaches to a Successful Literature Review; SAGE Publications Ltd.: London, UK, 2012.

- Denscombe, M. The Good Research Guide: For Small-Scale Social Research Projects, 3rd ed.; Open University Press: Maidenhead, UK, 2007.

- Alt, D. Assessing the contribution of a constructivist learning environment to academic self-efficacy in higher education. Learn. Environ. Res. 2015, 18, 47–67.

- Andersen, A.S.; Wulf-Andersen, T.; Heilesen, S.B. The evolution of the Roskilde model in Denmark. Counc. Undergrad. Res. Q. 2015, 36, 22–28.

- Brassler, M.; Dettmers, J. How to enhance interdisciplinary competence—Interdisciplinary problem-based learning versus interdisciplinary project-based learning. Interdiscip. J. Probl. Based Learn. 2017, 11, 12. Available online: https://doi.org/10.7771/1541-5015.1686 (accessed on 6 February 2019).

- Carvalho, A. The impact of PBL on transferable skills development in management education. Innov. Educ. Teach. Int. 2016, 53, 35–47.

- Chng, E.; Yew, E.; Schmidt, H. To what extent do tutor-related behaviours influence student learning in PBL? Adv. Health Sci. Educ. 2015, 20, 5–21.

- Fujinuma, R.; Wendling, L. Repeating knowledge application practice to improve student performance in a large, introductory science course. Int. J. Sci. Educ. 2015, 37, 2906–2922.

- González-Jiménez, E.; Enrique-Mirón, C.; González-García, J.; Fernández-Carballo, D. Problem-based learning in prenursing courses. Nurse Educ. 2016, 41, E1–E3.

- Lucas, N.; Goodman, F. Well-being, leadership, and positive organizational scholarship: A case study of project-based learning in higher education. J. Leadersh. Educ. 2015, 14, 138–152.

- Luo, Y. The influence of problem-based learning on learning effectiveness in students’ of varying learning abilities within physical education. Innov. Educ. Teach. Int. 2019, 56, 3–13.

- Özbıçakçı, Ş.; Gezer, N.; Bilik, Ö. Comparison of effects of training programs for final year nursing students in Turkey: Differences in self-efficacy with regard to information literacy. Nurse Educ. Today 2015, 35, e73–e77.

- Piercey, V.; Militzer, E. An inquiry-based quantitative reasoning course for business students. Primus Probl. Resour. Issues Math. Undergrad. Stud. 2017, 27, 693–706.

- Santicola, C. Academic controversy in macroeconomics: An active and collaborative method to increase student learning. Am. J. Bus. Educ. 2015, 8, 177–184.

- Valenzuela, L.; Jerez, O.; Hasbún, B.; Pizarro, V.; Valenzuela, G.; Orsini, C. Closing the gap between business undergraduate education and the organisational environment: A Chilean case study applying experiential learning theory. Innov. Educ. Teach. Int. 2018, 55, 566–575.

- Yardimci, F.; Bektaş, M.; Özkütük, N.; Muslu, G.; Gerçeker, G.; Başbakkal, Z. A study of the relationship between the study process, motivation resources, and motivation problems of nursing students in different educational systems. Nurse Educ. Today 2017, 48, 13–18.

- Zafra-Gómez, J.; Román-Martínez, I.; Gómez-Miranda, M. Measuring the impact of inquiry-based learning on outcomes and student satisfaction. Assess. Evaluat. High. Educ. 2015, 40, 1050–1069.

- Assen, J.; Meijers, F.; Otting, H.; Poell, R. Explaining discrepancies between teacher beliefs and teacher interventions in a problem-based learning environment: A mixed methods study. Teach. Teach. Educ. 2016, 60, 12–23.

- Carlisle, S.; Gourd, K.; Rajkhan, S.; Nitta, K. Assessing the Impact of Community-Based Learning on Students: The Community Based Learning Impact Scale (CBLIS). J. Serv. Learn. High. Educ. 2017, 6, 1–19.

- Cremers, P. Student-framed inquiry in a multidisciplinary bachelor course at a Dutch university of applied sciences. Counc. Undergrad. Res. Q. 2017, 37, 40–45.

- Frisch, J.; Jackson, P.; Murray, M. Transforming undergraduate biology learning with inquiry-based instruction. J. Comput. High. Educ. 2018, 30, 211–236.

- Hüttel, H.; Gnaur, D. If PBL is the answer, then what is the problem? J. Probl. Based Learn. High. Educ. 2017, 5, 1–21.

- Kelly, R.; McLoughlin, E.; Finlayson, O. Analysing student written solutions to investigate if problem-solving processes are evident throughout. Int. J. Sci. Educ. 2016, 38, 1766–1784.

- Laursen, S.; Hassi, M.; Hough, S. Implementation and outcomes of inquiry-based learning in mathematics content courses for pre-service teachers. Int. J. Math. Educ. Sci. Technol. 2016, 47, 256–275.

- Mohamadi, Z. Comparative effect of project-based learning and electronic project-based learning on the development and sustained development of English idiom knowledge. J. Comput. High. Educ. 2018, 30, 363–385.

- Rossano, S.; Meerman, A.; Kesting, T.; Baaken, T. The Relevance of Problem-based Learning for Policy Development in University-Business Cooperation. Eur. J. Educ. 2016, 51, 40–55.

- Serdà, B.; Alsina, Á. Knowledge-transfer and self-directed methodologies in university students’ learning. Reflective Pract. 2018, 19, 573–585.

- Tarhan, L.; Ayyıldız, Y. The Views of Undergraduates about Problem-based Learning Applications in a Biochemistry Course. J. Biol. Educ. 2015, 49, 116–126.

- Thomas, I.; Depasquale, J. Connecting curriculum, capabilities and careers. Int. J. Sustain. High. Educ. 2016, 17, 738–755.

- Virtanen, J.; Rasi, P. Integrating Web 2.0 Technologies into Face-to-Face PBL to Support Producing, Storing, and Sharing Content in a Higher Education Course. Interdiscip. J. Probl. Based Learn. 2017, 11, 1–11.

- Werder, C.; Thibou, S.; Simkins, S.; Hornsby, K.; Legg, K.; Franklin, T. Co-inquiry with students: When shared questions lead the way. Teach. Learn. Inq. 2016, 4, 1–15.

- Wijnen, M.; Loyens, S.; Smeets, G.; Kroeze, M.; Van der Molen, H. Students’ and teachers’ experiences with the implementation of problem-based learning at a university law school. Interdiscip. J. Probl. Based Learn. 2017, 11, 1–10.

- Zhao, S. The problem of constructive misalignment in international business education: A three-stage integrated approach to enhancing teaching and learning. J. Teach. Int. Bus. 2016, 27, 179–196.

- Anthony, G.; Hunter, J.; Hunter, R. Prospective teachers development of adaptive expertise. Teach. Teach. Educ. 2015, 49, 108–117.

- Aulls, M.; Magon, J.K.; Shore, B. The distinction between inquiry-based instruction and non-inquiry-based instruction in higher education: A case study of what happens as inquiry in 16 education courses in three universities. Teach. Teach. Educ. 2015, 51, 147–161.

- Ayala, R.; Koch, T.; Messing, H. Understanding the prospect of success in professional training: An ethnography into the assessment of problem-based learning. Ethnogr. Educ. 2019, 14, 65–83.

- Christensen, G. A poststructuralist view on student’s project groups: Possibilities and limitations. Psychol. Learn. Teach. 2016, 15, 168–179.

- Hendry, G.; Wiggins, S.; Anderson, T. The discursive construction of group cohesion in problem-based learning tutorials. Psychol. Learn. Teach. 2016, 15, 180–194.

- Hull, R.B.; Kimmel, C.; Robertson, D.; Mortimer, M. International field experiences promote professional development for sustainability leaders. Int. J. Sustain. High. Educ. 2016, 17, 86–104.

- Jin, J. Students’ silence and identity in small group interactions. Educ. Stud. 2017, 43, 328–342.

- Korpi, H.; Peltokallio, L.; Piirainen, A. Problem-Based Learning in Professional Studies from the Physiotherapy Students’ Perspective. Interdiscip. J. Probl. Based Learn. 2018, 13, 1–18.

- Müller, T.; Henning, T. Getting started with PBL—A reflection. Interdiscip. J. Probl. Based Learn. 2017, 11, 8.

- Oliver, K.; Oesterreich, H.; Aranda, R.; Archeleta, J.; Blazer, C.; de la Cruz, K.; Martinez, D.; McConnell, J.; Osta, M.; Parks, L.; et al. ‘The sweetness of struggle’: Innovation in physical education teacher education through student-centered inquiry as curriculum in a physical education methods course. Phys. Educ. Sport Pedagog. 2015, 20, 97–115.

- Podeschi, R. Building I.S. Professionals through a Real-World Client Project in a Database Application Development Course. Inf. Syst. Educ. J. 2016, 14, 34–40.

- Robinson, L. Age difference and face-saving in an inter-generational problem-based learning group. J. Furth. High. Educ. 2016, 40, 466–485.

- Robinson, L.; Harris, A.; Burton, R. Saving face: Managing rapport in a Problem-Based Learning group. Active Learn. High. Educ. 2015, 16, 11–24.

- Rosander, M.; Chiriac, E. The purpose of tutorial groups: Social influence and the group as means and objective. Psychol. Learn. Teach. 2016, 15, 155–167.

- Ryberg, T.; Davidsen, J.; Hodgson, V. Understanding nomadic collaborative learning groups. Br. J. Educ. Technol. 2018, 49, 235–247.

- Samson, P. Fostering student engagement: Creative problem-solving in small group facilitations. Collect. Essays Learn. Teach. 2015, 8, 153–164. Available online: https://files.eric.ed.gov/fulltext/EJ1069715.pdf (accessed on 6 February 2019).

- Thorsted, A.C.; Bing, R.G.; Kristensen, M. Play as mediator for knowledge-creation in Problem Based Learning. J. Probl. Based Learn. High. Educ. 2015, 3, 63–77.

- Savin-Baden, M. Disciplinary differences or modes of curriculum practice? Who promised to deliver what in problem-based learning? Biochem. Mol. Biol. Educ. 2003, 31, 338–343.

- Feehily, R. Problem-based learning and international commercial dispute resolution in the Indian Ocean. Law Teach. 2018, 52, 17–37.

- Boshier, R. Why is the scholarship of teaching and learning such a hard sell? High. Educ. Res. Dev. 2009, 28, 1–15.

- Truly Civic: Strengthening the Connection Between Universities and their Places. The Final Report of the UPP Foundation Civic University Commission. Available online: https://upp-foundation.org/wp-content/uploads/2019/02/Civic-University-Commission-Final-Report.pdf (accessed on 5 May 2019).

- Unterhalter, E. Negative capability? Measuring the unmeasurable in education. Comp. Educ. 2017, 53, 1–16.

- Dewey, J. Democracy and Education: An Introduction to the Philosophy of Education; The Free Press: New York, NY, USA, 1916.

- Acton, R. Innovative Learning Spaces in Higher Education: Perception, Pedagogic Practice and Place. Ph.D. Thesis, James Cook University, Townsville, Australia, 2018.

- Kahu, E.; Nelson, K. Student engagement in the educational interface: Understanding the mechanisms of student success. High. Educ. Res. Dev. 2018, 37, 58–71.

- Bell, A. Students as co-inquirers in Australian higher education: Opportunities and challenges. Teach. Learn. Inq. 2016, 4, 1–10.

- Abbott, A. The Future of Knowing. “Brunch with Books” Sponsored by the University of Chicago Alumni Association and the University of Chicago Library. 2009. Available online: http://home.uchicago.edu/aabbott/Papers/futurek.pdf (accessed on 26 August 2019).

- Sutton-Brown, C. Photovoice: A methodological guide. Photogr. Cult. 2014, 7, 169–185.

- Wang, C.; Burris, M.A. Photovoice: Concept, methodology, and use for participatory needs assessment. Health Educ. Behav. 1997, 24, 369–387.

- Davies, R.; Dart, J. The ‘Most Significant Change’ (MSC) Technique: A Guide to its Use. 2005. Available online: https://www.kepa.fi/tiedostot/most-significant-change-guide.pdf (accessed on 25 July 2013).

- Acton, R.; Riddle, M.; Sellers, W. A review of post-occupancy evaluation tools. In School Space and Its Occupation: The Conceptualisation and Evaluation of New Generation Learning Spaces; Alterator, S., Deed, C., Eds.; Brill Sense Publishers: Leiden, The Netherlands, 2018; pp. 203–221.

| Inclusion Criteria | Type |

|---|---|

| Evaluates problem-based, project-based or inquiry-based pedagogy | Program/Intervention |

| Includes primary empirical data | Research Design |

| University or college context | Setting |

| Published from 2015–current | Publication Date |

| Exclusion Criteria | Type |

| Conceptual, descriptive or theoretical articles | Content |

| Includes school students | Participants |

| Evaluates digital technologies, online implementation or tools | Research Design |

| Discipline of medicine or engineering | Setting |

| Quantitative (n = 15) | Mixed Methods (n = 16) | Qualitative (n = 17) |

|---|---|---|

| [22] Alt (2015); [23] Andersen, Wulf-Andersen and Heilesen (2015); [24] Brassler and Dettmers (2017); [25] Carvalho (2016); [26] Chng, Yew and Schmidt (2015); [27] Fujinuma and Wendling (2015); [28] González-Jiménez, Enrique-Mirón, González-García and Fernández-Carballo (2016); [29] Lucas and Goodman (2015); [30] Luo (2019); [31] Özbıçakçı, Gezer and Bilik (2015); [32] Piercey and Militzer (2017); [33] Santicola (2015); [34] Valenzuela, Jerez, Hasbún, Pizarro, Valenzuela and Orsini (2018); [35] Yardimci, Bektaş, Özkütük, Muslu, Gerçeker and Başbakkal (2017); [36] Zafra-Gómez, Román-Martínez and Gómez-Miranda (2015) | [37] Assen, Meijers, Otting and Poell (2016); [38] Carlisle, Gourd, Rajkhan and Nitta (2017); [39] Cremers (2017); [40] Frisch, Jackson and Murray (2018); [41] Hüttel and Gnaur (2017); [42] Kelly, McLoughlin and Finlayson (2016); [43] Laursen, Hassi and Hough (2016); [44] Mohamadi (2018); [45] Rossano, Meerman, Kesting and Baaken (2016); [46] Serdà and Alsina (2018); [47] Tarhan and Ayyıldız (2015); [48] Thomas and Depasquale (2016); [49] Virtanen and Rasi (2017); [50] Werder, Thibou, Simkins, Hornsby, Legg and Franklin (2016); [51] Wijnen, Loyens, Smeets, Kroeze and Van der Molen (2017); [52] Zhao (2016) | [53] Anthony, Hunter and Hunter (2015); [54] Aulls, Magon and Shore (2015); [55] Ayala, Koch and Messing (2019); [56] Christensen (2016); [57] Hendry, Wiggins and Anderson (2016); [58] Hull, Kimmel, Robertson and Mortimer (2016); [59] Jin (2017); [60] Korpi, Peltokallio and Piirainen (2018); [61] Müller and Henning (2017); [62] Oliver, Oesterreich, Aranda, Archeleta, Blazer, de la Cruz, Martinez, McConnell, Osta, Parks and Robinson (2015); [63] Podeschi (2016); [64] Robinson (2016); [65] Robinson, Harris and Burton (2015); [66] Rosander and Chiriac (2016); [67] Ryberg, Davidsen and Hodgson (2018); [68] Samson (2015); [69] Thorsted, Bing and Kristensen (2015) |

| Study | Survey/Questionnaire | Interview | Focus Groups | Student Reflection | Observations | Student Achievement | Staff Notes/Reflections | Documents | Photos | Institutional Evaluation | External Partner Evaluation | Case Study |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Alt (2015) | ✓ | |||||||||||

| Andersen et al. (2015) | ✓ | |||||||||||

| Anthony et al. (2015) | ✓ | ✓ | ✓ | ✓ | ||||||||

| Assen et al. (2016) | ✓ | ✓ | ✓ | |||||||||

| Aulls et al. (2015) | ✓ | ✓ | ✓ | ✓ | ||||||||

| Ayala et al. (2019) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Brassler and Dettmers (2017) | ✓ | |||||||||||

| Carlisle et al. (2017) | ✓ | ✓ | ||||||||||

| Carvalho (2016) | ✓ | |||||||||||

| Chng et al. (2015) | ✓ | ✓ | ||||||||||

| Christensen (2016) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Cremers (2017) | ✓ | |||||||||||

| Frisch et al. (2018) | ✓ | ✓ | ✓ | |||||||||

| Fujinuma and Wendling (2015) | ✓ | ✓ | ||||||||||

| González-Jiménez et al. (2016) | ✓ | ✓ | ✓ | |||||||||

| Hendry et al. (2016) | ✓ | ✓ | ||||||||||

| Hull et al. (2016) | ✓ | |||||||||||

| Hüttel and Gnaur (2017) | ✓ | ✓ | ||||||||||

| Jin (2017) | ✓ | ✓ | ✓ | |||||||||

| Kelly et al. (2016) | ✓ | ✓ | ✓ | |||||||||

| Korpi et al. (2018) | ✓ | |||||||||||

| Laursen et al. (2016) | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| Lucas and Goodman (2015) | ✓ | |||||||||||

| Luo (2019) | ✓ | ✓ | ||||||||||

| Mohamadi (2018) | ✓ | ✓ | ||||||||||

| Müller and Henning (2017) | ✓ | ✓ | ✓ | |||||||||

| Oliver et al. (2015) | ✓ | ✓ | ✓ | |||||||||

| Piercey and Militzer (2017) | ✓ | ✓ | ||||||||||

| Podeschi (2016) | ✓ | ✓ | ||||||||||

| Robinson (2016) | ✓ | ✓ | ✓ | |||||||||

| Robinson et al. (2015) | ✓ | ✓ | ✓ | |||||||||

| Rosander and Chiriac (2016) | ✓ | |||||||||||

| Rossano et al. (2016) | ✓ | |||||||||||

| Ryberg et al. (2018) | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||

| Samson (2015) | ✓ | |||||||||||

| Santicola (2015) | ✓ | |||||||||||

| Serdà and Alsina (2018) | ✓ | ✓ | ||||||||||

| Tarhan and Ayyıldız (2015) | ✓ | ✓ | ||||||||||

| Thomas and Depasquale (2016) | ✓ | |||||||||||

| Thorsted et al. (2015) | ✓ | ✓ | ✓ | |||||||||

| Valenzuela et al. (2018) | ✓ | ✓ | ||||||||||

| Virtanen and Rasi (2017) | ✓ | ✓ | ||||||||||

| Werder et al. (2016) | ✓ | ✓ | ✓ | |||||||||

| Wijnen et al. (2017) | ✓ | ✓ | ||||||||||

| Yardimci et al. (2017) | ✓ | |||||||||||

| Zafra-Gómez et al. (2015) | ✓ | ✓ | ||||||||||

| Zhao (2016) | ✓ | ✓ | ✓ | |||||||||

| Özbıçakçı et al. (2015) | ✓ | |||||||||||

| Totals | 27 | 14 | 10 | 10 | 12 | 14 | 4 | 7 | 3 | 5 | 1 | 4 |

| Study | One Cohort, One Course, One Institution | Multiple Cohorts, One Course, One Institution | One Cohort, Multiple Courses, One Institution | Multiple Cohorts, Multiple Courses, One Institution | One Country, One Cohort, Multi-Institution | One Country, Multi-Institution | Multiple Countries, One Cohort, Multiple Institutions |

|---|---|---|---|---|---|---|---|

| Alt (2015) | ✓ | ||||||

| Andersen et al. (2015) | ✓ | ||||||

| Anthony et al. (2015) | ✓ | ||||||

| Assen et al. (2016) | ✓ | ||||||

| Aulls et al. (2015) | ✓ | ||||||

| Ayala et al. (2019) | ✓ | ||||||

| Brassler and Dettmers (2017) | ✓ | ||||||

| Carlisle et al. (2017) | ✓ | ||||||

| Carvalho (2016) | ✓ | ||||||

| Chng et al. (2015) | ✓ | ||||||

| Christensen (2016) | ✓ | ||||||

| Cremers (2017) | ✓ | ||||||

| Frisch et al. (2018) | ✓ | ||||||

| Fujinuma and Wendling (2015) | ✓ | ||||||

| González-Jiménez et al. (2016) | ✓ | ||||||

| Hendry et al. (2016) | ✓ | ||||||

| Hull et al. (2016) | ✓ | ||||||

| Hüttel and Gnaur (2017) | ✓ | ||||||

| Jin (2017) | ✓ | ||||||

| Kelly et al. (2016) | ✓ | ||||||

| Korpi et al. (2018) | ✓ | ||||||

| Laursen et al. (2016) | ✓ | ||||||

| Lucas and Goodman (2015) | ✓ | ||||||

| Luo (2019) | ✓ | ||||||

| Mohamadi (2018) | ✓ | ||||||

| Müller and Henning (2017) | ✓ | ||||||

| Oliver et al. (2015) | ✓ | ||||||

| Özbıçakçı et al. (2015) | ✓ | ||||||

| Piercey and Militzer (2017) | ✓ | ||||||

| Podeschi (2016) | ✓ | ||||||

| Robinson (2016) | ✓ | ||||||

| Robinson et al. (2015) | ✓ | ||||||

| Rosander and Chiriac (2016) | ✓ | ||||||

| Rossano et al. (2016) | ✓ | ||||||

| Ryberg et al. (2018) | ✓ | ||||||

| Samson (2015) | ✓ | ||||||

| Santicola (2015) | ✓ | ||||||

| Serdà and Alsina (2018) | ✓ | ||||||

| Tarhan and Ayyıldız (2015) | ✓ | ||||||

| Thomas and Depasquale (2016) | ✓ | ||||||

| Thorsted et al. (2015) | ✓ | ||||||

| Valenzuela et al. (2018) | ✓ | ||||||

| Virtanen and Rasi (2017) | ✓ | ||||||

| Werder et al. (2016) | ✓ | ||||||

| Wijnen et al. (2017) | ✓ | ||||||

| Yardimci et al. (2017) | ✓ | ||||||

| Zafra-Gómez et al. (2015) | ✓ | ||||||

| Zhao (2016) | ✓ | ||||||

| Totals | 17 | 12 | 4 | 4 | 5 | 5 | 1 |

| Number of Participants | ||||||||

|---|---|---|---|---|---|---|---|---|

| Study | <10 | 10–20 | 21–50 | 51–100 | 101–200 | 201–300 | 301–500 | >500 |

| Alt (2015) | 167 stu. | |||||||

| Andersen et al. (2015) | Instit. | |||||||

| Anthony et al. (2015) | 2 stu. | |||||||

| Assen et al. (2016) | 57 sta. | |||||||

| Aulls et al. (2015) | 16 sta. | |||||||

| Ayala et al. (2019) | 8 stu. | |||||||

| Brassler and Dettmers (2017) | 278 stu. | |||||||

| Carlisle et al. (2017) | 195 stu. | |||||||

| Carvalho (2016) | 120 stu. | |||||||

| Chng et al. (2015) | 714 stu. | |||||||

| Christensen (2016) | 75 stu. 4 sta. | |||||||

| Cremers (2017) | 58 grad. | |||||||

| Frisch et al. (2018) | 43 stu. | |||||||

| Fujinuma and Wendling (2015) | 401 stu. | |||||||

| González-Jiménez et al. (2016) | 150 stu. | |||||||

| Hendry et al. (2016) | 31 stu. | |||||||

| Hull et al. (2016) | 26 stu. | |||||||

| Hüttel and Gnaur (2017) | 46 stu. | |||||||

| Jin (2017) | 16 stu. 2 sta. | |||||||

| Kelly et al. (2016) | 95 stu. | |||||||

| Korpi et al. (2018) | 15 stu. | |||||||

| Laursen et al. (2016) | 544 stu. | |||||||

| Lucas and Goodman (2015) | 20 stu. | |||||||

| Luo (2019) | 140 stu. | |||||||

| Mohamadi (2018) | 90 stu. | |||||||

| Müller and Henning (2017) | 2 sta. | |||||||

| Oliver et al. (2015) | 11 stu. 2 sta. | |||||||

| Özbıçakçı et al. (2015) | 137 stu. | |||||||

| Piercey and Militzer (2017) | 216 stu. | |||||||

| Podeschi (2016) | 36 stu. ? part. | |||||||

| Robinson (2016) | 11 stu. | |||||||

| Robinson et al. (2015) | 11 stu. | |||||||

| Rosander and Chiriac (2016) | 147 stu. | |||||||

| Rossano et al. (2016) | 150 stu. + grad. | |||||||

| Ryberg et al. (2018) | 2 stu. groups | |||||||

| Samson (2015) | 1 stu. | |||||||

| Santicola (2015) | 34 stu. | |||||||

| Serdà and Alsina (2018) | 230 stu. 8 sta. | |||||||

| Tarhan and Ayyıldız (2015) | 36 stu. | |||||||

| Thomas and Depasquale (2016) | 26 grad. | |||||||

| Thorsted et al. (2015) | 2 stu. 1 sta. | |||||||

| Valenzuela et al. (2018) | 316 stu. | |||||||

| Virtanen and Rasi (2017) | 5 stu. | |||||||

| Werder et al. (2016) | ? stu. ? sta. | |||||||

| Wijnen et al. (2017) | 344 stu. 20 sta. | |||||||

| Yardimci et al. (2017) | 330 stu. | |||||||

| Zafra-Gómez et al. (2015) | 515 stu. | |||||||

| Zhao (2016) | 132 stu. | |||||||

| Total studies in each range | 6 | 9 | 8 | 5 | 9 | 3 | 4 | 4 |

| Study | Qualification: Academic Achievement and Processes | Qualification: Employability Competences | Socialisation | Subjectification |

|---|---|---|---|---|

| Alt (2015) | Academic self-efficacy (motivation and self-regulation) | |||

| Andersen et al. (2015) | Completion rates and times | |||

| Anthony et al. (2015) | Adaptive expertise | |||

| Assen et al. (2016) | Tutor beliefs and behaviours and their impact on learning | |||

| Aulls et al. (2015) | Supervisors’ beliefs and practices | |||

| Ayala et al. (2019) | Dynamics, power relations, cultural practice | |||

| Brassler and Dettmers (2017) | Interdisciplinary skills, reflective behaviour, and disciplinary perspectives | |||

| Carlisle et al. (2017) | Civic engagement, institutional/community relations and student wellbeing | |||

| Carvalho (2016) | Transferable skills | |||

| Chng et al. (2015) | Academic achievement | Tutor behaviour | ||

| Christensen (2016) | Group work and its effects | |||

| Cremers (2017) | ‘Knowledge worker’ learning outcomes and professional development | Personal development | ||

| Frisch et al. (2018) | Graduate attributes (teamwork, self-regulation, critical thinking) | |||

| Fujinuma and Wendling (2015) | Academic achievement | |||

| González-Jiménez et al. (2016) | Knowledge acquisition skills, self-perception of competences and capabilities | |||

| Hendry et al. (2016) | Group cohesion | |||

| Hull et al. (2016) | Sustainability knowledge and practice, collaborative problem solving, intercultural competencies | |||

| Hüttel and Gnaur (2017) | Information analysis, creativity and innovation, teamwork | |||

| Jin (2017) | Group discourse | |||

| Kelly et al. (2016) | Problem solving processes | Group collaboration | ||

| Korpi et al. (2018) | Professional identity and reflection as metacognitive learning skill (information-seeking, creative learning process, peer group work) | |||

| Laursen et al. (2016) | Academic achievement, attitudes, beliefs and confidence | |||

| Lucas and Goodman (2015) | Learning gains—perceived knowledge of and competence in positive organisations | Student wellbeing | ||

| Luo (2019) | Practical skills and motivation | |||

| Mohamadi (2018) | Academic achievement and perceptions | |||

| Müller and Henning (2017) | Challenges experienced by teachers | |||

| Oliver et al. (2015) | Benefits and challenges (students and staff) | |||

| Özbıçakçı et al. (2015) | Perceived self-efficacy with information literacy skills | |||

| Piercey and Militzer (2017) | Retention & math anxiety | |||

| Podeschi (2016) | Technical and professional skills | |||

| Robinson (2016) | Group dynamics (age differences) | |||

| Robinson et al. (2015) | Group dynamics | |||

| Rosander and Chiriac (2016) | The purpose of group work | |||

| Rossano et al. (2016) | Transversal skills | |||

| Ryberg et al. (2018) | Sociomaterial groupwork processes | |||

| Samson (2015) | Group dynamics | |||

| Santicola (2015) | Academic achievement | |||

| Serdà and Alsina (2018) | Academic achievement and self-directed learning | |||

| Tarhan and Ayyıldız (2015) | Problem quality and self-efficacy in information seeking | Tutor behaviour, group function | ||

| Thomas and Depasquale (2016) | Sustainability competences | |||

| Thorsted et al. (2015) | Creative thinking | |||

| Valenzuela et al. (2018) | Academic performance, value for learning | |||

| Virtanen and Rasi (2017) | Learning process, learning resources, and learning outcomes, emotions associated with learning | |||

| Werder et al. (2016) | Staff-student-community relations, community impact, growth promotion, personal impact | |||

| Wijnen et al. (2017) | Knowledge acquisition, study frequency, skill development, professional preparation | |||

| Yardimci et al. (2017) | Study processes and motivation | |||

| Zafra-Gómez et al. (2015) | Achievement, attendance and motivation | |||

| Zhao (2016) | Completion and pass rates, student grades | |||

| Total studies | 24 | 17 | 19 | 4 |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Acton, R. Mapping the Evaluation of Problem-Oriented Pedagogies in Higher Education: A Systematic Literature Review. Educ. Sci. 2019, 9, 269. https://doi.org/10.3390/educsci9040269

Acton R. Mapping the Evaluation of Problem-Oriented Pedagogies in Higher Education: A Systematic Literature Review. Education Sciences. 2019; 9(4):269. https://doi.org/10.3390/educsci9040269

Acton, Renae. 2019. "Mapping the Evaluation of Problem-Oriented Pedagogies in Higher Education: A Systematic Literature Review" Education Sciences 9, no. 4: 269. https://doi.org/10.3390/educsci9040269