A Deep Learning Approach to Automatic Tooth Caries Segmentation in Panoramic Radiographs of Children in Primary Dentition, Mixed Dentition, and Permanent Dentition

Abstract

1. Introduction

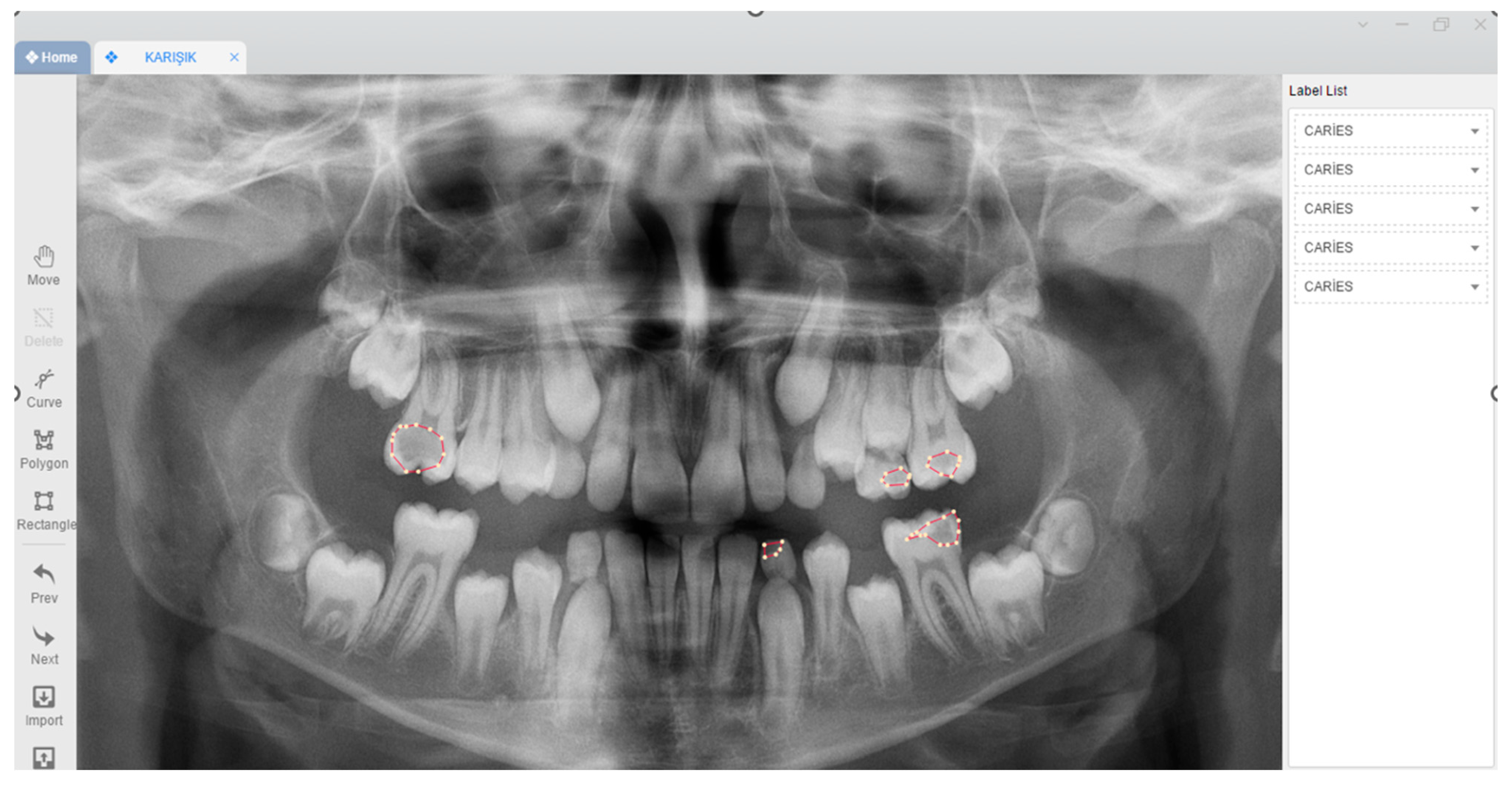

2. Materials and Methods

2.1. Patient Selection

2.2. Radiographic Data

2.3. Image Evaluation

2.4. Deep Convolutional Neural Network Architecture

2.5. Model Pipeline

2.6. Total (Primary Dentition + Mixed Dentition + Permanent Dentition)

2.7. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Main Points

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Metrics and Measurements | Primary Dentition | Mixed Dentition | Permanent Dentition | Total (Primary + Mixed + Permanent) |
|---|---|---|---|---|
| True positive (TP) | 1006 | 467 | 866 | 2653 |
| False positive (FP) | 96 | 41 | 83 | 255 |
| False negative (FN) | 174 | 166 | 181 | 555 |
| Sensitivity | 0.8525 | 0.7377 | 0.8271 | 0.8269 |
| Precision | 0.9128 | 0.9192 | 0.9125 | 0.9123 |
| F1 score | 0.8816 | 0.8185 | 0.8677 | 0.8675 |
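The sensitivity, precision, and F1 rows can be recomputed from the TP, FP, and FN counts in the table. The sketch below assumes the standard definitions (sensitivity = TP / (TP + FN), precision = TP / (TP + FP), F1 = 2TP / (2TP + FP + FN)), which this section does not spell out. The names `Counts`, `COUNTS`, `metrics`, and `truncate4` are illustrative and not taken from the paper. Under these assumptions the recomputed values agree with the table when cut to four decimal places, which suggests the published figures were truncated rather than rounded.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Detection counts for one dentition group (hypothetical container)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives


# Counts copied as-is from the table above.
COUNTS = {
    "primary": Counts(tp=1006, fp=96, fn=174),
    "mixed": Counts(tp=467, fp=41, fn=166),
    "permanent": Counts(tp=866, fp=83, fn=181),
    "total": Counts(tp=2653, fp=255, fn=555),
}


def sensitivity(c: Counts) -> float:
    """Share of actual caries regions that were detected (recall)."""
    return c.tp / (c.tp + c.fn)


def precision(c: Counts) -> float:
    """Share of detections that were actual caries regions."""
    return c.tp / (c.tp + c.fp)


def f1_score(c: Counts) -> float:
    """Harmonic mean of precision and sensitivity."""
    return 2 * c.tp / (2 * c.tp + c.fp + c.fn)


def truncate4(value: float) -> float:
    """Cut a value to four decimal places without rounding."""
    return math.floor(value * 10_000) / 10_000


def metrics(c: Counts) -> dict:
    """Return the three derived metrics, truncated to four decimals."""
    return {
        "sensitivity": truncate4(sensitivity(c)),
        "precision": truncate4(precision(c)),
        "f1": truncate4(f1_score(c)),
    }


if __name__ == "__main__":
    for group, counts in COUNTS.items():
        print(group, metrics(counts))
```

Because F1 depends only on TP, FP, and FN, no true-negative count is needed, which suits a segmentation task where true negatives are not enumerated.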

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Asci, E.; Kilic, M.; Celik, O.; Cantekin, K.; Bircan, H.B.; Bayrakdar, İ.S.; Orhan, K. A Deep Learning Approach to Automatic Tooth Caries Segmentation in Panoramic Radiographs of Children in Primary Dentition, Mixed Dentition, and Permanent Dentition. Children 2024, 11, 690. https://doi.org/10.3390/children11060690
