Measurement Method Options to Investigate Digital Screen Technology Use by Children and Adolescents: A Narrative Review

Abstract

1. Introduction

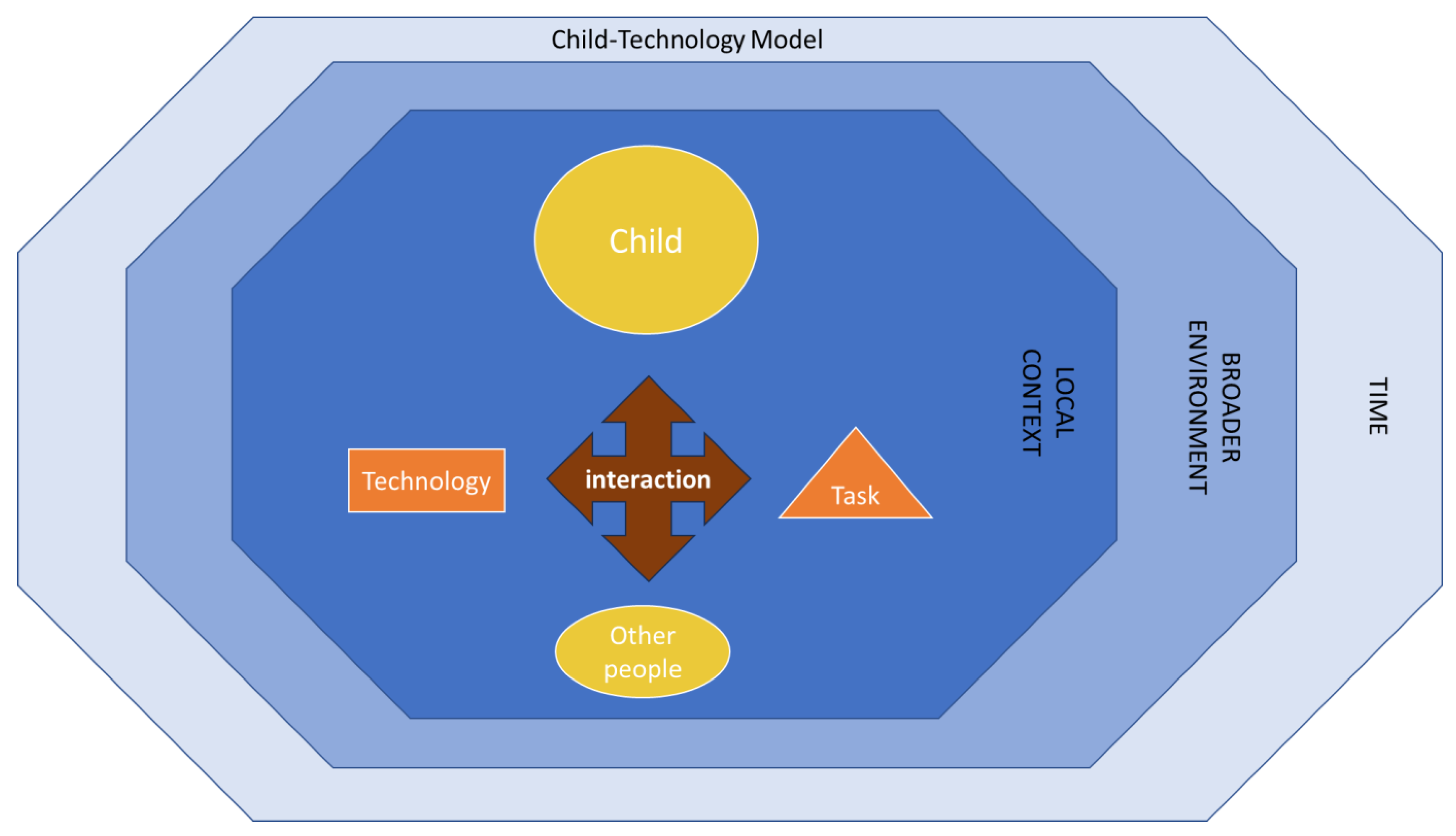

1.1. Challenges in Measuring Children’s Complex Digital Screen Technology Engagement

1.1.1. Child

1.1.2. Technology

1.1.3. Tasks

1.1.4. Interaction

1.1.5. Other People

1.1.6. Local Context

1.1.7. Broader Environment

1.1.8. Time

1.2. Study Aim

2. Narrative Review Approach

3. Summary of Different Measurement Method Options

3.1. Self-/Proxy-Reporting

3.1.1. Questionnaires

3.1.2. Diaries

3.1.3. Electronically Prompted Sampling

3.2. Direct Observation

3.3. Recording Devices

3.3.1. Fixed Room Cameras

3.3.2. Wearable or Portable Cameras

3.3.3. Audio Recorders

3.4. Screen-Device Onboard Logging

3.5. Remote Digital Trace Logging

3.6. Proximity Logging

3.7. Other Systems

| Types of Measure and Example Studies | Methods | Advantages | Disadvantages |
|---|---|---|---|
| Self-/Proxy-Reporting (e.g., Parent, Teacher) | | | |
| Questionnaire | Retrospective recall of screen use through paper or electronic format. | | |
| Diary | Recall of screen use across the day through paper or electronic format. | | |
| Electronically prompted sampling | Instant recall of screen use or associated factors in response to text or app messages sent to the participant. | | |
| Direct observation | Contemporaneous observation and recording of screen use by a trained observer in the participant's natural environment, through paper or electronic format. | | |
| Recording Devices | | | |
| Fixed room cameras | Contemporaneous fixed camera recording still images or video capturing screen use within one setting per camera. | | |
| Wearable or portable camera | Contemporaneous wearable camera (attached to the participant, usually on the chest or head or on a neck lanyard) recording still images or video in the participant's field of view. Contemporaneous portable camera (typically handheld by a parent or researcher) recording still images or video. | | |
| Audio recording | Contemporaneous fixed room or wearable device capturing sound (from screen technology as well as the voices of the participant and other people nearby). | | |
| Screen-device onboard logging | Contemporaneous manual or automated onboard capture of smartphone or tablet use with app or screen recording. | | |
| Remote digital trace logging | Contemporaneous automatic capture of network traffic at the router, internet service provider or platform. | | |
| Proximity logging | Contemporaneous detection, using radio frequency identification, of when a participant is near a screen (when both have chips attached). | | |
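To make the screen-device onboard logging row more concrete, the following is a minimal Python sketch of one processing step such logs typically need before they can be compared with a questionnaire or diary estimate: turning timestamped foreground-app records into a daily total. It assumes a hypothetical log format (a `Session` with app name, start and end time); real logging apps, operating-system usage statistics and screen-recording tools export data in their own formats. The choices to clip records to the analysis day and to count overlapping records only once are illustrative assumptions, not a procedure taken from this review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Session:
    """Hypothetical onboard-log record: one app in the foreground from start to end."""
    app: str
    start: datetime
    end: datetime


def total_screen_minutes(sessions: list[Session], window_start: datetime,
                         window_end: datetime) -> float:
    """Return minutes of screen use inside [window_start, window_end).

    Sessions are clipped to the window, then overlapping or touching sessions
    are merged so that simultaneous records are not double-counted.
    """
    # Clip each session to the analysis window; drop sessions entirely outside it.
    clipped = []
    for s in sessions:
        start, end = max(s.start, window_start), min(s.end, window_end)
        if end > start:
            clipped.append((start, end))
    clipped.sort()

    total = timedelta()
    merged_start = merged_end = None
    for start, end in clipped:
        if merged_end is not None and start <= merged_end:
            # Overlaps or touches the current merged interval: extend it.
            merged_end = max(merged_end, end)
        else:
            # Gap before this session: bank the previous interval, start a new one.
            if merged_end is not None:
                total += merged_end - merged_start
            merged_start, merged_end = start, end
    if merged_end is not None:
        total += merged_end - merged_start
    return total.total_seconds() / 60


if __name__ == "__main__":
    day = datetime(2024, 3, 1)
    log = [
        Session("video", day + timedelta(hours=7), day + timedelta(hours=7, minutes=30)),
        Session("game", day + timedelta(hours=7, minutes=20), day + timedelta(hours=8)),
        # Runs past midnight; only the 10 minutes before midnight count for this day.
        Session("chat", day + timedelta(hours=23, minutes=50), day + timedelta(days=1, minutes=20)),
    ]
    logged = total_screen_minutes(log, day, day + timedelta(days=1))
    self_reported = 45.0  # hypothetical questionnaire estimate for the same day
    print(logged)                  # → 70.0
    print(self_reported - logged)  # → -25.0
```

Merging overlaps matters because a video and a game session logged at the same time represent one period of screen use, not two; whether a study should instead count concurrent use separately (e.g., for media multitasking) is a design decision that depends on the research question.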

4. Potential Future Methods

5. Researcher Checklist for Measurement Method Selection

Scenario Examples Using the Considerations Checklist

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Beynon, A.; Hendry, D.; Lund Rasmussen, C.; Rohl, A.L.; Eynon, R.; Thomas, G.; Stearne, S.; Campbell, A.; Harris, C.; Zabatiero, J.; et al. Measurement Method Options to Investigate Digital Screen Technology Use by Children and Adolescents: A Narrative Review. Children 2024, 11, 754. https://doi.org/10.3390/children11070754

Beynon A, Hendry D, Lund Rasmussen C, Rohl AL, Eynon R, Thomas G, Stearne S, Campbell A, Harris C, Zabatiero J, et al. Measurement Method Options to Investigate Digital Screen Technology Use by Children and Adolescents: A Narrative Review. Children. 2024; 11(7):754. https://doi.org/10.3390/children11070754

Chicago/Turabian Style: Beynon, Amber, Danica Hendry, Charlotte Lund Rasmussen, Andrew L. Rohl, Rebecca Eynon, George Thomas, Sarah Stearne, Amity Campbell, Courtenay Harris, Juliana Zabatiero, et al. 2024. "Measurement Method Options to Investigate Digital Screen Technology Use by Children and Adolescents: A Narrative Review" Children 11, no. 7: 754. https://doi.org/10.3390/children11070754

APA Style: Beynon, A., Hendry, D., Lund Rasmussen, C., Rohl, A. L., Eynon, R., Thomas, G., Stearne, S., Campbell, A., Harris, C., Zabatiero, J., & Straker, L. (2024). Measurement Method Options to Investigate Digital Screen Technology Use by Children and Adolescents: A Narrative Review. Children, 11(7), 754. https://doi.org/10.3390/children11070754