Online Age Verification: Government Legislation, Supplier Responsibilization, and Public Perceptions

Abstract

1. Introduction

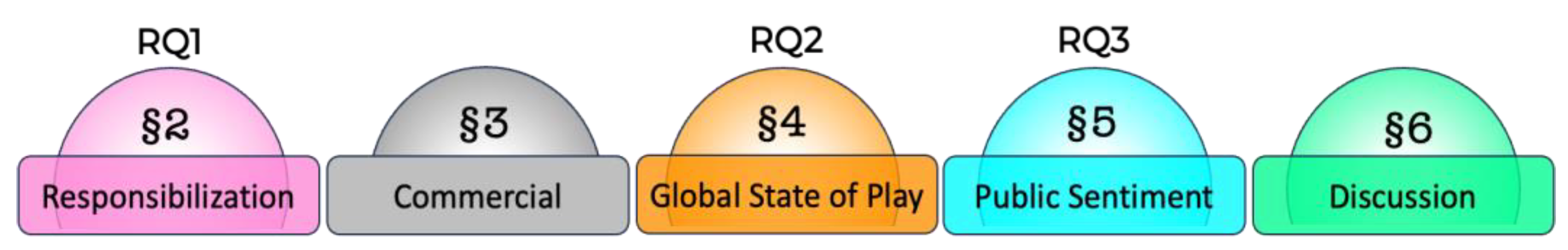

- Research Question 1 (RQ1): Do governments embrace a responsibilization strategy when it comes to age verification?

- Research Question 2 (RQ2): To what extent have different governments legislated age verification controls?

- Research Question 3 (RQ3): How does the UK public feel about online age verification legislation?

2. RQ1: Responsibilization

- Provision of advice—The UK’s National Cyber Strategy [34] has charged the National Cyber Security Centre (NCSC) with supporting all sectors of society so that they can protect themselves from online threats. This includes tailoring advice to each of those sectors.

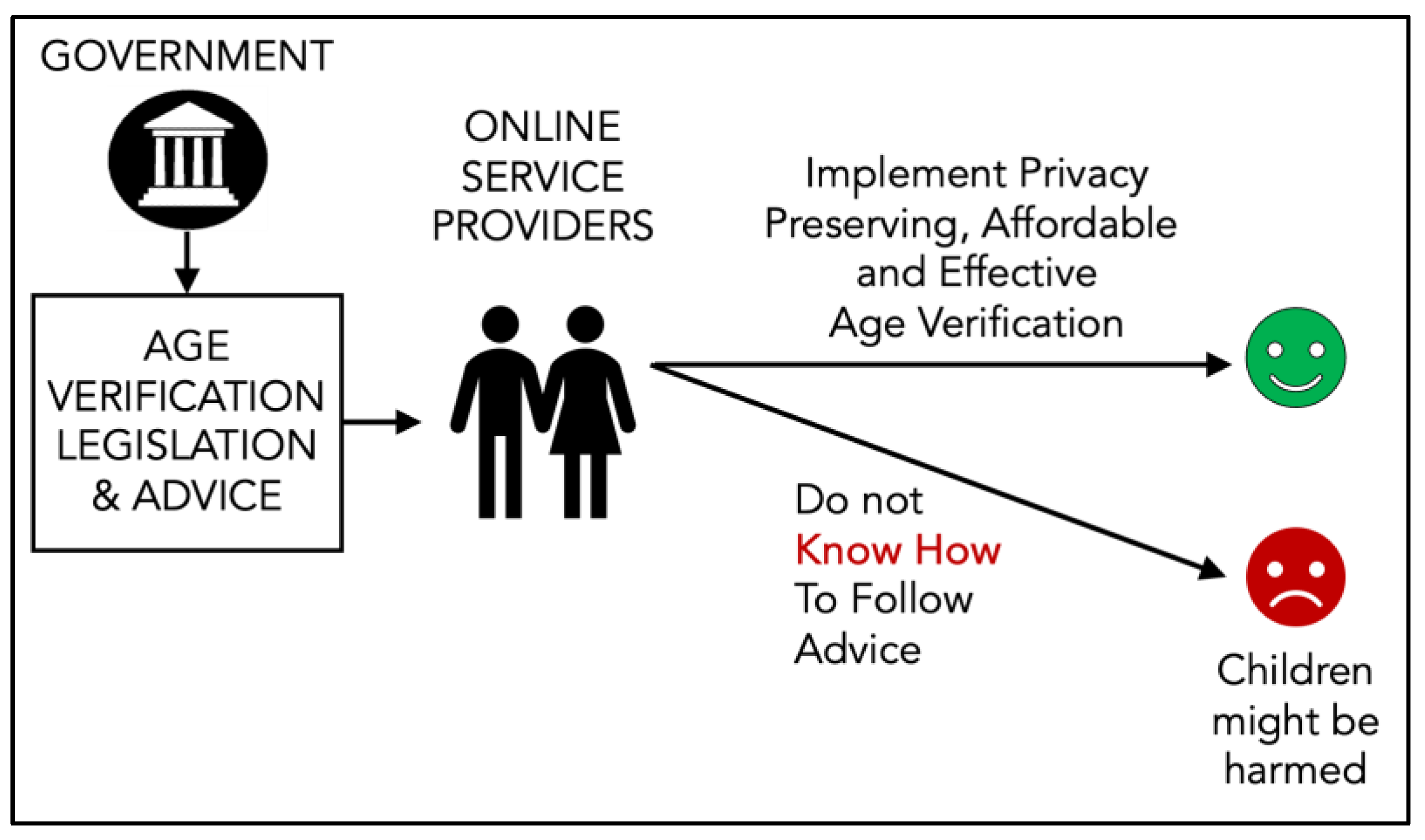

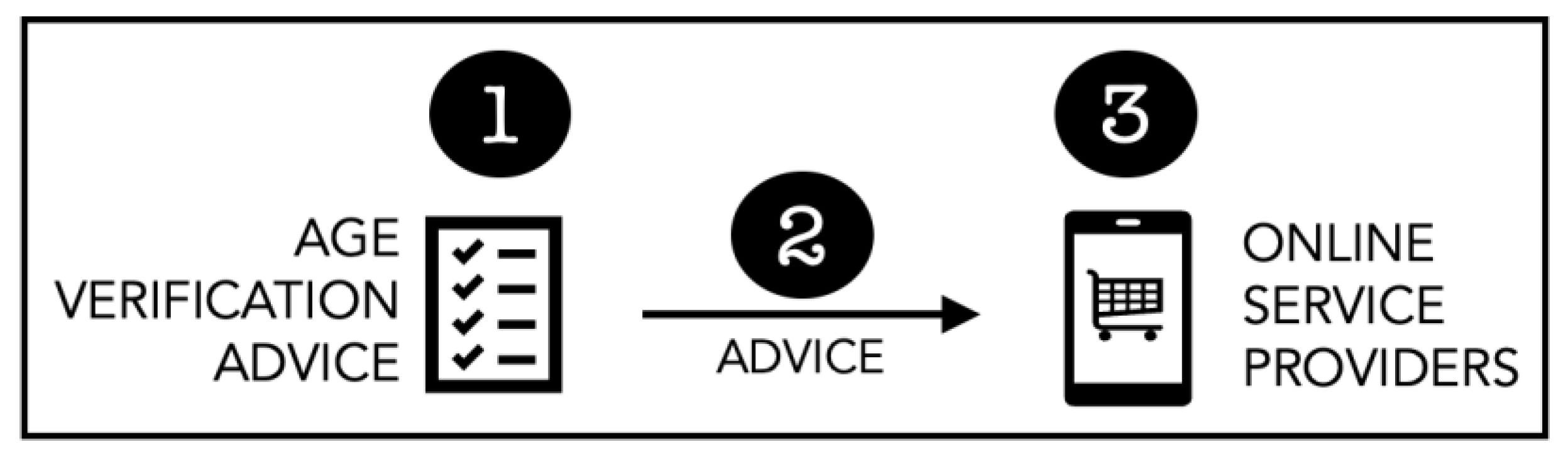

- Responsibilization—Responsibilization hinges on advice. Governments provide such advice, with the assumption that the advice will be followed and that consequences will be accepted if something goes awry [33].

- Infrastructural services—Governments act to reduce the number of threats and harms that individuals have to deal with. For example, the UK government provides a takedown service that removes potentially harmful online content, and works with large technology companies and organisations to help them improve their security offering. Governments also push technology companies to embed security functionality into the core of digital technology. For example, the UK government has introduced ‘Secure by Design’ legislation, the Product Security and Telecommunications Infrastructure (PSTI) Act, to push manufacturers towards more secure connectable consumer products.

- What Advice? The following should arguably be included [18]: (a) How to Ensure Effectiveness—All solutions must have success requirements and a measurement strategy [35] to ensure that they do indeed keep children out of adult spaces. The government should provide guidance on the false positive and false negative percentages that are acceptable for a mechanism to count as effective. (b) How to Verify Privacy Preservation—Reassurances from third-party providers are seldom sufficient to ensure that privacy is preserved. Guidance is needed on how to ensure that the privacy of children using a mechanism is preserved when a third-party supplier provides the age verification service. (c) Approved Age Verification Providers—Many online service providers that need to deploy an age verification solution, but do not know how, will pay a third party for one. The government could assist by providing a list of approved suppliers. Failing that, age verification suppliers could be certified, giving service providers guidance when they choose a third party to supply age verification.

- Advice Delivery: The effectiveness of the advice given to service providers needs to be measured. Ensuring that the advice is effectively communicated, accessible to readers with a range of reading abilities, and easily understood is critical to achieving success and a high level of compliance with the law. It is crucial to minimise both the risk of varying interpretations and the risk that stakeholders remain unaware of their obligations because the advice has not reached them.

- Online Service Providers: Once the service providers receiving the advice understand what is required, they must balance compliance against business concerns such as affordability and the effort and expertise required. For some smaller businesses, reconciling their current business models with the changing legal landscape can raise fundamental dilemmas about how they can operate and remain profitable in future [18].
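Point (a) above asks for guidance on acceptable false positive and false negative percentages. The following minimal Python sketch shows one way such percentages could be computed from a labelled test set and checked against thresholds. It assumes that a "false positive" is a minor who is granted access and a "false negative" is an adult who is wrongly refused (conventions differ, so any official guidance would need to fix the definition); the `Outcome` record, the function names, and any threshold values are hypothetical and are not taken from government guidance.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One test case from a hypothetical labelled evaluation set."""
    actual_adult: bool    # ground truth: is the tester really 18 or over?
    granted_access: bool  # decision made by the age verification mechanism


def error_rates(outcomes: list[Outcome]) -> tuple[float, float]:
    """Return (false positive %, false negative %).

    False positive: a minor who is granted access.
    False negative: an adult who is refused access.
    """
    minors = [o for o in outcomes if not o.actual_adult]
    adults = [o for o in outcomes if o.actual_adult]
    if not minors or not adults:
        raise ValueError("the test set needs both minors and adults")
    false_pos = sum(o.granted_access for o in minors)
    false_neg = sum(not o.granted_access for o in adults)
    return 100 * false_pos / len(minors), 100 * false_neg / len(adults)


def is_effective(outcomes: list[Outcome], max_fp: float, max_fn: float) -> bool:
    """True if both error rates are within the (hypothetical) thresholds."""
    fp, fn = error_rates(outcomes)
    return fp <= max_fp and fn <= max_fn
```

For example, a mechanism that admits 1 of 10 test minors and refuses 2 of 20 test adults has a false positive rate of 10% and a false negative rate of 10%. The two rates are kept separate because they carry different harms: the first lets children into adult spaces, while the second locks out legitimate adult users.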

2.1. Current Practice in Child Protection

2.2. Synthesis of Findings

2.2.1. Child Safety

- Content—Exposure to inappropriate adult content online is a growing concern, owing to the general lack of robust online age verification mechanisms on websites, apps, and particularly social media platforms [19]. More than half of the 11–16-year-olds surveyed by the NSPCC had seen explicit content online [42], and Ofcom reported that 33% of British children aged 12–15 had come across sexist, racist, or discriminatory content online [43]. Recent studies have found that the age verification mechanisms social media companies use when users sign up are significantly lacking: children were able to circumvent the age verification mechanisms of seven popular social media apps [44].

- Conduct—While learning to navigate the internet and online platforms, teens in particular can engage in risky online conduct [45]. Sexting is a rising concern [46], and a newer type of scam, sextortion, has had tragic consequences, including multiple teenage suicides [47,48]. Children are also increasingly exposed to online abuse and cyberbullying [49]. Ngai et al. found that social media has become a growing problem for youth since 2005, particularly with respect to cyberbullying [50]. Social media is not the only environment in which children are at risk; the gaming ecosystem poses similar harms [51].

- Contact—The International Centre for Missing and Exploited Children states that it can take as little as 18 minutes for an online predator to convince a child to meet them in person [52]. The pandemic exacerbated online safety concerns, with child abuse cases more than doubling within the first four weeks of lockdown in the US [53]. At the same time, the benefits of the internet and the push towards online learning, particularly during the pandemic, have created a trade-off in which online safety has been compromised [54]. As technology advances and societal behaviours change, legal systems around the world have been unable to adapt at the speed needed to offer the right level of protection [55].

- Commerce—Children are actively targeted by advertisers [56]. Much of this advertising is deceptive [57] and/or not beneficial to them [58]. Researchers have raised concerns about the influence that adverts can exert on children online [59], and there are grave concerns about some kinds of advertising, such as for gambling [60] and unhealthy food [61,62].
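The circumvention findings above are easiest to understand against the simplest form of sign-up check, a self-declared date of birth. The minimal Python sketch below assumes the service asks only for a typed date (the function names are hypothetical and do not describe any particular platform). It shows that the age arithmetic can be entirely correct while the check still provides no assurance, because nothing ties the declared date to the person typing it.

```python
from datetime import date


def age_on(dob: date, today: date) -> int:
    """Completed years of age on `today` for someone born on `dob`."""
    # Subtract one year if this year's birthday has not yet been reached.
    birthday_passed = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if birthday_passed else 1)


def self_declared_gate(claimed_dob: date, today: date, min_age: int = 18) -> bool:
    """Admit the user if the *claimed* date of birth meets the minimum age.

    The claim is never checked against anything, so a child passes
    simply by entering an earlier year.
    """
    return age_on(claimed_dob, today) >= min_age


today = date(2024, 6, 19)
print(self_declared_gate(date(2011, 3, 5), today))  # honest 13-year-old: False
print(self_declared_gate(date(2001, 3, 5), today))  # same child, false year: True
```

The only thing that changes between the two calls is the year the user chose to type, which is why stronger mechanisms rely on something the user is or holds rather than something they merely state.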

2.2.2. Age Verification

3. Commercial Products

3.1. Responsibilization of Service Providers

3.2. Conclusion

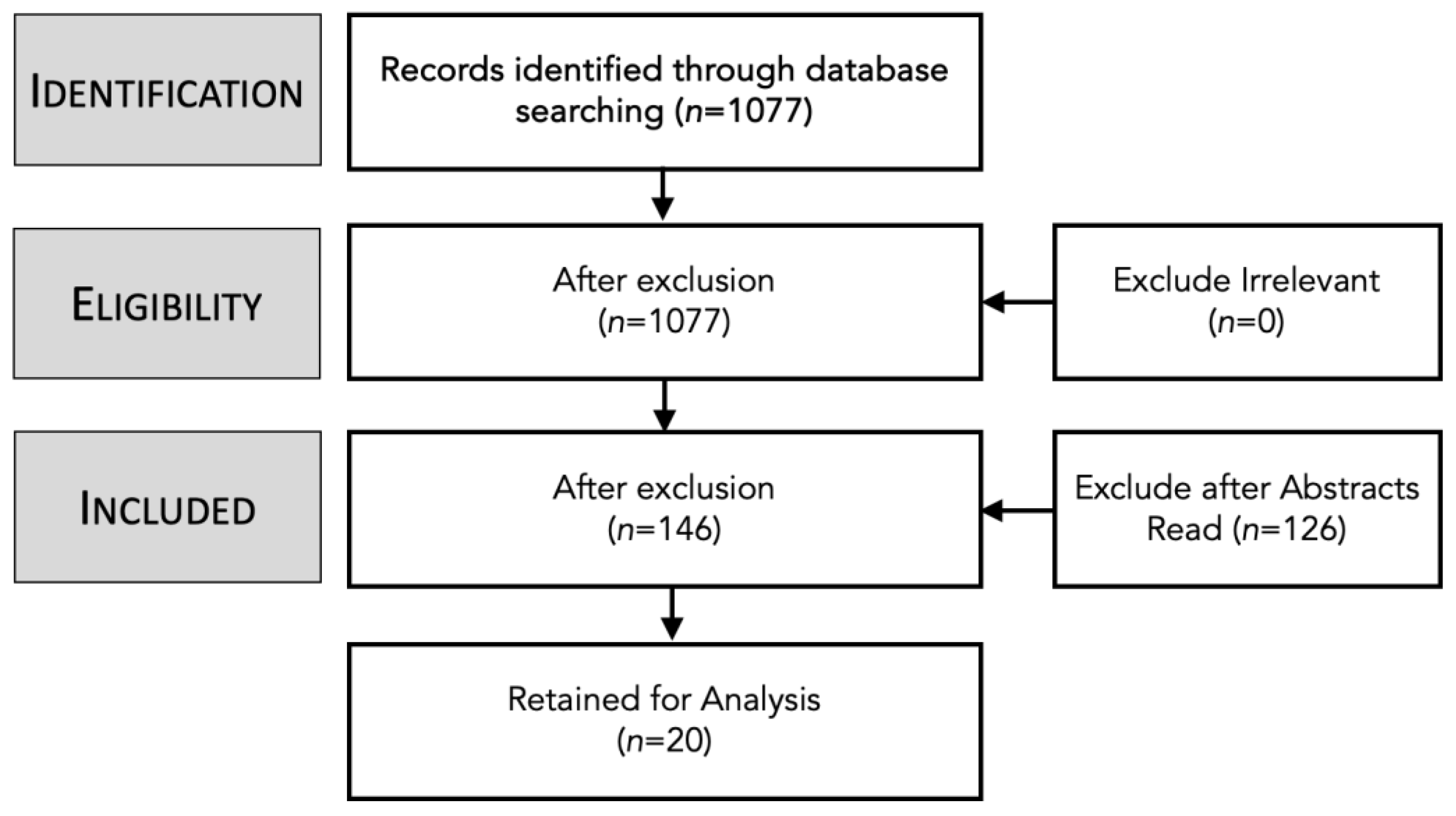

4. RQ2: Global State of Play

4.1. Europe

4.2. Americas

4.3. Africa

4.4. Asia

4.5. Oceania

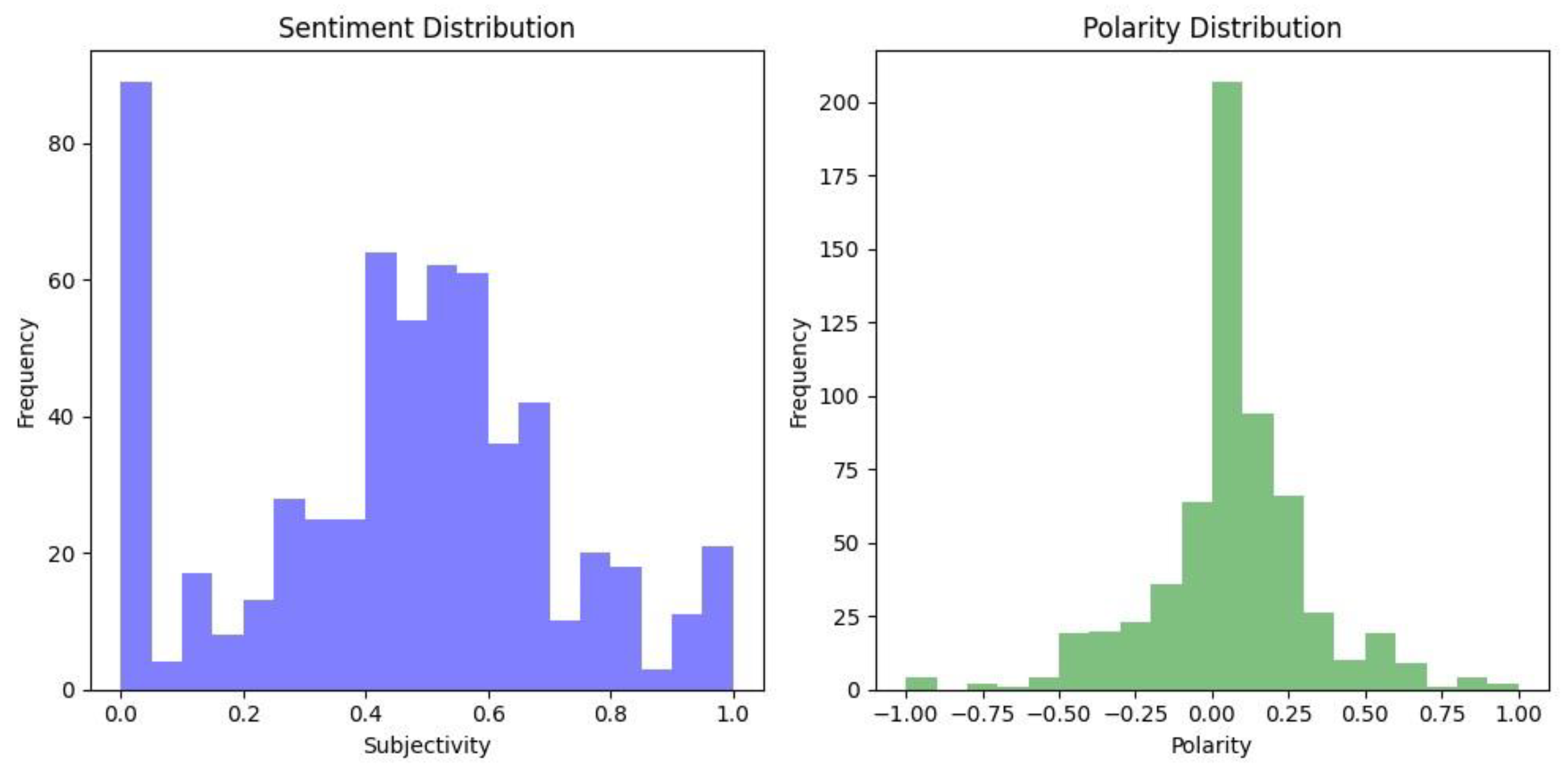

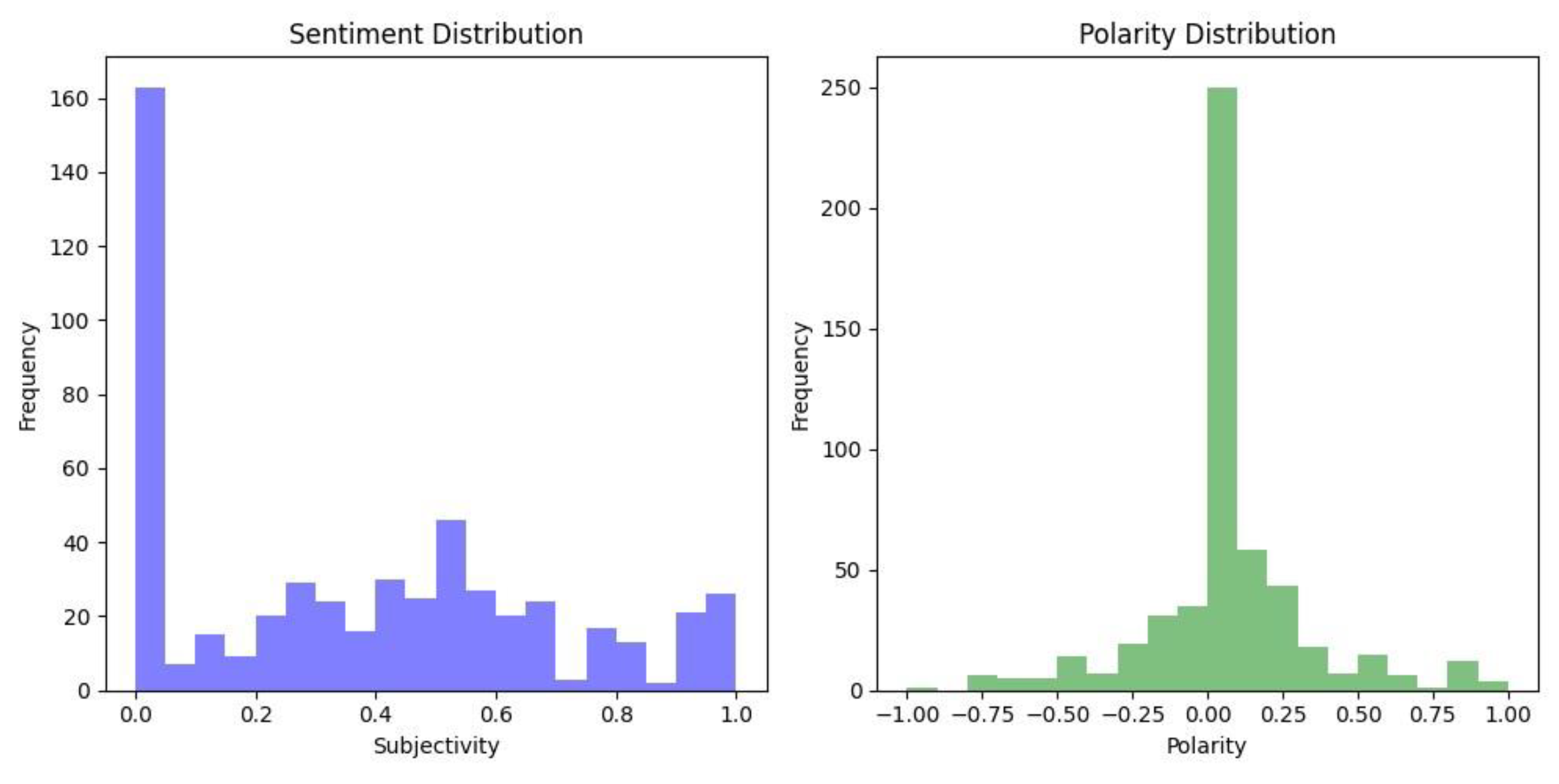

5. RQ3: General Public Perceptions and Sentiment

5.1. Reddit

- “Why walk at all? Continue giving the service, fully encrypted. At worst UK blocks it, which would still allow users to access via VPN”.

- “EXPLETIVE stupid Tory government. We’ve all got access to vpns anyway”.

- “Let me tell you, there’ll be a EXPLETIVE riot if they try to take away Wikipedia”.

5.2. YouTube

- “Won’t be able to say what you like, and won’t be a to protest about it if they get their way”.

- “Authoritarian goverment at his best, but the anglos where always kind of”.

- “ministry of truth brilliant”.

- “This bill is extremely dangerous and must be scrapped”.

- “The scope for abuse of this bill is vast. It is dangerous and must be scrapped”.

- “Is the post office now going to open everyone’s mail to check whether or not people are exchanging illegal pictures or saying dangerous things?”

- “Isn’t it the parent’s responsibility?!? Can I still write my opinion of Islam or will I go to jail now”?

- “Parents couldn’t control their kids, now the GOVERNMENT HAS TO BABYSIT US? Apps are the reason why I didn’t fall into depression”

- “Maybe the parents need to do some parenting”?

- “I mean the reality is that its up to the parents to keep their children protected, rather than an ever-growing list of stringent, restrictive changes to everyone elses life to compensate for it, which is ultimately what these things end up becoming”
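The comments above were gathered to gauge public sentiment. The scoring procedure is not described in this section, so the following is only a minimal sketch of one common technique, lexicon-based scoring, assuming a small hand-built word list (the `LEXICON` entries and weights are hypothetical and are not the authors' method). It also illustrates a known weakness of the approach: a sarcastic comment such as "ministry of truth brilliant" is scored as positive.

```python
import re

# Hypothetical word weights for illustration only.
LEXICON = {
    "dangerous": -2, "abuse": -2, "authoritarian": -2, "stupid": -2,
    "riot": -2, "scrapped": -1, "babysit": -1,
    "brilliant": 2, "protected": 1,
}


def score(comment: str) -> int:
    """Sum the lexicon weights of the lower-cased words in a comment."""
    words = re.findall(r"[a-z]+", comment.lower())
    return sum(LEXICON.get(w, 0) for w in words)


def label(comment: str) -> str:
    """Map a numeric score to a coarse sentiment label."""
    s = score(comment)
    if s < 0:
        return "negative"
    if s > 0:
        return "positive"
    return "neutral"


comments = [
    "This bill is extremely dangerous and must be scrapped",
    "ministry of truth brilliant",
    "Maybe the parents need to do some parenting",
]
for c in comments:
    print(label(c), "|", c)
```

Run as written, this labels the three comments negative, positive, and neutral respectively. The sarcastic second comment and the clearly critical third comment are both misjudged, which is why lexicon scores are usually checked against manual reading of the comments.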

6. Discussion

6.1. RQ1: Do Governments Embrace a Responsibilization Strategy When It Comes to Age Verification?

6.2. RQ2: To What Extent Have Different Governments Legislated Age Verification Controls?

6.3. RQ3: How Does the UK Public Feel About Online Age Verification Legislation?

6.4. Practical Implications

- Service Providers: Service providers affected by the legislation need clearer guidance, to prevent a fragmented approach to compliance. Although a risk-based approach can be appropriate, it is also crucial to provide the context and structure within which these risks are to be evaluated, so that evaluations are consistent across service providers.

- Citizens: Sentiment analysis suggests that views on the new age verification legislation differ, and that there is scepticism towards it. Given the size of this stakeholder group, it is critical to ensure that the intentions of any new legislation, and its wider benefits to society, are effectively communicated and understood. However, scepticism towards the government is a wider societal issue that will affect not only the roll-out of age verification regulations but also any fundamental societal change, and it must therefore be addressed.

6.5. Limitations

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Solution | Checks | Price |
|---|---|---|
| WHAT YOU KNOW | | |
| Renaud and Maguire [19] | Knowledge and ability to identify photos of historical figures | N/A |
| WHAT YOU ARE | | |
| Yoti [85] | Picture (AI) | 25p per verification |
| Verify my Age [86] | Video (AI) | 45p per verification (eBay) |
| OneID [98] | Picture (AI) | from 16p per verification |
| Veriff [87] | Picture (AI) | 80 cents per verification plus $49 monthly fee |
| Ageify [89] | Picture (AI) | Basic plan $3.99 per month (Shopify) |
| WHAT YOU HOLD | | |
| Yoti [85] | Government ID | 25p per verification |
| | Phone Number | |
| Verify my Age [86] | Third Party Database Check | 45p per verification (eBay) |
| | Government ID | |
| | Credit Card Check | |
| | Phone Check | |
| VeriMe [92] | Phone Number Check (if using debit card) | Unknown |
| OneID [98] | Online Banking | 16p per verification |
| AgeChecker [91] | Third Party Database Check | $25 per month plus 50 cents per verified user |
| | Phone Number Check | |
| AgeChecked [93] | Driving Licence | Unknown |
| | Phone Number Check | |
| | Social Media | |
| | Payment Card | |
| | Address Search | |
| Trulioo [96] | Government ID | Unknown |
| | Third Party Database Check | |
| Melissa [145] | Address Check | Unknown |
| Equifax [94] | Third Party Database Check | Unknown |
| Experian [95] | Third Party Database Check | Unknown |
| WHAT YOU HOLD & ARE | | |
| AgeChecker [91] | Selfie with ID (AI) | $25 per month plus 50 cents per verified user |
| Jumio [90] | Selfie with ID (AI) | Unknown |
| Tencent [97] | ID Card + Facial Recognition | Unknown |
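The list prices in the table above can be turned into a rough monthly budget, which is the affordability question facing smaller service providers. The sketch below is a minimal illustration under stated assumptions: each price is treated as a fixed monthly fee plus a linear per-verification charge, AgeChecker's "per verified user" is treated as per check, Veriff's "80 cents" is taken to be US cents, OneID's "from 16p" is used as if it were the actual price, and the monthly volumes are hypothetical. Ageify is omitted because no per-check price is listed. Amounts are kept as integers in minor currency units (pence or cents) to avoid floating-point rounding, and plans in different currencies are never compared with each other.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricePlan:
    name: str
    currency: str
    per_check: int        # minor units (pence or cents) per verification
    monthly_fee: int = 0  # minor units per month


# List prices as given in the table (assumed linear; "from 16p" is a floor).
PLANS = [
    PricePlan("Yoti", "GBP", per_check=25),
    PricePlan("Verify my Age", "GBP", per_check=45),
    PricePlan("OneID", "GBP", per_check=16),
    PricePlan("Veriff", "USD", per_check=80, monthly_fee=4900),
    PricePlan("AgeChecker", "USD", per_check=50, monthly_fee=2500),
]


def monthly_cost(plan: PricePlan, verifications: int) -> int:
    """Fixed monthly fee plus per-verification charges, in minor units."""
    return plan.monthly_fee + plan.per_check * verifications


def cheapest(plans: list[PricePlan], currency: str, verifications: int) -> PricePlan:
    """Cheapest plan at a given volume, comparing only within one currency."""
    same_currency = [p for p in plans if p.currency == currency]
    return min(same_currency, key=lambda p: monthly_cost(p, verifications))


for volume in (100, 10_000):
    for plan in PLANS:
        cost = monthly_cost(plan, volume)
        print(f"{plan.name:14} {volume:>6} checks: {cost / 100:>9.2f} {plan.currency}")
```

On these figures AgeChecker undercuts Veriff at every volume, because both its fixed fee and its per-check charge are lower; at 10,000 checks a month the difference is $3,024 ($5,025 against $8,049). Real costs may differ from list prices, for example through volume discounts.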

| Author(s) | Title |
|---|---|
| Eric W.T. Ngai; Spencer S. C. Tao; Karen Ka-Leung Moon [50] | Social media research: Theories, constructs, and conceptual frameworks |
| D. Andrews; S. Alathur; N. Chetty; V. Kumar [54] | Child Online Safety in Indian Context |
| H. Pozniak [53] | The child safety protocol: In dark corners of the internet, there have been horrific consequences to children living more online during the coronavirus lockdown. Are tech giants doing enough to protect them? And will greater privacy measures allow abuse to go unchecked? |
| B. E. Cartwright [55] | Cyberbullying and cyber law |
| A. Faraz; J. Mounsef; A. Raza; S. Willis [51] | Child Safety and Protection in the Online Gaming Ecosystem |
| O. Kovalchuk; M. Masonkova; S. Banakh [141] | The Dark Web Worldwide 2020: Anonymous vs Safety |
| M. Gaborov; M. Kavalic; D. Karuovic; D. Glušac; M. Nikolic [49] | The Impact of Internet Usage on Pupils Internet Safety in Primary and Secondary School |
| R. Farthing; K. Michael; R. Abbas; G. Smith-Nunes [40] | Age Appropriate Digital Services for Young People: Major Reforms |
| L. Pasquale; P. Zippo; C. Curley; B. O’Neill; M. Mongiello [44] | Digital Age of Consent and Age Verification: Can They Protect Children? |
| T. O’Dell; A. K. Ghosh [63] | Online Threats vs. Mitigation Efforts: Keeping Children Safe in the Era of Online Learning |
| C. Doherty [64] | Responsibilising parents: the nudge towards shadow tutoring |
| K. Renaud [33] | Is the responsibilization of the cyber security risk reasonable and judicious? |
| M. Lister [21] | Citizens, doing it for themselves? The big society and government through community |
| K. Renaud [31] | Cyber Security Responsibilization: An Evaluation of the Intervention Approaches Adopted by the Five Eyes Countries and China |
| R. Williams [127] | Internet little cigar and cigarillo vendors: Surveillance of sales and marketing practices via website content analysis |
| R.S. Williams [146] | Age verification and online sales of little cigars and cigarillos to minors |
| C.J. Uittenbroek [27] | Everybody should contribute, but not too much: Perceptions of local governments on citizen responsibilisation in climate change adaptation in the Netherlands |

References

- Norton. 2022 Cyber Safety Insights Report Global Results: Home and Family. 2022. Available online: https://www.nortonlifelock.com/kr/ko/newsroom/press-kits/2022-norton-cyber-safety-insights-report-special-release-home-and-family/ (accessed on 12 August 2024).

- Watkins, M. The 4 Cs of Online Safety: Online Safety Risk for Children. Available online: https://learning.nspcc.org.uk/news/2023/september/4-cs-of-online-safety (accessed on 12 August 2024).

- Bark. What Being Online Was Like for Kids in 2023. 2023. Available online: https://www.bark.us/annual-report-2023/ (accessed on 19 June 2024).

- Office of Communications. A Third of Children Have False Social Media Age of 18+. 2022. Available online: https://www.ofcom.org.uk/news-centre/2022/a-third-of-children-have-false-social-media-age-of-18t (accessed on 15 January 2023).

- Children’s Commissioner. ‘A lot of It Is Actually just Abuse’ Young People and Pornography. 2023. Available online: https://assets.childrenscommissioner.gov.uk/wpuploads/2023/02/cc-a-lot-of-it-is-actually-just-abuse-young-people-and-pornography-updated.pdf (accessed on 19 June 2023).

- Security.org. ‘Cyberbullying: Twenty Crucial Statistics for 2024’. 2023. Available online: https://www.security.org/resources/cyberbullying-facts-statistics/ (accessed on 19 June 2024).

- Livingstone, S.; Stoilova, M.; Nandagiri, R. Children’s data and privacy online. Technology 2019, 58, 157–165. [Google Scholar]

- People, Y. Resisting the Charm of an All-Consuming Life? Exploiting Childhood: How Fast Food, Material Obsession and Porn Culture are Creating New Forms of Child Abuse; Jessica Kingsley Publishers: London, UK, 23 August 2013; p. 187. [Google Scholar]

- Ringrose, J.; Gill, R.; Livingstone, S.; Harvey, L. A Qualitative Study of Children, Young People and ‘Sexting’: A Report Prepared for the NSPCC. 2012. Available online: https://eprints.lse.ac.uk/44216/ (accessed on 12 August 2024).

- De Jans, S.; Hudders, L. Disclosure of vlog advertising targeted to children. J. Interact. Mark. 2020, 52, 1–19. [Google Scholar] [CrossRef]

- Nairn, A.; Dew, A. Pop-ups, pop-unders, banners and buttons: The ethics of online advertising to primary school children. J. Direct Data Digit. Mark. Pract. 2007, 9, 30–46. [Google Scholar] [CrossRef]

- Figueiredo, F.; Giori, F.; Soares, G.; Arantes, M.; Almeida, J.M.; Benevenuto, F. Understanding Targeted Video-Ads in Children’s Content. In Proceedings of the 31st ACM Conference on Hypertext and Social Media, New York, NY, USA, 13 July 2020; pp. 151–160. [Google Scholar]

- Fisher, F. Age Assurance: A Modern Coming of Age Approach to Ensure the Safety of Children Online and an Age Appropriate Experience. 2024. Available online: https://www.fieldfisher.com/en/insights/age-assurance-a-modern-coming-of-age-approach-to-ensure-the-saftey-of-children-online (accessed on 19 June 2024).

- Care. Family Online Safety. 2021. Available online: https://care.org.uk/cause/online-safety/ (accessed on 18 January 2022).

- GOV.UK. Draft Online Safety Bill. 2021. Available online: https://www.gov.uk/government/publications/draft-online-safety-bill (accessed on 12 June 2021).

- GOV.UK. Online Safety Bill Bolstered to Better Protect Children and Empower Adults. Available online: https://www.gov.uk/government/news/online-safety-bill-bolstered-to-better-protect-children-and-empower-adults (accessed on 9 July 2023).

- Gardner, P. Age Verification: Industry Responds to Ofcom Consultation on Age Verification guidance under UK Online Safety Act. 2024. Available online: https://wiggin.eu/insight/age-verification-industry-responds-to-ofcom-consultation-on-age-verification-guidance-under-uk-online-safety-act/ (accessed on 19 June 2024).

- Jarvie, C.; Renaud, K. Are you over 18? A snapshot of current age verification mechanisms. In Proceedings of the 2021 Dewald Roode Workshop, San Antonio, TX, USA, 8–9 October 2021. [Google Scholar]

- Renaud, K.; Maguire, J. Regulating access to adult content (with privacy preservation). In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, New York, NY, USA, 18 April 2015; pp. 4019–4028. [Google Scholar] [CrossRef]

- AVPA. Estimating the Size of the Global Online Age Verification Market. 2021. Available online: https://avpassociation.com/thought-leadership/estimating-the-size-of-the-global-age-verification-market/ (accessed on 3 April 2024).

- Lister, M. Citizens, doing it for themselves? The Big Society and Government Through Community. Parliam. Aff. 2014, 68, 352–370. [Google Scholar] [CrossRef]

- Local Government Group. The Big Society: Looking after Ourselves. 2010. Available online: https://www.local.gov.uk/sites/default/files/documents/download-big-society-look-97a.pdf (accessed on 3 April 2024).

- Da Costa Vieira, T.; Foster, E.A. The elimination of political demands: Ordoliberalism, the big society and the depoliticization of co-operatives. Compet. Chang. 2022, 26, 289–308. [Google Scholar] [CrossRef]

- Miller, P.N.; Rose, N.S. Governing the Present: Administering Economic, Social and Personal Life; Polity: Cambridge, UK, 2008. [Google Scholar]

- Juhila, K.; Raitakari, S. Responsibilisation in Governmentality Literature. In Responsibilisation at the Margins of Welfare Services; Routledge: London, UK, 2019; pp. 11–34. [Google Scholar]

- Pyysiäinen, J.; Halpin, D.; Guilfoyle, A. Neoliberal governance and ‘responsibilization’ of agents: Reassessing the mechanisms of responsibility-shift in neoliberal discursive environments. Distinktion J. Soc. Theory 2017, 18, 215–235. [Google Scholar] [CrossRef]

- Uittenbroek, C.J.; Mees, H.L.; Hegger, D.L.; Driessen, P.P. Everybody should contribute, but not too much: Perceptions of local governments on citizen responsibilisation in climate change adaptation in the Netherlands. Environ. Policy Gov. 2022, 32, 192–202. [Google Scholar] [CrossRef]

- Peeters, R. Responsibilisation on Government’s Terms: New Welfare and the Governance of Responsibility and Solidarity. Soc. Policy Soc. 2013, 12, 583–595. [Google Scholar] [CrossRef]

- Brown, B. Responsibilization and recovery: Shifting responsibilities on the journey through mental health care to social engagement. Soc. Theory Health 2021, 19, 92–109. [Google Scholar] [CrossRef]

- Trnka, S.; Trundle, C. Competing Responsibilities: The Ethics and Politics of Contemporary Life; Duke University Press: Durham, NC, USA, 2017. [Google Scholar]

- Renaud, K.; Orgeron, C.; Warkentin, M.; French, P.E. Cyber security responsibilization: An evaluation of the intervention approaches adopted by the Five Eyes countries and China. Public Adm. Rev. 2020, 80, 577–589. [Google Scholar] [CrossRef]

- Prior, S.; Renaud, K. Are UK parents empowered to act on their cybersecurity education responsibilities? In Proceedings of the International Conference on Human-Computer Interaction, Honolulu, HI, USA, 18–20 August 1994; Springer: Berlin/Heidelberg, Germany, 2024; pp. 77–96. [Google Scholar] [CrossRef]

- Renaud, K.; Flowerday, S.; Warkentin, M.; Cockshott, P.; Orgeron, C. Is the Responsibilization of the Cyber Security Risk Reasonable and Judicious? Comput. Secur. 2018, 78, 198–211. [Google Scholar] [CrossRef]

- Cabinet Office. National Cyber Strategy 2022. 2022. Available online: https://www.gov.uk/government/publications/national-cyber-strategy-2022/national-cyber-security-strategy-2022 (accessed on 27 April 2023).

- Pawson, R.; Tilley, N. An introduction to scientific realist evaluation. In Evaluation for the 21st Century: A Handbook; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 1997; pp. 405–418. [Google Scholar] [CrossRef]

- Challen, K.; Lee, A.C.; Booth, A.; Gardois, P.; Woods, H.B.; Goodacre, S.W. Where is the evidence for emergency planning: A scoping review. BMC Public Health 2012, 12, 542. [Google Scholar] [CrossRef] [PubMed]

- Bragge, P.; Clavisi, O.; Turner, T.; Tavender, E.; Collie, A.; Gruen, R.L. The Global Evidence Mapping Initiative: Scoping research in broad topic areas. BMC Med. Res. Methodol. 2011, 11, 92. [Google Scholar] [CrossRef] [PubMed]

- Williams, R.S.; Ribisl, K.M. Internet alcohol sales to minors. Arch. Pediatr. Adolesc. Med. 2012, 166, 808–813. [Google Scholar] [CrossRef]

- Williams, R.S.; Derrick, J.; Ribisl, K.M. Electronic cigarette sales to minors via the internet. JAMA Pediatr. 2015, 169, e1563. [Google Scholar] [CrossRef]

- Farthing, R.; Michael, K.; Abbas, R.; Smith-Nunes, G. Age Appropriate Digital Services for Young People: Major Reforms. IEEE Consum. Electron. Mag. 2021, 10, 40–48. [Google Scholar] [CrossRef]

- Byron, T. Safer Children in a Digital World: The Report of the Byron Review: Be Safe, Be Aware, Have Fun. 2008. Available online: https://childcentre.info (accessed on 15 June 2024).

- National Society for the Prevention of Cruelty to Children. Online Safety during Coronavirus. 2021. Available online: https://learning.nspcc.org.uk/news/covid/online-safety-during-coronavirus (accessed on 12 June 2021).

- Ofcom. Ofcom Report on Internet Safety Measures. Strategies of Parental Protection for Children Online. 2015. Available online: https://www.ofcom.org.uk/__data/assets/pdf_file/0020/31754/Fourth-Internet-safety-report.pdf (accessed on 12 January 2018).

- Pasquale, L.; Zippo, P.; Curley, C.; O’Neill, B.; Mongiello, M. Digital Age of Consent and Age Verification: Can They Protect Children? IEEE Softw. 2022, 39, 50–57. [Google Scholar] [CrossRef]

- Thompson, R. Teen girls’ online practices with peers and close friends: Implications for cybersafety policy. Aust. Educ. Comput. 2016, 31, 1–16. [Google Scholar]

- Strasburger, V.C.; Zimmerman, H.; Temple, J.R.; Madigan, S. Teenagers, sexting, and the law. Pediatrics 2019, 143, e20183183. [Google Scholar] [CrossRef]

- News, B. Sextortion Case: Two Arrested in Nigeria after Australian Boy’s Suicide. 2024. Available online: https://www.bbc.co.uk/news/world-australia-68720247 (accessed on 19 June 2024).

- Geldenhuys, K. The link between teenage alcohol abuse, sexting & suicide. Servamus Community-Based Saf. Secur. Mag. 2017, 110, 14–18. [Google Scholar]

- Gaborov, M.; Kavalić, M.; Karuović, D.; Glušac, D.; Nikolić, M. The Impact of Internet Usage on Pupils Internet Safety in Primary and Secondary School. In Proceedings of the 2021 44th International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 27 September–1 October 2021; pp. 759–764. [Google Scholar] [CrossRef]

- Ngai, E.W.; Tao, S.S.; Moon, K.K. Social Media Research: Theories, constructs, and conceptual frameworks. Int. J. Inf. Manag. 2015, 35, 33–44. [Google Scholar] [CrossRef]

- Faraz, A.; Mounsef, J.; Raza, A.; Willis, S. Child Safety and Protection in the Online Gaming Ecosystem. IEEE Access 2022, 10, 115895–115913. [Google Scholar] [CrossRef]

- The International Centre for Missing and Exploited Children. ‘Online Grooming of Children for Sexual Purposes Model Legislation and Global Review’. 2017. Available online: https://www.icmec.org/wp-content/uploads/2017/09/Online-Grooming-of-Children_FINAL_9-18-17.pdf (accessed on 19 June 2024).

- Pozniak, H. The Child Safety Protocol [Protection against online abuse]. Eng. Technol. 2020, 15, 74–77. [Google Scholar] [CrossRef]

- Andrews, D.; Alathur, S.; Chetty, N.; Kumar, V. Child online safety in Indian context. In Proceedings of the 2020 5th International Conference on Computing, Communication and Security (ICCCS), Bihar, India, 14–16 October 2020. [Google Scholar] [CrossRef]

- Cartwright, B.E. Cyberbullying and cyber law. In Proceedings of the IEEE International Conference on Cybercrime and Computer Forensic (ICCCF), Vancouver, BC, Canada, 12–14 June 2016; pp. 1–7. [Google Scholar] [CrossRef]

- Calvert, S.L. Children as consumers: Advertising and marketing. Future Child. 2008, 18, 205–234. [Google Scholar] [CrossRef] [PubMed]

- Nairn, A. “It does my head in... buy it, buy it, buy it!” The commercialisation of UK children’s web sites. Young Consum. 2008, 9, 239–253. [Google Scholar] [CrossRef]

- Boyland, E.J.; Harrold, J.A.; Kirkham, T.C.; Halford, J.C. Persuasive techniques used in television advertisements to market foods to UK children. Appetite 2012, 58, 658–664. [Google Scholar] [CrossRef]

- Chima, O.A.; Anorue, L.; Obayi, P.M. Persuading the vulnerable: A study of the influence of advertising on children in Southern Nigeria. IMSU J. Commun. Studies. 2017, 1, 1–12. [Google Scholar]

- Critchlow, N.; Stead, M.; Moodie, C.; Purves, R.; Newall, P.W.; Reith, G.; Morgan, A.; Dobbie, F. The Effect of Gambling Marketing and Advertising on Children, Young People and Vulnerable People; University of Stirling: Stirling, Scotland, 2019. [Google Scholar] [CrossRef]

- Vandevijvere, S.; Soupen, A.; Swinburn, B. Unhealthy food advertising directed to children on New Zealand television: Extent, nature, impact and policy implications. Public Health Nutr. 2017, 20, 3029–3040. [Google Scholar] [CrossRef]

- Coleman, P.C.; Hanson, P.; Van Rens, T.; Oyebode, O. A rapid review of the evidence for children’s TV and online advertisement restrictions to fight obesity. Prev. Med. Rep. 2022, 26, 101717. [Google Scholar] [CrossRef]

- O’Dell, T.; Ghosh, A.K. Online Threats vs. Mitigation Efforts: Keeping Children Safe in the Era of Online Learning. In Proceedings of the SoutheastCon 2023, Orlando, FL, USA, 1–16 April 2023; pp. 333–340. [Google Scholar] [CrossRef]

- Doherty, C.; Dooley, K. Responsibilising parents: The nudge towards Shadow Tutoring. Br. J. Sociol. Educ. 2017, 39, 551–566. [Google Scholar] [CrossRef]

- Prior, S.; Renaud, K. Who Is Best Placed to Support Cyber Responsibilized UK Parents? Children 2023, 10, 1130. [Google Scholar] [CrossRef] [PubMed]

- Information Commissioner’s Office. Children’s Code. 2020. Available online: https://ico.org.uk/childrenscode (accessed on 19 June 2021).

- ITV. Molly Russell Inquest: Pinterest ‘Sorry’ over Teen’s Death and Admits Content Was ‘Not Safe’. 2022. Available online: https://www.itv.com/news/london/2022-09-22/molly-russell-inquest-pinterest-sorry-over-teens-death (accessed on 15 January 2023).

- BBC News. Molly Russell: Coroner’s Report Urges Social Media Changes. 2022. Available online: https://www.bbc.co.uk/news/uk-england-london-63254635 (accessed on 15 January 2023).

- Statista. Global Social Networks Ranked by Number of Users. Available online: https://www.statista.com/statistics/272014/global-social-networks-ranked-by-number-of-users/ (accessed on 18 April 2023).

- Meta. Parent Resources. Articles to Help Kids Stay Safer Online. Available online: https://messengerkids.com/parent-resources/ (accessed on 16 January 2023).

- The Guardian. UK Data Watchdog Seeks Talks with Meta over Child Protection Concerns. Available online: https://www.theguardian.com/technology/2022/jan/09/uk-data-watchdog-seeks-talks-with-meta-over-child-protection-concerns (accessed on 18 May 2023).

- The Verge. Facebook Design Flaw Let Thousands of Kids Join Chats with Unauthorized Users. Available online: https://www.theverge.com/2019/7/22/20706250/facebook-messenger-kids-bug-chat-app-unauthorized-adults (accessed on 18 May 2023).

- BBC News. Meta settles Cambridge Analytica Scandal Case for $725m. Available online: https://www.bbc.co.uk/news/technology-64075067s (accessed on 18 May 2023).

- The Guardian. UK Fines Facebook £500,000 for Failing to Protect User Data. Available online: https://www.theguardian.com/technology/2018/oct/25/facebook-fined-uk-privacy-access-user-data-cambridge-analytica/ (accessed on 18 May 2023).

- Tech Crunch. Facebook Fined Again in Italy for Misleading Users over What It Does with Their Data. Available online: https://techcrunch.com/2021/02/17/facebook-fined-again-in-italy-for-misleading-users-over-what-it-does-with-their-data/ (accessed on 18 May 2023).

- Reuters. Turkey Fines Facebook $282,000 over Privacy Breach. Available online: https://www.reuters.com/article/us-facebook-lawsuit-privacy-turkey-idUSKBN1WI0LJ (accessed on 18 May 2023).

- Hunton Privacy Blog. Meta Fined €390 Million by Irish DPC for Alleged Breaches of GDPR, Including in Behavioral Advertising Contexts. Available online: https://www.huntonprivacyblog.com/2023/01/20/meta-fined-e390-million-by-irish-dpc-for-alleged-breaches-of-gdpr-including-in-behavioral-advertising-context/ (accessed on 18 May 2023).

- E&T. Meta Paid over 80 per cent of EU’s 2022 GDPR Fines. Available online: https://eandt.theiet.org/content/articles/2023/01/meta-paid-over-80-per-cent-of-eus-2022-gdpr-fines/ (accessed on 18 May 2023).

- Business of Apps. TikTok Statistics. Available online: https://www.businessofapps.com/data/tik-tok-statistics/#:~:text=TikTok%20reached%201.6%20billion%20users,by%20the%20end%20of%202023/ (accessed on 29 May 2023).

- Kaspersky. TikTok Privacy and Security-Is TikTok Safe to Use? Available online: https://www.kaspersky.co.uk/resource-center/preemptive-safety/is-tiktok-safe (accessed on 29 May 2023).

- The Guardian. A US Ban on TikTok Could Damage the Idea of the Global Internet. Available online: https://www.theguardian.com/business/2023/mar/29/us-ban-tiktok-global-internet-china-tech-world (accessed on 29 May 2023).

- Tech Crunch. TikTok Hit with $15.7M UK Fine for Misusing Children’s Data. Available online: https://techcrunch.com/2023/04/04/tiktok-uk-gdpr-kids-data-fine/ (accessed on 29 May 2023).

- Parent Zone. TikTok. Available online: https://parentzone.org.uk/article/tiktok (accessed on 29 May 2023).

- TikTok. Our Work to Design an Age-Appropriate Experience on TikTok. Available online: https://newsroom.tiktok.com/en-us/our-work-to-design-an-age-appropriate-experience-on-tiktok (accessed on 29 May 2023).

- Yoti. 2021. Available online: https://www.yoti.com/ (accessed on 29 May 2021).

- VerifyMyAge. 2021. Available online: https://www.verifymyage.co.uk/ (accessed on 29 May 2021).

- Veriff. 2024. Available online: https://www.veriff.com/ (accessed on 23 July 2024).

- Luciditi. 2024. Available online: https://luciditi.co.uk/ (accessed on 23 July 2024).

- Ageify. 2024. Available online: https://age-ify.com/ (accessed on 23 July 2024).

- Jumio. 2021. Available online: https://www.jumio.com/use-case/age-verification/ (accessed on 16 June 2021).

- AgeChecker.net. 2021. Available online: https://agechecker.net/ (accessed on 29 May 2021).

- VeriMe. 2021. Available online: https://verime.net/ (accessed on 29 May 2021).

- AgeChecked. 2021. Available online: https://www.agechecked.com/online-verification-solutions/ (accessed on 16 June 2021).

- Equifax. Equifax Age Verification. 2021. Available online: https://www.equifax.co.uk/business/age-verification/en_gb/ (accessed on 29 May 2021).

- Experian. Experian Age Verification. 2021. Available online: https://www.experian.co.uk/business/identity-fraud/validation/age-verification/ (accessed on 29 May 2021).

- Trulioo. 2021. Available online: https://www.trulioo.com/ (accessed on 16 June 2021).

- Borak, M. Kids Are Trying to Outsmart Tencent’s Facial Recognition System by Pretending to Be Their Grandads. 2018. Available online: https://www.scmp.com/abacus/tech/article/3029027/kids-are-trying-outsmart-tencents-facial-recognition-system-pretending (accessed on 17 June 2024).

- OneID. 2024. Available online: https://oneid.uk/ (accessed on 23 July 2024).

- Jung, S.G.; An, J.; Kwak, H.; Salminen, J.; Jansen, B. Assessing the accuracy of four popular face recognition tools for inferring gender, age, and race. In Proceedings of the International AAAI Conference on Web and Social Media, Palo Alto, CA, USA, 25–28 June 2018; Volume 12.

- Yoti. Yoti Age Scan. 2021. Available online: https://www.yoti.com/wp-content/uploads/Yoti-age-estimation-White-Paper-May-2021.pdf (accessed on 14 June 2021).

- CNIL. Online Age Verification: Balancing Privacy and the Protection of Minors. Available online: https://www.cnil.fr/en/online-age-verification-balancing-privacy-and-protection-minors (accessed on 12 July 2023).

- Pasculli, L. Coronavirus and Fraud in the UK: From the Responsibilisation of the Civil Society to the Deresponsibilisation of the State. Coventry Law J. 2020, 25, 3–23.

- McCourt Global. Majority of People around the World Agree: Big Tech Should Be Held Responsible for Online Safety and Social Media’s Harms to Kids. 2024. Available online: https://www.mccourt.com/majority-of-people-around-the-world-agree-big-tech-should-be-held-responsible-for-online-safety-and-social-medias-harms-to-kids/ (accessed on 19 June 2024).

- United Nations. Standard Country or Area Codes for Statistical Use. 2024. Available online: https://unstats.un.org/unsd/methodology/m49/ (accessed on 3 April 2024).

- Library of Congress. Guide to Law Online: Antarctica. 2024. Available online: https://guides.loc.gov/law-antarctica/judicial/ (accessed on 3 April 2024).

- euConsent. Age Verification and Child Protection: An Overview of the Legal Landscape. 2022. Available online: https://euconsent.eu/age-verification-and-child-protection-an-overview-of-the-legal-landscape/ (accessed on 15 January 2023).

- Smahel, D.; Helsper, E.; Green, L.; Kalmus, V.; Blinka, L.; Ólafsson, K. Excessive Internet Use among European Children. 2012. Available online: https://eprints.lse.ac.uk/47344/1/Excessive%20internet%20use.pdf (accessed on 6 February 2023).

- GOV.UK. Digital Economy Bill Receives Royal Assent. 2017. Available online: https://www.gov.uk/government/news/digital-economy-bill-receives-royal-assent (accessed on 3 June 2021).

- BBC News. Porn Blocker ‘Missing’ from Online Safety Bill Prompts Concern. 2021. Available online: https://www.bbc.co.uk/news/technology-57143746 (accessed on 18 May 2021).

- The Guardian. UK Government Faces Action over Lack of Age Checks on Adult Sites. 2021. Available online: https://www.theguardian.com/society/2021/may/05/uk-government-faces-action-over-lack-of-age-checks-on-pornography-websites (accessed on 15 May 2021).

- Biometric Update. Backlash Mounts over UK Govt Failure to Implement Age Verification for Online Porn. 2021. Available online: https://www.biometricupdate.com/202111/backlash-mounts-over-uk-govt-failure-to-implement-age-verification-for-online-porn/ (accessed on 16 January 2022).

- GOV.UK. World-Leading Measures to Protect Children from Accessing Pornography Online. 2022. Available online: https://www.gov.uk/government/news/world-leading-measures-to-protect-children-from-accessing-pornography-online/ (accessed on 8 February 2022).

- BBC News. Online Safety Bill Put on Hold until New Prime Minister in Place. Available online: https://www.bbc.co.uk/news/uk-62158287 (accessed on 3 May 2023).

- The Verge. The UK’s Controversial Online Safety Bill Finally Becomes Law. 2023. Available online: https://www.theverge.com/2023/10/26/23922397/uk-online-safety-bill-law-passed-royal-assent-moderation-regulation/ (accessed on 3 April 2024).

- EUConsent. 2022. Available online: https://euconsent.eu/ (accessed on 16 January 2022).

- EUConsent. euCONSENT’s First Large Scale Pilot. 2022. Available online: https://euconsent.eu/euconsents-first-large-scale-pilot (accessed on 16 January 2022).

- Biometric Update. euCONSENT Age Verification Interoperability: Huge Success, But the Potential to Fail. 2022. Available online: https://www.biometricupdate.com/202206/euconsent-age-verification-interoperability-huge-success-but-the-potential-to-fail (accessed on 16 January 2022).

- Baker McKenzie. Requirement to Obtain and Provide Age Ratings and Content Descriptors That Must Meet Certain Requirements. 2021. Available online: https://insightplus.bakermckenzie.com/bm/attachment_dw.action (accessed on 5 March 2023).

- ICO. Data Protection Officers. 2020. Available online: https://ico.org.uk/for-organisations/ (accessed on 21 August 2024).

- Kabelka, L. German Youth Protection Body Endorses AI as Biometric Age-Verification Tool. 2022. Available online: https://www.euractiv.com/section/digital/news/german-youth-protection-body-endorses-ai-as-biometric-age-verification-tool/ (accessed on 16 January 2022).

- Politico. France to Introduce Controversial Age Verification System for Adult Websites. 2020. Available online: https://www.politico.eu/article/france-to-introduce-controversial-age-verification-system-for-adult-pornography-websites/ (accessed on 16 January 2022).

- The Christian Institute. France to Enforce Age Verification Checks for Major Porn Sites. 2022. Available online: https://www.christian.org.uk/news/france-to-enforce-age-verification-checks-for-major-porn-sites/ (accessed on 16 January 2022).

- Bryan Cave Leighton Paisner. CNIL Issues Guidance on Online Age Verification. 2022. Available online: https://www.bclplaw.com/en-US/events-insights-news/cnil-issues-guidance-on-online-age-verification.htmls/ (accessed on 16 January 2022).

- CNIL. Recommendation 7: Check the Age of the Child and Parental Consent while Respecting the Child’s Privacy. 2021. Available online: https://www.cnil.fr/en/recommendation-7-check-age-child-and-parental-consent-while-respecting-childs-privacy/ (accessed on 16 January 2022).

- Federal Trade Commission. Complying with COPPA Frequently Asked Questions. 2021. Available online: https://www.ftc.gov/tips-advice/business-center/guidance/complying-coppa-frequently-asked-questions-0 (accessed on 21 May 2021).

- Williams, R.S.; Derrick, J.; Liebman, A.K.; LaFleur, K.; Ribisl, K.M. Content analysis of age verification, purchase and delivery methods of internet e-cigarette vendors, 2013 and 2014. Tob. Control 2018, 27, 287–293.

- Williams, R.S.; Derrick, J.C. Internet little cigar and cigarillo vendors: Surveillance of sales and marketing practices via website content analysis. Prev. Med. 2018, 109, 51–57.

- House of Commons Canada. An Act to Restrict Young Persons’ Online Access to Sexually Explicit Material Information. 2023. Available online: https://www.ourcommons.ca/committees/en/SECU/StudyActivity?studyActivityId=12521982 (accessed on 3 April 2024).

- Biometric Update. Canada Makes another Move towards Age Verification for Porn Sites. 2023. Available online: https://www.biometricupdate.com/202312/canada-makes-another-move-towards-age-verification-for-porn-sites (accessed on 3 April 2024).

- OneTrust Data Guidance. Comparing Privacy Laws: GDPR v. POPIA. 2021. Available online: https://www.dataguidance.com/sites/default/files/onetrustdataguidance_comparingprivacylaws_gdprvpopia.pdf (accessed on 3 April 2024).

- Data Guidance. South Africa: Processing of Children’s Personal Information in the Modern Age of Technology. 2022. Available online: https://www.dataguidance.com/opinion/south-africa-processing-childrens-personal (accessed on 3 April 2024).

- Global Privacy Blog. China Issues New Cybersecurity Law to Protect Children. Available online: https://www.globalprivacyblog.com/security/china-issues-new-cybersecurity-law-to-protect-children/ (accessed on 18 January 2023).

- GamesIndustry.biz. China Gaming Regulator Publishes New Rules for Minors Targeting Play-Time Spending. Available online: https://www.gamesindustry.biz/china-gaming-regulator-publishes-new-restrictions-for-minors-targeting-playtime-spending (accessed on 18 January 2023).

- Parliament of Australia. Protecting the Age of Innocence. 2022. Available online: https://www.aph.gov.au/Parliamentary_Business/Committees/House/Social_Policy_and_Legal_Affairs/Onlineageverification/Report/ (accessed on 16 April 2023).

- The Guardian. Online Age Verification Being Trialled for Alcohol Sales Could Be Extended to Gambling and Video Games. 2021. Available online: https://www.theguardian.com/society/2021/dec/18/online-age-verification-being-trialled-for-alcohol-sales-could-be-extended-to-gambling-and-video-games/ (accessed on 16 January 2022).

- The Sydney Morning Herald. ‘Technical Issues’ Hobble Age Verification for Same-Day Alcohol Delivery. 2022. Available online: https://www.smh.com.au/national/nsw/technical-issues-hobble-age-verification-for-same-day-alcohol-delivery-20220609-p5askj.html/ (accessed on 16 April 2023).

- eSafety Commissioner. Age Verification. 2023. Available online: https://www.esafety.gov.au/about-us/consultation-cooperation/age-verification/ (accessed on 16 April 2023).

- eSafety Commissioner. Restricted Access System. 2021. Available online: https://www.esafety.gov.au/about-us/consultation-cooperation/restricted-access-system (accessed on 16 April 2023).

- IT News. Google Rolling Out Age Verification on YouTube, Play Store in Australia. 2022. Available online: https://www.itnews.com.au/news/google-rolling-out-age-verification-on-youtube-play-store-in-australia-577499 (accessed on 16 April 2023).

- TextBlob. Tutorial: Quickstarts. 2022. Available online: https://textblob.readthedocs.io/en/dev/quickstart.html#sentiment-analysis (accessed on 2 August 2023).

- Kovalchuk, O.; Masonkova, M.; Banakh, S. The Dark Web Worldwide 2020: Anonymous vs. Safety. In Proceedings of the 2021 11th International Conference on Advanced Computer Information Technologies (ACIT), Deggendorf, Germany, 15–17 September 2021; pp. 526–530.

- Edelman. 2024 Edelman Trust Barometer. Available online: https://www.edelman.com/trust/2024/trust-barometer (accessed on 15 June 2024).

- Carnegie UK Trust. Loss of Public Trust in Government Is the Biggest Threat to Democracy in England. 2022. Available online: https://carnegieuktrust.org.uk/blog-posts/loss-of-public-trust-in-government-is-the-biggest-threat-to-democracy-in-england/ (accessed on 19 June 2024).

- IPPR. Revealed: Trust in Politicians at Lowest Level on Record. 2021. Available online: https://www.ippr.org/media-office/revealed-trust-in-politicians-at-lowest-level-on-record (accessed on 19 June 2024).

- Melissa. 2021. Available online: https://www.melissa.com/age-verification/ (accessed on 16 June 2021).

- Williams, R.S.; Phillips-Weiner, K.J.; Vincus, A.A. Age Verification and Online Sales of Little Cigars and Cigarillos to Minors. Tob. Regul. Sci. 2020, 6, 152–163.

| Method | Explanation | Purpose | Breadth | Depth of Process |
|---|---|---|---|---|
| Systematic Review | Carried out to produce an overview of primary studies with a specific set of objectives. It is conducted in a way that fosters reproducibility. | Summarise a body of research in a particular domain | Specific question | Meticulously documented, in-depth search for studies relevant to the specified question |
| Scoping Review | Overview of the key concepts underpinning a particular research domain | Uncover research activity and reveal gaps in research | Broad topic | Identify the boundaries of research in a domain |
| Evidence Mapping | The systematic organisation and illustration of a broad field of research evidence | Make a body of research easily accessible | Broad topic | Provide a description of the area being studied |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jarvie, C.; Renaud, K. Online Age Verification: Government Legislation, Supplier Responsibilization, and Public Perceptions. Children 2024, 11, 1068. https://doi.org/10.3390/children11091068
