Abstract

Remote methods of data collection allow researchers to quickly collect large amounts of data and offer several advantages over in-lab administration. We investigated the applicability of an online assessment of motor, cognitive, and communicative development in 4-month-old infants based on several items of the Bayley Scales of Infant and Toddler Development, 3rd edition (BSID-III). We chose a subset of items that were representative of typical developmental achievements at 4 months of age and that could be administered online, with the help of the infant’s caregiver, using materials easily available at home. In a sample of infants tested live (N = 18), the raw scores of the full BSID-III were significantly correlated with the raw scores of the subset of items corresponding to those administered to a sample of infants tested online (N = 53). Moreover, the raw scores of the online assessment in the “online” participants did not significantly differ from the corresponding scores of the “live” participants. These findings suggest that the online assessment was to some extent comparable to the live administration of the same items and thus represents a viable way to remotely evaluate infant development when in-person assessment is not possible.

1. Introduction

Online testing via videoconference offered a unique opportunity to continue developmental studies and clinical practice during the COVID-19 emergency. It allowed researchers and clinicians to overcome the social restrictions imposed by the pandemic and to enhance accessibility and support for families [1,2,3]. The use of technology in healthcare, including synchronous video consultation, reduces costs and improves access to health services [4], and these benefits may well extend beyond the pandemic emergency.

Online methodologies are also becoming common practice in psychological research to obtain large amounts of data [5,6,7,8] and to reach people who might not be able to participate in laboratory settings [9]. They are flexible and convenient to implement, as they allow fast data collection [10], increase sample diversity, and facilitate longitudinal research [11], features that are not always easy to achieve with in-person testing. In addition, researchers can observe children in a familiar, more naturalistic environment. Nonetheless, online data collection can also be challenging: low-income families may be disadvantaged, as they are likely to have fewer technological devices at home, and privacy and data security must be safeguarded. Moreover, when online assessments involve children younger than two years, it can be difficult to ensure the standardization of the procedures, especially when the tasks are administered by the child’s caregiver [12], and to clearly observe the child’s non-verbal reactions to the items.

Over the last two years, online developmental studies investigating a broad range of topics have flourished [13]. The findings obtained through remote testing of preschool children have generally been promising: reaction-time data from 1- to 4-year-old children in a word-recognition paradigm administered online compared favorably with eye tracking and an in-person storybook approach [14]. Likewise, 3- and 4-year-old children’s performance in the change-of-location false belief task was similar in lab and online test settings [15]. Moreover, 4- to 5-year-old children tested on a battery of eight standardized cognitive tasks (involving verbal comprehension, fluid reasoning, visual-spatial and working memory, attention and executive functioning, social perception, and numerical skills) performed similarly on most of these tasks regardless of whether they were tested live or online [16]. Additionally, online methods proved particularly useful for recording mealtimes of children younger than three years of age, allowing researchers to better preserve the ecological validity of the observations [17].

So far, reports on remote testing of infants have been fewer than those on older children, and most of these studies used a looking-time methodology, e.g., [18]. However, results are not always consistent with live testing, probably because of methodological constraints on stimulus presentation [19,20,21]. To our knowledge, only a few studies have attempted online motor development assessments in infants, either by remotely evaluating reaching and sitting skills in 3- to 5-month-old infants [22] or by assessing the feasibility for parents of making home video recordings of their infant for offline motor development assessments [23]. There is thus still little evidence on structured, standardized online neurodevelopmental assessments for use with infants.

In the present study, we aimed to test the extent to which a subset of items of the Bayley Scales of Infant and Toddler Development, 3rd edition (BSID-III) [24], could be administered online to obtain information about 4-month-old infants’ motor, cognitive, and communicative skills, for research purposes when in-person administration of the full scale is not feasible. The BSID-III is one of the most widely used tools for assessing the neurodevelopment of children aged zero to three years and is considered highly informative about motor, cognitive, and communicative abilities. Like other developmental assessment tools, the BSID-III is typically administered face-to-face by a qualified examiner in the presence of a caregiver. Specifically, we assessed (i) whether the subset of BSID-III items chosen for online administration was representative of the full BSID-III scale, by evaluating whether, in a sample of infants tested live, the raw scores of the subset were significantly correlated with the raw scores of the full BSID-III scale; and (ii) whether online administration of the subset was comparable to live administration of the same items, by evaluating whether the raw scores of the subset differed between the sample of infants tested online and the sample of infants tested live.

2. Materials and Methods

2.1. Participants

We tested a total of 71 full-term Italian infants at 4 months of age. Fifty-three infants were observed online (“online participants”, gestational age: M = 39.51 weeks, SD = 1.1; age: M = 4.11 months, SD = 0.217) and 18 infants were administered the BSID-III [24] at home (“live participants”, gestational age: M = 39.60 weeks, SD = 1.13, age: M = 4.19 months, SD = 0.15). Each infant was tested once, either online or live.

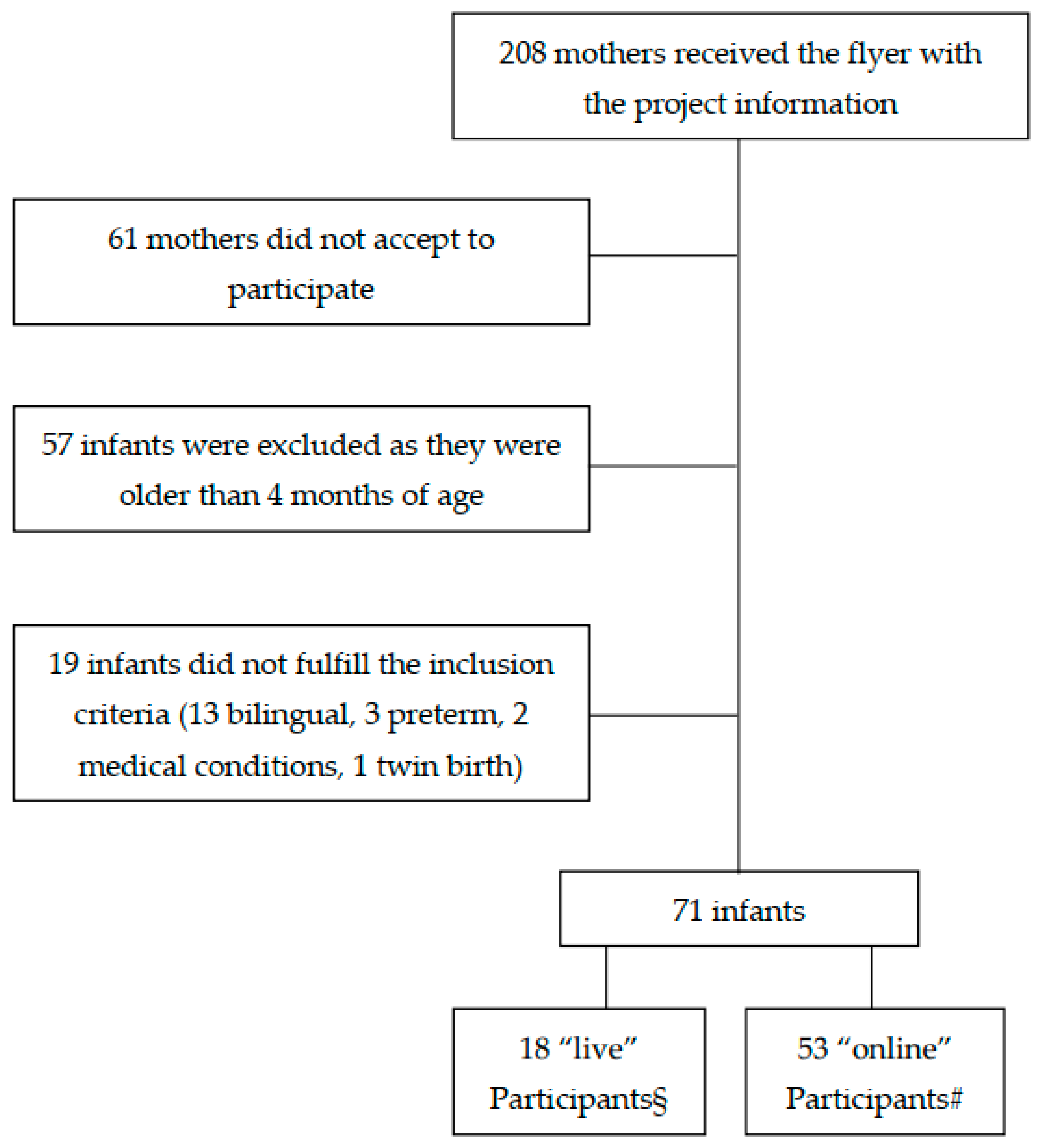

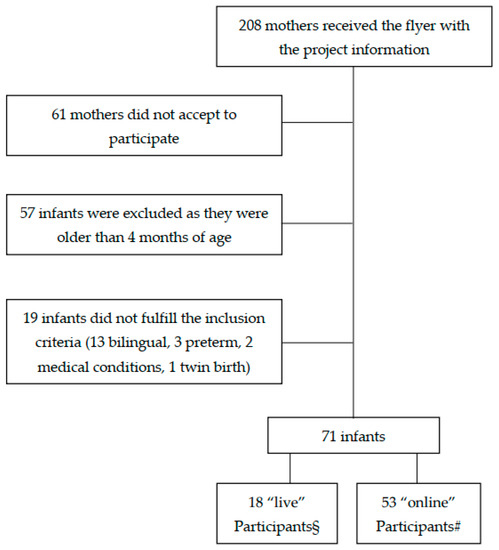

Both “online” and “live” participants were recruited during the mother’s pregnancy or soon after birth for a larger longitudinal research project on the development of infant feeding behavior. Recruitment was carried out through social media advertising, posters in pediatricians’ offices, and the newsletter of “UPPA Magazine”, a magazine addressed to parents. Infants born before 37 weeks of gestation, with congenital abnormalities or severe neurological deficits, from twin births, and/or systematically exposed to a language other than Italian were excluded from the sample. Parents who agreed to participate with their infant provided written informed consent to take part in the study and to be video recorded. Figure 1 shows a flow chart of infant recruitment. All procedures were approved by the Ethics board of the Department of Dynamic and Clinical Psychology and Health Studies of Sapienza University of Rome (Prot. n. 0000315, 14 April 2020 and n. 0001209, 15 December 2020). All data were collected over a period of about seven months.

Figure 1.

Flow chart of infant recruitment. § These participants were tested before COVID-19 restrictions were applied in Italy. # These participants were tested after COVID-19-related restrictions were applied.

2.2. Measures

We developed an online assessment of infant motor, cognitive, and communicative development based on a subset of items of the BSID-III [24]. The BSID-III provides a norm-referenced developmental index for each of the following domains: cognitive (COG), receptive language (RL), expressive language (EL), fine motor (FM), and gross motor (GM). For our online assessment, we selected a subset of items for each subscale on the basis of three criteria: (i) their representativeness of the typical developmental abilities at 4 months of age (the first item of each subscale was the item corresponding to the BSID-III start point for this age range, i.e., start point D); (ii) the availability at the infant’s home of materials as similar as possible to those used in the BSID-III; and (iii) the ease with which the caregiver could administer the item while taking care of the child. The final list of items administered online included, for each subscale: COG items 7, 8, 10, 14, 16, 17, 18, 21; RL items 3, 4, 5, 6, 8; EL items 1, 2, 3, 4, 5, 6; FM items 5, 6, 10, 11, 12, 13, 14; GM items 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 21.

Only the N = 53 “online” participants received the online assessment, carried out by an experienced examiner (as described in the “Procedure” section). For each item, we scored whether the infant showed (1) or did not show (0) a specific ability, based on the BSID-III manual. For some of the 53 “online” participants, a few items could not be included in the calculation of the raw score because of audio or video problems, internet connectivity issues, or, in very few cases, examiner errors. For each subscale, we included in the data analyses only those participants for whom all administered items could be scored: N = 46 for the COG subscale, N = 27 for the RL subscale, N = 46 for the EL subscale, N = 46 for the FM subscale, and N = 30 for the GM subscale. Most of the missing data for the RL and GM subscales concerned items that were sometimes difficult to observe or for the caregiver to administer (e.g., RL6 “Turns to the source of the sound at least one time” and GM13 “Keeps the head in balance”).
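The per-subscale inclusion rule described above (a participant enters a subscale’s analysis only if every administered item of that subscale could be scored) can be sketched as follows. This is an illustrative sketch, not the authors’ code: the record layout (item code mapped to 1, 0, or `None` for an unscoreable item), the function name `complete_case_raw_scores`, and the participant data are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical layout: item code -> 1 (ability shown), 0 (not shown),
# or None when the item could not be scored (audio/video, connectivity, ...).
ItemScores = Dict[str, Optional[int]]


def complete_case_raw_scores(
    participants: Dict[str, ItemScores], items: List[str]
) -> Dict[str, int]:
    """Raw subscale scores for participants whose listed items were all scored."""
    raw: Dict[str, int] = {}
    for pid, scores in participants.items():
        values = [scores.get(item) for item in items]  # missing key -> None
        if any(v is None for v in values):
            continue  # excluded from this subscale only
        raw[pid] = sum(values)  # count of items scored 1
    return raw


# Made-up example data for the RL items listed in the Measures section.
RL_ITEMS = ["RL3", "RL4", "RL5", "RL6", "RL8"]
online = {
    "p01": {"RL3": 1, "RL4": 1, "RL5": 0, "RL6": 1, "RL8": 0},
    "p02": {"RL3": 1, "RL4": None, "RL5": 1, "RL6": 1, "RL8": 0},
    "p03": {"RL3": 1, "RL4": 1, "RL5": 1},  # RL6 and RL8 never observed
}
print(complete_case_raw_scores(online, RL_ITEMS))  # {'p01': 3}
```

Because exclusion is applied separately for each subscale, the same infant can contribute to one subscale’s analysis but not another’s, which is why the Ns above differ across subscales.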

For the “live” participants (N = 18), the standard BSID-III was administered by a trained examiner during a single home visit. In addition to the total raw score computed for each subscale following the BSID-III manual rules, we also calculated raw scores considering only the subset of items chosen for remote administration to the “online” participants (i.e., by summing the items scored as “1” in each subscale).
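A minimal sketch of how the subset raw score can be extracted from a live participant’s full BSID-III record. The item lists are transcribed from the selection described in the Measures section; the record format and the function `subset_raw_score` are hypothetical, and counting an item absent from the record as 0 (for example, an item never reached because administration was discontinued) is an assumption of this sketch. The full-scale raw score itself, which follows the manual’s administration and scoring rules, is not reproduced here.

```python
from typing import Dict, List

# Items administered online, transcribed from the Measures section.
ONLINE_ITEMS: Dict[str, List[int]] = {
    "COG": [7, 8, 10, 14, 16, 17, 18, 21],
    "RL": [3, 4, 5, 6, 8],
    "EL": [1, 2, 3, 4, 5, 6],
    "FM": [5, 6, 10, 11, 12, 13, 14],
    "GM": [9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 21],
}


def subset_raw_score(full_record: Dict[int, int], subscale: str) -> int:
    """Sum the 0/1 scores of the online-subset items in one live subscale record.

    full_record maps item number -> 0/1 for a single subscale (hypothetical
    layout). Items absent from the record are counted as 0 (an assumption).
    """
    return sum(full_record.get(item, 0) for item in ONLINE_ITEMS[subscale])


# Made-up RL record for one live infant.
live_rl = {1: 1, 2: 1, 3: 1, 4: 1, 5: 0, 6: 1, 7: 0, 8: 0}
print(subset_raw_score(live_rl, "RL"))  # items 3, 4, 5, 6, 8 -> 3
print(sum(len(items) for items in ONLINE_ITEMS.values()))  # 38 items in total
```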

All mothers (N = 71) also completed a sociodemographic questionnaire.

2.3. Procedure

As reported in the “Measures” section, all child assessments were conducted by a qualified professional with a degree in developmental psychology who was trained to administer the BSID-III. Specifically, the “live” participants were administered the BSID-III at home by the examiner through observation and direct interaction with the child, whereas the “online” participants were assessed remotely through observations carried out via videoconference (Skype or Jitsi Meet) and recorded with Open Broadcaster Software (OBS) Studio for subsequent coding. A second observer scored 20% of the video clips; the index of concordance was 1 for all items except COG21, RL4, RL8, and EL6 (0.91) and RL6, EL2, FM11, FM13, GM17, and GM18 (0.82). Each online assessment was carried out during a single videoconference session while the child was at home, using the family’s own camera-equipped computer, phone, or tablet, and lasted approximately 25–30 min. To minimize interference, caregivers were instructed in advance by the experimenter about the materials to place at the infant’s disposal (including a book with big, colorful pictures, a ring, a rattle or other noisy object, and a small toy), and were asked to remove unnecessary toys and to be alone with the infant during the assessment. During the online assessment, parents were asked to sit on the floor next to the infant and to adjust the infant’s posture several times according to the examiner’s instructions. The examiner continuously guided the caregiver in administering the items and in introducing specific items during the session.
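The text does not specify how the index of concordance was computed. Assuming it is the simple proportion of double-coded clips on which the two observers gave the same 0/1 score, the reported values are consistent with about 11 double-coded clips (20% of 53 is roughly 10.6): one disagreement gives 10/11 ≈ 0.91 and two give 9/11 ≈ 0.82. This is an inference rather than a reported detail; the function name `proportion_agreement` and the codes below are hypothetical, and a chance-corrected index such as Cohen’s kappa would give different values.

```python
from typing import Sequence


def proportion_agreement(coder_a: Sequence[int], coder_b: Sequence[int]) -> float:
    """Share of double-coded clips on which two observers gave the same score."""
    if not coder_a or len(coder_a) != len(coder_b):
        raise ValueError("codings must be non-empty and of equal length")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    return agreements / len(coder_a)


# Hypothetical 0/1 codes for one item across 11 double-coded clips.
first = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1]
second_one_diff = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0]
second_two_diff = [1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0]
print(round(proportion_agreement(first, second_one_diff), 2))  # 0.91
print(round(proportion_agreement(first, second_two_diff), 2))  # 0.82
```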

2.4. Data Analyses

First, for the “live” participants, we computed Spearman correlations, for each subscale, between the raw score of the full BSID-III and the raw score of the subset of items corresponding to those administered remotely to the “online” participants. Second, we compared “online” and “live” participants on demographic characteristics and on the raw scores of the subset of BSID-III items, either administered remotely (“online” participants) or extracted from the full scale (“live” participants). We used the chi-squared test to assess differences in categorical variables. For quantitative variables, we first tested the homogeneity of variances with Levene’s test; we then assessed group differences with Student’s t-test or, in case of heterogeneous variances, Welch’s t-test.
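The two analysis steps above can be sketched in plain Python as a reading aid. This is not the authors’ analysis code: the function names are hypothetical, ties are handled with average ranks (the usual convention for Spearman’s rs), and p-values are deliberately omitted. In practice, statistics and p-values would typically come from a package; in SciPy, for example, `scipy.stats.spearmanr`, `scipy.stats.levene` (which centers on the median by default), and `scipy.stats.ttest_ind` with `equal_var=False` for Welch’s test. The text does not state which software or Levene variant was used.

```python
from math import sqrt
from statistics import mean, variance
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend over a run of ties
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rs(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rs: Pearson correlation computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


def two_sample_t(
    a: Sequence[float], b: Sequence[float], equal_var: bool
) -> Tuple[float, float]:
    """(t, df): Student's pooled-variance t if equal_var, else Welch's t."""
    n1, n2 = len(a), len(b)
    v1, v2 = variance(a), variance(b)  # sample variances (n - 1 denominator)
    diff = mean(a) - mean(b)
    if equal_var:
        pooled = ((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)
        return diff / sqrt(pooled * (1 / n1 + 1 / n2)), float(n1 + n2 - 2)
    s1, s2 = v1 / n1, v2 / n2
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (s1 + s2) ** 2 / (s1 ** 2 / (n1 - 1) + s2 ** 2 / (n2 - 1))
    return diff / sqrt(s1 + s2), df


print(round(spearman_rs([1, 2, 2, 3], [1, 2, 3, 4]), 4))  # 0.9487
print(two_sample_t([1, 2, 3], [2, 3, 4], equal_var=True))
```

Here `equal_var` would be set from Levene’s test, for instance `False` when its p-value falls below the chosen alpha.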

3. Results

Among “live” participants, for each subscale, the raw score of the full BSID-III was significantly correlated with the raw score obtained by summing the scores of the items corresponding to the subset administered remotely to the “online” participants (cognitive: rs = 0.549, p = 0.018; receptive language: rs = 0.609, p = 0.007; expressive language: rs = 0.839, p < 0.001; fine motor: rs = 0.940, p < 0.001; gross motor: rs = 0.984, p < 0.001). This suggests that the subset of BSID-III items chosen for online administration was representative of the full BSID-III scale administered to the participants tested in person.

As reported in Table 1, “online” and “live” participants did not significantly differ in demographic characteristics such as age, gender, number of siblings in the household, birthweight for gestational age, level of maternal education, or income. Moreover, as reported in Table 2, “online” and “live” participants did not significantly differ in the raw scores of the subset of BSID-III items administered remotely to the “online” participants (which were extracted from the full BSID-III scale for the “live” participants). This suggests that the online administration of a subset of BSID-III items was comparable to the live administration of the same items.

Table 1.

The table reports the descriptive values for the demographic variables for each group of participants (tested “online” or “live”) and the statistical values (Student’s t-test or chi-squared test) for the comparisons between the two groups.

Table 2.

The table reports, for each subscale of the online developmental assessment, the descriptive values of the raw scores of the subset of BSID-III items administered remotely to the “online” participants and of the same subset administered in person to the “live” participants, along with the statistics for the comparisons between the two groups (Levene’s test, Student’s t-test, and observed and minimum detectable Cohen’s d).
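Table 2 reports observed and minimum detectable Cohen’s d without stating how the latter was obtained. A common approach, assumed here, is a sensitivity analysis: the smallest d that a two-sided two-sample test could detect with the given group sizes, alpha, and power. The sketch below uses the pooled-SD definition of d and a normal approximation, d_min ≈ (z(1 − α/2) + z(power)) × √(1/n1 + 1/n2). The 80% power level, the function names, and the approximation are assumptions; exact t-based calculations give slightly larger values, so these numbers need not match Table 2.

```python
from math import sqrt
from statistics import NormalDist, mean, variance
from typing import Sequence


def cohens_d(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean difference divided by the pooled standard deviation."""
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var)


def min_detectable_d(n1: int, n2: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Normal-approximation sensitivity analysis for a two-sided two-sample test."""
    z = NormalDist()  # standard normal distribution
    return (z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) * sqrt(1 / n1 + 1 / n2)


# Group sizes for the cognitive subscale: 46 online vs. 18 live infants.
print(round(min_detectable_d(46, 18), 2))  # 0.78
```

Under these assumptions, subscales with fewer complete online cases (RL and GM) have a larger minimum detectable d, so a non-significant difference there rules out only larger effects.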

4. Discussion

In this study, we aimed to assess whether (i) a subset of BSID-III items chosen for online administration was representative of the full BSID-III scale and (ii) the online assessment of this subset was comparable to the live administration of the same items. For “live” participants (who were administered the full BSID-III at home), we found significant correlations between the raw scores of each BSID-III subscale and the raw scores of the subset of items corresponding to those administered remotely to the “online” participants. Moreover, the raw scores obtained for each subscale in the “online” sample did not significantly differ from the corresponding scores computed for the “live” sample, and the two samples did not differ in sociodemographic characteristics known to potentially affect BSID-III scores. These results suggest that the BSID-III items administered during the online assessment are somewhat representative of the full BSID-III subscales, at least for providing a qualitative assessment of child development, and that the live and online administration of this subset of items were to some extent comparable.

To our knowledge, our study represents the first attempt to remotely assess infants’ neurodevelopment across multiple domains. Previous online studies involving infants and young children mainly collected data on single abilities (such as visual preference, behavioral responses to animations, performance in cognitive tasks, or motor development; e.g., [14,15,16,22]). Strengths of our methodology included the possibility of collecting reasonably large observational datasets on the very early phases of motor, cognitive, and communicative development in a relatively short period of time, as also observed in other studies using online methods to evaluate different aspects of children’s development, e.g., [25]. Additionally, observing the child remotely at home (rather than in a laboratory or clinical setting) may help preserve the ecological validity of the data when evaluating some of the abilities targeted in the present study, such as early spontaneous communication [26]. Online assessments also offer the advantage that parents and examiners can easily postpone a session, if needed, and reschedule it when the infant is in a quiet alert state, which is fundamental for optimal performance in perceptual, cognitive, and motor domains [27].

Like other studies using online methods to assess children’s development, e.g., [13,27,28], the present study has some limitations. Although collecting data via live video connection was easily manageable for both the examiner and the families, data quality was sometimes affected by this procedure. For instance, technological difficulties (e.g., audio or video problems) and internet connectivity issues forced us to exclude some observations. Moreover, since the study took place at participants’ homes, the environmental context differed for each participant, and some interfering factors, including furniture arrangement, lighting conditions, the presence of potentially distracting objects, and the need for the caregiver to manage the setting (e.g., positioning the digital device, adjusting the child’s posture), may have influenced the quality of the observations. Furthermore, the fact that parents directly administered the items under the examiner’s guidance may have increased the variability of the assessment. Additionally, our online assessment may become more difficult to implement as children get older and become capable of independent locomotion, especially when one parent is alone at home with the child and needs to administer the items and film the child simultaneously.

Nonetheless, our exploratory study provides some evidence that online methodologies are a viable alternative for assessing infants’ neurodevelopmental abilities, by administering a subset of BSID-III items, when in-person assessment is not feasible. This online assessment should be limited to research purposes. Indeed, a neurodevelopmental assessment carried out for clinical purposes requires a face-to-face visit, as motor, cognitive, and language subtests cannot be administered in a standardized format via telepractice. In this respect, for the recent fourth edition of the Bayley Scales of Infant and Toddler Development (BSID-IV), the publisher provides guidance for observing children via telepractice by reviewing the BSID-IV items at the age-appropriate start point. Still, since the Bayley Scales are not standardized for telepractice, examiners are advised to treat these data with caution [29], as only qualitative information on children’s motor, cognitive, and communicative skills can be obtained and standard scores cannot be computed.

Finally, due to COVID-19 restrictions we could test only a small sample of children live and could not test the same children both live and online. This should be the goal of future studies, in order to fully evaluate the robustness of our online assessment.

5. Conclusions

In the present study, we assessed the feasibility of an online assessment of motor, cognitive, and communicative development in 4-month-old infants based on several items of the Bayley Scales of Infant and Toddler Development, 3rd edition, for research use when in-person administration is not possible.

Our online assessment was to some extent comparable to the live administration of the same items and thus represents a viable way to remotely evaluate infant development and to gather early information about motor, cognitive, and communicative skills. Indeed, (i) for live participants, there were significant correlations between the raw scores of each full BSID-III subscale and the raw scores of the subset of items corresponding to those administered remotely to the “online” participants, and (ii) the raw scores of the subset of BSID-III items administered online did not significantly differ from the corresponding scores obtained in the sample of infants tested live.

Thus, despite some limitations, the large amount of infant data collected during the period of social restrictions imposed by COVID-19, the valuable developmental information obtained for the motor, cognitive, and communicative domains, and the minimal cost of implementing this procedure highlight the value of this online methodology for future investigations, especially when a live developmental assessment is not possible.

Author Contributions

Conceptualization, C.G., B.C., F.B. and E.A.; data curation, S.G.; formal analysis, F.C. and E.A.; funding acquisition, B.C., F.B. and E.A.; investigation, C.G., V.F., M.P. and G.P.; methodology, C.G., B.C., F.B. and E.A.; project administration, B.C., F.B. and E.A.; supervision, B.C., F.B. and E.A.; writing—original draft, C.G. and E.A.; writing—review and editing, C.G., B.C., V.F., M.P., G.P., F.B., F.C., S.G. and E.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Italian Ministry of Education, University and Research, Progetti di Rilevante Interesse Nazionale [PRIN 2017], grant number 2017WH8B84, which also covered the APC.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics board of the Department of Dynamic and Clinical Psychology and Health Studies of Sapienza University of Rome (Prot. n. 0000315, 14 April 2020 and n. 0001209, 15 December 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The full dataset is available at the following link: https://osf.io/ug2qm/?view_only=6fa07776ebbd4aa491d90378f40bd252 (accessed on 8 February 2022).

Acknowledgments

For their essential support during the recruitment, we are grateful to: Associazione Culturale Pediatri, Sergio Conti Nibali, Lorenzo Calia, and Giulia Basili of “UPPA Magazine”, Opera Nazionale Montessori, Sara Bettacchini (Associazione Culturale “Nate dalla Luna”), Bruna Sciotti and Tiziana Notarantonio (Centro Medico Pediatrico La Stella), Emilia Alvaro, Franco De Luca, Stefano Falcone, Francesco Gesualdo, Lucio Piermarini, Laura Reali, Paloma Boni, Elisa De Filippi, Stefania Grande, Chiara Guarino, Luigi Macchitella, Ada Maffei, Vito Minonne, Federico Sale, Giovanna Vitelli, Associazione “Millemamme”, and Autosvezzamento.it. We warmly thank all the parents and the children who participated in the study: without their continuous cooperation this study would not have been possible.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Badawy, S.M.; Radovic, A. Digital approaches to remote pediatric health care delivery during the COVID-19 pandemic: Existing evidence and a call for further research. JMIR Pediatr. Parent. 2020, 3, e20049.

2. Galway, N.; Stewart, G.; Maskery, J.; Bourke, T.; Lundy, C.T. Fifteen-minute consultation: A practical approach to remote consultations for paediatric patients during the COVID-19 pandemic. Arch. Dis. Child.-Educ. Pract. 2020, 106, 206–209.

3. Wall-Haas, C.L. Connect, engage: Televisits for children with asthma during COVID-19 and after. J. Nurse Pract. 2021, 17, 293–298.

4. Bokolo, A., Jnr. Implications of telehealth and digital care solutions during COVID-19 pandemic: A qualitative literature review. Inform. Health Soc. Care 2021, 46, 68–83.

5. Adjerid, I.; Kelley, K. Big data in psychology: A framework for research advancement. Am. Psychol. 2018, 73, 899–917.

6. van Gelder, M.M.H.J.; Merkus, P.J.F.M.; van Drongelen, J.; Swarts, J.W.; van de Belt, T.H.; Roeleveld, N. The PRIDE study: Evaluation of online methods of data collection. Paediatr. Perinat. Epidemiol. 2020, 34, 484–494.

7. Tran, M.; Cabral, L.; Patel, R.; Cusack, R. Online recruitment and testing of infants with Mechanical Turk. J. Exp. Child Psychol. 2017, 156, 168–178.

8. Rhodes, M.; Rizzo, M.; Foster-Hanson, E.; Moty, K.; Leshin, R.; Wang, M.M.; Ocampo, J.D. Advancing developmental science via unmoderated remote research with children. J. Cogn. Dev. 2020, 21, 477–493.

9. Birnbaum, M.H. Human research and data collection via the internet. Annu. Rev. Psychol. 2004, 55, 803–832.

10. Semmelmann, K.; Hönekopp, A.; Weigelt, S. Looking tasks online: Utilizing webcams to collect video data from home. Front. Psychol. 2017, 8, 1582.

11. Sheskin, M.; Scott, K.; Mills, C.M.; Bergelson, E.; Bonawitz, E.; Spelke, E.S.; Fei-Fei, L.; Keil, F.C.; Gweon, H.; Tenenbaum, J.B.; et al. Online developmental science to foster innovation, access, and impact. Trends Cogn. Sci. 2020, 24, 675–678.

12. Sheskin, M.; Keil, F. TheChildLab.com: A Video Chat Platform for Developmental Research; TheChildLab.com: New Haven, CT, USA, 2018.

13. Zaadnoordijk, L.; Buckler, H.; Cusack, R.; Tsuji, S.; Bergmann, C. A global perspective on testing infants online: Introducing ManyBabies-AtHome. Front. Psychol. 2021, 12, 703234.

14. Frank, M.C.; Sugarman, E.; Horowitz, A.C.; Lewis, M.L.; Yurovsky, D. Using tablets to collect data from young children. J. Cogn. Dev. 2016, 17, 1–17.

15. Schidelko, L.P.; Schünemann, B.; Rakoczy, H.; Proft, M. Online testing yields the same results as lab testing: A validation study with the false belief task. Front. Psychol. 2021, 12, 703238.

16. Nelson, P.M.; Scheiber, F.; Laughlin, H.M.; Demir-Lira, Ö. Comparing face-to-face and online data collection methods in preterm and full-term children: An exploratory study. Front. Psychol. 2021, 12, 733192.

17. Venkatesh, S.; DeJesus, J.M. Studying children’s eating at home: Using synchronous videoconference sessions to adapt to COVID-19 and beyond. Front. Psychol. 2021, 12, 703373.

18. Scott, K.; Chu, J.; Schulz, L. Lookit (part 2): Assessing the viability of online developmental research, results from three case studies. Open Mind 2017, 1, 15–29.

19. Smith-Flores, A.S.; Perez, J.; Zhang, M.H.; Feigenson, L. Online measures of looking and learning in infancy. Infancy 2022, 27, 4–24.

20. Bochynska, A.; Dillon, M.R. Bringing home baby Euclid: Testing infants’ basic shape discrimination online. Front. Psychol. 2021, 12, 734592.

21. Bánki, A.; de Eccher, M.; Falschlehner, L.; Hoehl, S.; Markova, G. Comparing online webcam- and laboratory-based eye-tracking for the assessment of infants’ audio-visual synchrony perception. Front. Psychol. 2022, 12, 733933.

22. Libertus, K.; Violi, D.A. Sit to talk: Relation between motor skills and language development in infancy. Front. Psychol. 2016, 7, 475.

23. Boonzaaijer, M.; van Wesel, F.; Nuysink, J.; Volman, M.J.; Jongmans, M.J. A home-video method to assess infant gross motor development: Parent perspectives on feasibility. BMC Pediatr. 2019, 19, 392.

24. Bayley, N. Bayley Scales of Infant and Toddler Development, 3rd ed.; Bayley-III; The Psychological Corporation: San Antonio, TX, USA, 2006.

25. Shields, M.M.; McGinnis, M.N.; Selmeczy, D. Remote research methods: Considerations for work with children. Front. Psychol. 2021, 12, 703706.

26. Manning, B.L.; Harpole, A.; Harriott, E.M.; Postolowicz, K.; Norton, E.S. Taking language samples home: Feasibility, reliability, and validity of child language samples conducted remotely with video chat versus in-person. J. Speech Lang. Hear. Res. 2020, 63, 3982–3990.

27. Lamb, M.E.; Bornstein, M.H.; Teti, D.M. Development in Infancy: An Introduction; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2002.

28. Chuey, A.; Asaba, M.; Bridgers, S.; Carrillo, B.; Dietz, G.; Garcia, T.; Leonard, J.A.; Liu, S.; Merrick, M.; Radwan, S.; et al. Moderated online data-collection for developmental research: Methods and replications. Front. Psychol. 2021, 12, 734398.

29. Pearson Edition. Administering the Bayley Scales of Infant and Toddler Development, 4th ed.; Bayley-4 Via Telepractice. Available online: https://www.pearsonassessments.com/content/school/global/clinical/us/en/professional-assessments/digital-solutions/telepractice/telepractice-and-the-bayley-4.html (accessed on 28 February 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).