Abstract

With the increasing use of large language model (LLM)-based AI tools in modern learning environments, it is important to understand students’ motivations, experiences, and contextual influences. These tools offer learners new forms of support, enhancing academic achievement and providing valuable resources, but their use also raises ethical and social issues. In this context, this study aims to systematically identify the factors influencing undergraduates’ intentions to use text-based GenAI tools. A survey measuring GenAI users’ intentions was designed by extending the core variables of the Unified Theory of Acceptance and Use of Technology (UTAUT) with AI literacy. The survey, conducted among business students at a university in South Korea, gathered 239 responses during March and April 2024. Data were analyzed using Partial Least Squares Structural Equation Modeling (PLS-SEM) with SmartPLS software (Ver. 4.0.9.6). The findings reveal that performance expectancy significantly affects the intention to use GenAI, whereas effort expectancy does not. In addition, AI literacy and social influence significantly influence performance expectancy, effort expectancy, and the intention to use GenAI. This study provides insights into the determinants of GenAI usage intentions, aiding the development of effective educational strategies and policies that support ethical and beneficial AI use in academic settings.

1. Introduction

In modern learning environments, the use of large language model-based AI tools, particularly Generative AI (GenAI) models specialized in text generation, has increased significantly. These tools are recognized as valuable resources that provide new dimensions of support for learners, potentially enhancing academic achievement [1,2,3,4]. Among the various GenAI tools, text-based AI, especially ChatGPT, has been actively introduced [2,5] and used in various academic fields, such as language, tourism, and health education [6,7,8]. On the practical side, GenAI tools facilitate personalized learning experiences and assist in complex problem-solving tasks [9]. For example, platforms like LinkedIn Learning (linkedin.com/learning) and Skillshare (skillshare.com) use GenAI to tailor business and economics courses to individual learning goals and career aspirations. A business student aiming to specialize in digital marketing might receive a personalized learning path that includes courses, case studies, and industry insights tailored to the latest trends in digital marketing.

However, the use of these tools is not without challenges. As learners in various disciplines increasingly use large language models like ChatGPT, issues such as academic dishonesty, copyright infringement, and biased information generation become more pronounced [10]. In particular, AI-generated biased information can perpetuate existing stereotypes and misinformation [11,12]. Consequently, many researchers emphasize AI literacy in education, which involves not just the technical use of AI tools but also an understanding of their capabilities and limitations, critical evaluation of their outputs, and the responsible use of these technologies for learning [13,14,15,16,17,18].

In this context, this study aims to systematically understand the diverse factors influencing undergraduates’ intentions to use text-based AI tools like ChatGPT for learning purposes. To achieve this, the study incorporates the Unified Theory of Acceptance and Use of Technology (UTAUT) model [19], along with additional variables related to AI literacy. AI literacy encompasses the capabilities required to use artificial intelligence technology effectively [15,20,21], including understanding AI technology, using it appropriately, and critically evaluating its outputs. Enhancing AI literacy is crucial for ensuring that students can interact responsibly with AI systems [18,20,21]. This study explores how the UTAUT constructs of performance expectancy, effort expectancy, and social influence, together with AI literacy, shape students’ intentions to use text-based GenAI tools. This understanding can provide insight into students’ motivations and experiences when using AI tools, contributing to more effective application and management of these tools in future educational settings [22].

The remainder of this paper is organized as follows. Section 2 reviews the literature on the determinants of the intention to use text-based GenAI for learning, formulates the research hypotheses, and presents the research model. Section 3 describes the research methodology, including the survey design and data collection processes. Section 4 presents the measurement validation, descriptive statistics, and hypothesis-testing results, interpreting them in the context of the existing literature. Section 5 concludes with the theoretical, practical, and policy implications of the findings, acknowledges the study’s limitations, and outlines opportunities for future research on the educational impacts of AI in learning environments.

2. Literature Review and Research Hypotheses

2.1. Text-Based GenAI in Education and AI Literacy

The adoption of text-based GenAI tools in educational environments has significantly increased, providing enhanced support mechanisms for learners [3]. Text-based GenAI utilizes large language models to generate, analyze, and translate natural language. A notable example is OpenAI’s GPT-3, which can generate new text based on vast amounts of training data and given inputs. The primary functions of text-based GenAI include text generation, summarization, translation, question answering, and conversation generation. These tools can be used in various ways within educational settings, offering new learning experiences for students and more efficient teaching tools for educators [11]. For instance, OpenAI’s ChatGPT can act as an interactive learning assistant, answering students’ questions, explaining concepts, and providing learning materials in virtual classrooms. This personalized support can enhance learning efficiency by allowing students to receive assistance and answers at any time. Such tools are praised for providing personalized support, facilitating complex problem-solving, and improving overall academic performance [9].

However, the use of such advanced AI technologies in education presents challenges as well as opportunities [11,22,23,24]. Recently, there has been an increase in instances where students misuse text-based GenAI tools for assignments and exams [10]. For example, students might submit AI-generated text as their own essays, which undermines the true purpose of learning and hinders genuine academic growth. When AI tools generate new text based on existing copyrighted materials, issues of copyright infringement can arise [25]. If the AI models are trained on biased data, the generated text may also contain biased information, reinforcing stereotypes and spreading misinformation [12]. Over-reliance on AI tools can diminish students’ critical thinking and creative problem-solving skills, potentially degrading their learning abilities and academic achievements over time. Furthermore, the gap in access to AI tools can widen educational inequalities, exacerbating social disparities [11].

In this context, AI literacy becomes crucial for students using ChatGPT for learning [15]. AI literacy is a relatively new area of study focused on what abilities people need to live, learn, and work successfully in a world increasingly shaped by AI-driven technologies [26]. AI literacy is the ability to understand, use, evaluate, and ethically engage with AI technologies [20].

Above all, AI literacy equips students with an understanding of how AI tools work, enabling them to use these technologies effectively and responsibly [20]. Students who comprehend the underlying mechanisms of AI are better positioned to critically evaluate the outputs generated by tools like ChatGPT, distinguishing between reliable information and potential misinformation [18]. This critical evaluation is essential in preventing the spread of biased or inaccurate information [21]. For example, a student with higher AI literacy might be more likely to discern when ChatGPT is a suitable tool for learning (e.g., brainstorming ideas, getting language help) versus when other approaches might be more effective (e.g., in-depth research, critical analysis).

Furthermore, AI literacy helps students appreciate the ethical considerations associated with using AI [18,26]. They become aware of issues such as academic dishonesty, copyright infringement, and data privacy. This awareness encourages students to use AI tools in a manner that upholds academic integrity and respects intellectual property rights. By understanding the ethical implications, students can avoid misusing AI-generated content and adhere to standards that promote genuine learning.

Last, AI literacy can foster greater acceptance of AI technologies [18,21]. Students with higher AI literacy might be more open to exploring and experimenting with AI tools like ChatGPT for learning purposes. They might feel more confident in their ability to use and navigate such technologies effectively. Moreover, fostering AI literacy can mitigate the risks of over-reliance on AI tools. Students who are literate in AI are more likely to use these tools as supplements to their learning rather than replacements for their own critical thinking and problem-solving efforts. This balanced approach ensures that AI tools enhance rather than hinder the development of essential cognitive skills. Additionally, promoting AI literacy helps address educational inequalities by providing all students with the skills needed to navigate and leverage AI technologies. This can bridge the gap between those with easy access to AI tools and those without, fostering a more inclusive and equitable educational environment.

Therefore, this study aims to explore the factors driving students to use text-based GenAI in educational environments by integrating AI literacy into the Unified Theory of Acceptance and Use of Technology (UTAUT). This integration allows a more nuanced understanding of the factors that influence students’ use of AI tools. The expanded model helps identify how the core variables of UTAUT interact with AI literacy to affect students’ intentions to use AI tools. Understanding these interactions can inform the development of educational strategies and policies that support the ethical and effective use of AI in learning environments.

2.2. Research Hypotheses

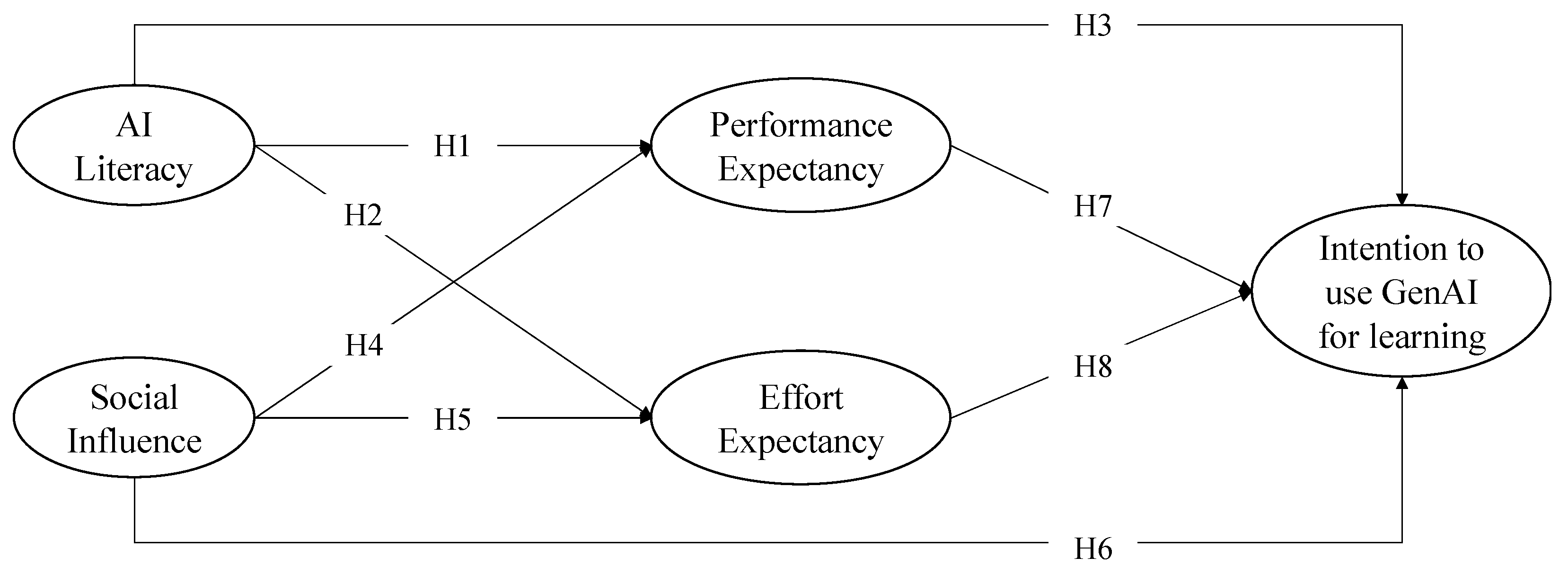

The study developed an enhanced research framework by incorporating AI literacy into the UTAUT model. This enhanced research model, presented in Figure 1, suggests that the intention to use text-based GenAI for learning is influenced by four key factors: performance expectancy, effort expectancy, social influence, and AI literacy.

Figure 1.

Research model.

Drawing from the theoretical definitions delineated by [19], the intention to use text-based GenAI for learning was defined as the degree to which a student plans or intends to use text-based GenAI tools, such as ChatGPT, for legitimate educational purposes within an academic environment. This definition explicitly excludes any form of academic dishonesty, ensuring that the focus is on responsible and ethical use of these tools for learning activities, such as having concepts explained or generating practice questions. Social influence was defined as the degree to which students perceive that important others (e.g., peers, instructors, family members) believe they should use text-based GenAI tools for their learning activities. Performance expectancy was defined as the degree to which students believe that using text-based GenAI tools will help them achieve better academic performance and improve their learning outcomes. Effort expectancy was defined as the degree to which students perceive that using text-based GenAI tools will be easy and require minimal effort.

In the extended components of the research model, AI literacy was assumed to exert direct influences on performance expectancy, effort expectancy, and intention to use text-based GenAI for learning. AI literacy was defined as the competence required to use artificial intelligence technology effectively [15,20,21]. This includes understanding AI technologies, utilizing them appropriately, and critically evaluating their outputs for learning.

When students have a higher level of AI literacy, they are more likely to recognize the potential benefits and capabilities of text-based GenAI tools such as ChatGPT in educational settings. This awareness can raise their performance expectancy regarding these tools. Additionally, students with greater AI literacy are likely to find text-based GenAI tools easier to use because they better understand how these tools function and how to interact with them effectively. Consequently, students with high AI literacy are more likely to appreciate the value and benefits of text-based GenAI tools, increasing their intention to use these tools for learning purposes. Based on this discussion, the following hypotheses were formulated:

H1.

AI literacy is positively related to performance expectancy for GenAI use for learning.

H2.

AI literacy is positively related to effort expectancy for GenAI use for learning.

H3.

AI literacy is positively related to intention to use GenAI for learning.

When students perceive positive social pressure or encouragement from peers, instructors, or family members to use text-based GenAI tools, it can strengthen their beliefs about the performance benefits of these tools. Moreover, encouragement and support from their social circle can reduce the perceived effort required to use text-based GenAI tools, as students feel more confident and supported in their usage. Such social encouragement can also directly enhance their intention to use text-based GenAI tools. Hence, the following hypotheses were developed:

H4.

Social influence is positively related to performance expectancy for GenAI use for learning.

H5.

Social influence is positively related to effort expectancy for GenAI use for learning.

H6.

Social influence is positively related to intention to use GenAI for learning.

If students think that text-based GenAI tools will improve their academic performance, they are more likely to intend to use these tools. This stems from the perceived usefulness of the tools in enhancing their learning outcomes, such as better grades, more efficient study processes, and a deeper understanding of the subject matter. When students recognize that these tools can provide significant academic benefits, their motivation to incorporate them into their study routines increases. Furthermore, when students perceive that text-based GenAI tools are easy to use, their intention to use these tools also rises. Ease of use involves the intuitive nature of the tools, user-friendly interfaces, and the minimal effort required to integrate these tools into their daily academic activities. If the tools are straightforward and do not require extensive technical knowledge or training, students are less likely to feel intimidated or frustrated by their use. As a result, the simplicity and accessibility of text-based GenAI tools make them more appealing to students, encouraging them to utilize these resources to support their educational goals. Consequently, the following hypotheses were formulated:

H7.

Performance expectancy is positively related to intention to use GenAI for learning.

H8.

Effort expectancy is positively related to intention to use GenAI for learning.
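For reference, hypotheses H1 to H8 can be written compactly as three linear structural equations. The notation is ours and is only a sketch. AIL, SI, PE, EE, and INT abbreviate AI literacy, social influence, performance expectancy, effort expectancy, and intention to use text-based GenAI for learning. Each coefficient β_k corresponds to hypothesis H_k, and ζ denotes the unexplained part of each endogenous construct.

```latex
\begin{aligned}
\mathrm{PE}  &= \beta_1\,\mathrm{AIL} + \beta_4\,\mathrm{SI} + \zeta_{\mathrm{PE}} \\
\mathrm{EE}  &= \beta_2\,\mathrm{AIL} + \beta_5\,\mathrm{SI} + \zeta_{\mathrm{EE}} \\
\mathrm{INT} &= \beta_3\,\mathrm{AIL} + \beta_6\,\mathrm{SI} + \beta_7\,\mathrm{PE} + \beta_8\,\mathrm{EE} + \zeta_{\mathrm{INT}}
\end{aligned}
\qquad \mathrm{H}_k:\ \beta_k > 0,\quad k = 1,\dots,8
```

Written this way, the model also implies indirect paths from AIL and SI to INT through PE and EE. For example, the indirect path from AIL through PE to INT is β_1β_7. The hypotheses themselves concern only the direct paths.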

3. Methodology

3.1. Research Instrument Development

This study assesses the empirical strength of the relationships in the proposed model using partial least squares structural equation modeling (PLS-SEM). The operational definitions of the five variables in the research model (performance expectancy, effort expectancy, social influence, AI literacy, and intention to use text-based GenAI for learning) were refined and extended to fit this study’s focus on ChatGPT, a GenAI tool specialized in text generation. Measurement items for these operational definitions were adapted from established items that have demonstrated reliability and validity in previous research. All items were measured on a 7-point Likert scale ranging from “Disagree strongly” (1) to “Agree strongly” (7). Table 1 provides a detailed description of the operational definitions and specific measures corresponding to each variable.

Table 1.

Survey items.

3.2. Sample and Data Collection

To validate the research model, an online survey was conducted using online survey software (Google Forms) over a period of two months, from 19 April to 7 May 2024. Respondents were male and female university students, from freshmen upward, who were aware of ChatGPT. Before the survey began, participants received a thorough explanation of the research objectives, and only those who demonstrated full comprehension of the study’s purpose and provided written consent were included. A total of 250 responses were collected, of which 238 valid responses were used for the final analysis after 12 responses were excluded because of non-responsiveness or multiple missing values. The respondents were evenly distributed by gender. Because the survey targeted university students, all participants were in their twenties and had completed high school as their highest level of education. The sample included students from various academic years. Most participants were freshmen, accounting for 73.9% (269 students) of the total sample. Sophomores represented 8.2% (30 students), juniors comprised 9.3% (34 students), and seniors made up 3.0% (11 students). This distribution reflects a higher representation of first-year students in the study.

4. Results

4.1. Survey Validation

The research model was evaluated through a comprehensive review of both the measurement and structural models. To ensure the soundness of the analytical outcomes, the standard protocols for PLS-SEM analysis detailed in [28] were followed. Factor loadings, composite reliability (CR), and average variance extracted (AVE) were used to evaluate the reliability and validity of the measurement model. All CR and AVE values exceeded the recommended thresholds of 0.70 and 0.50, respectively, indicating the robustness of the measurement model. Detailed findings of the construct reliability assessment are presented in Table 2.

Table 2.

The results of construct reliability.
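The CR and AVE criteria can be illustrated with the usual formulas based on the standardized outer loadings λ_i of a construct's k indicators: CR = (Σλ_i)² / [(Σλ_i)² + Σ(1 − λ_i²)] and AVE = Σλ_i² / k. The following is a minimal Python sketch under that assumption. The loadings are hypothetical and are not the values in Table 2, which were produced by SmartPLS and not by this code.

```python
def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum of loadings)^2 / [(sum of loadings)^2 + sum of error variances]."""
    total = sum(loadings)
    # With standardized loadings, each indicator's error variance is 1 - loading^2.
    error = sum(1.0 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings: list[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def meets_thresholds(loadings: list[float], cr_min: float = 0.70, ave_min: float = 0.50) -> bool:
    """Check both criteria against the conventional cut-offs."""
    return (composite_reliability(loadings) >= cr_min
            and average_variance_extracted(loadings) >= ave_min)


if __name__ == "__main__":
    hypothetical = [0.82, 0.78, 0.85, 0.80]  # illustrative loadings for one construct
    print(f"CR  = {composite_reliability(hypothetical):.3f}")
    print(f"AVE = {average_variance_extracted(hypothetical):.3f}")
    print("meets thresholds:", meets_thresholds(hypothetical))
```

With these illustrative loadings, CR is about 0.886 and AVE about 0.661, so both cut-offs are met. Three indicators loading at 0.50 would fail both criteria.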

To assess discriminant validity, cross-loading values were analyzed. Table 3 illustrates that each indicator’s loading on its corresponding construct was higher than its loadings on other constructs. This result confirms the distinctiveness of each construct, demonstrating that the indicators are more strongly correlated with their respective constructs than with others [29,30]. This finding reinforces the discriminant validity within the dataset, affirming that the constructs measure distinct aspects of the conceptual model.

Table 3.

The results of discriminant validity.
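The cross-loading criterion can be expressed as a simple check: each indicator must load more highly on the construct it is assigned to than on any other construct. The sketch below is illustrative only. The indicator names (AIL1, PE1, and so on) and all loading values are hypothetical and do not come from Table 3.

```python
def cross_loading_violations(
    loadings: dict[str, dict[str, float]],
    assignment: dict[str, str],
) -> list[str]:
    """Return indicators whose loading on their own construct is not the highest.

    loadings[indicator][construct] is the correlation between the indicator and
    the construct score; assignment[indicator] names the construct it measures.
    """
    violations = []
    for indicator, row in loadings.items():
        own_construct = assignment[indicator]
        own = row[own_construct]
        # Largest loading of this indicator on any other construct.
        other = max((v for c, v in row.items() if c != own_construct), default=float("-inf"))
        if own <= other:
            violations.append(indicator)
    return violations


# Hypothetical cross-loading matrix for two constructs plus intention (INT).
hypothetical_loadings = {
    "AIL1": {"AIL": 0.84, "PE": 0.31, "INT": 0.28},
    "AIL2": {"AIL": 0.79, "PE": 0.35, "INT": 0.30},
    "PE1": {"AIL": 0.29, "PE": 0.88, "INT": 0.52},
    "PE2": {"AIL": 0.33, "PE": 0.86, "INT": 0.49},
}
assignment = {"AIL1": "AIL", "AIL2": "AIL", "PE1": "PE", "PE2": "PE"}
print(cross_loading_violations(hypothetical_loadings, assignment))
```

An empty list means the criterion holds for every indicator. If PE2 instead loaded 0.90 on INT, it would be flagged.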

Table 4 displays the descriptive statistics and correlation analysis results for measurements including AI literacy, social influence, performance expectancy, effort expectancy, and intention to use text-based GenAI for learning.

Table 4.

Descriptive statistics and correlation analysis results.

4.2. Hypothesis Testing and Discussion

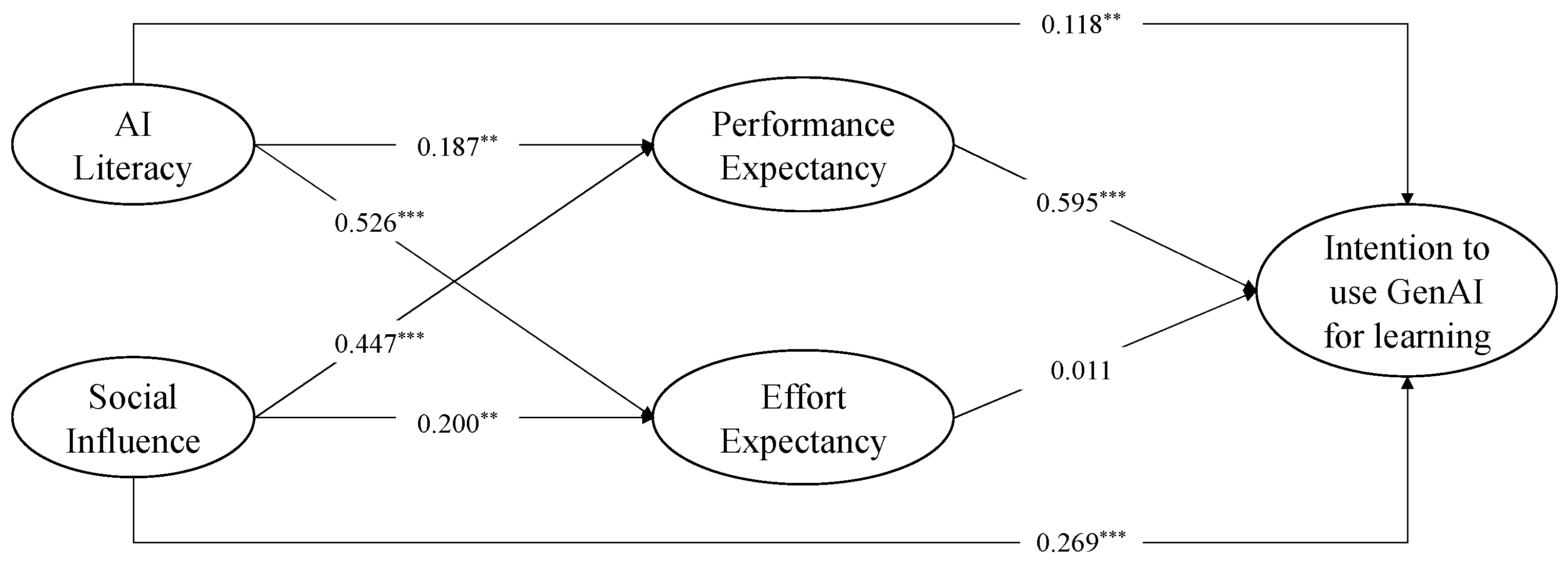

The hypotheses were tested through PLS-SEM, and the findings are illustrated in Figure 2. The results offer insight into the factors influencing university students’ intention to use text-based GenAI tools for learning. The model accounted for 34.9% of the variance (R² = 0.349) in the intention to use text-based GenAI for learning. Path analysis revealed significant relationships among AI literacy, performance expectancy, effort expectancy, social influence, and the intention to use text-based GenAI tools, with the exception of the path from effort expectancy to intention.

Figure 2.

Structural model results. Note: ** p < 0.01. *** p < 0.001.
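The path coefficients, R², and significance levels in Figure 2 were produced by SmartPLS. The sketch below illustrates only the underlying logic and assumes construct scores are already available. In the structural part of a PLS-SEM model, each endogenous construct is regressed on its predictors by ordinary least squares on standardized scores. R² is the share of variance explained, and significance is commonly assessed by bootstrapping, that is, by resampling respondents with replacement. This is not the SmartPLS implementation. The data are simulated, the weights used to simulate them and the 1,000 resamples are arbitrary choices, and the iterative PLS weighting of indicators is omitted.

```python
import numpy as np


def standardize(a: np.ndarray) -> np.ndarray:
    """Convert to z-scores column by column (sample standard deviation)."""
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)


def ols_paths(X: np.ndarray, y: np.ndarray) -> tuple[np.ndarray, float]:
    """Standardized OLS path coefficients and R^2; centering removes the intercept."""
    Xz, yz = standardize(X), standardize(y)
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    resid = yz - Xz @ beta
    r2 = 1.0 - float(resid @ resid) / float(yz @ yz)
    return beta, r2


def bootstrap_se(X: np.ndarray, y: np.ndarray, n_boot: int = 1000, seed: int = 0) -> np.ndarray:
    """Bootstrap standard errors: resample respondents and re-estimate the paths."""
    rng = np.random.default_rng(seed)
    n = len(y)
    draws = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)
        draws[b], _ = ols_paths(X[idx], y[idx])
    return draws.std(axis=0, ddof=1)


# Simulated (hypothetical) scores: columns are AIL, SI, PE, EE.
rng = np.random.default_rng(42)
n = 238
X = rng.standard_normal((n, 4))
true_weights = np.array([0.1, 0.3, 0.5, 0.0])  # arbitrary, for simulation only
y = X @ true_weights + rng.standard_normal(n)   # simulated intention scores

beta, r2 = ols_paths(X, y)
se = bootstrap_se(X, y)
t_stats = beta / se
for name, b, t in zip(["AIL", "SI", "PE", "EE"], beta, t_stats):
    # |t| > 2.576 corresponds to p < 0.01 (two-sided, normal approximation).
    print(f"{name} -> INT: beta = {b:.3f}, t = {t:.2f}")
print(f"R^2 = {r2:.3f}")
```

A single `lstsq` call per equation keeps the sketch short. The same function would be applied separately to the performance expectancy and effort expectancy equations.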

The path coefficient from AI literacy to performance expectancy (β = 0.187, p < 0.01) is statistically significant, confirming Hypothesis 1 (H1). This indicates that higher levels of AI literacy are associated with increased performance expectancy regarding the use of text-based GenAI tools. This finding aligns with previous research suggesting that a better understanding of AI technologies enhances students’ perceptions of their potential benefits [9]. When students are knowledgeable about AI, they are more likely to recognize the value these tools bring to their academic performance and thus to hold higher performance expectancy.

The significant path coefficient from AI literacy to effort expectancy (β = 0.526, p < 0.001) supports Hypothesis 2 (H2). This result suggests that students with higher AI literacy perceive text-based GenAI tools as easier to use. Familiarity and competence in using AI technologies likely reduce the perceived effort required to engage with these tools, making them more accessible and user-friendly. This is consistent with the findings of [18], who highlighted the role of AI literacy in lowering perceived complexity and effort.

The direct effect of AI literacy on the intention to use text-based GenAI for learning (β = 0.118, p < 0.01) supports Hypothesis 3 (H3). This suggests that students with higher AI literacy are more inclined to use text-based GenAI tools for their studies. Competence in understanding and utilizing AI technologies likely increases students’ willingness to integrate these tools into their learning processes, confirming the importance of AI literacy in technology adoption [18].

The positive and significant path coefficient from social influence to performance expectancy (β = 0.447, p < 0.001) confirms Hypothesis 4 (H4). This result indicates that social influence, such as encouragement from peers, instructors, and family members, positively impacts students’ beliefs about the performance benefits of text-based GenAI tools. Social encouragement likely enhances the perceived utility of these tools, leading to higher performance expectancy, as suggested by the UTAUT model [19].

Hypothesis 5 (H5) is also supported, with a significant path coefficient from social influence to effort expectancy (β = 0.200, p < 0.01). This finding implies that social influence positively affects the perceived ease of use of text-based GenAI tools. Support and encouragement from influential figures can boost students’ confidence and reduce the perceived effort associated with using these tools. This aligns with the findings of [31], who demonstrated that social influence significantly impacts perceived ease of use in the context of e-learning systems. Their study showed that when students received support and encouragement from peers and instructors, they felt more confident in using the technology, which, in turn, reduced the perceived effort required to navigate and utilize the e-learning platform effectively. Similarly, the positive reinforcement from influential figures in this study likely contributed to a reduced perception of effort associated with using GenAI tools.

The significant path coefficient from social influence to the intention to use GenAI (β = 0.269, p < 0.001) supports Hypothesis 6 (H6). This result indicates that social influence significantly enhances students’ intention to use text-based GenAI tools. The encouragement and approval from peers, instructors, and family members play a crucial role in motivating students to adopt these technologies, reinforcing the importance of social dynamics in technology acceptance.

The path coefficient from performance expectancy to the intention to use GenAI (β = 0.595, p < 0.001) is highly significant, validating Hypothesis 7 (H7). This result highlights that students who believe text-based GenAI tools will enhance their academic performance are more likely to intend to use these tools. This finding is consistent with the core premise of the UTAUT model, where performance expectancy is a critical determinant of technology acceptance [19].

Contrary to Hypothesis 8 (H8), the path coefficient from effort expectancy to the intention to use GenAI (β = 0.011, p = 0.844) is not significant. This suggests that perceived ease of use does not significantly influence the intention to use text-based GenAI tools among students. This finding deviates from the traditional UTAUT model, where effort expectancy is typically a significant predictor of intention. It may imply that for text-based GenAI tools, the perceived benefits (performance expectancy) outweigh the concerns about effort, as students might be more focused on the potential academic advantages.
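The reported coefficients also imply indirect effects, which help show why a non-significant effort expectancy path matters little for intention. In a recursive path model, the indirect effect through a mediator is the product of the coefficients along that path. The total effect is the direct effect plus all indirect effects. The sketch below computes these point estimates from the values in Figure 2. AIL, SI, PE, EE, and INT are our abbreviations for AI literacy, social influence, performance expectancy, effort expectancy, and intention. The sketch does not establish the significance of the indirect effects, which would require, for example, bootstrap confidence intervals.

```python
# Reported standardized path coefficients (Figure 2), keyed as (from, to).
PATHS = {
    ("AIL", "PE"): 0.187, ("AIL", "EE"): 0.526, ("AIL", "INT"): 0.118,
    ("SI", "PE"): 0.447, ("SI", "EE"): 0.200, ("SI", "INT"): 0.269,
    ("PE", "INT"): 0.595, ("EE", "INT"): 0.011,
}
MEDIATORS = ("PE", "EE")


def decompose(source: str, target: str = "INT") -> dict[str, float]:
    """Split the effect of `source` on `target` into direct and indirect parts."""
    direct = PATHS.get((source, target), 0.0)
    # Indirect effect via one mediator = product of the two path coefficients.
    indirect = {f"via {m}": PATHS[(source, m)] * PATHS[(m, target)] for m in MEDIATORS}
    total = direct + sum(indirect.values())
    return {"direct": direct, **indirect, "total": total}


for src in ("AIL", "SI"):
    print(src, {k: round(v, 3) for k, v in decompose(src).items()})
```

On these point estimates, the total effect of AI literacy on intention is about 0.235: 0.118 direct, about 0.111 via performance expectancy, and about 0.006 via effort expectancy. The total effect of social influence is about 0.537: 0.269 direct, about 0.266 via performance expectancy, and about 0.002 via effort expectancy. Nearly all of the indirect influence therefore runs through performance expectancy.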

5. Conclusions and Limitations

This study systematically identified the factors influencing the usage intentions of text-based GenAI tools among undergraduates by extending the core variables of the UTAUT with AI literacy. A survey was designed to measure text-based GenAI users’ intentions, incorporating variables related to AI literacy. The survey collected 239 responses from business students at a university in South Korea, and the data were analyzed using PLS-SEM. The findings underscore the critical roles of AI literacy, performance expectancy, and social influence in shaping students’ intentions to use text-based GenAI tools for learning. While effort expectancy did not show a significant direct effect, the overall model highlights the multifaceted nature of technology adoption in educational settings.

This study has several implications for academic researchers. First, this study extends the UTAUT by incorporating AI literacy as a crucial factor. By integrating AI literacy with the core variables of UTAUT, this study provides a more comprehensive understanding of technology acceptance in the context of text-based GenAI tools, specifically in educational settings. Second, the study adds to the growing body of literature on AI literacy, which concerns using AI tools effectively, critically evaluating their outputs, and adhering to ethical standards. This contributes to a deeper theoretical understanding of AI literacy and its impact on technology acceptance. Last, by conducting a survey among business students and analyzing the data using PLS-SEM, the study provides empirical evidence on the factors influencing the intention to use text-based GenAI tools in educational contexts. These findings offer valuable insights for future academic research on AI adoption and integration in education.

This study also has implications for practitioners. First, the findings offer practical insights for educators on how to promote the effective use of text-based GenAI tools. Knowing that performance expectancy significantly affects the intention to use these tools can help educators emphasize the benefits of text-based GenAI in enhancing academic performance. Second, the study highlights the importance of AI literacy in influencing students’ usage intentions. Educational institutions play a crucial role in guiding the ethical use of AI tools. Policies and guidelines that promote academic integrity and provide clear instructions on acceptable AI use are essential in mitigating potential misuse [22]. Institutions must also invest in training programs, such as targeted workshops, to enhance students’ AI literacy, ensuring they can use these tools effectively and responsibly [18]. Last, practical applications of this research can lead to improved learning experiences for students. By understanding the factors that influence text-based GenAI adoption, educational institutions can better integrate these tools into their curricula, offering personalized and efficient learning support.

This study also has implications for government agencies. First, this study provides policymakers with evidence-based insights into the determinants of GenAI tool usage in education. This can inform the creation of policies that support the development of AI literacy among students, ensuring they can effectively and responsibly use AI technologies in educational institutions. Second, given the ethical and social issues associated with AI tool usage, the study underscores the need for comprehensive ethical guidelines. Policymakers can develop and enforce regulations that ensure the responsible use of AI in education, protecting both students and educational standards. Third, the importance of AI literacy identified in this study can drive policy initiatives aimed at incorporating AI literacy into the education system. Such initiatives could include funding for AI literacy programs, teacher training, and curriculum development to prepare students for a future where AI is ubiquitous.

While this study provides valuable insights into the factors influencing the intention to use text-based GenAI tools in educational settings, several limitations should be acknowledged.

One limitation is the generalizability of the findings, as the sample in this study is likely relatively homogeneous. Mean scores for most variables were slightly above the neutral midpoint with relatively small standard deviations, indicating that many students provided similar answers. This homogeneity may stem from the composition of the sample, which consisted mostly of first-year business students. Despite the homogeneous scores, the statistical analyses still showed significant relationships between the variables and usage intention, suggesting that even within a narrow range of self-reported values, changes in these variables may affect the intention to use GenAI tools. The concentration of first-year students may not fully represent the perspectives and experiences of senior students, who may have different levels of exposure to and familiarity with text-based GenAI tools. Additionally, the study was conducted on business students at a single university in South Korea, limiting the generalizability of the results to other populations, such as students from different educational backgrounds, disciplines, universities, or cultural contexts. Future studies should include larger and more diverse samples to increase the generalizability of the results.

Another limitation is the scope of this study, which was limited to the analysis of five key variables and their interactions. This focused approach has allowed for detailed and rigorous investigations of these key factors, but future studies may include additional variables and expand the scope to explore more complex interactions. For example, other factors such as technical infrastructure, institutional support, and specific features of the text-based GenAI tools themselves may also play important roles. Future research may provide a more comprehensive and holistic understanding of GenAI adoption and usage intentions by considering a wider range of factors and investigating these complex interactions.

Additionally, this study measured perceived AI literacy and intention to use GenAI tools using self-report questionnaire items rather than assessing actual AI competency and actual GenAI tool usage. This decision was grounded in UTAUT, according to which behavioral intention is a strong predictor of actual usage behavior. Moreover, GenAI tools such as ChatGPT are relatively new in the educational field, so many students may not yet have had the opportunity to use them extensively. By measuring intention, this study aimed to capture the potential for adoption even among students who do not frequently use GenAI tools. Furthermore, self-reported AI literacy is valuable because it reflects students’ confidence and willingness to engage with AI technology, which are important factors in technology adoption and utilization. However, objective measurements of actual AI literacy and of the degree of GenAI tool usage may provide a more direct and accurate reflection of GenAI tool adoption and usage. Future studies should consider including these aspects for a more comprehensive understanding of GenAI adoption and usage behavior.

Moreover, while ethical considerations are important, they were not the primary focus of this study. The ethical implications of using GenAI tools, such as issues related to academic integrity, data privacy, and the potential for bias in AI-generated content, were acknowledged but not deeply explored. These aspects are crucial for ensuring the responsible use of AI technologies in educational settings. Future research should incorporate ethical dimensions more explicitly to provide a more balanced understanding of GenAI tool usage. This could include examining how students perceive and navigate ethical challenges when using AI tools like ChatGPT, investigating the effectiveness of existing guidelines and policies in promoting ethical AI use, and exploring the development of new frameworks to better address ethical concerns. Additionally, studies could focus on the long-term impacts of AI literacy programs that include ethical training, assessing how well these programs prepare students to handle ethical dilemmas related to AI usage. By integrating these ethical dimensions, future research can offer a more comprehensive view of the benefits and challenges associated with the adoption of GenAI tools in education, ultimately contributing to the development of more effective and ethically sound educational practices.

Before the survey began, participants were informed of the study’s purpose, and the proper and ethical use of AI tools such as ChatGPT for genuine learning was emphasized. Participants then confirmed that their focus was on the correct use of GenAI tools. Despite these efforts, participants’ interpretations may still have varied. For instance, some students might regard using ChatGPT to generate answers for assignments as legitimate if they believe they learn by reviewing the generated content, even though this constitutes academic dishonesty. The definition of “learning” could also vary among participants; some may equate it with simply passing a course and thus use GenAI tools to complete assignments without truly acquiring knowledge or skills. Likewise, achieving or maintaining academic success could be interpreted differently, with some students counting only success achieved through ethical means and others including success obtained through dishonest use of AI tools. Furthermore, students’ ethical standards differ, leading to varied perceptions of what constitutes proper use of GenAI tools. Lastly, the ease of use of these tools might lead some students to rely heavily on them regardless of the ethical implications. Future research should address these potential variations in interpretation.

Finally, the study primarily focused on text-based GenAI tools like ChatGPT. However, there are various other AI tools and technologies used in education (e.g., adaptive learning systems, virtual tutors, automated grading systems). Given the diversity and specialized functions of these AI tools, the findings of this study may not be directly applicable to these other types of AI tools. Each category of AI tool comes with its own set of benefits, challenges, and ethical considerations that need to be addressed independently. By broadening the research to encompass a wider range of AI tools and technologies, future studies can offer deeper and more nuanced insights into the multifaceted role of AI in education, ultimately contributing to the development of more effective, equitable, and ethically sound educational practices.

Despite these limitations, the study offers important contributions to understanding the determinants of GenAI tool usage intentions in educational settings. Acknowledging these limitations provides a foundation for future research to build upon, ensuring a more nuanced and comprehensive exploration of the factors influencing AI adoption in education.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Institutional Review Board Statement

This research study was determined to be exempt from review by the Institutional Review Board (IRB) of Gachon University (Approval number: 1044396-202404-HR-059-01; Approval date: 19 April 2024). The study involves a non-coercive survey of adult participants who autonomously decide on their involvement. The rights of participants are minimally impacted, and the survey data collected are devoid of personally identifiable information, with all responses anonymized and de-identified.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Alam, A.; Hasan, M.; Raza, M.M. Impact of artificial intelligence (AI) on education: Changing paradigms and approaches. Towards Excell. 2022, 14, 281–289.

- Lo, C.K. What is the impact of ChatGPT on education? A rapid review of the literature. Educ. Sci. 2023, 13, 410.

- Adıgüzel, T.; Kaya, M.H.; Cansu, F.K. Revolutionizing Education with AI: Exploring the Transformative Potential of ChatGPT; Contemporary Educational Technology: London, UK, 2023.

- Sah, G.K.; Gupta, D.K.; Yadav, A.P. Analysis of ChatGPT and the future of artificial intelligence: Its effect on teaching and learning. J. AI Robot. Workplace Autom. 2024, 3, 64–80.

- Liu, J.; Wang, C.; Liu, Z.; Gao, M.; Xu, Y.; Chen, J.; Cheng, Y. A bibliometric analysis of generative AI in education: Current status and development. Asia Pac. J. Educ. 2024, 44, 156–175.

- Ali, K.; Barhom, N.; Tamimi, F.; Duggal, M. ChatGPT—A double-edged sword for healthcare education? Implications for assessments of dental students. Eur. J. Dent. Educ. 2024, 28, 206–211.

- Zheng, Y.; Wang, Y.; Liu, K.S.X.; Jiang, M.Y.C. Examining the moderating effect of motivation on technology acceptance of generative AI for English as a foreign language learning. Educ. Inf. Technol. 2024, 1–29.

- Dalgıç, A.; Yaşar, E.; Demir, M. ChatGPT and learning outcomes in tourism education: The role of digital literacy and individualized learning. J. Hosp. Leis. Sport Tour. Educ. 2024, 34, 100481.

- Pataranutaporn, P.; Danry, V.; Leong, J.; Punpongsanon, P.; Novy, D.; Maes, P.; Sra, M. AI-generated characters for supporting personalized learning and well-being. Nat. Mach. Intell. 2021, 3, 1013–1022.

- Mohammadkarimi, E. Teachers’ reflections on academic dishonesty in EFL students’ writings in the era of artificial intelligence. J. Appl. Learn. Teach. 2023, 6.

- Trust, T.; Whalen, J.; Mouza, C. Editorial: ChatGPT: Challenges, opportunities, and implications for teacher education. Contemp. Issues Technol. Teach. Educ. 2023, 23, 1–23.

- Fang, X.; Che, S.; Mao, M.; Zhang, H.; Zhao, M.; Zhao, X. Bias of AI-generated content: An examination of news produced by large language models. Sci. Rep. 2024, 14, 5224.

- Scott-Branch, J.; Laws, R.; Terzi, P. The Intersection of AI, Information and Digital Literacy: Harnessing ChatGPT and Other Generative Tools to Enhance Teaching and Learning. 88th IFLA World Library and Information Congress (WLIC). 2023. Available online: https://www.ifla.org/resources/?_sfm_unitid=105138&_sfm_resource_type=All (accessed on 30 May 2024).

- Ciampa, K.; Wolfe, Z.M.; Bronstein, B. ChatGPT in education: Transforming digital literacy practices. J. Adolesc. Adult Lit. 2023, 67, 186–195.

- Huang, C.W.; Coleman, M.; Gachago, D.; Van Belle, J.P. Using ChatGPT to Encourage Critical AI Literacy Skills and for Assessment in Higher Education. In Annual Conference of the Southern African Computer Lecturers’ Association; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 105–118.

- Weimann-Sandig, N. Digital literacy and artificial intelligence–does chat GPT introduce the end of critical thinking in higher education? In Proceedings of the 15th International Conference on Education and New Learning Technologies, Palma, Spain, 3–5 July 2023; pp. 16–21.

- Bender, S.M. Awareness of artificial intelligence as an essential digital literacy: ChatGPT and Gen-AI in the classroom. Change Engl. 2024, 31, 161–174.

- Schiavo, G.; Businaro, S.; Zancanaro, M. Comprehension, apprehension, and acceptance: Understanding the influence of literacy and anxiety on acceptance of artificial intelligence. Technol. Soc. 2024, 77, 102537.

- Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

- Southworth, J.; Migliaccio, K.; Glover, J.; Reed, D.; McCarty, C.; Brendemuhl, J.; Thomas, A. Developing a model for AI Across the curriculum: Transforming the higher education landscape via innovation in AI literacy. Comput. Educ. Artif. Intell. 2023, 4, 100127.

- Wang, B.; Rau, P.L.P.; Yuan, T. Measuring user competence in using artificial intelligence: Validity and reliability of artificial intelligence literacy scale. Behav. Inf. Technol. 2023, 42, 1324–1337.

- UNESCO. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development. Available online: https://www.gcedclearinghouse.org/sites/default/files/resources/190175eng.pdf (accessed on 30 May 2024).

- Akgun, S.; Greenhow, C. Artificial intelligence in education: Addressing ethical challenges in K-12 settings. AI Ethics 2022, 2, 431–440.

- Chan, C.K.Y.; Hu, W. Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 43.

- Ballardini, R.M.; He, K.; Roos, T. AI-generated content: Authorship and inventorship in the age of artificial intelligence. In Online Distribution of Content in the EU 2019; Edward Elgar Publishing: Cheltenham, UK, 2018; pp. 117–135.

- Ng, D.T.K.; Leung, J.K.L.; Chu, S.K.W.; Qiao, M.S. Conceptualizing AI literacy: An exploratory review. Comput. Educ. Artif. Intell. 2021, 2, 100041.

- Yu, C.W.; Chao, C.M.; Chang, C.F.; Chen, R.J.; Chen, P.C.; Liu, Y.X. Exploring behavioral intention to use a mobile health education website: An extension of the UTAUT 2 model. Sage Open 2021, 11, 21582440211055721.

- Hair, J.F.; Sarstedt, M.; Ringle, C.M.; Gudergan, S.P. Advanced Issues in Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage: Thousand Oaks, CA, USA, 2018.

- Chiu, C.M.; Hsu, M.H.; Lai, H.; Chang, C.M. Re-examining the influence of trust on online repeat purchase intention: The moderating role of habit and its antecedents. Decis. Support Syst. 2012, 53, 835–845.

- Gefen, D.; Straub, D.W. A practical guide to factorial validity using PLS-graph: Tutorial and annotated example. Commun. Assoc. Inf. Syst. 2005, 16, 91–109.

- Rahmi, B.; Birgoren, B.; Aktepe, A. A meta analysis of factors affecting perceived usefulness and perceived ease of use in the adoption of e-learning systems. Turk. Online J. Distance Educ. 2018, 19, 4–42.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).