Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective

Abstract

1. Introduction

- RQ1. What are the primary ethical challenges arising from the use of generative AI technologies, specifically concerning privacy, data protection, copyright infringement, misinformation, biases, and societal inequalities?

- RQ2. How can the development and deployment of generative AI be guided by ethical principles such as human rights, fairness, and transparency to ensure equitable outcomes and mitigate potential harm to individuals and society?

2. Overview of Generative AI Technologies and Features

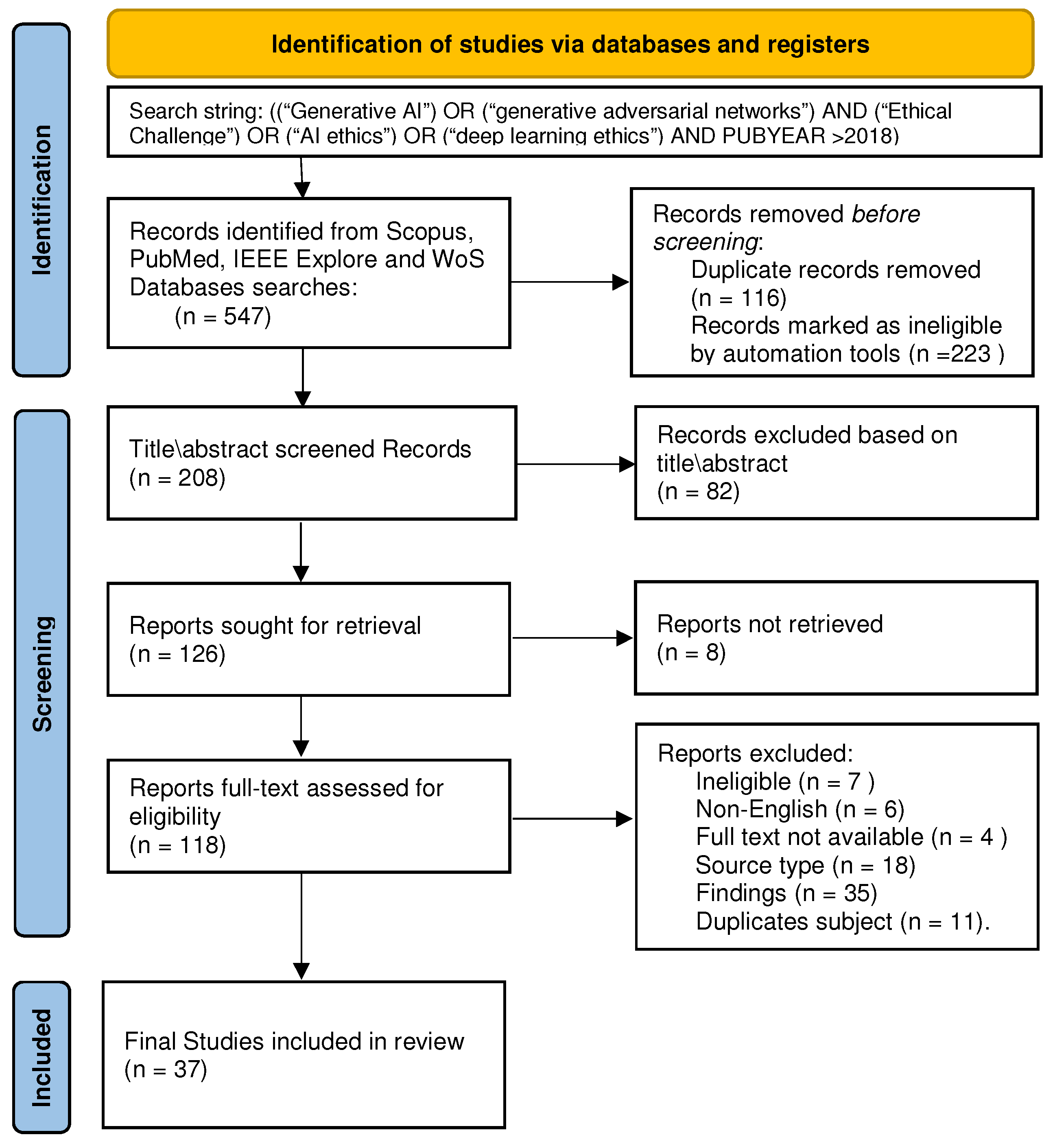

3. The Review Methodology

3.1. Search Strategy

3.2. Eligibility Criteria

3.3. Study Selection

3.4. Data Extraction

3.5. Quality Assessment

3.6. Data Synthesis

3.7. Risk of Bias Assessment

4. Results

4.1. Study Selection Results

4.2. Ethical Concerns of Generative AI

4.2.1. Authorship and Academic Integrity

4.2.2. IPR, Copyright Issues, Authenticity, and Attribution

4.2.3. Privacy, Trust, and Bias

4.2.4. Misinformation and Deepfakes

4.2.5. Educational Ethics

4.2.6. Transparency and Accountability

4.2.7. Social and Economic Impact

4.3. Proposed Solutions to Ethical Concerns of Generative AI

4.3.1. Authorship and Academic Integrity

4.3.2. IPR, Copyright Issues, Authenticity, and Attribution

4.3.3. Privacy, Trust, and Bias

4.3.4. Misinformation and Deepfakes

4.3.5. Educational Ethics

4.3.6. Transparency and Accountability

4.3.7. Social and Economic Impact

5. Discussion

5.1. Theoretical Implications of Research in the Theory of AI Ethics

5.2. Practical Implications

6. Future Research Directions

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Reference | Subject | Type | Research Focus | Ethical Challenges |
|---|---|---|---|---|
| [36] | Media | Conf. | The study explores the proactive use of artificial fingerprints embedded in generative models to address deepfake detection and attribution. The focus is on rooting deepfake detection in training data, making it agnostic to specific generative models. | The proliferation of deepfake technology poses significant ethical challenges, including misinformation, privacy violations, and potential misuse for malicious purposes such as propaganda and identity theft. Ensuring responsible disclosure of generative models and attributing responsibility to model inventors are crucial ethical considerations. |
| [25] | Generic | Conf. | Developing a framework to protect the intellectual property rights (IPR) of GANs against replication and ambiguity attacks. | The ease of replicating and redistributing deep learning models raises concerns over ownership and IPR violations. |
| [23] | Media | Book Chapter | Examining the intersection of generative AI and intellectual property rights (IPR), particularly regarding the protection of AI-generated works under current copyright laws. | The inadequacy of current IPR concepts in accommodating AI-generated works. The potential economic implications of extending copyright protection to AI-generated works. |
| [24] | Med | Research Article | The study explores the ethical implications of generative AI, particularly in the context of authorship in academia and healthcare. It discusses the potential impact of AI-generated content on academic integrity and the writing process, focusing on medical ethics. | The ethical challenges include concerns about authorship verification, potential erosion of academic integrity, and the risk of predatory journals exploiting AI-generated content. There are also considerations regarding AI-generated work’s quality, originality, and attribution. |
| [37] | Generic | Conf. | The study analyzes the regulation of large generative AI models (LGAIMs) in the context of the Digital Services Act (DSA) and non-discrimination law. | LGAIMs present challenges related to content moderation, data protection risks, bias mitigation, and ensuring trustworthiness in their deployment for societal benefit. |
| [7] | Edu | Research Article | The study explores the challenges higher education institutions face in the era of widespread access to generative AI. It discusses the impact of AI on education, focusing on various aspects. | Ethical challenges highlighted include the potential biases in AI-generated content, environmental impact due to increased energy consumption, and the concentration of AI capabilities in well-funded organizations. |
| [9] | Med | Research Article | The study explores the role of generative AI, particularly ChatGPT, in revolutionizing various aspects of medicine and healthcare. It highlights applications such as medical dialogue study, disease progression simulation, clinical summarization, and hypothesis generation. The research also focuses on the development and implementation of generative AI tools in Europe and Asia, including advancements by companies like Philips and startups like SayHeart, along with research initiatives at institutions like RIKEN. | The use of generative AI in healthcare raises significant ethical concerns, including trust, safety, reliability, privacy, copyright, and ownership. Issues such as the unpredictability of AI responses, privacy breaches, data ownership, and copyright infringement have been observed. Concerns about the collection and storage of personal data, the potential for biases in AI models, and the need for regulatory oversight are highlighted. |
| [42] | Generic | Conf. | Combating misinformation in the era of generative AI models, with a focus on detecting and addressing fake content generated by AI models. | Addressing the ethical implications of AI-generated misinformation, including its potential to deceive and manipulate individuals and communities. |
| [22] | Edu | Research Article | Examines the emergence of OpenAI’s ChatGPT and its potential impacts on academic integrity, focusing on generative AI systems’ capabilities to produce human-like texts. | Discusses concerns regarding the use of ChatGPT in academia, including the potential for plagiarism, lack of appropriate acknowledgment, and the need for tools to detect dishonest use. |
| [29] | Med | Research Article | The study examines the imperative need for regulatory oversight of LLMs or generative AI in healthcare. It explores the challenges and risks associated with the integration of LLMs in medical practice and emphasizes the importance of proactive regulation. | Patient Data Privacy: Ensuring anonymization and protection of patient data used for training LLMs. Fairness and Bias: Preventing biases in AI models introduced during training. Informed Consent: Informing and obtaining consent from patients regarding AI use in healthcare. |
| [28] | Med | Research Article | The commentary discusses the applications, challenges, and ethical considerations of generative AI in medical imaging, focusing on data augmentation, image synthesis, image-to-image translation, and radiology report generation. | Concerns include the potential misuse of AI-generated images, privacy breaches due to large datasets, biases in generated outputs, and the need for clear guidelines and regulations to ensure responsible use and protect patient privacy. |
| [21] | Edu | Book Chapter | The study explores the impact of generative artificial intelligence (AI) on computing education. It covers perspectives from students and instructors and the potential changes in curriculum and teaching approaches due to the widespread use of generative AI tools. | Ethical challenges include concerns about overreliance on generative AI tools, potential misuse in assessments, and the erosion of skills such as problem-solving and critical thinking. There are also concerns about plagiarism and unethical use, particularly in academic assessments. |
| [35] | Generic | Conf. | The study analyzes the social impact of generative AI, focusing on ChatGPT and exploring perceptions, concerns, and opportunities. It aims to understand biases, inequalities, and ethical considerations surrounding its adoption. | Ethical challenges include privacy infringement, reinforcement of biases and stereotypes, the potential for misuse in sensitive domains, accountability for harmful uses, and the need for transparent and responsible development and deployment. |
| [34] | Edu | Research Article | The study explores university students’ perceptions of generative AI (GenAI) technologies in higher education, aiming to understand their attitudes, concerns, and expectations regarding the integration of GenAI into academic settings. | Ethical challenges related to GenAI in education include concerns about privacy, security, bias, and the responsible use of AI. |
| [27] | Generic | Research Article | The research focuses on governance conditions for generative AI systems, emphasizing observability, inspectability, and modifiability as key aspects. | Addressing algorithmic opacity, regulatory co-production, and the implications of generative AI systems for societal power dynamics and governance structures. |
| [20,48] | Media | Research Article | The study focuses on the copyright implications of generative AI systems, exploring the complexity and legal issues surrounding these technologies. | The ethical challenges in generative AI systems include issues of authorship, liability, fair use, and licensing. The study examines these challenges and their connections within the generative AI supply chain. |
| [47] | Generic | Research Article | The focus is on the ethical considerations surrounding the development and application of generative AI, with a particular emphasis on OpenAI’s GPT models. | The study discusses challenges such as bias in AI training data, the ethical implications of AI-generated content, and the potential impact of AI on employment and societal norms. |
| [19] | Edu | Research Article | ChatGPT’s impact on higher education. | Academic integrity risks, bias, and overreliance on AI. |
| [18] | Edu | Research Article | Exploring the potential of generative AI, particularly ChatGPT, in transforming education and training. Investigating both the strengths and weaknesses of generative AI in learning contexts. | Risk of misleading learners with incorrect information. Potential for students to misuse generative AI for cheating in academic tasks. Concerns about undermining critical thinking and problem-solving skills if students rely too heavily on generative AI solutions. |
| [33] | Generic | Research Article | The study explores the ethical challenges arising from the intersection of open science (OS) and generative artificial intelligence (AI). | Generative AI, which produces data such as text or images, poses risks of harm, discrimination, and violation of privacy and security when using open science outputs. |
| [46] | Generic | Conf. | The study explores methods for copyright protection and accountability of generative AI models, focusing on adversarial attacks, watermarking, and attribution techniques. It aims to evaluate their effectiveness in protecting intellectual property rights (IPR) related to both models and training sets. | One ethical challenge is the unauthorized use of generative AI models to produce realistic images resembling copyrighted works, potentially leading to copyright infringement. Another is that the original copyrighted images used to train generative AI models may not be adequately protected by current techniques. |
| [17] | Edu | Conf. | Investigating the transformative impact of generative AI, particularly ChatGPT, on higher education (HE), including its implications for pedagogy, assessment, and policy within the HE sector. | Concerns regarding academic integrity and the misuse of generative AI tools for plagiarism, potential bias and discrimination, job displacement due to automation, and the need for clear policies to address AI-related ethical issues. |
| [41] | Generic | Research Article | The study explores various aspects of digital memory, including the impact of AI and algorithms on memory construction, representation, and retrieval, particularly regarding historical events. | Ethical challenges include biases, discrimination, and manipulation of information by AI and algorithms, especially in search engine results and content personalization systems. |
| [32] | Generic | Research Article | An activity system-based perspective on generative AI, exploring its challenges and research directions. | Concerns about biases and misinformation generated by AI models. Potential job displacement due to automation. Privacy and security risks associated with AI-generated content. |
| [40] | Edu | Conf. | The study addresses the challenges posed by deepfakes and misinformation in the era of advanced AI models, focusing on detection, mitigation, and ethical implications. | Ethical concerns include the potential for biases in AI development, lack of transparency, and the impact on human agency and autonomy. |
| [38] | Media | Misc | The study focuses on defending human rights in the era of deepfakes and generative AI, examining how these technologies impact truth, trust, and accountability in human rights study and advocacy. | Ethical challenges include the potential for deepfakes and AI-generated content to undermine truth and trust, the responsibility of technology makers in ensuring authenticity, and the uneven distribution of detection tools and media forensics capacity. |
| [39] | Generic | Research Article | The study explores the implications of generative artificial intelligence (AI) tools, such as ChatGPT, for health communications. It discusses potential changes in health information production and visibility, the mixing of marketing and misinformation with evidence, and the impact on trust. | Ethical challenges include the need for transparency and explainability to prevent unintended consequences, such as the dissemination of misinformation. The study highlights concerns regarding the balance of evidence, marketing, and misinformation in health information seen by users, as well as the potential for personalization and hidden advertising to introduce new risks of misinformation. |
| [30] | Med | Research Article | The commentary examines the ethical implications of using ChatGPT, a generative AI model, in medical contexts. It particularly focuses on the unique challenges arising from ChatGPT’s general design and wide applicability in healthcare. | Bias and privacy concerns due to ChatGPT’s intricate architecture and general design. Lack of transparency and explainability in AI-generated outputs, affecting patient autonomy and trust. Issues of responsibility, liability, and accountability in case of adverse outcomes raise questions about fault attribution at individual and systemic levels. |
| [44] | Edu | Thesis | The study explores the copyright implications of using generative AI, particularly focusing on legal challenges, technological advancements, and their impact on creators. | Ethical challenges include determining copyright ownership for AI-generated works, protecting creators’ rights, and promoting innovation while avoiding copyright infringement. |
| [14] | Edu | Research Article | The study explores the attitudes and intentions of Gen Z students and Gen X and Y teachers toward adopting generative AI technologies in higher education. It examines their perceptions, intentions, concerns, and willingness regarding the use of AI tools for learning and teaching. | Concerns include unethical uses such as cheating and plagiarism, quality of AI-generated outputs, perpetuation of biases, job market impact, academic integrity, privacy, transparency, and AI’s potential misalignment with societal values. |
| [45] | Edu | Research Article | The research focuses on the use of assistive technologies, including generative AI, by test takers in language assessment. It examines the debate surrounding the theory and practice of allowing test takers to use these technologies during language assessment. | The use of generative AI in language assessment raises ethical challenges related to construct definition, scoring and rubric design, validity, fairness, equity, bias, and copyright. |
| [13] | Generic | Research Article | The study examines the opportunities, challenges, and implications of generative conversational AI for research, practice, and policy across various disciplines, including but not limited to education, healthcare, finance, and technology. | Ethical challenges include concerns about authenticity, privacy, bias, and the potential for misuse of AI-generated content. There are also issues related to academic integrity, such as plagiarism detection and ensuring fairness in assessment. |
| [43] | Edu | Research Article | The study explores the responsible use of generative AI technologies, particularly in scholarly publishing, discussing how authors, peer reviewers, and editors might use AI tools, such as LLMs like ChatGPT, to augment or replace traditional scholarly work. | The ethical challenges include concerns about accountability and transparency regarding authorship, potential bias within generated content, and the reliability of AI-generated information. There are also discussions about plagiarism and the need for clear disclosure of AI usage in scholarly manuscripts. |
| [15] | Generic | Research Article | The study discusses the necessity of implementing effective detection, verification, and explainability mechanisms to counteract potential harms arising from the proliferation of AI-generated inauthentic content and science, especially with the rise of transformer-based approaches. | The primary ethical challenges revolve around the authenticity and explainability of AI-generated content, particularly in scientific contexts. Concerns include the potential for disinformation, misinformation, and unreproducible science, which could erode trust in scientific inquiry. |
| [26] | Generic | Research Article | Examining the ethical considerations and proposing policy interventions regarding the impact of generative AI tools on the economy and society. | Loss of jobs due to AI automation. Exacerbation of wealth and income inequality. Potential dissemination of false information by AI chatbots and manipulation of information by AI for specific agendas. |
| [31] | Generic | Research Article | The study explores the use of GANs for generating synthetic electrocardiogram (ECG) signals, focusing on the development of two GAN models named WaveGAN* and Pulse2Pulse. | The main ethical challenges include privacy concerns regarding the generation of synthetic ECGs that mimic real ones, the potential misuse of synthetic data for malicious purposes, and ensuring proper data protection measures. |
| [16] | Edu | Research Article | The study examines the implications of using ChatGPT, a large language model, in scholarly publishing. It delves into the ethical considerations and challenges associated with using AI tools like ChatGPT for writing scholarly manuscripts. | Ethical challenges highlighted include concerns regarding transparency and disclosure of AI involvement in manuscript writing, potential biases introduced by AI algorithms, and the need to uphold academic integrity and authorship standards amid the increasing use of AI in scholarly publishing. |

References

1. Bale, A.S.; Dhumale, R.; Beri, N.; Lourens, M.; Varma, R.A.; Kumar, V.; Sanamdikar, S.; Savadatti, M.B. The Impact of Generative Content on Individuals Privacy and Ethical Concerns. Int. J. Intell. Syst. Appl. Eng. 2024, 12, 697–703.
2. Feuerriegel, S.; Hartmann, J.; Janiesch, C.; Zschech, P. Generative AI. Bus. Inf. Syst. Eng. 2024, 66, 111–126.
3. Kshetri, N. Economics of Artificial Intelligence Governance. Computer 2024, 57, 113–118.
4. Amoozadeh, M.; Daniels, D.; Nam, D.; Kumar, A.; Chen, S.; Hilton, M.; Srinivasa Ragavan, S.; Alipour, M.A. Trust in Generative AI among Students: An exploratory study. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1, Portland, OR, USA, 20–23 March 2024; pp. 67–73.
5. Allen, J.W.; Earp, B.D.; Koplin, J.; Wilkinson, D. Consent-GPT: Is it ethical to delegate procedural consent to conversational AI? J. Med. Ethics 2024, 50, 77–83.
6. Zhou, M.; Abhishek, V.; Derdenger, T.; Kim, J.; Srinivasan, K. Bias in Generative AI. arXiv 2024, arXiv:2403.02726.
7. Michel-Villarreal, R.; Vilalta-Perdomo, E.; Salinas-Navarro, D.E.; Thierry-Aguilera, R.; Gerardou, F.S. Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 2023, 13, 856.
8. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 1–9.
9. Zhang, P.; Kamel Boulos, M.N. Generative AI in medicine and healthcare: Promises, opportunities and challenges. Future Internet 2023, 15, 286.
10. Kingma, D.P.; Welling, M. Auto-encoding variational Bayes. arXiv 2013, arXiv:1312.6114.
11. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.
12. Sarkis-Onofre, R.; Catalá-López, F.; Aromataris, E.; Lockwood, C. How to properly use the PRISMA Statement. Syst. Rev. 2021, 10, 1–3.
13. Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.
14. Chan, C.K.Y.; Lee, K.K. The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and Millennial Generation teachers? arXiv 2023, arXiv:2305.02878.
15. Hamed, A.A.; Zachara-Szymanska, M.; Wu, X. Safeguarding Authenticity for Mitigating the Harms of Generative AI: Issues, Research Agenda, and Policies for Detection, Fact-Checking, and Ethical AI. iScience 2024, 27, 108782.
16. Kaebnick, G.E.; Magnus, D.C.; Kao, A.; Hosseini, M.; Resnik, D.; Dubljević, V.; Rentmeester, C.; Gordijn, B.; Cherry, M.J. Editors’ statement on the responsible use of generative AI technologies in scholarly journal publishing. Med. Health Care Philos. 2023, 26, 499–503.
17. Malik, T.; Hughes, L.; Dwivedi, Y.K.; Dettmer, S. Exploring the transformative impact of generative AI on higher education. In Proceedings of the Conference on e-Business, e-Services and e-Society, Curitiba, Brazil, 9–11 November 2023; pp. 69–77.
18. Johnson, W.L. How to Harness Generative AI to Accelerate Human Learning. Int. J. Artif. Intell. Educ. 2023, 1–5.
19. Walczak, K.; Cellary, W. Challenges for higher education in the era of widespread access to Generative AI. Econ. Bus. Rev. 2023, 9, 71–100.
20. Lee, K.; Cooper, A.F.; Grimmelmann, J. Talkin’ ’Bout AI Generation: Copyright and the Generative-AI Supply Chain. arXiv 2023, arXiv:2309.08133.
21. Prather, J.; Denny, P.; Leinonen, J.; Becker, B.A.; Albluwi, I.; Craig, M.; Keuning, H.; Kiesler, N.; Kohn, T.; Luxton-Reilly, A.; et al. The robots are here: Navigating the generative AI revolution in computing education. In Proceedings of the 2023 Working Group Reports on Innovation and Technology in Computer Science Education, Turku, Finland, 7–12 July 2023; pp. 108–159.
22. Eke, D.O. ChatGPT and the rise of generative AI: Threat to academic integrity? J. Responsible Technol. 2023, 13, 100060.
23. Smits, J.; Borghuis, T. Generative AI and Intellectual Property Rights. In Law and Artificial Intelligence: Regulating AI and Applying AI in Legal Practice; Springer: Berlin/Heidelberg, Germany, 2022; pp. 323–344.
24. Zohny, H.; McMillan, J.; King, M. Ethics of generative AI. J. Med. Ethics 2023, 49, 79–80.
25. Ong, D.S.; Chan, C.S.; Ng, K.W.; Fan, L.; Yang, Q. Protecting intellectual property of generative adversarial networks from ambiguity attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3630–3639.
26. Farina, M.; Yu, X.; Lavazza, A. Ethical considerations and policy interventions concerning the impact of generative AI tools in the economy and in society. AI Ethics 2024, 1–9.
27. Ferrari, F.; van Dijck, J.; van den Bosch, A. Observe, inspect, modify: Three conditions for generative AI governance. New Media Soc. 2023.
28. Koohi-Moghadam, M.; Bae, K.T. Generative AI in medical imaging: Applications, challenges, and ethics. J. Med. Syst. 2023, 47, 94.
29. Meskó, B.; Topol, E.J. The imperative for regulatory oversight of large language models (or generative AI) in healthcare. NPJ Digit. Med. 2023, 6, 120.
30. Victor, G.; Bélisle-Pipon, J.C.; Ravitsky, V. Generative AI, specific moral values: A closer look at ChatGPT’s new ethical implications for medical AI. Am. J. Bioeth. 2023, 23, 65–68.
31. Thambawita, V.; Isaksen, J.L.; Hicks, S.A.; Ghouse, J.; Ahlberg, G.; Linneberg, A.; Grarup, N.; Ellervik, C.; Olesen, M.S.; Hansen, T.; et al. DeepFake electrocardiograms using generative adversarial networks are the beginning of the end for privacy issues in medicine. Sci. Rep. 2021, 11, 21896.
32. Nah, F.; Cai, J.; Zheng, R.; Pang, N. An activity system-based perspective of generative AI: Challenges and research directions. AIS Trans. Hum. Comput. Interact. 2023, 15, 247–267.
33. Acion, L.; Rajngewerc, M.; Randall, G.; Etcheverry, L. Generative AI poses ethical challenges for open science. Nat. Hum. Behav. 2023, 7, 1800–1801.
34. Chan, C.K.Y.; Hu, W. Students’ Voices on Generative AI: Perceptions, Benefits, and Challenges in Higher Education. arXiv 2023, arXiv:2305.00290.
35. Baldassarre, M.T.; Caivano, D.; Fernandez Nieto, B.; Gigante, D.; Ragone, A. The Social Impact of Generative AI: An Analysis on ChatGPT. In Proceedings of the 2023 ACM Conference on Information Technology for Social Good, Lisbon, Portugal, 6–8 September 2023; pp. 363–373.
36. Yu, N.; Skripniuk, V.; Abdelnabi, S.; Fritz, M. Artificial fingerprinting for generative models: Rooting deepfake attribution in training data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 14448–14457.
37. Hacker, P.; Engel, A.; Mauer, M. Regulating ChatGPT and other large generative AI models. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 1112–1123.
38. Gregory, S. Fortify the truth: How to defend human rights in an age of deepfakes and generative AI. J. Hum. Rights Pract. 2023, 15, 702–714.
39. Dunn, A.G.; Shih, I.; Ayre, J.; Spallek, H. What generative AI means for trust in health communications. J. Commun. Healthc. 2023, 16, 385–388.
40. Shoaib, M.R.; Wang, Z.; Ahvanooey, M.T.; Zhao, J. Deepfakes, Misinformation, and Disinformation in the Era of Frontier AI, Generative AI, and Large AI Models. In Proceedings of the 2023 International Conference on Computer and Applications (ICCA), Cairo, Egypt, 28–30 November 2023; pp. 1–7.
41. Makhortykh, M.; Zucker, E.M.; Simon, D.J.; Bultmann, D.; Ulloa, R. Shall androids dream of genocides? How generative AI can change the future of memorialization of mass atrocities. Discov. Artif. Intell. 2023, 3, 28.
42. Xu, D.; Fan, S.; Kankanhalli, M. Combating misinformation in the era of generative AI models. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October 2023; pp. 9291–9298.
43. Lin, Z. Supercharging academic writing with generative AI: Framework, techniques, and caveats. arXiv 2023, arXiv:2310.17143.
44. Sandiumenge, I. Copyright Implications of the Use of Generative AI; SSRN 4531912; Elsevier: Amsterdam, The Netherlands, 2023.
45. Voss, E.; Cushing, S.T.; Ockey, G.J.; Yan, X. The use of assistive technologies including generative AI by test takers in language assessment: A debate of theory and practice. Lang. Assess. Q. 2023, 20, 520–532.
46. Zhong, H.; Chang, J.; Yang, Z.; Wu, T.; Mahawaga Arachchige, P.C.; Pathmabandu, C.; Xue, M. Copyright protection and accountability of generative AI: Attack, watermarking and attribution. In Companion Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April–4 May 2023; pp. 94–98.
47. Hurlburt, G. What If Ethics Got in the Way of Generative AI? IT Prof. 2023, 25, 4–6.
48. Lee, K.; Cooper, A.F.; Grimmelmann, J. Talkin’ ’Bout AI Generation: Copyright and the Generative-AI Supply Chain (The Short Version). In Proceedings of the Symposium on Computer Science and Law, Munich, Germany, 26–27 September 2024; pp. 48–63.

| Concern | Sources |
|---|---|
| Authorship and Academic Integrity | [13,14,15,16,17,18,19,20,21,22,23,24,25] |
| Regulatory and Legal Issues | [9,17,20,26,27,28,29] |
| Privacy, Trust, and Bias | [9,13,14,16,17,19,28,29,30,31,32,33,34,35,36,37] |
| Misinformation and Deepfakes | [15,26,31,32,36,38,39,40,41,42] |
| Educational Ethics | [7,18,21,43] |
| Transparency and Accountability | [14,16,30,35,39,40,43] |
| Authenticity and Attribution | [13,38,44,45,46] |
| Social and Economic Impact | [14,17,23,26,27,37,39,47] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Al-kfairy, M.; Mustafa, D.; Kshetri, N.; Insiew, M.; Alfandi, O. Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective. Informatics 2024, 11, 58. https://doi.org/10.3390/informatics11030058
