Sampling and Reconstruction Jointly Optimized Model Unfolding Network for Single-Pixel Imaging

Abstract

1. Introduction

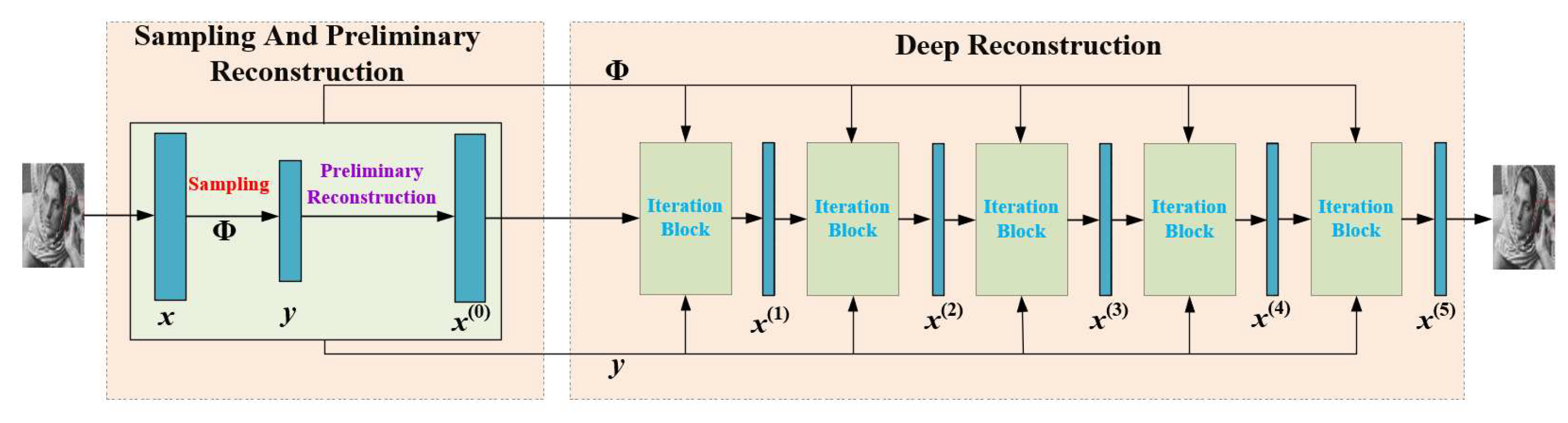

- We propose a sampling and reconstruction jointly optimized model unfolding network (SRMU-Net) for single-pixel imaging (SPI) systems. To optimize sampling and reconstruction jointly, the network is designed so that the sampling matrix enters each iteration block as a learnable parameter.

- We add a preliminary reconstruction loss term, weighted by a regularization parameter, to the loss function of SRMU-Net, which yields higher imaging accuracy and faster convergence.

- Extensive simulation experiments show that SRMU-Net outperforms the compared algorithms (TVAL3, DR2-Net, and ISTA-Net+) in both PSNR and SSIM. Because the sampling layer is trained to be binary, the network can be used directly in an SPI system, which we verify experimentally.
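The first two contributions can be illustrated with a minimal NumPy sketch. It is not the authors' implementation; it only shows one sampling matrix `phi` being reused inside every unfolded iteration block, and a loss that adds a weighted preliminary-reconstruction term. Several parts are assumptions made for illustration: the function names, the transpose-based preliminary reconstruction, the plain soft-threshold step (standing in for the learned modules of each block), the squared-error form of both loss terms, and the identification of the regularization parameter with the β of Section 4.2.

```python
import numpy as np


def soft_threshold(v, theta):
    """Element-wise soft-thresholding, the proximal step used by ISTA."""
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)


def unfolded_forward(x, phi, n_blocks=5, theta=0.01):
    """Sample x with phi, then run a fixed number of ISTA-style blocks.

    Hypothetical helper: in a trained network, phi would be a learnable
    parameter updated by backpropagation. Here it is a fixed array.
    """
    y = phi @ x                      # sampling: M measurements of an N-pixel image
    x_init = phi.T @ y               # preliminary reconstruction (assumed: transpose of phi)
    rho = 1.0 / np.linalg.norm(phi, 2) ** 2   # step size from the spectral norm of phi
    x_k = x_init
    for _ in range(n_blocks):
        # Every block uses the same phi, so a gradient with respect to phi
        # would collect contributions from the sampling step and all blocks.
        r_k = x_k - rho * (phi.T @ (phi @ x_k - y))
        x_k = soft_threshold(r_k, theta)
    return x_init, x_k


def joint_loss(x, x_init, x_final, beta):
    """Final reconstruction error plus beta times the preliminary error (assumed form)."""
    final_term = np.mean((x_final - x) ** 2)
    prelim_term = np.mean((x_init - x) ** 2)
    return final_term + beta * prelim_term
```

With `beta = 0` the loss reduces to the final reconstruction error alone. A positive `beta` also penalizes a poor preliminary reconstruction, which is the role the second contribution describes for the added term.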

2. Related Work and Background

2.1. ISTA

2.2. ISTA-Net and ISTA-Net+

3. Proposed Network

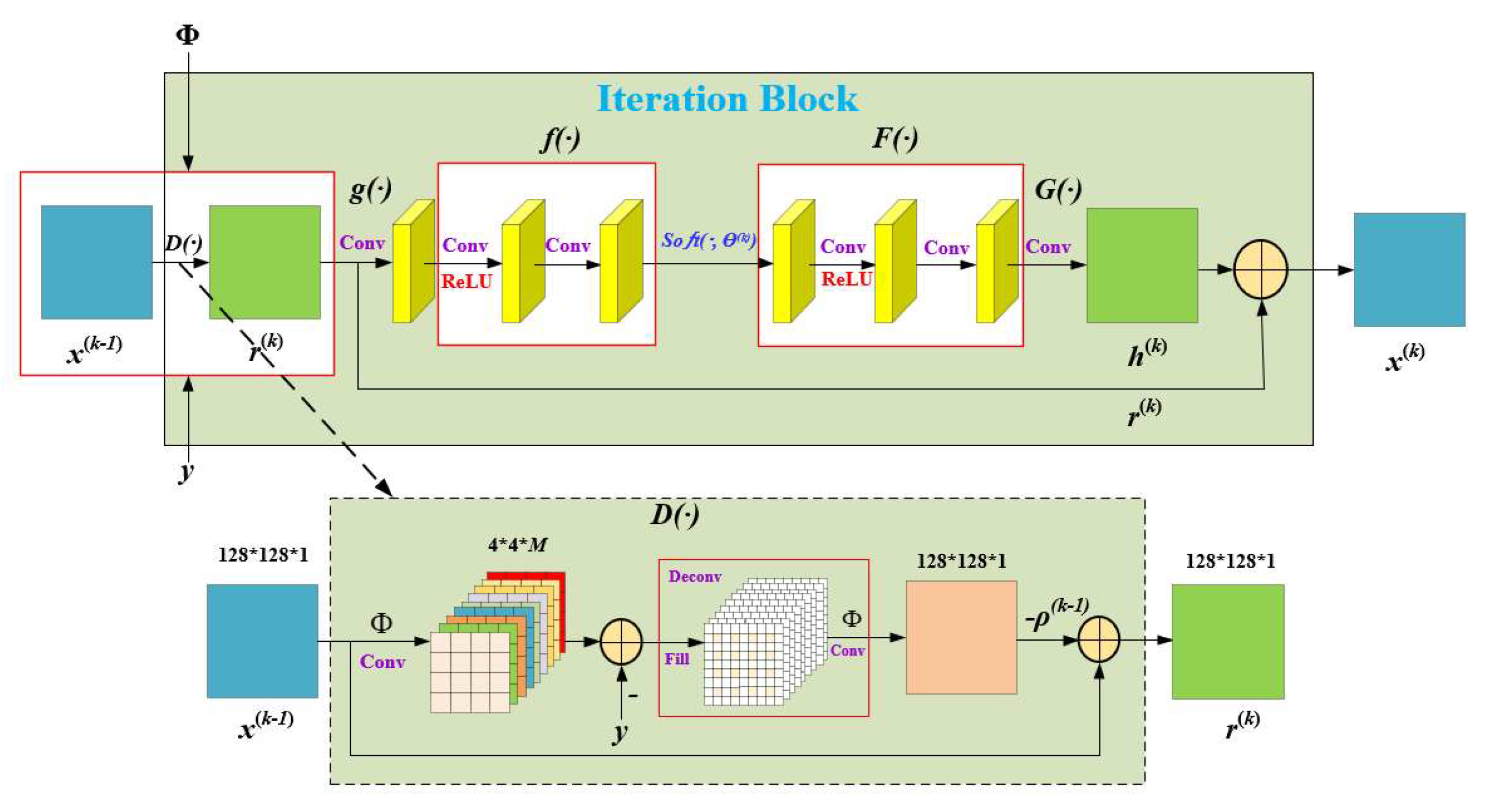

3.1. Network Architecture

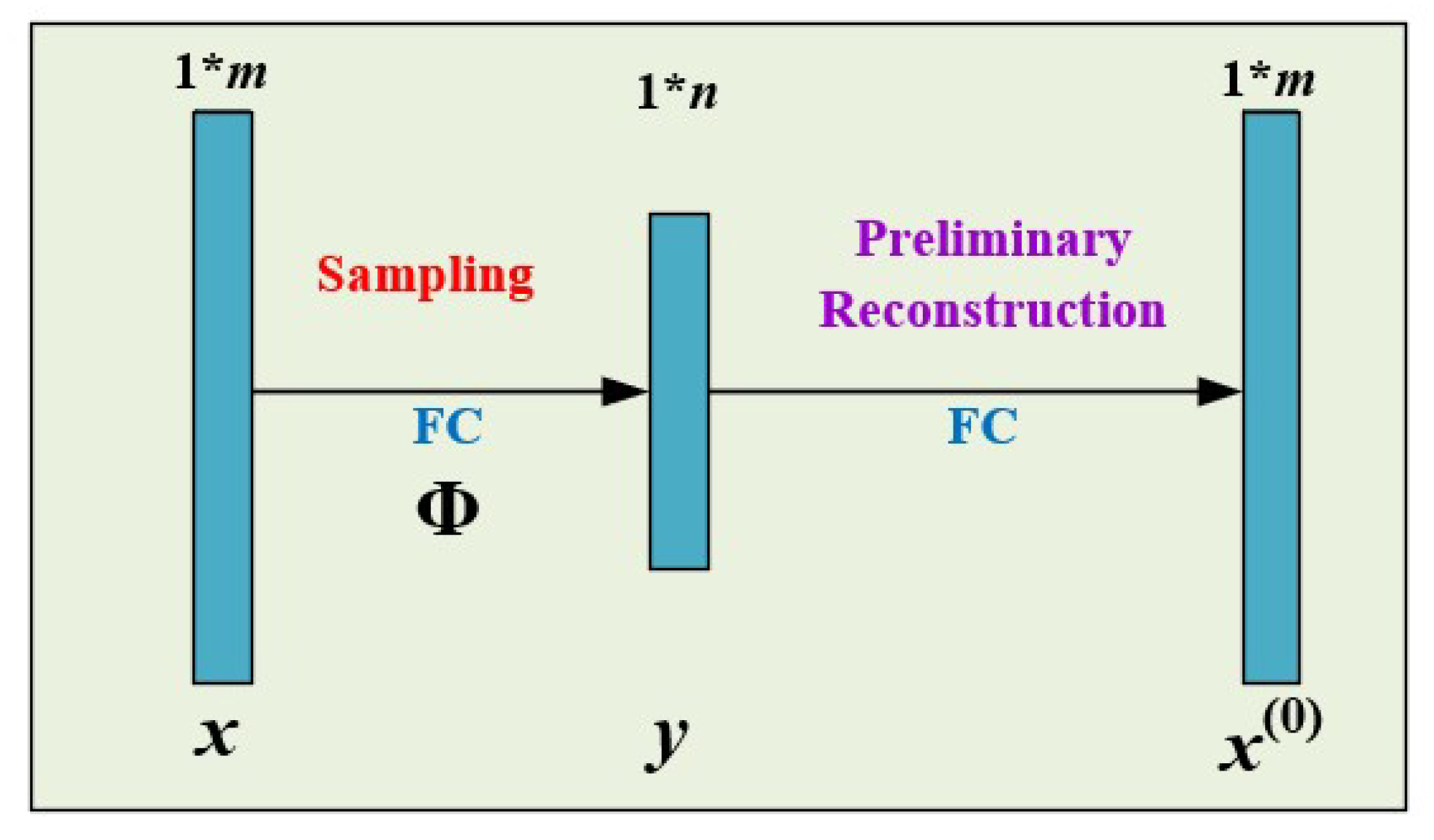

3.2. Sampling and Preliminary Reconstruction Subnetwork

3.2.1. Fully Connected Layer Sampling and Preliminary Reconstruction

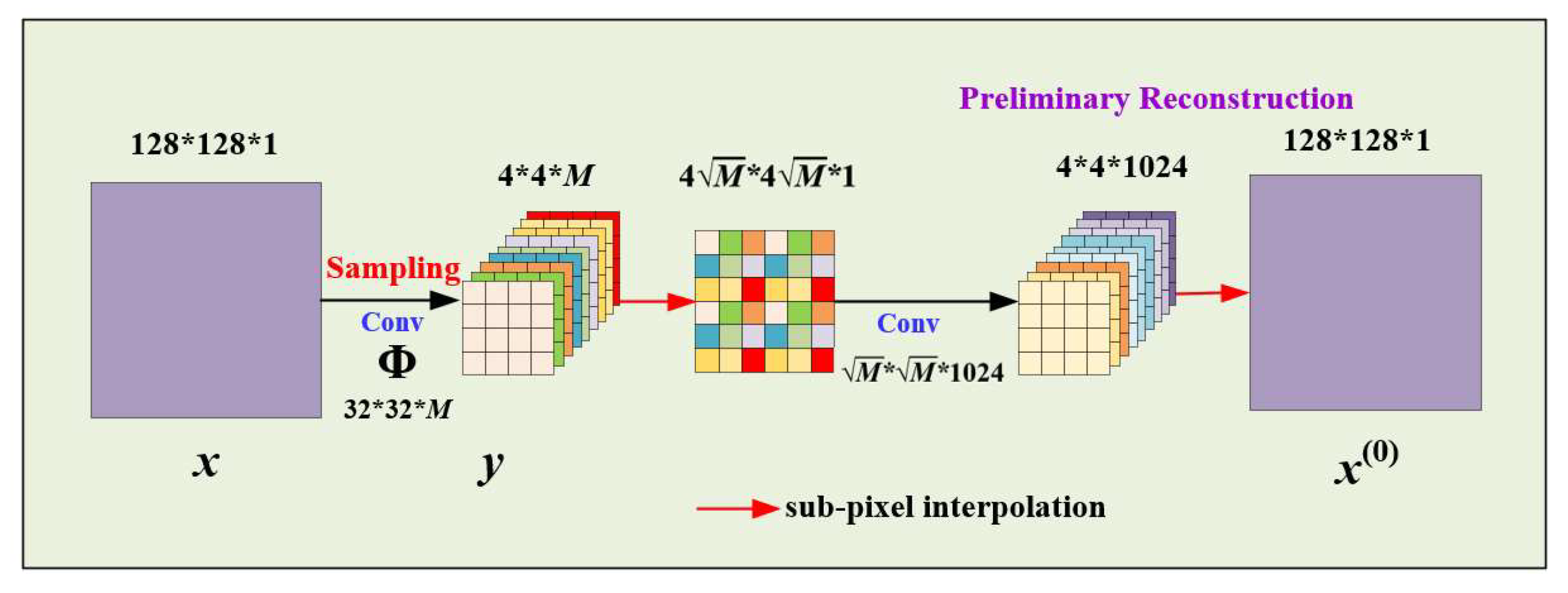

3.2.2. Large Convolutional Layer Sampling and Preliminary Reconstruction

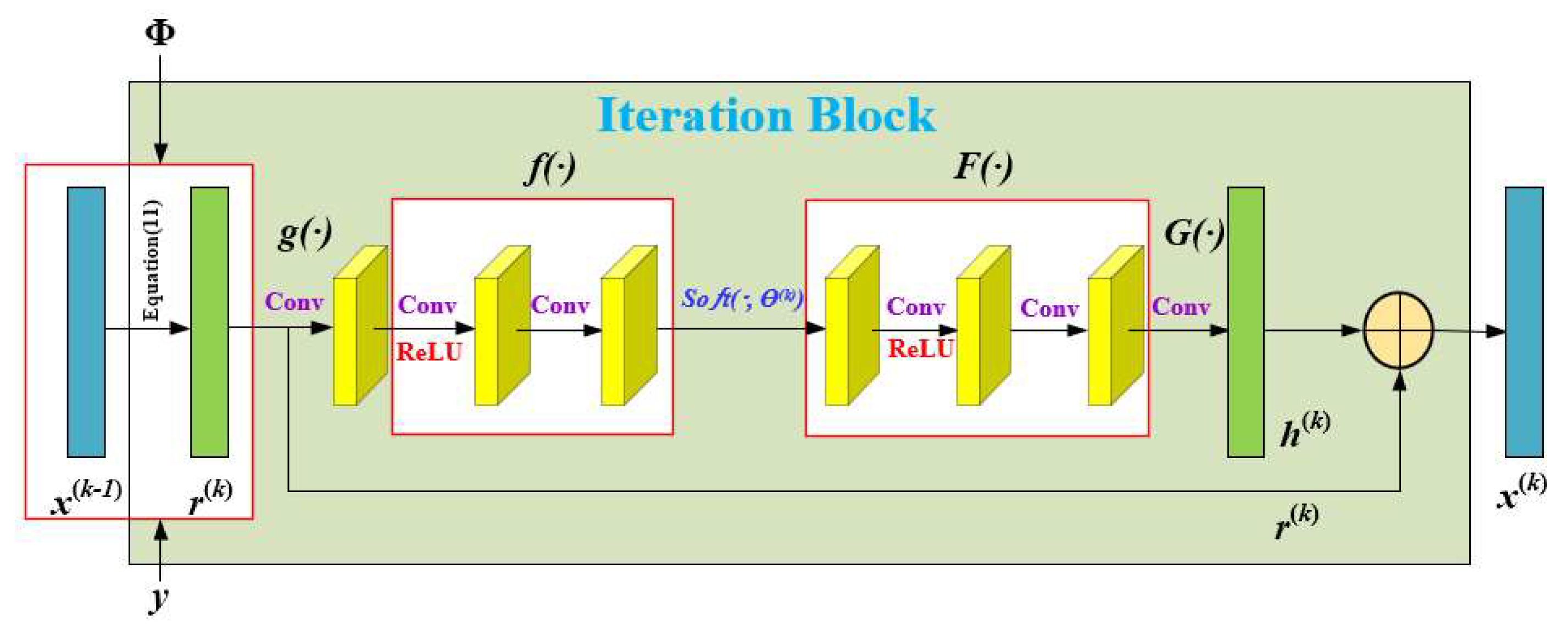

3.3. Deep Reconstruction Subnetwork

3.3.1. The Iteration Block Based on Fully Connected Layer Sampling

3.3.2. The Iteration Block Based on Large Convolutional Layer Sampling

3.4. Loss Function

4. Results and Discussion

4.1. Comparison of Different Sampling Methods

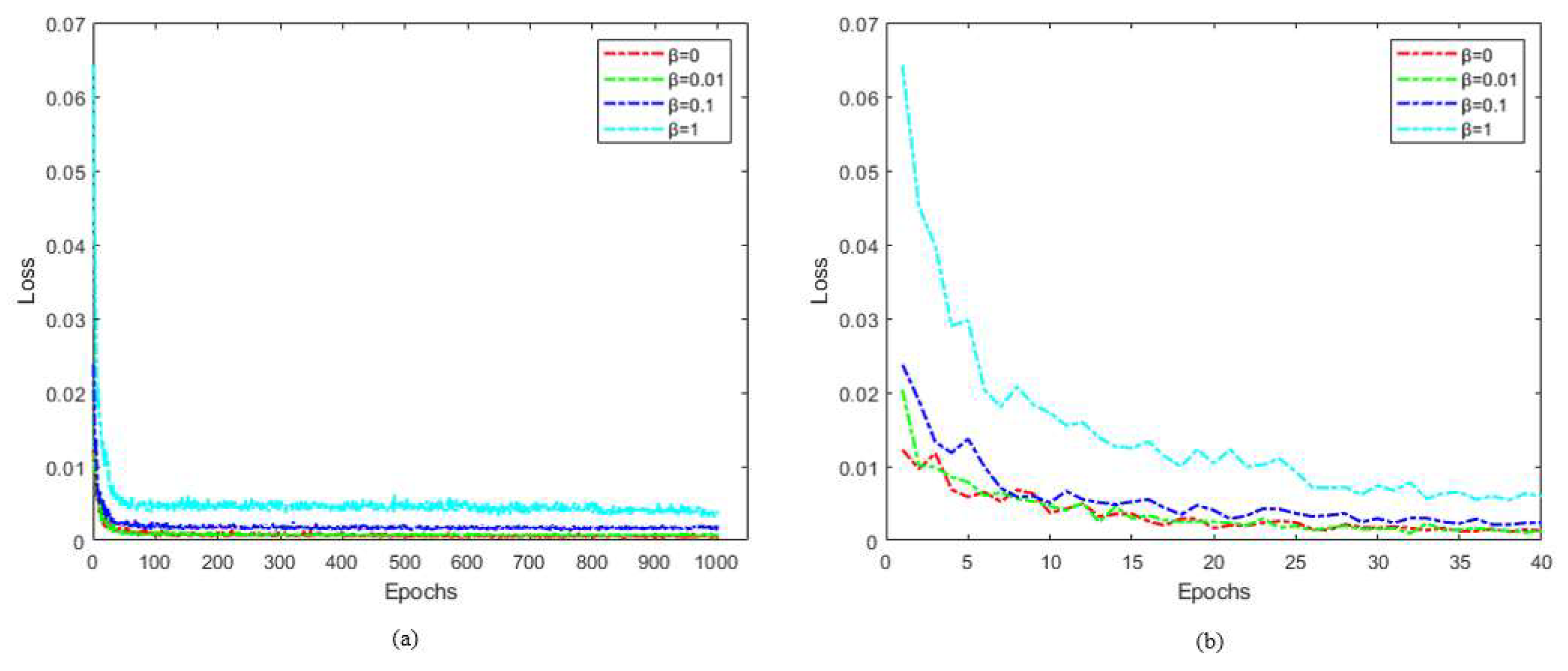

4.2. Discussion on Different β Values of Loss Function

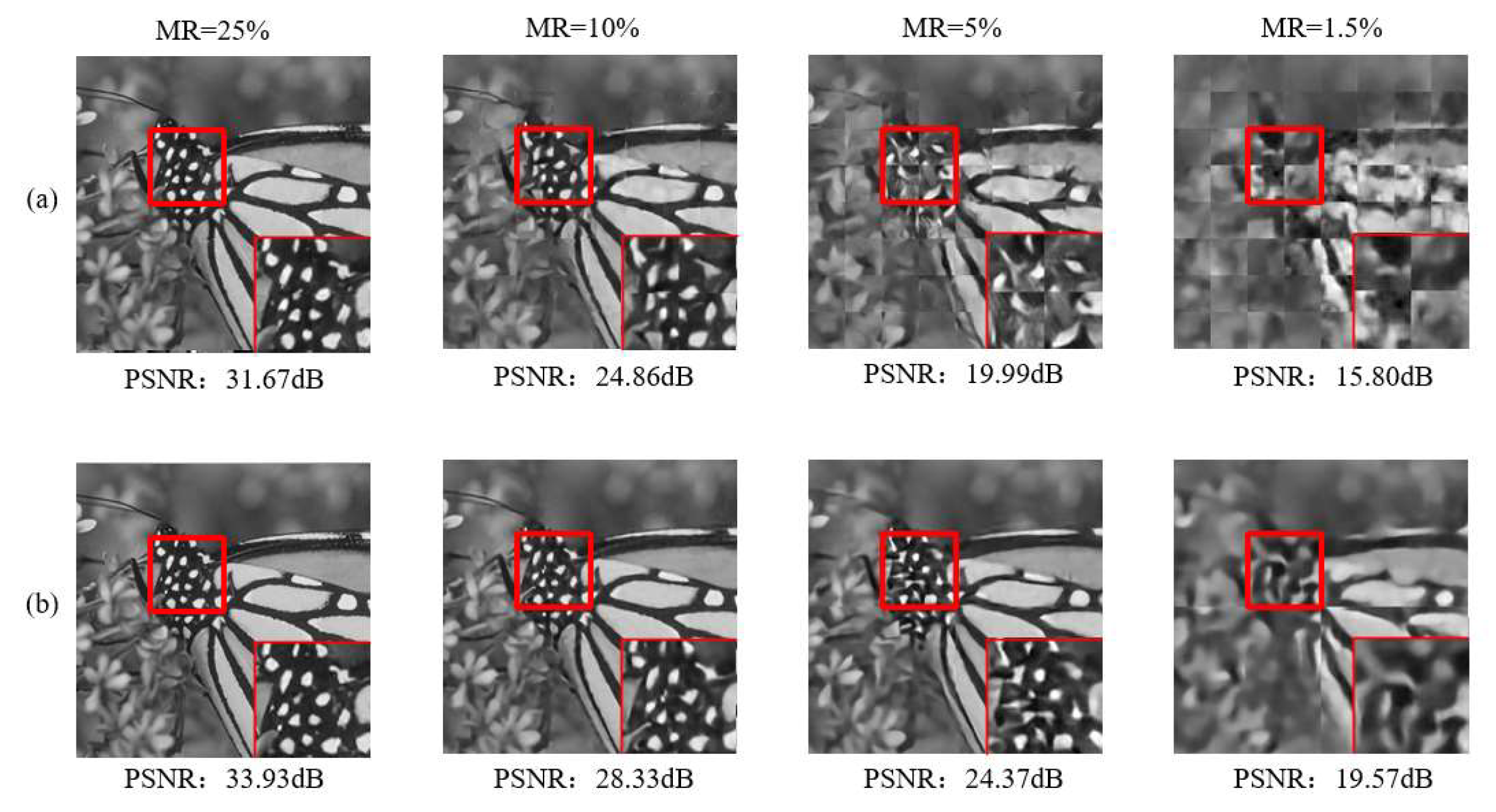

4.3. Comparison with Existing Algorithms

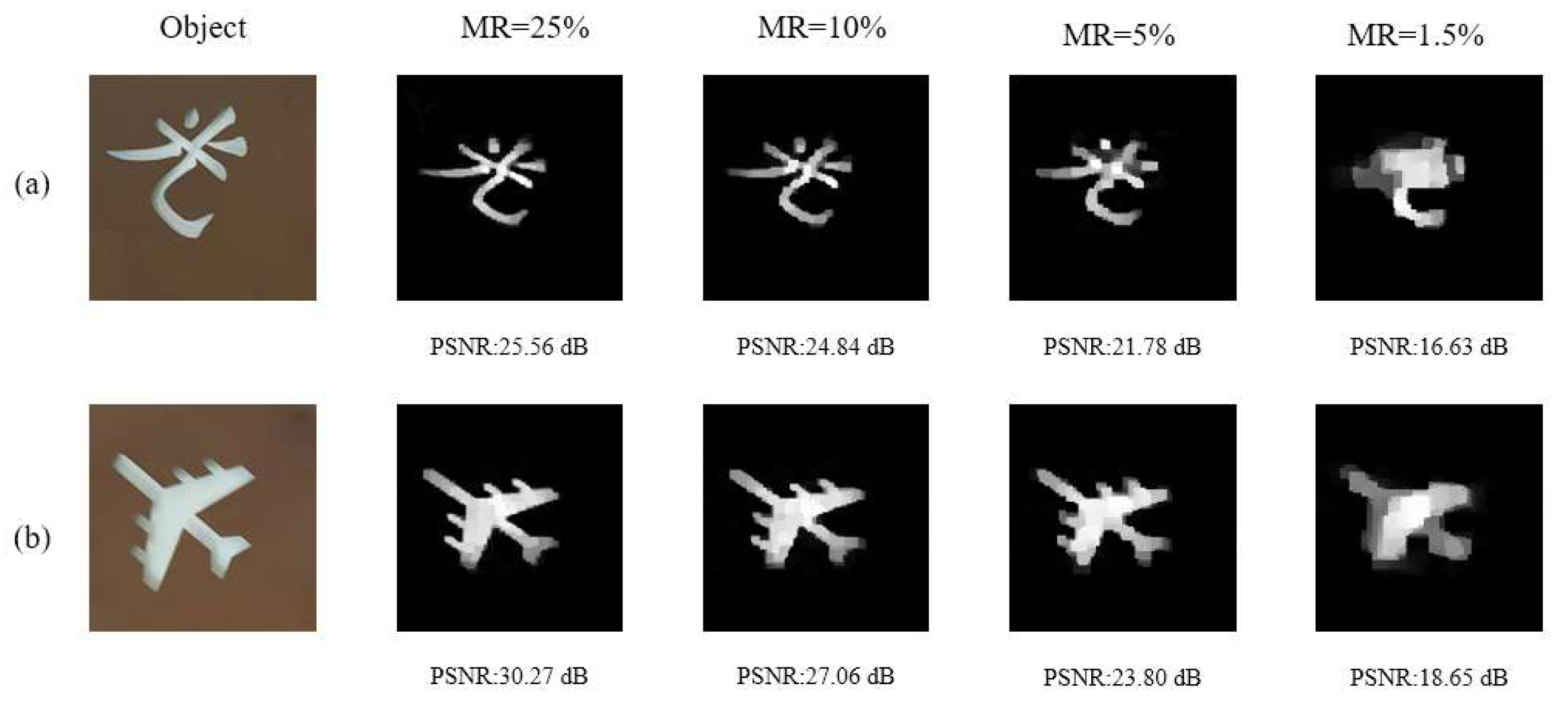

4.4. Imaging Results of SRMU-Net on SPI System

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Sampling Methods | MR = 25% | MR = 10% | MR = 5% | MR = 1.5% |
|---|---|---|---|---|
| Fully connected layer sampling | 33.09 | 28.41 | 25.85 | 21.87 |
| Large convolutional layer sampling | 33.20 | 28.98 | 26.14 | 23.18 |
| Random Gaussian matrix sampling | 31.82 | 26.59 | 23.09 | 19.31 |

| β Value | MR = 25% | MR = 10% | MR = 5% | MR = 1.5% |
|---|---|---|---|---|
| β = 0 | 33.20 | 28.98 | 26.14 | 23.15 |
| β = 0.01 | 33.89 | 29.21 | 26.49 | 23.12 |
| β = 0.1 | 33.66 | 29.10 | 26.67 | 23.05 |
| β = 1 | 32.83 | 28.74 | 26.50 | 23.17 |

| Image Name | Methods | MR = 25% PSNR/dB | MR = 25% SSIM | MR = 10% PSNR/dB | MR = 10% SSIM | MR = 5% PSNR/dB | MR = 5% SSIM | MR = 1.5% PSNR/dB | MR = 1.5% SSIM |
|---|---|---|---|---|---|---|---|---|---|
| Barbara | TVAL3 | 24.07 | 0.72 | 21.76 | 0.55 | 20.45 | 0.46 | 16.80 | 0.32 |
| Barbara | DR2-Net | 25.30 | 0.80 | 22.81 | 0.63 | 21.72 | 0.54 | 19.72 | 0.44 |
| Barbara | ISTA-Net+ | 27.27 | 0.86 | 23.08 | 0.67 | 21.44 | 0.56 | 19.60 | 0.42 |
| Barbara | SRMU-Net | 29.25 | 0.90 | 24.35 | 0.72 | 23.74 | 0.66 | 22.47 | 0.54 |
| Boats | TVAL3 | 28.59 | 0.83 | 24.09 | 0.66 | 21.09 | 0.53 | 17.83 | 0.40 |
| Boats | DR2-Net | 30.52 | 0.87 | 26.24 | 0.75 | 23.17 | 0.62 | 20.04 | 0.48 |
| Boats | ISTA-Net+ | 32.55 | 0.91 | 26.79 | 0.78 | 23.05 | 0.63 | 19.40 | 0.45 |
| Boats | SRMU-Net | 35.06 | 0.95 | 29.69 | 0.87 | 26.67 | 0.77 | 23.14 | 0.60 |
| Cameraman | TVAL3 | 25.83 | 0.82 | 21.67 | 0.67 | 19.55 | 0.57 | 16.37 | 0.46 |
| Cameraman | DR2-Net | 25.71 | 0.82 | 22.56 | 0.72 | 20.72 | 0.63 | 18.18 | 0.53 |
| Cameraman | ISTA-Net+ | 27.33 | 0.86 | 22.93 | 0.72 | 20.34 | 0.63 | 17.62 | 0.50 |
| Cameraman | SRMU-Net | 29.51 | 0.90 | 25.64 | 0.82 | 23.57 | 0.75 | 21.28 | 0.65 |
| Foreman | TVAL3 | 34.82 | 0.91 | 29.26 | 0.81 | 24.76 | 0.69 | 20.03 | 0.56 |
| Foreman | DR2-Net | 35.36 | 0.92 | 31.70 | 0.87 | 28.41 | 0.81 | 23.93 | 0.70 |
| Foreman | ISTA-Net+ | 38.18 | 0.95 | 33.10 | 0.89 | 29.16 | 0.83 | 23.61 | 0.69 |
| Foreman | SRMU-Net | 39.43 | 0.97 | 35.59 | 0.93 | 32.59 | 0.89 | 28.23 | 0.81 |
| House | TVAL3 | 31.75 | 0.86 | 26.37 | 0.75 | 23.16 | 0.64 | 19.41 | 0.54 |
| House | DR2-Net | 33.26 | 0.87 | 29.07 | 0.80 | 25.75 | 0.73 | 22.21 | 0.63 |
| House | ISTA-Net+ | 35.34 | 0.90 | 30.02 | 0.82 | 26.09 | 0.75 | 20.84 | 0.60 |
| House | SRMU-Net | 36.85 | 0.92 | 32.64 | 0.87 | 29.80 | 0.82 | 24.88 | 0.71 |
| Monarch | TVAL3 | 27.39 | 0.86 | 20.93 | 0.65 | 18.02 | 0.52 | 14.96 | 0.37 |
| Monarch | DR2-Net | 28.55 | 0.90 | 23.36 | 0.78 | 20.33 | 0.64 | 16.76 | 0.46 |
| Monarch | ISTA-Net+ | 31.67 | 0.94 | 24.86 | 0.81 | 19.99 | 0.65 | 15.80 | 0.43 |
| Monarch | SRMU-Net | 33.93 | 0.97 | 28.33 | 0.91 | 24.37 | 0.82 | 19.57 | 0.61 |
| Parrot | TVAL3 | 26.87 | 0.86 | 23.26 | 0.75 | 21.07 | 0.65 | 14.43 | 0.44 |
| Parrot | DR2-Net | 28.71 | 0.89 | 24.66 | 0.82 | 22.47 | 0.75 | 19.97 | 0.64 |
| Parrot | ISTA-Net+ | 29.29 | 0.92 | 25.37 | 0.84 | 22.05 | 0.76 | 19.36 | 0.63 |
| Parrot | SRMU-Net | 32.70 | 0.95 | 28.57 | 0.90 | 25.63 | 0.84 | 23.18 | 0.75 |
| Peppers | TVAL3 | 29.47 | 0.85 | 22.95 | 0.68 | 19.68 | 0.54 | 15.13 | 0.38 |
| Peppers | DR2-Net | 29.73 | 0.86 | 25.09 | 0.76 | 21.98 | 0.64 | 18.52 | 0.51 |
| Peppers | ISTA-Net+ | 32.94 | 0.91 | 26.55 | 0.80 | 22.62 | 0.69 | 18.22 | 0.49 |
| Peppers | SRMU-Net | 34.22 | 0.94 | 28.91 | 0.89 | 25.59 | 0.83 | 22.21 | 0.69 |
| Mean | TVAL3 | 28.60 | 0.84 | 23.79 | 0.69 | 20.97 | 0.58 | 16.87 | 0.43 |
| Mean | DR2-Net | 29.64 | 0.87 | 25.69 | 0.77 | 23.07 | 0.68 | 19.92 | 0.55 |
| Mean | ISTA-Net+ | 31.82 | 0.91 | 26.59 | 0.79 | 23.09 | 0.69 | 19.31 | 0.53 |
| Mean | SRMU-Net | 33.89 | 0.94 | 29.21 | 0.86 | 26.49 | 0.80 | 23.12 | 0.67 |
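The comparison table reports PSNR in dB. As a reminder of how such values are conventionally obtained, the sketch below computes PSNR from the mean squared error between a reference image and a reconstruction. The peak value of 255 (8-bit images) and the function name are assumptions, since the evaluation settings are not stated here.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB; `peak` is the maximum pixel value (assumed 255)."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")   # identical images: PSNR is unbounded
    return 10.0 * np.log10(peak ** 2 / mse)
```

Under this definition, a gain of about 3 dB corresponds to roughly halving the mean squared error.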

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yan, Q.; Xiong, X.; Lei, K.; Zheng, Y.; Wang, Y. Sampling and Reconstruction Jointly Optimized Model Unfolding Network for Single-Pixel Imaging. Photonics 2023, 10, 232. https://doi.org/10.3390/photonics10030232