Delineating Regional BES–ELM Neural Networks for Studying Indoor Visible Light Positioning

Abstract

1. Introduction

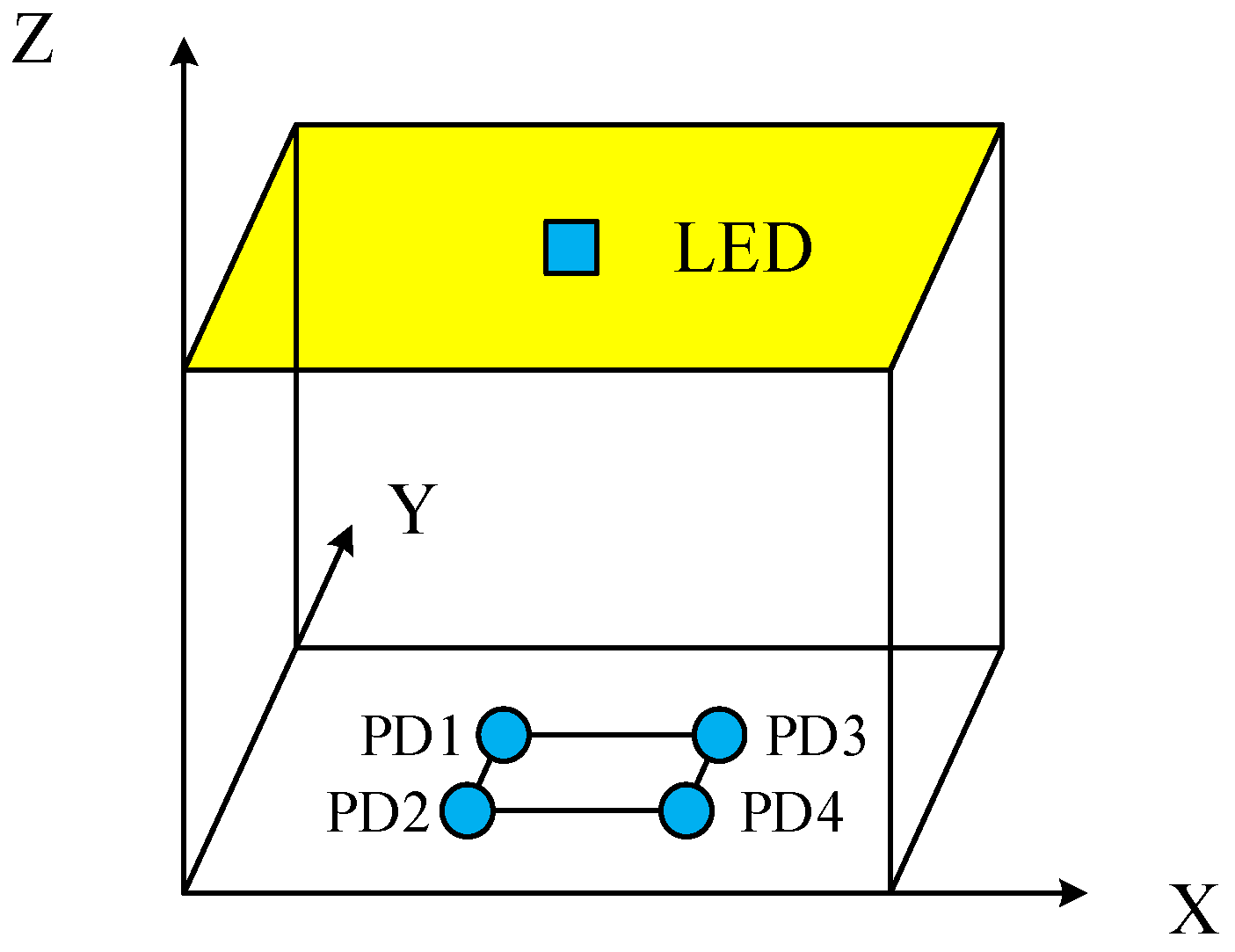

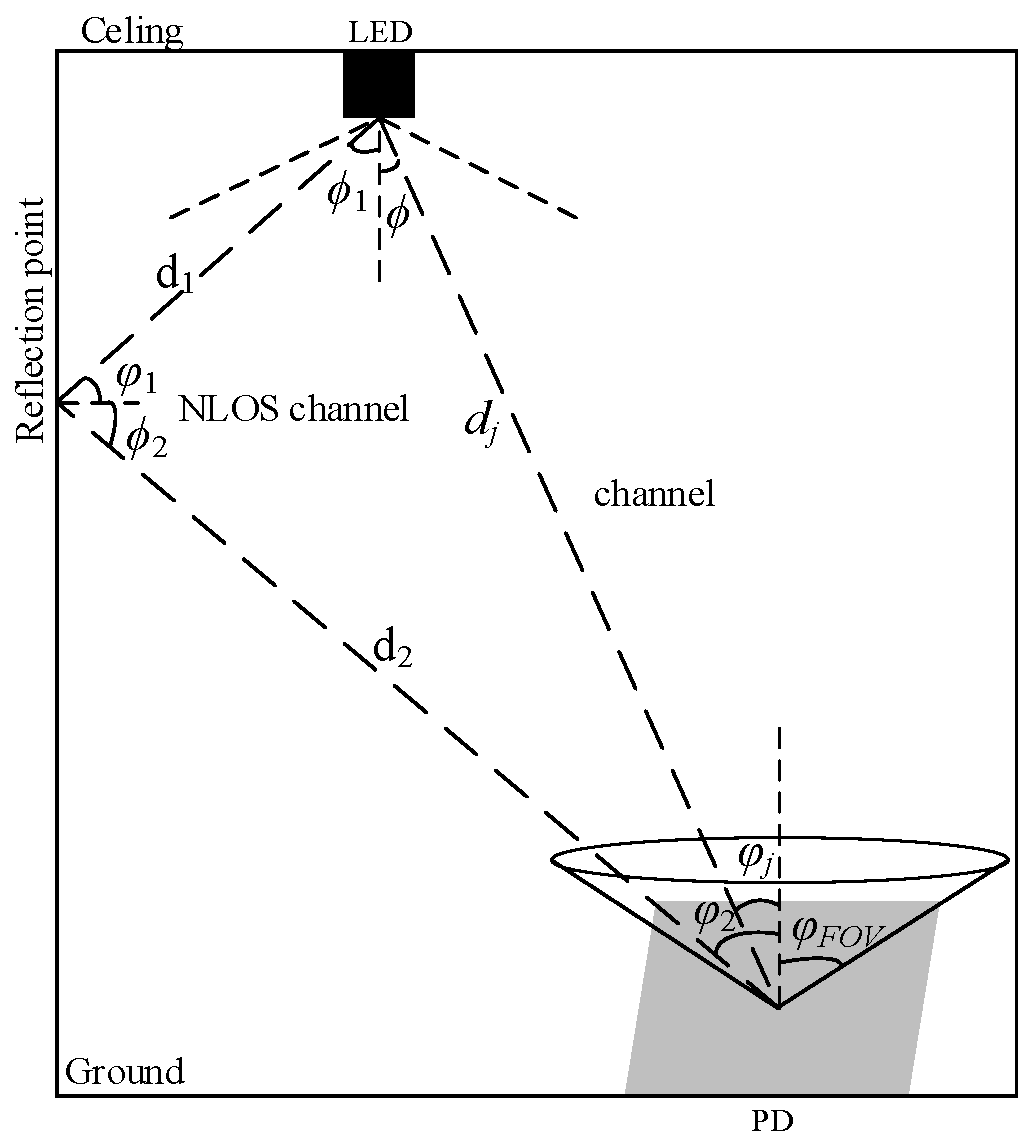

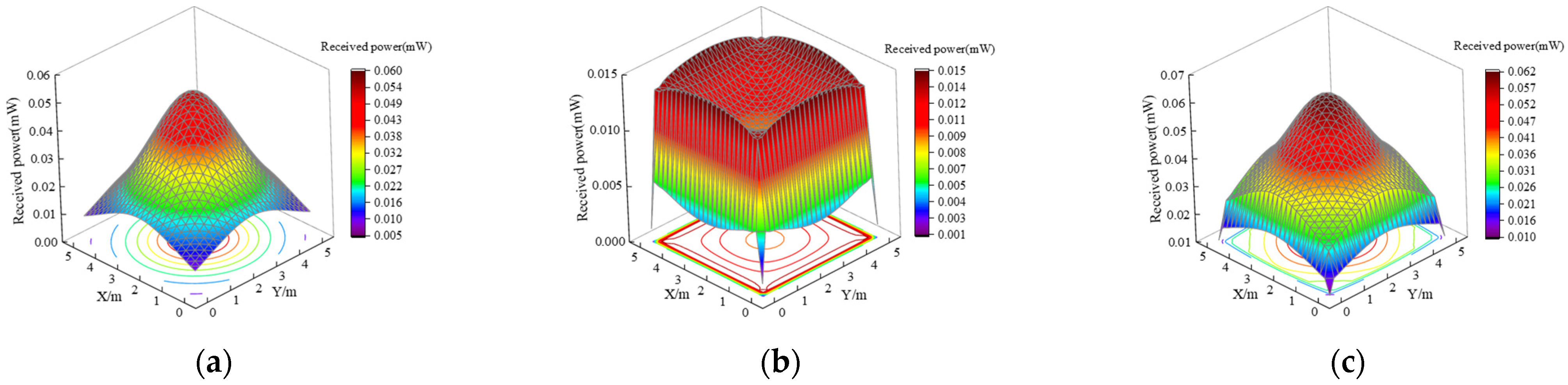

2. Indoor Model

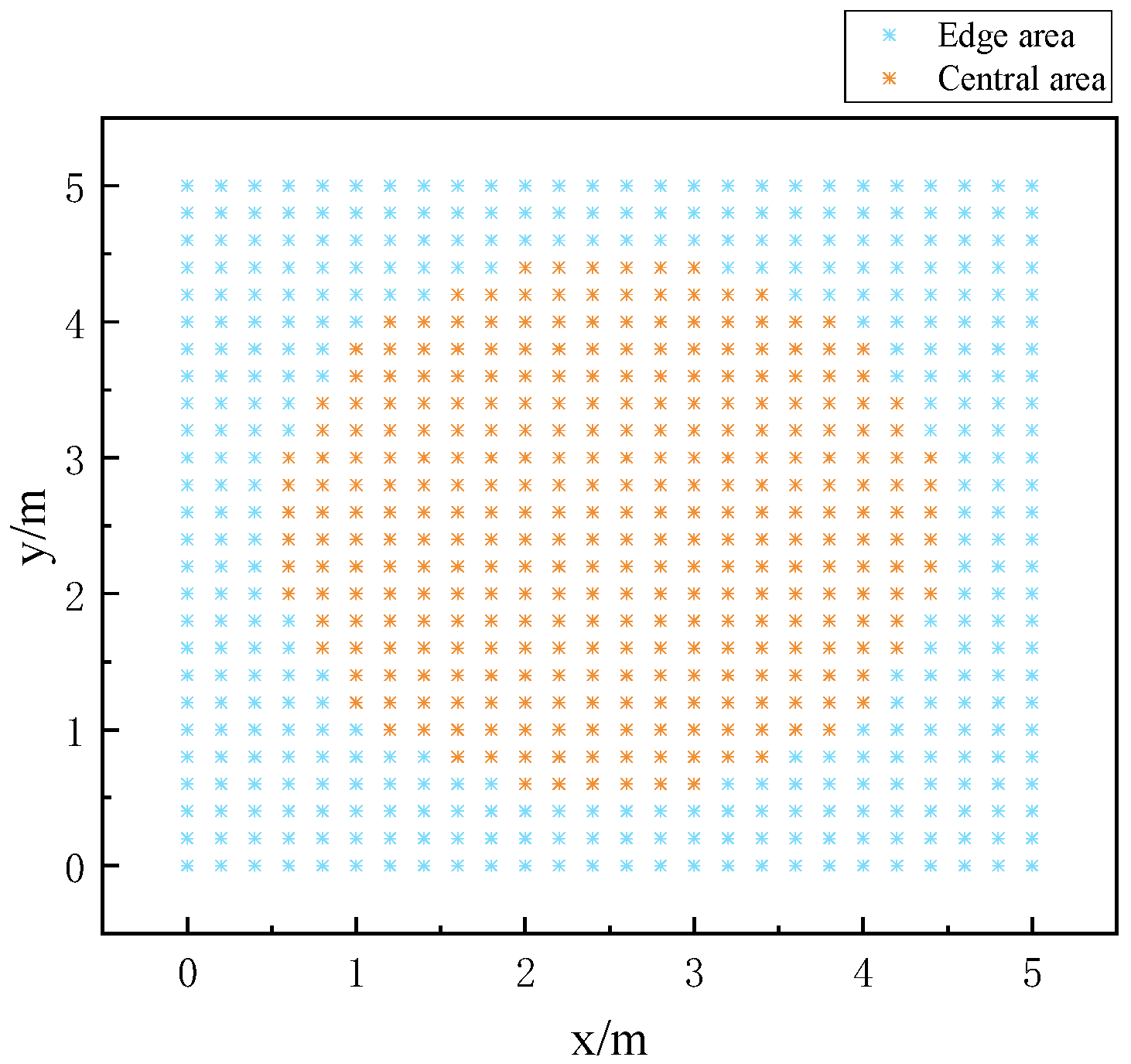

3. Delineating Regional Positioning

3.1. Create a Fingerprint Dataset

3.2. K-Means Clustering Algorithm

- Initialization. Set t = 0 and randomly select k samples as the initial cluster centers, denoted as m(0) = (m1(0), …, ml(0), …, mk(0)).

- Clustering samples. For the current class centers m(t) = (m1(t), …, ml(t), …, mk(t)), where ml(t) is the center of the lth class Cl, calculate the distance from each sample to every class center and assign the sample to the class of its nearest center. The resulting partition is denoted as C(t).

- Determine the new class centers. Based on the clustering result C(t) from the previous step, calculate the mean of all samples in each class and take it as the new class center: m(t + 1) = (m1(t + 1), …, ml(t + 1), …, mk(t + 1)).

- Re-allocation. Recalculate the distance from every sample to the new class centers and assign each sample to the class represented by its nearest center.

- Iterate to end clustering. If the iteration converges (the class centers no longer change) or the stopping condition is satisfied, output C* = C(t); otherwise, set t = t + 1 and return to Step Three.
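The five steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `kmeans`, the `max_iter` cap, the seeded random initialization, and the decision to keep the previous center of a class that becomes empty are all assumptions made for the example.

```python
import math
import random


def kmeans(samples, k, max_iter=100, seed=0):
    """Cluster `samples` (a list of equal-length numeric tuples) into k classes."""
    rng = random.Random(seed)
    # Initialization: pick k distinct samples as the initial class centers.
    centers = [list(s) for s in rng.sample(samples, k)]

    def assign(cs):
        # Clustering / re-allocation: index of the nearest center for each sample.
        return [min(range(k), key=lambda l: math.dist(s, cs[l])) for s in samples]

    for _ in range(max_iter):
        labels = assign(centers)
        # New class centers: the mean of the samples currently in each class.
        new_centers = []
        for l in range(k):
            members = [s for s, lab in zip(samples, labels) if lab == l]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                # Assumption: an empty class keeps its previous center.
                new_centers.append(centers[l])
        # Convergence: stop once the class centers no longer change.
        if new_centers == centers:
            break
        centers = new_centers
    return centers, assign(centers)
```

Called as `centers, labels = kmeans(fingerprints, k=4)`, where `fingerprints` is a hypothetical list of received-power vectors, the function returns one center per region and the region index of every fingerprint.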

3.3. BES Algorithm

- Selecting the search space. Each bald eagle randomly identifies a search area and assesses the quantity of prey to find the best position, which facilitates the subsequent search. The updated position Pi,new in this stage is obtained by scaling the a priori information from the previous search by α:

  $$P_{i,\mathrm{new}} = P_{\mathrm{best}} + \alpha \, r \, (P_{\mathrm{mean}} - P_i)$$

  where α is the parameter controlling position changes, set between 1.5 and 2; r is a random value in the range of 0 to 1; Pbest is the best position found by the current bald eagle search; Pmean is the mean position of the population at the end of the prior search; and Pi is the position of the ith bald eagle.

- Searching the space for prey. Bald eagles fly in a spiral within the chosen search area, which speeds up the search for prey and identifies the best position for a diving capture. The positions are updated as follows:

  $$P_{i,\mathrm{new}} = P_i + y(i)\,(P_i - P_{i+1}) + x(i)\,(P_i - P_{\mathrm{mean}})$$

  where x(i) and y(i) are the polar coordinates of the ith bald eagle, with values in the range (−1, 1), and Pi+1 is the position of the next bald eagle in the population.

- Dive to capture prey. From the best position inside the search area, bald eagles rapidly descend on the target prey, while the rest of the population converges on the best position and attacks. The position of a bald eagle during the swoop is given by:

  $$P_{i,\mathrm{new}} = \mathrm{rand} \cdot P_{\mathrm{best}} + x_1(i)\,(P_i - c_1 P_{\mathrm{mean}}) + y_1(i)\,(P_i - c_2 P_{\mathrm{best}})$$

  where x1(i) and y1(i) are the polar coordinates used in the swoop stage; c1 and c2 denote the movement intensity of the bald eagle toward the best position and the central position, both taking values in (1, 2); and rand is a random value in (0, 1).
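The three stages can be put together as a small optimizer. The sketch below assumes the commonly published form of the BES update rules and is not the authors' code. Assumptions for illustration include the function name `bes_minimize`, the spiral parameters `a` and `R`, the greedy rule that a move is kept only if it lowers the fitness, clipping to `bounds`, and taking the "next" eagle from a shuffled ordering.

```python
import math
import random


def bes_minimize(fitness, dim, bounds, n_agents=20, max_iter=100,
                 alpha=2.0, a=10.0, R=1.5, c1=2.0, c2=2.0, seed=0):
    """Minimize `fitness` over a box with a bald eagle search sketch."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_agents)]
    cost = [fitness(p) for p in pop]
    b = min(range(n_agents), key=cost.__getitem__)
    best, best_cost = pop[b][:], cost[b]

    def mean_pos():
        return [sum(p[d] for p in pop) / n_agents for d in range(dim)]

    def accept(i, cand):
        # Assumption: greedy acceptance, and candidates are clipped to the box.
        nonlocal best, best_cost
        cand = [min(max(v, lo), hi) for v in cand]
        c = fitness(cand)
        if c < cost[i]:
            pop[i], cost[i] = cand, c
            if c < best_cost:
                best, best_cost = cand[:], c

    def spiral(hyperbolic):
        # Polar coordinates, normalized so that every value lies in [-1, 1].
        theta = [a * math.pi * rng.random() for _ in range(n_agents)]
        if hyperbolic:  # swoop stage
            xr = [t * math.sinh(t) for t in theta]
            yr = [t * math.cosh(t) for t in theta]
        else:           # search stage
            rad = [t + R * rng.random() for t in theta]
            xr = [r * math.sin(t) for r, t in zip(rad, theta)]
            yr = [r * math.cos(t) for r, t in zip(rad, theta)]
        mx = max(abs(v) for v in xr) or 1.0
        my = max(abs(v) for v in yr) or 1.0
        return [v / mx for v in xr], [v / my for v in yr]

    for _ in range(max_iter):
        # Stage 1: select the search space around the best position.
        mean = mean_pos()
        for i in range(n_agents):
            r = rng.random()
            accept(i, [best[d] + alpha * r * (mean[d] - pop[i][d])
                       for d in range(dim)])
        # Stage 2: spiral search inside the selected space.
        mean = mean_pos()
        x, y = spiral(False)
        order = list(range(n_agents))
        rng.shuffle(order)
        for k, i in enumerate(order):
            nxt = pop[order[(k + 1) % n_agents]]
            accept(i, [pop[i][d] + y[i] * (pop[i][d] - nxt[d])
                       + x[i] * (pop[i][d] - mean[d]) for d in range(dim)])
        # Stage 3: swoop toward the best position.
        mean = mean_pos()
        x1, y1 = spiral(True)
        for i in range(n_agents):
            accept(i, [rng.random() * best[d]
                       + x1[i] * (pop[i][d] - c1 * mean[d])
                       + y1[i] * (pop[i][d] - c2 * best[d]) for d in range(dim)])
    return best, best_cost
```

For instance, `bes_minimize(lambda p: sum(v * v for v in p), dim=2, bounds=(-5.0, 5.0))` searches for the minimum of a simple quadratic test function. Because of the greedy acceptance assumed here, the returned cost can never be worse than the best member of the initial population.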

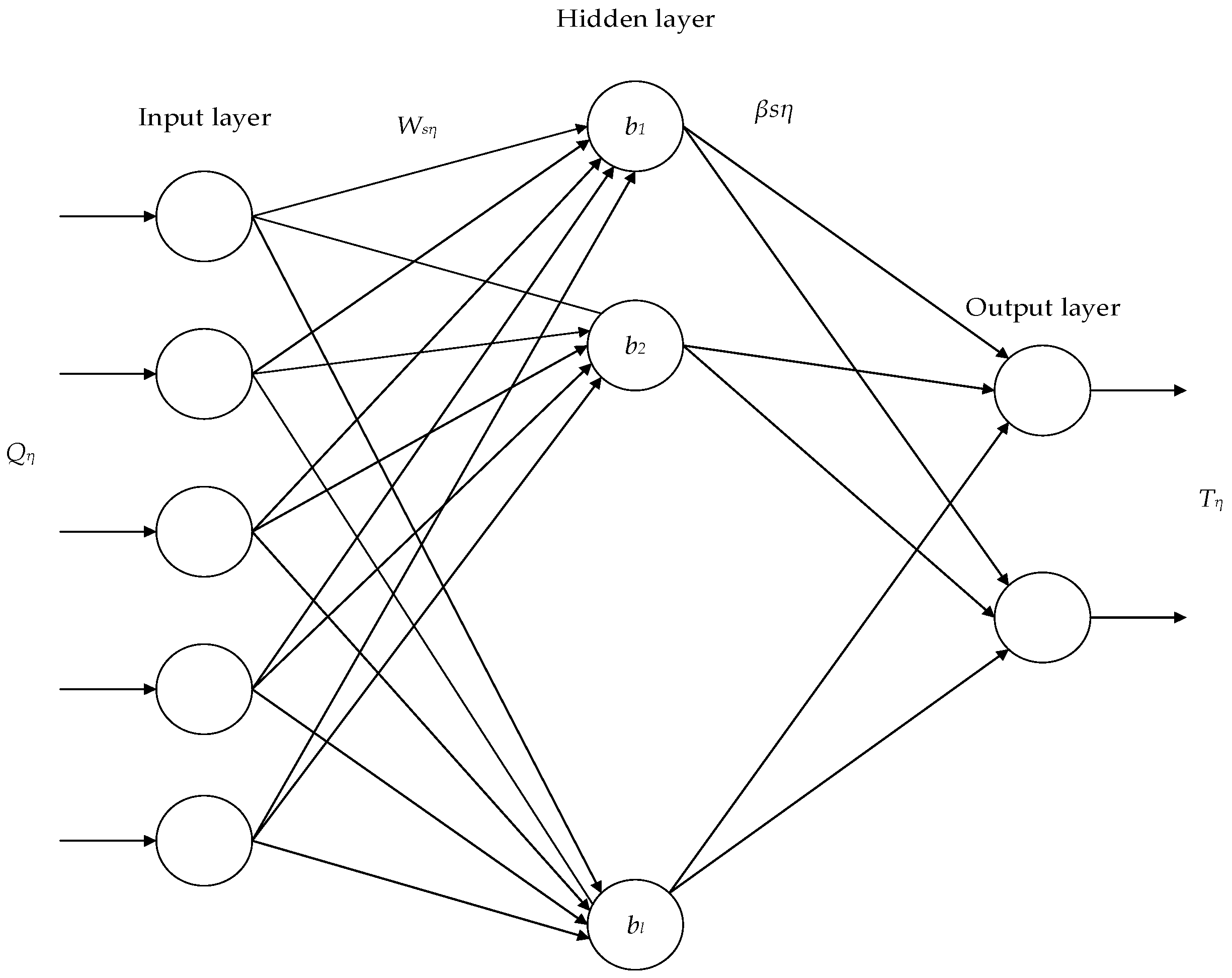

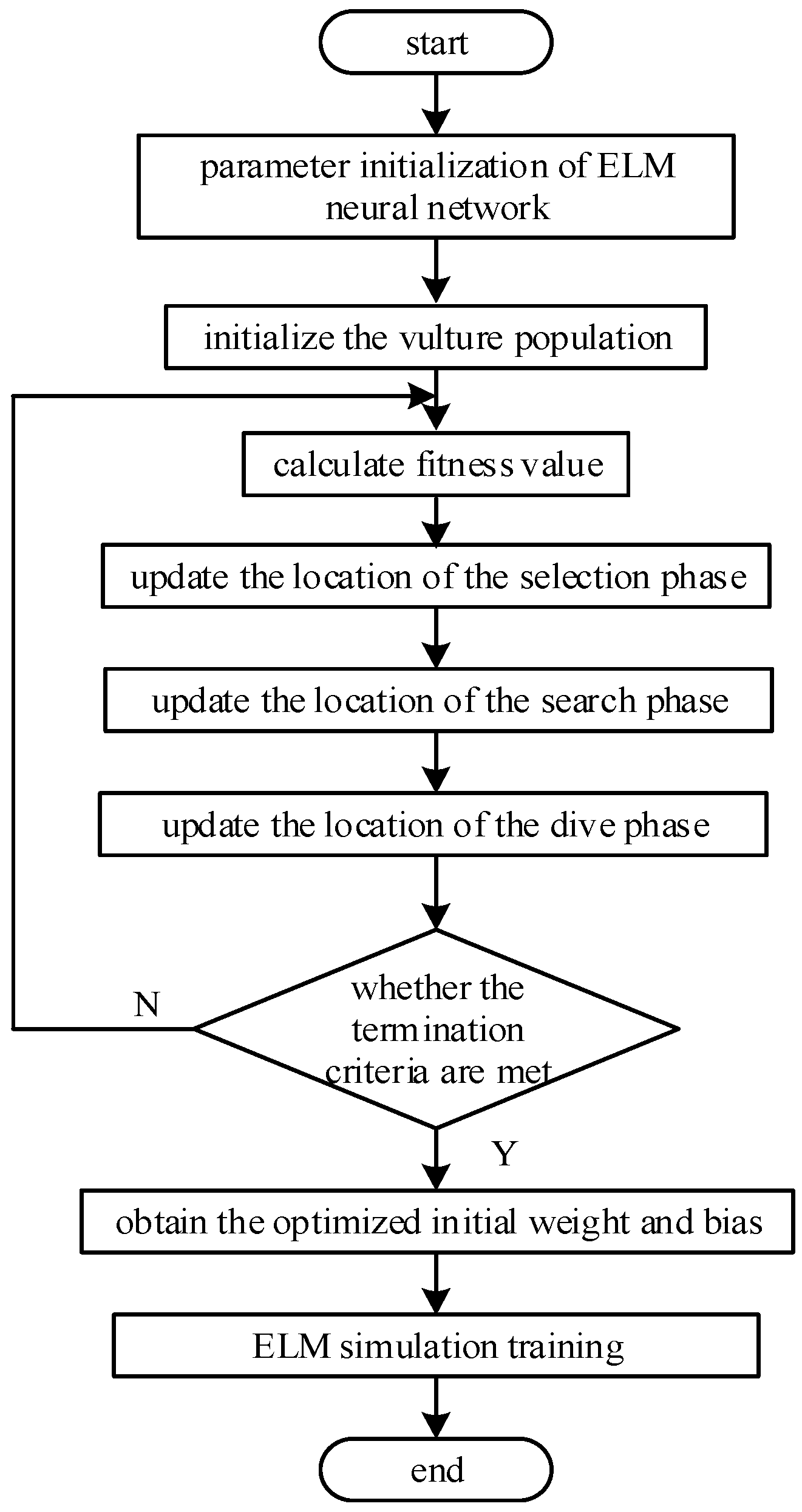

3.4. Modeling the BES–ELM

- Construct the network model. Initialize the model parameters and set the dimension of each bald eagle's position vector to the number of input weights and thresholds in the network's hidden layer.

- Calculate the fitness value of each individual using Equation (13). The best individual is then updated according to the fitness obtained, and the selection, search, and swoop stages are repeated until the termination condition is satisfied, which yields the optimal solution.

- Train the network model. The parameter values that minimize the fitness function are used as the optimal weights and thresholds of the ELM network, which is then used to construct the BES–ELM network model.
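To make the coupling between the optimizer and the ELM concrete, the sketch below shows one way a position vector could be decoded into hidden-layer weights and thresholds and scored. It rests on several assumptions that are not taken from the paper: a sigmoid activation, a position layout of all input weights followed by all thresholds, output weights obtained from ridge-regularized normal equations (a stand-in for the Moore-Penrose pseudoinverse usually used in an ELM), and the mean Euclidean training error as a stand-in for the fitness of Equation (13). The names `elm_fitness` and `solve_linear` are hypothetical.

```python
import math


def solve_linear(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + rhs[:] for row, rhs in zip(A, B)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]


def elm_fitness(position, X, T, n_hidden, ridge=1e-8):
    """Mean Euclidean training error of an ELM whose hidden layer is `position`."""
    n_in, n_out = len(X[0]), len(T[0])
    # Decode the position: n_hidden * n_in input weights, then n_hidden thresholds.
    W = [position[h * n_in:(h + 1) * n_in] for h in range(n_hidden)]
    bias = position[n_hidden * n_in:]
    # Hidden-layer output matrix H (one row per training sample).
    H = [[1.0 / (1.0 + math.exp(-(sum(w * x for w, x in zip(W[h], xs)) + bias[h])))
          for h in range(n_hidden)] for xs in X]
    # Output weights from (H^T H + ridge * I) beta = H^T T.
    HtH = [[sum(row[i] * row[j] for row in H) + (ridge if i == j else 0.0)
            for j in range(n_hidden)] for i in range(n_hidden)]
    HtT = [[sum(row[i] * t[o] for row, t in zip(H, T)) for o in range(n_out)]
           for i in range(n_hidden)]
    beta = solve_linear(HtH, HtT)
    # Average distance between predicted and true coordinates.
    total = 0.0
    for row, t in zip(H, T):
        pred = [sum(row[h] * beta[h][o] for h in range(n_hidden)) for o in range(n_out)]
        total += math.dist(pred, t)
    return total / len(X)
```

An optimizer then only needs to minimize `elm_fitness` over vectors of length `n_hidden * (n_in + 1)`; the vector with the lowest value supplies the hidden-layer parameters of the final network.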

3.5. Edge Area Correction

- Calculate the Euclidean distance di between the optical power received at ZB and the optical power of each reference point.

- Sort the N Euclidean distances di in ascending order and compute their mean Ed.

- Compare each distance di with the mean Ed and remove the reference points whose distance is greater than the mean. The number of remaining reference points is denoted as K.

- Repeat the process described above so that K decreases progressively and approaches the true value. The remaining K reference points with the smallest Euclidean distances are then assigned weights to determine the coordinates of the point to be measured.
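The correction procedure can be illustrated with a short sketch. It follows the four steps above, but the stopping rule and the weighting are assumptions, since neither is specified here. Pruning stops once at most `k_max` reference points remain (a hypothetical parameter), and the remaining points are combined with inverse-distance weights. The function name `ewknn_locate` is also hypothetical.

```python
import math


def ewknn_locate(z_power, ref_powers, ref_coords, k_max=4, eps=1e-9):
    """Estimate coordinates from the reference points nearest in power space."""
    # Step 1: distance between the measured power vector and every reference point,
    # sorted in ascending order (Step 2, first half).
    cand = sorted((math.dist(z_power, p), tuple(xy))
                  for p, xy in zip(ref_powers, ref_coords))
    # Steps 2-4: repeatedly drop the points whose distance exceeds the mean.
    while len(cand) > k_max:
        mean_d = sum(d for d, _ in cand) / len(cand)
        kept = [c for c in cand if c[0] <= mean_d]
        if len(kept) == len(cand):  # all distances equal, nothing left to remove
            break
        cand = kept
    cand = cand[:k_max]
    # Assumption: inverse-distance weights; eps avoids division by zero.
    weights = [1.0 / (d + eps) for d, _ in cand]
    total = sum(weights)
    n_dim = len(cand[0][1])
    return tuple(sum(w * xy[j] for w, (_, xy) in zip(weights, cand)) / total
                 for j in range(n_dim))
```

With inverse-distance weights, a measurement whose power vector coincides with that of a reference point is placed almost exactly on that point, while measurements between fingerprints are interpolated from the K surviving neighbors.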

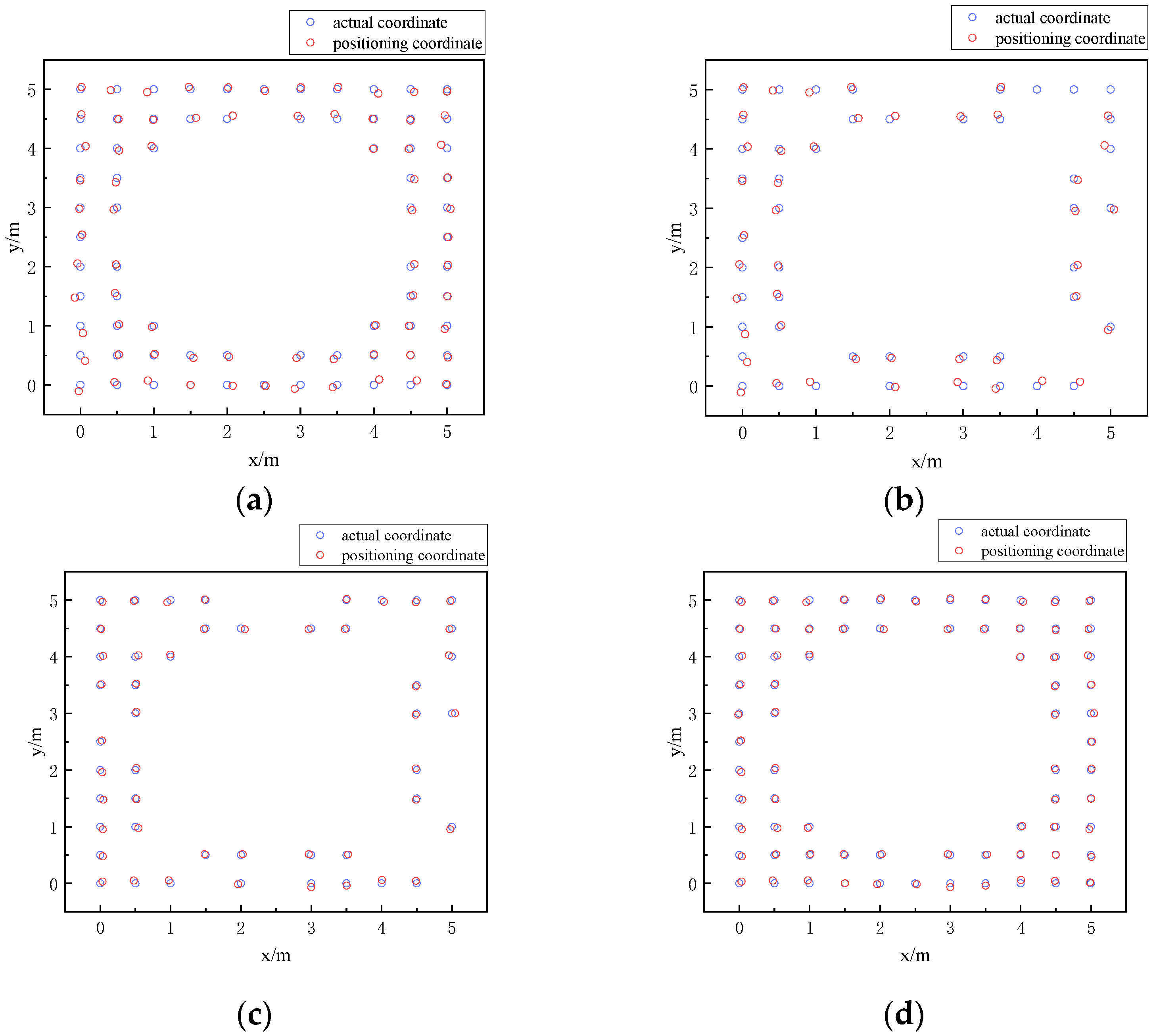

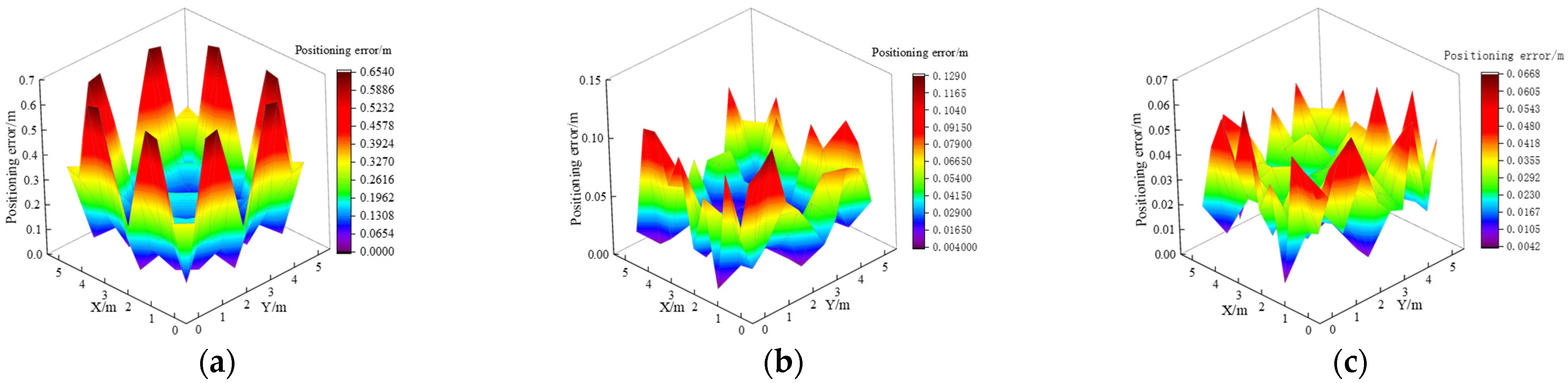

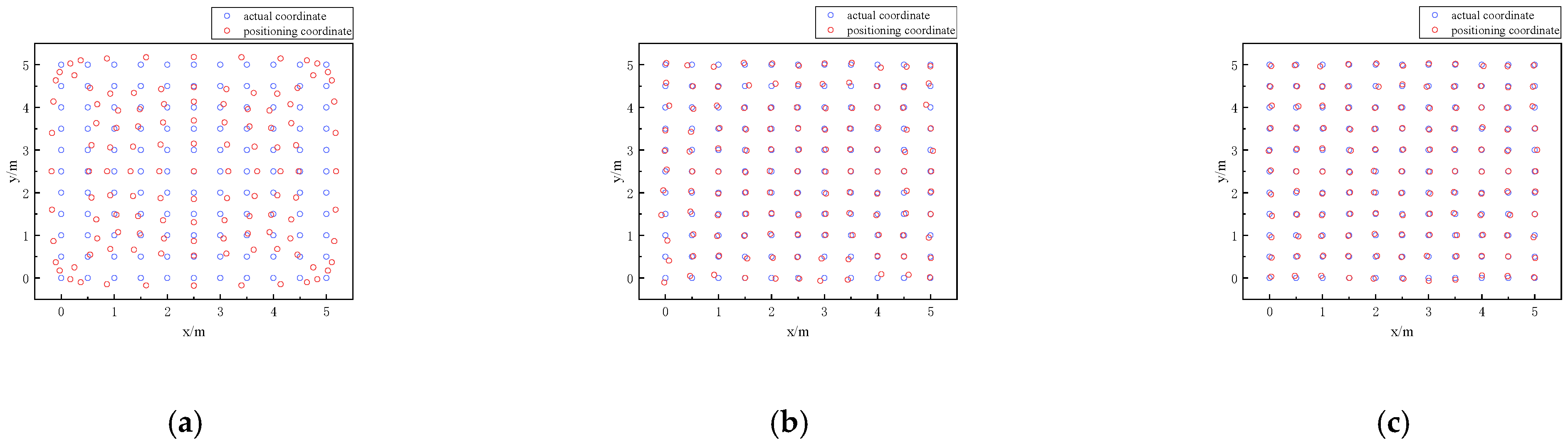

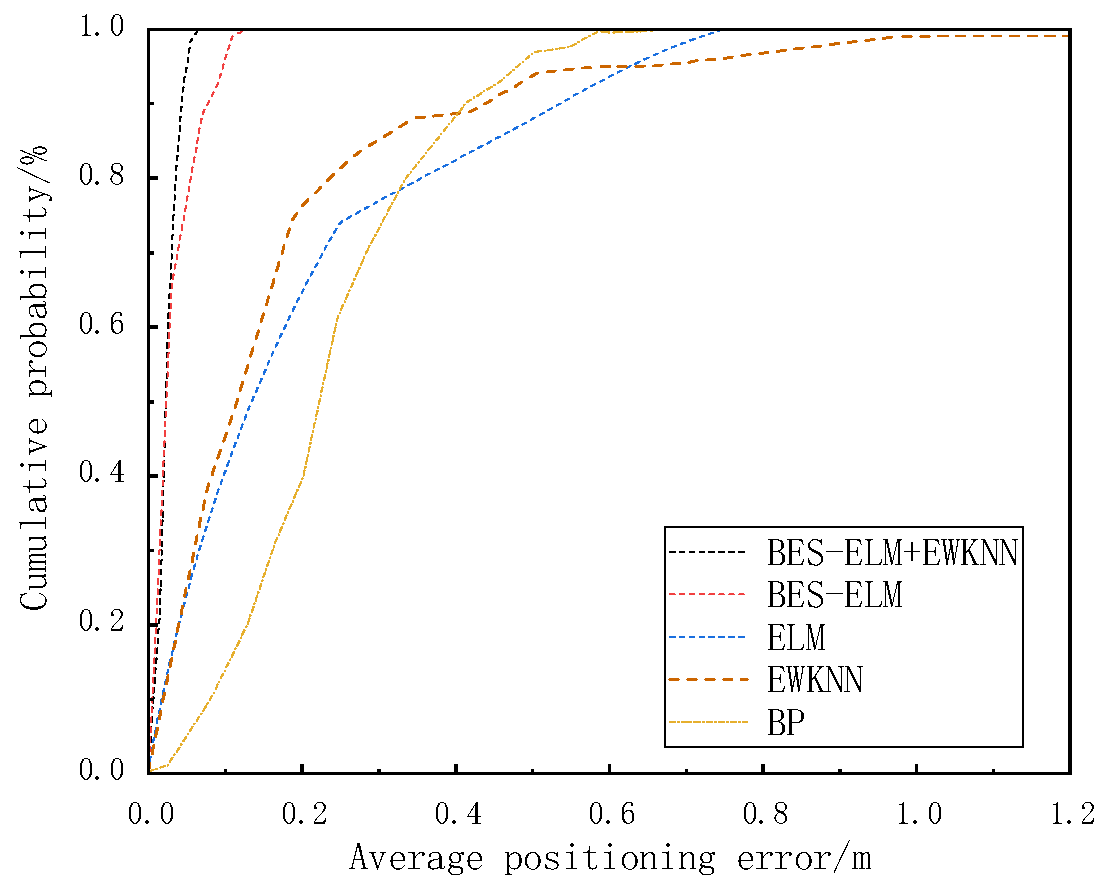

4. Simulation and Result Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Simulation Parameter | Simulation Value |
|---|---|
| LED emission power Pt | 1 W |
| Actual receiving area of a single PD | 1 cm² |
| Wall reflectivity RW | 0.7 |
| Receiver field of view φFOV | 70° |
| Refractive index of the lens n | 0.8 |
| Optical concentrator gain g(φ) | 1.5 |
| Optical filter gain T(φ) | 1 |
| SNR | 30 dB |
| ELM training algorithm | Gradient descent method |
| Number of iterations | 200 |
| Target error | 1 × 10⁻⁵ |
| Search agents | 50 |
| BES max iteration | 200 |

| Algorithm | Average Positioning Error (cm) | Average Positioning Time (s) |
|---|---|---|
| BP | 21.04 | 0.16358 |
| ELM | 23.79 | 0.18461 |
| EWKNN | 17.37 | 0.16694 |
| BES–ELM | 4.15 | 0.21847 |
| BES–ELM + EWKNN | 2.93 | 0.23961 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Ke, X. Delineating Regional BES–ELM Neural Networks for Studying Indoor Visible Light Positioning. Photonics 2024, 11, 910. https://doi.org/10.3390/photonics11100910
